Low Complexity HEVC Encoder for Visual Sensor Networks

Abstract

1. Introduction

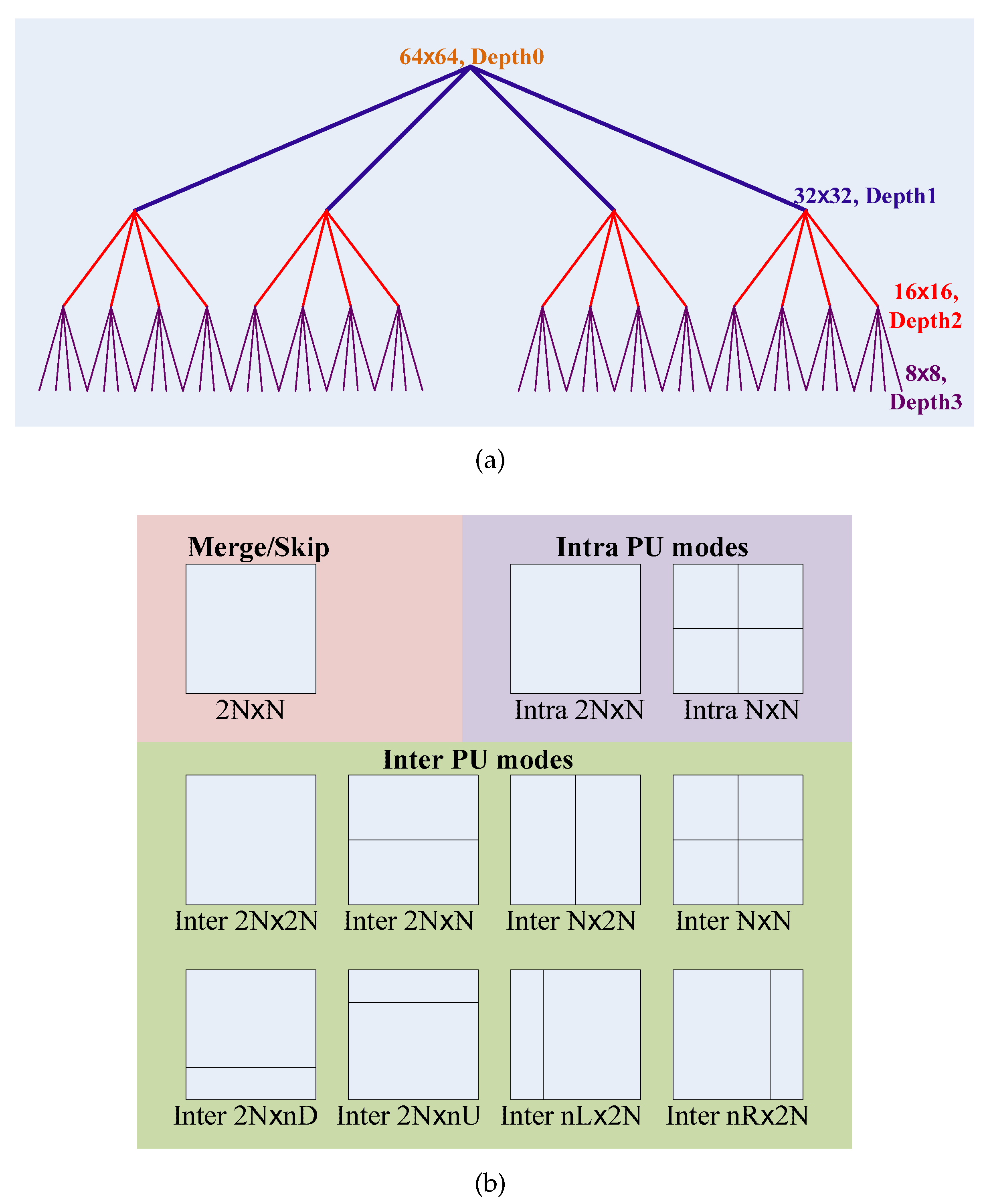

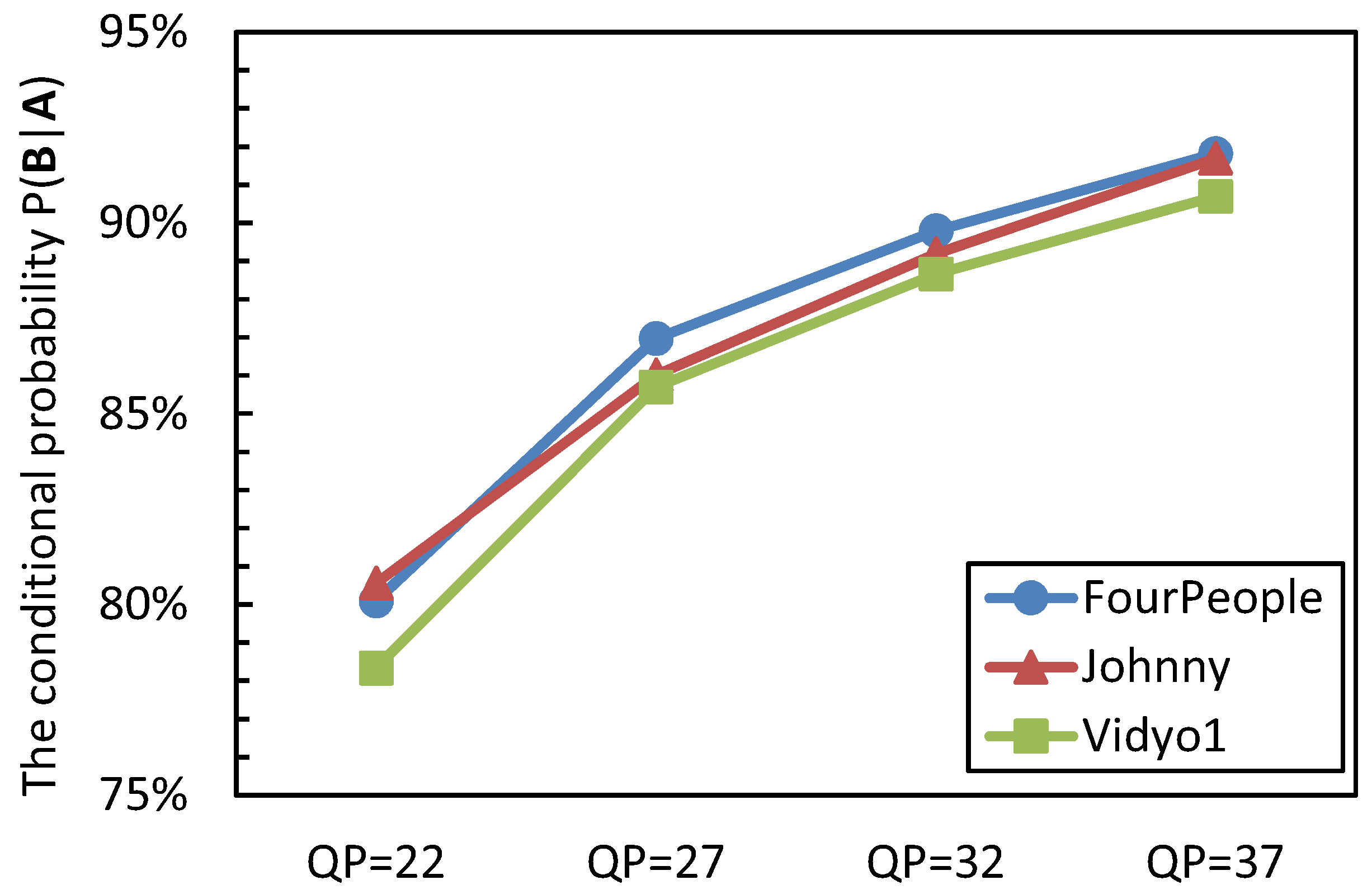

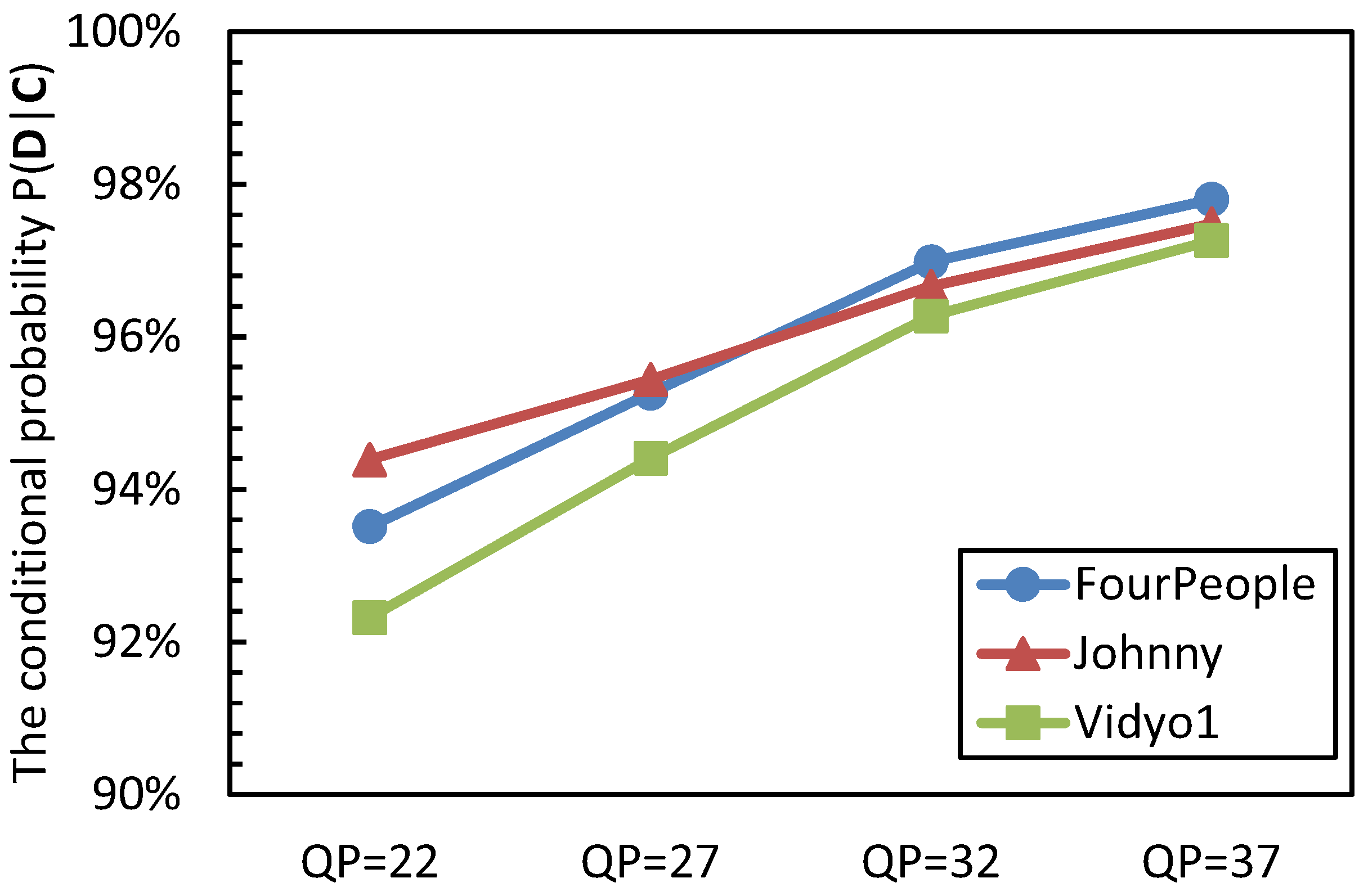

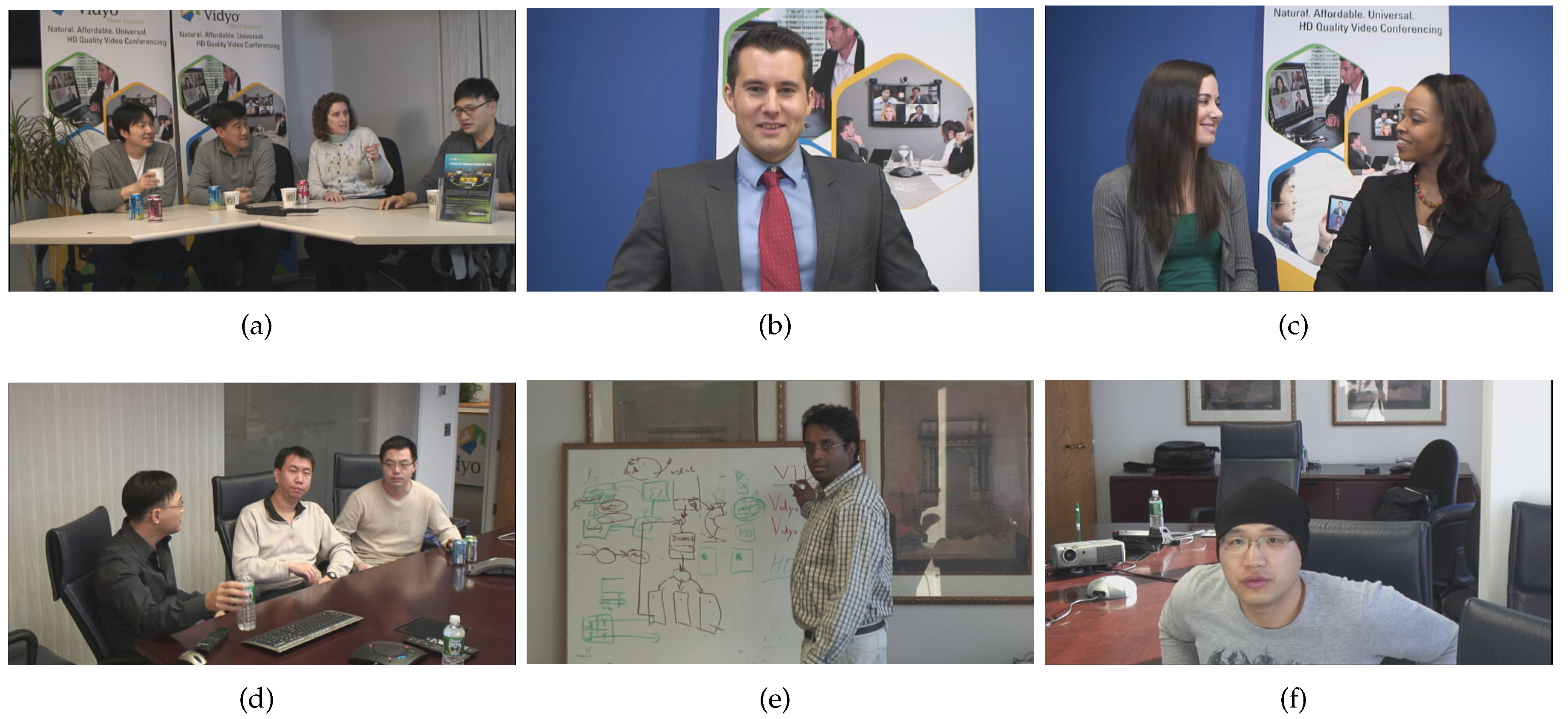

2. Motivations and Statistical Analyses

| Sequence | QP | Level 0 (%) | Level 1 (%) | Level 2 (%) | Level 3 (%) |
|---|---|---|---|---|---|
| FourPeople | 22 | 49.48 | 24.79 | 22.13 | 3.60 |
| | 27 | 66.59 | 16.50 | 15.19 | 1.71 |
| | 32 | 74.69 | 11.88 | 12.46 | 0.98 |
| | 37 | 79.57 | 8.78 | 11.15 | 0.50 |
| Johnny | 22 | 51.30 | 26.48 | 19.91 | 2.31 |
| | 27 | 68.30 | 17.63 | 13.26 | 0.81 |
| | 32 | 76.74 | 11.87 | 10.95 | 0.44 |
| | 37 | 82.57 | 7.58 | 9.59 | 0.25 |
| Vidyo1 | 22 | 48.94 | 26.55 | 21.07 | 3.44 |
| | 27 | 65.94 | 18.27 | 14.51 | 1.27 |
| | 32 | 74.66 | 13.12 | 11.66 | 0.55 |
| | 37 | 80.34 | 9.35 | 10.07 | 0.24 |
| Average | | 68.26 | 16.07 | 14.33 | 1.34 |
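
The Average row of the table above can be cross-checked with a short script. This is a minimal sketch: it copies the table values verbatim and assumes the four level columns are percentages, since each row sums to roughly 100. It then recomputes the column means over the twelve sequence/QP rows. All variable names are illustrative.

```python
# Values copied from the table above: (sequence, QP) -> (Level 0, 1, 2, 3).
rows = {
    ("FourPeople", 22): (49.48, 24.79, 22.13, 3.60),
    ("FourPeople", 27): (66.59, 16.50, 15.19, 1.71),
    ("FourPeople", 32): (74.69, 11.88, 12.46, 0.98),
    ("FourPeople", 37): (79.57, 8.78, 11.15, 0.50),
    ("Johnny", 22): (51.30, 26.48, 19.91, 2.31),
    ("Johnny", 27): (68.30, 17.63, 13.26, 0.81),
    ("Johnny", 32): (76.74, 11.87, 10.95, 0.44),
    ("Johnny", 37): (82.57, 7.58, 9.59, 0.25),
    ("Vidyo1", 22): (48.94, 26.55, 21.07, 3.44),
    ("Vidyo1", 27): (65.94, 18.27, 14.51, 1.27),
    ("Vidyo1", 32): (74.66, 13.12, 11.66, 0.55),
    ("Vidyo1", 37): (80.34, 9.35, 10.07, 0.24),
}

# The "Average" row as reported in the table.
reported_average = (68.26, 16.07, 14.33, 1.34)

# Each row should sum to about 100 if the columns are percentages.
row_sums = {key: sum(values) for key, values in rows.items()}

# Column-wise arithmetic mean over all twelve rows.
column_means = tuple(sum(col) / len(rows) for col in zip(*rows.values()))

for level, (mean, reported) in enumerate(zip(column_means, reported_average)):
    print(f"Level {level}: recomputed {mean:.2f}, reported {reported:.2f}")
```

Under these assumptions, the recomputed means match the reported Average row to two decimal places, and Level 0 accounts for the largest share at every QP listed.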

3. The Proposed Low Complexity HEVC Encoder for VSNs

3.1. The Proposed All-Zero Block-Based Fast CU Depth Decision Method

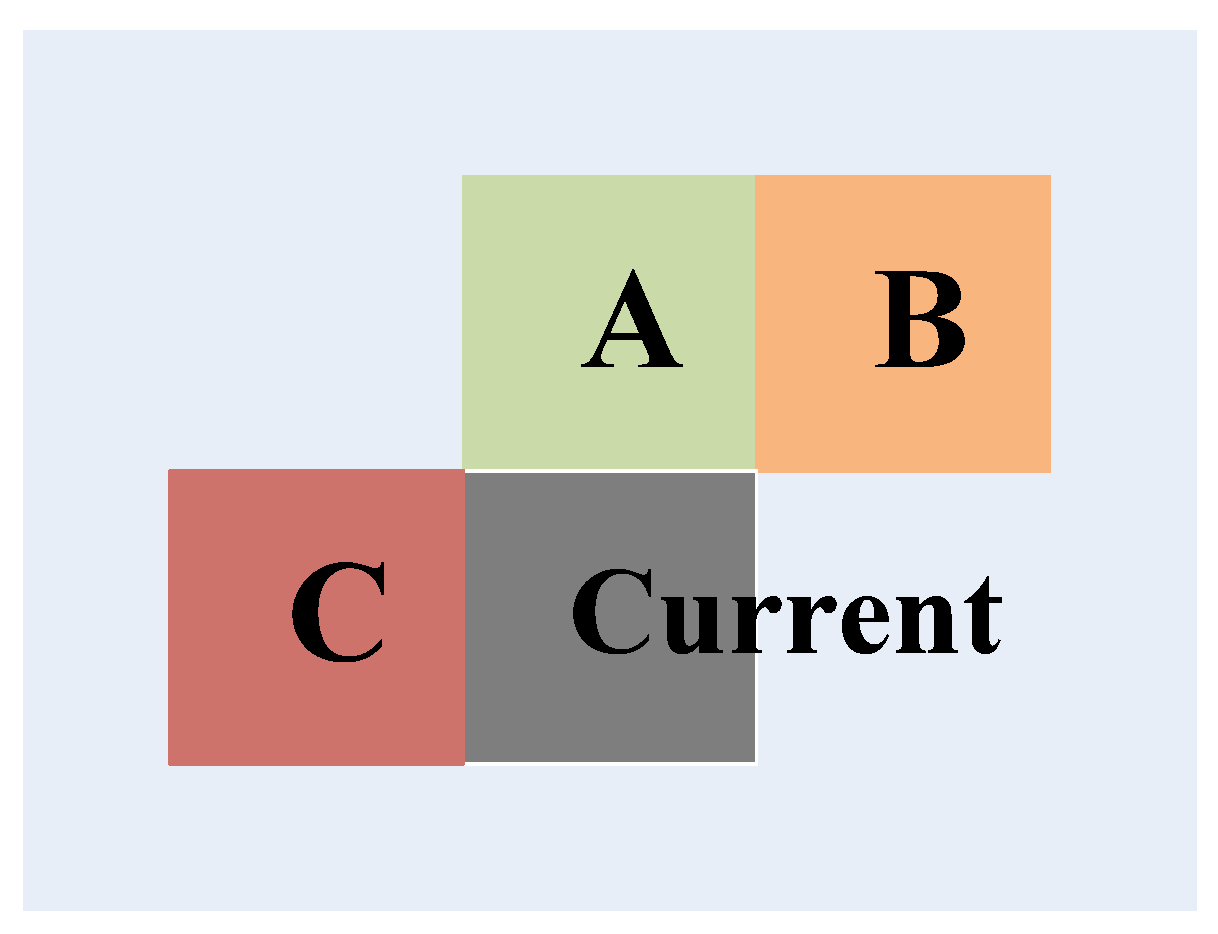

3.2. Efficient Distortion Estimation Based on Spatial Correlation

3.3. The Overall Algorithm

| Algorithm 1 Proposed fast CU size decision method for the low complexity H.265/HEVC encoder. |
|---|
| Input: CTU size = 64 × 64, the maximum quadtree depth level = 4 |
| for Depth level = 0 to 3 do |
| Encode the current CU with the Merge/Skip mode |
| Encode the current CU with the inter-2N×2N mode |
| if then |
| The predictive distortion of the remaining inter-prediction modes is obtained by Equation (4) and Equation (5) |
| else |
| The predictive distortion of the remaining inter-prediction modes is achieved by the original motion estimation |
| end if |
| if then |
| The CU size decision process is terminated |
| else |
| Encode the current CTU with the next quadtree depth level |
| end if |
| Output: The best CU quadtree depth level |
| end for |
| Process the next CTU |
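
The control flow of Algorithm 1 can be sketched in Python as follows. This is a hedged illustration, not the authors' implementation. The two `if` conditions are not recoverable from the listing, so they are passed in as hypothetical predicates (`use_estimated_distortion` and `should_terminate`). The encoding steps are likewise hypothetical callbacks, and the rate-distortion comparison that selects the best depth is not modelled. The function only records which depth levels are visited and which distortion path is taken at each.

```python
from dataclasses import dataclass
from typing import Callable, List

MAX_DEPTH_LEVELS = 4  # depth levels 0..3 for a 64x64 CTU, as in Algorithm 1


@dataclass
class DepthVisit:
    depth: int
    distortion_source: str  # "estimated" (Eqs. (4)-(5)) or "motion_estimation"


def fast_cu_depth_decision(
    encode_merge_skip: Callable[[int], None],         # hypothetical callback
    encode_inter_2nx2n: Callable[[int], None],        # hypothetical callback
    use_estimated_distortion: Callable[[int], bool],  # stands in for the first "if"
    should_terminate: Callable[[int], bool],          # stands in for the second "if"
) -> List[DepthVisit]:
    """Walk the quadtree depth levels of one CTU, following Algorithm 1."""
    visited: List[DepthVisit] = []
    for depth in range(MAX_DEPTH_LEVELS):
        # Merge/Skip and inter-2Nx2N are always evaluated first.
        encode_merge_skip(depth)
        encode_inter_2nx2n(depth)

        # Remaining inter modes: estimated distortion or full motion estimation.
        if use_estimated_distortion(depth):
            source = "estimated"
        else:
            source = "motion_estimation"
        visited.append(DepthVisit(depth, source))

        # Early termination of the CU size decision.
        if should_terminate(depth):
            break
    return visited


# Usage with placeholder predicates chosen only for illustration.
calls = []
result = fast_cu_depth_decision(
    encode_merge_skip=lambda d: calls.append(("merge_skip", d)),
    encode_inter_2nx2n=lambda d: calls.append(("inter_2Nx2N", d)),
    use_estimated_distortion=lambda d: d == 0,
    should_terminate=lambda d: d == 1,
)
print([(visit.depth, visit.distortion_source) for visit in result])
```

Passing the conditions as callables keeps the sketch honest about what the listing leaves unspecified: any concrete test can be plugged in without changing the loop structure.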

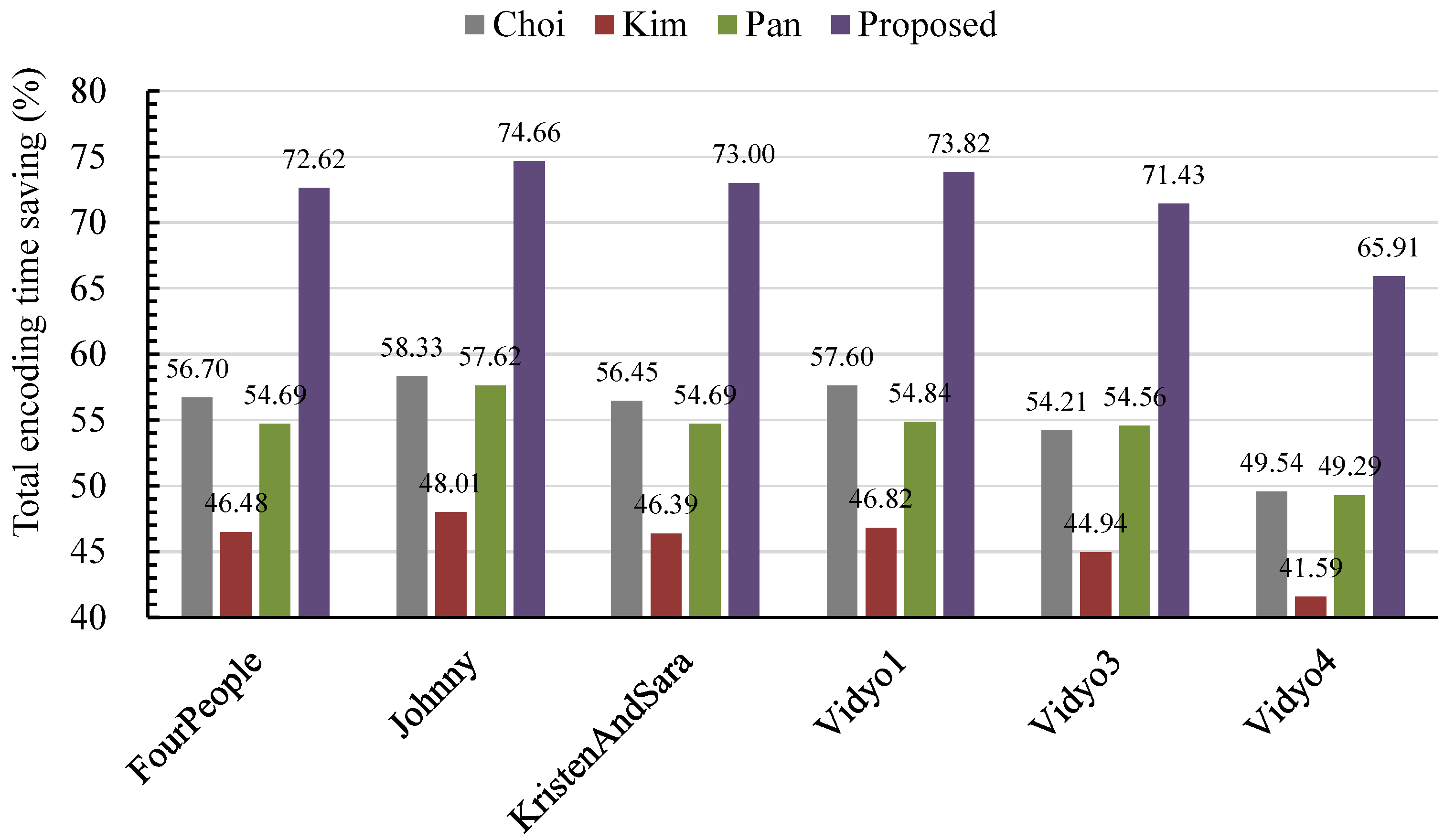

4. Experimental Results

| Sequence | QP | Choi [11] vs. HM ΔPSNR (dB) | Choi [11] vs. HM ΔBR (%) | Choi [11] vs. HM ΔT (%) | Kim [20] vs. HM ΔPSNR (dB) | Kim [20] vs. HM ΔBR (%) | Kim [20] vs. HM ΔT (%) | Pan [12] vs. HM ΔPSNR (dB) | Pan [12] vs. HM ΔBR (%) | Pan [12] vs. HM ΔT (%) | Proposed vs. HM ΔPSNR (dB) | Proposed vs. HM ΔBR (%) | Proposed vs. HM ΔT (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FourPeople | 22 | −0.030 | −1.32 | −41.84 | −0.008 | 0.07 | −35.70 | −0.014 | −0.08 | −39.27 | −0.034 | −1.13 | −58.04 |
| | 27 | −0.039 | −1.28 | −55.15 | −0.013 | −0.41 | −45.36 | −0.027 | 0.15 | −52.35 | −0.041 | −0.99 | −71.59 |
| | 32 | −0.025 | −0.65 | −62.45 | 0.001 | −0.12 | −50.67 | −0.029 | 0.81 | −60.81 | −0.047 | −0.57 | −78.38 |
| | 37 | −0.029 | −0.62 | −67.37 | −0.012 | 0.04 | −54.20 | −0.019 | −0.38 | −66.34 | −0.063 | 0.06 | −82.46 |
| | Average | −0.004 | 0.09 | −56.70 | −0.002 | 0.06 | −46.48 | −0.011 | 0.34 | −54.69 | −0.021 | 0.61 | −72.62 |
| Johnny | 22 | −0.024 | −1.22 | −41.67 | −0.010 | −0.52 | −36.45 | −0.019 | −0.32 | −39.61 | −0.032 | −1.04 | −58.17 |
| | 27 | −0.030 | −1.16 | −56.50 | −0.014 | −0.21 | −46.92 | −0.016 | −0.57 | −56.67 | −0.047 | −1.43 | −74.11 |
| | 32 | −0.046 | −1.21 | −64.98 | −0.029 | −0.35 | −52.46 | −0.042 | −0.53 | −65.08 | −0.064 | −1.62 | −81.09 |
| | 37 | −0.039 | −1.30 | −70.17 | −0.026 | −0.43 | −56.22 | −0.021 | −0.38 | −69.12 | −0.075 | −1.26 | −85.26 |
| | Average | −0.003 | 0.22 | −58.33 | −0.009 | 0.44 | −48.01 | −0.008 | 0.57 | −57.62 | −0.017 | 0.69 | −74.66 |
| KristenAndSara | 22 | −0.035 | −1.12 | −41.23 | −0.010 | −0.28 | −35.41 | −0.014 | −0.08 | −39.27 | −0.047 | −1.08 | −57.91 |
| | 27 | −0.047 | −1.44 | −54.02 | −0.014 | −0.09 | −44.77 | −0.027 | 0.15 | −52.35 | −0.072 | −2.35 | −71.39 |
| | 32 | −0.050 | −1.99 | −62.69 | −0.013 | 0.00 | −50.86 | −0.029 | 0.81 | −60.81 | −0.079 | −1.59 | −79.14 |
| | 37 | −0.053 | −1.27 | −67.84 | −0.012 | 0.19 | −54.51 | −0.019 | −0.38 | −66.34 | −0.089 | −0.84 | −83.58 |
| | Average | −0.004 | 0.01 | −56.45 | −0.012 | 0.39 | −46.39 | −0.031 | 1.15 | −54.69 | −0.019 | 0.68 | −73.00 |
| Vidyo1 | 22 | −0.034 | −1.40 | −43.63 | −0.003 | 0.21 | −36.67 | −0.014 | −0.36 | −40.42 | −0.042 | −1.51 | −59.66 |
| | 27 | −0.042 | −1.28 | −55.67 | −0.013 | −0.22 | −45.59 | −0.026 | −0.82 | −52.61 | −0.056 | −0.78 | −72.37 |
| | 32 | −0.043 | −1.06 | −62.99 | −0.024 | −0.46 | −50.79 | −0.027 | −0.40 | −61.17 | −0.063 | −0.57 | −79.38 |
| | 37 | −0.036 | −1.40 | −68.12 | −0.022 | −0.76 | −54.25 | −0.023 | 0.12 | −65.18 | −0.070 | −0.64 | −83.88 |
| | Average | 0.033 | −0.98 | −57.60 | 0.030 | −0.90 | −46.82 | −0.009 | 0.29 | −54.84 | 0.033 | −1.02 | −73.82 |
| Vidyo3 | 22 | −0.038 | −0.80 | −40.47 | −0.010 | −0.16 | −34.68 | −0.017 | −0.29 | −39.41 | −0.053 | −1.11 | −57.07 |
| | 27 | −0.045 | −0.69 | −51.98 | −0.020 | 0.06 | −43.51 | −0.025 | −0.05 | −51.86 | −0.061 | −0.40 | −69.79 |
| | 32 | −0.059 | −0.84 | −59.36 | −0.041 | −0.30 | −49.01 | −0.036 | 0.52 | −60.94 | −0.114 | −0.39 | −76.91 |
| | 37 | −0.071 | −0.75 | −65.03 | −0.017 | 0.69 | −52.55 | −0.034 | 0.39 | −66.03 | −0.112 | 0.16 | −81.96 |
| | Average | −0.024 | 0.70 | −54.21 | −0.025 | 0.68 | −44.94 | −0.032 | 1.04 | −54.56 | −0.064 | 1.98 | −71.43 |
| Vidyo4 | 22 | −0.029 | −0.69 | −32.54 | −0.008 | −0.09 | −29.06 | −0.015 | −0.26 | −33.82 | −0.030 | −0.72 | −51.27 |
| | 27 | −0.022 | −0.72 | −46.57 | −0.009 | −0.20 | −39.92 | −0.024 | −0.14 | −47.37 | −0.037 | −0.58 | −57.46 |
| | 32 | −0.026 | −0.38 | −56.17 | −0.011 | 0.29 | −46.56 | −0.016 | −0.03 | −55.37 | −0.041 | 0.70 | −74.86 |
| | 37 | −0.028 | −0.40 | −62.89 | −0.009 | 0.03 | −50.81 | −0.017 | 0.03 | −60.62 | −0.049 | 0.46 | −80.07 |
| | Average | −0.009 | 0.39 | −49.54 | −0.008 | 0.38 | −41.59 | −0.018 | 0.60 | −49.29 | −0.036 | 1.46 | −65.91 |
| Average | | −0.002 | 0.07 | −55.47 | −0.005 | 0.18 | −45.71 | −0.018 | 0.67 | −54.28 | −0.021 | 0.73 | −71.91 |
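
The overall ΔT figures in the last row can be reproduced from the per-sequence Average rows of the table above. The sketch below assumes that the overall value is the arithmetic mean of the six per-sequence averages, and that a negative ΔT denotes an encoding-time reduction relative to HM. Neither assumption is stated in the table itself. Values are copied verbatim and the dictionary names are illustrative.

```python
from statistics import mean

# Per-sequence "Average" ΔT (%) values, in table order:
# FourPeople, Johnny, KristenAndSara, Vidyo1, Vidyo3, Vidyo4.
delta_t_by_method = {
    "Choi [11]": [-56.70, -58.33, -56.45, -57.60, -54.21, -49.54],
    "Kim [20]": [-46.48, -48.01, -46.39, -46.82, -44.94, -41.59],
    "Pan [12]": [-54.69, -57.62, -54.69, -54.84, -54.56, -49.29],
    "Proposed": [-72.62, -74.66, -73.00, -73.82, -71.43, -65.91],
}

# Overall ΔT (%) as reported in the final "Average" row.
reported_overall = {
    "Choi [11]": -55.47,
    "Kim [20]": -45.71,
    "Pan [12]": -54.28,
    "Proposed": -71.91,
}

# Assumed aggregation: unweighted mean over the six sequences.
recomputed_overall = {
    method: mean(values) for method, values in delta_t_by_method.items()
}

for method, value in recomputed_overall.items():
    print(f"{method}: recomputed {value:.2f}, reported {reported_overall[method]:.2f}")
```

Under this assumption the recomputed means agree with the reported row to within rounding. The ΔPSNR and ΔBR averages do not follow the same pattern, so they are presumably computed differently and are not covered by this check.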

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Soro, S.; Heinzelman, W. A survey of visual sensor networks. Adv. Multimed. 2009, 2009, 1–21.

2. Costa, D.G.; Silva, I.; Guedes, L.A.; Vasques, F.; Portugal, P. Availability issues in wireless visual sensor networks. Sensors 2014, 14, 2795–2821.

3. Charfi, Y.; Wakamiya, N.; Murata, M. Challenging issues in visual sensor networks. IEEE Wirel. Commun. 2009, 2, 44–49.

4. Chung, Y.; Lee, S.; Jeon, T.; Park, D. Fast Video Encryption Using the H.264 Error Propagation Property for Smart Mobile Devices. Sensors 2015, 15, 7953–7968.

5. Costa, D.G.; Guedes, L.A. A survey on multimedia-based cross-layer optimization in visual sensor networks. Sensors 2011, 11, 5439–5468.

6. Xie, S.; Wang, Y. Construction of tree network with limited delivery latency in homogeneous wireless sensor networks. Wirel. Pers. Commun. 2014, 1, 231–246.

7. Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

8. ISO/IEC 23008-2:2013 ITU-T Rec. H.265: Information technology-High Efficiency Coding and Media Delivery in Heterogeneous Environments-Part 2: High Efficiency Video Coding; ISO: Geneva, Switzerland, 2013.

9. Pan, Z.; Kwong, S. A direction-based unsymmetrical-cross multi-hexagon-grid search algorithm for H.264/AVC motion estimation. J. Signal Process. Syst. 2013, 7, 59–72.

10. Lei, J.; Feng, K.; Wu, M.; Li, S.; Hou, C. Rate control of hierarchical B prediction structure for multi-view video coding. Multimed. Tools Appl. 2014, 72, 825–842.

11. Choi, K.; Park, S.-H.; Jang, E.S. Coding tree pruning based CU early termination. In Proceedings of the ITU-T/ISO/IEC Joint Collaborative Team on Video Coding (JCT-VC) Document JCTVC-F092, Torino, Italy, 14–22 July 2011.

12. Pan, Z.; Kwong, S.; Sun, M.-T.; Lei, J. Early MERGE mode decision based on motion estimation and hierarchical depth correlation for HEVC. IEEE Trans. Broadcast. 2014, 60, 405–412.

13. Pan, Z.; Kwong, S.; Zhang, Y.; Lei, J.; Yuan, H. Fast coding tree unit depth decision for high efficiency video coding. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 3214–3218.

14. Shi, H.; Fan, L.; Chen, H. A fast CU size decision algorithm based on adaptive depth selection for HEVC encoder. In Proceedings of the 2014 International Conference on Audio, Language and Image Processing (ICALIP), Shanghai, China, 7–9 July 2014; pp. 143–146.

15. Zhang, Y.; Kwong, S.; Wang, X.; Yuan, H.; Pan, Z.; Xu, L. Machine learning-based coding unit depth decisions for flexible complexity allocation in high efficiency video coding. IEEE Trans. Image Process. 2015, 24, 2225–2238.

16. Ahn, S.; Lee, B.; Kim, M. A novel fast CU encoding scheme based on spatiotemporal encoding parameters for HEVC inter coding. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 422–435.

17. Goswami, K.; Kim, B.; Jun, D.; Jung, S.; Choi, J. Early coding unit-splitting termination algorithm for High Efficiency Video Coding (HEVC). ETRI J. 2014, 36, 407–417.

18. Tian, G.; Goto, S. Content adaptive prediction unit size decision algorithm for HEVC intra coding. In Proceedings of the 2012 Picture Coding Symposium (PCS), Krakow, Poland, 7–9 May 2012; pp. 405–408.

19. Kim, M.; Ling, N.; Song, L.; Gu, Z. Fast skip mode decision with rate-distortion optimization for high efficiency video coding. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–6.

20. Kim, J.; Yang, J.; Won, K.; Jeon, B. Early determination of mode decision for HEVC. In Proceedings of the 2012 Picture Coding Symposium (PCS), Krakow, Poland, 7–9 May 2012; pp. 449–452.

21. Lee, H.; Shim, H.J.; Park, Y.; Jeon, B. Early Skip mode decision for HEVC encoder with emphasis on coding quality. IEEE Trans. Broadcast. 2015, 61, 388–397.

22. Ohm, J.-R.; Sullivan, G.J.; Schwarz, H.; Tan, T.K.; Wiegand, T. Comparison of the coding efficiency of video coding standards-including High Efficiency Video Coding (HEVC). IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1669–1684.

23. Bossen, F.; Flynn, D.; Suehring, K. JCT-VC AHG Report: HEVC HM Software Development and Software Technical Evaluation (AHG3); (JCT-VC) Document JCTVC-O0003; ITU-T/ISO/IEC Joint Collaborative Team on Video Coding: San Jose, CA, USA, 2013.

24. Pan, Z.; Lei, J.; Zhang, Y.; Yan, W.; Kwong, S. Fast transform unit depth decision based on quantized coefficients for HEVC. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Hong Kong, China, 9–12 September 2015; pp. 1127–1132.

25. Ma, L.; Li, S.; Ngan, K.N. Reduced-reference image quality assessment in reorganized DCT domain. Signal Process. Image Commun. 2013, 28, 884–902.

26. Ma, L.; Ngan, K.N.; Zhang, F.; Li, S. Adaptive block-size transform based just-noticeable difference model for images/videos. Signal Process. Image Commun. 2011, 26, 162–174.

27. Ma, L.; Li, S.; Zhang, F.; Ngan, K.N. Reduced-reference image quality assessment using reorganized DCT-based image representation. IEEE Trans. Multimed. 2011, 13, 824–829.

28. Chen, Z.; Xu, J.; He, Y.; Zheng, J. Fast inter-pel and fractional-pel motion estimation for H.264/AVC. J. Vis. Commun. Image Represent. 2007, 17, 264–290.

29. Pan, Z.; Kwong, S.; Xu, L.; Zhang, Y.; Zhao, T. Predictive and distribution-oriented fast motion estimation for H.264/AVC. J. Real-Time Image Proc. 2014, 4, 597–607.

30. Bjontegaard, G. Calculation of Average PSNR Differences between RD-Curves. In Proceedings of the ITU-T Video Coding Experts Group (VCEG) Thirteenth Meeting, Austin, TX, USA, 2–4 April 2001.

31. Pan, Z.; Zhang, Y.; Kwong, S. Efficient motion and disparity estimation optimization for low complexity multiview video coding. IEEE Trans. Broadcast. 2015, 61, 166–176.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Pan, Z.; Chen, L.; Sun, X. Low Complexity HEVC Encoder for Visual Sensor Networks. Sensors 2015, 15, 30115-30125. https://doi.org/10.3390/s151229788
