Inertial Sensor Self-Calibration in a Visually-Aided Navigation Approach for a Micro-AUV

Abstract

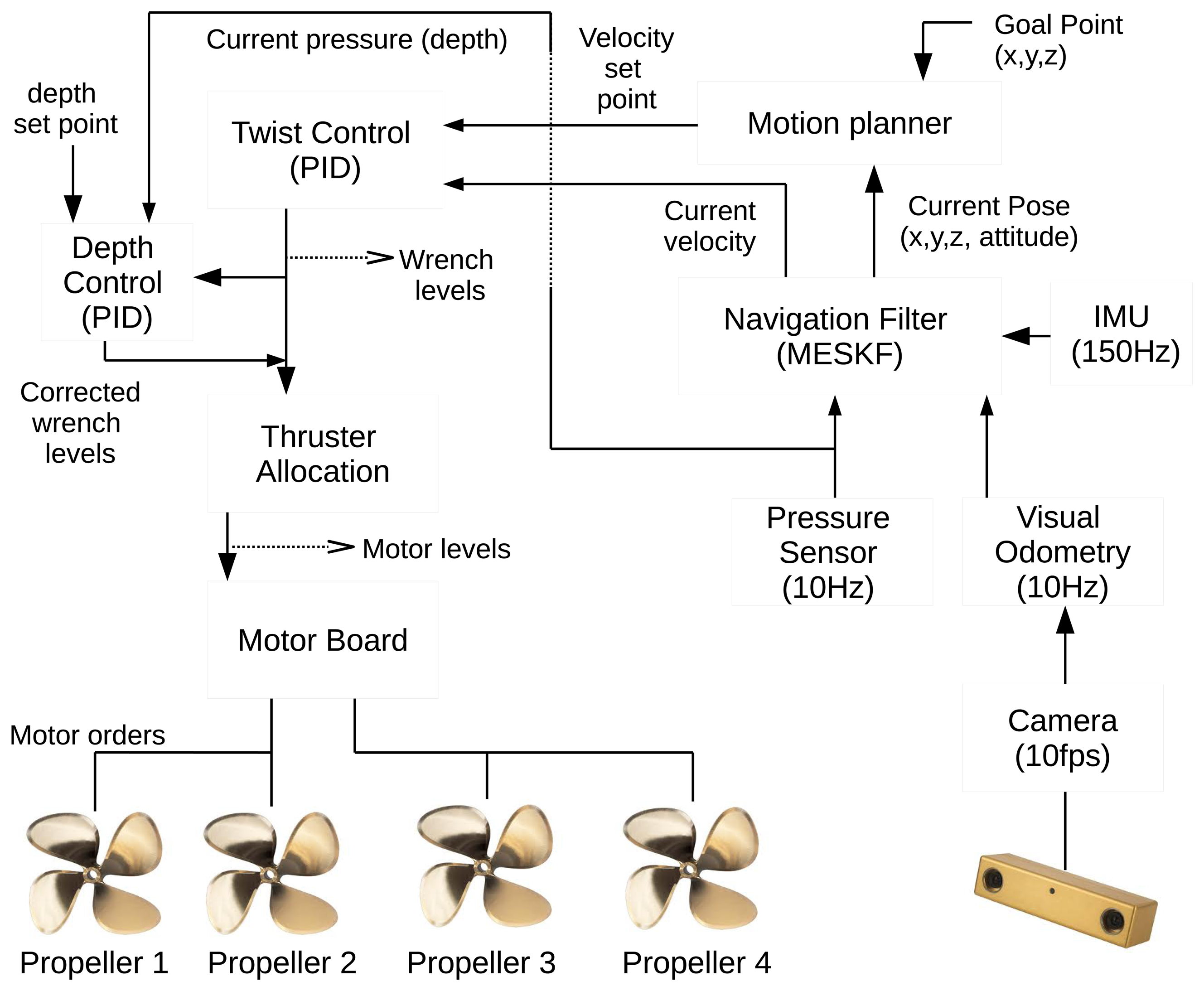

This paper presents a new solution for underwater observation, image recording, mapping and 3D reconstruction in shallow waters. The platform, designed as a research and testing tool, is based on a small underwater robot equipped with a MEMS-based IMU, two stereo cameras and a pressure sensor. The sensor data are fused, adjusted and corrected in a multiplicative error state Kalman filter (MESKF), which returns a single vector with the pose and twist of the vehicle and the biases of the inertial sensors (the accelerometer and the gyroscope). Including these biases in the state vector permits their self-calibration and stabilization, improving the estimates of the robot orientation. Experiments in controlled underwater scenarios and in the sea have demonstrated satisfactory performance and the capacity of the vehicle to operate in real environments and in real time.

1. Introduction and Related Work

In the last decade, the technology applied to autonomous underwater vehicles (AUVs) has developed remarkably. Scientific, industrial and security applications related to remote observation, object identification, wreck retrieval, mapping and rescue, among others, have driven these advances. AUVs are progressively becoming fundamental tools for underwater missions, minimizing human intervention in critical situations and automating as many procedures as possible. Despite this growing interest and the large number of vehicles developed worldwide, their cost, size, weight and depth rating are the main factors restricting their commercialization and widespread use.

Compared to their larger counterparts, micro-AUVs are smaller, lighter and cheaper to acquire and maintain. These features make small AUVs a valuable tool for exploring and mapping shallow waters or cluttered aquatic environments in a wide variety of applications. Conversely, the reduced size of these vehicles seriously limits the equipment they can carry; thus, they must be endowed with a reduced sensor set that simultaneously meets navigation and mission requirements.

The literature on this kind of compact system is scarce. Smith et al. [1] proposed a micro-AUV to perform inspection, hydrographic surveying and object identification in shallow waters. Watson et al. [2] detail the design of a micro-AUV intended to work in swarms, forming mobile underwater sensor networks for monitoring nuclear storage ponds. Its hull consists of a hemisphere 150 mm in diameter, and it contains several mini acoustic sensors, a pressure sensor, a gyroscope and a magnetic compass. Wick et al. [3] presented a miniature AUV for minefield mapping that can work alone or in groups to perform cooperative activities. This vehicle is 60 cm long and 9 cm in diameter, and it is equipped with a compass, a gyroscope, an accelerometer and a pressure sensor. Both vehicles ([2,3]) have limited data storage capacity and are mostly designed to transmit the collected information regularly to an external unit.

Some micro-AUVs incorporate cameras inside their hull, but to the best of our knowledge, none of them has been equipped with two stereo rigs. For instance, Heriot Watt University designed mAUVe [4], a prototype micro-AUV that weighs only 3.5 kg and is equipped with an inertial unit, a pressure sensor and a camera. Some mini-AUVs currently in operation use vision as an important sensor, but all of them are larger and heavier (for instance, DAGON [5], REMUS [6] or SPARUS-II [7]).

In micro-AUVs, navigation, guidance and control modules are usually approached from the point of view of hydrodynamic and kinematic models, since the power of the thrusters is limited by their small volume and light weight, and these vehicles are easily influenced by waves or currents [8,9]. These models generate complex non-linear systems of equations that usually depend on the structure of the vehicle (mass and shape) and on the external forces acting on it (gravity, buoyancy, hydrodynamics, thrusters and fins) [10]. As a consequence, the models have to be reconsidered whenever a significant modification is made to the vehicle, adding considerable complexity to the system without always producing the expected results [11]. Furthermore, optimizing the control system with respect to its propulsion infrastructure and its hydrodynamic behavior is unavoidable, especially if the energy storage and the power supply are limited [12], since an inefficient control strategy reduces the vehicle autonomy. Alternatively, AUV navigation can be performed by feeding the data provided by proprioceptive and exteroceptive sensors to an adaptive control unit, which continuously estimates the state of the vehicle (pose and twist) regardless of its shape, size and the set of external forces acting on it. Assuming general motion models and using inertial measurements to infer the dynamics of the vehicle simplifies the system, facilitates its application to different robots and makes it dependent on the sensor suite rather than on the robot itself [13]. A remarkable approach for cooperative localization of a swarm of underwater robots with respect to a GPS-based geo-referenced surface vehicle is detailed in [14,15]. The algorithm applies a geometric method based on a tetrahedral configuration; all AUVs are assumed to be equipped with low-cost inertial measurement units (IMUs), a compass and a depth sensor, but only one of them carries a Doppler velocity log (DVL).

The size and price of the equipment have to be optimized when designing micro-AUVs. Low-cost sensors generally provide poor-quality measurements, and the resulting errors and drifts in position and orientation can compromise the mission. In this case, software algorithms that accurately infer the vehicle pose and twist become critical, since small errors in orientation can cause significant drifts in position after a certain time.

Although the vehicle global pose could be inferred by integrating the data provided by an inertial navigation system (INS), in practice, due to its considerable drift, this information is only valid for estimating motion over a short time interval. Thus, INSs are usually assisted by a suite of other exteroceptive sensors with varying sampling frequencies and resolutions.
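As a rough, textbook-style illustration of why an unaided INS drifts (not a model of the Fugu-C sensors), the sketch below assumes a constant, uncorrected tilt error θ. The accelerometer then leaks a spurious horizontal component g·sin θ of gravity, and integrating it twice from rest gives a position error of ½·g·sin θ·t². The 0.5° error and the time spans are arbitrary example values.

```python
import math

G = 9.81  # nominal gravity magnitude in m/s^2 (assumed)


def tilt_position_drift(tilt_error_deg: float, seconds: float) -> float:
    """Horizontal position error (m) caused by a constant, uncorrected tilt error.

    The tilt error makes the accelerometer report a spurious horizontal
    acceleration g*sin(theta); integrating it twice from rest gives
    0.5 * g * sin(theta) * t^2.
    """
    spurious_accel = G * math.sin(math.radians(tilt_error_deg))
    return 0.5 * spurious_accel * seconds ** 2


if __name__ == "__main__":
    for t in (10, 60, 300):
        print(f"0.5 deg tilt error after {t:3d} s -> {tilt_position_drift(0.5, t):8.1f} m")
```

Even a 0.5° tilt error yields roughly 4 m of drift after 10 s and over 150 m after one minute, which is why the inertial estimates have to be corrected by aiding sensors.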

A complementary filter [16] could help smooth the orientation data given by the gyroscope and the accelerometer, and the remaining position/velocity variables could be taken directly from the raw data of the aiding sensors. However, merging the data of multiple navigation sensors in an extended Kalman filter (EKF) goes one step further, since the EKF takes into account the motion (dynamic) model of the system, corrects the inertial estimates with external measurements and considers the uncertainties of all of these variables. Furthermore, EKFs are a widely used solution in autonomous robots [17]. Strategies based on Kalman filters also permit continuous control of the vehicle despite the different rates at which the various sensors provide measurements, even if, for example, one of those sensors becomes temporarily unavailable.
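A complementary filter of the kind mentioned above can be sketched for a single angle (pitch): the integrated gyro rate is trusted at high frequency and the gravity direction measured by the accelerometer at low frequency. This is a generic illustration rather than the filter of [16]; the blending factor α = 0.98, the axis sign convention and the demo values are assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (rad) implied by the gravity direction seen by the accelerometer.

    The sign convention depends on the IMU axes; this one is an assumption.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(pitch, gyro_rate, accel, dt, alpha=0.98):
    """One filter step: integrate the gyro (trusted at high frequency) and
    blend in the accelerometer angle (trusted at low frequency)."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)


def run_static_bias_demo(bias=0.01, dt=0.01, steps=10_000, alpha=0.98):
    """Level, motionless IMU whose gyro has a constant bias (rad/s).

    Returns (pure gyro integration, complementary-filter estimate) after `steps` samples.
    """
    integrated, filtered = 0.0, 0.0
    for _ in range(steps):
        integrated += bias * dt
        filtered = complementary_pitch(filtered, bias, (0.0, 0.0, 9.81), dt, alpha)
    return integrated, filtered
```

In the demo, integrating a gyro with a 0.01 rad/s bias for 100 s drifts by 1 rad, while the complementary estimate settles at about α·b·dt/(1 − α) ≈ 0.005 rad. The residual offset remains because this filter does not estimate the bias, which is one motivation for including the inertial biases in the state of a Kalman filter.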

The error state formulation of Kalman filters (ESKF), also called the indirect Kalman filter, deals separately with the nominal variables and their errors. Although the classical EKF formulation and an ESKF should lead to similar results, the latter yields lower state covariance values, and the error variables vary much more slowly than the nominal navigation data [18,19], so they are better represented by linear models. Consequently, ESKFs are especially suitable for, but not limited to, vehicles with six degrees of freedom (DOF) and fast dynamics, providing high stability in navigation [20,21]. Besides, ESKF formulations permit continuous estimation of the vehicle pose by integrating the INS data in case either the filter or all of the aiding sensors fail.
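The split between nominal and error variables can be illustrated with a deliberately reduced, one-dimensional sketch in which depth is the only state and the pressure sensor observes it directly. It is not the Fugu-C MESKF: the class name, the noise values and the scalar form are hypothetical. The nominal depth is propagated with the motion model, the update estimates only the error, the error is injected into the nominal value, and the error state then restarts from zero.

```python
from dataclasses import dataclass


@dataclass
class DepthESKF:
    """Toy 1D error-state Kalman filter for depth (hypothetical, illustration only)."""
    z: float          # nominal depth (m)
    P: float          # variance of the depth error (m^2)
    q: float = 1e-3   # process-noise variance added per prediction (assumed)

    def predict(self, vz: float, dt: float) -> None:
        """Propagate the nominal depth; only the error variance grows."""
        # The error state is zero after every reset, so its mean needs no propagation.
        self.z += vz * dt
        self.P += self.q

    def update(self, z_meas: float, r: float) -> float:
        """Pressure-sensor update with a direct observation of the error (H = 1)."""
        innovation = z_meas - self.z       # measured minus nominal depth
        k = self.P / (self.P + r)          # Kalman gain
        dz = k * innovation                # estimated depth error
        self.z += dz                       # inject the error into the nominal state
        self.P *= (1.0 - k)                # posterior variance; the reset Jacobian is 1 here
        return dz                          # the error state then restarts from zero
```

For example, starting at z = 0 m with P = 1 m², predicting with 0.5 m/s for 1 s and then updating with a precise 1.0 m pressure reading moves the nominal depth to almost exactly 1.0 m and shrinks the error variance to about 1e-4 m².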

This paper offers, as a main contribution, a detailed explanation of the structure, the sensorial equipment and the main navigation software components of Fugu-C, a new micro-AUV developed by Systems, Robotics and Vision, a research group of the University of the Balearic Islands (UIB). Fugu-C was initially developed as a testing platform for research developments, but it rapidly became a truly useful tool for underwater image recording, observation, environment 3D reconstruction and mosaicking in shallow waters and in aquatic environments of limited space.

The goal was to implement a vehicle fulfilling a set of key requirements that differentiate it from other micro-AUVs offering some of the same facilities. Fugu-C has the size and weight advantages of a micro-AUV, but with additional strengths typical of larger vehicles.

Its most important features are:

- (1)

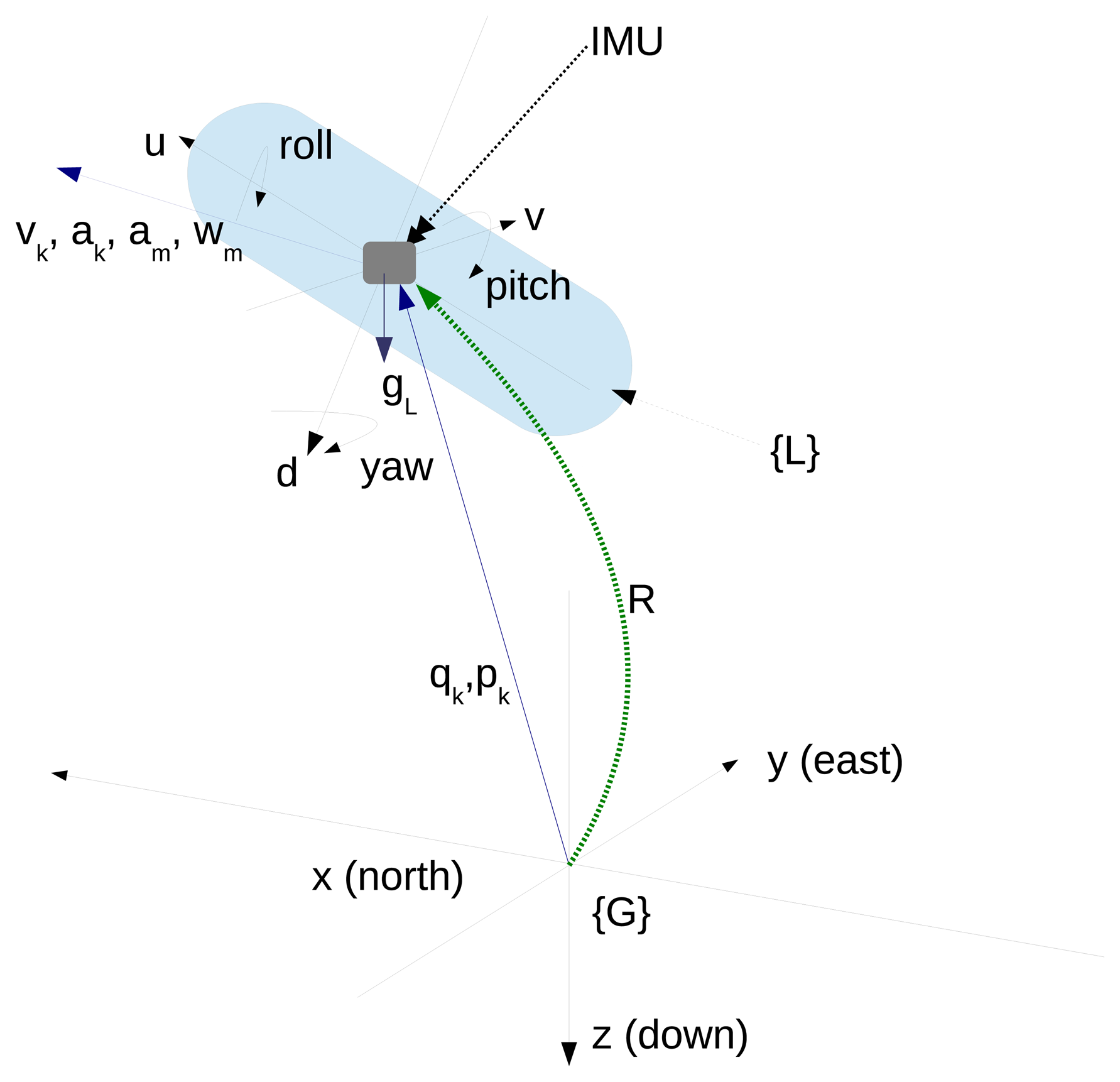

The navigation and guidance modules rely solely on the sensor suite. The vehicle is equipped with two stereo rigs, one IMU and a pressure sensor. This paper pays special attention to the navigation filter used in Fugu-C to fuse the data coming from all of the navigation sensors. The filter is a particularization of an ESKF approach and includes some strategic points present in similar solutions described in [21–24]. Similarly to [21], the state vector includes not only the pose and velocity of the vehicle, but also the biases of the inertial sensors, permitting a rapid compensation of these systematic errors, which would otherwise accumulate in the estimates of the vehicle orientation. As in all of the aforementioned works, this is a multiplicative filter, which means that all orientations are expressed as quaternions, avoiding errors due to attitude singularities. Unlike other similar references, this filter has a general design prepared to work with vehicles with slow or fast dynamics (e.g., AUVs or unmanned aerial vehicles (UAVs), respectively). Similarly to [23], the error state is reset after each filter iteration, avoiding the propagation of two state vectors and limiting the covariance variations to the error dynamics. While the other referenced ESKF-based solutions assume non-linear and sometimes complex observation models, we propose to simplify the definition of the predicted measurement errors in the Kalman update stage by applying a linearized observation function that returns the same values contained in the error state vector. The experimental results shown in Section 5 validate our proposal. Furthermore, the C++ implementation and its wrapper for the Robot Operating System (ROS, [25]) are published in a public repository for use and evaluation [26].

- (2)

Two stereo cameras point in orthogonal directions, covering wider portions of the environment simultaneously. AQUASENSOR [27] is a very similar video system for underwater imaging. It can be attached to an underwater vehicle but, unlike Fugu-C, it has only one stereo rig connected to the same hardware structure. The spatial configuration of both cameras is very important to reconstruct larger parts of the environment in 3D with less exploration time and, thus, less effort. Furthermore, while the down-looking camera can be used for navigation and 3D reconstruction, the frontal one can be used for obstacle detection and avoidance.

- (3)

Fugu-C can be operated remotely or navigate autonomously following lawn-mowing paths at a constant depth or altitude. The autonomy of the vehicle is variable, depending on the capacity of the internal hard drive and the battery.

- (4)

Its computational capacity and internal memory exceed those of the other cited micro-AUVs, which mostly collect data and send them to external computers for processing.
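The multiplicative handling of orientation described in item (1) can be sketched as follows, assuming Hamilton quaternions (w, x, y, z) and an attitude error expressed as a small rotation vector in the body frame. The function names are hypothetical, and the frame in which the actual filter composes the error is an assumption of this sketch. After the correction is injected, the error is reset to zero, so only one full state needs to be propagated.

```python
import math

# Quaternions are (w, x, y, z) tuples in the Hamilton convention (assumed).


def quat_mul(a, b):
    """Hamilton product a ⊗ b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_from_rotvec(theta):
    """Unit quaternion for the rotation vector theta (axis times angle, in rad)."""
    tx, ty, tz = theta
    angle = math.sqrt(tx * tx + ty * ty + tz * tz)
    if angle < 1e-12:
        # First-order approximation for a vanishing rotation; normalized later.
        return (1.0, 0.5 * tx, 0.5 * ty, 0.5 * tz)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), s * tx, s * ty, s * tz)


def normalize(q):
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


def inject_attitude_error(q_nominal, dtheta):
    """Correct the nominal attitude with the estimated error and reset the error.

    q <- q ⊗ q(dtheta), i.e. the error is treated as a local (body-frame) rotation.
    Returns the corrected, renormalized quaternion and the reset error vector.
    """
    q = normalize(quat_mul(q_nominal, quat_from_rotvec(dtheta)))
    return q, (0.0, 0.0, 0.0)
```

Applying two 0.1 rad corrections about z to the identity attitude yields the quaternion of a 0.2 rad yaw, (cos 0.1, 0, 0, sin 0.1), and the quaternion stays unit-norm throughout.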

This work is organized as follows: Section 2 describes the Fugu-C hardware and software platforms. Section 3 details the whole visual framework and its functionalities. Section 4 outlines the modular navigation architecture and the detailed design of the error state Kalman filter. Section 5 presents experimental results concerning navigation and 3D reconstruction, and finally, Section 6 draws some conclusions about the presented work and outlines forthcoming work.

2. Platform Description

2.1. Hardware

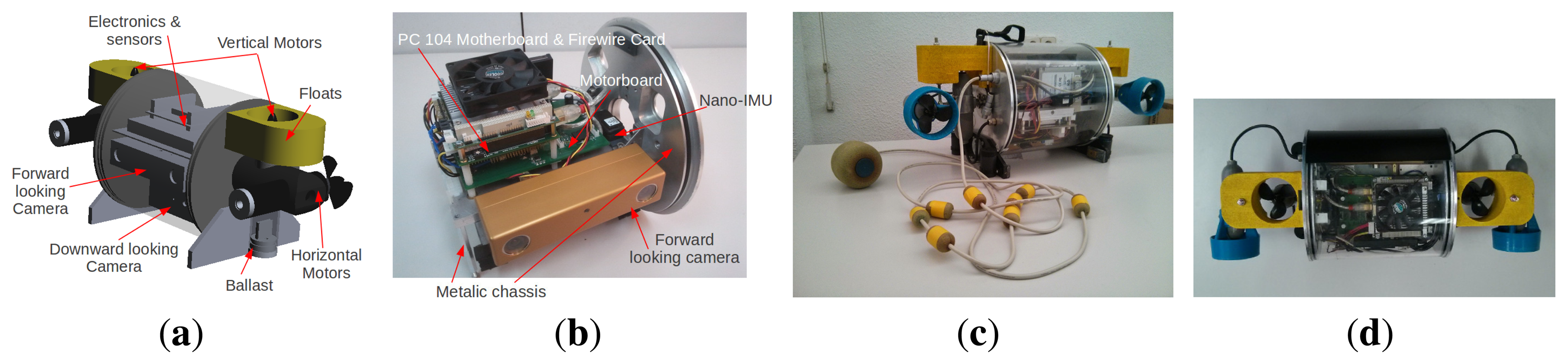

The Fugu-C structure consists of a cylindrical, transparent, sealed housing containing all of the hardware, and four propellers, two horizontal and two vertical. The cylinder measures ø212 × 209 mm, and the motor supports are 120 mm long. Although the propeller configuration could provide the vehicle with four DOF (surge, heave, roll and yaw), its dynamics make it almost passively stable in roll and pitch. Consequently, the robot has three practical DOF: surge, heave and yaw (x, z, yaw).

Figure 1a shows the 3D CAD model of the vehicle, Figure 1b shows the hardware and the supporting structure, and Figure 1c,d show two views of the final result.

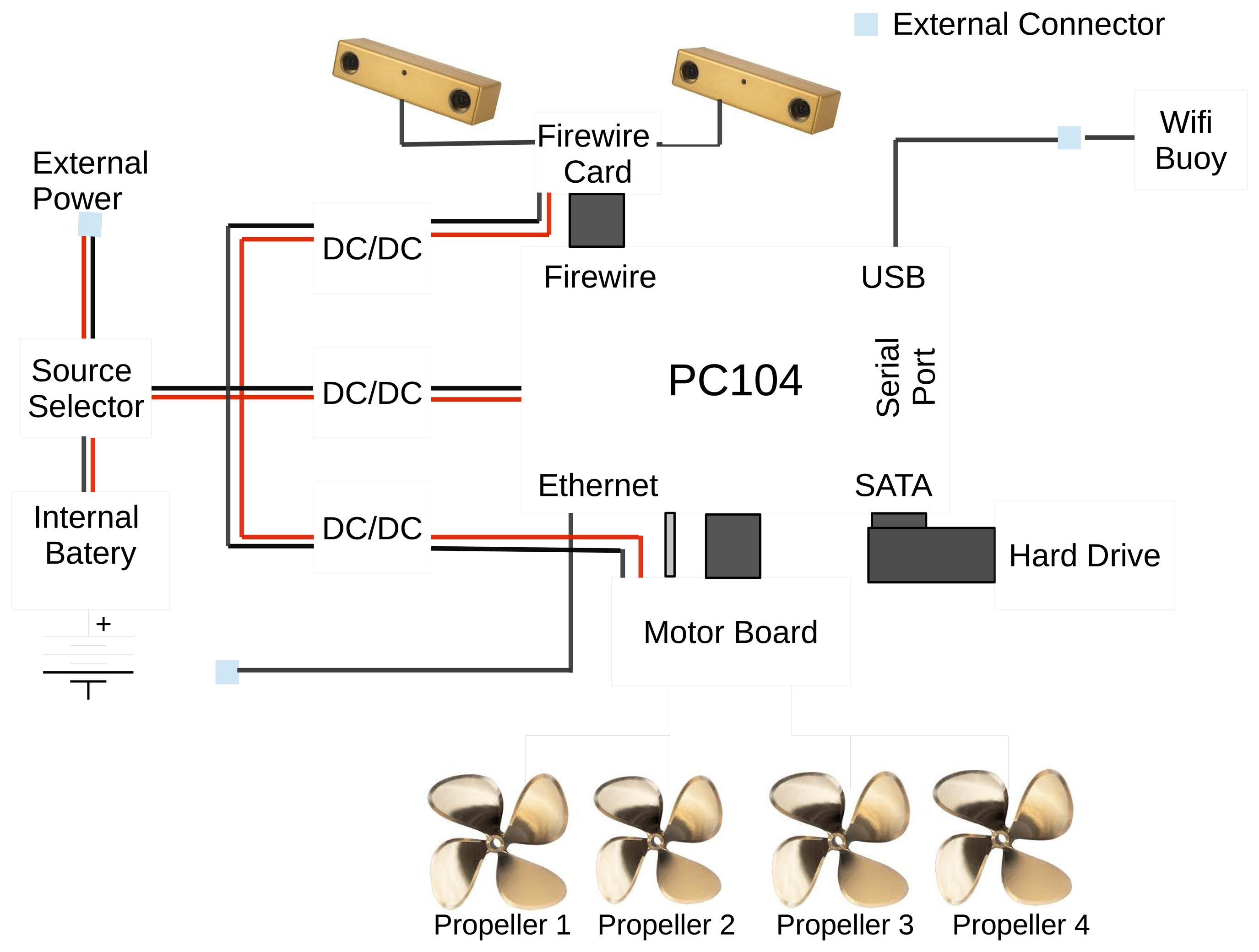

Fugu-C can operate as an AUV, but it can also be teleoperated as a remotely operated vehicle (ROV) with a low-cost joystick. Its hardware includes (see Figure 2):

- Two IEEE-1394a stereo cameras: one oriented toward the bottom, whose lenses provide a 97° horizontal field of view (HFOV), and another oriented forward with a 66° HFOV.
- A 95-Wh polymer Li-Ion battery pack. With this battery, Fugu-C has an autonomy of about 3 h, depending on how much processing is done during the mission. For an autonomous mission with self-localization, the autonomy is reduced to about two hours.
- A PC104 board based on an Atom N450 at 1.66 GHz with a 128-GB 2.5-inch SSD.
- A three-port FireWire 800 PC104 card to connect the cameras to the main computer board.
- A nano IMU from Memsense, which provides triaxial acceleration, angular rate (gyro) and magnetic field data [28].
- A power supply management card, which permits turning the system on/off, charging the internal battery and selecting between the external power supply and the internal battery.
- A microcontroller-based serial motor board that manages several water leak detectors, a pressure sensor and four DC motor drivers.
- An external buoy containing a USB WiFi antenna for wireless access from external computers.
- A set of DC-DC converters to supply independent power to the different robot modules.
- Four geared, brushed DC motors with a nominal speed of 1170 rpm and a torque of 0.54 kg·cm.

A 16-pin watertight socket provides connectivity for wired Ethernet, external power, battery charging and the WiFi buoy. Note that not all connections are needed during an experiment: if the wired Ethernet is used (tethered ROV), the buoy is not connected; alternatively, if the buoy is connected (wireless ROV/AUV), the wired Ethernet remains disconnected.

The autonomy of the robot when operating in AUV mode with the current battery has been calculated taking into account that its average power consumption at maximum processing load (all sensors on, including both cameras, and all of the electronics and drivers operative) with the motors engaged is around 44.5 W, so a fully charged battery would last (96 W·h)/(44.5 W) ≈ 2.16 h. If two LED bulbs (10 W each) had to be attached to the vehicle enclosure to operate in poorly illuminated environments, the power consumption would increase to 64.5 W and the battery would last (96 W·h)/(64.5 W) ≈ 1.49 h.
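The autonomy arithmetic above can be reproduced with a simple model that divides battery energy by average load. It ignores conversion losses and battery ageing, and it reuses the 96 W·h capacity and the load figures quoted in the calculation; the constant names are illustrative.

```python
def autonomy_hours(battery_wh: float, load_w: float) -> float:
    """Ideal runtime (h) of a battery of the given energy at a constant average load."""
    return battery_wh / load_w


BASE_LOAD_W = 44.5       # all sensors, electronics and motors engaged (from the text)
LED_LOAD_W = 2 * 10.0    # two 10 W LED bulbs (from the text)

print(f"without lights: {autonomy_hours(96, BASE_LOAD_W):.2f} h")
print(f"with lights:    {autonomy_hours(96, BASE_LOAD_W + LED_LOAD_W):.2f} h")
```

Any further payload can be evaluated the same way by adding its power draw to the load term.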

The robot can operate in shallow waters up to 10 m in depth. However, another version for deeper waters could be made by thickening the acrylic cylinder.

Experiments showed an average speed in surge of 0.5 m/s and an average speed in heave of 1 m/s.

2.2. Software

All of the software was developed with and runs on the ROS middleware [25] under GNU/Linux. Using ROS eases software integration and interaction, improves maintainability and sharing and strengthens the data integrity of the whole system. The ROS packages installed in Fugu-C can be classified into three categories: internal state management functions, vision functions and navigation functions.

The internal state management functions continuously check the state of the water leak sensors, the temperature sensor and the power level. The activation of any of these three alarms causes the system to publish an alarm on a ROS topic, terminate all running software and shut down the horizontal motors, while activating the vertical ones to bring the vehicle quickly to the surface.
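The alarm logic can be sketched as pure functions over a snapshot of the monitored values. The thresholds, field names and normalized thrust commands below are hypothetical, since the text does not give them; in Fugu-C the reaction also involves publishing the alarm on a ROS topic and terminating the running software, which this sketch omits.

```python
from dataclasses import dataclass

# Hypothetical thresholds: the text does not specify the actual values.
MAX_TEMPERATURE_C = 60.0
MIN_BATTERY_FRACTION = 0.10


@dataclass
class HealthStatus:
    """Snapshot of the three monitored conditions (field names are hypothetical)."""
    leak_detected: bool
    temperature_c: float
    battery_fraction: float


def check_alarms(status: HealthStatus) -> list:
    """Return the names of the active alarms (empty list if all is well)."""
    alarms = []
    if status.leak_detected:
        alarms.append("leak")
    if status.temperature_c > MAX_TEMPERATURE_C:
        alarms.append("temperature")
    if status.battery_fraction < MIN_BATTERY_FRACTION:
        alarms.append("power")
    return alarms


def emergency_motor_command(alarms: list) -> dict:
    """Normalized thruster set-points for emergency surfacing.

    Any active alarm stops the horizontal thrusters and drives the vertical ones
    at full upward thrust (+1.0 meaning 'up' is an assumed convention); with no
    alarm, an empty dict means 'no override'.
    """
    if not alarms:
        return {}
    return {"horizontal": 0.0, "vertical": 1.0}
```

Keeping the decision separate from the ROS plumbing makes the safety behaviour easy to test without the vehicle.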

The vision functions include image grabbing, stereo odometry, 3D point cloud computation and environment reconstruction, which are explained in Section 3.

The navigation functions include the MESKF for sensor fusion and pose estimation and all of the modules for twist and depth control, which are detailed in Section 4.

3. The Vision Framework

3.1. Image Grabbing and Processing

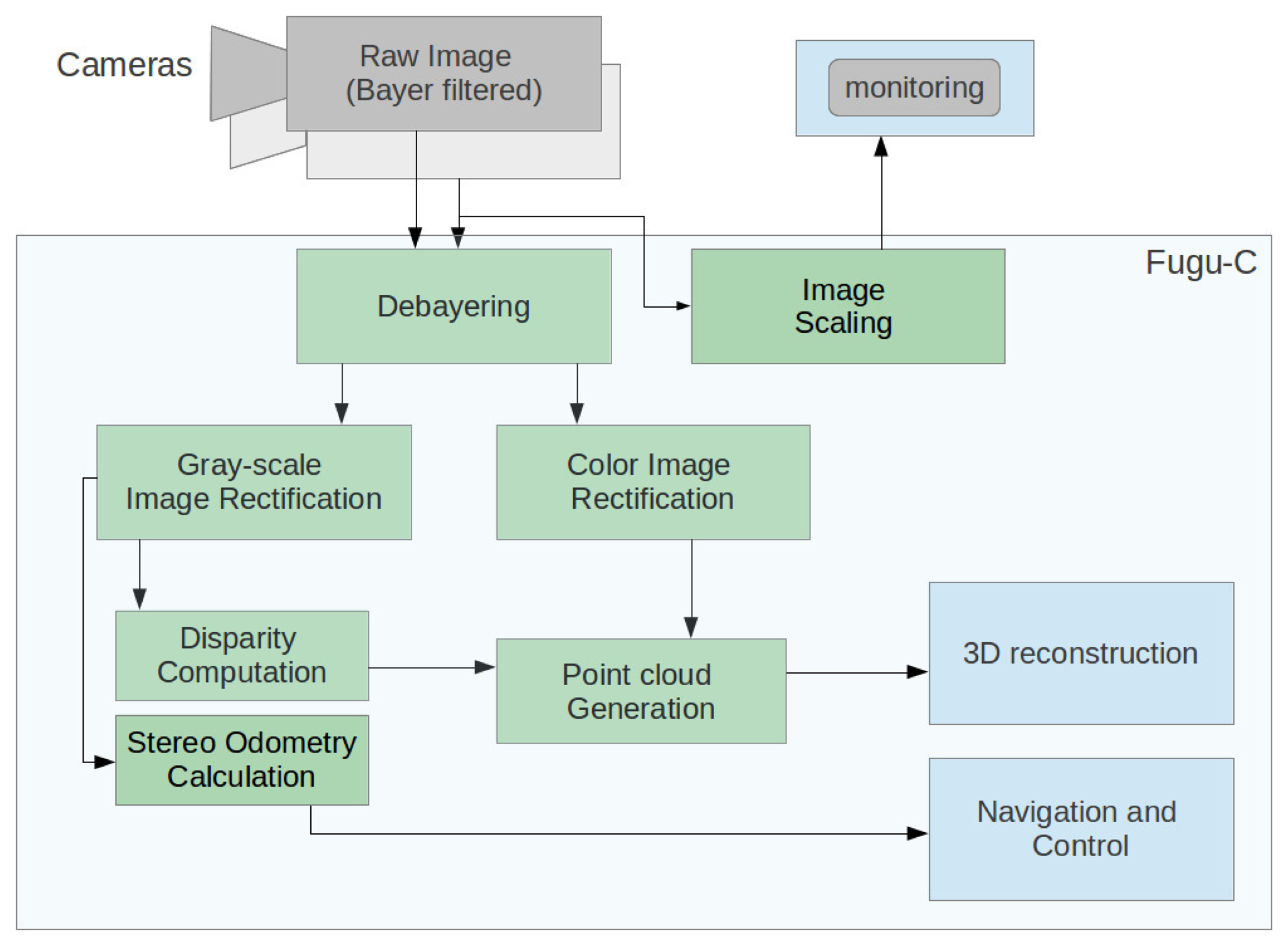

In order to save transmission bandwidth, the raw Bayer-pattern images provided by the stereo cameras are sent directly to the computer, where they are processed as follows:

- (1)

First, RGB and gray-scale stereo images are recovered by applying a debayer interpolation.

- (2)

Then, these images are rectified to compensate for the stereo camera misalignment and the lens and cover distortion; the intrinsic and extrinsic camera parameters for the rectification are obtained in a previous calibration process.

- (3)

Optionally, they are downscaled from the original resolution (1024 × 768 px) to a lower, configurable resolution (divided by a power of two). This downsampling is done only when the images need to be monitored from remote computers without saturating the Ethernet communications. Normally, a compressed 512 × 384 px image is enough to pilot the vehicle as an ROV without compromising the network capacity. If the vehicle is operated autonomously, this step is skipped.

- (4)

After rectification, the disparity is computed using OpenCV's block matching algorithm, and the disparity images are finally projected as 3D points.

- (5)

Odometry is calculated from the bottom-looking camera with the LibViso2 library. In our experiments, the forward-looking camera has been used only for monitoring and obstacle detection, although it could also be used to compute the frontal 3D structure.
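The geometry behind step (4) can be sketched without OpenCV: for rectified images, a pixel (u, v) with disparity d lies at depth Z = f·B/d, and its lateral coordinates follow from the pinhole model. OpenCV provides `cv2.reprojectImageTo3D` for the same purpose, using the reprojection matrix Q produced by stereo rectification; the pure-Python version below only makes the relations explicit. The focal length, baseline and principal point used in the example are hypothetical values, not the Fugu-C calibration.

```python
def disparity_to_points(disparity, f_px, baseline_m, cx, cy, min_disp=0.5):
    """Back-project a rectified disparity map to 3D points in the left-camera frame.

    disparity: list of rows of disparities in pixels.
    Uses Z = f*B/d, X = (u - cx)*Z/f, Y = (v - cy)*Z/f; pixels with
    disparity <= min_disp are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= min_disp:
                continue
            z = f_px * baseline_m / d
            points.append(((u - cx) * z / f_px, (v - cy) * z / f_px, z))
    return points


if __name__ == "__main__":
    # Hypothetical 2 x 2 disparity map and calibration values.
    demo = [[0.0, 20.0], [10.0, 0.0]]
    for p in disparity_to_points(demo, f_px=500.0, baseline_m=0.1, cx=0.0, cy=0.0):
        print(p)
```

If the images are downscaled by a power of two as in step (3), the focal length, principal point and disparities must all be divided by the same factor before applying these relations.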

Figure 3 shows the image processing task sequence performed in Fugu-C.

3.2. Visual Odometry

Stereo visual odometry is used to estimate the displacement of Fugu-C and its orientation. Many sophisticated visual odometry approaches can be found in the literature [29]. An appropriate approach for real-time applications that uses a relatively high image frame rate is the one provided by the Library for Visual Odometry 2 (LibViso2) [30]. This approach has shown good performance and limited drift in translation and rotation, in controlled and in real underwater scenarios [31].

The main advantages of LibViso2 that make the algorithm suitable for real-time underwater applications are threefold: (1) it simplifies feature detection and tracking, accelerating the overall procedure; in our system, the odometer runs at 10 fps on 1024 × 768 pixel images; (2) motion is estimated with a purely stereo process, facilitating its integration and/or reuse with the module that implements the 3D reconstruction; (3) the large number of feature matches found by the library between consecutive stereo images makes it possible to deal with high-resolution images, which increases the reliability of the motion estimates.

3.3. Point Clouds and 3D Reconstruction

3D models of the natural seafloor or of underwater installations with strong relief are a very important source of information for scientists and engineers. Visual photo-mosaics are a highly valuable tool for building such models with a high degree of reliability [32]. However, they are time-consuming to obtain and rarely applicable online. In contrast, concatenating successive point clouds registered with accurate pose estimates permits building and viewing the environment online and in 3D, albeit with a lower level of detail. Thus, photo-mosaicking and point cloud concatenation can currently be viewed as complementary instruments for optical underwater exploration and study.

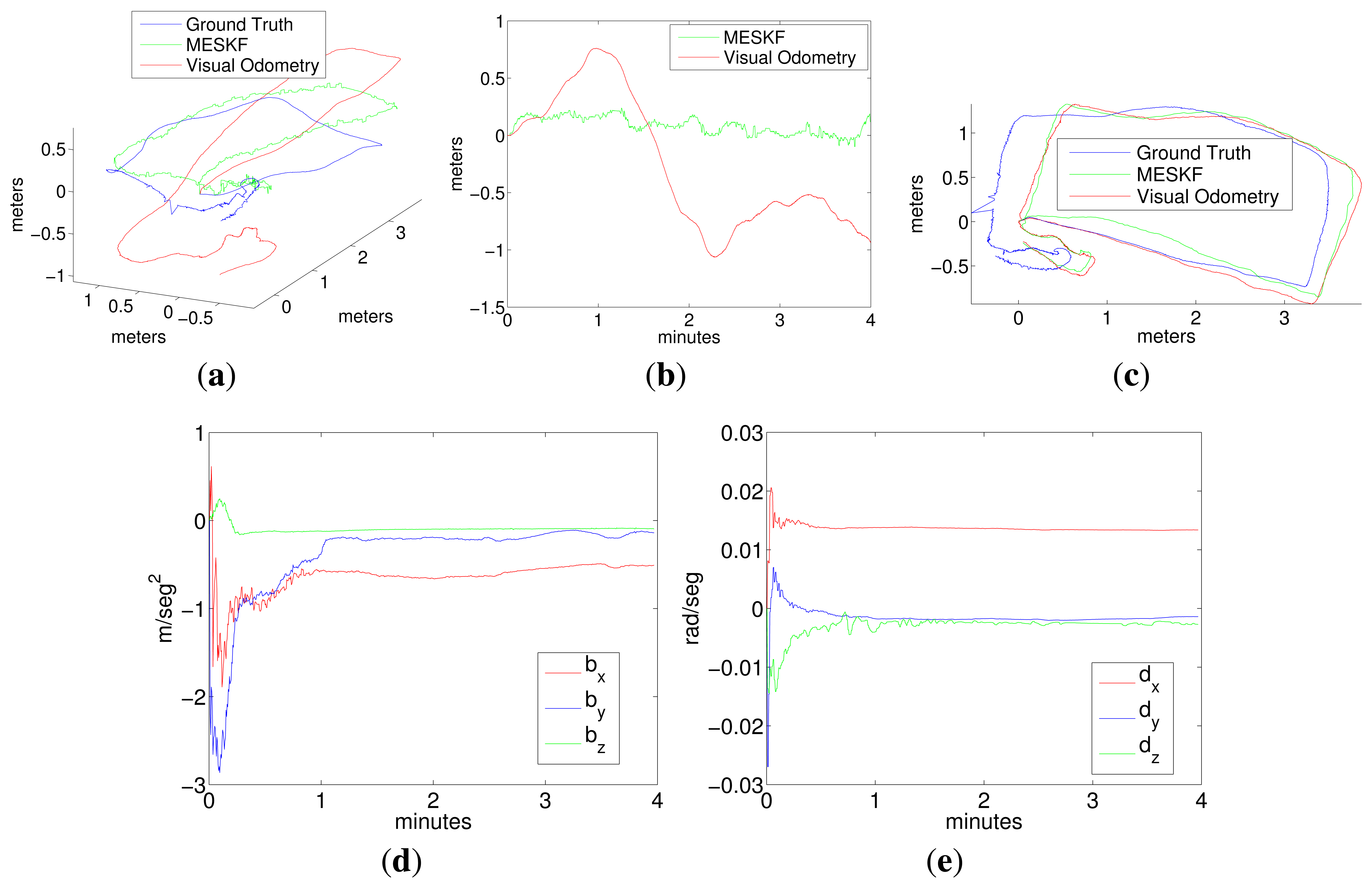

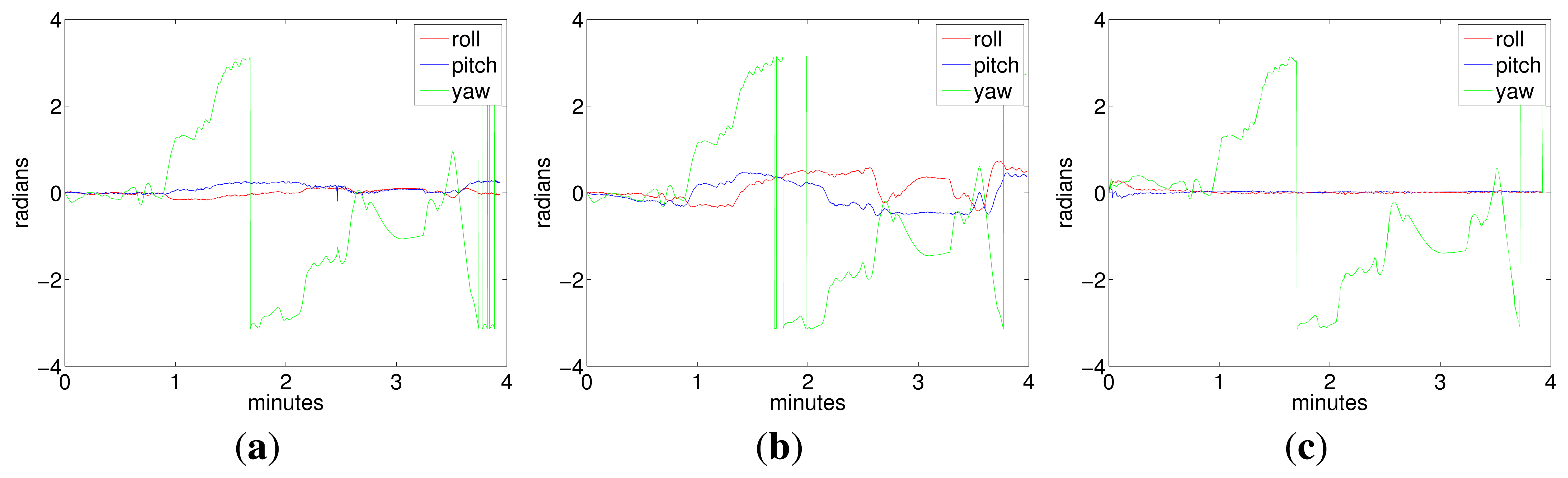

Dense stereo point clouds computed from the disparity maps [33] are provided by Fugu-C at the same rate as the image grabber. They are accumulated to build a dense 3D model of the underwater environment, which can be viewed online if a high-rate connection between the vehicle and an external computer is established. The partial reconstructed regions must be concatenated and meshed according to the robot pose. Although the odometric position (x, y, z) accumulates drift during relatively long routes, the system can reconstruct static objects or short-to-medium stereo sequences with a high degree of reliability, provided that no significant orientation errors accumulate. However, if the estimated orientation of the vehicle diverges from the ground truth, especially in roll and pitch, the successive point clouds are concatenated with evident misalignments and differing inclinations, distorting the final 3D result.

In order to increase the reliability of the reconstructed areas in longer trajectories and to minimize the effect of orientation errors on the 3D models, we have implemented a generic ESKF, which corrects the vehicle attitude estimated by the visual odometer about the three rotation axes and minimizes the effect of the gyroscope drift.

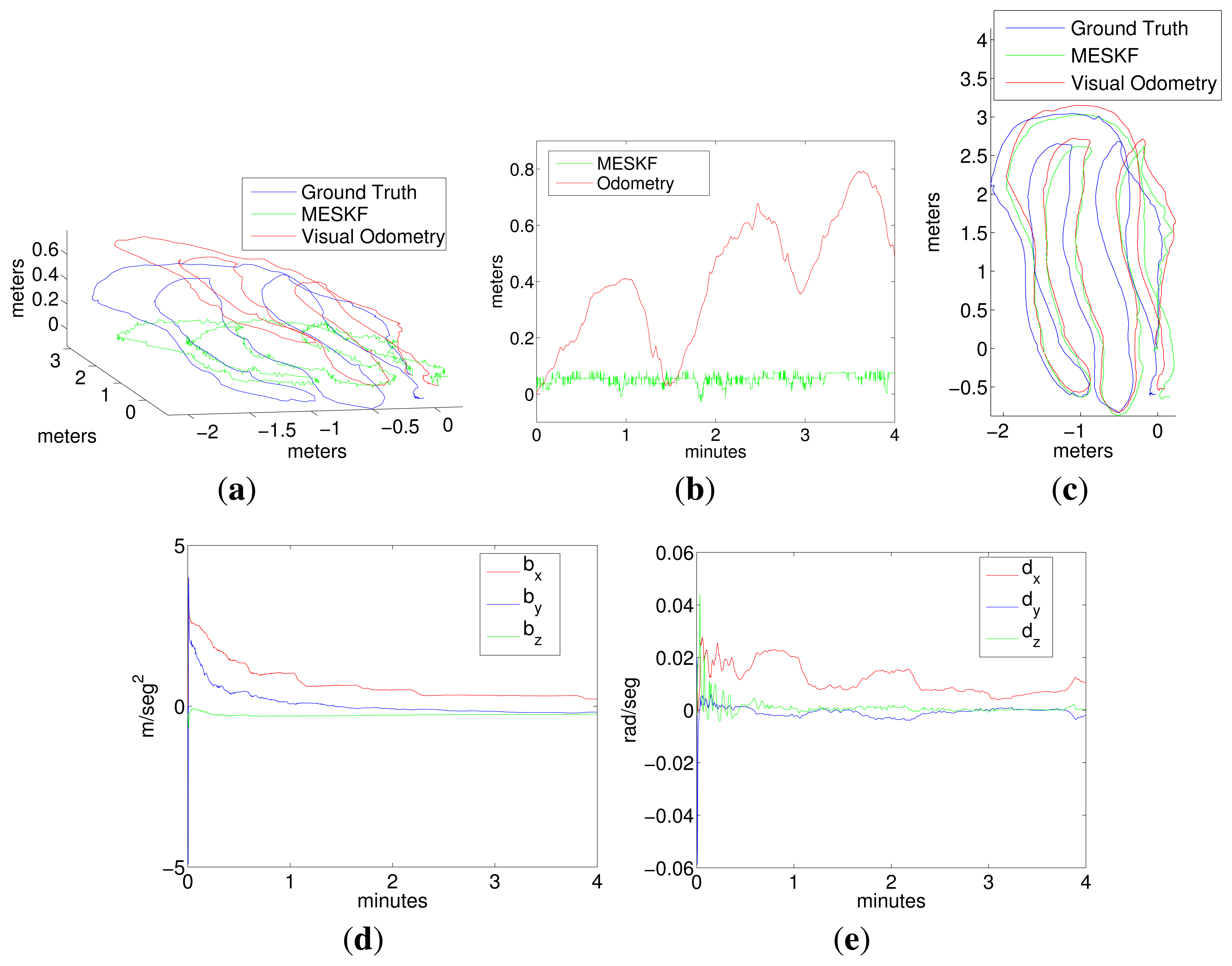

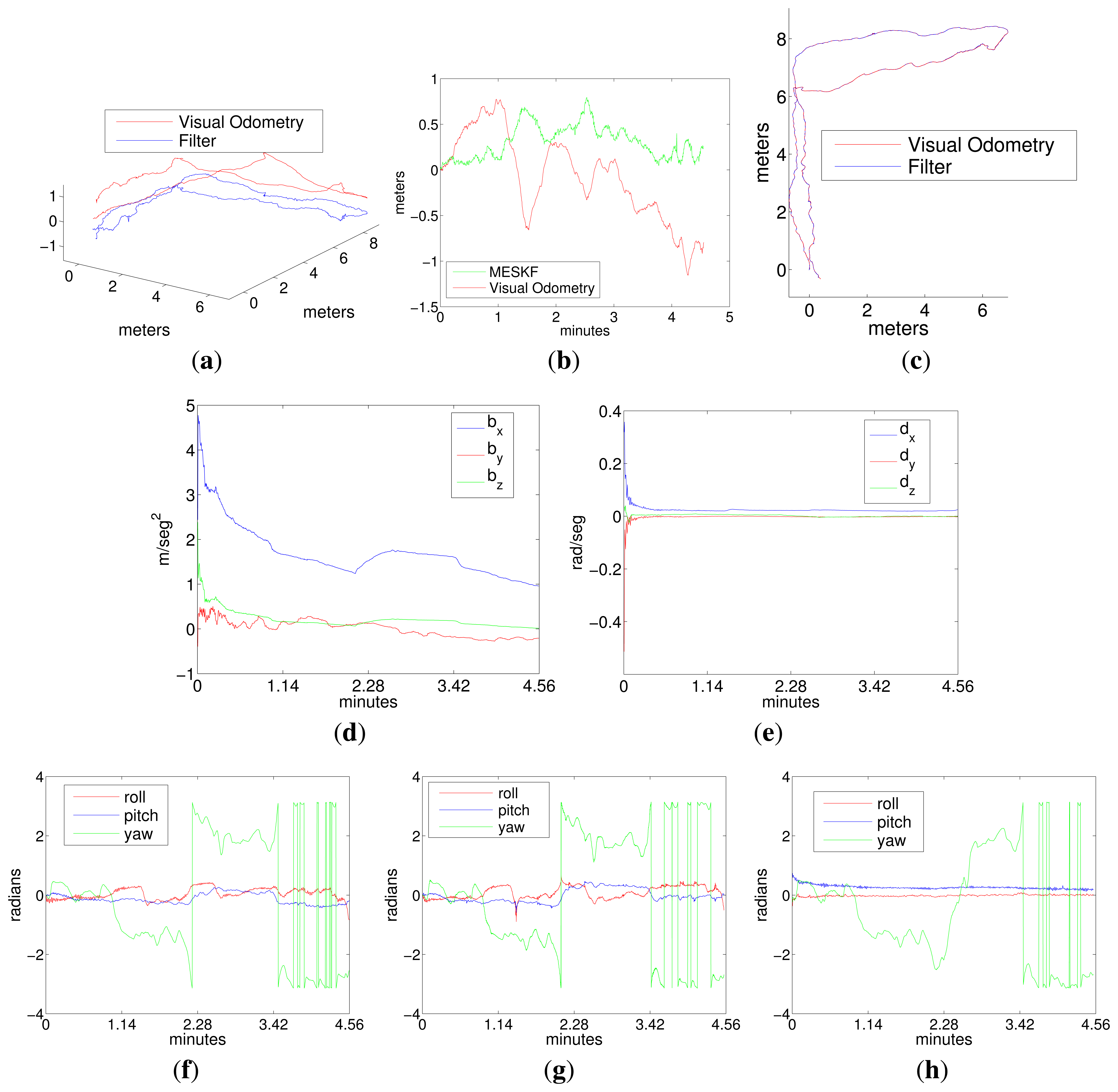

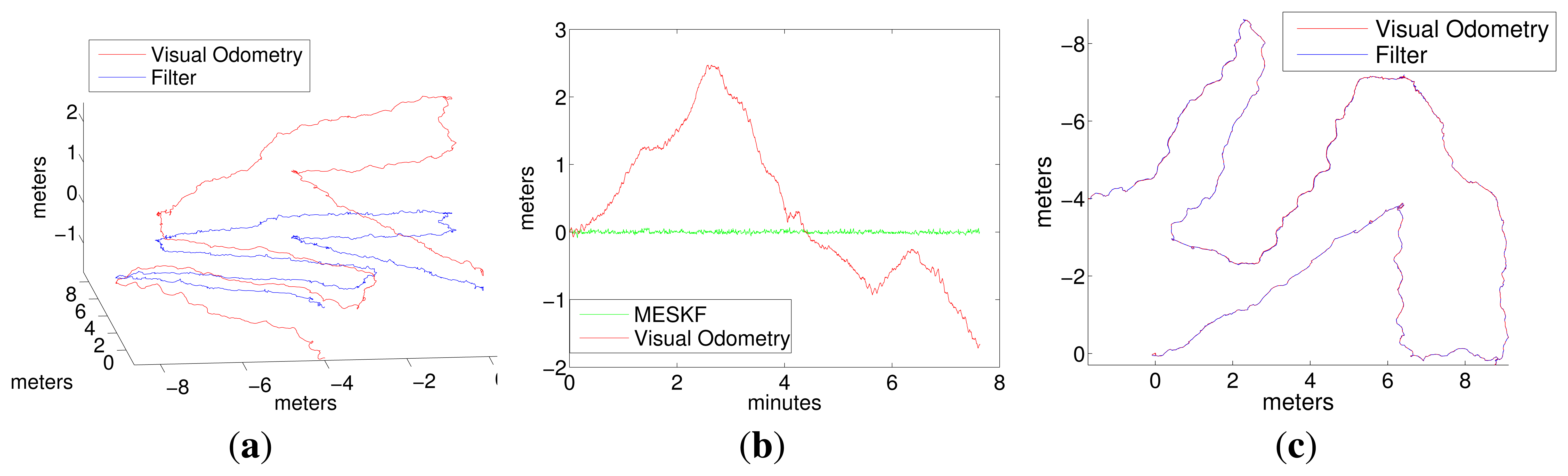

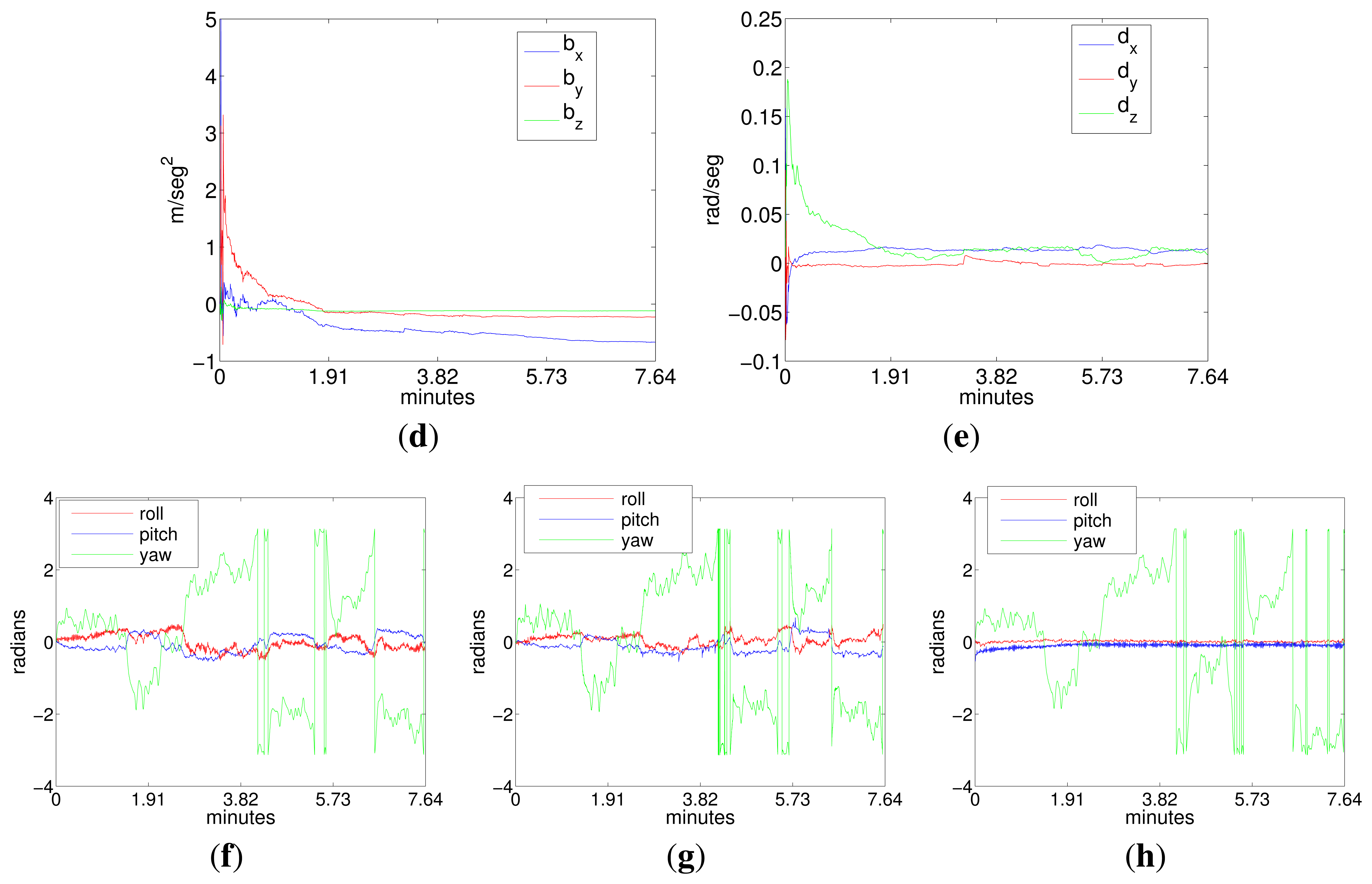

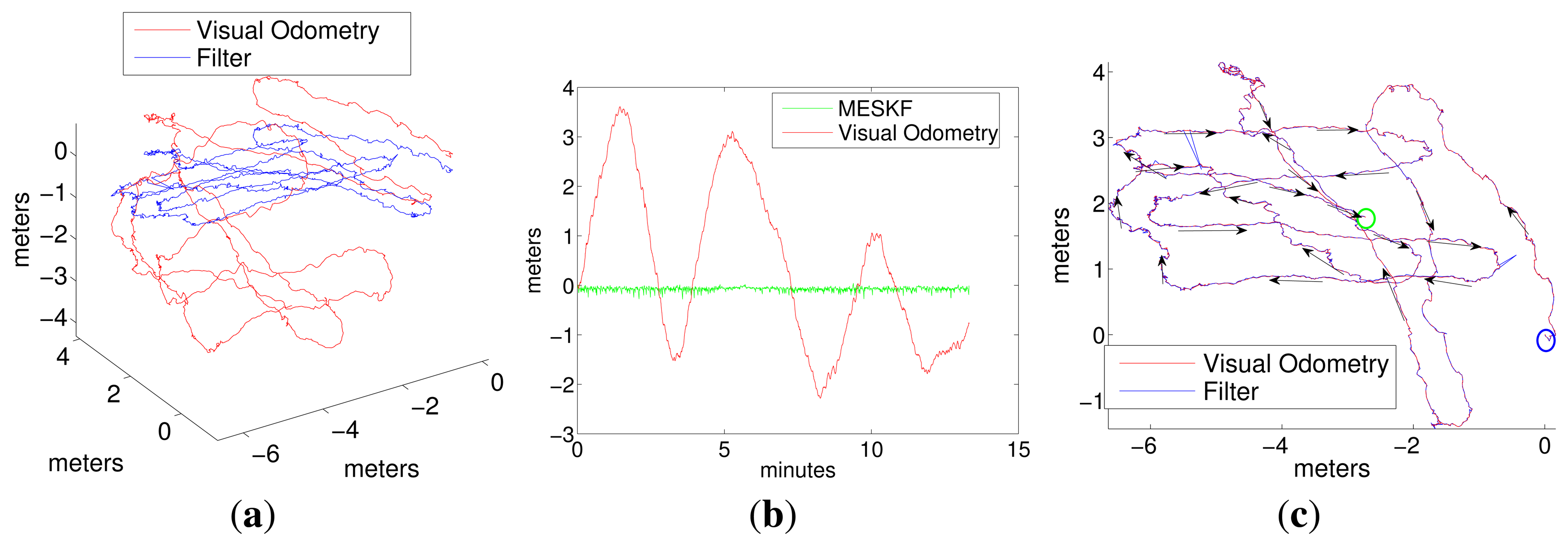

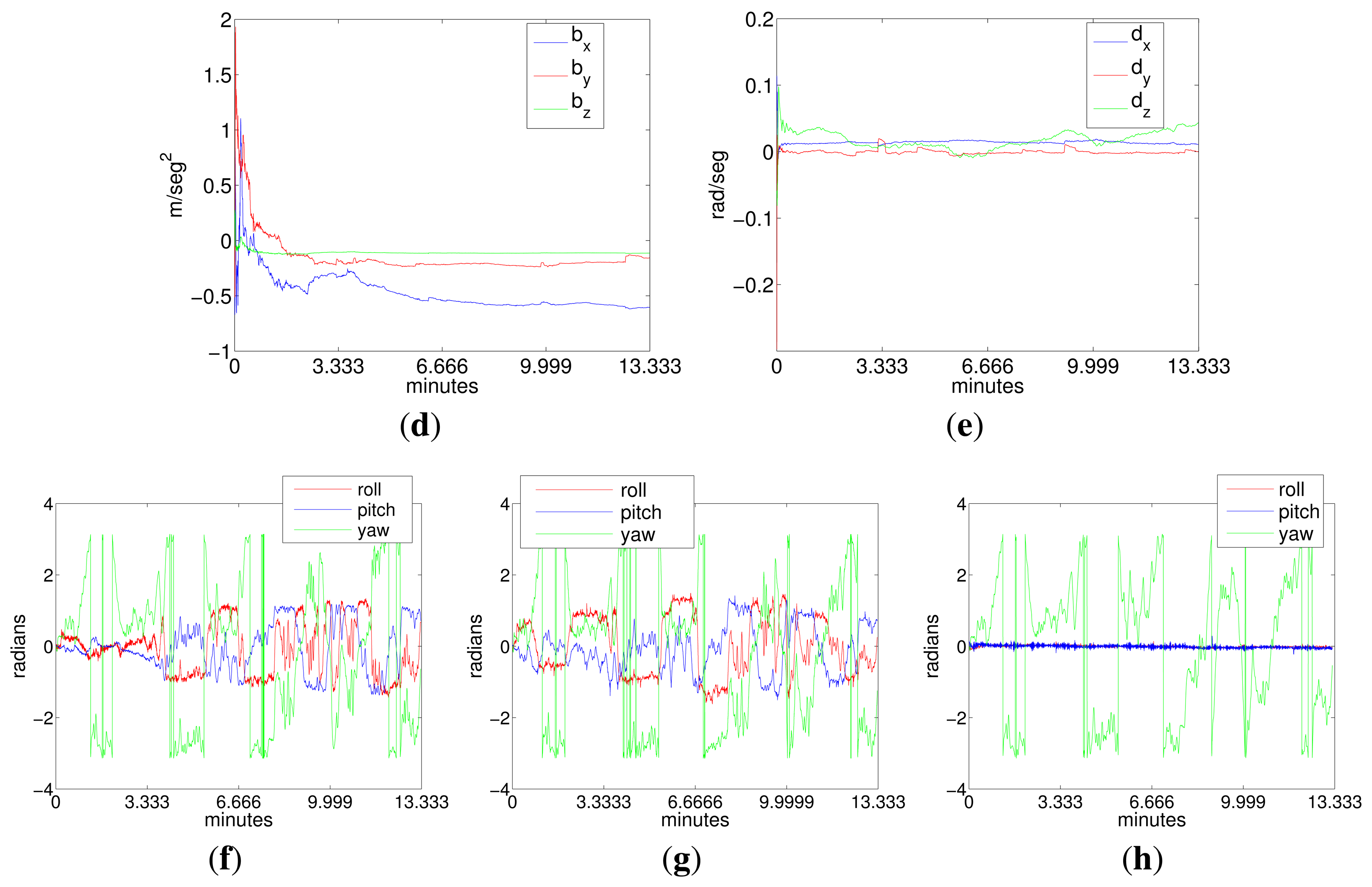

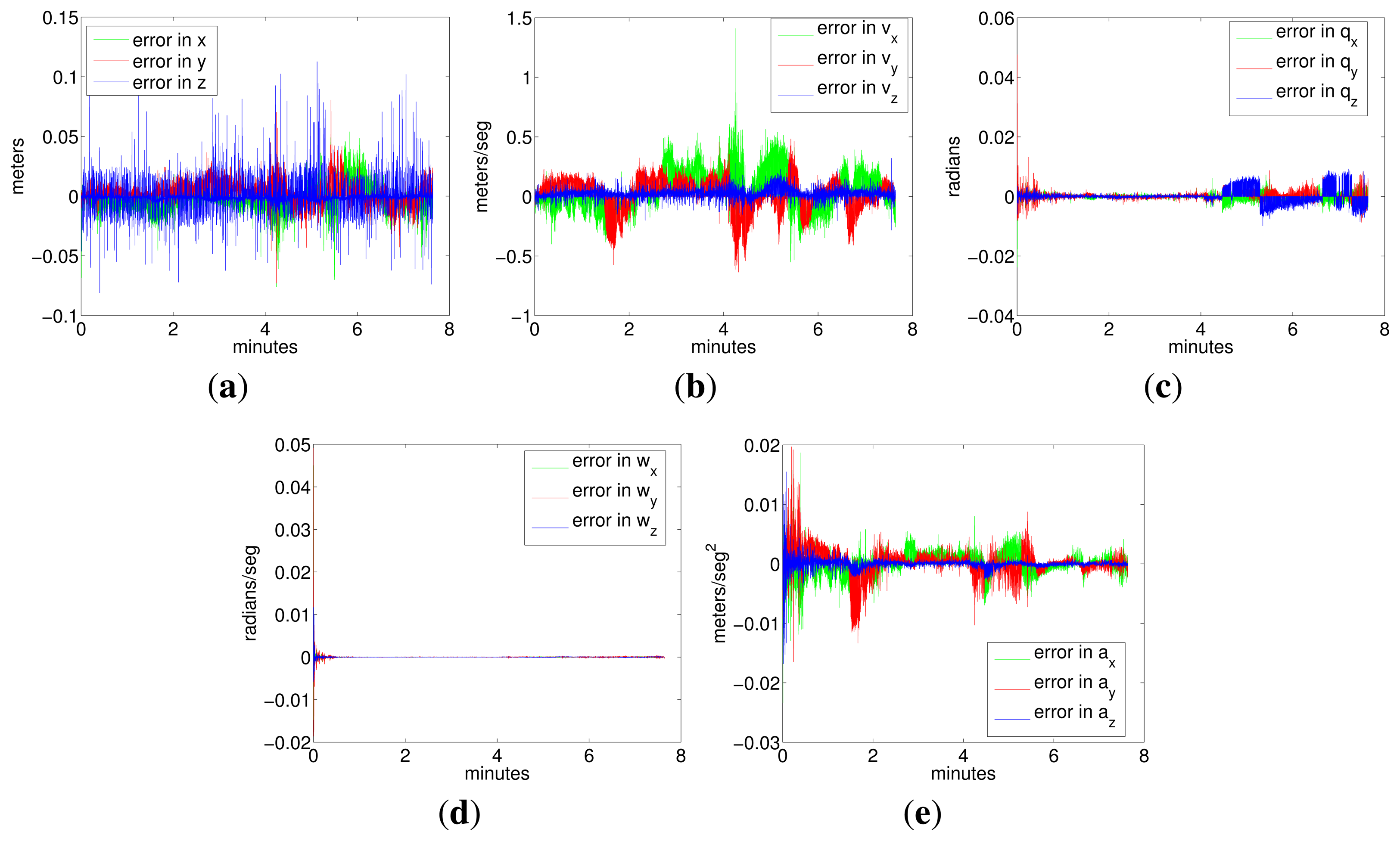

5. Experimental Results

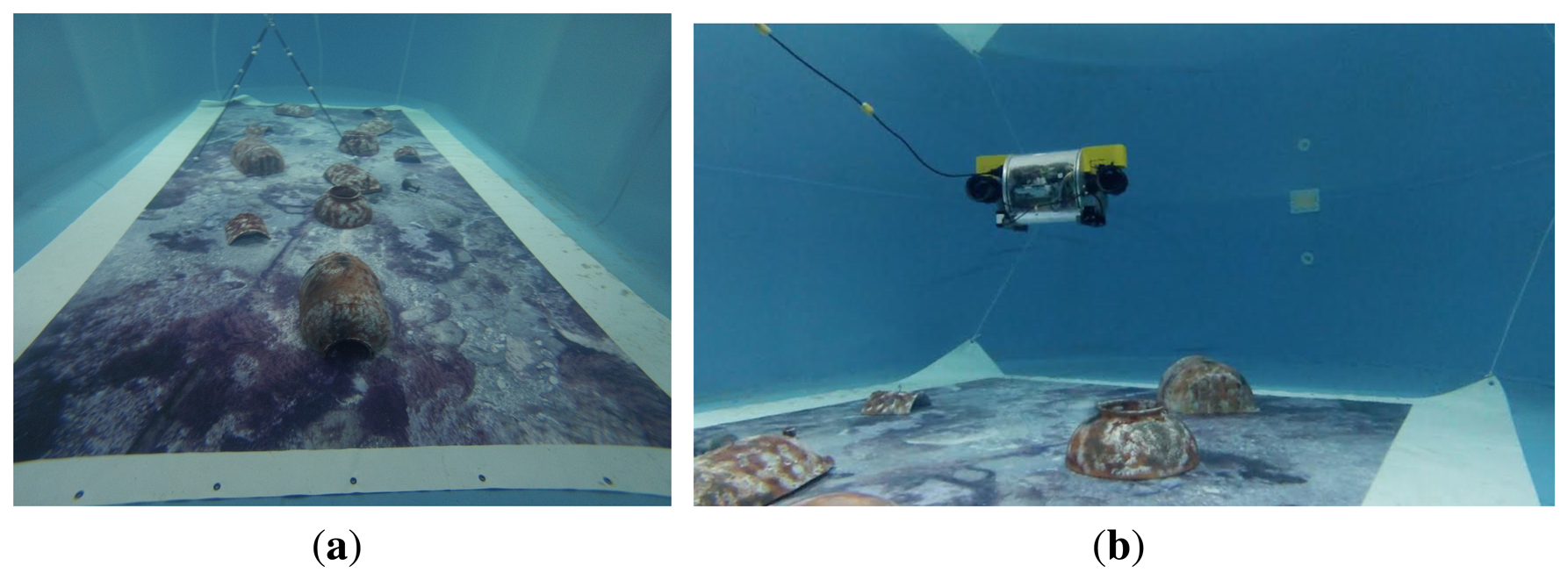

The experimental results are organized into three groups: experiments in a controlled environment, experiments in the sea and 3D reconstruction.

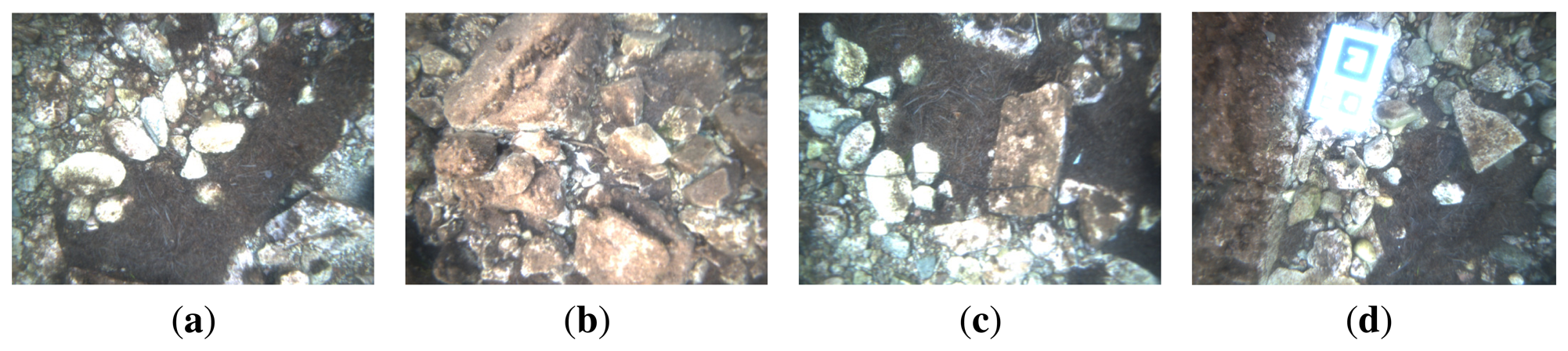

5.3. 3D Reconstruction

Stereo video sequences recorded in the Port of Valldemossa in ROS-bag format [25] were played back offline to simulate building the 3D map of the environment online by concatenating successive point clouds. Dense point clouds were generated at the same rate as the image grabber (10 frames/s), permitting real-time reconstruction of the environment. Correcting the estimated vehicle attitude increases the precision of the point cloud assembly, resulting in a realistic 3D view of the scenario in which the robot moves.

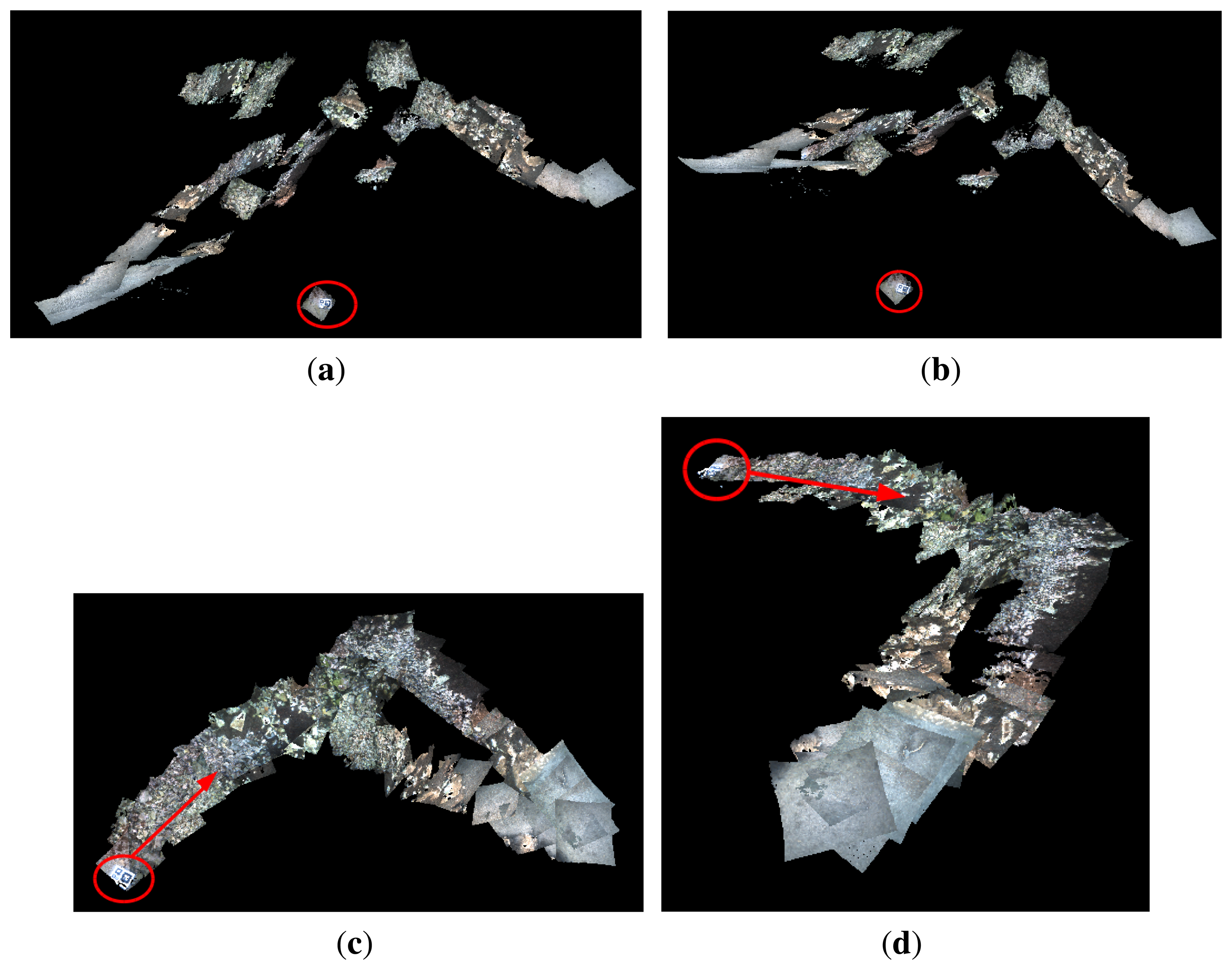

Figure 16a,b shows two different 3D views of the marine environment where Experiment 3 was performed, with the successive point clouds registered using the vehicle odometry pose estimates. Figure 16c,d shows two 3D views of the same environment, but with the point clouds registered using the vehicle pose estimates provided by the MESKF. In all figures, the starting point and the direction of motion are indicated with a red circle and a red arrow, respectively. A marker was placed on the seabed to indicate the start/end point.

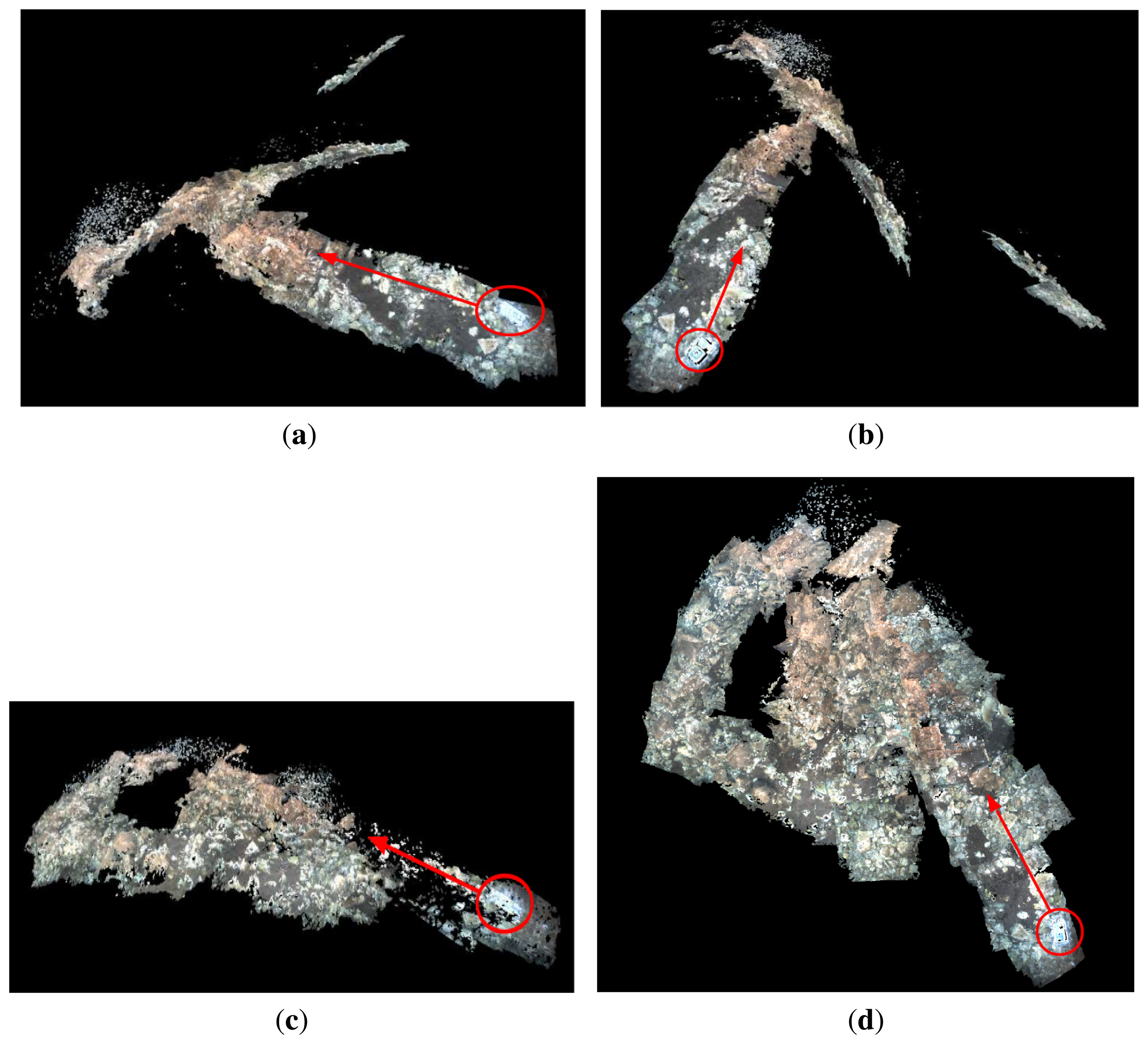

Figure 17a,b shows two different 3D views built during Experiment 4, with the successive point clouds registered using the vehicle odometry pose estimates. Figure 17c,d shows two 3D views of the same environment, but with the point clouds registered using the vehicle pose estimates provided by the MESKF. Again, in all figures, the starting point and the direction of motion are indicated with a red circle and a red arrow, respectively.

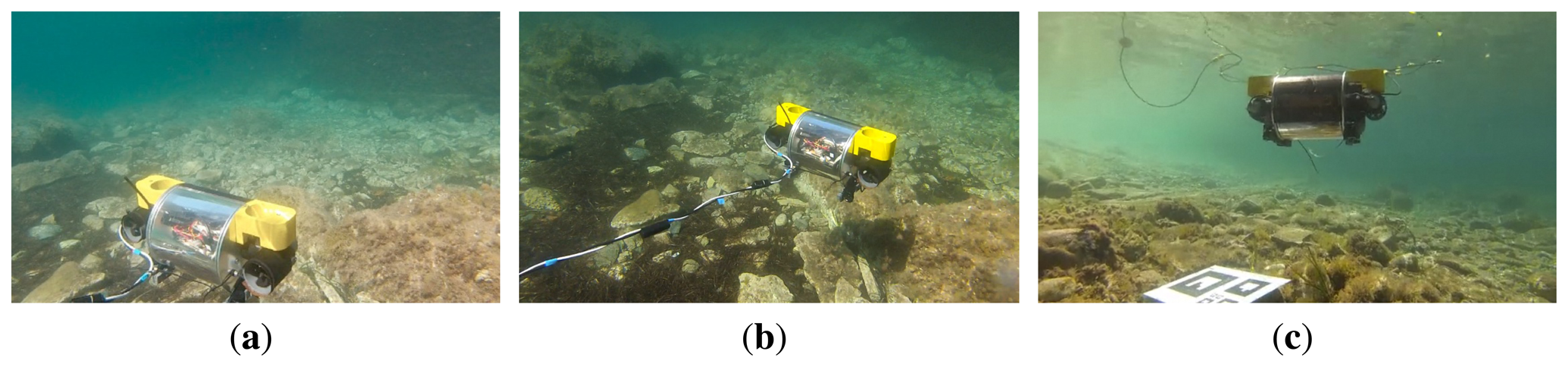

Figure 18 shows three images of Fugu-C navigating in this environment. In Figure 18c, the artificial marker deposited on the seabed can be observed at the bottom of the image.

Raw point clouds are expressed with respect to the camera frame and are then transformed to the global coordinate frame by composing their camera (local) coordinates with the estimated global pose of the vehicle. The 3D maps of Figures 16a,b and 17a,b show clear misalignments between different point clouds. These misalignments arise because the estimated roll and pitch of the vehicle are, at certain instants, significantly different from zero. As a consequence, the corresponding point clouds are also rotated, so they appear inclined and/or displaced with respect to their immediate neighbors, and the subsequent point clouds are misaligned with respect to the horizontal plane. This effect is particularly evident in Figure 16a,b, where very few point clouds are parallel to the ground, most of them being displaced and oblique with respect to it.
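The composition of local point coordinates with the vehicle pose, and the effect of a spurious pitch, can be sketched as below. The sketch assumes a Z-Y-X (yaw, pitch, roll) Euler convention and, for brevity, treats the camera frame as coincident with the vehicle frame, omitting the camera-to-vehicle extrinsic transform; the two-point "patch" is a made-up example.

```python
import math


def rot_zyx(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in rad."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [[cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr]]


def to_global(points, position, roll, pitch, yaw):
    """Express local points in the global frame: p_global = R * p_local + t."""
    r = rot_zyx(roll, pitch, yaw)
    tx, ty, tz = position
    out = []
    for x, y, z in points:
        out.append((r[0][0] * x + r[0][1] * y + r[0][2] * z + tx,
                    r[1][0] * x + r[1][1] * y + r[1][2] * z + ty,
                    r[2][0] * x + r[2][1] * y + r[2][2] * z + tz))
    return out


if __name__ == "__main__":
    # Two seabed points 2 m apart, 2 m below the camera (hypothetical values).
    patch = [(-1.0, 0.0, 2.0), (1.0, 0.0, 2.0)]
    for pitch_deg in (0.0, 5.0):
        g = to_global(patch, (0.0, 0.0, 0.0), 0.0, math.radians(pitch_deg), 0.0)
        print(f"pitch {pitch_deg} deg -> height difference {g[0][2] - g[1][2]:.3f} m")
```

A 5° pitch error makes the two ends of the 2 m flat patch differ in height by 2·sin 5° ≈ 0.17 m, whereas an error in yaw alone leaves all heights unchanged, which is consistent with roll and pitch errors being the ones that visibly tilt the point clouds.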

However, since the roll and pitch estimated by the MESKF are all approximately zero, all of the point clouds are nearly parallel to the ground plane, without any significant inclination in pitch/roll or important misalignment, providing a highly realistic 3D reconstruction. Notice how the 3D views shown in Figures 16c,d and 17c,d coincide with the trajectories shown in Figures 11 and 12, respectively.

The video uploaded in [42] shows different perspectives of the 3D map built from the Experiment 4 dataset, registering the point clouds with the odometry (left) and with the filter estimates (right). Observing the 3D reconstructions from different viewpoints gives a better idea of how sloped, misaligned and displaced with respect to the ground some of the point clouds can be, due to roll and pitch values different from zero. The improvement in the 3D map structure when using the filtered data is evident, as all of the point clouds are placed consecutively, fully aligned and parallel to the seabed.

6. Conclusions

This paper presents Fugu-C, a prototype micro-AUV specifically designed for underwater image recording, observation and 3D mapping in shallow waters or in cluttered aquatic environments. Emphasis has been placed on the vehicle structure, its multi-layer navigation architecture and its capacity to reconstruct and map underwater environments in 3D. Fugu-C combines some of the advantages of a standard AUV with the characteristics of micro-AUVs, outperforming other micro underwater vehicles in: (1) its ability to image the environment with two stereo cameras, one looking downward and another looking forward; (2) its computational and storage capacity; and (3) the possibility of integrating all of the sensor data in a specially designed MESKF with multiple advantages.

The main benefits of using this filter and its particularities with respect to other similar approaches are:

- (1)

A general configuration permitting the integration of as many sensors as needed and applicable in any vehicle with six DOF.

- (2)

It deals with two state vectors, the nominal and the error state. All nominal orientations are represented as quaternions to prevent singularities in the attitude estimation, whereas attitude errors are represented as rotation vectors to avoid singularities in the covariance matrices. The prediction model assumes motion with constant acceleration.

- (3)

The nominal state contains the biases of the inertial sensors, which permits a practical compensation of those systematic errors.

- (4)

Linearization errors are limited, since the error variables are very small and vary much more slowly than the nominal state data.

- (5)

This configuration permits the vehicle to navigate by integrating only the INS data when either the aiding sensors or the filter fails.
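The constant-acceleration prediction mentioned in item (2) can be sketched for position and velocity as follows. How the acceleration is obtained (presumably from bias-compensated accelerometer readings rotated into the world frame, with gravity removed) is an assumption here, so the function simply takes the world-frame acceleration as an input; the function name is hypothetical.

```python
def predict_constant_acceleration(p, v, a, dt):
    """Propagate position and velocity over dt assuming a constant acceleration a.

    p, v and a are 3-tuples expressed in the same (world) frame.
    Returns (p_new, v_new) with p_new = p + v*dt + 0.5*a*dt^2 and v_new = v + a*dt.
    """
    p_new = tuple(pi + vi * dt + 0.5 * ai * dt * dt for pi, vi, ai in zip(p, v, a))
    v_new = tuple(vi + ai * dt for vi, ai in zip(v, a))
    return p_new, v_new


if __name__ == "__main__":
    # Vehicle surging at 1 m/s while accelerating downward at 0.2 m/s^2 (example values).
    print(predict_constant_acceleration((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.2), 0.5))
```

Running the filter at a high IMU rate keeps dt small, which is what makes the constant-acceleration assumption reasonable between consecutive predictions.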

Extensive experimental results in controlled scenarios and in the sea have shown that the implemented navigation modules are adequate to maneuver the vehicle without oscillations or instabilities. Experiments have also evidenced that the designed navigation filter is able to compensate, online, the biases introduced by the inertial sensors and to correct errors in the vehicle z coordinate, as well as in the roll and pitch orientations estimated by a visual odometer. These corrections in the vehicle orientation are extremely important when concatenating stereo point clouds to form a realistic 3D view of the environment without any misalignment.

Furthermore, the implementation of the MESKF is available to the scientific community in a public repository [26].

Future work is oriented toward the following: (1) the aided inertial navigation approach presented in this paper cannot correct the vehicle position in (x, y), since it does not use any technique to track environmental landmarks or to adjust the localization by closing loops; the next step will be to use stereo GraphSLAM [43] to correct the robot position estimated by the filter, afterwards applying fine point cloud registration techniques when the clouds overlap; (2) the simple PID twist and depth controllers described in Section 4.1 could be replaced by more sophisticated systems that take into account other considerations, such as external forces, hydrodynamic models and the relation between the vehicle thrusters and its autonomy; one of the points to be investigated in forthcoming work is the trade-off between controlling the vehicle solely with the navigation sensor data and incorporating a minimal number of structural considerations.

Appendix

A. Velocity Error Prediction

Let ṽk be the estimated linear velocity defined according to Equation (25) as:

According to Equations (21) and (A1), it can be expressed as:

The rotation error matrix is given by the Rodrigues formula [44], which, for small angles, can be approximated as I3×3 + [K]x, where [K]x is the skew-symmetric matrix built from the rotation vector corresponding to the rotation error and I3×3 is the 3 × 3 identity matrix.
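The quality of the small-angle approximation can be checked numerically. The sketch below builds the skew-symmetric matrix [K]x, the exact Rodrigues rotation matrix and its first-order approximation I3×3 + [K]x, and measures their difference; it also makes explicit that a product by [K]x is a cross product, as used in Appendix B. The helper names are illustrative.

```python
import math


def skew(k):
    """Skew-symmetric matrix [k]x, such that [k]x a equals the cross product k x a."""
    kx, ky, kz = k
    return [[0.0, -kz, ky],
            [kz, 0.0, -kx],
            [-ky, kx, 0.0]]


def matmul(a, b):
    return [[sum(a[i][m] * b[m][j] for m in range(3)) for j in range(3)] for i in range(3)]


def rodrigues(k):
    """Exact rotation for vector k (angle t = |k|): I + sin(t)/t [k]x + (1 - cos(t))/t^2 [k]x^2."""
    t = math.sqrt(sum(c * c for c in k))
    K = skew(k)
    if t < 1e-12:
        return [[float(i == j) + K[i][j] for j in range(3)] for i in range(3)]
    K2 = matmul(K, K)
    a, b = math.sin(t) / t, (1.0 - math.cos(t)) / (t * t)
    return [[float(i == j) + a * K[i][j] + b * K2[i][j] for j in range(3)] for i in range(3)]


def small_angle(k):
    """First-order approximation I + [k]x used for small rotation errors."""
    K = skew(k)
    return [[float(i == j) + K[i][j] for j in range(3)] for i in range(3)]


def max_abs_diff(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))


if __name__ == "__main__":
    for angle in (0.01, 0.1, 0.5):
        k = (0.0, 0.0, angle)
        print(f"{angle} rad -> max |R - (I + [K]x)| = {max_abs_diff(rodrigues(k), small_angle(k)):.2e}")
```

For a 0.01 rad rotation the largest entry-wise difference is about 5 × 10⁻⁵, essentially the (1 − cos θ) term that the approximation drops, which supports treating the errors between two filter iterations as small.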

Consequently, Equation (A3) can be expressed as:

Segregating the error terms from both sides of Equation (A4), we obtain the expression to predict the error in the linear velocity:

Since [K]x contains the vector corresponding to the rotation error and the acceleration bias is expressed as δak in the error state vector, Equation (A5) can also be seen as:

B. Position Error Prediction

Let p̃k be the estimated vehicle position defined according to Equation (23) as:

According to Equations (1) and (A7), it can be expressed as:

Analogously to Equation (A4), the term δ

Operating Equation (A9) and separating the nominal and the error terms in both sides of the expression, it gives:

Assuming that errors are very small between two filter iterations, taking into account that [K]x contains the rotation vector error and representing any product by [K]x as a cross product, Equation (A12) can be re-formulated as:

Acknowledgments

This work is partially supported by the Spanish Ministry of Economy and Competitiveness under Contracts PTA2011-05077 and DPI2011-27977-C03-02, FEDER Funding and by Govern Balear grant number 71/2011.

Author Contributions

Francisco Bonin-Font and Gabriel Oliver carried out a literature survey, proposed the fundamental concepts of the methods to be used and wrote the whole paper. The mathematical development and coding for the MESKF was carried out by Joan P. Beltran and Francisco Bonin-Font. Gabriel Oliver, Joan P. Beltran and Miquel Massot Campos did most of the AUV platform design and development. Finally, Josep Lluis Negre Carrasco and Miquel Massot Campos contributed to the code integration, to the experimental validation of the system and to the 3D reconstruction methods. All authors revised and approved the final submission.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Smith, S.M.; An, P.E.; Holappa, K.; Whitney, J.; Burns, A.; Nelson, K.; Heatzig, E.; Kempfe, O.; Kronen, D.; Pantelakis, T.; et al. The Morpheus ultramodular autonomous underwater vehicle. IEEE J. Ocean. Eng. 2001, 26, 453–465.

- Watson, S.A.; Crutchley, D.; Green, P. The mechatronic design of a micro-autonomous underwater vehicle. J. Mechatron. Autom. 2012, 2, 157–168.

- Wick, C.; Stilwell, D. A miniature low-cost autonomous underwater vehicle. Proceedings of the 2001 MTS/IEEE Conference and Exhibition (OCEANS), Honolulu, HI, USA, 5–8 November 2001; pp. 423–428.

- Heriot-Watt University. mAUV. Available online: http://osl.eps.hw.ac.uk/virtualPages/experimentalCapabilities/Micro%20AUV.php (accessed on 15 November 2014).

- Hildebrandt, M.; Gaudig, C.; Christensen, L.; Natarajan, S.; Paranhos, P.; Albiez, J. Two years of experiments with the AUV Dagon—A versatile vehicle for high precision visual mapping and algorithm evaluation. Proceedings of the 2012 IEEE/OES Autonomous Underwater Vehicles (AUV), Southampton, UK, 24–27 September 2012.

- Kongsberg Maritime. REMUS. Available online: http://www.km.kongsberg.com/ks/web/nokbg0240.nsf/AllWeb/D241A2C835DF40B0C12574AB003EA6AB?OpenDocument (accessed on 15 November 2014).

- Carreras, M.; Candela, C.; Ribas, D.; Mallios, A.; Magi, L.; Vidal, E.; Palomeras, N.; Ridao, P. SPARUS. CIRS: Underwater, Vision and Robotics. Available online: http://cirs.udg.edu/auvs-technology/auvs/sparus-ii-auv/ (accessed on 15 November 2014).

- Wang, B.; Su, Y.; Wan, L.; Li, Y. Modeling and motion control system research of a mini underwater vehicle. Proceedings of the IEEE International Conference on Mechatronics and Automation (ICMA'2009), Changchun, China, 9–12 August 2009.

- Yu, X.; Su, Y. Hydrodynamic performance calculation of mini-AUV in uneven flow field. Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Tianjin, China, 14–18 December 2010.

- Liang, X.; Pang, Y.; Wang, B. Chapter 28, Dynamic modelling and motion control for underwater vehicles with fins. In Underwater Vehicles; Intech: Vienna, Austria, 2009; pp. 539–556.

- Roumeliotis, S.; Sukhatme, G.; Bekey, G. Circumventing dynamic modeling: Evaluation of the error-state Kalman filter applied to mobile robot localization. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Detroit, MI, USA, 10–15 May 1999; pp. 1656–1663.

- Allotta, B.; Pugi, L.; Bartolini, F.; Ridolfi, A.; Costanzi, R.; Monni, N.; Gelli, J. Preliminary design and fast prototyping of an autonomous underwater vehicle propulsion system. Inst. Mech. Eng. Part M: J. Eng. Marit. Environ. 2014.

- Kelly, J.; Sukhatme, G. Visual-inertial sensor fusion: Localization, mapping and sensor-to-sensor self-calibration. Int. J. Robot. Res. 2011, 30, 56–79.

- Allotta, B.; Pugi, L.; Costanzi, R.; Vettori, G. Localization algorithm for a fleet of three AUVs by INS, DVL and range measurements. Proceedings of the International Conference on Advanced Robotics (ICAR), Tallinn, Estonia, 20–23 June 2011.

- Allotta, B.; Costanzi, R.; Meli, E.; Pugi, L.; Ridolfi, A.; Vettori, G. Cooperative localization of a team of AUVs by a tetrahedral configuration. Robot. Auton. Syst. 2014, 62, 1228–1237.

- Higgins, W. A comparison of complementary and Kalman filtering. IEEE Trans. Aerosp. Electron. Syst. 1975, AES-11, 321–325.

- Chen, S. Kalman filter for robot vision: A survey. IEEE Trans. Ind. Electron. 2012, 59, 263–296.

- An, E. A comparison of AUV navigation performance: A system approach. Proceedings of the IEEE OCEANS, San Diego, CA, USA, 22–26 September 2003; pp. 654–662.

- Suh, Y.S. Orientation estimation using a quaternion-based indirect Kalman filter with adaptive estimation of external acceleration. IEEE Trans. Instrum. Meas. 2010, 59, 3296–3305.

- Miller, P.A.; Farrell, J.A.; Zhao, Y.; Djapic, V. Autonomous underwater vehicle navigation. IEEE J. Ocean. Eng. 2010, 35, 663–678.

- Achtelik, M.; Achtelik, M.; Weiss, S.; Siegwart, R. Onboard IMU and monocular vision based control for MAVs in unknown in- and outdoor environments. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Weiss, S.; Achtelik, M.; Chli, M.; Siegwart, R. Versatile distributed pose estimation and sensor self calibration for an autonomous MAV. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 31–38.

- Markley, F.L. Attitude error representations for Kalman filtering. J. Guid. Control Dyn. 2003, 26, 311–317.

- Hall, J.K.; Knoebel, N.B.; McLain, T.W. Quaternion attitude estimation for miniature air vehicles using a multiplicative extended Kalman filter. Proceedings of the 2008 IEEE/ION Position, Location and Navigation Symposium, Monterey, CA, USA, 5–8 May 2008; pp. 1230–1237.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A. ROS: An open source robot operating system. Proceedings of ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009.

- Negre, P.L.; Bonin-Font, F. GitHub. Available online: https://github.com/srv/pose_twist_meskf_ros (accessed on 15 November 2014).

- Hogue, A.; German, J.Z.; Jenkin, M. Underwater 3D mapping: Experiences and lessons learned. Proceedings of the 3rd Canadian Conference on Computer and Robot Vision (CRV), Quebec City, QC, Canada, 7–9 June 2006.

- MEMSENSE nIMU Datasheet. Available online: http://memsense.com/docs/nIMU/nIMU_Data_Sheet_DOC00260_RF.pdf (accessed on 15 November 2014).

- Fraundorfer, F.; Scaramuzza, D. Visual odometry. Part II: Matching, robustness, optimization and applications. IEEE Robot. Autom. Mag. 2012, 19, 78–90. [Google Scholar]

- Geiger, A.; Ziegler, J.; Stiller, C. StereoScan: Dense 3D reconstruction in real-time. Proceedings of the IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011.

- Wirth, S.; Negre, P.; Oliver, G. Visual odometry for autonomous underwater vehicles. Proceedings of the MTS/IEEE OCEANS, Bergen, Norway, 10–14 June 2013.

- Gracias, N.; Ridao, P.; Garcia, R.; Escartin, J.; L'Hour, M.; C., F.; Campos, R.; Carreras, M.; Ribas, D.; Palomeras, N.; Magi, L.; et al. Mapping the Moon: Using a lightweight AUV to survey the site of the 17th Century ship “La Lune”. Proceedings of the MTS/IEEE OCEANS, Bergen, Norway, 10–14 June 2013.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Fossen, T. Guidance and Control of Ocean Vehicles; John Wiley: New York, NY, USA, 1994.

- Trawny, N.; Roumeliotis, S.I. Indirect Kalman filter for 3D attitude estimation; Technical Report 2005-002; University of Minnesota, Dept. of Comp. Sci. & Eng.: Minneapolis, MN, USA, 2005.

- Bonin-Font, F.; Beltran, J.; Oliver, G. Multisensor aided inertial navigation in 6DOF AUVs using a multiplicative error state Kalman filter. Proceedings of the IEEE/MTS OCEANS, Bergen, Norway, 10–14 June 2013.

- Miller, K.; Leskiw, D. An Introduction to Kalman Filtering with Applications; Krieger Publishing Company: Malabar, FL, USA, 1987.

- Ahmadi, M.; Khayatian, A.; Karimaghaee, P. Orientation estimation by error-state extended Kalman filter in quaternion vector space. Proceedings of the 2007 Annual Conference (SICE), Takamatsu, Japan, 17–20 September 2007; pp. 60–67.

- Bujnak, M.; Kukelova, S.; Pajdla, T. New efficient solution to the absolute pose problem for camera with unknown focal length and radial distortion. Lect. Notes Comput. Sci. 2011, 6492, 11–24.

- Fischler, M.; Bolles, R. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Moore, T.; Purvis, M. Charles River Analytics. Available online: http://wiki.ros.org/robot_localization (accessed on 15 November 2014).

- Bonin-Font, F. YouTube. Available online: http://youtu.be/kKe1VzViyY8 (accessed on 7 January 2015).

- Negre, P.L.; Bonin-Font, F.; Oliver, G. Stereo graph SLAM for autonomous underwater vehicles. Proceedings of the 13th International Conference on Intelligent Autonomous Systems, Padova, Italy, 15–19 July 2014.

- Ude, A. Filtering in a unit quaternion space for model-based object tracking. Robot. Auton. Syst. 1999, 28, 163–172.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bonin-Font, F.; Massot-Campos, M.; Negre-Carrasco, P.L.; Oliver-Codina, G.; Beltran, J.P. Inertial Sensor Self-Calibration in a Visually-Aided Navigation Approach for a Micro-AUV. Sensors 2015, 15, 1825-1860. https://doi.org/10.3390/s150101825
