Multi-Agent Cooperative Target Search

Abstract

: This paper addresses a vision-based cooperative search for multiple mobile ground targets by a group of unmanned aerial vehicles (UAVs) with limited sensing and communication capabilities. The airborne camera on each UAV has a limited field of view and its target discriminability varies as a function of altitude. First, by dividing the whole surveillance region into cells, a probability map can be formed for each UAV indicating the probability of target existence within each cell. Then, we propose a distributed probability map updating model which includes the fusion of measurement information, information sharing among neighboring agents, information decay and transmission due to environmental changes such as the target movement. Furthermore, we formulate the target search problem as a multi-agent cooperative coverage control problem by optimizing the collective coverage area and the detection performance. The proposed map updating model and the cooperative control scheme are distributed, i.e., assuming that each agent only communicates with its neighbors within its communication range. Finally, the effectiveness of the proposed algorithms is illustrated by simulation.1. Introduction

With the fast development of high resolution imaging devices and processing technologies, unmanned aerial vehicles (UAVs) with air-borne cameras are increasingly employed in civil and military applications such as environmental monitoring, battlefield surveillance and map building, where ground-target search is one of the major applications [1,2]. Target tracking and search have been one of the most popular utilizations of UAVs [3,4]. The conventional method for target search by UAVs in a closed region divides the whole surveillance region into cells, and associates each cell with a probability or confidence of target existence in the cell which constitutes a probability map for the whole region [5,6].

In [7], an online planning and control method is proposed for cooperative search by a group of UAVs, where each agent keeps an individual probability map for the whole region updated according to the Dempster-Shafer theory. A path planning algorithm is designed by using the obtained measurement information, which requires each agent to directly communicate with all other agents. In [8], target detection is considered as part of an integrated mission including coverage control and data collection as parallel tasks for multi-agent networks. The coverage control method aims to maximize the joint detection probability of random events and the probability of target existence is updated by measurements based on the Bayesian rule. However, only the measurement information of direct neighbors is exchanged, which makes it difficult to obtain the target information of the whole surveillance region. In [9], a decentralized gradient-based control strategy is proposed for multiple autonomous mobile sensor agents searching for targets of interest by minimizing the joint team probability of no detection within action horizon based on range detection sensing model. However, each agent is required to collect detection information from all other agents. In [10], a decentralized search algorithm is developed which includes a two-step updating procedure for the probability maps. Each agent first obtains observations over the cells within its sensing region and updates its individual probability map by the Bayesian rule. Then, each agent transmits its individual probability map to its neighbors for map fusion. This algorithm is distributed and full network connectivity is not required. However, the lack of information correlation makes the map fusion difficult and only a heuristic fusion method is given in [10], the performance of which has not been analyzed. In our recent work [11], a distributed iterative map updating model is proposed to fuse the information from measurements and the maps of neighbors based on a logarithmic transformation of the Bayesian rule. Through this, the nonlinear Bayesian update is replaced by a linear one which simplifies the computation. The convergence speed of individual probability map of an agent is also analyzed under fixed detection and false alarm probabilities for the search of static targets.

The cooperative control is an important task for efficient target search by a group of UAVs. Compared with the centralized control algorithms, distributed control algorithms are more robust to accidental failures of UAVs and breaks of communication links [12]. In [13], a distributed multi-agent coverage control method is proposed based on a given sensing performance function related to the distance to robots and gradient descent algorithms are designed for a class of utility functions to optimize the coverage and sensing performance. In [14], a distributed, adaptive control law is developed to achieve an optimal sensing configuration for a network of mobile robots which obtain sensory information of a static environment and exchange their estimates of the environment with neighbors. In [15], a three dimensional distributed control strategy is proposed to deploy hovering robots with downward facing cameras to collectively monitor an environment. A new optimization criterion is defined as the information obtained by each pixel of a camera. In [16], a dynamic awareness model is proposed to control a multi-vehicle sensor network with intermittent communications. The state of awareness of each individual vehicle is updated by its own sensing model and sharing information with its neighbors. However, none of the coverage control schemes mentioned above has considered the detection results of target existence which may affect UAVs' movement decisions in target search. Moreover, there are very few works addressing the issue of distributed vision-based cooperative search for multiple mobile targets with probabilistic detections.

In this paper, we investigate the vision-based cooperative search for multiple ground mobile targets by a group of UAVs with limited sensing and communication capabilities. The main contribution of this paper is that a distributed strategy of information fusion and cooperative control is proposed for searching multiple mobile targets using multi-agent networks based on probabilistic detections. In addition, the time-varying detection and false alarm probabilities are considered which are due to the varying altitudes of the agents with 3-dimensional dynamics. Each agent under our search strategy shares local target information and controls its own behavior in a distributed manner. Based on the probability map updating model proposed in [11], we generalize the model by considering the information decay and transmission between cells due to environmental changes such as the target movement. The influence of the time-varying detection probability on the update of probability maps due to the three-dimensional UAV dynamics is also analyzed. Then, a coverage optimization problem is formulated to balance the coverage area and detection performance. The proposed map updating model and cooperative control scheme are distributed, i.e., each agent only communicates with the agents within its communication range.

This paper is organized as follows: Section 2 describes the basic notations and assumptions used in this paper. Section 3 presents the probability map updates by measurements and information sharing with time-varying detection probabilities. In Section 4, a three-dimensional coverage control method is presented for target search. Simulation results are shown in Section 5, and the conclusions are drawn in Section 6.

2. Basic Definitions and Assumptions

The surveillance region

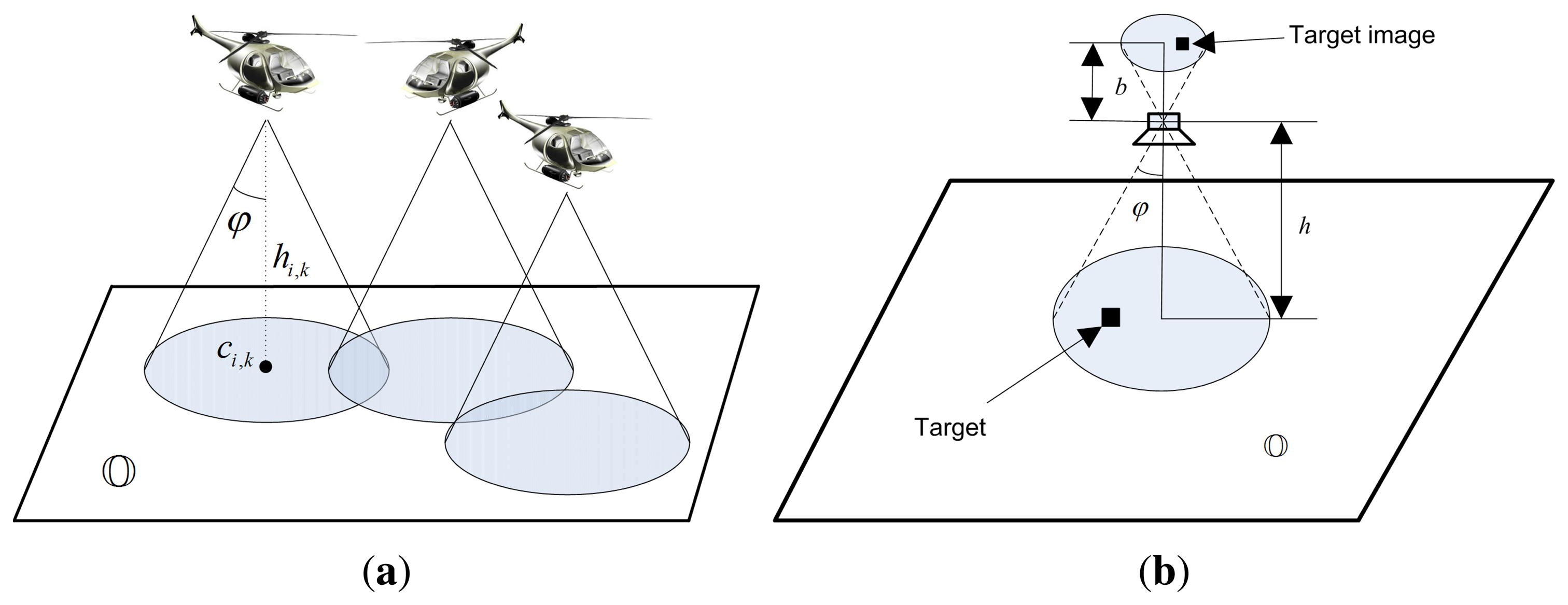

In this paper, we mainly discuss about the vision-based detections where each agent carries an airborne camera facing downward to surveillance region (as shown in Figure 1a). Each agent independently takes measurements Zi,g,k over the cells within its sensing region ℂi,k at time k, where

The topology of the network of all agents at time k is modeled by an undirected graph

3. Probability Map Update

3.1. Bayesian Update and Consensus-Based Map Fusion

In [11], we proposed a cooperative control scheme for target search in multi-agent systems. In a group of UAVs, each agent i keeps an individual probability map

i,g,0 < 1 and 1 > pi,k,qi,k > 0. For the cases with pi,k = 0 or 1 or qi,k = 0 or 1, simplified conclusions can be obtained as shown in [11] and will not be considered in this paper. By letting

i,g,0 < 1 and 1 > pi,k,qi,k > 0. For the cases with pi,k = 0 or 1 or qi,k = 0 or 1, simplified conclusions can be obtained as shown in [11] and will not be considered in this paper. By letting

Keeping Qi,g,k as the updated term instead of

Then, each agent i transmits the updated Qi,g,k of the whole region to its neighbors for map fusion, which is given by:

i,k (j ≠ i) and wi,j,k= 0 for j ∉

i,k (j ≠ i) and wi,j,k= 0 for j ∉

i,k. Then, a matrix composed of wi,j,k can be defined as:

i,k. Then, a matrix composed of wi,j,k can be defined as:

3.2. Time-Varying Detection Probability

In [11], we only considered a 2-dimensional control scheme assuming that all agents move on a fixed plane parallel to the ground plane. However, in the real world, UAVs such as helicopters can change their altitudes according to their task requirements so as to enlarge their sensing area (here we only consider cameras with a fixed zooming level). Therefore, in this paper, we will consider the influence of 3-dimensional dynamics of UAVs on the detection performance.

For vision-based detection, the detection probability relies on the picture resolutions. Figure 1b shows the basic imaging scheme by an airborne camera similar to the one given in [15,22]. In general, a desirable property for good target recognition is a “right” ratio between the size of the image and the size of the target, where “right” depends on the target type and the detection algorithm that is employed. To be more simplified, it can be assumed that the larger the image of a target in the picture (in terms of the number of occupied pixels) obtained by the UAV, the easier for the UAV to discriminate the target no matter what recognition method is used. Hence, we can model the target discriminability of a UAV as a function ρ proportional to the ratio between the size of a target image taken by the camera denoted by STI and the size of one pixel denoted by SP, i.e., . Here, we assume that all targets are of the same visual properties such as color, shape and size that are influential on target discriminability. It is also assumed that each camera has a fixed focal length so that we can only consider the change of ρ due to the variation of agent altitude. Then, by denoting the size of the projection of a target on the ground plane as ST, we can derive that:

The target discriminability determines the detection probability when a UAV is detecting the existence of targets within each cell under surveillance. It is natural to conceive that the detection probability pi,k increases and the false alarm probability qi,kdecreases as ρi,k increases. When the altitude of the UAV becomes larger than a threshold h̄, it runs out of its ability to discriminate any target from the background environment, which means that the detection result dose not rely on the true existence of the target any more. That is, if hi,k ⩾ h̄, we have P (Zi,g,k = 0|θg = 1) = P (Zi,g,k = 1|θg = 1) = P (Zi,g,k = 1|θg = 0) = P (Zi,g,k = 1|θg = 0), i.e., pi,k = qi,k = 0.5. Generally, when h̲i,k ∈ [h, h̄] (h̲<h̄), pi,k should be a monotonically increasing function of ρi,k, or more explicitly, a monotonically decreasing function of hi,k. Therefore, we assume the following detection probability model:

Remark 1

Model (9) is motivated by the natural understanding of the interaction between the altitude of an agent and its detection and false alarm probabilities. It only reflects the general relation between those parameters, and is not restricted to a specific parametric representation of f1 and f2. Hence, our method is applicable for any detection probability function that fits for the model. An experimental detection probability model of CCD camera has been given in [22].

Remark 2

pi,k and qi,k can also be cell-dependent, i.e., they may vary from place to place due to environment conditions. For example, the target is often easier to be discriminated on an open ground than on a land with trees. In complex environments, agents must know the detection probability and false alarm probability models of different type of regions. For the ease of expression, we assume the models to be constant across the whole surveillance region.

Denoting by mi,g,k the number of observations taken over cell g up to time k by agent i and defining , we can get the following conclusions for the update of Qi,g,k.

Theorem 1

Given the initial prior probability map 0 <

- (1)

If a target is present within cell g, (i.e., ) ∀i ∈

as mg,k → +∞.

- (2)

If no target is present within cell g, (i.e., ) ∀i ∈

as mg,k → +∞.

Proof

See Appendix A.

3.3. Environment-Based Probability Map

In the map updates (5) and (6), the effect of the environmental changes such as the information decay and transmission between cells has not been considered. For example, if targets may randomly appear or disappear during search, the historical information about the target existence cannot reflect the true current situation and revisits of certain frequency to the detected cells are needed for information update. This problem can be formulated as the information decay for each cell. If a target may move from one cell to another, then part of the information for the former cell should be removed and counted as the new information for the latter cell. This problem can be formulated as the information transmission between each two cells. Therefore, we need to generalize the aforementioned map updating model to be applicable to the case with such environmental changes. Similar to the assumption made in [16], we assume that Qi,g,k decays exponentially for each cell if there is no prior knowledge and/or no measurement information. The information transmission between cells due to target movement is modeled based on the transition of probabilities. In addition, the prior knowledge about the environmental change is taken as the system input. All these lead to the following generalized updating model for Qi,g,k:

Remark 3

ai,g,r,k and bi,g,r,k are defined based on the physical meaning of information transmission due to the target movement in the real world. Since the combination of Qi,r,k (r ∈

Here we give a simple example to illustrate how the parameters are designed if the true target dynamic model is give by x k+1 = Ψxk where xk is a vector including the target location. In this case, one can calculate the transition probability P(θg,k+1 = 1|θr,k= 1) for any two cells r and g where θr,k = 1 represents that the target locates within cell r at time k. Following this, given the current accumulated information on target existence Qi,r,k of agent ifor cell r at time k, one can calculate bi,g,r,k following its definition. Further, with the results of bi,g,r,k for any two cells r and g, one can calculate ai,g,r,k according to its definition in Equation (12). If the target will not suddenly disappear/appear, the decaying factor α can be set as zero.

Define the following augmented variables:

According to Theorem 1, ‖Qk‖ can be seen as the gathered information for decision making on the target existence and the larger the ‖Qk‖, the less the uncertainty about the target existence or nonexistence. Hence, our aim of controlling the UAVs is to maximize ‖Qk‖ in some sense, which will be discussed in the following section.

4. Cooperative Coverage Control

In the previous section, a distributed map updating scheme was proposed for fusion of the knowledge of multiple agents. In this section, we will design a cooperative control strategy that optimizes the trajectories of agents for target search based on their real-time updated knowledge about the target information. Within each time interval, an agent first updates its probability map by the the map updating scheme designed in Section 3.3 and then makes a control decision on which place it should move to for the next observation by collective optimization which will be addressed in this section. The two steps make the whole network form a closed-loop sensing and feedback control system.

Here we consider the waypoint motion model for each agent:

Following Equation (13), we can get

Notice that Equation (16) is not an approximation of Equation (15), but a new cost function we intend to optimize which is an upper bound of Equation (16). Such way of defining the cost function is very common in statistics and estimation theory such as the Cramér–Rao lower bound, which is often selected as the cost function if the true variance of estimation error is time-varying and unknown.

Following Equation (16), we should try to maximize and the collective sensing area of all agents. From Equation (4), we get for g ∈ ℂj,k:

It is straightforward to find that is monotonically increasing with respect to pj,k and monotonically decreasing with respect to qj,k no matter θg,k = 1 or not. Thus, Equation (16) is further replaced with the following optimization problem:

at time k, and its influence on the control law will be shown later. {

at time k, and its influence on the control law will be shown later. {

1,k,…

1,k,…  N,k} is a partition of

N,k} is a partition of

at time k subject to μi,k ∈

at time k subject to μi,k ∈

i,k, such as the Voronoi partition. The introduction of the partition is for avoidance of collision between UAVs and ease of dealing with the overlapped sensing regions between neighboring agents, which will be discussed later. Since pi,k ⩾ qi,k,

i,k, such as the Voronoi partition. The introduction of the partition is for avoidance of collision between UAVs and ease of dealing with the overlapped sensing regions between neighboring agents, which will be discussed later. Since pi,k ⩾ qi,k,

(μk) is always nonnegative and

(μk) is always nonnegative and

(μk) = 0 if hi,k ⩾ h̄. Denoting by ∂(•) the boundary of the corresponding region and n∂(•) (r) the outward pointing normal vector of the boundary ∂ (•) at point r, we can compute the gradient of

(μk) = 0 if hi,k ⩾ h̄. Denoting by ∂(•) the boundary of the corresponding region and n∂(•) (r) the outward pointing normal vector of the boundary ∂ (•) at point r, we can compute the gradient of

(μk) as follows.

(μk) as follows.Theorem 2

The gradient of the cost function

for hi,k ∈ (h̲, h̄), and

Proof

See Appendix B.

Following Theorem 2, a gradient-based control law is given by

Remark 4

Note that the control input is always upper bounded in real systems, i.e., ‖ui,k‖ ⩽ umax for some positive number umax and the height of each agent is also bounded by hi,k < h̄ to have meaningful detections. Moreover, to avoid collision when neighboring agents are at the same altitude, the motion of each agent should be constrained by ci,k+1 ∈

where λi,k is a scaling factor defined by

Λi,k is a buffer region enclosing the border of

Generally, it is favored that UAVs stay longer in the region with less gathered information to take more measurements. Thus, we define the weighting function ϕi,k (g) as a function of the gathered information ‖Qi,g,k−1‖ for each cell, i.e.:

Remark 5

The partition {

5. Simulation

5.1. Simulation Environment

We deploy multiple UAVs to search for four ground targets. The whole surveillance region is a square region of [0, 50] × [0, 50] m2 as shown in Figure 2a, within which lie two crossing roads denoted by

Since the convergence of the individual probability map

5.2. Simulation Results

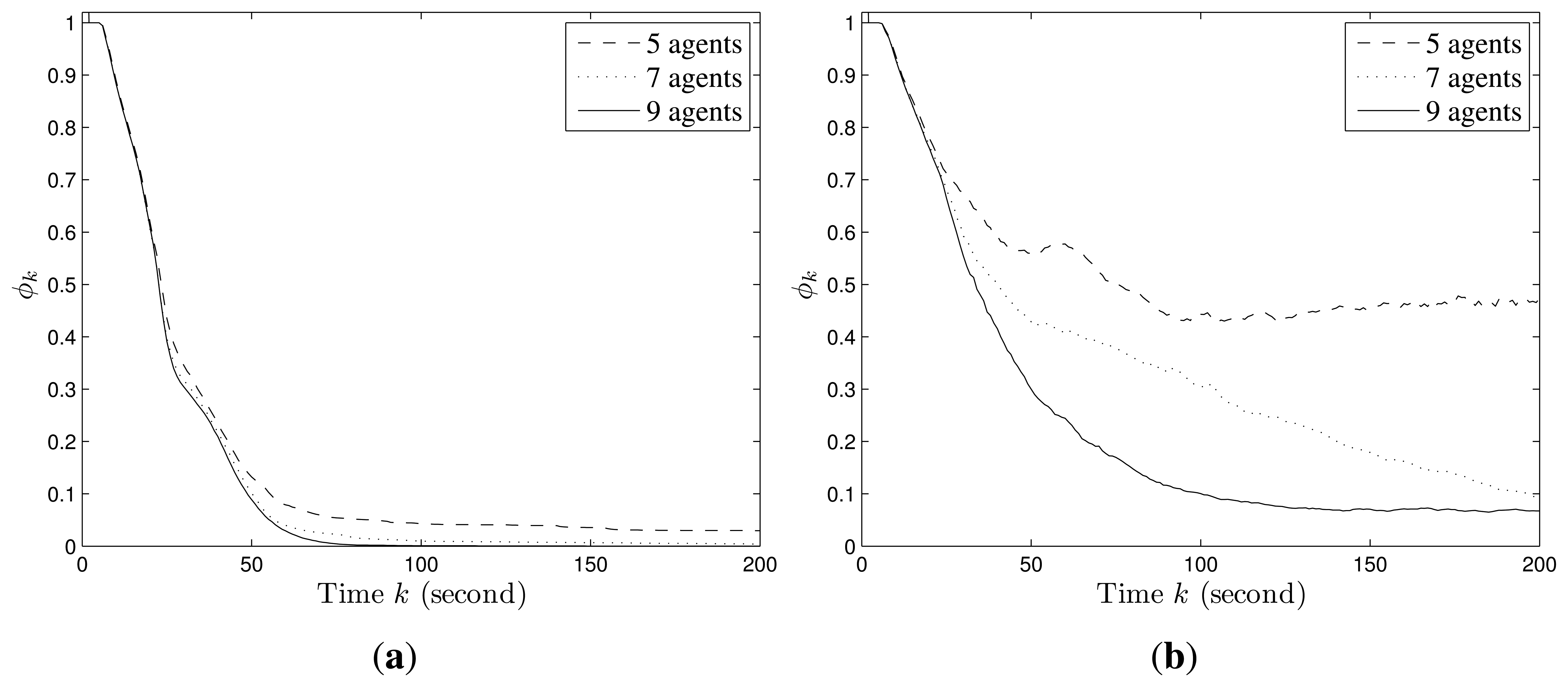

Figure 2 shows an example of the convergence process of individual probability maps in Scenario I with stationary targets, where the probabilities converge to 1 for the cells within which targets truly exist and 0 for the cells within which no target exists. The snapshots of UAVs in Scenario I are shown in Figure 3. Additionally, ϕk finally converges to 0 and the more agents are deployed, the faster it converges as shown in Figure 4a.

The convergence process of an individual probability map in Scenario II with mobile targets is shown in Figure 5, where the probabilities for the cells around targets may not converge to 0 as in Scenario I due to the random mobility of targets. However, we still can infer that there are four targets on the roads and have a rough estimation of their positions based on the envelopes of the final probability maps of UAVs. The snapshots of UAVs in Scenario II at according times are shown in Figure 6. In this case, ϕk does not converge to 0 as shown in Figure 4b. However, a smaller ϕk can be obtained with more agents deployed since the collective sensing area becomes larger. Compared with the results in Scenario I, the number of deployed agents has a greater impact on the convergence performance of probability maps in Scenario II with random target mobility. Hence, the algorithm is more robust with more UAVs deployed.

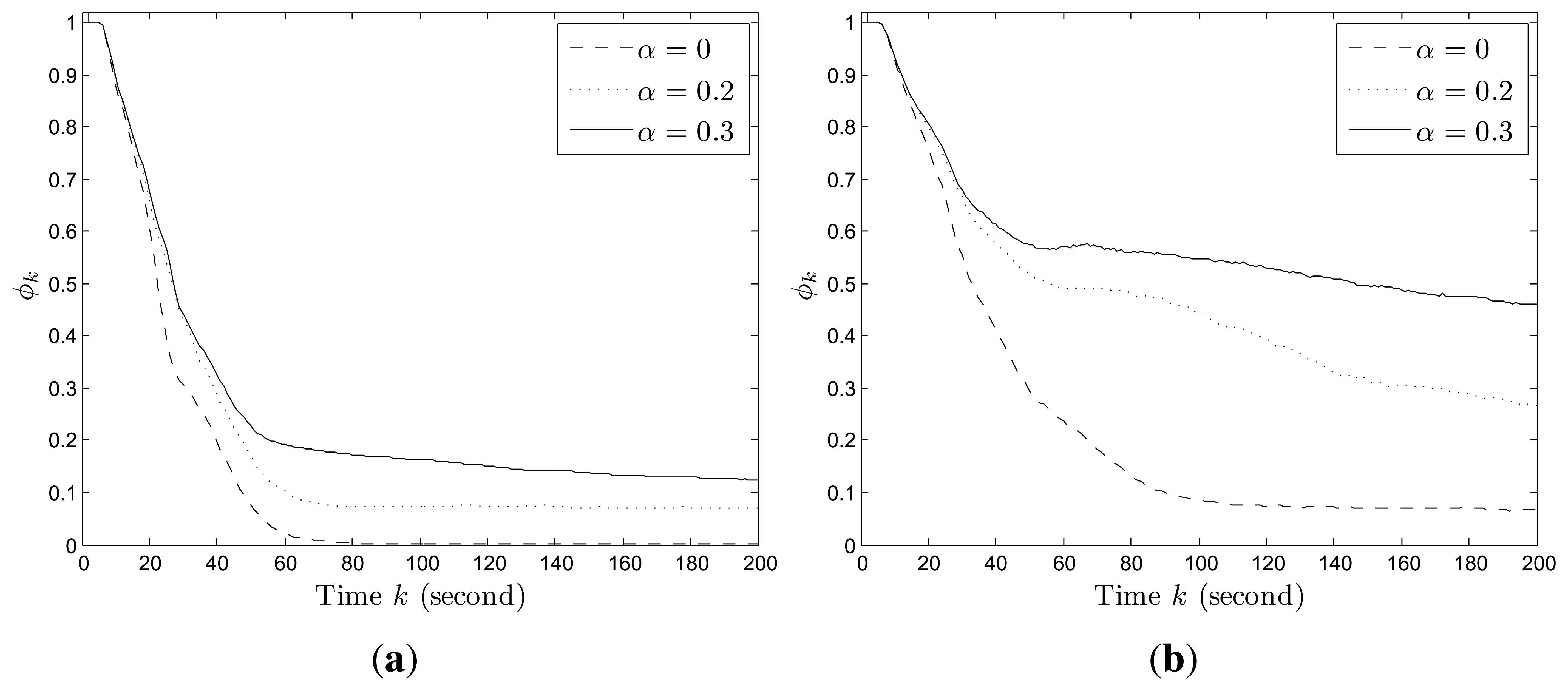

In addition, we also test the impact of the information decaying factor on the convergence results. According to the simulation results (as shown in Figure 7), a larger decaying factor will lead to larger average uncertainty about the target existence in the whole region, because the accumulated information for each cell decays faster. In fact, the design purpose of the decaying factor is to let agents revisit each cell at certain frequency to update the latest information about target existence in the cell. Therefore, the tradeoff lies in that, a larger decaying factor leads to larger uncertainty, but makes the agents pay more attention to the cells with fewer observations. However, there is no quantitative means of choosing the decaying factor and users may find a proper one via simulation method.

6. Conclusions

In this paper, we studied the three-dimensional vision-based cooperative control and information fusion in target search by a group of UAVs with limited sensing and communication capabilities. First, heuristic detection probability and false alarm probability models were built which are related to the target discriminability of a camera and varies as a function of altitude. Then, we formulated the target search problem as a coverage optimization problem by balancing the coverage area and the detection performance. A generalized probability map updating model was proposed, by considering the information decay and transmission due to environmental changes such as the target movement. The simulation results showed that the proposed algorithms can make the individual probability maps of all agents converge to the same one which reflects the true environment when the targets are stationary. The influence of target mobility and the number of deployed UAVs on the convergence of probability maps has also been illustrated by simulation. Following this work, there is still a big potential area for future development and generalization of the proposed method. For example, the extension for detection by heterogenous sensors is an interesting topic since more types of information can be combined to improve the detection performance. More realistic environmental and system conditions that can affect the search results need to be considered such as the light intensity, block on the line of sight, camera with adjustable focus, asynchronous communication, etc.

Author Contributions

Jinwen Hu, Lihua Xie and Jun Xu conceived and designed the study. Jinwen Hu and Zhao Xu designed and implemented the simulation and method validation. Jinwen Hu wrote the paper. Lihua Xie and Jun Xu reviewed and edited the manuscript. All authors read and approved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Theorem 1

First, we consider Case 1, where a target is present within cell g. Define the following augmented notations for the entire system:

Then, the updating rule (5) and (6) can be replaced by the following equation:

Hence,

{g∈ ℂj,t} is the indicator function defined as:

{g∈ ℂj,t} is the indicator function defined as:

Since Qi,g,0, υj1,g,η1 and υj2,g,η2 are independent for j1 ≠ j2 or η1 ≠ η2, we can get the variance

Considering that D [υj,g,t] is a continuous function of pj,t and 1 > p̌ ⩾ pj,t ⩾ 0.5 + ε > 0.5, 0 < qˆ ⩽ qj,t ⩽ 0.5 − ε < 0.5, there exists a constant real number such that D [υj,g,t] ⩽ σ2 for g ∈ ℂj,t, which implies

According to the Kolmogorov Strong Law of Large Numbers, [24] we get

Hence,

On the other hand, since pj,t > qj,t, it is straightforward to get

Hence,

Following the same procedure of the proof above, we can prove the conclusion of Case 2.

Appendix B. Proof of Theorem 2

We first consider hi,k ∈ (h̲, h̄). From Equation (17), we get

Defining a set of agents for agent i, i.e.,

and j1 ≠ j2 we have

. For r ∈

, we have

. Hence,

and j1 ≠ j2 we have

. For r ∈

, we have

. Hence,

From Equation (9) and according to the results of [15], we get

According to Equation (9) pi,k = p̌ for hi,k ∈ (0, h̲). It is straightforward to get Equation (19) following the same procedure of proof as above.

References

- Garzón, M.; Valente, J.; Zapata, D.; Barrientos, A. An Aerial-Ground Robotic System for Navigation and Obstacle Mapping in Large Outdoor Areas. Sensors 2013, 13, 1247–1267. [Google Scholar]

- Heredia, G.; Caballero, F.; Maza, I.; Merino, L.; Viguria, A.; Ollero, A. Multi-Unmanned Aerial Vehicle (UAV) Cooperative Fault Detection Employing Differential Global Positioning (DGPS), Inertial and Vision Sensors. Sensors 2009, 9, 7566–7579. [Google Scholar]

- Kocur, D.; Švecová, M.; Rovňáková, J. Through-the-Wall Localization of a Moving Target by Two Independent Ultra Wideband (UWB) Radar Systems. Sensors 2013, 13, 11969–11997. [Google Scholar]

- Xu, Y.; Xu, H.; An, W.; Xu, D. FISST Based Method for Multi-Target Tracking in the Image Plane of Optical Sensors. Sensors 2012, 12, 2920–2934. [Google Scholar]

- Bertuccelli, L.; How, J. Robust UAV search for environments with imprecise probability maps. Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 12–15 December 2005; pp. 5680–5685.

- Yang, Y.; Minai, A.; Polycarpou, M. Decentralized cooperative search by networked UAVs in an uncertain environment. Proceedings of the American Control Conference, Boston, MA, USA, 30 June–2 July 2004; Volume 6, pp. 5558–5563.

- Yang, Y.; Polycarpou, M.; Minai, A. Multi-UAV cooperative search using an opportunistic learning method. J. Dyn. Syst. Meas. Control 2007, 129, 716–728. [Google Scholar]

- Zhong, M.; Cassandras, C. Distributed coverage control and data collection with mobile sensor networks. Proceedings of the IEEE Conference on Decision and Control, Atlanta, GA, USA, 15–17 December 2010; pp. 5604–5609.

- Gan, S.K.; Sukkarieh, S. Multi-UAV target search using explicit decentralized gradient-based negotiation. Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 751–756.

- Millet, P.; Casbeer, D.; Mercker, T.; Bishop, J. Multi-Agent Decentralized Search of a Probability Map with Communication Constraints. Proceedings of the AIAA Guidance, Navigation, and Control Conference, Toronto, ON, Canada, 2–5 August 2010.

- Hu, J.; Xie, L.; Lum, K.Y.; Xu, J. Multiagent Information Fusion and Cooperative Control in Target Search. IEEE Trans. Control Syst. Technol. 2013, 21, 1223–1235. [Google Scholar]

- Arai, T.; Pagello, E.; Parker, L.E. Editorial: Advances in multi-robot systems. IEEE Trans. Robot. Autom. 2002, 18, 655–661. [Google Scholar]

- Cortes, J.; Martinez, S.; Karatas, T.; Bullo, F. Coverage control for mobile sensing networks. IEEE Trans. Robot. Autom. 2004, 20, 243–255. [Google Scholar]

- Schwager, M.; Rus, D.; Slotine, J. Decentralized Adaptive Coverage Control for Networked Robots. Int. J. Robot. Res. 2009, 28, 357–375. [Google Scholar]

- Schwager, M.; Julian, B.; Angermann, M.; Rus, D. Eyes in the Sky: Decentralized Control for the Deployment of Robotic Camera Networks. Proc. IEEE 2011, 99, 1541–1561. [Google Scholar]

- Wang, Y.; Hussein, I. Awareness coverage control over large-scale domains with intermittent communications. IEEE Trans. Autom. Control 2010, 55, 1850–1859. [Google Scholar]

- Horn, R.; Johnson, C. Matrix Analysis; Cambridge University Press: Cambridge, UK, 1990. [Google Scholar]

- Lu, J.; Chen, G. A time-varying complex dynamical network model and its controlled synchronization criteria. IEEE Trans. Autom. Control 2005, 50, 841–846. [Google Scholar]

- Solis, R.; Borkar, V.S.; Kumar, P. A new distributed time synchronization protocol for multihop wireless networks. Proceedings of the 45th IEEE Conference on Decision and Control, San Diego, CA, USA, 13–15 December 2006; pp. 2734–2739.

- Kopetz, H.; Ochsenreiter, W. Clock synchronization in distributed real-time systems. IEEE Trans. Comput. 1987, 100, 933–940. [Google Scholar]

- Elson, J.E. Time Synchronization in Wireless Sensor Networks. Ph.D. Thesis, University of California Los Angeles, Los Angeles, CA, USA, 2003. [Google Scholar]

- Hansen, S.; McLain, T.; Goodrich, M. Probabilistic searching using a small unmanned aerial vehicle. Proceedings of AIAA Infotech@Aerospace, Rohnert Park, CA, USA, 7–10 May 2007; pp. 7–10.

- Hu, J.; Xu, Z. Distributed cooperative control for deployment and task allocation of unmanned aerial vehicle networks. IET Control Theory Appl. 2013, 7, 1574–1582. [Google Scholar]

- Ash, R.; Doleans-Dade, C. Probability and Measure Theory; Academic Press: San Diego, USA, 2000. [Google Scholar]

- Xiao, L.; Boyd, S.; Lall, S. A scheme for robust distributed sensor fusion based on average consensus. Proceedings of the 4th International SymposiumInformation Processing in Sensor Networks, Los Angeles, CA, USA, 25–27 April 2005; pp. 63–70.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Hu, J.; Xie, L.; Xu, J.; Xu, Z. Multi-Agent Cooperative Target Search. Sensors 2014, 14, 9408-9428. https://doi.org/10.3390/s140609408

Hu J, Xie L, Xu J, Xu Z. Multi-Agent Cooperative Target Search. Sensors. 2014; 14(6):9408-9428. https://doi.org/10.3390/s140609408

Chicago/Turabian StyleHu, Jinwen, Lihua Xie, Jun Xu, and Zhao Xu. 2014. "Multi-Agent Cooperative Target Search" Sensors 14, no. 6: 9408-9428. https://doi.org/10.3390/s140609408