Contact Region Estimation Based on a Vision-Based Tactile Sensor Using a Deformable Touchpad

Abstract

A new method is proposed to estimate the contact region between a sensor and an object using a deformable tactile sensor. The sensor consists of a charge-coupled device (CCD) camera, light-emitting diode (LED) lights and a deformable touchpad. The sensor can obtain a variety of tactile information, such as the contact region, multi-axis contact force, slippage, shape, position and orientation of an object in contact with the touchpad. The proposed method is based on the movements of dots printed on the surface of the touchpad and classifies the contact state of each dot into three types: a non-contacting dot, a sticking dot and a slipping dot. Considering the movements of the dots with noise and errors, equations are formulated to discriminate between the contacting dots and the non-contacting dots. The set of contacting dots discriminated by the formulated equations constitutes the contact region. Next, a method is developed to detect the dots in images of the surface of the touchpad captured by the CCD camera. A method to assign numbers to dots for calculating the displacements of the dots is also proposed. Finally, the proposed methods are validated by experimental results.

1. Introduction

Tactile receptors in the skin allow humans to sense multimodal tactile information such as the contact force, slippage, shape and temperature of a contacted object. By feeding back information from tactile receptors, humans can control their muscles dexterously. Therefore, tactile sensing is a crucial factor for robots to imitate skilled human behaviors. For practical applications, tactile sensors should meet three specific requirements. Firstly, a flexible sensor surface is desirable because the sensor should fit the object geometrically to prevent the contacted object from collapsing and to enhance the stability of the contact. Secondly, a simple structure is required for compactness of robots. Thirdly, to achieve dexterous and multifunctional robots, we need a sensor that can obtain various types of tactile information simultaneously.

Many types of tactile sensors have been developed using various sensing elements such as resistive, capacitive, piezoelectric, ultrasonic or electromagnetic devices [1,2]. In order to estimate the slippage of a contacted object, a sensor with an array of strain gauges embedded in an elastic body has been proposed [3]. Standing cantilevers and piezoresistors arrayed in an elastic body have been developed for detecting shear stress [4]. Schmitz et al. have implemented twelve capacitance-to-digital converter (CDC) chips in a robot finger, providing twelve 16-bit measurements of capacitance [5]. Sensing elements based on a capacitive method have been arrayed on conductive rubber at regular intervals for measuring three components of stress [6].

However, crucial practical issues remain unresolved. The structures of these sensors are complex and cannot satisfy the second requirement described in the previous paragraph because these sensors require many sensing elements and complicated wiring. Although a wire-free tactile sensor using transmitters/receivers [7] and a sensor using micro coils whose impedance changes with the contact force [8] have been proposed, they are also packed in complex structures. Small sensors using microelectromechanical systems (MEMS) have been manufactured [9–12]. However, the surfaces of these sensors are minimally deformable and cannot satisfy the first requirement described above.

Unlike these sensors, vision-based sensors are suitable for tactile sensing [13–15]. Typical vision-based sensors can satisfy the first and second requirements described above because they consist of the following two components: a deformable contact surface made of elastic material that conforms to contacted objects; and a camera to observe the deformation of the contact surface. Since multiple sensing elements and complex wiring are not required, compact vision-based sensors can be easily fabricated. Analysis of the deformation of the surface yields multiple types of tactile information. Two-layered dot markers embedded in the elastic body of a sensor have been used to visualize the three-dimensional deformation of the elastic body and to measure a three-axis contact force [16,17]. The markers are observed by a charge-coupled device (CCD) camera. Sensors consisting of rubber sheets with nubs, a transparent acrylic plate, a light source and a CCD camera have been developed [18,19]. Light traveling through the transparent plate is diffusely reflected at the points where the nubs contact the plate. The intensity of the reflected light captured by the CCD camera is transformed into the three-axis contact force. The sensor reported in [20] estimates the orientation of an object by using the four corner positions of the reflector chips embedded in the deformable surface of the sensor. However, these sensors cannot satisfy the third requirement because they detect only a single type of tactile information.

Moreover, although the sensors in the literature have provided information such as the contact force, slippage and shape of an object, the contact region between the sensor and an object also gives crucial information. The contact region allows us to estimate the shapes of objects in an accurate manner when combined with shape information from a sensor surface. Since geometric fit between a sensor and object occurs only in the contact region, the accurate estimation of an object's shape requires information about not only the sensor's shape but also the contact region. In consideration of implementing the sensor in robot hands, when the contact region is small, the grasped object can be easily rotated because the feasible contact moment is also small. In order to avoid the risk that the sensor surface tears or a grasped object collapses, and to enhance a grasping task's stability with a sufficiently large contact area, it is necessary to evaluate the contact pressure based on the contact region.

Among the many sensors that sense contact forces, some sensors that measure the pressure distribution may detect the contact region based on that distribution [16–19]. In ideal situations, the pressure is zero outside the contact region and nonzero inside it. However, this assumption is violated by the stiffness of the elastic body. Moreover, in order to measure the pressure distribution, the sensors require many arrayed sensing elements and wiring, as described above. Some sensors have been proposed for obtaining the contact region directly. A sensor using regularly arrayed cantilevers has been developed for estimating the contact region of objects from the deformation of the cantilevers in the elastic body [21]. However, this sensor also requires many arrayed sensing elements, and the measurement error depends on the direction and position of the cantilevers. A large error can be caused when the cantilevers are far away from the contacted object. A finger-shaped tactile sensor based on optical phenomena has been developed for detecting the contact location [22]. The light travels from optical fibers into a hemispherical optical waveguide in the elastic sensor surface. When the sensor surface touches the internal optical waveguide due to the contact between the sensor surface and the object, light is reflected in the contact region. A position sensitive detector (PSD) receives the reflected light, and thus the contact region is detected from the signals of the PSD. However, the surfaces of such sensors cannot fit the objects geometrically because, although the surface is elastic, the optical waveguide inside is not deformed. Therefore, a large contact region cannot be generated, which leads to unstable contact.

We have proposed a vision-based tactile sensor that can sense multiple types of tactile information simultaneously including the slippage [23,24], contact region [25], shape [26], multi-axis contact force [27], position [25] and orientation [25] of an object. We have applied this sensor to prevent the object from slipping [28]. The sensor consists of a CCD camera, light-emitting diode (LED) lights and a hemispherical elastic touchpad for contacting the object. Because of the simple structure of the sensor and the deformable touchpad, our proposed sensor can satisfy the above three requirements: a deformable surface for the sensor; simple structure; and simultaneous acquisition of various types of tactile information. However, the previous method used for estimating the contact region required the strict restriction that the contact surface of the object must be flat or convex [25].

The purpose of this study is to estimate the contact region between the sensor and a contacted object without strict assumptions. The proposed method is based on the movements of dots printed on the surface of the sensor. The contact state of each dot is classified into three types: the non-contacting dot, the sticking dot and the slipping dot. Considering the movements of the dots, equations are formulated to discriminate between the contacting dots and the non-contacting dots; these equations are then modified by selecting an appropriate time interval and introducing threshold values. The set of contacting dots discriminated by the formulated equations constitutes the contact region. Next, an image processing method is proposed to detect the dots in images of the surface of the sensor captured by the CCD camera. A method to assign numbers to dots for calculating the displacements of the dots is also proposed. Finally, the proposed methods are validated by experimental results.

2. Vision-Based Tactile Sensor

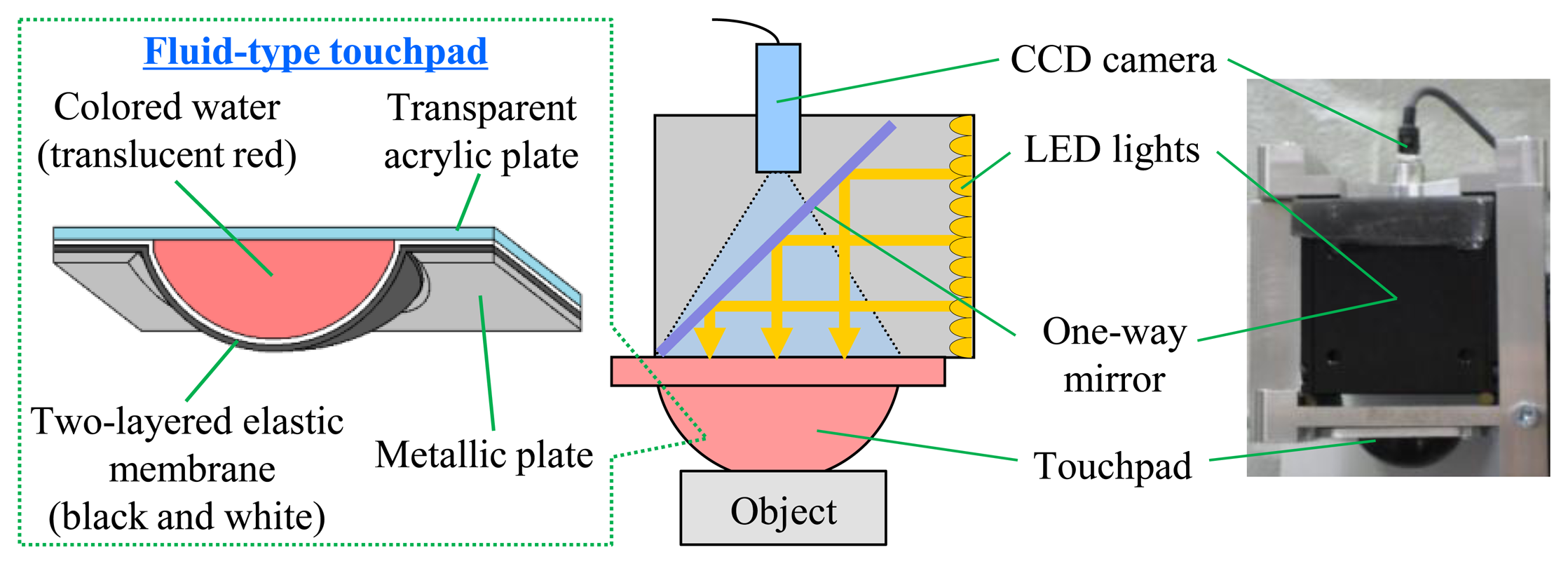

Figure 1 shows the configuration of a vision-based tactile sensor which consists of a CCD camera, LED lights, and a deformable fluid-type touchpad. The dimensions of the LED lights and the CCD camera are 60 × 60 × 60 mm and 8 × 8 × 40 mm, respectively. The fluid-type touchpad is hemispherical, with a curvature radius and height of 20 mm and 13 mm, respectively. The surface of the touchpad is an elastic membrane made of silicone rubber; the inside of the membrane is filled with translucent, red-colored water. A dotted pattern is printed on the inside of the touchpad surface to observe the deformation of the touchpad. When the touchpad comes in contact with objects, analysis of the deformation yields multimodal tactile information, using an image of the inside of the deformed touchpad captured by the CCD camera. Figure 2 shows the captured images, sized 640 × 480 effective pixels, when the touchpad is not in contact with an object and when it is in contact with an object. Our proposed sensor can obtain multiple types of tactile information, including the contact region, multi-axis contact force, slippage, shape, position and orientation of an object [23–27].

3. Estimation of Contact Region

3.1. Theory for Estimating the Contact Region

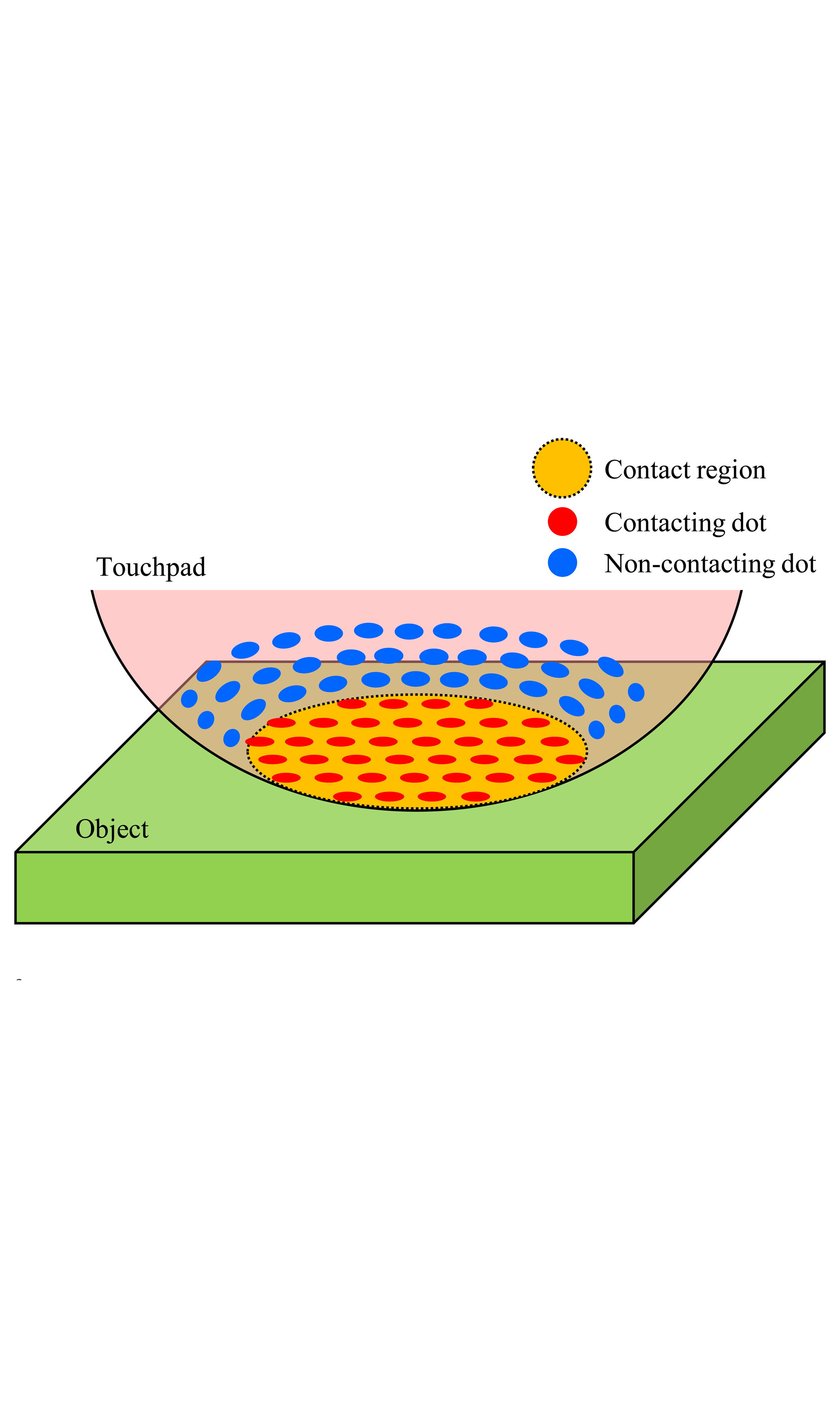

In order to estimate the contact region between the touchpad and an object, we treat the dots printed on the surface of the touchpad as sensing elements. If each dot contacting the object can be discriminated, the contact region can be constructed as the set of dots in the contact region, as shown in Figure 3. Whereas other sensors use many sensing devices and wiring for obtaining the contact region [16–19,21], our approach is advantageous because fewer sensing elements and less wiring are required, which yields a more compact structure. Moreover, the method is general because it can be applied to other sensors that have dots or markers on the sensor surface. Unlike discrete sensing devices, the dots can easily be made smaller by a printing technique, and thus high resolution is expected. The sensor for obtaining the contact region in [22] cannot fit the objects geometrically because of its solid inner body. Our previous work in [25] required the strict restriction that the contact surface of the object must be flat or convex. In contrast to these previous works, our sensor needs no large array of sensing devices, can deform because of the elastic touchpad, and does not require strict assumptions about the objects. In the next section, we discriminate dots to construct the contact region.

3.2. Discrimination of Dots

The contact state of a dot is classified into three types—the non-contacting dot, the sticking dot and the slipping dot. The non-contacting dot is a dot that lies outside of the contact region. The sticking dot is defined as a dot that is in the contact region but does not slip, while the slipping dot slips on an object in the contact region. The contacting dots include the sticking dots and the slipping dots. In order to construct the contact region, the multiple types of dots are discriminated, and the contacting dots are extracted.

To solve the problem of discriminating among the dots, we focus on dynamic information concerning the dots. Considering the movements of the dots with reference to an object, we formulate equations to discriminate between the contacting dots and the non-contacting dots. Here, calculation of the positions and movements of the dots is described in the next section.

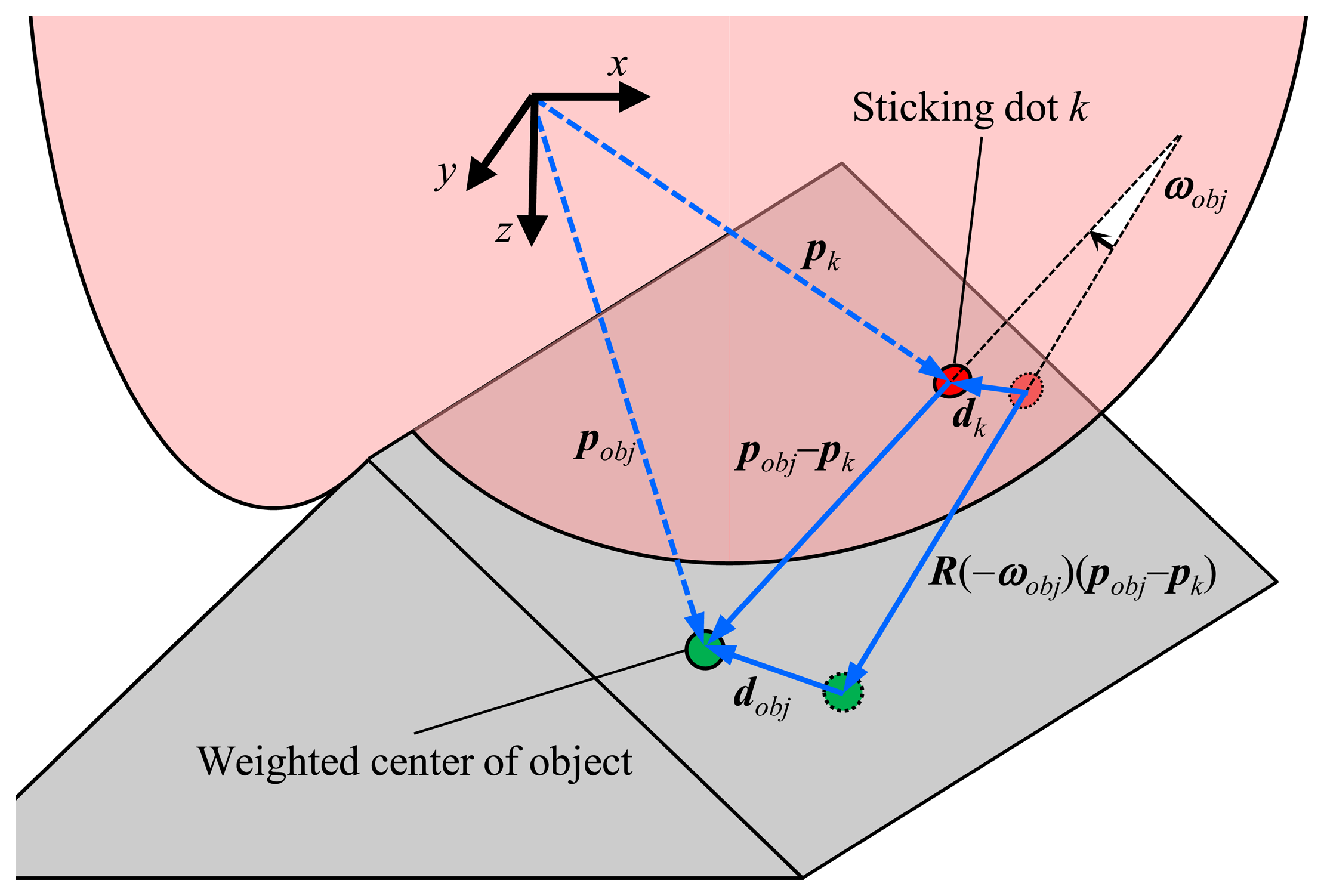

Firstly, we address the discrimination of the sticking dots. When a certain dot is in contact with the object without slippage, the movement of the dot is geometrically-determined from the movement of the object. The sticking dot must satisfy the following equation:

In order to apply Equation (1) to all dots, we must obtain the rotation angle, the displacement and the position of the object. Although these values cannot be directly obtained, our previous method can be used to estimate the rotation angle and the displacement of the object under the assumption that at least a set of nine dots does not slip on the surface of the object [25]. This assumption holds when our previous method for preventing the object from slipping is applied [28].

We introduce the contact reference dot previously proposed in [25] to calculate the rotation angle and the displacement of the object. From among the multiple dots printed on the surface of the touchpad, the contact reference dot kref, is defined in the following equation as the dot with the greatest displacement from the initial position:

The contact reference dot is defined such that it is always in contact with the object. Moreover, the contact reference dot does not slip on the surface of a contacted object, because our previous method can be applied to prevent the object from slipping [28]. As a result of this characteristic, the displacement and the rotation angle of the contact reference dot are equal to those of the object as demonstrated in an earlier manuscript [25]. Therefore, the contact reference dot always satisfies the following equations based on Equation (1):

where ωref, dref and pref are the rotation angle, the displacement and the position of the contact reference dot kref.
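The selection of the contact reference dot described above (the dot with the greatest displacement from its initial position) can be illustrated with a minimal Python sketch. The function name, the tuple representation of positions and the sample values are hypothetical and are not taken from the paper; the sketch only shows the argmax selection, not the estimation of the rotation angle.

```python
import math

def contact_reference_dot(initial_positions, current_positions):
    """Return the index of the dot displaced farthest from its initial position.

    Both arguments are equally long lists of (x, y, z) tuples (assumed layout).
    """
    def displacement(k):
        return math.dist(initial_positions[k], current_positions[k])
    return max(range(len(initial_positions)), key=displacement)

# Hypothetical positions of three dots before and during contact.
initial = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
current = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.9), (2.0, 0.0, 0.2)]
k_ref = contact_reference_dot(initial, current)
```

In this example the second dot (index 1) has moved the most and is therefore chosen as the reference.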

Next, we calculate the position of the object. When ωobj = ωref = 0 without rotation, pobj is eliminated in Equations (1) and (3) because R(–ωobj) = R(–ωref) = I. When ωref is not equal to zero, pobj is given as follows by transforming Equation (3):

Secondly, the discrimination of non-contacting dots is considered. When a dot is not in contact with the object, the dot does not satisfy Equation (7) as follows:

Thirdly, the difficult problem of the discrimination of slipping dots is considered—the displacements of the slipping dots are not equal to the displacement of the object because of the slippage. The approach used in this paper to solve this problem is to use the normal component of the displacement, which is perpendicular to the surface of the object. Although the slipping dot slips on the surface of the object, the dot does not move in the normal direction that is perpendicular to the surface of the object. Therefore, the normal component of the displacement of the dot is independent of the slippage. The normal component of the displacement of the slipping dot is equal to that of the object as follows:
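The idea of comparing only the normal component can be illustrated with a small Python sketch. It is a simplified illustration, not the paper's Equation (9): it assumes pure translation of the object, a known unit normal of the object surface, and a hypothetical tolerance `tol` standing in for a threshold value. All names are illustrative.

```python
def dot_product(a, b):
    """Inner product of two equally long vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

def normal_component_matches(d_dot, d_obj, normal, tol):
    """True if the dot and the object moved equally far along the unit normal.

    Tangential motion (slippage along the surface) does not affect the result.
    """
    return abs(dot_product(d_dot, normal) - dot_product(d_obj, normal)) <= tol

normal = (0.0, 0.0, 1.0)          # assumed unit normal of the object surface
d_obj = (0.0, 0.0, -1.0)          # object displacement
d_slipping = (0.4, 0.0, -1.0)     # slid tangentially, same normal motion
d_free = (0.1, 0.0, -0.3)         # not pushed by the object
slipping_ok = normal_component_matches(d_slipping, d_obj, normal, tol=0.1)
free_ok = normal_component_matches(d_free, d_obj, normal, tol=0.1)
```

The slipping dot passes the test despite its tangential motion, whereas the free dot does not.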

We have formulated the conditions in Equations (7)–(9) for discriminating among sticking dots, non-contacting dots, and slipping dots. In fact, the estimation of the contact region only requires the discrimination between the non-contacting dots and the contacting dots. However, the non-contacting dots may satisfy Equations (8) and (9) simultaneously. In order to avoid this problem, Equations (7) and (9) are applied depending on the contact state of each dot at the previous sampling step. When a dot was a non-contacting dot in the previous state, we apply Equation (7) to determine the current contact state: a dot satisfying Equation (7) is regarded as a contacting dot; otherwise, it is regarded as a non-contacting dot. When a dot was a contacting dot in the previous state, Equation (9) is applied: a dot satisfying Equation (9) is regarded as a contacting dot; otherwise, it is regarded as a non-contacting dot. In the following section, the three-dimensional positions and displacements of the dots are calculated, and Equations (7) and (9) are modified for accuracy.
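The state-dependent rule above can be sketched in Python as follows. The two boolean lists stand for the outcomes of the full-motion test (Equation (7)) and the normal-component test (Equation (9)) for each dot; how those outcomes are computed is outside this sketch, and the function and variable names are hypothetical.

```python
def update_contact_states(previous, full_motion_ok, normal_motion_ok):
    """Return the new contact state (True = contacting) of every dot.

    A dot that was not in contact must pass the full-motion test to enter
    the contact region; a dot that was in contact only needs to pass the
    normal-component test to remain in it.
    """
    return [
        normal_ok if was_contacting else full_ok
        for was_contacting, full_ok, normal_ok
        in zip(previous, full_motion_ok, normal_motion_ok)
    ]

previous = [False, False, True, True]
full_ok = [True, False, False, False]
normal_ok = [True, True, True, False]
current = update_contact_states(previous, full_ok, normal_ok)
```

Here the first dot enters contact, the second stays outside although it passes the normal-component test, the third remains in contact while slipping, and the fourth leaves the contact region.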

3.3. Modification of Equations Discriminating among the Dots

In order to calculate the three-dimensional positions of dots, the three-dimensional shape of the surface of the touchpad is used, which is estimated in our previous research reported in [26]. Images captured by the CCD camera contain the two-dimensional positions of the dots. By combining the three-dimensional shape of the surface of the touchpad with the two-dimensional positions of the dots in the images, the three-dimensional positions of the dots are calculated based on the geometric relationship described in [25].

Next, the displacements of the dots are calculated as the changes in positions from the previous time tp to the current time tc. Here, the previous time tp must be selected appropriately because a long interval decreases the responsiveness of the proposed method. Moreover, the contact state of a dot may change between the previous time tp and the current time tc when tc–tp and the displacement of the dot are large. Conversely, if the displacement of the dot is too small because tc–tp is small, the value is unreliable because it is significantly influenced by noise and by the error in estimating the positions of the dots. In order to balance these effects, we introduce a threshold value Dmax for determining tp as given in the following equation:
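One possible reading of this trade-off is sketched below in Python. It is an assumption, not the paper's equation: the sketch walks back from the current frame and picks the most recent earlier frame at which the largest dot displacement relative to the current frame reaches a threshold. The function name, the frame layout and the sample values are hypothetical.

```python
import math

def select_previous_index(history, d_threshold):
    """Pick the index of the frame to use as the previous time.

    history: list of frames, oldest first; each frame is a list of (x, y)
    dot positions in pixels, with the same dot order in every frame.
    Returns the most recent earlier frame whose largest dot displacement
    relative to the last frame is at least d_threshold, or 0 if none is.
    """
    current = history[-1]
    for idx in range(len(history) - 2, -1, -1):
        largest = max(math.dist(p, c) for p, c in zip(history[idx], current))
        if largest >= d_threshold:
            return idx
    return 0

history = [
    [(0.0, 0.0), (10.0, 0.0)],
    [(0.0, 5.0), (10.0, 1.0)],
    [(0.0, 11.0), (10.0, 2.0)],
    [(0.0, 18.0), (10.0, 3.0)],
]
t_p = select_previous_index(history, d_threshold=12.0)
```

With these values the frame at index 2 is too close to the current frame (largest displacement 7 pixels), so index 1 (13 pixels) is selected.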

Here, Equations (7) and (9) cannot be directly applied because the calculated positions of dots include the estimation error due to the shape-sensing error [26] and noise in the captured image. Therefore, Equation (7) is modified to decrease the effects of the estimation error of the positions as follows:

Here, although slipping dots are considered temporarily in order to discriminate the contacting dots appropriately, the estimation of the contact region ultimately requires only the discrimination between contacting dots and non-contacting dots. Slipping dots need not be identified explicitly because Equations (12) and (14) alone are sufficient for this discrimination.

3.4. Image Processing for Detecting the Dots in Captured Images

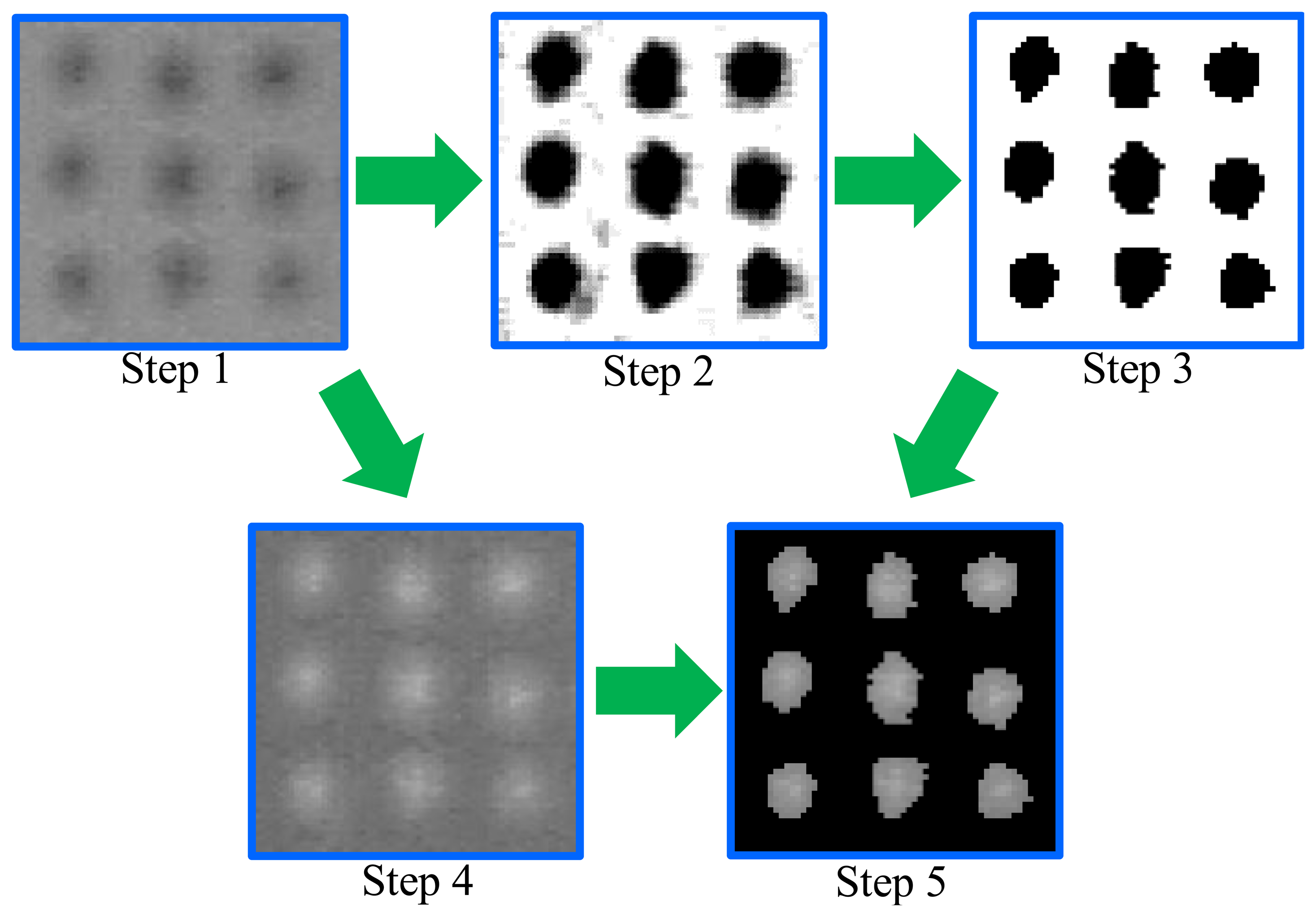

In the previous sections, we have proposed a method using the two-dimensional positions of the dots in images captured by the CCD camera for calculating the three-dimensional positions. Therefore, accuracy of the proposed method depends on the detection accuracy of the dots in the images. An image processing method is proposed to detect the dots accurately. The following six steps yield the positions of the dots as shown in Figure 5.

- Step 1:

The captured color image is transformed into a gray scale image.

- Step 2:

The contrast in the gray scale image is emphasized.

- Step 3:

The regions of the dots are extracted by binarizing the emphasized image. The extracted regions are represented by the black color in Figure 5.

- Step 4:

The brightness of the gray scale image is inverted.

- Step 5:

The brightness of the inverted image is extracted in the regions of the dots.

- Step 6:

The position of each dot is obtained by calculating the brightness center in the region based on 0- and 1st-order moments as follows:

where (xk, yk) are the x- and y-directional positions of the dot k in the image. The v- and w-order moments are defined as follows:

where I(i, j) is the inverted brightness of the pixel (i, j), and Rk is the region of the dot k extracted in Step 3. Here, inverting the brightness of the image in Step 4 can increase the detection accuracy of the dots. Note that the border of the extracted region of each dot may contain errors because of binarizing the image. If the brightness of the image is not inverted, the brightness at the border of the region is larger than the brightness at the center of the region. Therefore, the brightness of the border is dominant and thus decreases accuracy.
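Steps 3 to 6 above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions: the image is a list of rows of 8-bit gray values, dots are darker than the background, a fixed threshold replaces the paper's binarization settings, and the centroid is taken as the standard ratio of first-order to zeroth-order moments. Steps 1 and 2 (gray-scale conversion and contrast emphasis) are omitted, and all names are hypothetical.

```python
from collections import deque

def label_regions(mask):
    """Group True pixels into 4-connected regions; returns lists of (i, j)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for j0 in range(h):
        for i0 in range(w):
            if not mask[j0][i0] or seen[j0][i0]:
                continue
            queue, pixels = deque([(i0, j0)]), []
            seen[j0][i0] = True
            while queue:
                i, j = queue.popleft()
                pixels.append((i, j))
                for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                    if 0 <= ni < w and 0 <= nj < h and mask[nj][ni] and not seen[nj][ni]:
                        seen[nj][ni] = True
                        queue.append((ni, nj))
            regions.append(pixels)
    return regions

def moment(inverted, pixels, v, w):
    """Moment of order (v, w) of the inverted brightness over one region."""
    return sum((i ** v) * (j ** w) * inverted[j][i] for i, j in pixels)

def dot_centers(gray, threshold=128):
    """Return the brightness-weighted center (x, y) of every dark dot."""
    mask = [[value < threshold for value in row] for row in gray]   # Step 3
    inverted = [[255 - value for value in row] for row in gray]     # Step 4
    centers = []
    for pixels in label_regions(mask):                              # Step 5
        m00 = moment(inverted, pixels, 0, 0)                        # Step 6
        centers.append((moment(inverted, pixels, 1, 0) / m00,
                        moment(inverted, pixels, 0, 1) / m00))
    return centers

# A 5 x 5 test image: bright background with one dark, cross-shaped dot.
gray = [[200] * 5 for _ in range(5)]
gray[2][2] = 0
for i, j in ((1, 2), (3, 2), (2, 1), (2, 3)):
    gray[j][i] = 100
centers = dot_centers(gray)
```

Because the inverted brightness is largest at the dark center of the dot, the center pixel dominates the weighted average and the less reliable border pixels contribute less, which is the effect described in the text.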

3.5. Numbering the Detected Dots

By the method introduced in the previous section, the positions of the dots have been obtained. However, these data alone are insufficient for calculating the displacements of the dots. In order to obtain the displacements, identical dots must be identified between two different images. One approach is to track the position of each dot starting from its previous position. However, when the dots move quickly and their displacements are large, as shown by the difference between the two images in Figure 2, tracking methods may fail and a different dot may be identified.

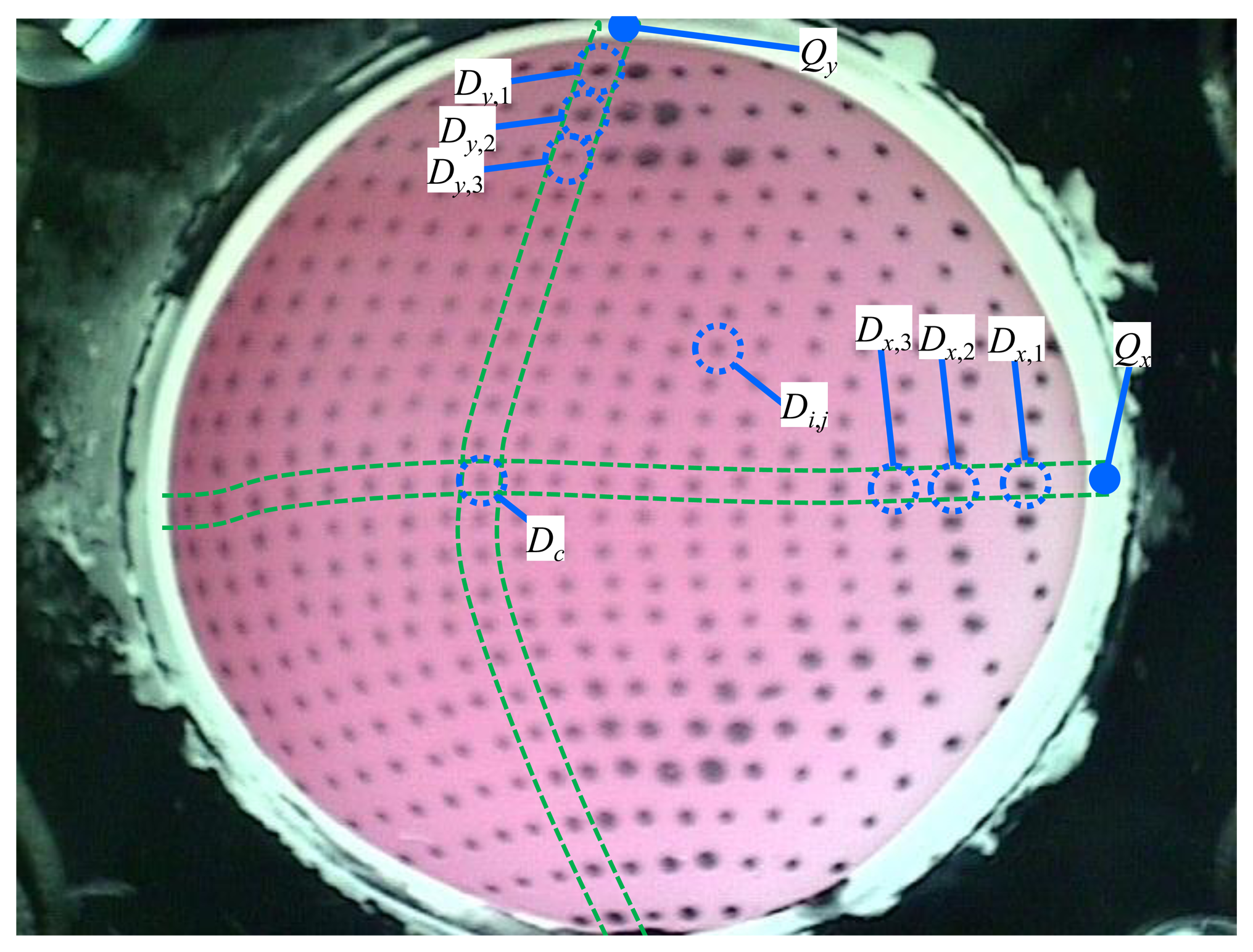

As an alternative to the tracking methods, the approach applied in this paper is to assign an identification number to each dot. When each dot has a fixed number, it can be easily identified even if it moves quickly. The following five steps are applied to assign numbers to the dots as shown in Figure 6:

- Step 1:

The constant points, Qx and Qy, are defined.

- Step 2:

The dots Dx,1 and Dy,1 which are the dots nearest to Qx and Qy, respectively, are selected.

- Step 3:

The dots Dx,k and Dy,k are located starting from Dx,1 and Dy,1, respectively, in order of increasing k. Here, Dx,k and Dy,k are found near the positions P'x,k and P'y,k, respectively, which predict the positions of Dx,k and Dy,k. Because the distances between two dots should be gradually changed when the next dots are searched, the following approximations are satisfied:

where Px,k and Py,k are the positions of Dx,k and Dy,k, respectively. Therefore, the positions P'x,k and P'y,k predicting Px,k and Py,k are defined as follows:

P'x,k and P'y,k can predict Px,k and Py,k accurately even if the surface of the touchpad is significantly deformed because of the relationships defined in Equation (17). When k is 2, a constant vector is applied instead of (Px,k−1−Px,k−2) and (Py,k−1−Py,k−2) in Equation (18).

- Step 4:

The central dot Dc is identified, which corresponds to Dx,v and Dy,w detected in Step 3, where v and w are arbitrary. There is only one dot defined as Dc in the image. The central dot Dc is assigned the value (12,12).

- Step 5:

The dot with the number i, j that is the i-th dot from the left and the j-th dot from the top is defined. When starting from Dc (12,12), each dot i, j is searched by using the predicted position P'i,j which is determined based on the relationship in Equation (17) as follows:

The equations are used to predict Pi,j. In these steps, numbers (i = 1,2,…,23, j = 1,2,…,23) can be assigned to all dots in each image.
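The search along one line of dots in Step 3 can be illustrated with the Python sketch below. It assumes that the predicted position is the previous position plus the previous spacing vector, which is our reading of the description around Equation (18), and that a constant first step is used for the first search, as stated for k = 2. The function name, the `max_error` acceptance radius and the sample coordinates are hypothetical.

```python
import math

def trace_dots(dots, start, first_step, count, max_error):
    """Follow a line of dots from `start` by extrapolating the last spacing.

    dots: list of detected (x, y) positions; start: position of the first dot;
    first_step: constant vector used for the first prediction;
    count: maximum number of further dots to find;
    max_error: largest accepted distance between prediction and detection.
    """
    chain = [start]
    step = first_step
    for _ in range(count):
        prev = chain[-1]
        predicted = (prev[0] + step[0], prev[1] + step[1])
        candidates = [d for d in dots if d not in chain]
        if not candidates:
            break
        nearest = min(candidates, key=lambda d: math.dist(d, predicted))
        if math.dist(nearest, predicted) > max_error:
            break
        # The spacing just observed becomes the step for the next prediction.
        step = (nearest[0] - prev[0], nearest[1] - prev[1])
        chain.append(nearest)
    return chain

# One row whose spacing grows gradually, plus part of a neighbouring row.
dots = [(0.0, 0.0), (10.0, 0.0), (21.0, 0.0), (33.0, 0.0), (46.0, 0.0),
        (0.0, 12.0), (10.0, 12.0), (21.0, 12.0)]
row = trace_dots(dots, start=(0.0, 0.0), first_step=(10.0, 0.0),
                 count=4, max_error=5.0)
```

Because each prediction reuses the most recent spacing, the search stays on the same row even though the spacing changes from 10 to 13 pixels, while the dots of the neighbouring row remain outside the acceptance radius.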

4. Experimental Results

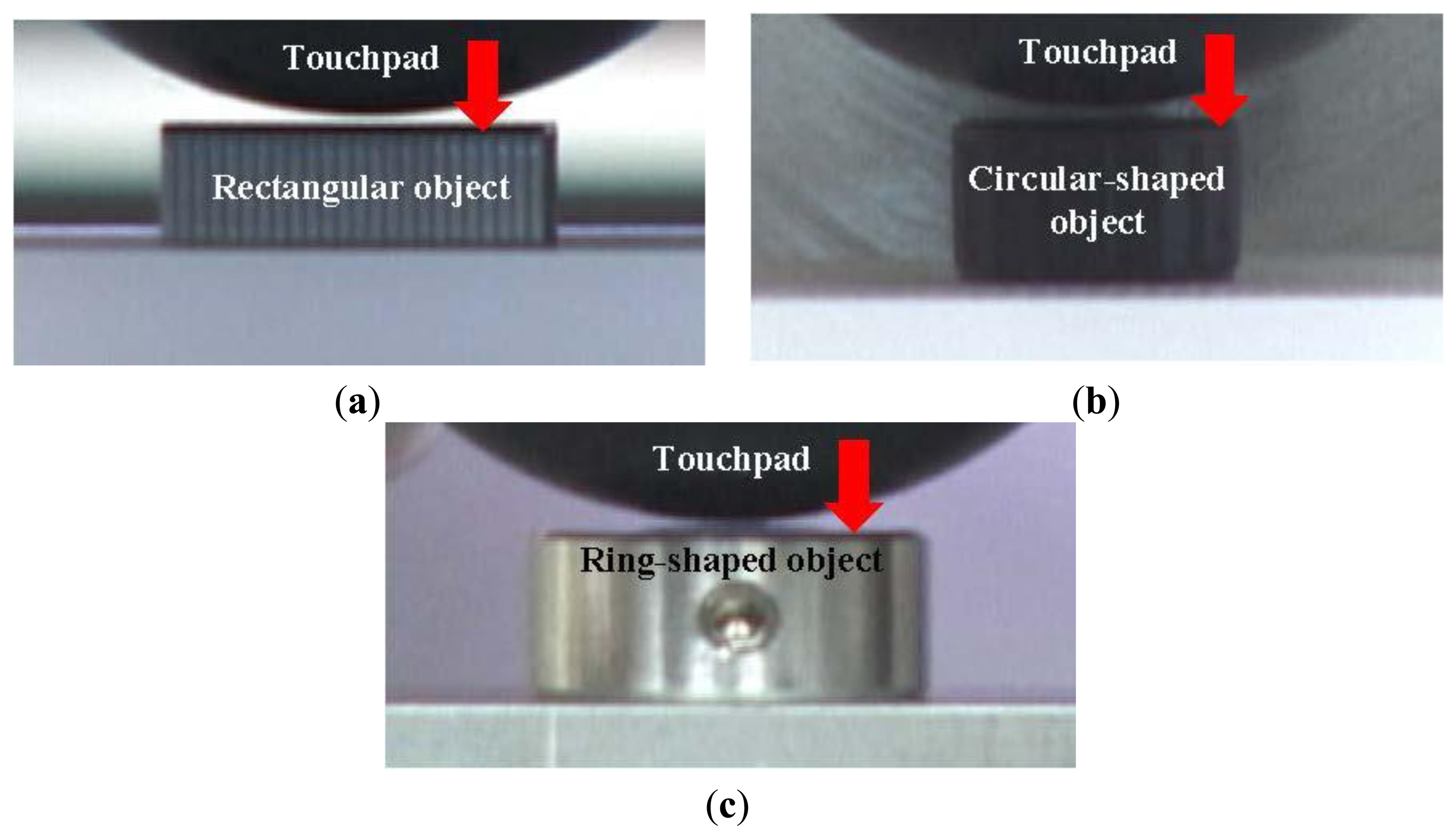

In this section, the proposed method is confirmed by experimental results. The proposed sensor was fixed on a movable stage and brought into contact with variously shaped objects: a rectangular object, a circular-shaped object and a ring-shaped object, as shown in Figure 7. When the object was moved in the normal direction, the contact region was calculated by using the proposed method. The actual shapes of the contact regions were observed from the image of the inside of the touchpad when the objects were in sufficiently deep contact. We set the parameters Dmax and δd to 12.0 pixels and 2.5 pixels, respectively, by trial and error.

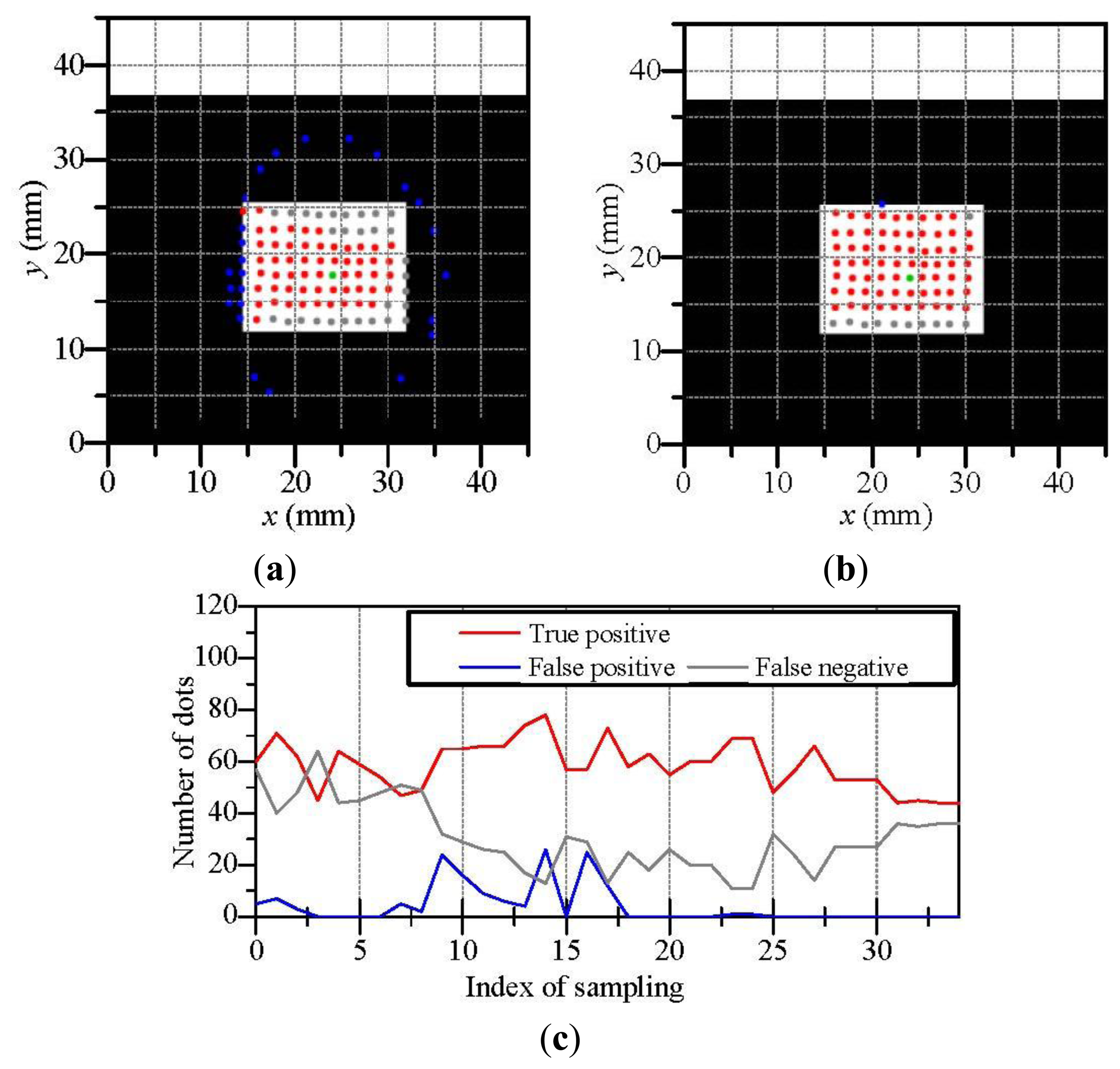

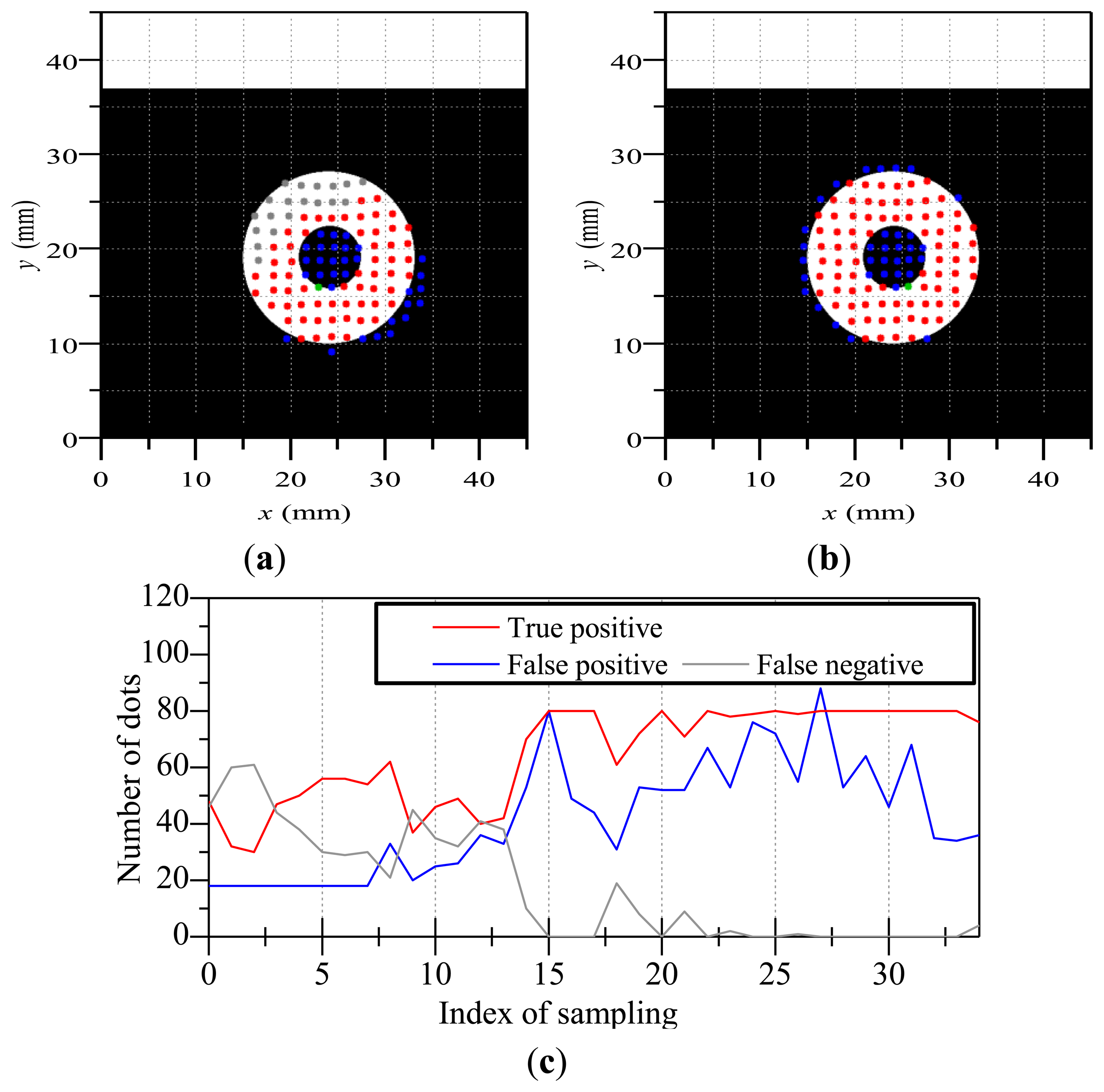

We evaluate the proposed method by considering the results of discriminated dots in the experiment. In the following figures, true positive dots are contacting dots that are correctly discriminated as contacting dots. False positive dots are actually non-contacting dots but are discriminated as contacting dots. False negative dots are actually contacting dots but are discriminated as non-contacting dots. Therefore, when the proposed method is successfully applied, there are few false positive/negative dots.
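The three categories defined above can be computed with set operations, as in the following Python sketch. The dot identifiers are written as (i, j) numbers in the style of Section 3.5, and the sample sets are hypothetical, not experimental data.

```python
def classify_dots(estimated, actual):
    """Split dots into true positives, false positives and false negatives.

    estimated: dots discriminated as contacting by the method;
    actual: dots that are really in contact.
    """
    estimated, actual = set(estimated), set(actual)
    return {
        "true_positive": estimated & actual,
        "false_positive": estimated - actual,
        "false_negative": actual - estimated,
    }

result = classify_dots(
    estimated={(11, 12), (12, 12), (13, 12), (14, 12)},
    actual={(12, 12), (13, 12), (12, 13)},
)
```

Counting the elements of each set per sampling index gives curves of the kind plotted in the result figures.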

Figure 8 shows the results of the estimated contact region when the touchpad contacted a rectangular object. Although the worse case in Figure 8a includes some false positive/negative dots, there are few false positive/negative dots in the better case in Figure 8b. Figure 8c shows the variation of the numbers of true positive, false positive and false negative dots. Here, the touchpad was moved such that the contact with the object became increasingly deeper with an increase in the sampling index. The contact became deep enough for estimating the contact region after the sampling index reached approximately 10. In the experiment with the rectangular object, false positive dots hardly occurred, whereas there were some false negative dots. The three-dimensional dot positions, which are calculated from the touchpad shape, seem to contain positional errors because the accuracy of the touchpad shape estimated by the previous method [26] can degrade around the sharp edges of the rectangular object. However, we can see that the set of contacting dots in Figure 8b approximately forms a rectangular shape. We regard the set of contacting dots as representing the shape of the contact region.

Next, Figure 9 illustrates the results of the estimated contact region when the touchpad contacted a cylinder-shaped object. Figure 9a,b shows a worse case and a better case, respectively. Figure 9c shows the variation of the numbers of true positive, false positive and false negative dots. The contact with the object became increasingly deeper with an increase in the sampling index, and the contact became deep enough for estimating the contact region after the sampling index reached approximately 10. We can see that the set of contacting dots in the better case forms a circular shape, whereas there are some worse cases as shown in Figure 9c. The estimation error of the dot positions seems to cause false positive/negative dots in this case as well.

Finally, Figure 10 illustrates the results of the estimated contact region when the touchpad contacted a ring-shaped object. Figure 10a,b shows a worse case and a better case, respectively. Figure 10c shows the variation of the numbers of true positive, false positive and false negative dots. The contact with the object became increasingly deeper with an increase in the sampling index, and the contact became deep enough for estimating the contact region after the sampling index reached approximately 15. In the case of the ring-shaped object, false positive dots occurred on the inside of the ring. This is because the membrane on the inside of the ring can move along with the ring-shaped object due to the stiffness of the membrane. However, most dots were appropriately discriminated except on the inside of the ring. Using a softer or thinner membrane for the surface of the touchpad is expected to reduce the false positive dots on the inside of the ring.

In the results of Figures 8, 9 and 10, we have observed some false positive/negative dots. These errors are due to the estimation error of the three-dimensional positions of the dots, which is caused by the estimation error of the touchpad shape [26] and the estimation error of the two-dimensional dot positions in the images. However, it seems that these errors can be decreased by using a camera with higher resolution and a higher signal-to-noise ratio and by producing the dot pattern with higher accuracy; the dot pattern is currently painted by hand. Moreover, the sets of contacting dots reproduce the shape of the actual contact region in some cases. Therefore, we consider that the proposed method can be applied to estimating the contact region and that its estimation accuracy can be increased by improving the hardware, such as the camera and the dot pattern.

5. Conclusions and Discussion

We have proposed a new method to estimate the contact region between a touchpad and a contacted object without strict assumptions. The proposed method is based on the movements of dots printed on the surface of the touchpad. The contact state of the dots has been classified into three types: the non-contacting dot, the sticking dot and the slipping dot. In consideration of the movements of the dots, equations have been formulated to discriminate between the contacting dots and the non-contacting dots. The equations have been modified to decrease the effects of noise and the error of estimating the positions of the dots. The set of contacting dots discriminated by the formulated equations constitutes the contact region. Next, a six-step image processing method has been proposed to detect the dots in captured images. In addition, a method has been developed to assign numbers to dots for calculating their displacements. Finally, the proposed methods have been validated by experimental results.

Although some false positive/negative dots remained in the experimental results, more accurate discrimination of the dots can be expected by enhancing the calculation accuracy of the three-dimensional positions of the dots. It seems that better estimation of the positions can be achieved by using a camera with higher resolution and a higher signal-to-noise ratio and by producing the dot pattern with higher accuracy; the dot pattern is currently painted by hand.

The method can be generalized because it can be applied to other sensors that have dots or markers on the sensor surface. The size of each dot of the sensor developed in this research is relatively large because the dot pattern is painted by hand. However, unlike discrete sensing elements, the dots can easily be made smaller by a printing technique, and thus high resolution is expected. The proposed sensor can be fabricated easily at minimal cost because it has a simple structure and does not require complex sensing elements or wiring. Although the sensor developed and used in this research is relatively large, it can be easily downsized by using a smaller CCD or complementary metal-oxide semiconductor (CMOS) camera with high resolution.

Through this work, our vision-based sensor has been developed to a greater level of practicality. Combined with our previous research [23–27], we believe that the vision-based sensor can simultaneously obtain multiple types of tactile information, including the contact region, multi-axis contact force, slippage, shape, position and orientation of an object in contact with the touchpad. Future investigation will include the implementation of fluid-type tactile sensors in industrial, medical and other practical applications, such as robot hands for dexterous handling.

Acknowledgments

The authors would like to thank K. Yamamoto for his help in constructing the experimental setup.

Author Contributions

Yuji Ito conceived and designed the method proposed in this study. Yuji Ito and Goro Obinata conceived and designed the concept of the proposed sensor. Yuji Ito performed the experiments and wrote the paper. All authors discussed the method, the experiments and the results to improve this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lee, M.H.; Nicholls, H.R. Tactile sensing for mechatronics—A state of the art survey. Mechatronics 1999, 1, 1–31.

- Dahiya, R.S.; Metta, G.; Valle, M.; Sandini, G. Tactile Sensing—From Humans to Humanoids. IEEE Trans. Robot. 2010, 26, 1–20.

- Yamada, D.; Maeno, T.; Yamada, Y. Artificial Finger Skin having Ridges and Distributed Tactile Sensors used for Grasp Force Control. J. Robot. Mechatron. 2002, 14, 140–146.

- Noda, K.; Hoshino, K.; Matsumoto, K.; Shimoyama, I. A shear stress sensor for tactile sensing with the piezoresistive cantilever standing in elastic material. Sens. Actuators A 2006, 127, 295–301.

- Schmitz, A.; Maggiali, M.; Natale, L.; Bonino, B.; Metta, G. A Tactile Sensor for the Fingertips of the Humanoid Robot iCub. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 2212–2217.

- Hakozaki, M.; Shinoda, H. Digital tactile sensing elements communicating through conductive skin layers. Proceedings of the IEEE/RSJ International Conference on Intelligent Robotics and Automation, Washington, DC, USA, 11–15 May 2002; pp. 3813–3817.

- Yamada, K.; Goto, K.; Nakajima, Y.; Koshida, N.; Shinoda, H. Wire-Free Tactile Sensing Element Based on Optical Connection. Proceedings of the 19th Sensor Symposium, Tokyo, Japan, 5–7 August 2002; pp. 433–436.

- Yang, S.; Chen, X.; Motojima, S. Tactile sensing properties of protein-like single-helix carbon microcoils. Carbon 2006, 44, 3352–3355.

- Takao, H.; Sawada, K.; Ishida, M. Monolithic Silicon Smart Tactile Image Sensor With Integrated Strain Sensor Array on Pneumatically Swollen Single-Diaphragm Structure. IEEE Trans. Electron. Devices 2006, 53, 1250–1259.

- Engel, J.; Chen, J.; Liu, C. Development of polyimide flexible tactile sensor skin. J. Micromech. Microeng. 2003, 13, 359–366.

- Mei, T.; Li, W.J.; Ge, Y.; Chen, Y.; Ni, L.; Chan, M.H. An integrated MEMS three-dimensional tactile sensor with large force range. Sens. Actuators A 2000, 80, 155–162.

- Engel, J.; Chen, J.; Liu, C. Development of a multimodal, flexible tactile sensing skin using polymer micromachining. Proceedings of the 12th International Conference on Transducers, Solid-State Sensors, Actuators and Microsystems, Boston, MA, USA, 8–12 June 2003; pp. 1027–1030.

- Ferrier, N.J.; Brockett, R.W. Reconstructing the Shape of a Deformable Membrane from Image Data. Int. J. Robot. Res. 2000, 19, 795–816.

- Saga, S.; Kajimoto, H.; Tachi, S. High-resolution tactile sensor using the deformation of a reflection image. Sens. Rev. 2007, 27, 35–42.

- Johnson, M.K.; Adelson, E.H. Retrographic sensing for the measurement of surface texture and shape. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1070–1077.

- Kamiyama, K.; Vlack, K.; Mizota, T.; Kajimoto, H.; Kawakami, N.; Tachi, S. Vision-Based Sensor for Real-Time Measuring of Surface Traction Fields. IEEE Comput. Graph. Appl. 2005, 25, 68–75.

- Sato, K.; Kamiyama, K.; Nii, H.; Kawakami, N.; Tachi, S. Measurement of Force Vector Field of Robotic Finger using Vision-based Haptic Sensor. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 488–493.

- Ohka, M.; Mitsuya, Y.; Matsunaga, Y.; Takeuchi, S. Sensing characteristics of an optical three-axis tactile sensor under combined loading. Robotica 2004, 22, 213–221.

- Ohka, M.; Takata, J.; Kobayashi, H.; Suzuki, H.; Morisawa, N.; Yussof, H.B. Object exploration and manipulation using a robotic finger equipped with an optical three-axis tactile sensor. Robotica 2009, 27, 763–770.

- Yamada, Y.; Iwanaga, Y.; Fukunaga, M.; Fujimoto, N.; Ohta, E.; Morizono, T.; Umetani, Y. Soft Viscoelastic Robot Skin Capable of Accurately Sensing Contact Location of Objects. Proceedings of the IEEE/RSJ/SICE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Taipei, Taiwan, 15–18 August 1999; pp. 105–110.

- Miyamoto, R.; Komatsu, S.; Iwase, E.; Matsumoto, K.; Shimoyama, I. The Estimation of Surface Shape Using Lined Strain Sensors (in Japanese). Proceedings of Robotics and Mechatronics Conference 2008 (ROBOMEC 2008), Nagano, Japan, 6–7 June 2008; pp. 1P1–I05.

- Maekawa, H.; Tanie, K.; Kaneko, M.; Suzuki, N.; Horiguchi, C.; Sugawara, T. Development of a Finger-Shaped Tactile Sensor Using Hemispherical Optical Waveguide. J. Soc. Instrum. Control Eng. 2001, E-1, 205–213.

- Obinata, G.; Ashis, D.; Watanabe, N.; Moriyama, N. Vision Based Tactile Sensor Using Transparent Elastic Fingertip for Dexterous Handling. In Mobile Robots: Perception & Navigation; Kolski, S., Ed.; Pro Literatur Verlag: Germany, 2007; pp. 137–148.

- Ito, Y.; Kim, Y.; Obinata, G. Robust Slippage Degree Estimation based on Reference Update of Vision-based Tactile Sensor. IEEE Sens. J. 2011, 11, 2037–2047.

- Ito, Y.; Kim, Y.; Nagai, C.; Obinata, G. Contact State Estimation by Vision-based Tactile Sensors for Dexterous Manipulation with Robot Hands Based on Shape-Sensing. Int. J. Adv. Robot. Syst. 2011, 8, 225–234.

- Ito, Y.; Kim, Y.; Nagai, C.; Obinata, G. Vision-based Tactile Sensing and Shape Estimation Using a Fluid-type Touchpad. IEEE Trans. Autom. Sci. Eng. 2012, 9, 734–744.

- Ito, Y.; Kim, Y.; Obinata, G. Multi-axis Force Measurement based on Vision-based Fluid-type Hemispherical Tactile Sensor. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2011; pp. 4729–4734.

- Watanabe, N.; Obinata, G. Grip Force Control Using Vision-Based Tactile Sensor for Dexterous Handling. In European Robotics Symposium; Springer: Berlin, Germany, 2008; pp. 113–122.

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Ito, Y.; Kim, Y.; Obinata, G. Contact Region Estimation Based on a Vision-Based Tactile Sensor Using a Deformable Touchpad. Sensors 2014, 14, 5805-5822. https://doi.org/10.3390/s140405805
