Localization with a Mobile Beacon in Underwater Acoustic Sensor Networks

Abstract

Localization is one of the most important issues associated with underwater acoustic sensor networks, especially when sensor nodes are randomly deployed. Given that it is difficult to deploy beacon nodes at predetermined locations, localization schemes with a mobile beacon on the sea surface or along the planned path are inherently convenient, accurate, and energy-efficient. In this paper, we propose a new range-free Localization with a Mobile Beacon (LoMoB). The mobile beacon periodically broadcasts a beacon message containing its location. Sensor nodes are individually localized by passively receiving the beacon messages without inter-node communications. For location estimation, a set of potential locations is obtained as candidates for a node's location, and the node's location is then determined through the weighted mean of all the potential locations, with the weights computed based on residuals.

1. Introduction

For a long time, there has been significant interest in monitoring underwater environments to collect oceanographic data and to explore underwater resources. These harsh underwater environments have limited human access and most of them remain poorly understood. Recent advances in hardware and network technology have enabled sensor networks capable of sensing, data processing, and communication. A collection of sensor nodes, which have a limited sensing region, processing power, and energy, can be randomly deployed and connected to form a network in order to monitor a wide area. Sensor networks are particularly promising for use in underwater environments where access is difficult [1–3]. In underwater acoustic sensor networks (UASN), localization of sensor nodes is essential because it is difficult to accurately deploy sensor nodes to predetermined locations. In addition, all the information collected by sensor nodes may be useless unless the location of each sensor node is known [4].

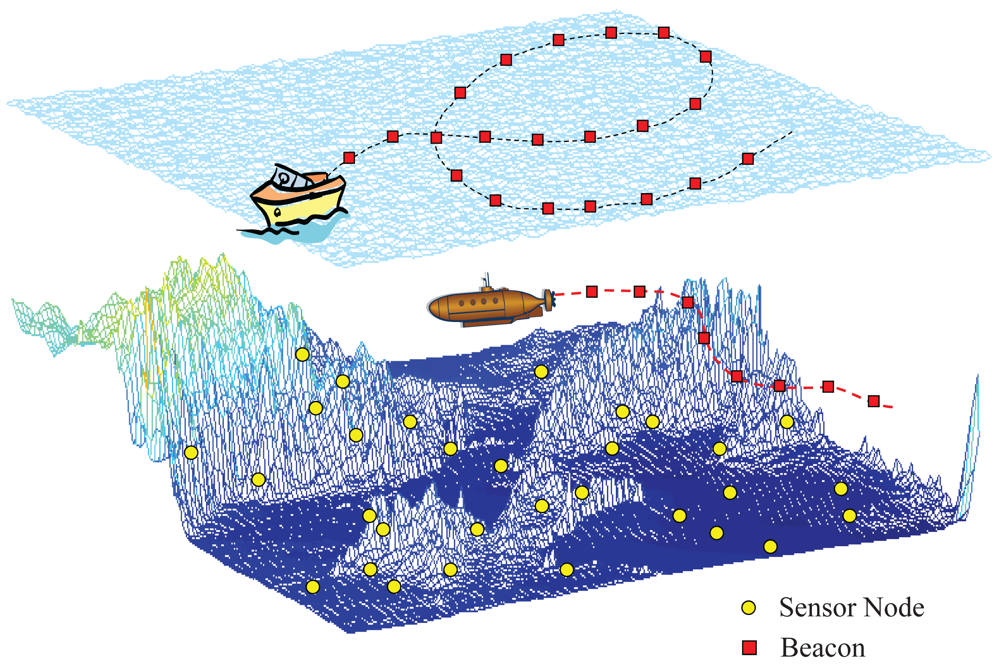

To localize unknown nodes, static beacons [5–11] or a mobile beacon [12–16] can be used; typically, buoys are used for static beacons and an autonomous underwater vehicle (AUV) for a mobile beacon. The use of a mobile beacon has a similar effect to the use of many static beacons. For this reason, localization using a mobile beacon is inherently more accurate and cost-effective than localization using static beacons. Further, the vehicle can be conveniently maneuvered on the sea surface or underwater, based on a planned or random path, as shown in Figure 1.

Localization schemes can also be classified as range-based or range-free schemes. Range-based schemes use the distance and/or angle information measured by the Time of Arrival (ToA), Time Difference of Arrival (TDoA), Angle of Arrival (AoA), or Received Signal Strength Indicator (RSSI) techniques. In underwater environments, range-based schemes that use the ToA and TDoA techniques have been proposed [5–10,13–15]. Time synchronization between sensor nodes is typically required for the ToA and TDoA techniques [6]. However, precise time synchronization is challenging in underwater environments [17]. In addition, even when the ToA and TDoA techniques are used without time synchronization, they rely on the speed of sound, which varies with temperature, pressure, salinity, and depth. In contrast to range-based schemes, range-free schemes localize a sensor node by deducing distance information instead of measuring distances [11,12,16]. Because range-free schemes do not require additional devices to measure distances and do not suffer from distance measurement errors, they are a promising approach for underwater sensor networks [16].

Localization is one of the challenges associated with underwater sensor networks and many studies have focused on localization in recent years. Among these studies, LDB is a range-free localization scheme with a mobile beacon [16]. In LDB, sensor nodes can localize themselves by passively listening to beacons sent by an AUV that has an acoustic directional transceiver. Because sensor nodes only receive the beacons from the AUV and do not communicate with one another, LDB is energy-efficient: it avoids the energy consumed by transmitting, and the power consumed by transmitting is often 100 times higher than the power consumed by receiving [18]. In addition, because a sensor node can localize itself using its own received beacons from the AUV independently of the other sensor nodes, LDB has fine-grained accuracy even in sparse networks. The depth of a sensor node is directly determined by using a cheap pressure sensor. After the first and last beacons received from the AUV, which are called beacon points, are projected onto the horizontal plane on which a sensor node resides, the 2D location of the sensor node can be determined based on the distance between the two projected beacon points. At this point, the location of the sensor node remains ambiguous, because the two projected beacon points provide two possible node locations. If the sensor node obtains two more beacon points, the ambiguity can be resolved. The first two beacon points are used for location computation and the other two beacon points are used for resolving the ambiguity. However, if the two beacon points for location computation have a large error, the error in the estimated sensor location also increases. Even though the location of a sensor node can be simply computed from the first two beacon points, the use of just two points out of all the beacon points can make localization very error-prone.

To improve the localization accuracy in challenging underwater environments, we propose a new localization scheme, LoMoB, that obtains a set of potential locations as candidates for a node's location using the bilateration method and then localizes the sensor node through the weighted mean of all the potential locations, with the weights computed based on residuals.

2. Related Work

In this section, we briefly explain LDB from the viewpoint of system environment, beacon point selection, and location estimation [16].

2.1. System Environment

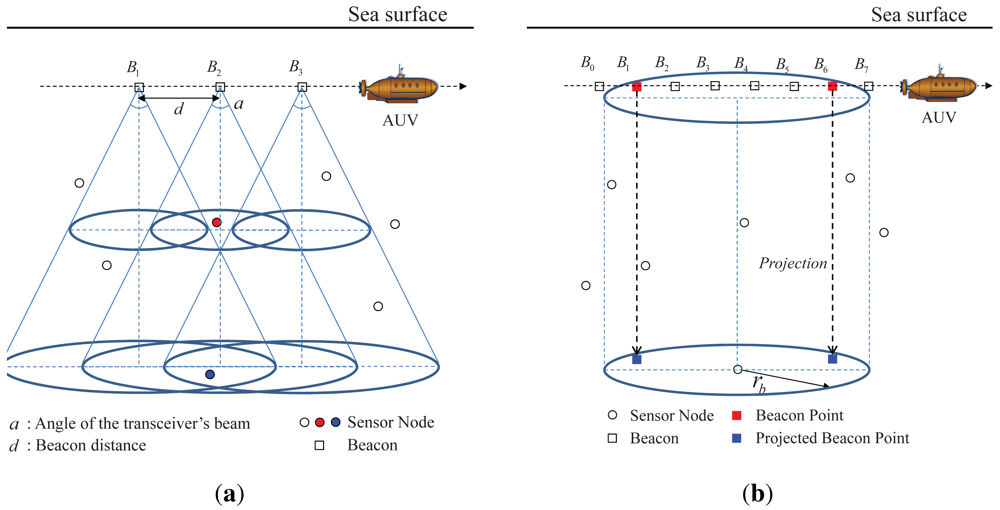

In LDB, an AUV with a directional transceiver moves at a fixed depth in water and broadcasts its location, which is called a beacon, and the angle of its transceiver's beam (α) at regular intervals, called the beacon distance (d), as shown in Figure 2(a). When the AUV sends a beacon, the sensor nodes that fall in the conical beam receive the beacon; e.g., in Figure 2(a), the sensor node in red can receive the beacon B2 and the sensor node in blue can receive the beacons B1, B2, and B3. The conical beam forms circles with different radii according to the depth of the sensor nodes, as shown in Figure 2(a). When the z coordinates of the AUV and a sensor node are zA and zs and the angle of the transceiver's beam is α, the radius of the circle formed by the beam for the sensor node is given as follows:

$$r_b = |z_A - z_s| \tan\left(\frac{\alpha}{2}\right) \qquad (1)$$
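The beam geometry described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the beam angle is taken to be the full apex angle of the cone, depths are in metres, and the function names `beam_radius` and `can_hear` are hypothetical and not part of LDB.

```python
import math

def beam_radius(z_auv, z_sensor, beam_angle_deg):
    """Radius of the circle that the conical beam forms at the sensor's depth.

    Assumption: beam_angle_deg is the full apex angle of the cone, so the
    half-angle is used with the vertical separation between AUV and sensor.
    """
    half_angle = math.radians(beam_angle_deg) / 2.0
    return abs(z_auv - z_sensor) * math.tan(half_angle)

def can_hear(beacon_xy, sensor_xy, z_auv, z_sensor, beam_angle_deg):
    """True if the sensor lies inside the beam circle of a beacon sent at beacon_xy."""
    r_b = beam_radius(z_auv, z_sensor, beam_angle_deg)
    return math.dist(beacon_xy, sensor_xy) <= r_b

# Example: AUV on the surface, sensor 100 m deep, 60 degree beam.
print(beam_radius(0.0, 100.0, 60.0))
```

A deeper sensor sees a larger circle, which is why the same beam angle yields different radii for different nodes.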

2.2. Beacon Point Selection

From the viewpoint of a sensor node located at (x, y, zs), when a beacon is within the circle centered at (x, y, zA) with a radius rb, the sensor node can receive the beacon; e.g., in Figure 2(b), the sensor node receives the beacons B1 to B6. Among the series of received beacons, the first beacon is defined as the first-heard beacon point and the last beacon is defined as the last-heard beacon point. The location of a sensor node is estimated using only the beacon points, and not all the received beacons.

Because the sensor depth is known from the pressure sensor, the beacon points can be projected onto the horizontal plane in which the sensor node resides, as shown in Figure 2(b). After projection, 3D localization is transformed into a 2D localization problem.

2.3. Location Estimation

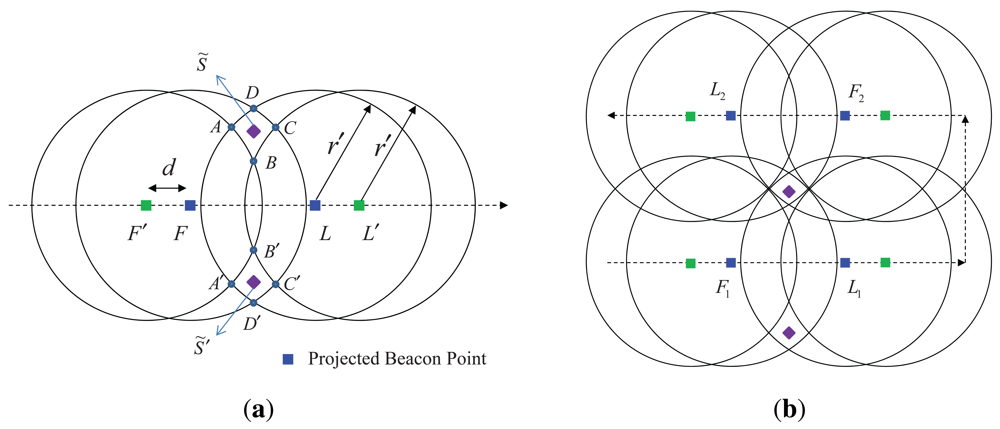

We describe location estimation based on the projected beacon points. As shown in Figure 3(a), the projected first-heard beacon point is denoted by F and the projected last-heard beacon point is denoted by L. The projected point of the beacon received just before the first-heard beacon point is defined as the projected prior-heard beacon point F′ (e.g., in Figure 2(b), the projected point of B0), and the projected point of the beacon after the last-heard beacon point is defined as the projected post-heard beacon point L′ (e.g., in Figure 2(b), the projected point of B7).

Four circles are then drawn with radius rb centered at the four points F′, F, L, and L′. Because the sensor node should be located outside the circles centered at F′ and L′ and inside the circles centered at F and L, the intersection areas ABCD and A′B′C′D′ represent possible locations of the sensor node. One of the two points, S̃ and S̃′, within the two areas will be estimated as the location of the sensor node. Here, S̃ is the midpoint of B and D and S̃′ is the midpoint of B′ and D′. When just one projected first-heard beacon point and one projected last-heard beacon point are used, two possible node locations are found. The choice between the two points S̃ and S̃′ can be made after an additional two beacon points are obtained, as shown in Figure 3(b). In LDB, the first two beacon points are used to compute two possible points for the location of a sensor node based on geometric constraints and the next two beacon points are used for the choice between the two possible points.

3. LoMoB Localization Scheme

In this section, we explain the system environment, beacon point selection, and location estimation method for LoMoB. Because LoMoB can be applied to systems that use either a directional transceiver or an omnidirectional transceiver, we explain LoMoB for both systems. In addition, because LoMoB improves LDB, we explain LoMoB by comparing and contrasting it with LDB.

3.1. System Environment

LoMoB considers a system that uses an omnidirectional transceiver in addition to a system that uses a directional transceiver [19]. Because the system environment using a directional transceiver was explained in Section 2, we explain here the system environment using an omnidirectional transceiver.

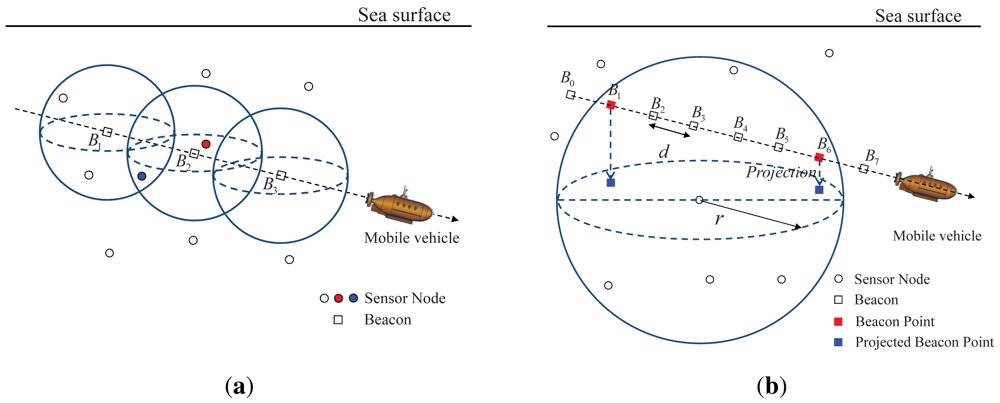

A mobile beacon moves on the sea surface or underwater. When a mobile beacon has an omnidirectional transceiver, 3D movement of the mobile beacon is possible whereas a mobile beacon that has a directional transceiver is restricted to 2D movement at a fixed depth. The mobile beacon is assumed to know its own location and to broadcast a beacon containing its location information at regular distance intervals, which are called the beacon distance d. When the mobile beacon transmits a beacon, the sensor nodes that are located within the communication range of the mobile beacon receive the beacon; e.g., in Figure 4(a), the sensor node in red can receive the beacon B2 and the sensor node in blue can receive the beacons B1 and B2. Here, the communication range r is assumed to be constant in 3D [11,12,20]. In addition, because movement of a mobile beacon in a straight line is more controllable than curved movement, the mobile beacon is assumed to follow the random waypoint (RWP) model [21]; the mobile beacon moves in a series of straight paths to random destinations.
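As a rough sketch of this broadcast pattern, the snippet below lists the positions at which beacons would be sent along one straight leg of the path, one every beacon distance d. The function name `beacons_on_segment` is hypothetical, and the choice to send the first beacon at the start of the leg is an assumption of this sketch rather than something stated for LoMoB.

```python
import math

def beacons_on_segment(start, end, d):
    """Positions of the beacons broadcast while moving straight from start to end.

    One beacon is sent every d metres; the first is sent at start
    (an assumption of this sketch). Works for 2D or 3D coordinates.
    """
    length = math.dist(start, end)
    if length == 0:
        return [tuple(start)]
    unit = [(e - s) / length for s, e in zip(start, end)]
    count = int(length // d)  # whole beacon distances that fit on the leg
    return [tuple(s + k * d * u for s, u in zip(start, unit))
            for k in range(count + 1)]

# Example: two straight legs between waypoints, with d = 2.5 m.
waypoints = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 5.0, 0.0)]
for leg_start, leg_end in zip(waypoints, waypoints[1:]):
    print(beacons_on_segment(leg_start, leg_end, 2.5))
```

Restarting the spacing at every waypoint keeps the sketch short; a simulator could instead carry the remaining distance over to the next leg.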

3.2. Beacon Point Selection

From the viewpoint of a sensor node, the sensor node can receive a beacon when the beacon is within the communication range of the sensor node; e.g., in Figure 4(b), the sensor node receives the beacons B1 to B6. The selection of beacon points from the received beacons is performed as in LDB. When a sensor node receives the first beacon from a mobile beacon, the first beacon is selected as a beacon point; i.e., B1 in Figure 4(b). If the sensor node receives no further beacons during a predefined time after receiving its last beacon, the last beacon is selected as a beacon point; i.e., B6 in Figure 4(b). The above process is repeated each time the mobile beacon passes through the communication sphere of the sensor node.
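The selection rule above can be sketched as follows. The sketch assumes that each received beacon is stored as a `(time, position)` pair in order of arrival and that `timeout` is the predefined silence period; both names, and the function `select_beacon_points`, are illustrative only.

```python
def select_beacon_points(received, timeout):
    """Group received beacons into passes and keep the first and last of each.

    received: list of (time, position) pairs sorted by time.
    timeout:  silence (in the same time unit) after which a pass is over.
    Returns a list of (first_heard, last_heard) positions, one per pass.
    """
    passes = []
    first = last = None
    last_time = None
    for t, pos in received:
        # A gap longer than the timeout closes the current pass.
        if last_time is not None and t - last_time > timeout:
            passes.append((first, last))
            first = None
        if first is None:
            first = pos  # first-heard beacon point of a new pass
        last = pos       # candidate last-heard beacon point
        last_time = t
    if first is not None:
        passes.append((first, last))
    return passes

# Example: two passes of the mobile beacon separated by a long silence.
heard = [(0, "B1"), (1, "B2"), (2, "B3"), (10, "B4"), (11, "B5")]
print(select_beacon_points(heard, timeout=3))
```

Each pass contributes two beacon points, so two passes yield the four beacon points used in the comparison with LDB.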

As shown in Figure 4(b), the beacon points do not lie exactly on the communication sphere of the sensor node, because the sensor node receives a beacon at every beacon distance d. When the communication range of the sensor node is r, the beacon points are located between the distances r − d and r from the sensor node. Based on the distance range between a beacon point and a sensor node, the middle value of the range, r − d/2, is estimated as the distance between the beacon point and sensor node in order to minimize the error in the estimated distance.

Sensor nodes are assumed to have a pressure sensor, and therefore they are assumed to know their depth [22]. With this depth information, the beacon points can be projected onto the horizontal plane in which the sensor node resides, as shown in Figure 4(b). When the z coordinates of a sensor node and the ith beacon point are zs and zi, the distance between the sensor node and the ith projected beacon point is $d_i = \sqrt{(r - d/2)^2 - (z_s - z_i)^2}$.
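A minimal sketch of this projection step is given below: the estimated 3D distance r − d/2 is combined with the known depth difference to obtain the horizontal distance to the projected beacon point. The function name `projected_distance` is hypothetical, and clamping a negative value under the square root to zero is an assumption of this sketch for the case where the depth difference exceeds the estimated range.

```python
import math

def projected_distance(r, d, z_sensor, z_beacon):
    """Horizontal distance between a sensor node and a projected beacon point.

    r: communication range, d: beacon distance (both in metres).
    The 3D distance to a beacon point is estimated as r - d/2.
    """
    est_3d = r - d / 2.0
    dz = z_sensor - z_beacon
    horizontal_sq = est_3d ** 2 - dz ** 2
    # Assumption: clamp to zero if the depth difference exceeds the estimate.
    return math.sqrt(max(horizontal_sq, 0.0))

# Example: r = 100 m, d = 10 m, sensor 57 m below the beacon.
print(projected_distance(100.0, 10.0, 57.0, 0.0))  # 76.0
```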

For a system using a directional transceiver, all the projected beacon points are located between the distances rb − d and rb from a sensor node; here, rb is the radius of the circle formed by the beam for the sensor node. Consequently, all the estimated distances between the sensor node and projected beacon points are identically rb − d/2.

3.3. Location Estimation

In this subsection, we explain how to estimate the location of a sensor node based on the distances between the sensor node and the projected beacon points. First, potential locations are obtained as candidates for the location of the sensor node. Second, the location of the sensor node is estimated using the weighted mean of the potential locations.

3.3.1. Obtaining potential locations

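After estimating the distances between a sensor node and the projected beacon points, the following equations need to be solved for localization:

$$\sqrt{(x - x_i)^2 + (y - y_i)^2} = d_i, \quad i = 1, \ldots, N \qquad (2)$$

where (x, y) is the location of the sensor node, (xi, yi) is the ith projected beacon point, and di is the estimated distance between them.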

The location of a sensor node is estimated with the bilateration method, as illustrated in Figures 5 and 6. Let B′i = (xi, yi) denote the ith projected beacon point and di the estimated distance between the sensor node and B′i. When N projected beacon points are obtained, N circles can be drawn satisfying Equation (2); e.g., four circles with centers B′1, B′2, B′3, and B′4 and radii d1, d2, d3, and d4 can be drawn, as shown in Figure 5. The points of intersection of the N circles that are near the sensor node are obtained as potential locations for the sensor node's estimated location, as shown in Figure 5; some or all of the potential locations may overlap. However, because a potential location can be either of the two intersection points of two circles, a decision process is needed; because each pair of intersection points is obtained using just two of the many projected beacon points, this method is called bilateration [23]. One of the two intersection points of the two circles with centers at two projected beacon points is closer to the circles with centers at the other projected beacon points than the other intersection point; e.g., C12(1) is closer to the two circles with centers B′3 and B′4 than C12(2) in Figure 6. When Cjk(1) and Cjk(2) are the intersection points of the circles with centers B′j and B′k and the following inequality is satisfied, Cjk(1) is selected as the potential location P̂jk; otherwise, Cjk(2) is selected.

$$\sum_{i \neq j,k} \left| \lVert C_{jk}^{(1)} - B'_i \rVert - d_i \right| \le \sum_{i \neq j,k} \left| \lVert C_{jk}^{(2)} - B'_i \rVert - d_i \right| \qquad (3)$$

Each side sums, over the other projected beacon points, the distance from the intersection point to the corresponding circle.

In rare cases, the number of intersection points between two circles can be one or zero. If there is one intersection point, this single point becomes the potential location. If there is no intersection point, which occurs when the distance between the two projected beacon points B′j and B′k is larger than dj + dk, the point that divides the line segment joining the points B′j and B′k in the ratio dj : dk becomes the potential location. In these two cases, a decision process is not needed.
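The bilateration step and its degenerate cases can be sketched as follows. This is a sketch under assumptions, not the authors' implementation: closeness to the other circles is measured as the sum of absolute differences between the point-to-center distance and the radius, every pair of projected beacon points is used, and the ratio-based fallback is applied to every no-intersection case (including one circle lying inside another, which the text does not discuss). All function names are hypothetical.

```python
import math
from itertools import combinations

def circle_intersections(c1, r1, c2, r2):
    """Return the 0, 1 or 2 intersection points of two circles."""
    dx, dy = c2[0] - c1[0], c2[1] - c1[1]
    dist = math.hypot(dx, dy)
    if dist == 0 or dist > r1 + r2 or dist < abs(r1 - r2):
        return []
    a = (r1 ** 2 - r2 ** 2 + dist ** 2) / (2 * dist)  # offset along the center line
    h = math.sqrt(max(r1 ** 2 - a ** 2, 0.0))          # offset across the center line
    mx, my = c1[0] + a * dx / dist, c1[1] + a * dy / dist
    if h == 0:
        return [(mx, my)]
    ox, oy = -dy * h / dist, dx * h / dist
    return [(mx + ox, my + oy), (mx - ox, my - oy)]

def distance_to_other_circles(p, centers, radii, skip):
    """Sum of distances from p to every circle whose index is not in skip."""
    return sum(abs(math.dist(p, c) - r)
               for i, (c, r) in enumerate(zip(centers, radii)) if i not in skip)

def potential_location(j, k, centers, radii):
    """Potential location obtained from projected beacon points j and k."""
    pts = circle_intersections(centers[j], radii[j], centers[k], radii[k])
    if len(pts) == 2:
        # Decision process: keep the point closer to the other circles.
        return min(pts, key=lambda p: distance_to_other_circles(p, centers, radii, (j, k)))
    if len(pts) == 1:
        return pts[0]
    # No intersection: divide the segment between the centers in the ratio r_j : r_k.
    (xj, yj), (xk, yk) = centers[j], centers[k]
    t = radii[j] / (radii[j] + radii[k])
    return (xj + t * (xk - xj), yj + t * (yk - yj))

def all_potential_locations(centers, radii):
    """Assumption of this sketch: one potential location per pair of beacon points."""
    return [potential_location(j, k, centers, radii)
            for j, k in combinations(range(len(centers)), 2)]

# Example: four error-free projected beacon points around a node at the origin.
centers = [(3.0, 4.0), (-3.0, 4.0), (5.0, 0.0), (0.0, -5.0)]
radii = [5.0, 5.0, 5.0, 5.0]
print(all_potential_locations(centers, radii))
```

With error-free inputs all potential locations coincide with the true position; with noisy inputs they scatter around it, which is what motivates combining them.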

3.3.2. Estimating the Location of a Sensor Node Using the Weighted Mean

The location of a sensor node can be estimated using all the potential locations. In the simplest case, it can be estimated as the mean of all the potential locations. However, to improve the estimation accuracy, a weighted mean of the potential locations can be used instead. Here, the weight is determined based on a residual, which we define as follows. Given x̂ as a solution of x at f(x) = b, the residual is b − f(x̂), which indicates how far f(x̂) is from the correct value of b, and the error is x̂ − x. As we do not know x, we cannot compute the error, but we can compute the residual. From Equation (2), N residuals associated with the potential location P̂jk = (x̂jk, ŷjk) can be computed as follows:

$$\varepsilon_{jk,i} = d_i - \sqrt{(\hat{x}_{jk} - x_i)^2 + (\hat{y}_{jk} - y_i)^2}, \quad i = 1, \ldots, N \qquad (4)$$

where (xi, yi) is the ith projected beacon point and di is the estimated distance between the sensor node and that point.
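The idea of weighting potential locations by their residuals can be illustrated with the sketch below. The exact weight used by LoMoB is not reproduced here; the sketch assumes, purely for illustration, that the weight of a potential location is the inverse of the sum of its squared residuals, with a small constant `eps` added to avoid division by zero. The function names and `eps` are hypothetical.

```python
import math

def residuals(p, centers, radii):
    """Residuals of potential location p: estimated distance minus the
    distance from p to each projected beacon point."""
    return [r - math.dist(p, c) for c, r in zip(centers, radii)]

def weighted_location(potentials, centers, radii, eps=1e-9):
    """Weighted mean of the potential locations.

    Assumption of this sketch: weight = 1 / (sum of squared residuals + eps),
    so potential locations that fit all circles well count more.
    """
    weights = []
    for p in potentials:
        squared_sum = sum(e * e for e in residuals(p, centers, radii))
        weights.append(1.0 / (squared_sum + eps))
    total = sum(weights)
    x = sum(w * p[0] for w, p in zip(weights, potentials)) / total
    y = sum(w * p[1] for w, p in zip(weights, potentials)) / total
    return (x, y)

# Example: one potential location fits all four circles, the other does not.
centers = [(3.0, 4.0), (-3.0, 4.0), (5.0, 0.0), (0.0, -5.0)]
radii = [5.0, 5.0, 5.0, 5.0]
print(weighted_location([(0.0, 0.0), (10.0, 0.0)], centers, radii))
```

If all weights are equal, the result reduces to the simple mean of the potential locations.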

Fewer than three beacon points may be acquired for some sensor nodes in certain circumstances. In this case, because the location of a sensor node cannot be estimated through Equation (6), another approach is needed. When just two beacon points are acquired for a sensor node, with projected points B′1 and B′2, the intersection points C12(1) and C12(2) of the two circles with centers B′1 and B′2 and radii d1 and d2 are obtained. Because no other beacon points are available for the decision process, the neighboring sensor nodes can play the role of an additional beacon point in order to estimate the sensor location. The sensor node can receive the location information of neighboring sensor nodes by sending a request to the neighboring sensor nodes that know their location after localization. The sensor node knows only that the neighboring sensor nodes are within its communication range; it has no information about its distance to them. If one of the intersection points is close to the sensor node, more of the sensor node's neighbors are expected to be within the communication range of that intersection point than of the other one. Therefore, the number of the sensor node's neighbors within the communication range of each intersection point is counted, and the intersection point with more neighbors is estimated as the location of the sensor node. When a sensor node has fewer than two beacon points, the estimated locations of the neighboring sensor nodes can be used. In this case, the location of the sensor node is estimated as the midpoint (mean position) of all the neighboring sensor nodes.
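The two fallback rules can be sketched as follows. The sketch assumes that neighbor locations are available as 2D points on the sensor's horizontal plane and that ties between the two candidates are resolved in favor of the first one; the function names `choose_by_neighbors` and `centroid` are hypothetical.

```python
import math

def choose_by_neighbors(candidates, neighbor_locations, comm_range):
    """Pick the intersection point that has more localized neighbors in range.

    Ties are resolved in favor of the first candidate (an assumption).
    """
    def neighbors_in_range(p):
        return sum(1 for n in neighbor_locations if math.dist(p, n) <= comm_range)
    return max(candidates, key=neighbors_in_range)

def centroid(points):
    """Mean position of the neighbors, used with fewer than two beacon points."""
    count = len(points)
    return tuple(sum(coords) / count for coords in zip(*points))

# Example: two candidate points and three localized neighbors.
candidates = [(0.0, 0.0), (0.0, 8.0)]
neighbors = [(1.0, 1.0), (-2.0, 0.0), (0.0, 6.0)]
print(choose_by_neighbors(candidates, neighbors, comm_range=3.0))
print(centroid(neighbors))
```

Unlike the beacon-based steps, this fallback requires a request to neighboring nodes, so it gives up part of the passive, receive-only operation.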

It is noteworthy that the main feature by which LoMoB improves on LDB is the use of the weighted mean of all the potential locations. In LDB, when a sensor node has four projected beacon points F1, L1, F2, and L2, as shown in Figure 3(b), two possible points are obtained based on F1 and L1 and one of the two is estimated as the location of the sensor node based on F2 and L2. Here, the error in the estimated location depends largely on the distance between F1 and L1 [16]. If F1 and L1 are located very close to each other, the location error is expected to be large. In this case, a better choice may be to use F2 and L2 (or another two projected beacon points) for the two possible points, rather than F1 and L1. However, the selection of two projected beacon points for the possible points has not yet been studied. If the errors of the beacon points F1 and L1 increase because of factors such as irregularities in the radius of the circle formed by the transceiver's beam and the location error of a mobile beacon, the errors of the two possible points also increase, which results in an increase of the location error. Even if more beacon points are obtained in addition to F1, L1, F2, and L2, the localization accuracy is not improved because the additional beacon points are not used for localization. As in LDB, LoMoB obtains two intersection points based on two beacon points and determines one of the two as a potential location for the sensor node based on the other beacon points. However, in contrast to LDB, LoMoB estimates the location of a sensor node based on all the potential locations, not just one potential location. In addition, potential locations with a high weight contribute more to the estimation of the location of the sensor node than other potential locations. For these reasons, LoMoB is expected to improve on LDB in challenging underwater environments even when LoMoB uses just four beacon points, as LDB does. If more than four beacon points are used, the localization accuracy is expected to improve further.

4. Performance Evaluations

In this section, we first describe the simulation parameters, then introduce the metric to evaluate the localization accuracy and, finally, we compare the localization accuracy of LoMoB with that of LDB through simulations.

4.1. Simulation Setup

The sensing space for UASN is a rectangular parallelepiped of 1 km × 1 km × 100 m, in which 100 sensor nodes are randomly deployed. A mobile beacon is assumed to move linearly at a velocity of 1 m/s and to broadcast a beacon at every beacon interval, i.e., every 1 s. To compare LoMoB and LDB fairly, we assume that the mobile beacon has a directional transceiver and that just four beacon points are used for localization, because LDB works for a system using a directional transceiver and uses four beacon points. The angle of the directional transceiver's beam is 60°. Because it is more convenient to move a mobile beacon on the sea surface than underwater in real underwater environments, the mobile beacon is assumed to move on the sea surface.

In LoMoB and LDB, localization begins by selecting beacon points. Any projected beacon point that is not exactly on the circle formed by the transceiver's beam for a sensor node causes an error in the estimated distance between the projected beacon point and the sensor node, which results in an error in the estimated location. The beacon distance causes projected beacon points to be located not exactly on the circle formed by the beam. In addition, phenomena such as reflection, diffraction, and refraction in underwater environments cause irregularities in the radius of the circle formed by the beam. To make our simulations more realistic, irregularities in the radius of the circle are modeled as r̂ = r + er, where er is a random value with the Gaussian distribution N(0, σr²). Because the GPS error in a mobile beacon, water fluctuations, and tidal currents can cause errors in the location of the mobile beacon, we assume the location information broadcast from a mobile beacon is error-prone [24]. The location error of a mobile beacon is modeled as x̂m = xm + em, where x̂m = [x̂, ŷ]T is the measured location of the mobile beacon located at xm = [x, y]T and em = [ex, ey]T is a random vector whose components ex and ey are independent and identically distributed random values with the Gaussian distribution N(0, σm²). We use Matlab to perform these simulations.
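The two error models can be sketched in Python as shown below (the simulations in this paper were run in Matlab; Python is used here only for illustration). The sketch assumes zero-mean Gaussian noise with standard deviations `sigma_r` and `sigma_m` and a seeded `random.Random` generator for repeatability; the function names are hypothetical.

```python
import random

def noisy_radius(r, sigma_r, rng):
    """Radius of the beam circle with a zero-mean Gaussian irregularity."""
    return r + rng.gauss(0.0, sigma_r)

def noisy_beacon_location(x, y, sigma_m, rng):
    """Broadcast location of the mobile beacon with independent Gaussian
    errors of equal standard deviation on the x and y coordinates."""
    return (x + rng.gauss(0.0, sigma_m), y + rng.gauss(0.0, sigma_m))

# Example: one noisy radius and one noisy beacon location.
rng = random.Random(42)  # fixed seed so that runs are repeatable
print(noisy_radius(50.0, 2.0, rng))
print(noisy_beacon_location(100.0, 200.0, 1.0, rng))
```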

4.2. Metrics

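To compare the localization accuracy of LoMoB and LDB, we define the average location error as follows:

$$\bar{e} = \frac{1}{N_s} \sum_{i=1}^{N_s} \lVert \hat{S}_i - S_i \rVert \qquad (7)$$

where Ns is the number of localized sensor nodes, and Ŝi and Si are the estimated and true locations of the ith sensor node.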

Because the average location error may be seriously affected by a small number of large location errors, the ratio of localized sensor nodes with location errors below a given threshold can be used as an additional metric for evaluating the localization accuracy. The ratio of localized sensor nodes below a given threshold allows the localization accuracy to be assessed in greater depth than the average location error alone.
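Both metrics can be sketched as follows, assuming that the true and estimated locations are given as equal-length lists of coordinate tuples; the function names are hypothetical. The example shows how a single large error inflates the average while barely affecting the ratio.

```python
import math

def location_errors(true_locs, est_locs):
    """Euclidean distance between each true and estimated location."""
    return [math.dist(t, e) for t, e in zip(true_locs, est_locs)]

def average_location_error(true_locs, est_locs):
    """Mean of the location errors over all localized sensor nodes."""
    errors = location_errors(true_locs, est_locs)
    return sum(errors) / len(errors)

def ratio_below(true_locs, est_locs, threshold):
    """Fraction of localized sensor nodes whose error is below the threshold."""
    errors = location_errors(true_locs, est_locs)
    return sum(1 for e in errors if e < threshold) / len(errors)

# Example: three small errors (5, 1, 2 m) and one outlier (50 m).
true_locs = [(0.0, 0.0)] * 4
est_locs = [(3.0, 4.0), (0.0, 1.0), (0.0, 2.0), (30.0, 40.0)]
print(average_location_error(true_locs, est_locs))  # 14.5
print(ratio_below(true_locs, est_locs, 10.0))       # 0.75
```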

4.3. Simulation Results

Because the beacon distance, irregularities in the radius of the circle formed by the transceiver's beam, and the location error of a mobile beacon all influence the accuracy of distance estimation between a sensor node and a projected beacon point, the localization accuracy is analyzed with respect to these factors.

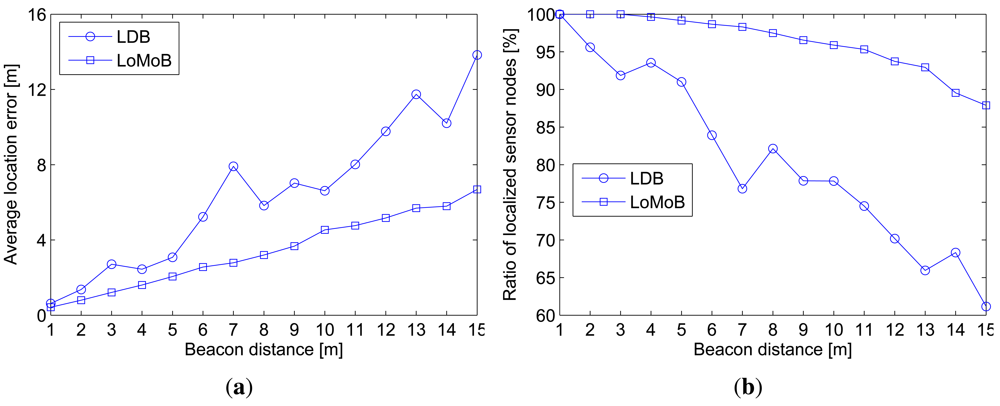

Figure 7(a) compares the average location error as a function of the beacon distance and Figure 7(b) compares the ratio of localized sensor nodes with location errors below 10 m. The average location error of LDB is shown to fluctuate more and to be larger than that of LoMoB. As the beacon distance increases, the performance gap between LoMoB and LDB tends to widen. Figure 7 demonstrates that LoMoB is less vulnerable to the beacon distance than LDB.

In real underwater environments, the radius of the circle formed by the transceiver's beam is expected to fluctuate. Irregularities in the radius of the circle cause sensor nodes to provide erroneous estimates of distances between the projected beacon points and a sensor node, in addition to the error associated with the beacon distance. Figure 8(a) compares the average location error as a function of the standard deviation σr in the radius of the circle and Figure 8(b) compares the ratio of localized sensor nodes with location errors below 10 m. As shown in the figures, the average location error increases as irregularities in the radius of the circle increase. LoMoB has 32.4%–45.8% smaller location errors than LDB. The ratios of localized sensor nodes show a maximum difference of 7.4%. LoMoB is verified to be more tolerant to irregularities in the radius of the circle formed by the transceiver's beam than LDB.

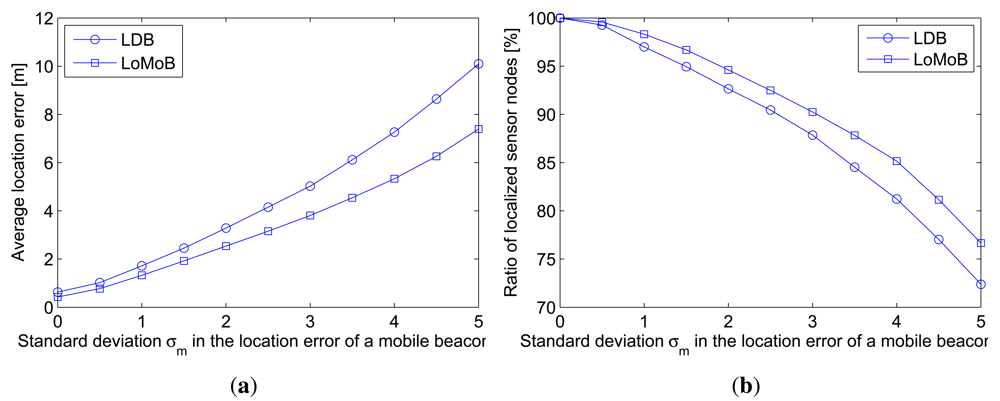

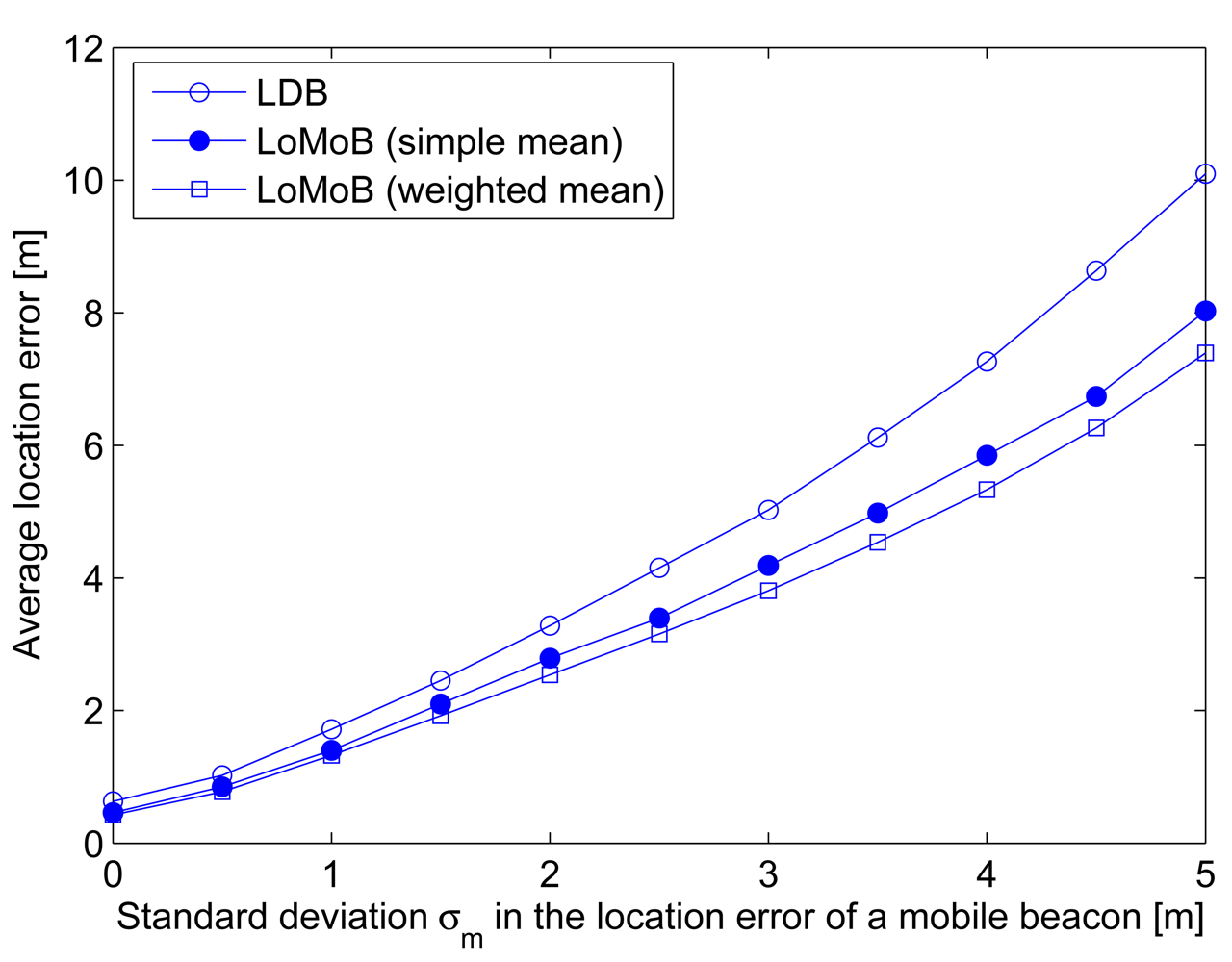

A mobile beacon is generally assumed to know its own location. In reality, the location information of a mobile beacon may be erroneous. Like irregularities in the radius of the circle formed by the transceiver's beam, the location error of a mobile beacon needs to be considered because it also causes erroneous estimation of the distances between the projected beacon points and a sensor node. Figure 9(a) compares the average location error according to the standard deviation σm in the location error of a mobile beacon and Figure 9(b) compares the ratio of localized sensor nodes with location errors below 10 m. LoMoB improves the average location error by 21.5%–32.4% compared to LDB. The ratios of localized sensor nodes show a maximum difference of 4.3%. In addition, Figure 10 shows the average location error of LDB and of LoMoB applying a simple mean and the weighted mean; if all the weights are equal, then the weighted mean is the same as the simple mean. LoMoB applying a simple mean and the weighted mean improves the average location error by 14.3%–26.9% and 21.5%–32.4%, respectively. This indicates that the use of all the potential locations is the main factor in LoMoB's improvement over LDB and that the use of the weights yields an additional gain in localization accuracy.

The localization accuracy of LoMoB and LDB was compared through the average location error and the ratio of localized sensor nodes with location errors below 10 m according to the beacon distance, irregularities in the radius of the circle formed by the transceiver's beam, and the location error of a mobile beacon. The simulation results verify that LoMoB is more tolerant to estimation errors of the distances between the projected beacon points and a sensor node. Consequently, LoMoB is more promising than LDB in harsh underwater environments.

5. Conclusions

In this paper, we proposed a range-free localization scheme for UASN with a mobile beacon that provides the potential locations with weighting factors according to residuals and estimates the location of a sensor node through the weighted mean of the potential locations. Because LoMoB localizes a sensor node based on the weights of the potential locations, it improves the localization accuracy and is more tolerant to errors in the estimation of the distance between projected beacon points and sensor nodes. Simulation results show that LoMoB significantly improves the localization accuracy of LDB, especially in underwater environments that cause irregularities in the radius of the circle formed by the transceiver's beam and the location error of a mobile beacon. Our simulations demonstrated that LoMoB is more robust with respect to errors in distance estimation than LDB.

Acknowledgments

This work was supported by the World-Class University Program through the National Research Foundation of Korea (R31-10026), and Grant (K20903001804-11E0100-00910) funded by the Ministry of Education, Science, and Technology (MEST), also by a grant (07SeaHeroB01-03) from Plant Technology Advancement Program funded by Ministry of Construction and Transportation.

References

1. Mohamed, N.; Jawhar, I.; Al-Jaroodi, J.; Zhang, L. Sensor network architectures for monitoring underwater pipelines. Sensors 2011, 11, 10738–10764.

2. Yang, X.; Ong, K.G.; Dreschel, W.R.; Zeng, K.; Mungle, C.S.; Grimes, C.A. Design of a wireless sensor network for long-term, in-situ monitoring of an aqueous environment. Sensors 2002, 2, 455–472.

3. Sozer, E.M.; Stojanovic, M.; Proakis, J.G. Underwater acoustic networks. IEEE J. Ocean. Eng. 2000, 25, 72–83.

4. Teymorian, A.Y.; Cheng, W.; Ma, L.; Cheng, X.; Lu, X.; Lu, Z. 3D underwater sensor network localization. IEEE Trans. Mob. Comput. 2009, 8, 1610–1621.

5. Zhou, Z.; Cui, J.; Zhou, S. Efficient localization for large-scale underwater sensor networks. Ad Hoc Netw. 2010, 8, 267–279.

6. Cheng, X.; Shu, H.; Liang, Q.; Du, D.H.-C. Silent positioning in underwater acoustic sensor networks. IEEE Trans. Veh. Technol. 2008, 57, 1756–1766.

7. Cheng, W.; Thaeler, A.; Cheng, X.; Liu, F.; Lu, X.; Lu, Z. Time-Synchronization Free Localization in Large Scale Underwater Acoustic Sensor Networks. Proceedings of the 29th IEEE ICDCS, Montreal, QC, Canada, 22–26 June 2009; pp. 80–87.

8. Cui, J.H.; Zhou, Z.; Bagtzoglou, A. Scalable Localization with Mobility Prediction for Underwater Sensor Networks. Proceedings of the 2nd ACM International Workshop on UnderWater Networks, Montreal, QC, Canada, 14 September 2007; pp. 2198–2206.

9. Mirza, D.; Schurgers, C. Motion-Aware Self-Localization for Underwater Networks. Proceedings of the 3rd ACM International Workshop on UnderWater Networks, San Francisco, CA, USA, 15 September 2008; pp. 51–58.

10. Mirza, D.; Schurgers, C. Collaborative Localization for Fleets of Underwater Drifters. Proceedings of the IEEE OCEANS, Vancouver, BC, Canada, 30 September–4 October 2007; pp. 1–6.

11. Chandrasekhar, V.; Seah, W. An Area Localization Scheme for Underwater Sensor Networks. Proceedings of the IEEE OCEANS Asia Pacific Conference, Sydney, Australia, 16–19 May 2006; pp. 1–8.

12. Zhou, Y.; He, J.; Chen, K.; Chen, J.; Liang, A. An area localization Scheme for large scale Underwater Wireless Sensor Networks. Proceedings of the WRI International Conference on Communications and Mobile Computing, Kunming, China, 6–8 January 2009; pp. 543–547.

13. Erol, M.; Vieira, L.; Gerla, M. Localization with DiveNRise (DNR) Beacons for Underwater Acoustic Sensor Networks. Proceedings of the 2nd ACM International Workshop on UnderWater Networks, Montreal, QC, Canada, 14 September 2007; pp. 97–100.

14. Erol, M.; Vieira, L.F.M.; Caruso, A.; Paparella, F.; Gerla, M.; Oktug, S. Multi Stage Underwater Sensor Localization Using Mobile Beacons. Proceedings of the 2nd International Conference on Sensor Technologies and Applications, Cap Esterel, France, 25–31 August 2008; pp. 710–714.

15. Erol, M.; Vieira, L.; Gerla, M. AUV-Aided Localization for Underwater Sensor Networks. Proceedings of the International Conference on Wireless Algorithms, Systems and Applications, Chicago, IL, USA, 1–3 August 2007; pp. 44–54.

16. Luo, H.; Guo, Z.; Dong, W.; Hong, F.; Zhao, Y. LDB: Localization with directional beacons for sparse 3D underwater acoustic sensor networks. J. Netw. 2010, 5, 28–38.

17. Syed, A.A.; Heidemann, J. Time Synchronization for High Latency Acoustic Networks. Proceedings of the 25th IEEE International Conference on Computer Communications, Barcelona, Spain, 23–29 April 2006; pp. 1–12.

18. Syed, A.A.; Ye, W.; Heidemann, J. T-Lohi: A New Class of MAC Protocols for Underwater Acoustic Sensor Networks. Proceedings of the 27th IEEE International Conference on Computer Communications, Phoenix, AZ, USA, 13–18 April 2008; pp. 231–235.

19. Won, T.-H.; Park, S.-J. Design and implementation of an omni-directional underwater acoustic micro-modem based on a low-power micro-controller unit. Sensors 2012, 12, 2309–2323.

20. Lurton, X. An Introduction to Underwater Acoustics: Principles and Applications; Springer, Praxis Publishing: Berlin, Germany, 2002.

21. Broch, J.; Maltz, D.A.; Johnson, D.B.; Hu, Y.C.; Jetcheva, J.G. A Performance Comparison of Multi-Hop Wireless Ad Hoc Network Routing Protocols. Proceedings of the ACM International Conference on Mobile Computing Networking, Dallas, TX, USA, July 1998; pp. 85–97.

22. Isik, M.T.; Akan, O.B. A three dimensional localization algorithm for underwater acoustic sensor networks. IEEE Trans. Wirel. Commun. 2009, 8, 4457–4463.

23. Cheng, W.; Teymorian, A.Y.; Ma, L.; Cheng, X.; Lu, X.; Lu, Z. Underwater Localization in Sparse 3D Acoustic Sensor Networks. Proceedings of the 27th IEEE International Conference on Computer Communications, Phoenix, AZ, USA, 13–18 April 2008; pp. 798–806.

24. He, T.; Huang, C.; Blum, B.M.; Stankovic, J.A.; Abdelzaher, T.F. Range-free localization schemes for large scale sensor networks. ACM Trans. Embed. Comput. Syst. 2005, 4, 877–906.

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Lee, S.; Kim, K. Localization with a Mobile Beacon in Underwater Acoustic Sensor Networks. Sensors 2012, 12, 5486-5501. https://doi.org/10.3390/s120505486
