Dynamic Experiment Design Regularization Approach to Adaptive Imaging with Array Radar/SAR Sensor Systems

Abstract

We consider a problem of high-resolution array radar/SAR imaging formalized in terms of a nonlinear ill-posed inverse problem of nonparametric estimation of the power spatial spectrum pattern (SSP) of the random wavefield scattered from a remotely sensed scene observed through a kernel signal formation operator and contaminated with random Gaussian noise. First, the Sobolev-type solution space is constructed to specify the class of consistent kernel SSP estimators with the reproducing kernel structures adapted to the metrics in such a solution space. Next, the “model-free” variational analysis (VA)-based image enhancement approach and the “model-based” descriptive experiment design (DEED) regularization paradigm are unified into a new dynamic experiment design (DYED) regularization framework. Application of the proposed DYED framework to the adaptive array radar/SAR imaging problem leads to a class of two-level (DEED-VA) regularized SSP reconstruction techniques that aggregate the kernel adaptive anisotropic windowing with the projections onto convex sets to enforce the consistency and robustness of the overall iterative SSP estimators. We also show how the proposed DYED regularization method may be considered as a generalization of the MVDR, APES and other high-resolution nonparametric adaptive radar sensing techniques. A family of DYED-related algorithms is constructed, and their effectiveness is illustrated via numerical simulations.

1. Introduction

Space-time adaptive processing (STAP) for high-resolution radar imaging with sensor arrays and synthetic aperture radar (SAR) systems has been an active research area in the environmental remote sensing (RS) field for several decades, and many sophisticated techniques are now available (see among others [1–4] and the references therein). The problem of radar/SAR imaging can be formalized in terms of nonlinear inverse problems of nonparametric estimation of the power spatial spectrum pattern (SSP) of the random wavefield scattered from the remotely sensed scene observed through a kernel signal formation operator (SFO) with the kernel structure specified by the employed radar/SAR signal modulation and contaminated with random Gaussian observation noise [1,2,5]. Thus, formally, the RS imaging problem falls into the category of stochastic ill-posed nonlinear inverse problems. The simplest radar/SAR-oriented robust approach to such a problem is to apply the method known as “matched spatial filtering” (MSF) to the recorded data signals [1–3]. Stated formally [2,3], the MSF method applies the adjoint SFO to the recorded data, performs squared detection of the filter outputs, and averages them over the actually recorded samples (snapshots) [1] of the independent data observations. One of the challenging aspects of array radar/SAR imaging is the development of high-resolution, efficient and consistent STAP techniques applicable to scenarios with a low number of recorded array snapshots (one snapshot data vector as a limiting case) or only one recorded realization of the trajectory data signal in a SAR system. In both cases, the data sample covariance matrix is rank deficient (rank-1 in the single look SAR case), and neither the conventional nonparametric beamformers [6–9] nor the maximum likelihood (ML) related high-resolution STAP techniques [1,4,7–11] are able to produce consistent SSP estimates.
Moreover, speckle noise and possible array/SAR calibration errors constitute additional multiplicative sources of data degradation that inevitably aggravate the inconsistency of the problem, resulting in heavily distorted speckle-corrupted scene images. In addition, because real-world RS scenes are implicitly associated with distributed inhomogeneous fields (i.e., not composed of a small number of point-type targets), none of the recently developed sparsity-based techniques such as independent component analysis [12], principal component analysis [13] or kernel independent component analysis [14] are able to cope with this type of ill-conditioned RS imaging problem. To alleviate the inconsistency (and to perform adaptive image despeckling [5,15]), another group of variational analysis (VA) related methods that fall into the category of the so-called “blind” or “model-free” image enhancement approaches have recently been adapted to RS image enhancement, e.g., [16–20], but without their aggregation with the resolution enhancing “model-based” nonparametric regularized imaging techniques [21–24].

Another possible way to alleviate the ill-posedness of the nonlinear radar/SAR imaging problems is to incorporate a priori model considerations regarding the desired geometrical scene image properties into the STAP procedures via performing randomization of the SSP model and application of the Bayesian minimum risk (MR) or maximum a posteriori probability (MAP) nonparametric adaptive spatial spectral estimation strategies [3,21]. Unfortunately, such approaches lead to the nondeterministic polynomial-type (NP) hard computational procedures [21], and hence result in technically unrealizable SSP estimators. An alternative way that we propose and describe in this study is to incorporate (as the second dynamic regularization level) the anisotropic kernel window operator (WO) into the overall descriptively regularized ML-based iterative adaptive SSP estimator and perform projections onto convex sets (POCS) that ensure the consistency and at the same time enforce the convergence of the resulting doubly regularized adaptive iterative imaging procedures. First, we adapt the most prominent recently proposed nonparametric ML inspired amplitude and phase estimation (APES) method (ML-APES method) [24] to the imaging problem at hand following the descriptive experiment design (DEED) regularization paradigm [25,26]. Second, to transform the DEED-optimized adaptive nonlinear imaging technique into the iterative convergent procedure the POCS regularization is employed. To guarantee the consistency, the anisotropic kernel WO is incorporated into the composed POCS operator adjusted to the metrical properties of the desired images in the Sobolev-type solution (image) space. Thus, the “model-free” variational analysis (VA)-based image enhancement approach [16–20] and the “model-based” descriptive experiment design (DEED) regularization paradigm [25,26] are unified into a new dynamic experiment design (DYED) regularization framework. 
Application of the proposed DYED framework to the high-resolution array radar/SAR imaging problems leads to a class of two-level (DEED-VA) regularized SSP reconstruction techniques that aggregate the anisotropic kernel adaptive dynamic processing with projections onto convex sets to enforce the consistency and convergence of the overall iterative SSP estimators. We also show how the proposed DYED regularization approach may be considered as a generalization of the APES [24], and some other novel high-resolution “model-based” nonparametric radar imaging techniques [15,27], on one hand, and the VA-related anisotropic diffusion [16,18], selective anisotropic information fusion [20] and other nonparametric “model-free” robust adaptive beamforming based image enhancement approaches [28–33], on the other hand.

The remainder of the paper is organized as follows. In Section 2, we provide the formalism of the radar/SAR inverse imaging problem at hand with the necessary experiment design considerations. In Section 3, we compare the ML-APES approach with the DEED-related family of SSP estimators. The performance guarantees are conceptualized in Section 4. An extension of the VA-based dynamic POCS regularization unified with the DEED paradigm, which results in the proposed DYED framework, is addressed in Section 5, followed by illustrative simulations and discussion in Section 6 and conclusions in Section 7.

2. Background

The general mathematical formalism of the problem at hand and the DEED regularization framework that we employ in this paper are similar in notation and structure to that described in [10,11,25,34] and some crucial elements are repeated for convenience to the reader.

2.1. Problem Formalism

In a general continuous-form (functional) formalism, a random temporal-spatial realization of the data field, u, is considered to be created by some continuous distribution of the far-distant radiation/scattering sources e as plane or spherical wavefronts, which sweep across the radar sensor array (moving antenna in the case of SAR). These fields satisfy an operator-form linear stochastic equation (the so-called equation of observation (EO) [10,21]):

It is convenient in the RS applications to assume that due to the integral signal formation model (3), the central limit theorem conditions hold [3,23,34,35], hence the fields e, n, u in (1), (3) are considered to be the zero-mean complex-valued random Gaussian fields. Next, since in all RS applications the regions of high correlation of e(r) are always small in comparison with the resolution element on the probing scene [3,10,11,34], the signals e(r) scattered from different directions r, r′ ∈ R are assumed to be uncorrelated, i.e., characterized by the correlation function:

2.2. Experiment Design Considerations

The formulation of the data discretization and sampling in this paper follows the experiment design formalism given in [10,23,30,34] that enables one to generalize the finite-dimensional approximations of Equations (1) and (3) independent of the particular system configuration and the method of data measurements and recordings employed. Following [10,34], consider the sensor array (synthesized array) specified by a set of spatially separated (i.e., orthogonal) tapering functions {κl(ρ)} (in the SAR case, these are synthesized by the moving antenna over L spatial recordings [4,34]). Consider next that the output signals in such spatially distributed measurement channels are converted to I samples at the outputs of identical temporal sampling filters defined by their impulse response functions {vi(t)}, where the complex conjugate is taken for notational convenience. Without loss of generality [2–4,10,21,34], the sets {κl} and {vi} are assumed to be orthonormal (e.g., via proper filter design and sensor antenna calibration [4,10]). The composition {hm(p) = κl(ρ)vi(t); m = (l, i) = 1,..., L × I = M} ordered by multi-index m = (l, i) composes a set of orthonormal spatial-temporal decomposition functions (base functions) that explicitly determine the vector of outcomes:

In analogy to Equation (6), one can define now the K-D vector-form approximation of the scene random scattering field:

With the specified decompositions (6), (7), the discrete (vector-form) approximation of the continuous-form EO (1), (3) is given by:

The nonlinear inverse problem of radar/SAR imaging with the discrete-form measurement data (8) is formulated now as follows: to derive an estimator for the SSP vector b and use it to reconstruct the SSP distribution:
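As an illustrative sketch of the discrete observation model, the snippet below simulates one data snapshot and builds the model-based data covariance. It assumes the standard linear form u = Se + n with uncorrelated zero-mean complex Gaussian scene samples of power b_k and white noise of power N0, so that R_u(b) = S diag(b) S^H + N0·I; the random matrix `S`, the sizes `M`, `K` and the function names are hypothetical and are not taken from the paper.

```python
import numpy as np

def simulate_snapshot(S, b, noise_power, rng):
    """Draw one data vector u = S e + n for a scene with SSP vector b."""
    M, K = S.shape
    # Uncorrelated zero-mean complex Gaussian scene samples with E|e_k|^2 = b_k.
    e = np.sqrt(b / 2) * (rng.standard_normal(K) + 1j * rng.standard_normal(K))
    # White complex Gaussian noise with power noise_power per channel.
    n = np.sqrt(noise_power / 2) * (rng.standard_normal(M) + 1j * rng.standard_normal(M))
    return S @ e + n

def model_covariance(S, b, noise_power):
    """Assumed model covariance R_u(b) = S diag(b) S^H + N0 I."""
    M = S.shape[0]
    return (S * b) @ S.conj().T + noise_power * np.eye(M)

rng = np.random.default_rng(0)
M, K = 16, 32                                   # hypothetical data/scene sizes
S = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2 * M)
b = rng.uniform(0.0, 1.0, K)                    # toy nonnegative SSP vector
u = simulate_snapshot(S, b, noise_power=0.1, rng=rng)
R_u = model_covariance(S, b, noise_power=0.1)
```

The covariance depends on the unknown b, which is what makes the estimation problem nonlinear even though the observation equation itself is linear.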

2.3. Conventional Kernel Spectral Estimator

We first recall the conventional continuous-form kernel estimator [2,21], which is an MSF-based extension of the periodogram smoothing spectral analysis technique [36,37]. With this method, the SSP estimate b̂(r) is derived from only one random observation (realization) of the data field u(p) as the generalized periodogram (the so-called sufficient statistics [21]) formed as the squared modulus of the MSF output, |(𝒮⁺u)(r)|², smoothed (i.e., pseudo-averaged) by the kernel window operator (WO):
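A minimal discrete sketch of this estimator is given below: the matched filter is the adjoint of a matrix-form SFO, the periodogram is its squared modulus, and the WO is approximated by convolution with a normalized window. The Fourier-type matrix `S`, the triangular window and the function names are assumptions made for illustration only.

```python
import numpy as np

def msf_periodogram(S, u):
    """Squared modulus of the matched (adjoint) filter output, pixel by pixel."""
    return np.abs(S.conj().T @ u) ** 2

def kernel_smooth(q, half_width=2):
    """Pseudo-average q with a normalized triangular window (an assumed WO)."""
    w = np.bartlett(2 * half_width + 3)[1:-1]   # drop the two zero end taps
    w = w / w.sum()
    return np.convolve(q, w, mode="same")

rng = np.random.default_rng(0)
M, K = 16, 64                                    # hypothetical sizes
# Toy Fourier-type SFO whose columns act as steering vectors.
S = np.exp(2j * np.pi * np.outer(np.arange(M), np.arange(K)) / K) / np.sqrt(M)
u = rng.standard_normal(M) + 1j * rng.standard_normal(M)
q = msf_periodogram(S, u)        # generalized periodogram
b_msf = kernel_smooth(q)         # windowed (smoothed) SSP estimate
```

A wider window lowers the fluctuation of the estimate at the cost of spatial resolution, which is the trade-off the later sections try to relax.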

3. Related Work

3.1. ML-Based Approach

In this Section, we extend the recently proposed high-resolution maximum likelihood-based amplitude phase estimator (ML-APES) [24] to the SSP estimation problem at hand by adapting it to the distributed RS scene (not composed of sparse multiple point-type targets as originally considered in [24]). In the considered low snapshot sample case (e.g., one recorded SAR trajectory data signal), the sample data covariance matrix is rank deficient (rank-1 in the single radar snapshot and single look SAR cases, J = 1). As shown in [24], minimization of the negative likelihood function with respect to the SSP vector b related to Ru = Ru(b) via Equation (10) is equivalent to minimizing the covariance fitting Stein’s loss. The solution to this minimization problem found in [24] results in the solution-dependent ML-APES estimator ([24], Equation (32)):
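The rank deficiency mentioned above is easy to check numerically. The sketch below forms the sample covariance Y = (1/J) Σ u_j u_j^H from J random snapshots and reports its rank; the array size and the random data are hypothetical.

```python
import numpy as np

def sample_covariance(U):
    """Y = (1/J) sum_j u_j u_j^H for snapshots stored as the columns of U (M x J)."""
    J = U.shape[1]
    return (U @ U.conj().T) / J

rng = np.random.default_rng(1)
M = 8                                            # hypothetical number of channels
ranks = {}
for J in (1, 3, 8):
    U = rng.standard_normal((M, J)) + 1j * rng.standard_normal((M, J))
    ranks[J] = int(np.linalg.matrix_rank(sample_covariance(U)))
print(ranks)
```

For J < M the matrix Y cannot be inverted, so any estimator that needs the inverse of the data covariance has to replace Y by a regularized or model-based surrogate.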

In the APES terminology (as well as in the minimum variance distortionless response (MVDR) [9,33] and other ML-related approaches [23,35], etc.), sk represents the so-called steering vector in the kth look direction, which in our notational conventions is essentially the kth column vector of the SFO matrix S. By the authors’ design [24], the numerical implementation of the ML-APES algorithm (13) assumes application of an iterative fixed-point technique by building the model-based estimate R̂u = Ru (b̂[i]) of the unknown covariance Ru modeled by Equation (10) from the latest (ith) iterative SSP estimate [b̂[i]] with the zero step initialization b̂[0] = b̂MSF computed applying the conventional MSF estimator.
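For orientation, the classical Capon (MVDR) power estimate in the kth look direction is 1/(s_k^H R^{-1} s_k), with s_k the kth column of S. The sketch below evaluates it for all columns at once; the function name and the toy inputs are illustrative assumptions, and R must be invertible.

```python
import numpy as np

def capon_spectrum(S, R):
    """MVDR/Capon power estimate 1 / (s_k^H R^{-1} s_k) for every column s_k of S."""
    Rinv_S = np.linalg.solve(R, S)                       # R^{-1} S without forming R^{-1}
    denom = np.real(np.sum(S.conj() * Rinv_S, axis=0))   # diagonal of S^H R^{-1} S
    return 1.0 / denom

M, K = 8, 12                                             # hypothetical sizes
S = np.exp(2j * np.pi * np.outer(np.arange(M), np.arange(K)) / K) / np.sqrt(M)
b_capon = capon_spectrum(S, 2.0 * np.eye(M))             # white data covariance of power 2
```

With unit-norm steering vectors and a white covariance of power 2, every look direction returns the value 2, which is a convenient sanity check before using a model-based covariance.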

Let us adapt the algorithm (13) to the considered here single snapshot/single look case (J = 1) substituting Y by uu+, taking into account the properties of the convergent MVDR estimates of the SSP, which in a coordinate/pixel form are given by (also referred to as properties of a conventional Capon beamformer [9,24]), and making the use of a fixed-point nature of the algorithm (13) according to which the ML-APES estimates in the vector form are to be found as a numerical solution to the nonlinear matrix-vector equation:
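The fixed-point mechanism can be sketched as follows for the single snapshot case (Y = uu⁺). The update rule used here, b_k ← |s_k^H R^{-1} u|² / (s_k^H R^{-1} s_k)² with R = S diag(b) S^H + N0·I, is the familiar APES/IAA-type form and is an assumption on our part; it is not copied from Equations (13) or (17), and the noise power, sizes and names are hypothetical.

```python
import numpy as np

def fixed_point_apes(S, u, noise_power, n_iter=15):
    """APES/IAA-type fixed-point iterations for one snapshot (assumed update rule)."""
    M = S.shape[0]
    b = np.abs(S.conj().T @ u) ** 2                              # zero-step MSF initialization
    for _ in range(n_iter):
        R = (S * b) @ S.conj().T + noise_power * np.eye(M)       # model covariance R_u(b[i])
        Rinv_S = np.linalg.solve(R, S)                           # R^{-1} s_k for all k
        num = np.abs(Rinv_S.conj().T @ u) ** 2                   # |s_k^H R^{-1} u|^2
        den = np.real(np.sum(S.conj() * Rinv_S, axis=0)) ** 2    # (s_k^H R^{-1} s_k)^2
        b = num / den
    return b

rng = np.random.default_rng(6)
M, K = 16, 24                                                    # hypothetical sizes
S = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2 * M)
u = rng.standard_normal(M) + 1j * rng.standard_normal(M)
b_hat = fixed_point_apes(S, u, noise_power=0.1)
```

Because the covariance is rebuilt from the current estimate rather than from the rank-1 sample covariance, each iteration involves an invertible matrix as long as the assumed noise power is positive.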

3.2. DEED Regularization Framework

The DEED regularization framework proposed and developed in [25,26,30,34] can be viewed as a problem-oriented formalization of the requirements to the signal/image processing/post-processing co-design aimed at satisfying the desirable properties of the reconstructed RS images, namely: (i) maximization of spatial resolution balanced with noise suppression, (ii) consistency, (iii) positivity, (iv) continuity and agreement with the data [2,25,34]. Within the general DEED framework [25,34], all these aspects are formalized via the design of balanced resolution-enhancement-over-noise-suppression SSP estimation techniques unified with the POCS regularization. Such unification-balancing is descriptive in the sense that the user/observer can induce the desirable metrics (geometrical) structures in the image/solution space, and next, specify the type, the order and the amount of the employed regularization via constructing the related resolution-enhancement-over-noise-suppression performance measures with adjustable balancing factors (regularization parameters). Different feasible assignments of such user-controllable “degrees of freedom” specify a family of the DEED-related techniques. For a detailed formalism of the DEED method we refer to [25,30] and for its implementation in a family of fixed-point iterative techniques to [26,34]. Here we provide a modification of the original DEED method [25,26] (in terms related to the presented above ML-APES strategy) to adapt it for the dynamic experiment design (DYED) regularization framework that we next develop in Section 5.

The DEED-optimal SSP estimate b̂ is to be found as the POCS-regularized solution to the nonlinear equation [26]:

Following the DEED framework [25], the strategy for deriving the optimal SO FDEED is formalized by the MR-WCSP optimization problem:

Putting F(2), F(3) in Equation (18) results in two POCS-regularized DEED-related SSP estimators that produce the SSP estimates defined as b̂(2) and b̂(3), respectively. Note that other feasible adjustments of the processing level degrees of freedom {α, NΣ, A} summarized in [26,34] for the robust RS adopted model (22) of the correlation matrix RΣ specify the family of relevant POCS-regularized DEED-related (DEED-POCS) techniques (18) represented in the general form as follows:

3.3. Relationship between DEED and ML-APES

The relationship between two high-resolution SSP estimators, the ML-APES (17) and the DEED-POCS (18), can now be established using the second equivalent form for representing the SO (16) given by (Appendix B, [21]):
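Equivalences of this kind typically rest on the matrix inversion identity D S^H (S D S^H + R_n)^{-1} = (S^H R_n^{-1} S + D^{-1})^{-1} S^H R_n^{-1}. The sketch below checks that identity numerically; whether these are exactly the two forms of the SO (16) is an assumption, and the matrices are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(2)
M, K = 6, 4                                       # hypothetical sizes
S = rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))
D = np.diag(rng.uniform(0.5, 2.0, K))             # diag(b) with positive entries (assumed)
R_n = 0.3 * np.eye(M)                             # white-noise covariance (assumed)

# Form A: "data-space" inversion of the M x M data covariance.
R_u = S @ D @ S.conj().T + R_n
F_a = D @ S.conj().T @ np.linalg.inv(R_u)

# Form B: "scene-space" inversion of a K x K matrix.
R_n_inv = np.linalg.inv(R_n)
F_b = np.linalg.inv(S.conj().T @ R_n_inv @ S + np.linalg.inv(D)) @ S.conj().T @ R_n_inv

max_gap = np.max(np.abs(F_a - F_b))
```

The two forms differ only in which matrix is inverted, so the choice between them is usually made on the basis of dimension (M versus K) and conditioning.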

4. Performance Guarantees

4.1. Consistency Guarantees

Following the DEED-POCS regularization formalism [26,34], the POCS-level regularization operator in Equation (18) could have a composite structure, i.e., could be constructed as a composition of operators/projectors conditioned by non-trivial prior information that formalizes some desirable properties of the solution, e.g., positivity, consistency, etc. We specify such RS-adapted composite regularization in Section 4.4. In this Section, we are going to establish that to guarantee the consistency of the DEED-related estimator(s) (18), (25) the should incorporate a kernel-type WO (not necessarily isotropic) as a necessary requirement. To verify this consistency guarantee, we limit ourselves here with the relevant simplest regularization operator model, = . Analysis of the consistency requires the hypothetical continuous asymptotes, , , the identity operators. Adopting these assumptions and white observation noise model, the DEED estimator given by Equation (25) can be expressed in the following generalized continuous functional form:

Remark 1: The APES approach, as well as other ML-based high-resolution SSP estimators [21,24], etc., do not imply regularizing windowing at all, i.e., = const· while the identity operator is not a kernel operator. Hence, in the uncertain scenarios with rank-deficient data covariance any ML-based approach inevitably produces an inconsistent estimate of the SSP. Technically it means that any ML-based resolution enhancement attempt should be combined with the relevant kernel windowing (not necessarily isotropic linear spatial smoothing) to guarantee the consistency.
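The role of windowing in Remark 1 can be illustrated with a toy experiment: for a single realization of zero-mean complex Gaussian data, the unsmoothed squared modulus fluctuates as strongly as its mean, while a kernel average reduces the relative fluctuation. The flat scene, the boxcar window of 16 taps and the sample size are hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(3)
K, true_power = 4096, 1.0                          # flat toy scene of unit power
x = np.sqrt(true_power / 2) * (rng.standard_normal(K) + 1j * rng.standard_normal(K))

raw = np.abs(x) ** 2                               # unsmoothed single-look estimate
window = np.ones(16) / 16                          # simple isotropic kernel window
smoothed = np.convolve(raw, window, mode="valid")  # windowed estimate

raw_rel_std = raw.std() / raw.mean()               # close to 1 for exponential statistics
smoothed_rel_std = smoothed.std() / smoothed.mean()
print(raw_rel_std, smoothed_rel_std)
```

The isotropic window used here trades resolution for stability; the anisotropic windowing discussed in Section 4.4 is meant to obtain the same stabilizing effect without blurring edges.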

4.2. Iterative Implementation

The next crucial performance issue relates to the construction of a convergent iterative scheme for efficient computational implementation of the POCS-regularized DEED-related estimators. To convert such a technique into an iterative procedure, we first transform Equation (18) into the equivalent equation:
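A common way to turn such an equation into an iterative scheme is a relaxed fixed-point update followed by a projection, b[i+1] = P{b[i] + τ(q − Φ b[i])}. The sketch below uses this generic form with a fixed symmetric positive definite matrix `Phi`, a fixed right-hand side `q` and the positivity projector; all of these are stand-ins chosen for illustration (in the DEED setting the corresponding quantities depend on the current estimate), not the paper's operators.

```python
import numpy as np

def projected_fixed_point(Phi, q, tau, n_iter=500):
    """Iterate b <- P_+{ b + tau (q - Phi b) } with P_+ clipping negative values."""
    b = np.zeros_like(q)
    for _ in range(n_iter):
        b = np.maximum(b + tau * (q - Phi @ b), 0.0)
    return b

K = 32                                             # hypothetical scene size
idx = np.arange(K)
# Toy symmetric positive definite blur: Gaussian Toeplitz kernel plus a small ridge.
Phi = np.exp(-((idx[:, None] - idx[None, :]) ** 2) / (2 * 1.5 ** 2)) + 0.1 * np.eye(K)
b_true = np.zeros(K)
b_true[[8, 20]] = [1.0, 2.0]
q = Phi @ b_true
tau = 1.0 / np.linalg.eigvalsh(Phi).max()          # relaxation below 2 / lambda_max
b_hat = projected_fixed_point(Phi, q, tau)
```

With a frozen positive definite `Phi` and this step size the mapping is a contraction, so the iterates approach `b_true`; with solution-dependent operators only local convergence should be expected.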

4.3. Convergence Guarantees

Following the POCS regularization formalism [2,26], the regularization operator could be constructed as a composition of projectors 𝒫n onto convex sets ℂn; n = 1,…, N with nonempty intersection. Then for the composition of the relaxed projectors:
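The mechanism can be illustrated with two simple convex sets: the nonnegative orthant and the hyperplane of vectors with a prescribed sum. The sketch below cycles through relaxed projectors of the standard form T = I + λ(P − I), 0 < λ < 2; the particular sets, the starting point and the function names are hypothetical.

```python
import numpy as np

def project_nonnegative(x):
    """Projection onto the convex set of vectors with nonnegative entries."""
    return np.maximum(x, 0.0)

def project_sum(x, total=1.0):
    """Projection onto the hyperplane {x : sum(x) = total}."""
    return x - (x.sum() - total) / x.size

def relaxed(projector, x, lam=1.0):
    """Relaxed projector T = I + lam (P - I), with 0 < lam < 2."""
    return x + lam * (projector(x) - x)

def pocs(x0, projectors, lam=1.0, n_iter=200):
    """Cycle through the relaxed projectors for a fixed number of sweeps."""
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        for P in projectors:
            x = relaxed(P, x, lam)
    return x

x_star = pocs([-1.0, 0.5, 2.0], [project_sum, project_nonnegative])
```

Because the two sets intersect, the iterates settle at a point that is both nonnegative and, up to numerical tolerance, on the hyperplane.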

4.4. Resolution Preserving Anisotropic Windowing

The DEED-POCS framework offers a possibility to design the POCS regularization operator so as to preserve the high spatial resolution performance of the resulting DEED-related consistent SSP estimates. Following the VA-based image enhancement approach [16,20], this task could be performed via anisotropic image post-processing, which in our statement implies anisotropic regularizing windowing over the properly constructed convex solution set in the image space. To proceed with the derivation of such a WO, in this paper, we incorporate the prior information on the desirable smoothness and geometrical properties of an image and its estimate by constructing the vector image/solution space 𝔹(K) ∋ b as a K-D discrete-form approximation to the corresponding function image space 𝔹(R), in which the initial continuous SSP functions b(r), r ∈ R reside. To formalize the geometrical information on the image changes and simultaneously on the image edge changes over the scene frame, the metrics structure in 𝔹(R) must incorporate the image norm as well as the image gradient norm [2,3]. This is naturally done by adopting the so-called Sobolev metrics [21]:

To proceed with designing the related WO adapted to the discrete problem model, the relevant 𝔹(K) ∋ b as a K-D discrete-form approximation to 𝔹(R) ∋ b(r) has to be defined via specifying the corresponding metrics with the metrics inducing matrix M constructed as a matrix-form approximation of given by Equation (37). For the adopted pixel-framed discrete image representation format {bk = b(kx, ky)}; k = (kx, ky); kx= 1,.., Kx; ky= 1,.., Ky; k = 1,…, K = Kx×Ky} this yields the desired metrics:

The second sum on the right-hand side of Equation (38) is recognized to be a 4-nearest-neighbors difference-form approximation of the Laplacian operator in Equation (37) [2,16]; hence, it represents the metrics measure of the high-frequency spatial components in the discretized SSP that correspond to its gradient variations. From Equation (38) we easily derive the corresponding metrics inducing matrix-form operator:
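As a sketch of the discrete construction, the code below applies the 4-nearest-neighbors Laplacian to a pixel-framed image and evaluates a two-term metric: a weighted sum of squared pixel values plus a weighted sum of squared Laplacian values. The exact form of Equation (38) is not reproduced here, so the squared-Laplacian term, the replicated-edge boundary handling and the weights `m0`, `m1` are assumptions.

```python
import numpy as np

def laplacian4(img):
    """4-nearest-neighbors difference approximation of the Laplacian (edges replicated)."""
    p = np.pad(img, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * img

def sobolev_type_norm_sq(img, m0=1.0, m1=1.0):
    """Assumed discrete metric: m0 * sum(b^2) + m1 * sum((Laplacian of b)^2)."""
    return m0 * np.sum(img ** 2) + m1 * np.sum(laplacian4(img) ** 2)

flat = np.ones((5, 5))              # constant image: no high-frequency content
spike = np.zeros((5, 5))
spike[2, 2] = 1.0                   # single bright pixel
lap_spike = laplacian4(spike)
```

A constant image contributes only through the first term, whereas isolated bright pixels and sharp edges are penalized mainly through the second term, which is how the balance between m0 and m1 controls smoothing versus edge sensitivity.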

Last, we restrict the solution subspace (the so-called active solution set or correctness set in the DEED terminology [25,34]) to the K-D convex set 𝔹+ ⊂ 𝔹(K) of SSP vectors with nonnegative elements (as power is always nonnegative). This is formalized by specifying the projector 𝒫+ onto this convex set 𝔹+, i.e., the POCS operator (as the positivity operator specifies POCS [2]) that has the effect of clipping off all the negative values. The composition:

5. DYED Regularization Framework

5.1. VA-Based Dynamic Reconstructive Scheme

With the model (40), the discrete-form contractive progressive mapping iterative process (33) transforms into:

Associating the iterations i, i+1,… with discrete “evolution time”, i.e., i + 1 → t + Δt;i → t;τ → Δt, the Equation (41) can be rewritten in the “evolution” form:

Three practically inspired versions of Equation (37) relate to three feasible assignments to the operator . These are as follows:

= specifies the conventional Lebesgue metrics, in which case the evolution process (44) does not involve control of the image gradient flow over the scene.

, i.e., the Laplacian with respect to the space variable r = (x,y) specifies the Dirichlet variational metrics inducing operator, in which case, the right-hand side of (44) depends on the discrepancy between the corresponding Laplacian edge maps producing anisotropic gain. For short evaluation time intervals, such anisotropic gain term induces significant changes dominantly around the regions of sharp contrast resulting in edge enhancement [2,16].

combines the Lebesgue and the Dirichlet metrics, in which case the Equation (44) is transformed into the VA dynamic process defined by the partial differential equation (PDE):

For the purpose of generality, instead of two metrics balancing coefficients m(0) and m(1), we incorporated into the PDE (45) three regularizing factors c0, c1 and c2, respectively, viewed as VA-level user-controllable degrees of freedom that balance smoothing against edge enhancement. Although, due to its solution-dependent nature, the dynamic DEED-VA scheme in its continuous PDE form (45) cannot be regarded as a practically realizable procedure, these theoretical developments are useful for establishing the relationship between the general-form VA scheme (45) and the already existing dynamic image enhancement approaches [16–20].

5.2. VA-Related Approaches

Different feasible assignments to the processing level degrees of freedom in the PDE (45) specify different VA-related procedures. Below we consider the following ones:

(i) The simplest case relates to the specifications: c0 = 0, c1 = 0, c2 = const = – c, c > 0, and Φ(r, r′; t) = δ(r – r′) with the projector 𝒫+ excluded. In this case, the PDE (45) reduces to the isotropic diffusion (so-called heat diffusion [16]) equation with constant (isotropic) conduction factor c. We reject the isotropic diffusion for the purposes of radar/SAR image processing because of its resolution deteriorating nature.

(ii) The previous assignments but with the anisotropic factor, − c2 = c(r; t) ≥ 0 specified as a monotonically decreasing function of the magnitude of the image gradient distribution, i.e., a function c(r,| ∇rb̂(r; t)|) ≥ 0, transform Equation (45) into the celebrated Perona-Malik anisotropic diffusion method [16,18]. Because the “model-free” assignment Φ(r,r′; t) = δ(r – r′) excludes the “model-based” (DEED regularization-based) SSP reconstruction, the anisotropic diffusion provides only partial despeckling of the homogeneous regions on the low-resolution MSF images while preserving their edge maps.

(iii) For the Lebesgue metrics specification c0 = 1 with c1 = c2 = 0, the PDE (45) involves only the first term on its right-hand side. This case leads to the locally selective robust adaptive spatial filtering (RASF) approach investigated in detail in our previous studies [25,34], where it was established that such a method provides a satisfactory compromise between resolution enhancement and noise suppression but suffers from a low convergence rate.

(iv) The alternative assignments c0 = 0 with c1 = c2 = 1 combine the isotropic diffusion with the anisotropic gain controlled by the Laplacian edge map. This approach, addressed in [19,20] as a selective information fusion method, manifests almost the same performance as the RASF method.

(v) The VA-based approach that we address here as the DEED-VA-fused DYED method involves all three terms on the right-hand side of the PDE (45) with equibalanced weights, c0 = c1 = c2 = const (one for simplicity); hence, it combines the isotropic diffusion (specified by the second term on the right-hand side of Equation (45)) with the composite anisotropic gain dependent both on the evolution of the synthesized SSP frame and its Laplacian edge map. This produces a balanced compromise between the anisotropic reconstruction-fusion and locally selective image despeckling with edge preservation.
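Among the cases listed above, the Perona-Malik anisotropic diffusion is the easiest to sketch in isolation. The code below is a standard explicit 4-neighbor scheme with the exponential conduction function c(g) = exp(−(g/κ)²); the conduction function, the parameters `kappa` and `lam`, the replicated-edge boundary and the test image are illustrative assumptions and do not reproduce the settings used in the simulations of this paper.

```python
import numpy as np

def perona_malik(img, n_iter=20, kappa=0.2, lam=0.2):
    """Explicit Perona-Malik diffusion on a 2-D image (lam <= 0.25 for stability)."""
    u = np.asarray(img, dtype=float).copy()
    for _ in range(n_iter):
        p = np.pad(u, 1, mode="edge")
        # Differences to the north, south, west and east neighbors.
        diffs = (p[:-2, 1:-1] - u, p[2:, 1:-1] - u, p[1:-1, :-2] - u, p[1:-1, 2:] - u)
        # Conduction decreases with the local difference magnitude, so edges diffuse little.
        u = u + lam * sum(np.exp(-(d / kappa) ** 2) * d for d in diffs)
    return u

rng = np.random.default_rng(4)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0                               # vertical step edge
noisy = clean + 0.05 * rng.standard_normal(clean.shape)
smoothed = perona_malik(noisy)
```

On this toy image the noise in the flat regions is reduced while the step edge keeps its contrast, which matches the "partial despeckling with edge preservation" behavior described in case (ii); no resolution enhancement takes place because no model-based reconstruction is involved.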

5.3. Numerical DEED-VA-Technique

The discrete-form approximation of the PDE (45) in “iterative time” {i = 0, 1, 2, …} yields the iterative numerical procedure:

Remark 2: With the performed extension of the DEED regularization method into the unified DEED-VA framework, the warnings about the dynamic process (46) being ill conditioned do not apply, since the purpose of the two-level regularization (the DEED level and the VA level) is aimed at curing that same ill conditioning providing the POCS-regularized iterative DYED technique (46) converges to a point in the specified convex solution set 𝔹+. Nevertheless (as it is frequently observed with nonlinear iterative processes [2,36,37]) such nonlinear iterative procedure (46) may suffer from some numerical instabilities demonstrating only local convergence.

6. Numerical Simulations and Discussion

6.1. Simulation Experiment Specifications

In the simulation experiment, we considered a fractional SAR as a sensor system, analogous to a single look fraction of a multi look focused SAR [4,5,26,31]. The resolution properties of such an RS imaging system that employs the conventional MSF processing are explicitly characterized by the ambiguity function (AF) of the unit signal S(t,ρ;r) given by the composition [4,5,26]:

Next, to comply with the technically motivated MSF fractional image formation mode, the blurred scene image was degraded with the composite (signal-dependent) noise simulated as a realization of -distributed random variables with the pixel mean value assigned to the actual degraded scene image pixel. The simulation experiment compares three most prominent SAR-adapted enhanced imaging techniques, namely: the celebrated VA-based anisotropic diffusion method [16,18] specified in Section 5.2.(ii); the ML-APES method [24] detailed in Section 3.1, and the developed fused anisotropic DYED reconstruction method aggregated with the POCS regularization performed via Equation (46). The simulations were run for two hypothetical operational scenarios. The first one corresponds to the partially compensated defocusing errors [4,22]. In the second scenario, no autofocusing was assumed, thus the degradations encompass both uncontrolled SFO distortions and MSF mismatches attributed to “heavy” propagation medium perturbations [22,31], range migration effect [4] and uncompensated carrier trajectory deviations that may occur in much more severe operational scenarios [26,29,31]. For both scenarios, the simulations were run for different values of the composite signal-to-noise ratio (SNR) μSAR defined as the ratio of the average signal component in the degraded image b̂(1) formed using the MSF algorithm (32) to the relevant composite noise component in that same speckle corrupted MSF image.
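Signal-dependent noise of this kind can be sketched as follows. The distribution name is not legible in the text above, so the snippet assumes the usual single-look intensity speckle model, i.e., an exponential law (a scaled chi-squared law with two degrees of freedom) whose mean equals the noise-free pixel value; the toy scene and the number of looks are hypothetical.

```python
import numpy as np

def add_speckle(intensity, rng):
    """Single-look multiplicative speckle: exponential law with pixel-wise mean (assumed)."""
    return rng.exponential(scale=intensity)

rng = np.random.default_rng(5)
scene = np.array([[1.0, 4.0], [2.0, 0.5]])        # toy blurred scene intensities
looks = np.stack([add_speckle(scene, rng) for _ in range(4000)])
mean_image = looks.mean(axis=0)                   # averaging looks recovers the scene mean
```

Each individual look has a standard deviation equal to its mean, which is why a single-look image appears heavily corrupted even at high signal levels.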

6.2. Performance Metrics

For objective evaluation of the reconstructive imaging quality, we have adopted two quality metrics traditionally used in image restoration/enhancement [2,3,11,37]. The first one evaluates the mean absolute error (MAE):

The second metric employed is the so-called improvement in the output signal-to-noise ratio (IOSNR) [2,3,29], measured via the ratio of the corresponding squared l2 error norms:
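Both metrics are straightforward to compute once the true SSP, the MSF image and the enhanced image are available as arrays. The sketch below assumes the MAE is the pixel average of absolute errors and the IOSNR is the ratio of the squared l2 error of the MSF image to that of the enhanced image, expressed in decibels; the decibel scaling, the function names and the toy vectors are assumptions.

```python
import numpy as np

def mae(b_hat, b):
    """Mean absolute error between an SSP estimate and the true SSP."""
    return np.mean(np.abs(b_hat - b))

def iosnr_db(b_msf, b_hat, b):
    """Improvement in output SNR: squared l2 error of MSF over that of the estimate, in dB."""
    return 10.0 * np.log10(np.sum((b_msf - b) ** 2) / np.sum((b_hat - b) ** 2))

b = np.array([1.0, 2.0, 3.0, 4.0])                # toy true SSP
b_msf = b + 1.0                                   # toy low-resolution MSF image
b_hat = b + np.array([0.1, -0.1, 0.1, -0.1])      # toy enhanced image
print(mae(b_hat, b), iosnr_db(b_msf, b_hat, b))
```

In this toy case the enhanced image reduces the squared error by a factor of 100 relative to the MSF image, which corresponds to an IOSNR of about 20 dB and an MAE of about 0.1.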

6.3. Simulations Results and Discussions

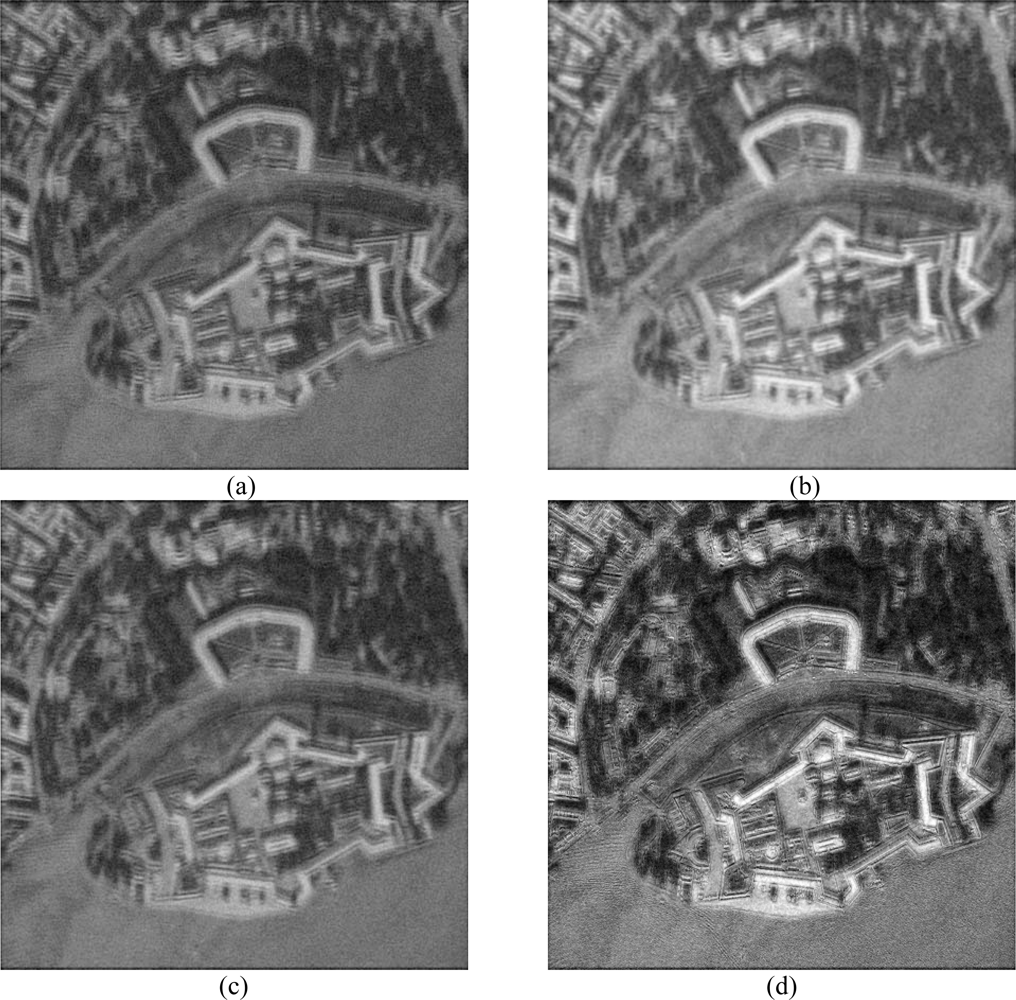

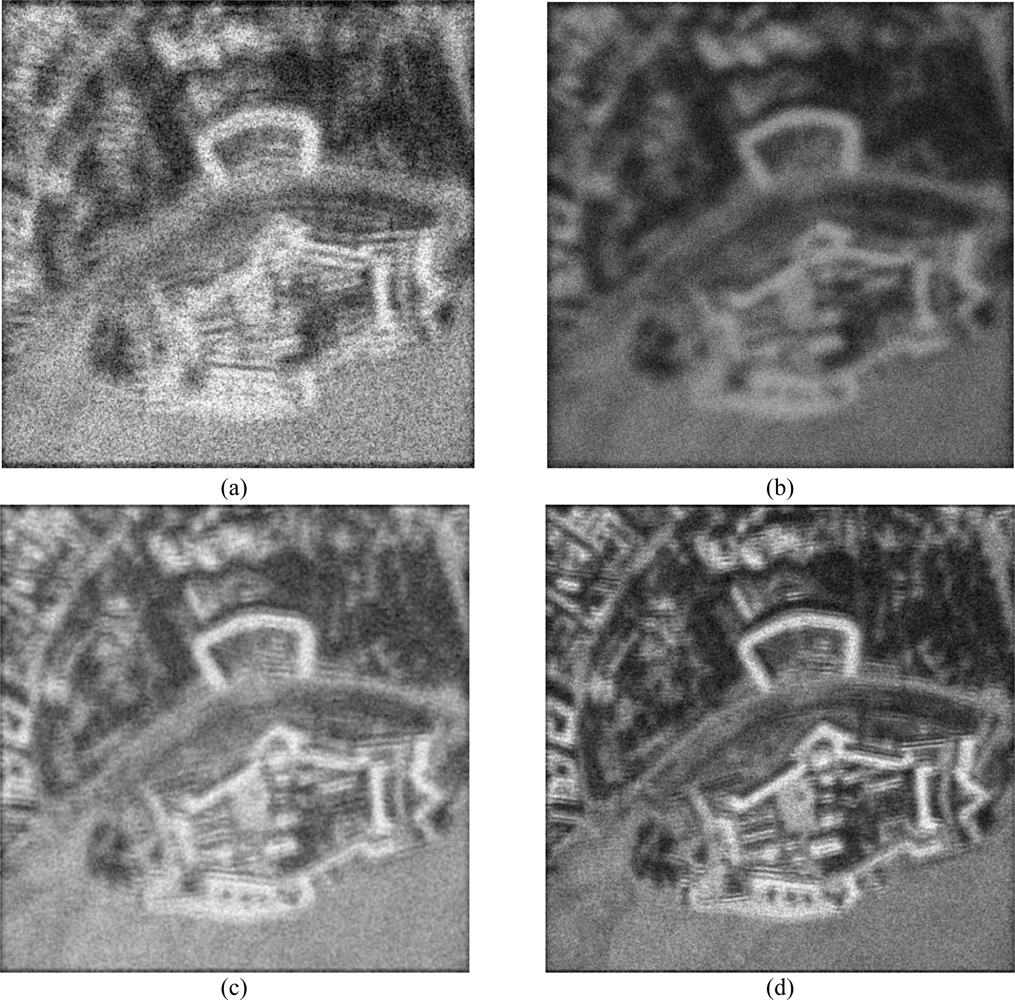

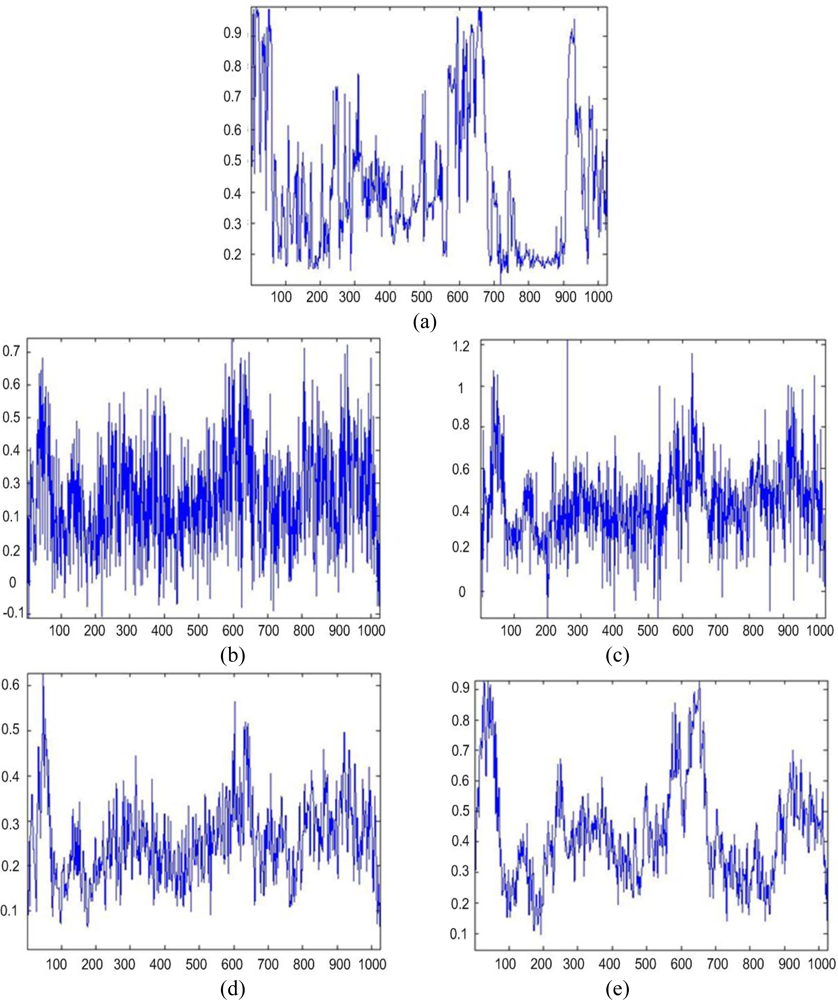

Figure 1 shows the original scene image (borrowed from the high-resolution RS imagery [38]) not observable with the simulated fractional SAR imaging systems. The images in Figures 2 and 3 present the results of image formation/enhancement applying different tested DEED-VA-related techniques in two operational scenarios as specified in the figure captions. Figures 2(a) and 3(a) demonstrate the images formed applying the conventional MSF algorithm. From these figures, one may easily observe that the MSF images suffer from imperfect spatial resolution due to the fractional aperture synthesis mode and composite observation/focusing mismatches and are corrupted by multiplicative signal-dependent noise. In the first scenario, the simulated degradations in the resolution are moderate over the range direction (κr = 10) and significantly larger over the azimuth direction (κa = 20). In the second scenario, the fractional SAR system suffers from much more severe degradations due to additional defocusing in both directions (κr = 20; κa = 40) and lower SNR.

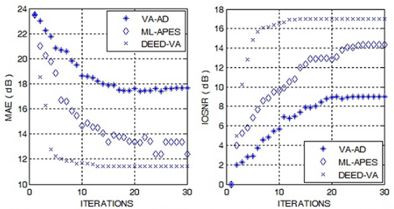

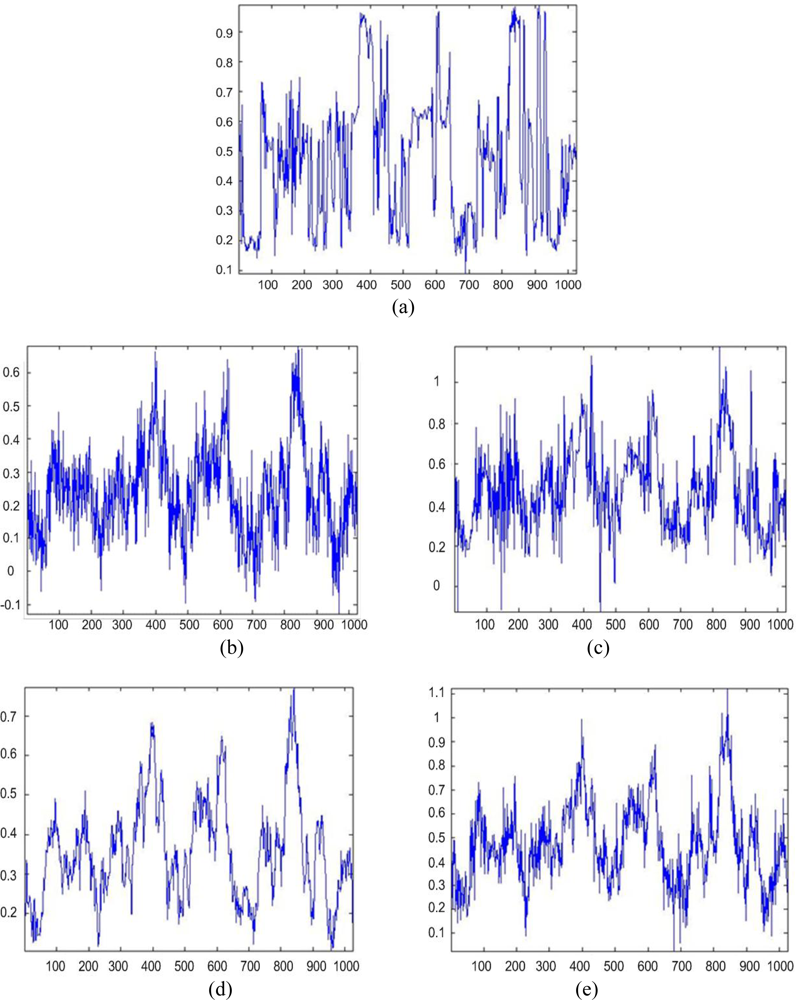

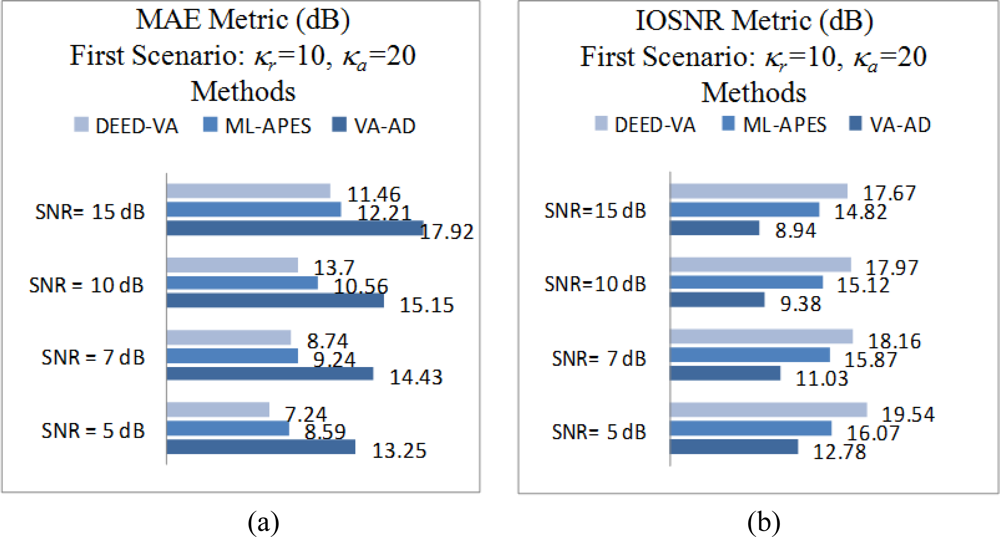

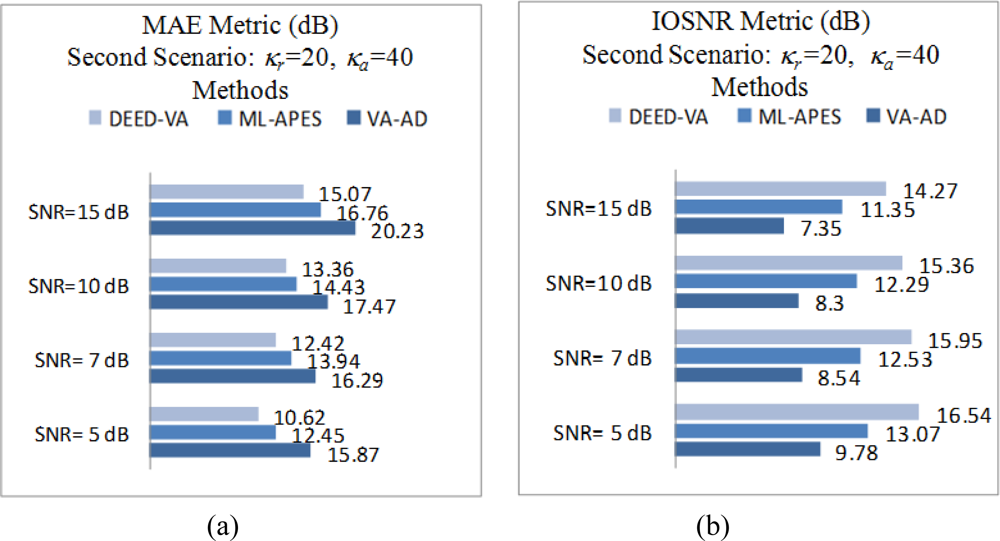

Next, Figures 2(b) and 3(b) show the images enhanced applying the anisotropic diffusion method [16,18]. The images reconstructed using the ML-APES method [24] are shown in Figures 2(c) and 3(c), and the corresponding images optimally reconstructed applying the DEED-VA-optimal technique (46) with the same equibalanced regularization factors c0 = c1 = c2 = 1, after the same 30 iterations are presented in Figures 2(d) and 3(d), respectively. Figures 4 and 5 present comparative results of reconstructive imaging in a 1-D format with the operational parameters and scenarios specified in the figure captions. Figures 6 and 7 report the quantitative performances evaluated via the two quality metrics (49) and (50) gained with three tested SSP estimation methods, namely: the VA-related anisotropic diffusion (VA-AD); the ML-APES and the DYED-optimal DEED-VA.

From the reported simulation results, the advantage of the well-designed imaging experiments (cases of the ML-APES and the optimal DEED-VA techniques) over the poorer design enhancement experiments (MSF and anisotropic diffusion (VA-AD) without ML-APES reconstruction) is evident for both scenarios. Due to the performed regularized inversions, the resolution was substantially improved. Quantitative performance improvement measures are reported in Figures 6 and 7 for the same 30 performed iterations.

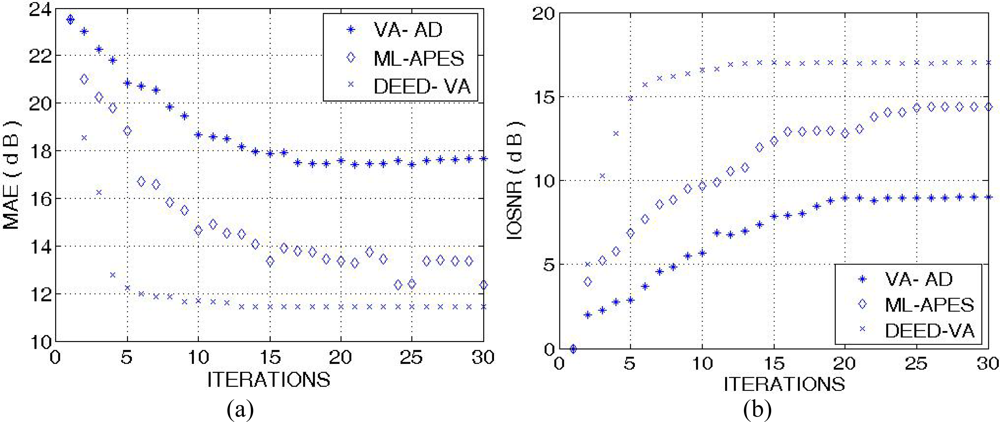

The highest values of the IOSNR, as well as the lowest values of the MAE, were obtained with the DYED-optimal SSP estimator, i.e., with the fused DEED-VA technique (46) adapted to the particular operational scenarios. Note that IOSNR (50) is basically a square-type error metric; thus, it does not capture quantitatively the “delicate” visual features in the reconstructed RS images, which explains the small differences in the corresponding IOSNRs reported in Figures 6 and 7. Furthermore, all the DYED-related estimators manifest higher IOSNRs and lower MAEs in the case of higher SNR. Both the 2-D and 1-D RS imaging results are indicative of the superior qualitative reconstructive performance achieved with the high-resolution DYED-related estimators (17) and (46), while the DYED-optimal DEED-VA approach outperforms the ML-APES method. Last, in Figure 8, we report the convergence rates (specified via the dynamics of the corresponding IOSNR and MAE metrics versus the number of iterations) evaluated for the first test scenario for the same three dynamic enhancement/reconstruction techniques: VA-AD, ML-APES, and the developed DYED-optimal unified DEED-VA technique. The reported convergence rates indicate a considerable speed-up with the DEED-VA algorithm (46), which outperforms the most prominent existing ML-APES and anisotropic diffusion methods in both quality metrics, requiring 5–6 times fewer iterations to approach the same asymptotic convergence.

7. Conclusions

In this paper, we have addressed the unified DYED method for nonparametric high-resolution adaptive sensing of spatially distributed scenes in an uncertain RS environment; the method extends the previously developed DEED regularization framework by aggregating it with the dynamic VA-based enhanced imaging approach. We have treated the RS imaging problem in an array radar/SAR adapted statement. The scene image is associated with the estimate of the SSP of the scattered wavefield observed through the randomly perturbed kernel SFO under severe snapshot limitations, which result in a degraded, speckle-corrupted RS image. The crucial issue in treating such a nonlinear ill-posed inverse problem is the development of a statistical SSP estimation/reconstruction method that balances resolution enhancement with noise suppression and guarantees consistency, convergence and robustness of the resulting STAP procedures. In the addressed experiment design setting, all these desirable performance properties have been formalized by, first, inducing the corresponding Sobolev-type metric structure in the solution/image space; next, constructing the DEED-balanced resolution-enhancement-over-noise-suppression objective measures; and, last, solving the relevant SSP reconstruction inverse problem with the two-level regularization (the DEED level and the VA level, respectively). Furthermore, the incorporation of the second-level VA-based dynamic POCS regularization not only speeds up the related iterative processing procedures but also provides perceptually enhanced imagery. The developed DYED method is also user-oriented in the sense that it provides flexibility in specifying some design (regularization) parameters viewed as processing-level degrees of freedom, which control the type, the order and the amount of the employed two-level regularization, producing a variety of DYED-related techniques with different operational performance and complexity.
Simulations verified that the POCS-regularized DEED-VA-optimal DYED technique outperforms the most prominent ML-based and VA-based methods in the literature, which do not unify the DEED framework with the POCS-based convergence-enforcing dynamic regularization in the corresponding applications.
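As a toy illustration of the second (VA) regularization level summarized above, the sketch below alternates an explicit anisotropic-diffusion step with a projection onto a convex set. It is not the DEED-VA algorithm (46): the DEED-level reconstruction is omitted entirely, the Perona–Malik conduction function, the nonnegativity constraint used as the convex set, and the parameters `kappa` and `dt` are all assumptions chosen for a 1-D example, and the function names are hypothetical.

```python
def perona_malik_step(u, kappa=0.1, dt=0.2):
    """One explicit 1-D anisotropic-diffusion step with zero-flux boundaries."""
    n = len(u)
    out = list(u)
    for i in range(n):
        # Finite differences to the right and left neighbours.
        g_right = u[i + 1] - u[i] if i + 1 < n else 0.0
        g_left = u[i] - u[i - 1] if i > 0 else 0.0
        # Flux = conduction * gradient; conduction drops across strong edges.
        flux_right = g_right / (1.0 + (g_right / kappa) ** 2)
        flux_left = g_left / (1.0 + (g_left / kappa) ** 2)
        out[i] = u[i] + dt * (flux_right - flux_left)
    return out


def project_nonnegative(u):
    """Projection onto the convex set of nonnegative signals (power >= 0)."""
    return [max(x, 0.0) for x in u]


def smooth_with_pocs(u, iterations=30, kappa=0.1, dt=0.2):
    """Alternate diffusion and projection for a fixed number of iterations."""
    for _ in range(iterations):
        u = project_nonnegative(perona_malik_step(u, kappa, dt))
    return u


# Hypothetical noisy step profile: a weak region next to a strong scatterer.
noisy = [0.0, 0.05, -0.02, 0.03, 1.0, 0.97, 1.04, 1.0]
smoothed = smooth_with_pocs(noisy)
print([round(x, 3) for x in smoothed])
```

In this toy setting the small fluctuations on each side of the step are smoothed while the step itself is largely preserved, and the projection keeps the result physically admissible as a power profile. The explicit scheme is stable here because `dt` is kept below 0.5.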

Acronyms

| Acronym | Definition |
|---|---|
| AF | Ambiguity function |
| ASF | Adaptive spatial filtering |
| DEED | Descriptive experiment design |
| DYED | Dynamic experiment design |
| EO | Equation of observation |
| ML | Maximum likelihood |
| MSF | Matched spatial filtering |
| PDE | Partial differential equation |
| POCS | Projections onto convex sets |
| PSF | Point spread function |
| RASF | Robust ASF |
| RS | Remote sensing |
| SAR | Synthetic aperture radar |
| SFO | Signal formation operator |
| SSP | Spatial spectrum pattern |
| STAP | Space-time adaptive processing |
| VA | Variational analysis |
| WO | Window operator |


© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Shkvarko, Y.; Tuxpan, J.; Santos, S. Dynamic Experiment Design Regularization Approach to Adaptive Imaging with Array Radar/SAR Sensor Systems. Sensors 2011, 11, 4483-4511. https://doi.org/10.3390/s110504483
