Webcams for Bird Detection and Monitoring: A Demonstration Study

Abstract

1. Introduction

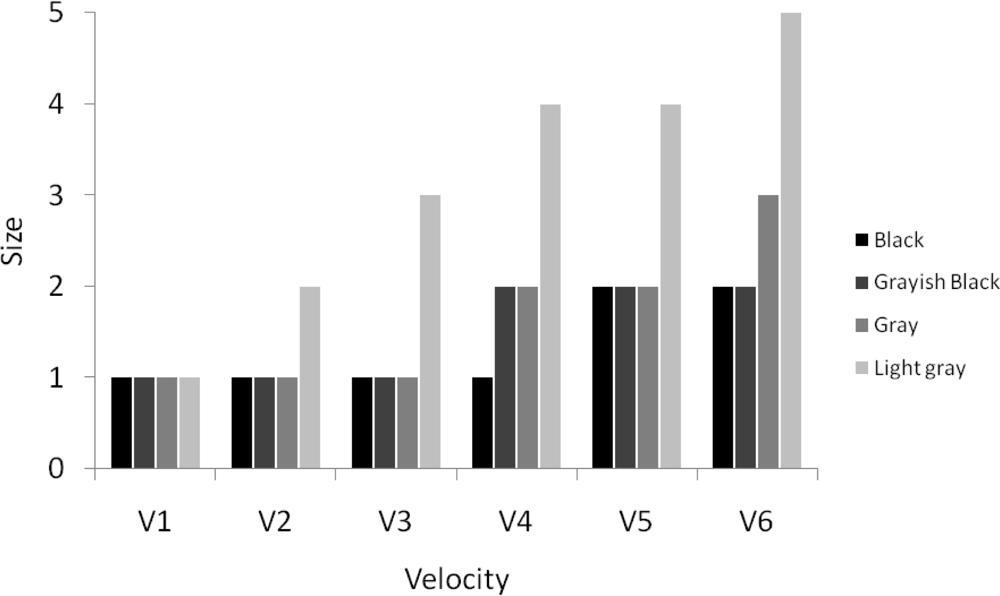

- The first objective concerns the webcam detection capability: the detection limits for object velocity, contrast and size are analyzed in relation to the visibility of a bird in a webcam video. This is studied with an indoor experimental set-up that records artificial objects (pearls) attached to a pendulum to mimic flying objects.

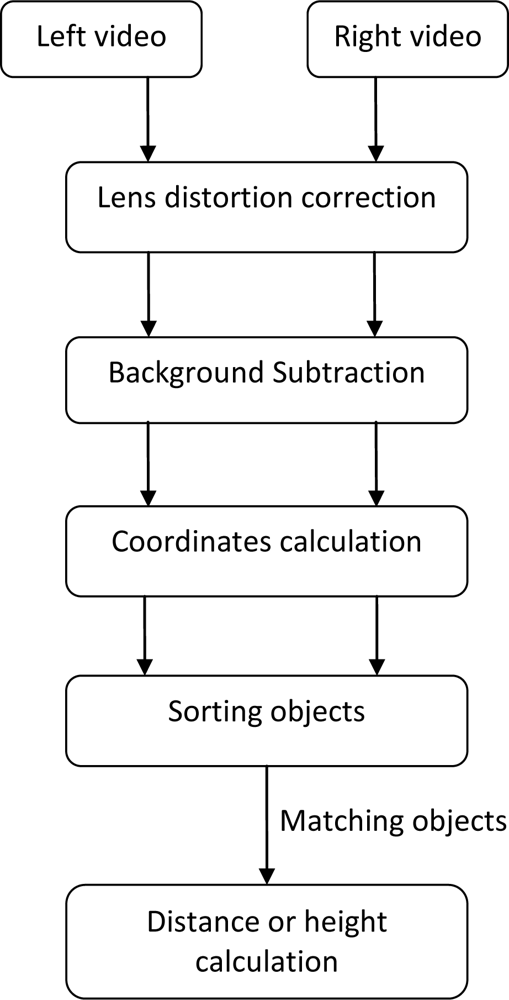

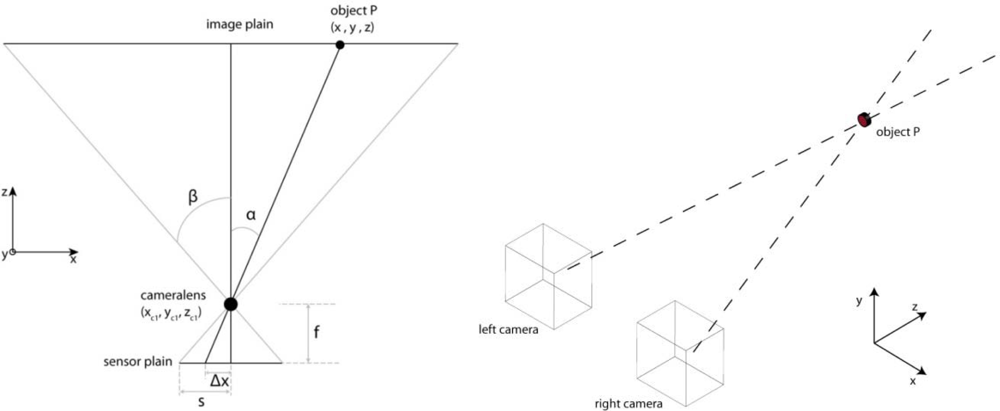

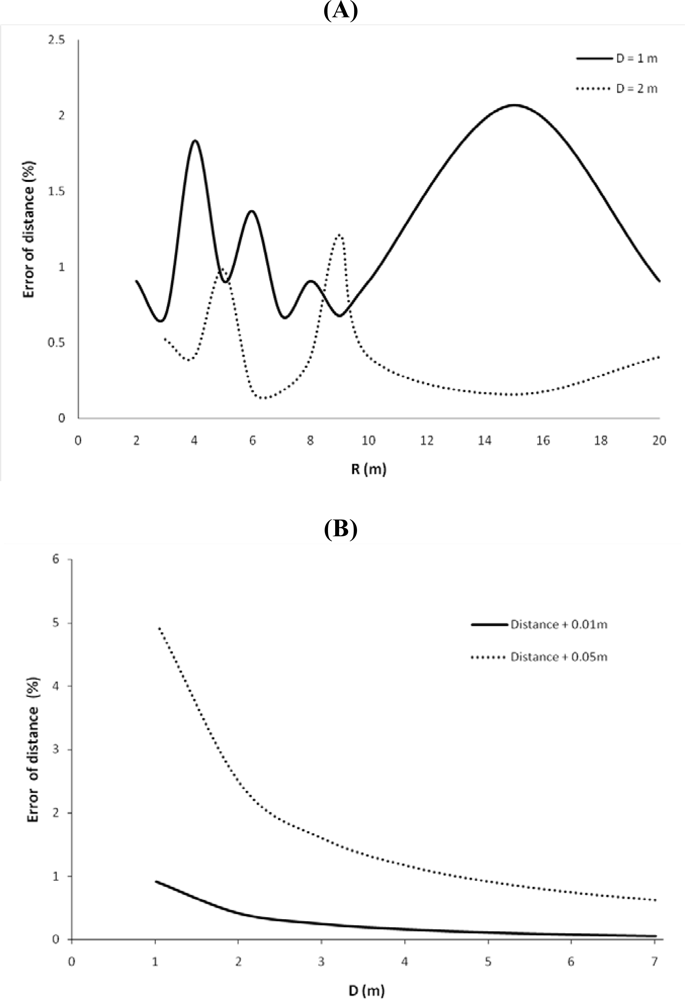

- The second objective addresses the webcam tracking capability, including the sources of error and their ranges. To this end, a simple 3D model was built and linked to processing tools that assign the correct coordinates to the correct objects.
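The 3D model is not detailed at this point in the text. As a rough illustration of how two webcams can assign a 3D coordinate to a matched object, the sketch below uses the standard parallel-stereo relation Z = f·B/d. The camera geometry (two identical, parallel cameras), the function name `triangulate` and all numeric values are assumptions made for illustration and are not taken from the authors' set-up.

```python
from typing import Tuple


def triangulate(x_left: float, x_right: float, y: float,
                focal_px: float, baseline_m: float) -> Tuple[float, float, float]:
    """Return (X, Y, Z) in meters, relative to the left camera.

    Assumes two identical, parallel cameras (hypothetical geometry).
    x_left, x_right and y are pixel coordinates measured from each image
    center; focal_px is the focal length in pixels; baseline_m is the
    distance between the two cameras.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity   # depth from disparity
    x = x_left * z / focal_px               # back-project the pixel column
    y_m = y * z / focal_px                  # back-project the pixel row
    return x, y_m, z


# Hypothetical example values, chosen only to keep the arithmetic simple.
X, Y, Z = triangulate(x_left=40.0, x_right=20.0, y=10.0,
                      focal_px=700.0, baseline_m=0.5)
print(X, Y, Z)
```

Because depth varies with 1/d, a one-pixel matching error shifts the estimated position more for distant objects than for nearby ones, which is one reason the error ranges need to be examined.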

2. Experimental Design

2.1. Materials

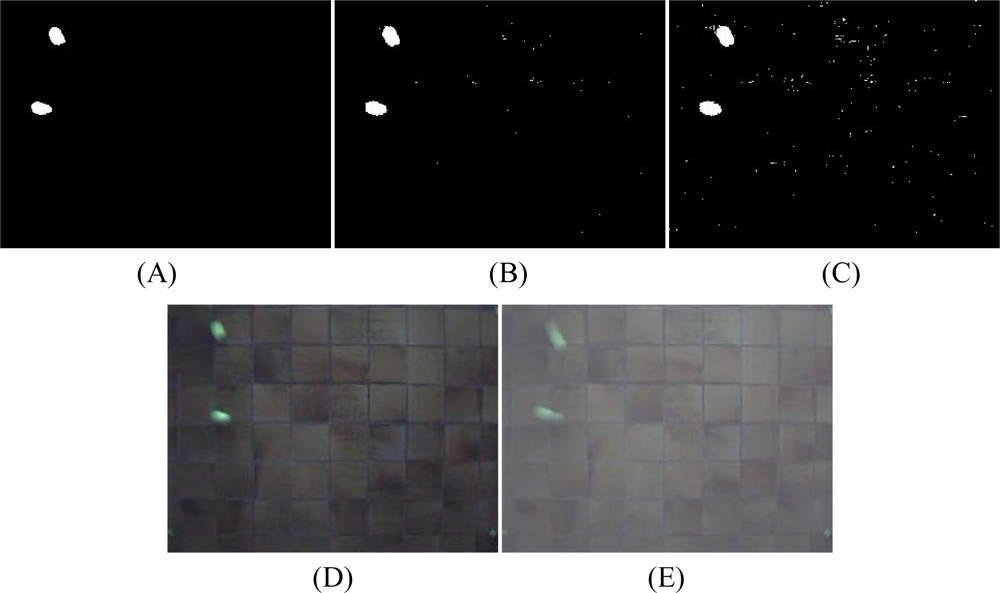

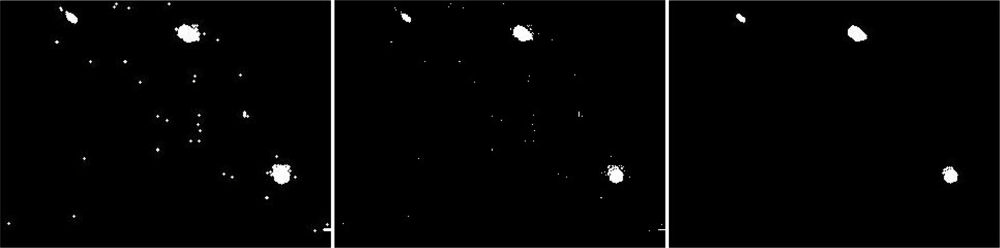

2.2. Measuring and Processing Protocols

2.3. Sources of Errors

3. Results and Discussion

3.1. The Webcam Detection Capability

3.2. The Webcam Position

3.3. The Webcam Tracking Capability: a 3D Model for Tracking Moving Objects

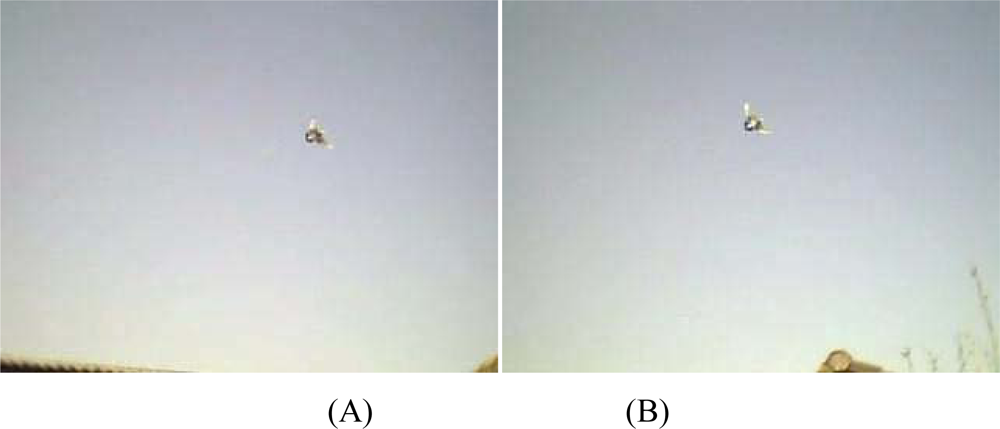

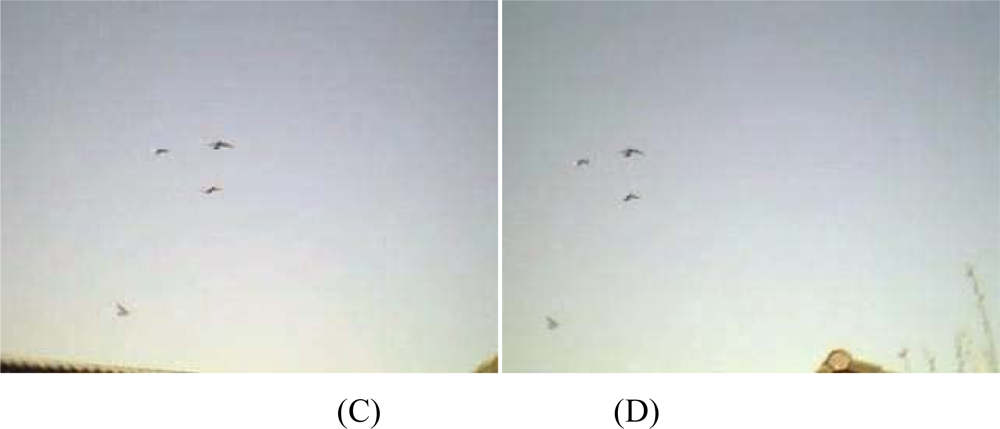

4. Application of the Webcams

5. Conclusions and Recommendations

- Establishing a complete webcam set-up in the field to collect temporally and spatially distributed data. Such detailed studies can reveal the practical advantages, disadvantages, limitations and related technical issues of webcams;

- Wireless communication between the cameras and a central data collection station;

- Real-time processing;

- Preferential hotspots, such as migratory pathways, at which to locate the networks;

- Participation of volunteers via the internet;

- Feedback to participants via the internet.


| Velocity | Black, S1 | Black, S2 | Black, S3 | Black, S4 | Black, S5 | Grayish black, S1 | Grayish black, S2 | Grayish black, S3 | Grayish black, S4 | Grayish black, S5 |
|---|---|---|---|---|---|---|---|---|---|---|
| V1 | 78 | 78 | 79 | 77 | 110 | 78 | 77 | 77 | 77 | 106 |
| V2 | 77 | 78 | 78 | 77 | 94 | 78 | 77 | 78 | 77 | 82 |
| V3 | 77 | 77 | 77 | 78 | 86 | 78 | 77 | 78 | 78 | 80 |
| V4 | 77 | 78 | 77 | 78 | 81 | 78 | 77 | 78 | 78 | 79 |
| V5 | 77 | 79 | 78 | 78 | 78 | 78 | 77 | 77 | 79 | 78 |
| V6 | 79 | 79 | 80 | 79 | 77 | 80 | 80 | 79 | 80 | 78 |

| Velocity | Gray, S1 | Gray, S2 | Gray, S3 | Gray, S4 | Gray, S5 | Light gray, S1 | Light gray, S2 | Light gray, S3 | Light gray, S4 | Light gray, S5 |
|---|---|---|---|---|---|---|---|---|---|---|
| V1 | 77 | 77 | 77 | 77 | 91 | 77 | 76 | 77 | 76 | 93 |
| V2 | 78 | 77 | 77 | 77 | 82 | 78 | 77 | 76 | 77 | 76 |
| V3 | 77 | 77 | 77 | 78 | 78 | 78 | 77 | 77 | 77 | 76 |
| V4 | 77 | 78 | 78 | 78 | 77 | 77 | 77 | 77 | 77 | 77 |
| V5 | 78 | 77 | 76 | 77 | 78 | 77 | 76 | 78 | 77 | 77 |
| V6 | 79 | 77 | 81 | 79 | 79 | 77 | 76 | 78 | 76 | 77 |

| Scientific name | English name | L (cm) | S (cm) |
|---|---|---|---|
| Anas penelope | Wigeon | 48 | 80 |
| Anser indicus | Bar-headed goose | 75 | 150 |
| Cygnus olor | Mute swan | 150 | 220 |
| Numenius arquata | Eurasian curlew | 54 | - |
| Tringa totanus | Common redshank | 26 | - |
| Charadrius dubius | Little ringed plover | 16 | - |
| Larus occidentalis | Western gull | 60 | - |
| Ardea cinerea | Grey heron | 95 | 185 |

Number of pixels per meter, by number of horizontal pixels:

| R (m) | Width (m) | 320 | 640 | 800 | 1024 |
|---|---|---|---|---|---|
| 1 | 0.93 | 343.12 | 686.24 | 857.80 | 1097.99 |
| 10 | 9.33 | 34.31 | 68.62 | 85.78 | 109.80 |
| 50 | 46.63 | 6.86 | 13.72 | 17.16 | 21.96 |
| 100 | 93.26 | 3.43 | 6.86 | 8.58 | 10.98 |
| 200 | 186.52 | 1.72 | 3.43 | 4.29 | 5.49 |
| 500 | 466.31 | 0.69 | 1.37 | 1.72 | 2.20 |

Number of pixels per meter, by number of vertical pixels:

| R (m) | Height (m) | 240 | 480 | 600 | 768 |
|---|---|---|---|---|---|
| 1 | 1.24 | 193.01 | 386.01 | 482.51 | 617.62 |
| 10 | 12.43 | 19.30 | 38.60 | 48.25 | 61.76 |
| 50 | 62.17 | 3.86 | 7.72 | 9.65 | 12.35 |
| 100 | 124.35 | 1.93 | 3.86 | 4.83 | 6.18 |
| 200 | 248.70 | 0.97 | 1.93 | 2.41 | 3.09 |
| 500 | 621.74 | 0.39 | 0.77 | 0.97 | 1.24 |
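The pixel densities tabulated above follow from dividing the pixel count by the extent of the field of view at range R. The sketch below reproduces a few entries and estimates how many pixels a bird would span. The per-meter extents are inferred here from the R = 500 m rows rather than stated in the text, the function names are illustrative, and S is taken to be the wingspan, which is an assumption.

```python
# Field-of-view extent per meter of range, inferred from the R = 500 m rows
# of the table (an assumption; the source does not state these constants).
WIDTH_PER_M = 466.31 / 500.0    # horizontal extent (m) per meter of range
HEIGHT_PER_M = 621.74 / 500.0   # vertical extent (m) per meter of range


def pixels_per_meter(n_pixels: int, range_m: float, extent_per_m: float) -> float:
    """Pixels covering one meter of scene at the given range."""
    return n_pixels / (range_m * extent_per_m)


def pixels_on_target(size_m: float, n_pixels: int, range_m: float,
                     extent_per_m: float) -> float:
    """Approximate number of pixels spanned by an object of size_m meters."""
    return size_m * pixels_per_meter(n_pixels, range_m, extent_per_m)


# 640 horizontal pixels at R = 100 m (compare with the tabulated 6.86).
ppm = pixels_per_meter(640, 100.0, WIDTH_PER_M)

# Mute swan with S = 220 cm, seen at 100 m with 640 horizontal pixels.
swan_px = pixels_on_target(2.20, 640, 100.0, WIDTH_PER_M)
print(round(ppm, 2), round(swan_px, 1))
```

Running the same calculation for a smaller bird or a longer range shows quickly when a target shrinks to only a few pixels, which is where detection becomes doubtful.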

© 2010 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Verstraeten, W.W.; Vermeulen, B.; Stuckens, J.; Lhermitte, S.; Van der Zande, D.; Van Ranst, M.; Coppin, P. Webcams for Bird Detection and Monitoring: A Demonstration Study. Sensors 2010, 10, 3480-3503. https://doi.org/10.3390/s100403480