Abstract

Transfer learning seeks to improve the generalization performance of a target task by exploiting the knowledge learned from a related source task. Central questions include deciding what information one should transfer and when transfer can be beneficial. The latter question is related to the so-called negative transfer phenomenon, where the transferred source information actually reduces the generalization performance of the target task. This happens when the two tasks are sufficiently dissimilar. In this paper, we present a theoretical analysis of transfer learning by studying a pair of related perceptron learning tasks. Despite the simplicity of our model, it reproduces several key phenomena observed in practice. Specifically, our asymptotic analysis reveals a phase transition from negative transfer to positive transfer as the similarity of the two tasks moves past a well-defined threshold.

1. Introduction

Transfer learning [1,2,3,4,5] is a promising approach to improving the performance of machine learning tasks. It does so by exploiting the knowledge gained from a previously learned model, referred to as the source task, to improve the generalization performance of a related learning problem, referred to as the target task. One particular challenge in transfer learning is to avoid so-called negative transfer [6,7,8,9], where the transferred source information reduces the generalization performance of the target task. Recent literature [6,7,8,9] shows that negative transfer is closely related to the similarity between the source and target tasks. Transfer learning may hurt the generalization performance if the tasks are sufficiently dissimilar.

In this paper, we present a theoretical analysis of transfer learning by studying a pair of related perceptron learning tasks. Despite the simplicity of our model, it reproduces several key phenomena observed in practice. Specifically, the model reveals a sharp phase transition from negative transfer to positive transfer (i.e., when transfer becomes helpful) as a function of the model similarity.

1.1. Models and Learning Formulations

We start by describing the models for our theoretical study. We assume that the source task has a collection of training data , where is the source feature vector and denotes the label corresponding to . Following the standard teacher–student paradigm, we assume that the labels are generated according to the following model:

where is a scalar deterministic or probabilistic function and is an unknown source teacher vector.

Similar to the source task, the target task has access to a different collection of training data , generated according to

where is an unknown target teacher vector. We measure the similarity of the two tasks using

with indicating two uncorrelated tasks whereas means that the tasks are perfectly aligned.

For the source task, we learn the optimal weight vector by solving a convex optimization problem:

where is a regularization parameter and denotes some general loss function that can take one of the following two forms:

where is a convex function.

In this paper, we consider a common strategy in transfer learning [4], which consists of transferring the optimal source vector, i.e., , to the target task. One popular approach is to fix a (random) subset of the target weights to values of the corresponding optimal weights learned during the source training process [10]. In our learning model, this amounts to the following target learning formulation:

The vector is the optimal solution of the source learning problem, and is a diagonal matrix with diagonal entries drawn independently from a Bernoulli distribution with probability . Here, m denotes the number of transferred components. Thus, on average, we retain number of entries from the source optimal vector . In addition to a possible improvement in the generalization performance, this approach can considerably lower the computational complexity of the target learning task by reducing the number of free optimization variables. In what follows, we refer to as the transfer rate and call (6) the hard transfer formulation.
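The hard transfer procedure can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: it uses the squared loss with a ridge penalty, the objective scaling in `ridge_fit` is assumed rather than taken from (4) or (6), and the names `ridge_fit`, `hard_transfer_fit`, `delta` (transfer rate), and `lam` (regularization strength) are hypothetical.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Minimize 0.5 * ||y - X w||^2 + 0.5 * lam * ||w||^2 (assumed scaling)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

def hard_transfer_fit(X, y, w_source, delta, lam, rng):
    """Freeze a Bernoulli(delta) subset of coordinates at the source values
    and fit the remaining (free) coordinates by ridge regression."""
    p = X.shape[1]
    frozen = rng.random(p) < delta           # coordinates copied from the source
    free = ~frozen
    w = np.where(frozen, w_source, 0.0)
    if free.any():
        # Remove the contribution of the frozen coordinates, then fit the rest.
        residual = y - X[:, frozen] @ w_source[frozen]
        w[free] = ridge_fit(X[:, free], residual, lam)
    return w, frozen

# Synthetic demo data (not the paper's experimental setup).
rng = np.random.default_rng(0)
n, p = 120, 40
teacher = rng.standard_normal(p) / np.sqrt(p)
w_source = teacher + 0.05 * rng.standard_normal(p)   # stand-in for a trained source vector
X = rng.standard_normal((n, p))
y = X @ teacher
w_hard, frozen = hard_transfer_fit(X, y, w_source, delta=0.5, lam=0.1, rng=rng)
print("frozen coordinates:", int(frozen.sum()), "of", p)
```

In this sketch, `delta=0.0` reduces to standard ridge learning on the target data, and `delta=1.0` copies the source vector unchanged, so only the free coordinates enter the linear solve.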

Another popular approach in transfer learning is to search for target weight vectors in the vicinity of the optimal source weight vector . This can be achieved by adding a regularization term to the target formulation [11,12], which in our model becomes

with denoting some weighting matrix. In what follows, we refer to (8) as the soft transfer formulation, since it relaxes the strict equality in (6). In fact, the hard transfer in (6) is just a special case of the soft transfer formulation, if we set to be a diagonal matrix in which the diagonal entries are either (with probability ) or 0 (with probability ).
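For the squared loss, the soft transfer problem admits a closed-form solution, which the following sketch illustrates. The objective written in the docstring is an assumed form (its scaling may differ from (8)), and the names `soft_transfer_fit`, `Sigma`, and `lam` are hypothetical.

```python
import numpy as np

def soft_transfer_fit(X, y, w_source, Sigma, lam):
    """Minimize 0.5*||y - X w||^2 + 0.5*lam*||w||^2 + 0.5*||Sigma (w - w_source)||^2.

    Setting the gradient to zero gives the linear system
    (X^T X + lam*I + Sigma^T Sigma) w = X^T y + Sigma^T Sigma w_source.
    """
    p = X.shape[1]
    S2 = Sigma.T @ Sigma
    A = X.T @ X + lam * np.eye(p) + S2
    b = X.T @ y + S2 @ w_source
    return np.linalg.solve(A, b)

# Synthetic demo data (not the paper's experimental setup).
rng = np.random.default_rng(0)
n, p = 50, 20
teacher = rng.standard_normal(p) / np.sqrt(p)
w_source = teacher + 0.1 * rng.standard_normal(p)    # stand-in for a trained source vector
X = rng.standard_normal((n, p))
y = X @ teacher

w_soft = soft_transfer_fit(X, y, w_source, Sigma=np.eye(p), lam=0.1)
w_no_transfer = soft_transfer_fit(X, y, w_source, Sigma=np.zeros((p, p)), lam=0.1)
w_pinned = soft_transfer_fit(X, y, w_source, Sigma=1e4 * np.eye(p), lam=0.1)
print("distance to source (soft):  ", np.linalg.norm(w_soft - w_source))
print("distance to source (pinned):", np.linalg.norm(w_pinned - w_source))
```

A zero weighting matrix recovers standard ridge learning, while very large diagonal entries pin the corresponding coordinates to the source values, which mirrors how hard transfer arises as a limiting case of the soft formulation.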

To measure the performance of the transfer learning methods, we use the generalization error of the target task. Given a new data sample with , we assume that the target task predicts the corresponding label as

where is a predefined scalar function that might be different from . We then calculate the generalization error of the target task as

where the expectation is taken with respect to the new data . The variable allows us to write a more compact formula: is taken to be 0 for a regression problem and for a binary classification problem. Finally, we use the training error

to quantify the performance of the training process. Here, we measure the training error on the training data without regularization.

1.2. Main Contributions

The main contributions of this paper are two-fold, as summarized below:

1.2.1. Precise Asymptotic Analysis

We present a precise asymptotic analysis of the transfer learning approaches introduced in (6) and (8) for Gaussian feature vectors and under regularity conditions on the eigenvalue distribution of the weighting matrix . Specifically, we show that, as the dimensions grow to infinity with the ratios fixed, the generalization errors of the hard and soft formulations can be exactly characterized by the solutions of two low-dimensional deterministic optimization problems. (See Theorem 1 and Corollary 1 for details.) Our asymptotic predictions hold for any convex loss functions used in the training process, including the squared loss for regression problems and logistic loss commonly used for binary classification problems.

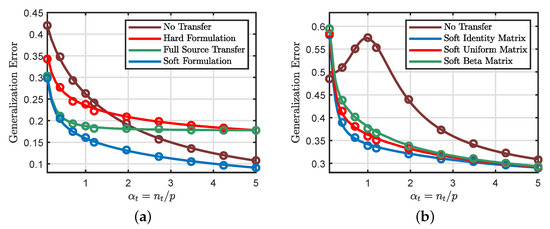

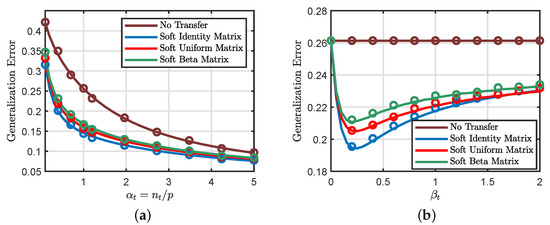

As illustrated in Figure 1, our theoretical predictions (drawn as solid lines in the figures) reach excellent agreement with the actual performance (shown as circles) of the transfer learning problem. Figure 1a considers a binary classification setting with logistic loss, and we plot the generalization errors of different transfer approaches as a function of the target data/dimension ratio . We can see that the hard transfer formulation (6) is only useful when is small. In fact, we encounter negative transfer (i.e., hard transfer performing worse than no transfer) when becomes sufficiently large. Moreover, the soft transfer formulation (8) seems to achieve more favorable generalization errors compared to the hard formulation. In Figure 1b, we consider a regression setting with a squared loss and explore the impact of different weighting schemes on the performance of the soft formulation. We can see that the soft formulation indeed considerably improves the generalization performance of the standard learning method (i.e., learning the target task without any knowledge transfer).

Figure 1.

Theoretical predictions vs. numerical simulations obtained by averaging over 100 independent Monte Carlo trials with dimension . (a) Binary classification with logistic loss. We take , , , and , where and . The functions and are both the sign function. For hard transfer, we set the transfer rate to be . Full source transfer corresponds to , whereas no transfer corresponds to . (b) Nonlinear regression using quadratic loss, where is the ReLU function and is the identity function. Soft identity, beta, and uniform matrices refer to different choices of the weighting matrix in (8). Soft Identity Matrix: is an identity matrix. Soft Uniform Matrix: is a random matrix with diagonal elements drawn from the uniform distribution. Soft Beta Matrix: is a random matrix with diagonal elements drawn from the beta distribution. We scale all diagonal elements of to have the same mean. We also take , , and .

1.2.2. Phase Transitions

Our asymptotic characterizations reveal a phase transition phenomenon in the hard transfer formulation. Let

be the optimal transfer rate that minimizes the generalization error of the target task. Clearly, corresponds to the negative transfer regime, where transferring the knowledge of the source task will actually hurt the performance of the target task. In contrast, signifies that we have entered the positive transfer regime, where transfer becomes helpful.

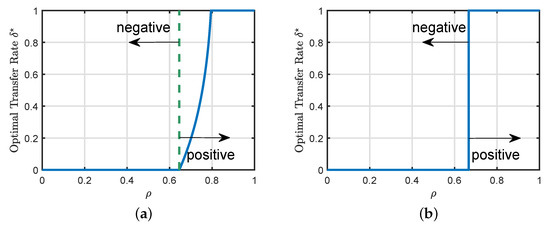

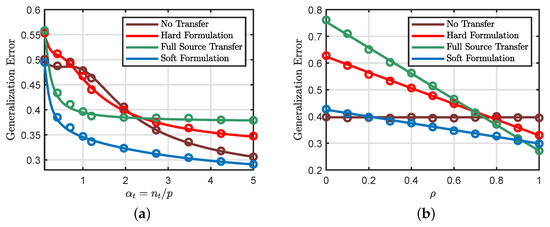

Figure 2a illustrates the phase transition from negative to positive transfer regimes in a binary classification setting, as the similarity between the two tasks moves past a critical threshold. Similar phase transition phenomena also appear in nonlinear regression, as shown in Figure 2b. Interestingly, for this setting, the optimal transfer rate jumps from to at the transition threshold.

Figure 2.

Phase transitions of the hard transfer formulation. When the similarity between the two tasks is small, we are in the negative transfer regime, where we should not transfer the knowledge from the source task. However, as moves past a critical threshold, we enter the positive transfer regime. (a) Binary classification with squared loss, with parameters , , and . Both and are the sign function. (b) Nonlinear regression with squared loss, with parameters , , and . is the ReLU function and is the identity function.

For general loss functions, the exact locations of the phase transitions can only be found numerically by solving the deterministic optimization problems in our asymptotic characterizations. For the special case of squared loss with no regularization, however, we are able to obtain the following simple analytical characterization for the phase transition threshold: We are in the positive transfer regime if and only if

where z is a standard Gaussian random variable. This result is shown in Proposition 1.

By the Cauchy–Schwarz inequality, . It follows that is an increasing function of and a decreasing function of . This property is consistent with our intuition: As we increase , the target task has more training data to work with, and thus, we should set a higher bar in terms of when to transfer knowledge. As we increase , the quality of the optimal source vector becomes better, in which case, we can start the transfer at a lower similarity level. In particular, when , we have and, thus, the inequality in (11) is never satisfied (because by definition). This indicates that no transfer should be done when the target task has more training data than the source task.

1.3. Related Work

The idea of transferring information between different domains or different tasks was first proposed in [1] and further developed in [2]. It has attracted significant interest in recent literature [4,5,6,7,8,9,11,12]. While most work focuses on the practical aspects of transfer learning, there have been several studies (e.g., [13,14]) that seek to provide an analytical understanding of transfer learning in simplified models. Our work is particularly related to [14], which considers a transfer learning model similar to ours but for the special case of linear regression. The analysis in this paper is more general as it considers arbitrary convex loss functions. We would also like to mention an interesting recent work that studies a different but related setting referred to as knowledge distillation [15].

In terms of technical tools, our asymptotic predictions are derived using the convex Gaussian min–max theorem (CGMT). The CGMT was first introduced in [16] and further developed in [17]. It extends a Gaussian comparison inequality first introduced in [18]. In particular, it uses convexity properties to show the equivalence between two Gaussian processes. The CGMT has been successfully used to analyze convex regression formulations [17,19,20] and convex classification formulations [21,22,23,24].

1.4. Organization

The rest of this paper is organized as follows. Section 2 states the technical assumptions under which our results are obtained. Section 3 provides an asymptotic characterization of the soft transfer formulation. A precise analysis of the hard transfer formulation is presented in Section 4. We provide remarks about our approach in Section 5. Our theoretical predictions hold for general convex loss functions. We specialize these results to the settings of nonlinear regression and binary classification in Section 6, where we also provide additional numerical results to validate our predictions. Section 7 provides detailed proofs of the technical statements introduced in Section 3 and Section 4. Section 8 concludes the paper. The Appendix provides additional technical details.

2. Technical Assumptions

The theoretical analysis of this paper is carried out under the following assumptions.

Assumption 1

(Gaussian Feature Vectors). The feature vectors and are drawn independently from a standard Gaussian distribution. The vector can be expressed as , where the vectors and are independent from the feature vectors, and they are generated independently from a uniform distribution on the unit sphere.
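One common way to realize a pair of teacher vectors with a prescribed similarity is sketched below. The decomposition used here, a source vector built as `rho` times the target vector plus `sqrt(1 - rho**2)` times an independent unit vector, is an assumption made for illustration, and the names `rho`, `unit_sphere_sample`, and `correlated_teachers` are hypothetical. In high dimensions, two independent vectors drawn uniformly from the unit sphere are nearly orthogonal, so the inner product of the two teachers concentrates around `rho`.

```python
import numpy as np

def unit_sphere_sample(p, rng):
    """Draw a vector uniformly from the unit sphere in R^p."""
    v = rng.standard_normal(p)
    return v / np.linalg.norm(v)

def correlated_teachers(p, rho, rng):
    """Return (source, target) teacher vectors whose inner product is close to rho.

    Assumed construction: source = rho * target + sqrt(1 - rho^2) * independent.
    """
    xi_t = unit_sphere_sample(p, rng)
    xi_r = unit_sphere_sample(p, rng)
    xi_s = rho * xi_t + np.sqrt(1.0 - rho**2) * xi_r
    return xi_s, xi_t

rng = np.random.default_rng(0)
xi_s, xi_t = correlated_teachers(p=5000, rho=0.7, rng=rng)
empirical_similarity = float(xi_s @ xi_t)
print(f"target similarity 0.7, empirical similarity {empirical_similarity:.3f}")
```

The deviation of the empirical similarity from `rho` is of order `1/sqrt(p)`, so it vanishes in the high-dimensional limit considered in this paper.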

Moreover, our results are valid in a high-dimensional asymptotic setting, where the dimensions p, , , and m grow to infinity at fixed ratios.

Assumption 2

(High-dimensional Asymptotic). The number of samples and the number of transferred components in hard transfer satisfy , , and , with , , and as .

The CGMT framework makes specific assumptions about the loss function and the feasibility sets. To guarantee these assumptions, this paper considers a family of loss functions that satisfy the following conditions. Note that the assumption is stated for the target task, but we assume that it is also valid for the source task.

Assumption 3

(Loss Function). If , the loss function defined in (5) is a proper convex function in . If , the loss function defined in (5) is a proper strongly convex function in , where the constant is a strong convexity parameter. In this case, we only consider the case when . Define a random function , where , with being a collection of independent standard normal random variables and ∼ denoting equality in distribution. Denote by the sub-differential set of . Then, for any constant , there exists a constant such that

Furthermore, we consider the following assumption to guarantee that the generalization error defined in (10) concentrates in the large system limit.

Assumption 4

(Regularity Conditions). The data-generating function is independent from the feature vectors. Moreover, the following conditions are satisfied.

- and are continuous almost everywhere in . For every and , we have and .

- For any compact interval , there exists a function such that Additionally, the function satisfies , where .

Finally, we introduce the following assumption to guarantee that the training and generalization errors of the soft formulation can be asymptotically characterized by deterministic optimization problems.

Assumption 5

(Weighting Matrix). Let , where Σ is the weighting matrix in the soft transfer formulation. Let and denote its two smallest eigenvalues. There exists a constant such that

Moreover, we assume that the empirical distribution of the eigenvalues of the matrix Λ converges weakly to a probability distribution .

The above assumptions are essential to show that the soft formulation in (8) concentrates in the large system limit. We provide more details about these assumptions in Appendix A.

3. Sharp Asymptotic Analysis of Soft Transfer Formulation

In this section, we study the asymptotic properties of the soft transfer formulation. Specifically, we provide a precise characterization of the training and generalization errors corresponding to (8).

The asymptotic performance of the source formulation defined in (4) has been studied in the literature [24]. In particular, it has been shown that the asymptotic limit of the source formulation in (4) can be quantified by the following deterministic optimization problem:

where and and are two independent standard Gaussian random variables. Furthermore, the function introduced in the scalar optimization problem (14) is the Moreau envelope function defined as

The expectation in (14) is taken over the random variables and .
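To make the Moreau envelope concrete, the following sketch evaluates it numerically for a scalar convex loss. It uses the standard definition, the minimum over z of loss(z) + (x - z)^2 / (2 tau), whose parametrization may differ from the one in (15); the label is folded into the loss function. The function name `moreau_envelope` and the search interval are hypothetical choices.

```python
def moreau_envelope(loss, x, tau, lo=-50.0, hi=50.0, iters=200):
    """Evaluate min_z loss(z) + (x - z)^2 / (2 * tau) by ternary search.

    Assumes `loss` is convex and the minimizer lies in [lo, hi].
    Returns the envelope value and the minimizer (the proximal point).
    """
    def objective(z):
        return loss(z) + (x - z) ** 2 / (2.0 * tau)

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) <= objective(m2):
            hi = m2
        else:
            lo = m1
    z_star = 0.5 * (lo + hi)
    return objective(z_star), z_star

y, x, tau = 1.0, 3.0, 2.0

# Squared loss: closed form (x - y)^2 / (2 * (1 + tau)).
value_sq, prox_sq = moreau_envelope(lambda z: 0.5 * (y - z) ** 2, x, tau)
print(value_sq, (x - y) ** 2 / (2.0 * (1.0 + tau)))

# Absolute (LAD) loss: the envelope is the Huber function of x - y.
value_lad, prox_lad = moreau_envelope(lambda z: abs(y - z), 5.0, tau)
print(value_lad, abs(5.0 - y) - tau / 2.0)
```

For the squared loss, the minimizer is `(tau * y + x) / (1 + tau)`, which gives a quick sanity check on the numerical search.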

In our work, we focus on the target problem with soft transfer, as formulated in (8). It turns out that the asymptotic performance of the target problem can also be characterized by a deterministic optimization problem:

where , and and are independent standard Gaussian random variables. Additionally, represents the minimum value of the random variable with distribution as defined in Assumption 5. In the formulation (16), the constants and are optimal solutions of the asymptotic formulation given in (14). Moreover, the functions and are defined as follows:

where the expectations are taken over the probability distribution defined in Assumption 5.

Theorem 1

(Precise Analysis of the Soft Transfer). Suppose that Assumptions 1–5 are satisfied. Then, the training error corresponding to the soft transfer formulation in (8) converges in probability as follows:

where denotes the minimum value achieved by the scalar formulation introduced in (16), and and are optimal solutions of the scalar formulation in (16). Moreover, the generalization error introduced in (10) corresponding to the soft transfer formulation converges in probability as follows:

where and are two jointly Gaussian random variables with zero mean and a covariance matrix given by

The proof of Theorem 1 is based on the CGMT framework [17] (Theorem 6.1). A detailed proof is provided in Section 7.3. The statements in Theorem 1 are valid for a general convex loss function and general learning models that can be expressed as in (1) and (2). The analysis in Section 7.3 shows that the deterministic problems in (14) and (16) are the asymptotic limits of the source and target formulations given in (4) and (8), respectively. Moreover, it shows that the deterministic problems (14) and (16) are strictly convex in the minimization variables. This implies the uniqueness of the optimal solutions of the minimization problems.

Remark 1.

The results of the theorem show that the training and generalization errors corresponding to the soft transfer formulation can be fully characterized using the optimal solutions of the scalar formulation in (16). Moreover, from its definition, (16) depends on the optimal solutions of the scalar formulation in (14) of the source task. This shows that the precise asymptotic performance of the soft transfer formulation can be characterized after solving two scalar deterministic problems.

4. Sharp Asymptotic Analysis of Hard Transfer Formulation

In this section, we study the asymptotic properties of the hard transfer formulation. We then use these predictions to rigorously prove the existence of phase transitions from negative to positive transfer.

4.1. Asymptotic Predictions

As mentioned earlier, the hard transfer formulation can be recovered from (8) as a special case where the eigenvalues of the matrix are with probability and 0 otherwise. Thus, we obtain the following result as a simple consequence of Theorem 1.

Corollary 1.

Suppose that Assumptions 1–4 are satisfied. Then, the asymptotic limit of the hard formulation defined in (6) is given by the following deterministic formulation:

Additionally, the training and generalization errors associated with the hard formulation converge in probability to the limits given in (17) and (18), respectively.

4.2. Phase Transitions

As illustrated in Figure 2, there is a phase transition phenomenon in the hard transfer formulation, where the problem moves from negative transfer to positive transfer as the similarity of the source and target tasks increases. For general loss functions, the exact location of the phase transition boundary can only be determined by numerically solving the scalar optimization problem in (19).

For the special case of squared loss, however, we are able to obtain analytical expressions. For the rest of this section, we restrict our discussions to the following special settings:

- (a)

- (b)

- (c)

- The data/dimension ratios and satisfy and .

We first consider a nonlinear regression task, where the function in the generative models (1) and (2) can be arbitrary and where the function in (9) is the identity function.

Proposition 1

The result of Proposition 1, for which the proof can be found in Section 7.4, shows that is the phase transition boundary separating the negative transfer regime from the positive transfer regime. When the similarity metric is , the optimal transfer ratio is , indicating that we should not transfer any source knowledge. Transfer becomes helpful only when moves past the threshold. Note that, for this particular model, there is also an interesting feature that the optimal jumps to 1 in the positive transfer phase, meaning that we should fully copy the source weight vector.

4.3. Sufficient Condition

Next, we consider a binary classification task, where the nonlinear functions and are both the sign function. In this part, we provide a sufficient condition for when the hard transfer is beneficial. Before stating our predictions, we need a few definitions related to the Moreau envelope function defined in (15). For simplicity of notation, we refer to the Moreau envelope function as . Based on [25], is differentiable in . We refer to its derivatives with respect to the first and second arguments as and , respectively. If is twice differentiable, we refer to its second derivative with respect to the first and second arguments as and , respectively. Additionally, we refer to its second derivative with respect to the first then the second arguments as .

We define , , and as the optimal solutions of the standard learning formulation (i.e., in (19)). Moreover, we define the constants and as follows:

where and are optimal solutions of the deterministic source formulation given in (14). Define the constants , , , and as follows:

where , and H and S are two independent standard Gaussian random variables. Now, define the constants , , , and as follows:

Finally, define the constants , , , and as follows:

Now, we are ready to state our sufficient condition.

Proposition 2

(Classification). Assume that the Moreau envelope function is twice continuously differentiable almost everywhere in and that the above expectations are all well-defined. Moreover, assume that both and are the sign function. Then,

where and are solutions to the following linear system of equations:

We prove this result at the end of Section 7. Note that Proposition 2 is valid for a general family of loss functions and general regularization strength . For instance, we can see that the results stated in Proposition 2 are valid for the squared loss and the least absolute deviation (LAD) loss, i.e.,

Unlike (20), the result in (22) only provides a sufficient condition for when the hard transfer is beneficial. Nevertheless, our numerical simulations show that the sufficient condition in (22) provides a good prediction of the phase transition boundary for the majority of parameter settings.

5. Remarks

5.1. Learning Formulations

Given that the target task predicts the new label with , it is more natural to consider loss functions satisfying the following form:

In this case, the convexity assumption is not necessarily satisfied since the loss function can be viewed as the composition of a convex function with a nonlinear function. To guarantee the convexity, we need additional assumptions on the function . Moreover, note that, once the convexity is guaranteed, the function can be absorbed by the loss function .

5.2. Transition from Negative to Positive Transfer

Our first simulation example in Figure 2 shows that the optimal transfer rate can be 1 while the similarity is still less than 1. Here, we provide an intuitive explanation of this behavior.

Given that the source and target feature vectors are generated from the same distribution, one can see that the source labels can be equivalently expressed as follows:

where is an additive noise caused by the mismatch between the source and target hidden vectors. Moreover, note that the noise strength depends on the similarity measure .

First, consider the case when the number of source samples is larger than the number of target samples (i.e., ). We can see that a large value of means that the source and target models are very closely related. Then, one can expect that the additional available data in the source task will be able to overcome the effects of noise in (25) for large values of . Specifically, it is expected in this regime that the source model will perform better than the standard learning formulation for values of close to 1. However, as we decrease the similarity , the source model carries little information about the target data. Then, the performance of the hard formulation is expected to be worse than that of the standard formulation for small values of . In this regime, the source information may hurt the generalization performance of the target task, and we should transfer only a portion of the source information (see Figure 2a). In some settings, the transition is sharp, which means that the source information is irrelevant for the target task when is smaller than a threshold (see Figure 2b).

Second, consider the case when the number of source samples is smaller than the number of target samples (i.e., ). Given the observation in (25), the performance of the standard method is expected to be better than the hard formulation for all possible values of in this regime (see Figure 7).

6. Additional Simulation Results

In this section, we provide additional simulation examples to confirm our asymptotic analysis and illustrate the phase transition phenomenon. In our experiments, we focus on the regression and classification models.

6.1. Model Assumptions

For the regression model, we assume that the source, target, and test data are generated according to

The data can be the training data of the source or target tasks. In this regression model, we assume that the function is the identity function, i.e., . Then, the generalization error corresponding to the soft formulation converges in probability as follows:

where c and v are defined as follows

where z is a standard Gaussian random variable and and are defined in Theorem 1. Additionally, the asymptotic limit of the generalization error corresponding to the hard formulation can be expressed in a similar fashion.

For the binary classification model, we assume that the source, target, and test data labels are binary and generated as follows:

where the data can be the training data of the source and target tasks. In this classification model, the objective is to predict the correct sign of any unseen sample . Then, we fix the function to be the sign function. Following Theorem 1, it can be easily shown that the generalization error corresponding to the soft formulation given in (8) converges in probability as follows:

Here, and are optimal solutions of the target scalar formulation given in (16). The generalization error corresponding to the hard formulation given in (6) can be expressed in a similar fashion.
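When both the labels and the predictions are given by the sign function and the features are standard Gaussian, the misclassification probability of a fixed weight vector depends only on the cosine of its angle with the teacher vector: it equals arccos(c)/π, where c is that cosine. This is a standard Gaussian identity rather than the expression of this paper, which is stated in terms of the optimal solutions of (16). The sketch below checks the identity by Monte Carlo in the noiseless case; the names `sign_mismatch_probability`, `w`, and `xi` are hypothetical.

```python
import numpy as np

def sign_mismatch_probability(corr):
    """P(sign(a^T w) != sign(a^T xi)) for standard Gaussian a, where corr is
    the cosine of the angle between w and xi."""
    return np.arccos(np.clip(corr, -1.0, 1.0)) / np.pi

rng = np.random.default_rng(0)
p, n_test = 20, 100_000

xi = rng.standard_normal(p)                  # stand-in teacher vector
w = xi + 0.8 * rng.standard_normal(p)        # stand-in learned weight vector
corr = (w @ xi) / (np.linalg.norm(w) * np.linalg.norm(xi))

A = rng.standard_normal((n_test, p))         # fresh test features
empirical = np.mean(np.sign(A @ w) != np.sign(A @ xi))
predicted = sign_mismatch_probability(corr)
print(f"empirical error {empirical:.4f} vs arccos(corr)/pi {predicted:.4f}")
```

The identity makes explicit why the classification error is invariant to the norm of the learned weight vector: only its direction relative to the teacher matters.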

6.2. Phase Transitions in the Hard Formulation

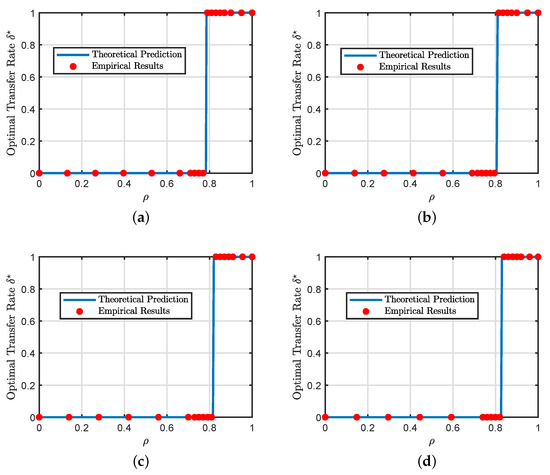

In Section 4, we presented analytical formulas for the phase transition phenomenon but only for the special case of squared loss with no regularization. The main purpose of this experiment, shown in Figure 3, is to demonstrate that the phase transition phenomenon still takes place in more general settings with different loss functions and regularization strengths.

Figure 3.

Additional illustrations of the phase transition phenomenon. (a) Regression (squared loss, , and ) (b) Regression (squared loss, , and ) (c) Binary classification (squared loss, , and ) (d) Binary classification (hinge loss, , and ). In all the experiments, we set the regularization strength to be . The blue line represents our theoretical predictions of the optimal transfer rate obtained by solving our asymptotic results in Section 4 for multiple values of . The empirical results are averaged over 100 independent Monte Carlo trials with .

6.3. Sufficient Condition for the Hard Formulation

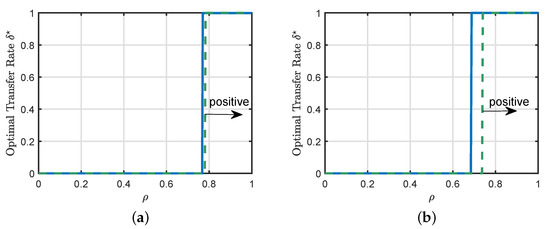

In Section 4, we presented a sufficient condition for positive transfer. This sufficient condition is valid for a general family of loss functions and a general regularization strength. The main purpose of this experiment, shown in Figure 4, is to illustrate the precision of the sufficient condition for two particular loss functions, i.e., the squared loss and LAD loss.

Figure 4.

Illustrations of the sufficient condition in Proposition 2. (a) Classification (squared loss, , and ) (b) Classification (LAD loss, , and ). In all the experiments, we set the regularization strength to be . The blue line represents our theoretical predictions of the optimal transfer rate obtained by solving our asymptotic results in Section 4 for multiple values of . The green line represents our sufficient condition for positive transfer stated in Proposition 2.

In all the cases shown in Figure 4, we can see that the transition from negative to positive transfer is a discontinuous jump from standard learning to full source transfer. Additionally, Figure 4a,b show that the sufficient condition summarized in Proposition 2 provides a good prediction of the phase transition boundary for the considered setting.

6.4. Soft Transfer: Impact of the Weighting Matrix and Regularization Strength

In this experiment, we empirically explore the impact of the weighting matrix on the generalization error corresponding to the soft formulation. We focus on the binary classification problem with logistic loss. The weighting matrix in (8) takes the following form:

where is a diagonal matrix generated in three different ways. (1) Soft Identity: is an identity matrix; (2) Soft Uniform: the diagonal entries of are drawn independently from the uniform distribution and then scaled to have their mean equal to 1; and (3) Soft Beta: similar to (2), but with the diagonal entries drawn from the beta distribution, followed by rescaling to the unit mean.

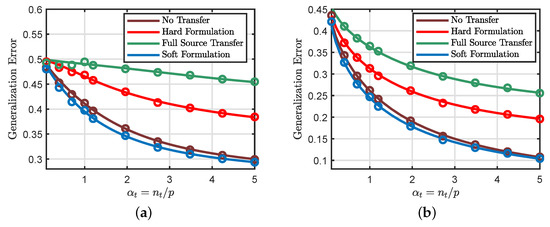

Figure 5a shows that the considered weighting matrix choices have similar generalization performances, with the identity matrix being slightly better than the other alternatives. Moreover, Figure 5b illustrates the effects of the parameter in (28) on the generalization performance. It points to the interesting possibility of “designing” the optimal weight matrix to minimize the generalization error.

Figure 5.

Continuous line: theoretical predictions. Circles: numerical simulations. (a) , , , and . (b) , , , and . In all the experiments, we consider the binary classification problem with the logistic loss function. The empirical results are averaged over 50 independent Monte Carlo trials, and we set .

6.5. Soft and Hard Transfer Comparison

In this simulation example, we consider the regression model and compare the performances of the hard and soft transfer formulations as functions of and .

Figure 6a shows that the soft formulation provides the best generalization performance for all values of . Moreover, we can see that the hard transfer formulation is only useful for small values of . Figure 6b shows that the performance of the soft and hard transfer formulations depends on the similarity between the source and target tasks. Specifically, the generalization performances of different transfer approaches all improve as we increase the similarity measure . We can also see that the full source transfer approach provides the lowest generalization error when the similarity measure is close to 1, while the soft transfer method leads to the best generalization performance at moderate values of the similarity measure. At very small values of , which means that the two tasks share little resemblance, the standard learning method (i.e., no transfer) is the best scheme to use.

Figure 6.

Continuous line: theoretical predictions. Circles: numerical simulations. (a) , , and . (b) , , and . In all the experiments, we consider the regression setting with a squared loss. The hard transfer formulation uses , and the soft transfer formulation uses an identity weighting matrix. The empirical results are averaged over 50 independent Monte Carlo trials and we set .

6.6. Effects of the Source Parameters

In the last simulation example, we consider the regression and classification models. We study the performance of the hard and soft transfer formulations when .

Figure 7a considers the regression model. It first shows that the soft transfer formulation provides a slightly better generalization performance compared to the standard method. This behavior can be explained by the fact that the soft formulation requires the target weight vector to be close and not necessarily equal to the source weight vector. Additionally, the source model carries some information about the target task.

Figure 7.

Continuous line: theoretical predictions. Circles: numerical simulations. (a) , , and . We consider the regression setting with a squared loss. (b) , , and . We consider the classification setting with a logistic loss. The hard transfer formulation uses , and the soft transfer formulation uses an identity weighting matrix. The empirical results are averaged over 60 independent Monte Carlo trials, and we set .

We can also see that the hard transfer approach is not beneficial when the number of source samples is smaller than the number of target samples. This result can be explained by the fact that the hard formulation restricts some entries in the target weight vector to be exactly equal to the corresponding entries in the source weight vector. Moreover, the source model is not perfectly aligned with the target model and is trained on less data than the target model (see Section 5.2).

The same behavior can be observed in Figure 7b, which considers the classification model.

7. Technical Details

In this section, we provide detailed proofs of Theorem 1 and Propositions 1 and 2. Specifically, we focus on analyzing the generalized formulation in (8) using the CGMT framework introduced in the following part.

7.1. Technical Tool: Convex Gaussian Min–Max Theorem

The CGMT provides an asymptotic equivalent formulation of primary optimization (PO) problems of the following form:

Specifically, the CGMT shows that the PO given in (29) is asymptotically equivalent to the following formulation:

referred to as the auxiliary optimization (AO) problem. Before showing the equivalence between PO and AO, the CGMT assumes that , , and ; that all have independent and identically distributed standard normal entries; that the feasibility sets and are convex and compact; and that the function is continuous convex-concave on . Moreover, the function is independent of the matrix . Under these assumptions, the CGMT [17] (Theorem 6.1) shows that, for any and , the following holds:

Additionally, the CGMT [17] (Theorem 6.1) provides the following conditions under which the optimal solutions of the PO and AO concentrate around the same set.

Theorem 2

(CGMT Framework). Consider an open set . Moreover, define the set . Let and be the optimal cost values of the AO formulation in (30) with feasibility sets and , respectively. Assume that the following properties are all satisfied:

- (1) There exists a constant ϕ such that the optimal cost converges in probability to ϕ as p goes to .

- (2) There exists a positive constant such that with probability going to 1 as .

Then, the following convergence in probability holds:

where and are the optimal cost and the optimal solution of the PO formulation in (29).

Theorem 2 allows us to analyze the generally easy AO problem to infer the asymptotic properties of the generally hard PO problem. Next, we use the CGMT to rigorously prove the technical results presented in Theorem 1.
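For orientation, the PO and AO pair is commonly written in the CGMT literature in the generic form sketched below. The symbols used here ($\mathbf{G}$, $\mathbf{g}$, $\mathbf{h}$, $\mathbf{w}$, $\mathbf{u}$, $\psi$, $\mathcal{S}_w$, and $\mathcal{S}_u$) are assumed notation and may differ from (29) and (30) in naming and in sign conventions.

```latex
\begin{align*}
\text{(PO)}\qquad \Phi(\mathbf{G}) &= \min_{\mathbf{w}\in\mathcal{S}_w}\ \max_{\mathbf{u}\in\mathcal{S}_u}\
  \mathbf{u}^\top \mathbf{G}\,\mathbf{w} + \psi(\mathbf{w},\mathbf{u}), \\
\text{(AO)}\qquad \phi(\mathbf{g},\mathbf{h}) &= \min_{\mathbf{w}\in\mathcal{S}_w}\ \max_{\mathbf{u}\in\mathcal{S}_u}\
  \|\mathbf{w}\|\,\mathbf{g}^\top \mathbf{u} - \|\mathbf{u}\|\,\mathbf{h}^\top \mathbf{w} + \psi(\mathbf{w},\mathbf{u}).
\end{align*}
```

In this form, the AO replaces the bilinear term in the Gaussian matrix $\mathbf{G}$ with two terms that are linear in the Gaussian vectors $\mathbf{g}$ and $\mathbf{h}$, which is what makes it amenable to a scalar reduction. Because $\mathbf{h}$ has a symmetric distribution, the sign in front of the $\mathbf{h}$ term does not affect the distribution of the optimal cost.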

7.2. Precise Analysis of the Source Formulation

The source formulation defined in (4) is well-studied in recent literature [26]. Specifically, it has been rigorously proven that the performance of the source formulation can be fully characterized after solving the following scalar formulation:

where , and and are two independent standard Gaussian random variables. The expectation in (32) is taken over the random variables and . Furthermore, the function introduced in the scalar optimization problem (32) is the Moreau envelope function defined in (15).
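As a reminder of how the Moreau envelope behaves, the sketch below evaluates it numerically and compares it with a closed form for the squared loss. It assumes the standard definition $\min_v f(v) + (x-v)^2/(2\tau)$; the exact parametrization in (15) may differ, and the function names are illustrative only.

```python
import numpy as np

def moreau_envelope(f, x, tau, half_width=20.0, num=400001):
    """Evaluate min_v f(v) + (x - v)^2 / (2 tau) by a dense grid search."""
    v = np.linspace(x - half_width, x + half_width, num)
    return float(np.min(f(v) + (x - v) ** 2 / (2.0 * tau)))

def moreau_squared_loss(x, y, tau):
    """Closed form of the envelope for f(v) = 0.5 * (y - v)^2."""
    return (x - y) ** 2 / (2.0 * (1.0 + tau))

y, x, tau = 1.0, -0.3, 0.7

# Squared loss: the grid search should agree with the closed form.
numeric_sq = moreau_envelope(lambda v: 0.5 * (y - v) ** 2, x, tau)
closed_sq = moreau_squared_loss(x, y, tau)

# Logistic loss with label y: no closed form, so only the numeric value is used.
logistic = lambda v: np.logaddexp(0.0, -y * v)
numeric_log = moreau_envelope(logistic, x, tau)

print(numeric_sq, closed_sq, numeric_log)
```

The envelope never exceeds the original function at the same point, since $v = x$ is always a feasible choice in the minimization.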

7.3. Precise Analysis of the Soft Transfer Approach

In this part, we provide a precise asymptotic analysis of the generalized transfer formulation given in (8). Specifically, we focus on analyzing the following formulation:

where is the optimal solution of the source formulation given in (4). Note that the vector is independent of the training data of the target task. For simplicity of notation, we denote by the training data of the target task. Here, we use the CGMT framework introduced in Section 7.1 to precisely analyze the above formulation.

7.3.1. Formulating the Auxiliary Optimization Problem

Our first objective is to rewrite the generalized formulation in the form of the PO problem given in (29). To this end, we introduce additional optimization variables. Specifically, the generalized formulation can be equivalently formulated as follows:

where the optimization vector is formed as and the data matrix is given by . Additionally, the function denotes the convex conjugate function of the loss function . First, observe that the CGMT framework assumes that the feasibility sets of the minimization and maximization problems are compact. Then, our next step is to show that the formulation given in (34) satisfies this assumption.

Lemma 1

(Primal-Dual Compactness). Assume that and are optimal solutions of the optimization problem in (34). Then, there exist two constants and such that the following convergence in probability holds:

A detailed proof of Lemma 1 is provided in Appendix B. The proof of the above result follows using Assumption 3 to prove the compactness of the optimal solution . Moreover, it uses the asymptotic results in [27] (Theorem 2.1), which provides the concentration properties of the minimum and maximum eigenvalues of random matrices. To show the compactness of the optimal dual vector , we use Assumption 3 and the result in [25] (Proposition 11.3), which provides the inversion rules for subgradient relations.
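The concentration of extreme eigenvalues invoked above can be illustrated with a quick simulation. The sketch assumes a matrix with independent standard Gaussian entries and the classical limits $1 \pm \sqrt{p/n}$ for the extreme singular values of the matrix scaled by $1/\sqrt{n}$; the dimensions are arbitrary choices and the scaling may differ from the one used in [27].

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 1200, 300                      # arbitrary sizes with p / n = 0.25
A = rng.standard_normal((n, p))

# Singular values of the normalized matrix A / sqrt(n).
s = np.linalg.svd(A / np.sqrt(n), compute_uv=False)
s_max, s_min = float(s.max()), float(s.min())

ratio = p / n
predicted_max = 1.0 + np.sqrt(ratio)  # asymptotic largest singular value
predicted_min = 1.0 - np.sqrt(ratio)  # asymptotic smallest singular value

print(s_max, predicted_max)
print(s_min, predicted_min)
```

Bounded extreme singular values of this kind are what allow the norm of the optimal solution to be controlled with probability going to one.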

The theoretical result in Lemma 1 shows that the optimization problem in (34) can be equivalently formulated with compact feasibility sets on events with probability going to one. Then, it suffices to study the constrained version of (34). Note that the data labels depend on the data matrix . Then, one can decompose the matrix as follows:

where the matrix denotes the projection matrix onto the space spanned by the vector and the matrix denotes the projection matrix onto the orthogonal complement of the space spanned by the vector . Note that we can express as follows without changing its statistics:

where and the components of the matrix are drawn independently from a standard Gaussian distribution and where and are independent. Here, (36) represents an equality in distribution. This means that the formulation in (34) can be expressed as follows:

where the set is defined as . Note that the formulation in (37) is in the form of the primary formulation given in (29). Here, the function is defined as follows:

One can easily see that the optimization problem in (37) has compact convex feasibility sets. Moreover, the function is continuous, convex–concave, and independent of the Gaussian matrix . This shows that the assumptions of the CGMT are all satisfied by the primary formulation in (37). Then, following the CGMT framework, the auxiliary formulation corresponding to our primary problem in (37) can be expressed as follows:

where and are two independent standard Gaussian vectors. The rest of the proof focuses on simplifying the obtained AO formulation and on studying its asymptotic properties.
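The projection-based decomposition of the data matrix used in this part can be checked numerically. In the sketch below, `A` stands for a Gaussian data matrix and `xi` for the vector that generates the labels; both names and the dimensions are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 50, 20
A = rng.standard_normal((n, p))       # Gaussian data matrix (assumed n x p)
xi = rng.standard_normal(p)           # hypothetical vector defining the labels

P = np.outer(xi, xi) / (xi @ xi)      # projection onto span{xi}
P_perp = np.eye(p) - P                # projection onto the orthogonal complement

A_par, A_perp = A @ P, A @ P_perp     # A = A P + A P_perp

# Replacing A P_perp by G P_perp, with G an independent Gaussian copy,
# leaves A @ xi (and hence the labels) unchanged.
G = rng.standard_normal((n, p))
A_tilde = A_par + G @ P_perp

print(np.allclose(A, A_par + A_perp), np.allclose(A_tilde @ xi, A @ xi))
```

Since the labels depend on the data only through `A @ xi`, the part of the matrix acting on the orthogonal complement can be treated as an independent Gaussian matrix, which is what brings the problem into the PO form.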

7.3.2. Simplifying the AO Problem of the Target Task

Here, we focus on simplifying the auxiliary formulation corresponding to the target task. We start our analysis by decomposing the target optimization vector as follows:

where is a free vector and is formed by an orthonormal basis orthogonal to the vector . Now, define the variable as follows: . Based on the result in Lemma 1 and the decomposition in (40), there exist , , and such that our auxiliary formulation can be asymptotically expressed in terms of the variables and as follows:

Here, we drop terms independent of the optimization variables and the matrix is defined as . Additionally, the feasibility set is defined as follows:

Here, the sequence of random variables and are defined as follows:

Next, we focus on simplifying the obtained auxiliary formulation. Our strategy is to solve over the direction of the optimization vector . This step requires an interchange between non-convex minimization and non-concave maximization. We can justify the interchange using the theoretical result in [17] (Lemma A.3). The main argument in [17] (Lemma A.3) is that the strong convexity of the primary formulation in (37) allows us to perform such an interchange in the corresponding auxiliary formulation. The optimization problem over the vector with fixed norm, i.e., , can be formulated as follows:

Here, we ignore constant terms independent of , and the matrix and the vector can be expressed as follows:

The optimization problem in (43) is non-convex given the norm equality constraint. It is well-studied in the literature [28] and is known as the trust region subproblem. Using the same analysis as in [20], the optimal cost value of the optimization problem (43) can be expressed in terms of a one-dimensional optimization problem as follows:

where is the minimum eigenvalue of the matrix , denoted by . This result can be seen by equivalently formulating the non-convex problem in (43) as follows:

Then, we show that the optimal satisfies a constraint that preserves the convexity over . This allows us to interchange the maximization and minimization and to solve over the vector . The above analysis shows that the AO formulation corresponding to our primary problem can be expressed as follows:

where the set has the same definition as the set except that we replace with . Here, the sequence of random functions , , , and can be expressed as follows:

Note that the formulation in (45) is obtained after dropping terms that converge in probability to zero. This simplification can be justified using a similar analysis to that in [20] (Lemma 3). The main idea in [20] (Lemma 3) is to show that both loss functions converge uniformly to the same limit.
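The reduction of the trust region subproblem to a one-dimensional problem can be sketched numerically. The example assumes that the problem takes the generic form of minimizing $x^\top A x + 2 b^\top x$ over $\|x\| = r$, for which the optimal cost equals the maximum over $\sigma > -\lambda_{\min}(A)$ of $-b^\top (A + \sigma I)^{-1} b - \sigma r^2$. The symbols, the scaling, and the function name are assumptions and need not match (43) and (44) exactly.

```python
import numpy as np

def sphere_qp_min(A, b, r, iters=200):
    """Minimum of x^T A x + 2 b^T x over ||x|| = r via the scalar dual.

    Assumes the generic ("easy") case in which b is not orthogonal to the
    eigenvector associated with the smallest eigenvalue of A.
    """
    lam, Q = np.linalg.eigh(A)        # eigenvalues in ascending order
    c = Q.T @ b
    lam_min = lam[0]

    def norm_x(sigma):
        # Norm of x(sigma) = -(A + sigma I)^{-1} b, decreasing in sigma.
        return np.sqrt(np.sum((c / (lam + sigma)) ** 2))

    lo = -lam_min + 1e-12
    step = 1.0
    while norm_x(-lam_min + step) > r:
        step *= 2.0
    hi = -lam_min + step
    for _ in range(iters):            # bisection on ||x(sigma)|| = r
        mid = 0.5 * (lo + hi)
        if norm_x(mid) > r:
            lo = mid
        else:
            hi = mid
    sigma = 0.5 * (lo + hi)
    value = -np.sum(c ** 2 / (lam + sigma)) - sigma * r ** 2
    return value, sigma

A = np.array([[2.0, 0.5], [0.5, -1.0]])   # indefinite, so the problem is non-convex
b = np.array([1.0, -0.5])
r = 1.5
dual_value, sigma_star = sphere_qp_min(A, b, r)

# Brute-force check on the circle of radius r.
theta = np.linspace(0.0, 2.0 * np.pi, 200001)
X = r * np.stack([np.cos(theta), np.sin(theta)])
brute_value = float(np.min(np.einsum("in,ij,jn->n", X, A, X) + 2.0 * b @ X))

print(dual_value, brute_value, sigma_star)
```

The scalar variable is restricted to values above the negative of the minimum eigenvalue so that the shifted matrix stays positive definite, which is the convexity-preserving constraint referred to in the text.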

Next, the objective is to simplify the obtained AO formulation over the optimization vector . Based on the property stated in [20] (Lemma 4), the optimization over the vector can be expressed as follows:

This result is valid on events with probability going to one as p goes to . Here, the function is the Moreau envelope function defined in (15). The proof of this property is omitted since it follows the same ideas as [20] (Lemma 4). The main idea in [20] (Lemma 4) is to use Assumption 3 to show that the optimal solution of the unconstrained version of the maximization problem is bounded asymptotically and then to use the property introduced in [25] (Example 11.26) to complete the proof. Now, our auxiliary formulation can be asymptotically simplified to a scalar optimization problem as follows:

where the functions , , and are defined as follows:

Note that the auxiliary formulation in (46) now has scalar optimization variables. Then, it remains to study its asymptotic properties. We refer to this problem as the target scalar formulation.

7.3.3. Asymptotic Analysis of the Target Scalar Formulation

In this part, we study the asymptotic properties of the target scalar formulation expressed in (46). We start our analysis by studying the asymptotic properties of the sequence of random functions , , , and as given in the following lemma.

Lemma 2

(Asymptotic Properties). First, the random variable converges in probability to , where is defined in Assumption 5. For any fixed , the following convergence in probability holds true:

Here, the deterministic functions , , , , and are defined as follows:

Moreover, the constants and are optimal solutions of the source asymptotic formulation defined in (32).

A detailed proof of Lemma 2 is provided in Appendix C. Now that we have obtained the asymptotic properties of the sequence of random variables, it remains to study the asymptotic properties of the optimal cost and optimal solution set of the scalar formulation in (46). To state our first asymptotic result, we define the following deterministic optimization problem:

where and are two independent standard Gaussian random variables and . Here, the function denotes the Moreau envelope function defined in (15) and the expectation is taken over the random variables and , and the possibly random function . Now, we are ready to state our asymptotic property of the cost function of (46).

Lemma 3

(Cost Function of the Target AO Formulation). Define as the loss function of the target scalar optimization problem given in (46). Additionally, define as the cost function of the deterministic formulation in (49). Then, the following convergence in probability holds true:

for any fixed feasible , , and .

The proof of the asymptotic property stated in Lemma 3 uses the asymptotic results stated in Lemma 2. Moreover, it uses the weak law of large numbers to show that the empirical mean of the Moreau envelope concentrates around its expected value. Based on Assumption 3, one can see that the following pointwise convergence is valid:

where H and S are independent standard Gaussian random variables and . The above property is valid for any , , and . Based on [25] (Theorem 2.26), the Moreau envelope function is convex and continuously differentiable with respect to . Combining this with [29] (Theorem 7.46), the above asymptotic function is continuous in . Then, using Lemma 2, the uniform convergence, and the continuity property, we conclude that the empirical average of the Moreau envelope converges in probability to the following function:

for any fixed feasible , , and . This completes the proof of Lemma 3.
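The law-of-large-numbers step can be illustrated with a small simulation. The sketch below assumes the squared loss, for which the Moreau envelope has the closed form $(x-y)^2/(2(1+\tau))$, and a simplified setting in which the envelope argument is $\alpha H$ and the label is $S$ itself. These choices, and the values of `alpha` and `tau`, are assumptions made only to obtain a closed-form expectation.

```python
import numpy as np

def moreau_sq(x, y, tau):
    """Moreau envelope of f(v) = 0.5 * (y - v)^2 evaluated at x."""
    return (x - y) ** 2 / (2.0 * (1.0 + tau))

rng = np.random.default_rng(4)
alpha, tau = 0.8, 0.5                 # placeholder values (assumptions)

# With H and S independent standard Gaussians, alpha*H - S ~ N(0, alpha^2 + 1).
expected = (alpha ** 2 + 1.0) / (2.0 * (1.0 + tau))

empirical_means = []
for n in (100, 10_000, 1_000_000):
    H = rng.standard_normal(n)
    S = rng.standard_normal(n)
    empirical_means.append(float(np.mean(moreau_sq(alpha * H, S, tau))))

print(empirical_means, expected)
```

The empirical averages approach the expectation as the number of samples grows, which mirrors the pointwise convergence used in the proof of Lemma 3.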

Before continuing our analysis, we provide the convexity properties of the cost function of the deterministic problem in (49) in the following lemma.

Lemma 4

(Strong Convexity). Define as the cost function of the optimization problem in (49). Then, is concave in the maximization variable for any fixed feasible . Moreover, define the function as follows:

Then, the function is strongly convex in the minimization variables .

The proof of Lemma 4 is provided in Appendix D. Now, we use these properties to show that the optimal solution set of the formulation in (46) converges in probability to the optimal solution set of the formulation in (49).

Lemma 5

(Consistency of the Target AO Formulation). Define and as the optimal set of of the optimization problems formulated in (46) and (49). Moreover, define and as the optimal cost values of the optimization problems formulated in (46) and (49). Then, the following convergence in probability holds true:

where denotes the deviation between the sets and and is defined as .

The stated result can be proven by first observing that the loss function corresponding to the deterministic formulation in (49) satisfies the following:

for any and any fixed . Combining this with the convergence result in Lemma 3, [17] (Lemma B.1), and [17] (Lemma B.2), we obtain the following asymptotic result:

Here, the results in [17] (Lemma B.1) and [17] (Lemma B.2) provide convergence properties of minimization problems over open sets. Note that, if , the supremum in the above convergence result occurs at . However, it can be checked that the above convergence result still holds. Based on Lemma 4, the cost function of the minimization problem in (49) is strongly convex in . Moreover, the feasibility set of the minimization problem is convex and compact. Additionally, the cost function of the minimization problem in (49) is continuous in the feasibility set. Then, using the results in [30] (Theorem II.1) and [31] (Theorem 2.1), we obtain the convergence properties stated in Lemma 5. Here, the results in [30] (Theorem II.1) and [31] (Theorem 2.1) provide uniform convergence and consistency properties of convex optimization problems.

Now that we have obtained the asymptotic problem, it remains to study the asymptotic properties of the training and generalization errors corresponding to the target formulation in (8).

7.3.4. Specialization to Hard Formulation

Before starting the analysis of the generalization error, we specialize our general analysis to the hard transfer formulation. First, note that implies that the hard transfer formulation is equivalent to the source formulation. Next, we assume that . To obtain the asymptotic limit of the hard formulation, we specialize the general results in (49) to the following probability distribution:

Note that the probability distribution in (55) satisfies Assumption 5. Then, the asymptotic limit of the soft formulation corresponding to the probability distribution , defined in (55), can be expressed as follows:

This shows that the asymptotic limit of the hard formulation is the deterministic problem (56).

7.3.5. Asymptotic Analysis of the Training and Generalization Errors

First, the generalization error corresponding to the target task is given by

where is an unseen target feature vector. Now, consider the following two random variables

Given and , the random variables and have a bivariate Gaussian distribution with zero mean vector and covariance matrix given as follows:

To precisely analyze the asymptotic behavior of the generalization error, it suffices to analyze the properties of the covariance matrix . Define the random variables and for the target task as follows:

where is defined in Section 7.3.2. Then, the covariance matrix given in (58) can be expressed as follows:

Hence, to study the asymptotic properties of the generalization error, it suffices to study the asymptotic properties of the random quantities and .
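To see why the covariance matrix determines the generalization error, consider the classification case in which both the data-generating function and the predictor are sign functions. For two zero-mean jointly Gaussian variables with correlation $\rho$, the probability of a sign mismatch is $\arccos(\rho)/\pi$. The sketch below checks this identity by simulation; the correlation value and the variances are arbitrary assumptions.

```python
import numpy as np

def sign_mismatch_probability(rho):
    """P(sign(X) != sign(Y)) for zero-mean jointly Gaussian X, Y with correlation rho."""
    return np.arccos(rho) / np.pi

rng = np.random.default_rng(3)
rho = 0.6
std_x, std_y = 1.0, 2.0               # the mismatch rate does not depend on the scales
cov = np.array([
    [std_x ** 2, rho * std_x * std_y],
    [rho * std_x * std_y, std_y ** 2],
])

samples = rng.multivariate_normal(np.zeros(2), cov, size=200_000)
empirical = float(np.mean(np.sign(samples[:, 0]) != np.sign(samples[:, 1])))
theory = float(sign_mismatch_probability(rho))

print(empirical, theory)
```

In this special case only the normalized correlation between the two variables matters, which is consistent with reducing the analysis to a small number of scalar quantities.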

Lemma 6

(Consistency of the Target Formulation). The random quantities and satisfy the following asymptotic properties:

where and are the optimal solutions of the deterministic formulation stated in (49).

To prove the above asymptotic result, we define and as follows:

where is the optimal solution of the auxiliary formulation in (39). Given the result in Lemma 5 and the analysis in Section 7.3.2 and Section 7.3.3, the convergence result in Lemma 5 is also satisfied by our auxiliary formulation in (39), i.e.,

The rest of the proof of the convergence result stated in Lemma 6 is based on the CGMT framework, i.e., Theorem 2. Specifically, it follows after showing that the assumptions in Theorem 2 are all satisfied. First, we define the set in Theorem 2 as follows:

where and are the optimal solutions of the deterministic formulation stated in (49). Note that the cost function of the problem (49) is strongly convex in the minimization variables. Based on the analysis in the previous sections, note that the feasibility sets of the problems defined in Theorem 2 are compact asymptotically. Moreover, the analysis in the previous sections shows that there exists a constant such that the optimal cost defined in Theorem 2 converges in probability to as p goes to . Additionally, the same analysis in the previous sections shows that there exists a constant such that the optimal cost defined in Theorem 2 converges in probability to as p goes to . The strong convexity property of the cost function of the optimization problem in (49) can then be used to show that there exists such that . This implies that the second assumption in Theorem 2 is satisfied for the considered set and any fixed . This then shows that the convergence results in Lemma 6 are all satisfied.

Note that the CGMT framework applied to prove Lemma 6 also shows that the optimal cost value of the soft target formulation in (8) converges in probability to the optimal cost value of the deterministic formulation given in (49). Combining this with the result in Lemma 6 shows the convergence property of the training error stated in (17). Now, it remains to show the convergence of the generalization error. It suffices to show that the generalization error defined in (57) is continuous in the quantities and . This follows based on Assumption 4 and the continuity under integral sign property [32]. This shows the convergence result in (18), which completes the proof of Theorem 1. Note that the above analysis of the soft target formulation in (8) is valid for any choice of and that satisfy the result in Lemma 1. One can ignore these bounds given the convexity properties of the deterministic formulation in (49). This leads to the scalar formulations introduced in (16) and (19).

7.4. Phase Transitions in Hard Formulation

In this part, we provide a rigorous proof of Proposition 1. Here, we consider the squared loss function. In this case, the deterministic source formulation given in (14) can be simplified as follows:

where the constants and are defined as and , , and is a standard Gaussian random variable. Additionally, the target scalar formulation given in (16) can be simplified as follows:

where the constants and are defined as and , , and is a standard Gaussian random variable. Under the conditions stated in Proposition 1, the source deterministic formulation given in (62) can be simplified as follows:

Note that one can easily solve for the variables and . Specifically, the optimal solutions of (64) can be expressed as follows:

Moreover, the target deterministic formulation given in (63) can be expressed as follows:

where and are given by

Before solving the optimization problem in (66), we consider the following change of variable:

Note that the above change of variable is valid since the formulation in (66) requires the left-hand side of (68) to be positive. Therefore, the formulation in (66) can be expressed in terms of instead of as follows:

Now, it can be easily checked that the above optimization problem can be solved over the variable to give the following formulation:

It is now clear that one can solve the problem in (69) in closed form. Moreover, it can be easily checked that the optimal solutions of the optimization problem (66) can be expressed as follows:

Then, the asymptotic limit of the generalization error corresponding to the hard formulation can be determined in closed form. Given that the source and target models in (1) and (2) use the same data-generating function, the constants , , , and are all equal. We express them as v and c in the rest of the proof.

Next, we assume that the function is the identity function. Based on the asymptotic result stated in Corollary 1, the asymptotic limit of the generalization error corresponding to the hard formulation can be expressed as follows:

It can be easily checked that the generalization error can be expressed as follows:

Note that the generalization error obtained above depends explicitly on . Now, it suffices to study the derivative of to find the properties of the optimal transfer rate that minimizes the generalization error. Note that the derivative can be expressed as follows:

This shows that the derivative of the generalization error has the same sign as the numerator. This means that the optimal transfer rate satisfies the following:

where is given by

It can be easily shown that the condition in (72) can be expressed as the one given in (20). This completes the proof of Proposition 1.

7.5. Sufficient Condition for the Hard Formulation

In this part, we provide a rigorous proof of Proposition 2. Suppose that the assumptions in Proposition 2 are all satisfied. Additionally, we assume that the function is the sign function. Based on the asymptotic result stated in Corollary 1, the asymptotic limit of the generalization error corresponding to the hard formulation can be expressed as follows:

where and are optimal solutions to the deterministic problem in (19) for fixed . A simple sufficient condition for positive transfer is when is decreasing at . This means that there exists some such that the transfer learning method introduced in (6) is better than the standard method when the following function increases at :

After computing the derivative of the function at zero, one can see that the transfer learning method introduced in (6) is better than the standard method when the following condition is true:

where and denote the optimal solutions of the standard learning formulation (i.e., in (19)). Additionally, and denote the derivative of the functions and at . The above analysis shows that it suffices to find the values of and to fully characterize the sufficient condition in (76). Before stating our analysis, we define and as follows:

where and are the optimal solutions of the deterministic source formulation given in (14).

Note that the optimal solutions of the deterministic formulation in (19) satisfy the following system of equations:

The derivative of the first equation at can be expressed as follows:

where , , and denote optimal solutions of the standard learning formulation (i.e., in (19)). This means that they are known. Moreover, , and are unknown and denote the derivative of the functions , , and at . Now, define the constants , , , and as follows:

This means that the equation in (78) can be expressed as follows:

Similarly, the derivative of the second equation at can be expressed as follows:

Now, define the constants , , , and as follows:

This means that the equation in (81) can be expressed as follows:

Moreover, the derivative of the third equation at can be expressed as follows:

We define the constants , , , and as follows:

Therefore, the equation in (84) can be expressed as follows:

The above analysis shows that the values of and can be determined after solving the following system of linear equations:

over the three unknowns , , and . This completes the proof of Proposition 2.

8. Conclusions

In this paper, we presented a precise characterization of the asymptotic properties of two simple transfer learning formulations. Specifically, our results show that the training and generalization errors corresponding to the considered transfer formulations converge to deterministic functions. These functions can be explicitly found by combining the solutions of two deterministic scalar optimization problems. Our simulation results validate our theoretical predictions and reveal the existence of a phase transition phenomenon in the hard transfer formulation. Specifically, they show that the hard transfer formulation moves from negative transfer to positive transfer when the similarity of the source and target tasks moves past a well-defined critical threshold.

Author Contributions

Conceptualization, O.D. and Y.M.L.; methodology, O.D. and Y.M.L.; software, O.D.; validation, O.D. and Y.M.L.; formal analysis, O.D. and Y.M.L.; investigation, O.D. and Y.M.L.; resources, O.D. and Y.M.L.; data curation, O.D.; writing—original draft preparation, O.D.; writing—review and editing, O.D. and Y.M.L.; visualization, O.D.; supervision, Y.M.L.; project administration, Y.M.L.; funding acquisition, Y.M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Harvard FAS Dean’s Fund for Promising Scholarship and by the US National Science Foundation under grants CCF-1718698 and CCF-1910410.

Institutional Review Board Statement.

Not applicable

Informed Consent Statement.

Not applicable

Data Availability Statement.

Not applicable

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Technical Assumptions

Note that Assumption 1 is essential to show that the soft formulation in (4) concentrates in the large system limit. It also guarantees that the vectors and have correlations equal to , asymptotically. This is aligned with the definition in (3). Assumption 4 is also introduced to guarantee that the generalization error concentrates in the large system limit. It is satisfied by popular regression and classification models. For instance, observe that the conditions in Assumption 4 are all satisfied by the regression model considering . Moreover, they are satisfied by the binary classification model considering .

The analysis presented in this paper mostly focuses on regularized transfer learning formulations (i.e., ). The convexity properties in Assumption 3 are essential to apply the CGMT framework. Moreover, the properties in (12) are used to guarantee the compactness assumptions in the CGMT framework (see Theorem 2). In this appendix, we check the validity of Assumption 3 using popular loss functions, i.e., squared loss for regression tasks and logistic and hinge losses for binary classification tasks. To this end, assume that C is an arbitrary fixed positive constant.

- Squared loss: It is easy to see that the squared loss is a proper strongly convex function in , where 1 is a strong convexity parameter. Moreover, and its sub-differential set can be expressed as follows: where the vector is formed by the concatenation of . Then, there exists such that with probability going to 1 as p grows to . The inequality follows using the regularity condition in Assumption 4 and the weak law of large numbers. Then, the squared loss satisfies Assumption 3 for any .

- Logistic loss: Now, we consider the logistic loss applied to a binary classification model (i.e., ). Note that the logistic loss is a proper convex function in . Moreover, and its sub-differential set are given by First, observe that the loss satisfies the following inequality: This means that there exists such that the following inequality is valid: Additionally, the following results hold true: This means that there exists such that the following inequality is valid: Then, there exists a universal constant such that Assumption 3 is satisfied for the logistic loss for any .

- Hinge loss: Finally, we consider the hinge loss applied to a binary classification model (i.e., ). It is clear that the hinge loss is a proper convex function in . Moreover, is given by . Following [33], the sub-differential set can be expressed as follows: where is a diagonal matrix with diagonal entries . Note that the loss function satisfies the following inequality: This means that there exists such that the following inequality is valid: Moreover, the result in (A8) shows that any element in the sub-differential set satisfies the following: This means that there exists such that the following inequality is valid: Then, there exists a universal constant such that Assumption 3 is satisfied for the hinge loss for any .
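The kind of properties checked for the three losses above can be explored numerically. The sketch below uses the per-sample forms $\tfrac{1}{2}(y-z)^2$, $\log(1+e^{-yz})$, and $\max(0, 1-yz)$, which are assumptions about the exact scaling used in the paper. It verifies midpoint convexity, linear growth bounds for the two classification losses, and the boundedness of their (sub)gradients; the specific bounds tested are illustrative and are not claimed to be the inequalities of this appendix.

```python
import numpy as np

def squared_loss(y, z):
    return 0.5 * (y - z) ** 2

def logistic_loss(y, z):
    return np.logaddexp(0.0, -y * z)          # stable log(1 + exp(-y z))

def hinge_loss(y, z):
    return np.maximum(0.0, 1.0 - y * z)

def logistic_grad(y, z):
    return -y / (1.0 + np.exp(y * z))          # derivative with respect to z

def hinge_subgrad(y, z):
    return np.where(y * z < 1.0, -y, 0.0)      # one valid subgradient in z

rng = np.random.default_rng(5)
z1, z2 = rng.uniform(-10, 10, 1000), rng.uniform(-10, 10, 1000)
y = rng.choice([-1.0, 1.0], size=1000)

# Midpoint convexity: f((z1 + z2) / 2) <= (f(z1) + f(z2)) / 2.
convex_ok = all(
    np.all(f(y, 0.5 * (z1 + z2)) <= 0.5 * (f(y, z1) + f(y, z2)) + 1e-12)
    for f in (squared_loss, logistic_loss, hinge_loss)
)

# Linear growth of the classification losses and bounded (sub)gradients.
growth_ok = bool(
    np.all(logistic_loss(y, z1) <= np.log(2.0) + np.abs(z1))
    and np.all(hinge_loss(y, z1) <= 1.0 + np.abs(z1))
)
grad_ok = bool(
    np.all(np.abs(logistic_grad(y, z1)) <= 1.0)
    and np.all(np.abs(hinge_subgrad(y, z1)) <= 1.0)
)
print(convex_ok, growth_ok, grad_ok)
```

Bounded (sub)gradients are what keep the dual variables bounded for the logistic and hinge losses, whereas the squared loss relies on its strong convexity instead.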

Appendix B. Proof of Lemma 1

Appendix B.1. Primal Compactness

We start our analysis by assuming that . We first consider the compactness of the source problem given in (4). Note that the formulation in (4) has a unique optimal solution. Assume that is the unique optimal solution of the optimization problem given in (4). The analysis in [20] (Lemma 1) can be used to prove that there exists such that the following inequality is valid:

with probability going to one as . Moreover, observe that the formulation in (33) has a unique optimal solution. Assume that is the unique optimal solution of the optimization problem given in (33). Assumption 3 supposes that the loss function is proper. Then, we can conclude that there exists such that

Now, we define as the optimal objective value of the formulation in (33). Then, we can see that there exists such that

Given that is a feasible solution in the formulation given in (33), we obtain the following inequality:

Based on [27] (Theorem 2.1), the following convergence in probability holds:

where the matrix is formed by the concatenation of vectors . Then, there exists such that the following inequality is valid:

with probability going to one as . Combining this with the assumption in (12), we see that there exists such that the following inequality is valid:

Given that and the result in (A13), we conclude that there exists such that the following holds:

with probability going to one as .

Now, we consider the case when . Define , , and as the cost function, the optimal cost value, and the optimal solution of the formulation in (4). Moreover, define , , and as the cost function, the optimal cost value, and the optimal solution of the formulation in (33). Note that the loss function is strongly convex with a strong convexity parameter . Then, for any , the following property is valid:

This means that, for any , the following property is valid:

where n can be the number of samples of the source task or target task and are the labels of the source task or target task. Given the convexity of the norm, we obtain the following inequality:

where can be the cost value of the source task or target task formulations. Now, we focus on the source formulation. Take and . Moreover, see that the loss function is proper. Then, there exists such that

Given the assumption in (12), , , and the analysis in [27] (Theorem 2.1), there exists such that

Now, we focus on the target task. Take and . Moreover, see that the loss function is proper. Then, there exists such that

Given the assumption in (12), the result in (A25), , , and the analysis in [27] (Theorem 2.1), there exists such that

This completes the first part of the proof of Lemma 1.
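The second case above relies on strong convexity of the loss. As a minimal illustration, the sketch below checks the standard definition numerically: a function f is strongly convex with parameter mu if, for all x, z and theta in [0, 1], f(theta x + (1 - theta) z) <= theta f(x) + (1 - theta) f(z) - (mu / 2) theta (1 - theta) ||x - z||^2. The squared loss, the added logistic term, the value `mu = 1`, and all names are assumptions chosen for illustration and are not taken from the proof.

```python
import numpy as np

def strong_convexity_gap(f, x, z, theta, mu):
    """Slack in the mu-strong-convexity inequality; nonnegative if it holds."""
    mix = theta * x + (1.0 - theta) * z
    chord = theta * f(x) + (1.0 - theta) * f(z)
    penalty = 0.5 * mu * theta * (1.0 - theta) * np.sum((x - z) ** 2)
    return chord - penalty - f(mix)

rng = np.random.default_rng(0)
y = rng.standard_normal(5)  # hypothetical targets

def squared_loss(v):
    """0.5 * ||y - v||^2, strongly convex with parameter 1 (gap is zero)."""
    return 0.5 * np.sum((y - v) ** 2)

def squared_plus_logistic(v):
    """Adding a convex term keeps the strong convexity parameter at least 1."""
    return squared_loss(v) + np.sum(np.logaddexp(0.0, -y * v))

gaps = []
for _ in range(1000):
    x, z = rng.standard_normal(5), rng.standard_normal(5)
    theta = rng.uniform()
    gaps.append(strong_convexity_gap(squared_loss, x, z, theta, mu=1.0))
    gaps.append(strong_convexity_gap(squared_plus_logistic, x, z, theta, mu=1.0))

min_gap = min(gaps)
print(min_gap >= -1e-9)
```

For the pure quadratic the inequality holds with equality, so the computed gap is zero up to rounding; the tolerance accounts for that.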

Appendix B.2. Dual Compactness

The analysis in Appendix B.1 shows that the formulation in (34) can be equivalently formulated, where the primal feasibility set is given by

where is a sufficiently large constant that satisfies the analysis in Appendix B.1. Now, define as the optimal solution of the formulation in (34). Additionally, define the function as . We can see that the optimal vector solves the following maximization problem:

where the data matrix . Now, we denote by the sub-differential set of the function evaluated at . Therefore, the solution of the above maximization problem satisfies the following condition:

Now, we use the result in [25] (Proposition 11.3) to show that the condition in (A29) can be equivalently expressed as follows:

where the loss function based on [25] (Proposition 11.22). Note that the introduced constraint in (A28) is satisfied. Moreover, the analysis presented in (A17) shows that there exists such that the following inequality holds:

with probability going to one as p goes to infinity. Now, we use the assumption in (12) to conclude that there exists such that the following inequality holds:

with probability going to one as p goes to infinity. This completes the proof of Lemma 1.
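For orientation, the step from the sub-differential optimality condition to its equivalent form is of the type given by the inversion rule for subgradients of convex functions. We state the generic rule below for a proper, lower semicontinuous, convex function $f$ with convex conjugate $f^{*}$; the symbols $f$, $\bar{x}$, and $\bar{v}$ are generic placeholders introduced here, and we assume, without reproducing the original displays, that this is the rule being invoked from [25].

```latex
\bar{v} \in \partial f(\bar{x})
\;\Longleftrightarrow\;
\bar{x} \in \partial f^{*}(\bar{v})
\;\Longleftrightarrow\;
f(\bar{x}) + f^{*}(\bar{v}) = \langle \bar{v}, \bar{x} \rangle,
\qquad \text{where} \quad
f^{*}(v) = \sup_{x} \bigl\{ \langle v, x \rangle - f(x) \bigr\}.
```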

Appendix C. Proof of Lemma 2

To prove the convergence properties stated in Lemma 2, we show first that they are valid for the auxiliary formulation corresponding to the source problem.

Appendix C.1. Auxiliary Convergence

Note that the analysis presented in Section 7 is also valid for the source problem. This is because the formulation in (8) is equivalent to the source problem in (4) if is the all-zero matrix and we use the source training data. Then, we can see that the optimal solution of the auxiliary formulation corresponding to the source problem, denoted by , can be expressed as follows:

where and has independent standard Gaussian components. Here, is formed by an orthonormal basis orthogonal to the vector . Additionally, our analysis in Section 7 shows that the following convergence in probability holds:

Here, and are the optimal solutions of the asymptotic limit of the source formulation defined in (14).

Note that can be expressed as follows:

Using the eigenvalue interlacing theorem, one can see that

Then, using the assumption in (13), we can see that the random variable converges in probability to , where is defined in Assumption 5. Now, we study the properties of the remaining functions using the optimal solution of the auxiliary formulation defined in (A33), i.e., , instead of . For instance, we first study the random sequence to infer the asymptotic properties of .
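The eigenvalue interlacing theorem used above can be illustrated numerically. The sketch below takes a random symmetric matrix `A`, builds an orthonormal basis `B` of the subspace orthogonal to a vector `xi`, and checks that the eigenvalues of the compression `B.T @ A @ B` interlace those of `A`. The matrix `A`, the vector `xi`, and the dimension `p = 6` are placeholders chosen for illustration; they are not the quantities of the proof.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 6

# Random symmetric matrix standing in for the matrix of interest.
G = rng.standard_normal((p, p))
A = (G + G.T) / 2.0

# Orthonormal basis of the orthogonal complement of xi: in a complete QR
# factorization of the column vector xi, the first column of Q is parallel
# to xi and the remaining p - 1 columns span its orthogonal complement.
xi = rng.standard_normal(p)
Q, _ = np.linalg.qr(xi.reshape(-1, 1), mode="complete")
B = Q[:, 1:]

# Compression of A onto the (p - 1)-dimensional subspace.
M = B.T @ A @ B

a = np.linalg.eigvalsh(A)  # ascending, length p
m = np.linalg.eigvalsh(M)  # ascending, length p - 1

# Interlacing: a[i] <= m[i] <= a[i + 1] for every i.
tol = 1e-10
interlaced = bool(np.all(a[:-1] <= m + tol) and np.all(m <= a[1:] + tol))
orthogonality_error = float(np.abs(B.T @ xi).max())

print(interlaced, orthogonality_error < 1e-10)
```

In particular, the extreme eigenvalues of the compressed matrix are sandwiched between those of the full matrix, which is the kind of control needed to transfer a spectral assumption from one matrix to the other.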

First, fix . Then, based on the convergence of and [34] (Proposition 3), the sequence of random functions converges in probability as follows:

Now, we express as , where . This means that the following convergence in probability holds true:

for any . Note that the functions and are both convex and continuous in the variable x in the set . Then, based on [30] (Theorem II.1), the convergence in (A38) is uniform in the variable x in the compact set . Now, note that converges in probability to . Therefore, we obtain the following convergence in probability:

valid for any fixed . Using the block matrix inversion lemma, the function can be expressed as follows:

Therefore, we obtain the following expression:

Then, using the theoretical results stated in [34] (Proposition 3), the functions converge in probability as follows:

Combine this with the above analysis to obtain the following convergence in probability:

valid for any . Based on the result in (A33), the sequence of random functions converges in probability to the following function:

Combine this with the above analysis to obtain the following convergence in probability:

valid for any . Using the same analysis and based on (A33) and (A34), one can see that the sequence of random functions converges in probability to the following function:

Combine this with the above analysis to obtain the following convergence in probability:

valid for any . The above analysis shows that the asymptotic properties stated in Lemma 2 are valid for the AO formulation corresponding to the source problem. Now, it remains to show that these properties also hold for the primary formulation.
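The block matrix inversion lemma invoked in this appendix can be checked numerically. The sketch below partitions a symmetric positive definite matrix into a leading block `A`, a border vector `b`, and a scalar `c`, and rebuilds the inverse from the Schur complement `s = c - b^T A^{-1} b`. The partition into a single bordering row and column, the random test matrix, and all names are our assumptions for illustration; the proof may use a different partition.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 4

# Symmetric positive definite test matrix of size (n + 1) x (n + 1).
G = rng.standard_normal((n + 1, n + 1))
M = G @ G.T + np.eye(n + 1)

# Partition M = [[A, b], [b^T, c]].
A = M[:n, :n]
b = M[:n, n]
c = M[n, n]

A_inv = np.linalg.inv(A)
A_inv_b = A_inv @ b
s = c - b @ A_inv_b  # scalar Schur complement of A in M

# Block inversion formula (A is symmetric, so A^{-1} b b^T A^{-1} is an outer product).
top_left = A_inv + np.outer(A_inv_b, A_inv_b) / s
top_right = -A_inv_b / s
block_inv = np.block([
    [top_left, top_right[:, None]],
    [top_right[None, :], np.array([[1.0 / s]])],
])

max_error = float(np.abs(block_inv - np.linalg.inv(M)).max())
print(max_error < 1e-8)
```

The bottom-right entry `1 / s` shows how a single diagonal entry of the inverse depends on the remaining block only through a quadratic form in `A^{-1}`.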

Appendix C.2. Primary Convergence

Here, we assume that . The case when can be handled similarly. Now, we show that the convergence properties proved above are also valid for the primary problem. To this end, we show that all the assumptions in Theorem 2 are satisfied. We start our proof by defining the following open set:

where K is defined as follows:

Now, we consider the feasibility set , where is defined in (41). Based on the analysis of the generalized target formulation in Section 7.3.2, one can see that the AO formulation corresponding to the source formulation with the set can be asymptotically expressed as follows:

Here, the feasibility set is defined in Section 7.3.2 and the feasibility set is given by

This follows based on the decomposition in (40) and where and . Note that the optimization problem given in can be equivalently formulated as follows:

Here, we replace the feasibility set by the feasibility set defined as follows:

where . This follows since the first set in satisfies the condition in the set . Now, assume that is the optimal cost value of the optimization problem and define the function as follows:

in the set . Based on the max–min inequality [35], the function can be lower bounded by the following function:

This is valid for any . Moreover, note that the following inequality holds true:

for any . Following the generalized analysis in Section 7.3.2, one can see that the auxiliary problem corresponding to the source formulation can be expressed as follows:

This means that the function can be lower bounded by the cost function of the minimization problem formulated in (A50) denoted by , i.e.,

Here, both functions are defined in the feasibility set . Now, define as the optimal cost value of the auxiliary optimization problem corresponding to the source formulation defined in Section 7.3.1. Note that the loss function is strongly convex in the variables with strong convexity parameter . This means that, for any , and , we have the following inequality:

where and . Take as , which represents the optimal solution of the optimization problem (A50). Then, the inequality in (A52) implies the following inequality:

This is valid for any in the set . Now, taking and the minimum over in the set on both sides, we obtain the following inequality:

Based on the above analysis, note that the following inequality also holds true:

Then, to verify the assumption of [17] (Theorem 6.1), it remains to show that there exists such that the following inequality holds:

with probability going to 1 as . Note that any element in the set satisfies the following inequality:

Based on the analysis in Appendix C.1, we have the following convergence in probability:

This means that there exists such that any element in the set satisfies the following inequality:

with probability going to 1 as . Combining this with Assumption 5 and the consistency result stated in (A34) shows that there exists such that the following inequality holds:

with probability going to 1 as . This also proves that there exists such that the following inequality holds:

with probability going to 1 as . This completes the verification of the assumptions in Theorem 2. This means that the optimal solution of the primary problem belongs to the set on events with probability going to 1 as . Since the choice of is arbitrary, we obtain the following asymptotic result:

where is the optimal solution of the source problem (4). Following the same analysis, one can also show the convergence properties stated in Lemma 2.
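The lower bound obtained above from the max–min inequality [35] rests on a fact that holds for any function of two variables: the maximum over y of the minimum over x never exceeds the minimum over x of the maximum over y. A minimal sketch on a finite grid follows; the random table `F` is a stand-in for the values f(x_i, y_j) and is not the cost function of the proof.

```python
import numpy as np

rng = np.random.default_rng(2)

# F[i, j] plays the role of f(x_i, y_j) on a finite grid of candidates.
F = rng.standard_normal((50, 40))

# max over y (columns) of the min over x (rows).
max_min = float(F.min(axis=0).max())

# min over x (rows) of the max over y (columns).
min_max = float(F.max(axis=1).min())

print(max_min <= min_max)
```

No convexity is needed for this direction; equality, in contrast, requires additional structure such as a convex-concave cost.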

Appendix D. Proof of Lemma 4

Here, we assume that . The case when can be handled similarly. The cost function of the optimization problem (49) can be expressed as follows:

Note that the function can be expressed as follows:

Here, the functions and are defined as follows:

Based on Assumption 5, the functions and are twice continuously differentiable in the feasibility set. We start our analysis by showing that the function is concave in the variable for fixed feasible . First, note that the function is concave in the feasibility set. Now, define the function as follows:

Then, we can see that the second derivative of the function can be expressed as follows:

Here, the first and second derivatives of the function can be expressed as follows:

Then, using the Cauchy–Schwarz inequality, one can see that the second derivative of the function is negative. This implies the concavity of the function . Therefore, using the properties in [35] (Section 3.2), the function is concave in the variable .

Now, we focus on proving the strong convexity properties. Define the function as follows:

Note that the term is strongly convex in the variables . Then, to prove our property it suffices to show that the following function is jointly convex in the variables in the feasibility set:

Note that the function can also be expressed as follows:

Here, the feasibility set of the variable is bounded given that the optimal satisfies . It can be easily seen that the cost function of the optimization problem in (A68) is convex in and concave in . Then, using the result in [36], the function can also be expressed as follows:

Then, to prove our property, it suffices to show that the cost function of the above problem is jointly convex in the variables . Using the positivity of the second derivative, it is easy to see that the function is convex. Now, using the analysis below Equation (161) in [20] (Appendix H), we can see that the remaining functions are jointly convex in the variables . We omit these steps since they are similar to the approach employed in [20] (Appendix H). This shows that the function is strongly convex in the variables .
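The exchange of the minimization and the maximization used above is possible because the cost is convex in one variable and concave in the other over suitable sets. As a toy illustration only, the sketch below evaluates the convex-concave function f(x, y) = x^2 + xy - y^2 on a grid and compares the two orders of optimization. This function, the grid, and the names are hypothetical choices of ours, not the cost function of the optimization problem in (A68).

```python
import numpy as np

# Symmetric grid on [-1, 1] that contains 0 exactly.
grid = np.arange(-100, 101) / 100.0
X = grid[:, None]  # minimization variable (rows)
Y = grid[None, :]  # maximization variable (columns)

# Toy cost: convex in x for fixed y, concave in y for fixed x.
F = X ** 2 + X * Y - Y ** 2

min_max = float(F.max(axis=1).min())  # min over x of max over y
max_min = float(F.min(axis=0).max())  # max over y of min over x

print(abs(min_max - max_min) < 1e-12)
```

Here the saddle point sits at the origin, so both orders of optimization return the same value; for a cost without convex-concave structure, only the inequality max-min <= min-max is guaranteed.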

References

- Pratt, L.Y.; Mostow, J.; Kamm, C.A. Direct Transfer of Learned Information among Neural Networks. In Proceedings of the Ninth National Conference on Artificial Intelligence - Volume 2, AAAI’91, Anaheim, CA, USA, 14–19 July 1991; pp. 584–589.

- Pratt, L.Y. Discriminability-Based Transfer between Neural Networks. In Advances in Neural Information Processing Systems; Hanson, S., Cowan, J., Giles, C., Eds.; Morgan-Kaufmann: Burlington, MA, USA, 1993; Volume 5, pp. 204–211.

- Perkins, D.; Salomon, G. Transfer of Learning; Pergamon: Oxford, UK, 1992.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A Survey on Deep Transfer Learning. arXiv 2018, arXiv:1808.01974.

- Rosenstein, M.T.; Marx, Z.; Kaelbling, P.K.; Dietterich, T.G. To transfer or not to transfer. In NIPS Workshop on Transfer Learning; NIPS: Vancouver, BC, Canada, 2005.

- Bakker, B.; Heskes, T. Task Clustering and Gating for Bayesian Multitask Learning. J. Mach. Learn. Res. 2003, 4, 83–99.

- Ben-David, S.; Schuller, R. Exploiting Task Relatedness for Multiple Task Learning. In Learning Theory and Kernel Machines; Schölkopf, B., Warmuth, M.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 567–580.

- Kornblith, S.; Shlens, J.; Le, Q.V. Do Better ImageNet Models Transfer Better? arXiv 2019, arXiv:1805.08974.