1. Introduction

In the data mining field, the problem of selecting variables from a dataset to improve the accuracy of a classifier has been extensively studied. The procedures used for this purpose can depend or not depend on the properties of the classifier. In this paper, we focus on this problem for a simple but very efficient classifier: the Naive Bayes (NB). The simplicity of this classifier makes it very suitable for very large datasets. Being able to handle huge amounts of data is paramount for data mining. The emergent technologies and applications can generate vast amounts of data that can be used for a more exhaustive extraction of information. However, the performance of the classifier drops when the dataset contains irrelevant and redundant variables. Hence, a previous stage of variable selection can significantly improve the results.

Classification is a classical data mining problem. It may be generally defined in the following way: we have a dataset of observations, called the training set, and we wish to obtain a set of rules in order to assign to every new observation a value of the classification variable (discrete or discretized). After completing this procedure, the quality of the resulting set of rules is verified using a different set of observations called the test set. The variable under study is called the class variable and the rest of the variables in the dataset are called attribute variables or features. Classification is based on the use of several techniques that infer rules or patterns from a given dataset in order to predict new values of the class variable using a new set of values for the remaining variables. The applications of classification are well known in fields like medicine, bioinformatics, physics, pattern recognition, economics, etc., and they are used for tasks like disease diagnosis, generating meteorological forecasts, determining insurance risk profiles, and text classification, among many others.

The performance of a classification method can degrade if the dataset contains redundant or irrelevant variables. Another important problem for the accuracy of a classification method emerges when the dataset contains a large number of variables. For instance, in the fields of bioinformatics and text mining, datasets typically contain several thousands of variables (genes or words, respectively). This enormous amount of information can become unmanageable. It is therefore necessary to select a smaller set of variables in order to reduce or remove any irrelevant or redundant information present in the data, and to enable the automatic handling of these datasets. To solve this issue, several methods have been devised to obtain a significant subset of variables for classification purposes.

Variable selection methods can be generally grouped into two types: Filter methods select variables without relying on a classification method, while wrapper methods rely directly on the classification method used. An important reference for these methods is the work of Hall and Holmes [

1], which contains a detailed description of filter and wrapper methods that provide an excellent performance. The problem with the wrapper methods is their computational cost. They require checking subsets of variables in the base classifier for many of the steps involved. This makes them hardly suitable when the amount of features increases. For many features, filter methods are more appropriate. However, not all of the filter methods are adequate. Their computational cost must be considered, as the size of the features in the data could make them impractical.

The information gain measure developed by Quinlan [

2], called

Info-Gain (IG), is used to build decision trees via the ID3 algorithm. It can be used as a measure to select variables. Its purpose is to rank the set of attribute variables via the Info-Gain measure and select the best ones. It is a very quick variable selection model that can be applied on datasets with a huge number of features, where other procedures are not computationally efficient. This measure always gives a positive value, so the problem is where to set the threshold to select the best informative variables. Depending on the data used, this threshold can be notably different if we want to select the minimum set with the largest possible amount of information about the class variable.

The emergence of new mathematical models based on imprecise probabilities (Walley [

3]) to represent the information also resulted in the development of new ways to quantify the uncertainty information contained in those representations. These mathematical models generalize the classic probability theory (PT), where the most used measure has been Shannon’s classic entropy [

4]. Now, when the available information is expressed via a set of probability distributions normally a closed and convex set of probability distributions called a

credal set, other measures different to the known entropy must be applied.

In recent years, the literature shows many attempts to define measures on imprecise probability models that perform in a way similar to entropy for precise probabilities (see Klir [

5]). So far, the most successful one has been the maximum entropy measure (see Abellán et al. [

6], Abellán and Masegosa [

7], and Abellán and Bossé [

8]), which verifies a large set of important properties. This set of properties is similar to the one verified by entropy in PT.

The Info-Gain based on precise probabilities and entropy can be extended with imprecise probabilities and maximum entropy. This implies a different treatment of the information where imprecision is considered (see Mantas and Abellán [

9]). The equivalent criterion is called

Imprecise Info-Gain (IIG) and was introduced in Abellán and Moral [

10]. This new criterion has been used in a procedure to build decision trees, but it can also be used as an information gain measure to select variables from data in a similar manner that the IG criterion is applied, using a direct way or inside of more complex procedures. Its properties are somewhat different than those of Info-Gain, but the main difference is that the information gain can be negative for IIG. It is an important property that removes the requirement of setting a threshold for a dataset. Now, we can select a variable if its associated information gain via the IIG is positive. It represents a quick and more reasonable variable selection procedure.

In this paper, we present this new method of variable selection and use the known Naive Bayes classifier to test it on a large set of popular datasets. To show its performance, an experimental study was developed to compare the results for NB using a variable selection procedure based on IIG and a similar procedure based on IG with different thresholds. We will see that in general it is not possible to set a single threshold value for IG and get good results for every dataset, The best threshold value varies from one dataset to another. In contrast, the results obtained using IIG are generally good, improving those obtained using NB directly. Moreover, this procedure does not require setting a threshold value.

Section 2 briefly describes the background concepts of some mathematical models based on imprecise probabilities and uncertainty measures, where the maximum entropy plays an important role.

Section 3 describes the Naive Bayes classifier (which is used as the base classifier).

Section 4 explains the new variable selection model via the maximum entropy measure on imprecise probabilities, as well as the model used as a reference, which is based on precise probabilities and entropy.

Section 5 is devoted to the experiments, and

Section 6 contains the conclusions.

2. Imprecise Probabilities and Uncertainty Measures

2.1. Imprecise Probabilities

Various mathematical models can be used to represent the information available in a situation. None of these models is generally more justifiable than other, but each is more useful than the rest in specific situations. Walley [

3] compiles most of the mathematical models for representing the absence of information through imprecise probabilities. In this section, we briefly introduce the model based on imprecise probabilities that we will use: reachable sets of probability intervals.

2.1.1. Reachable Sets of Probability Intervals

As an important reference on this type of credal set, we should mention the work by Campos, Huete and Moral [

11], where we can find an excellent account of the basic operations for working with probability intervals, as well as their relation with other models such as those of upper and lower probabilities, capacities of order 2 and belief functions.

The main characteristic of this model is that there are many interesting operations between sets of probability intervals without having to leave the model, i.e., providing us with another set of probability intervals.

They can be described as follows: let

X be a variable that takes values in

. A system of probability intervals is a family of intervals

verifying that

. The credal set associated to a set of intervals

L on

X can be defined as:

expressing

as

.

One condition so that this set is nonempty is that

Any element in the set

therefore belongs to at least oneprobability distribution of

(which is why the set of intervals is defined as

reachable) and the following conditions must be verified:

for each

i. If this set of conditions is not verified, it is possible to obtain the reachable set of intervals from the following property:

Proposition 1. Given a set of probability intervals the set wheregive us the same set of probability distributions, , where this last set is a reachable set of probability intervals. 2.1.2. Imprecise Dirichlet Model

The

imprecise Dirichlet model (IDM) was introduced by Walley [

12] to infer the probability distribution of a categorical variable. Let us assume that

Z is a variable taking values on a finite set

Z and that we have a sample of size

N of independent and identically distributed outcomes of

Z. If we want to estimate the probabilities,

, with which

Z takes its values, a common Bayesian procedure consists in assuming a

prior Dirichlet distribution for the parameter vector

, and then taking the

posterior expectation of the parameters given the sample. The Dirichlet distribution depends on the parameters

s, a positive real value, and

, a vector of positive real numbers

, verifying

. The density takes the form:

where

is the gamma function.

If is the number of occurrences of value z in the sample, the expected posterior value of parameter is , which is also the Bayesian estimate of (under quadratic loss).

The imprecise Dirichlet model only depends on parameter

s and assumes all the possible values of

. This defines a convex set of

prior distributions. It represents a much weaker assumption than a precise

prior model, but it is possible to make useful inferences. In our particular case, where the IDM is applied to a single variable, we obtain a credal set for this variable

Z that can be represented by a system of probability intervals. For each parameter,

, we obtain a probability interval given by the lower and upper

posterior expected values of the parameter given the sample. These intervals can be easily computed and are given by

. The associated credal set on

X is given by all the probability distributions

on

Z, such that

. The intervals are coherent in the sense that if they are computed by taking infimum and supremum in the credal set, then the same set of intervals is again obtained. The associate credal set can be obtained in the same way as in the previous subsection,

and represents a credal set from a reachable set of probability intervals.

Parameter

s determines how quickly the lower and upper probabilities converge as more data become available; larger values of

s produce more cautious inferences. Walley [

12] does not provide a definitive recommendation, but he advocates values between

and

.

2.2. Uncertainty Measures on Credal Sets

The study of uncertainty measures in the Dempster–Shafer theory of evidence [

13,

14] has been the starting point for the development of these measures on more general theories (a study of the most important measures proposed in literature can be seen in [

5]). As a reference for the definition of an uncertainty measure on credal sets, Shannon’s entropy [

4] has been used due to its operation on probabilities. In any theory which is more general than the probability theory, it is essential that a measure be able to quantify the uncertainty that a credal set represents: the parts of

conflict and

non-specificity [

5].

Klir and Smith [

15] and Abellán and Moral [

16] justified the use of the maximum of entropy on credal sets as a good measure of total uncertainty that verifies a set of needed properties [

17]. The problem lies in separating this function into others which really do measure the parts of conflict and non-specificity, respectively, and this entails the use of a credal set to represent the information. More recently, Abellán, Klir and Moral [

6] presented a separation of the maximum of entropy into functions that are capable of coherently measuring the conflict and non-specificity of a credal set

K on a finite variable

X, as well as algorithms for facilitating its calculation in capacities of order 2 [

6,

18], and this may be expressed in the following way:

where

represents the maximum of entropy and

represents the entropy minimum on the credal set

K:

where

coherently quantifies the conflict part of the credal set

K and

represents the non-specificity part of

K [

6].

3. The Naive Bayes Classifier

In the area of machine learning, supervised classification learning can be considered an important tool for decision support. Classification can be defined as a machine learning technique used to predict group of membership for data instances. It can be applied to decision support in medicine, character recognition, astronomy, banking and other fields. A classifier may be represented using a Bayesian network, a neural network, a decision tree, etc.

The success of the model developed by Duda and Hart [

19] is mainly due to its simplicity, efficiency and effectiveness in classification problems. Before describing the classifier, we will probabilistically describe the supervised classification problem.

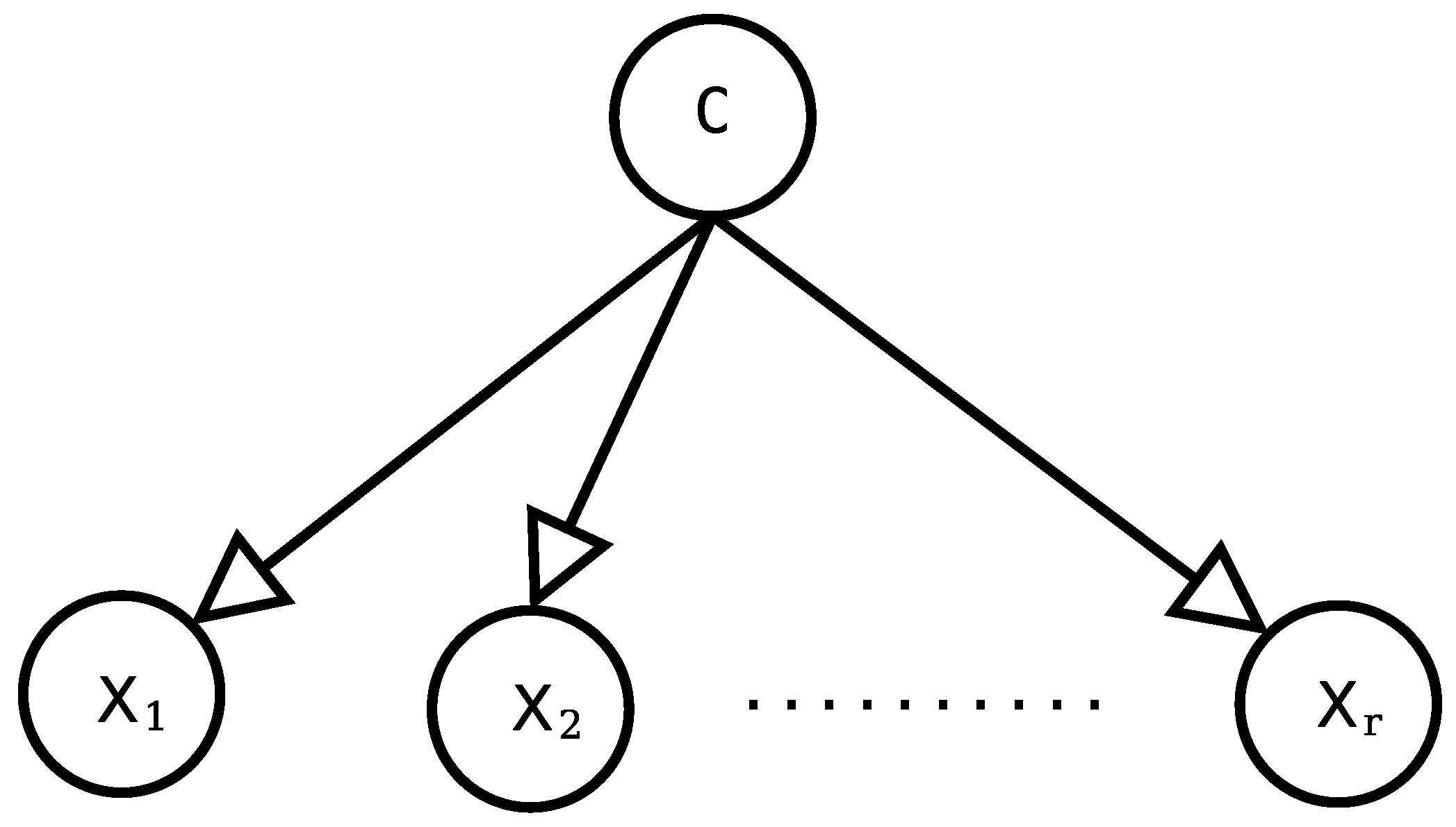

Let be a dataset, with size N, and with values in a set of (discrete or discretized) attribute variables , where each variable has a set of possible states or cases , and a class variable C, whose states are . The objective is to obtain information from the dataset in such a way that, given an observation (a set of values of all the attribute variables), it is possible to associate this with a value of the class variable.

If we represent the new sample as

, with

. The Naive Bayes (

Figure 1) predicts value

of

C in the following way:

with

the probability of each

in the subset of the training set determined by

(subset of the sample that verifies that

).

Now, based on the assumption that the attribute variables are independent given the class variable, the predicted value can be expressed as

The key to success of the Naive Bayes is its simplicity: no Bayesian network structure learning algorithm is required because its structure is fixed, the parameters of the model need only be estimated from the dataset using only bi-dimensional statistics for the class and each attribute and, as we have seen, the classification process is very efficient.

The Naive Bayes model shows remarkable results in accuracy, taking into account its clearly unrealistic assumptions: first, each attribute is conditionally independent from the other attributes given the class and, second, all of the variables have the same influence on the class. These assumptions can also cause some problems: the influence on the class of two highly correlated attributes may be overamplified by the model or a really irrelevant variable may only add noise to the classification. In any case, the solution may be to remove some attributes by using variable selection. The exhaustive search in the space of all of the variables combinations for the Naive Bayes requires the computation of structures, which is often prohibitive.

4. Variable Selection Methods

The aim of a variable selection method is to select a subset of variables that can effectively replace the original set of attributes while reducing the unfavourable effects of irrelevant or redundant features and still provide good results or, even better, improve the model performance.

Taking into account that many data mining techniques were originally not designed to work with considerable amounts of irrelevant/redundant features, such as the Naive Bayes classifier, the variable extraction is almost a requisite to work with many datasets. Furthermore, the variable selection stage will allow us to work efficiently with large datasets. Nevertheless, we must bear in mind that variable extraction adds an extra level of complexity to the process and there is no guarantee that the optimal subset of variables will be selected.

The methods to select variables depends on whether a base classifier is used as reference or not. They are generally grouped into two classes: (i) filter methods: select variables independently in relation to the classification method used; and (ii) wrapper methods: depend on the classification method subsequently used (Hall and Holmes [

1]).

The wrapper methods need to check many steps subsets of variables in the base classifier. This procedure makes them few suitable when the amount of features is huge. When the amount of data increases, the filter methods are more appropriate. Although the use of a determinate filter method depends on its computational cost, it may not be possible to be use due to the big size of data or number of features.

The most popular filter methods applied on the Naive Bayes classifier use as a tool the mutual information measure of each attribute and the class variable [

20], which is just the same procedure as the one of the information gain. A filter method that evaluates subsets of features also can have a a high computational cost, but normally lower than the ones of the wrapper methods when they are used to improve the Naive Bayes.

4.1. Info-Gain

This metric was introduced by Quinlan as the basis for his ID3 model [

2]. This model has the following main features: it was defined to obtain decision trees with discrete variables, it does not work with missing values, a pruning process is not carried out, and it is based on Shannon’s entropy [

4]. This split criterion can therefore be defined on an attribute variable

X given the class variable

C in the following way:

where

is the entropy of

C:

with

, the probability of each value of the class variable estimated in the training dataset. In the same way,

where

, is each possible state of

X and

, each possible state of

C. Finally, we can obtain the following reduced expression for the Info-Gain criterion:

This criterion is also known as the Mutual Information Criterion and it is widely used for measuring the dependence degree between an attribute variable and the class variable. It tends to select attribute variables with many states and consequently results in excessive ramification.

The IG criterion can be used as a variable selection method. It creates a rank of informative variables. It principal drawback is that it always gives a non-negative value of gain in information:

This result is a direct consequence of the Gibb’s inequality on two probability distributions

and

on a finite set

X:

In the case of the

, it can be considered the probability distribution

q on

defined as

, where

p is the above probability distribution based on the frequencies in the dataset.

As that value is always non-negative, the IG criterion creates a rank of features based on each gain in information. The problem is where to fix the threshold to use as filter to select the variables. The maximum gain in information is . Then, the function to consider as threshold must be , with .

4.2. Imprecise Info-Gain

The Imprecise Info-Gain criterion was first used for building decision trees in Abellán and Moral’s method [

10]. In a similar way to ID3, this tree is only defined for discrete variables; it cannot work with missing values; and it does not carry out a posterior pruning process. It is based on the application of uncertainty measures on convex sets of probability distributions. More specifically, probability intervals are extracted from the dataset for each case of the class variable using Walley’s imprecise Dirichlet model [

12] (IDM), which represents a specific kind of convex set of probability distributions, and on these the maximum entropy is estimated.

As we explained in previous sections, the IDM depends on a parameter

s and it estimates that (in a given dataset) the probabilities for each value of the class variable (

) are within the interval:

with

as the frequency of the set of values

in the dataset.

If we label

and

for the following sets of probability distributions

q on

:

with

as the frequency of the set of values

in the dataset, we can define the Imprecise Info-Gain for each attribute variable

X as:

where

is the maximum entropy function of a credal set.

For the previously defined intervals and for a value of

, Abellán’s procedure [

21] has a low computation cost. This value is the one recommended by Walley [

12] and the one used to build decision trees in Abellán and Moral [

10]. Given a credal set

defined as above, we must first determine the set

. Let

be the cardinal of the set

B. If we use

to denote the distribution where the maximum of entropy will be reached, the procedure of Abellán [

21] for

can be expressed in the following way:

In this procedure, we split the value of s as uniformly as it is possible between the states of C with lower frequency. For values the algorithm to find the maximum entropy value has more computational cost.

In Abellán, Klir and Moral [

6], we can see that the function of the maximum entropy includes two kinds of uncertainty: conflict and non-specificity. The first one is somewhat similar to the one in PT, well quantified by the Shannon’s entropy. The second one is a new type of uncertainty that does not appear in PT.

The maximum of entropy is the only measure that verifies a similar set of important properties on credal sets rather than the classic entropy in PT (see Abellán et al. [

6]). The use of the maximum entropy function on this type of sets is also justified with the following sentences of Jaynes [

22] about the sense of this measure:

“The fact that a certain probability distribution maximizes entropy subject to certain constraints representing our incomplete information, is the fundamental property which justifies use of that distribution for inference, it agrees with everything that is known, but carefully avoids assuming anything that is not known.”

The practical application of the IIG procedure can be easily explained without the concept of credal set, using only the frequencies and the expression of the values. Let us apply the following notation:

is the array n where the mass is shared among the minimum values (as in );

is the entropy using a notation on non-normalized values;

is the maximum entropy value of C. It is a consequence of the above points;

is the array

With the above notation, IIG can be expressed as follows:

The original procedure to build decision trees [

10], where the IIG was presented, uses the feature with the highest value of IIG to be inserted in a node.

4.3. IG versus IIG

One of the most significative differences between the IG and the IIG criteria is that the IIG can be negative. This situation never occurs with the IG criterion. This important characteristic allows the IIG criterion to discard variables that worsen the information on the class variable. This property allows us to detect directly which features are irrelevant as they will obtain negative values of information gain, which is equivalent to a waste of information.

With the IG criterion, we obtain a feature ranking, but this does not give a subset of variables, so we must decide which variables must be selected. To address this issue, the variables to be selected must have an IG value greater than or equal to a threshold. The threshold used depends on an

parameter and the number of states of the class variable for each dataset, hence we have that

where

and

.

In the Example 1, we can see a practical case where the difference between both criteria can be appreciated. In that example, we can see that some features can be selected by the IG criterion but not selected by the IIG.

Example 1. Let C be a class variable with two possible states . We consider that we have the following frequencies . We also consider that we only have two attribute variables , with possible values , and . The frequencies of each combination of states are the following ones: Considering the IG criterion, we always have an improvement in the gain of information. The values obtained with this criterion are the following ones (using the natural logarithm):Here, the feature produces the greater gain of information by the IG criterion. Now, if we set , then , and only would be selected. If we set , then , and both features would be selected. However, with the IIG criterion (and ), we have the following values:Now, none of the variables would be selected by the IIG criterion. The different results obtained in the above example are motivated by the use of the maximum entropy measure. The best value for the parameter to obtain the best set of informative features with the IG criterion is an open question. In that example, we can see that different values of that parameter produce a different set of selected variables.

To show that the both criteria perform differently, in a contrary situation to the one expressed by the Example 1, we can see the following example based on a real dataset.

Example 2. We consider the dataset anneal from the University of California Irvine (UCI) repository of machine learning datasets [23] that we will use in our experimentation in the next sections. This dataset has 38 features and its class variable has six states (). The gain in information of the last 12 features (the worse ones) for both criteria are presented in Table 1. The values are obtained using the same procedure as the one in Example 1. Considering that , the threshold for is . Hence, the IG criterion with that value of α does not select the features from the “exptl” feature to the bottom, including that feature. Observing the value of gain of information using the IIG criterion for that feature, we see that this feature is selected by the IIG criterion because it has a positive value ().

The above example expresses a situation where a feature is selected by the IIG criterion but not for the IG criterion with its less restrictive value. Then, that feature is also not selected using the rest of values.

5. Experimentation

In this section, we shall describe the experiments carried out and show the results obtained. For our purpose, we have used a wide and different set of 30 known datasets, obtained from the

UCI repository of machine learning datasets [

23]. A brief description of these can be found in

Table 2, where column “N” is the number of instances in the datasets, column “Attrib” is the number of attribute variables, column “Num” is the number of numerical variables, column “Nom” is the number of nominal variables, and column “k” is the number of cases or states of the class variable (always a nominal variable).

The

Weka software (ver. 3.8) [

24] has been used for the experimentation. The procedure of selecting attributes with the IIG method was implemented using data structures of Weka and the IDM parameter was set to

, i.e., the value used in the original methods of [

18]. The reasons to use this value were: first, it was the value recommended by Walley [

12]; and, second, the procedure to obtain the maximum entropy value reaches its lowest computational cost for this value [

21].

As we have seen above, the IIG criterion allows us to discard those variables with negative information gain, while the IG measure only obtains a attribute ranking, which means that a threshold must be chosen to discard those irrelevant features. The selected threshold is determined by the number of states of the class variable and a parameter . For our experiments, we have chosen four different values For values lower than , the IG criterion selects all of the features for many of the datasets; and for values higher than , the criterion selects a very low number of features and then the performance of the Naive Bayes is very poor—for that parameter: , , and .

In the experimentation, for each dataset, we applied the following procedure of 10-fold-cross validation repeated 10 times: the dataset is separated into 10 subsets, each one is used as a test set and the set obtained joining the other nine subsets are used as a training set. Thus, we have 10 training sets and 10 test sets. This procedure is repeated 10 times with a previous random reordering. Finally, it produces 100 training sets and 100 test sets. Using only the training set, features are selected via the IG and IIG methods obtaining a final subset of features. The Naive Bayes classifier is built on that subset, and the same subset of features is used for the test. Finally, Naive Bayes is applied on the test set. These results are compared with the Naive Bayes classifier with no variable selection scheme following the same validation procedure.

In summary, we have tested the Naive Bayes classifier without a variable selection and Naive Bayes with a previous variable selection procedure, using the IIG criterion and the IG criterion (this last one with four different thresholds).

The percentages of correct classifications for each dataset and each method, are presented in

Table 3 with the standard deviation to appreciate the variation of each iteration of the methods.

Table 4 presents the average number of attributes selected for each method of variable selection. In addition, the average times in seconds consumed for each method, when they are applied on a pair of Training and Test sets, are shown in

Table 5.

We have compared the results of the Naive Bayes classifier joint with a previous variable selection method with the ones of the original classifier without a previous procedure for variable selection. Following the recommendation of Demšar [

25], we have used the known Wilcoxon Signed-Ranks test [

26] for the pairwise comparisons. We have considered that this comparative is more appropriate than the one of comparing all the methods joined because four of them are the same method varying the value of the threshold, and the principal aim is the improvement of the Naive Bayes classifier. We have carried out the tests using the

software (ver. 2015-03-23) [

27].

The Wilcoxon Signed-Ranks test [

26] is a non-parametric test that ranks the differences in performance of two classifiers of each dataset comparing the ranks for the positive and the negative differences. It takes into account the commensurability of the differences that the Sign Test does not [

25]. It is used to compare two related samples, matched samples, or repeated measurements on a single sample to check whether their population mean ranks differently.

Given

and

two paired sets of data with a sample size of

N:

The null hypothesis is that the samples mean are equivalent, and the test is based on the following statistic:

where

is the sample size without ties;

is the function that returns the sing of a value; and

is the ranking associated with the order established by the values of the set

.

W follows a distribution with an expected value of 0 and a variance of

In

Table 6, we compare each method with the others using the Wilcoxon test.

6. Conclusions

As was pointed out in previous sections, variable selection is an important step in the preprocessing stage for data mining. In this paper, we have presented a new method to select variables based on imprecise probabilities and the maximum entropy measure to improve a very known classification method. We have shown, via an experimental study, that the predictive accuracy of the Naive Bayes classifier improves with the new variable selection method. Using an important statistical test, we obtain that the differences are statistically significant in favour of the use of the new method.

We compared the new method with a similar classical method based on precise entropy, called Info-Gain (IG). It must be remarked that in the same test carried out to compare the original Naive Bayes with the one using IG as a previous variable selection, we do not obtain significant statistical differences.

An important difference of the new method with respect to the classical one used for comparison is that the IG requires using a threshold to perform variable selection. Choosing the best threshold for each dataset is a difficult task. It should be emphasized that our proposal does not require setting a threshold, since variables with a negative information gain are discarded. The new method provides a quick and improved procedure for the preliminary step of variable selection for the Naive Bayes classifier that outperforms the IG approach, while removing the requirement of setting a threshold. The results presented in this work reinforce this assertion.

The final conclusion of this work is that the combination of the new variable selection method presented here with the Naive Bayes classifier provides an extremely useful tool for datasets with a very large number of features and a huge amount of data, where using complex methods is not computationally feasible. This is one of our tasks for future work.

A more immediate task for future work is to explore the use of the IIG criterion in complex methods to select variables based on IG. We think that this could provide an interesting improvement.