1. Introduction

The Latin word emergere gives us an idea of what we mean when using the term emergence: to become known, to come to light. There are many approaches that use this term. It has been used, and abused, to describe novelty, unpredictability, holism, irreducibility and so on ([1,2], and references therein). In this contribution, we will be concerned with how it can be defined in a quantitative manner, rather than dismissing it as a question that is too difficult, trivial, unimportant or just a primitive concept. We wish to maintain a rigorous, scientific stance in which causality is not violated and without resorting to metaphysical arguments. As we will discuss later, this will have philosophical implications that are beyond the scope of this manuscript and will be analyzed elsewhere.

It is worth mentioning that there have been very recent efforts to quantify emergence [3–5]. As can be seen below, our point of view is completely different, and can be taken as complementary to these contributions. We believe, in agreement with the ideas presented in [6], that emergence must be understood not only in terms of levels of observation, as mentioned in the cited contribution: “The use of an emergence hierarchy to account for emergent properties is alarmingly circular, given that the levels are defined by the existence of emergent properties [7]”.

Also relatively recently, a notion of emergent process has been proposed [8]. As discussed in detail below, our analysis differs from that one since ours is based on a different complexity measure rather than on the efficiency of prediction [8] and, probably most importantly, it emphasizes the condition of using the best theory to describe a given physical phenomenon. In general, nowadays, emergence is broadly used to assign certain properties to features we observe in nature that have a certain dependence on more basic phenomena (and/or elements), but are in some way independent from them and ultimately cannot be reduced to the interactions between those basic elements. Some of the examples cited in the literature that we can mention as possible emergent phenomena are [9–12]:

- physical systems, ranging from the transparency of water (or other liquids), phase transitions, and the so-called self-organized criticality state in granular systems, at one end, to the emergence of space-time at the other end of the physical scope;

- biological systems, like the multicellular construct in a given organism, ending ultimately in organs, and the phenomena of morphogenesis;

- social organization observed in insects, mammals, and in general in every biological system consisting of agents (notice how the combination and interaction of all these subsystems also establishes a higher level of emergent phenomena, as one can see, for example, in the biosphere).

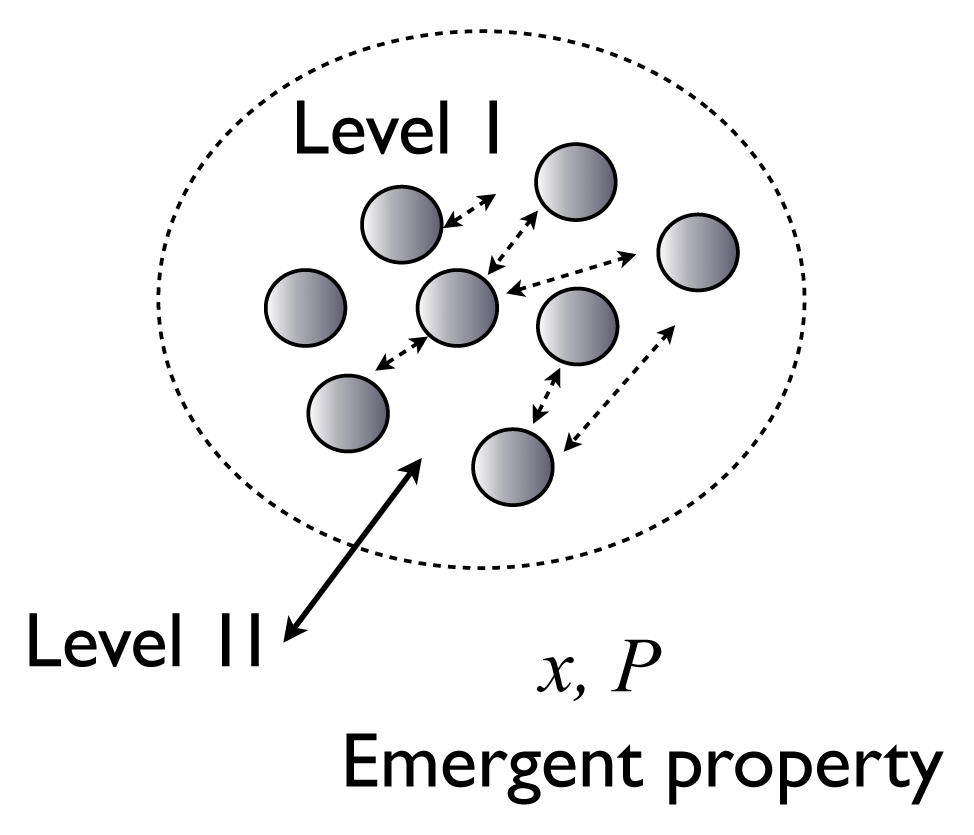

In Figure 1 we schematize the concept of an emergent property. Notice how two levels appear naturally in this representation. In the figure, we have a base of elements (the circles) that do not present by themselves the emergent property. When they interact, a new feature arises. This new feature is context-dependent. It certainly depends on the coarse-graining at which the entity is described, the language used for this purpose, and the knowledge and intention to distinguish between regularity and randomness. The new property should be characterized by some data x, and the probability distribution or ensemble, P, that produced x.

To have the character of an emergent phenomenon, as we have mentioned, this new feature must not be reducible to the basis; it should be unexpected [1,2,13].

2. Determinism and Theories

Before the twentieth century, the consensus was that a large number of degrees of freedom was a necessary condition for unpredictable behavior. Probably the most beautiful (and maybe unfortunate) text written along these lines was by Laplace in his remark: “We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes.” Notice here the explicitly strong deterministic view of all natural phenomena, in which there is no room for surprise or novel properties, since the power of calculation of Laplace’s demon envisions all future outcomes. Nowadays, we are certain that deterministic low-dimensional systems can exhibit very complicated behavior; moreover, they embody the very idea of unpredictability (in the absence of infinite computational power) when strong chaos is present.

“Prediction is difficult, especially the future”, disputably attributed to Niels Bohr, and “The next great era of awakening of human intellect may well produce a method of understanding the qualitative content of equations. Today we cannot. Today we cannot see that the water flow equations contain such things as the barber pole structure of turbulence that one sees between rotating cylinders. Today we cannot see whether Schroedinger’s equation contains frogs, musical composers, or morality—or whether it does not” [14] are two nice quotations on the predictive power (or lack thereof) of the different physical theories we have today.

Niels Bohr was most probably inspired by quantum physics and, putting it very simply, by the uncertainty principle. Under this circumstance there is a range of novelties (or different outcomes) that a physical system can have, and the capacity of the observer to know the future is reduced to a given set of probabilities, even using the best theory we have to understand such a phenomenon. The first part of Feynman’s quotation is related to the other face of a given theory: the power of computation and prediction of potential effects, predicted completely a priori, by an observer using a completely deterministic theory to explain the phenomenon.

Regarding these comments, it is important to address the question of how well the theory describes the phenomenon or, in other words, the coarse-graining at which the theory’s predictions work. We should be satisfied in saying that the theory works for a given phenomenon if it describes it at the level at which the regularities that one wants to study are best explained. An example is classical mechanics, which works at the level of describing elliptical orbits but cannot give a complete answer to more complicated and detailed patterns such as the anomalous precession of Mercury’s perihelion. Of course, this is not the only aspect to consider in a physical theory. For a given phenomenon the explanation should be simple, i.e., the Kolmogorov complexity K(P) should be small, and the explanation should not allow every conceivable outcome but should select only some outcomes (x included). In other words, we should demand that the entropy of the ensemble, H(P), be small.
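The demand for a small H(P) can be made concrete with a toy calculation. The following is only a sketch under stated assumptions: the two ensembles and the helper name `shannon_entropy` are hypothetical, and entropy is measured in bits.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete distribution given as a list of probabilities."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Two hypothetical ensembles over the same 8 outcomes (illustrative values only).
uniform = [1 / 8] * 8                    # every outcome is equally allowed
peaked = [0.5, 0.5, 0, 0, 0, 0, 0, 0]    # the theory selects only two outcomes

h_uniform = shannon_entropy(uniform)     # 3 bits
h_peaked = shannon_entropy(peaked)       # 1 bit
```

The ensemble that allows everything has the larger entropy, while the one that selects a few outcomes has the smaller one, which is the sense in which a good explanation should be selective.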

3. Complex Systems

There is a strong connection, at least for a large part of the community discussing emergent phenomena and emergent properties, between complex systems and emergence [15–18]. This connection can be understood if we think of a complex system as a collection of elements, each one interacting with the others via simple local rules and nonlinear dynamics (as mentioned by Lewes [19], restated in [6]: “nonlinearity is a necessary condition for emergent properties”), and interacting with the environment with possible feedback loops. The properties that the collection of these elements exhibits, as an aggregated compound, are sometimes completely unexpected, and these cases have received the label of emergent properties or emergent phenomena.

3.1. Complexity Measures

Much literature has been produced trying to define the concept (or, from now on, the measure) of complexity of a given system [20]. The history of the study of probabilistic regularities in physical systems can be traced back to 1857, with the very idea of entropy proposed by Clausius [21]. Almost a century later, Shannon derived the same functional form when introducing the concept of information entropy [22]. Even though the intuitive ideas of complexity and information in a physical system share some similarities, it was necessary to introduce several measures in order to understand various types of complexity and to quantify properties of the system closely related to both of them [20]: Kolmogorov complexity, logical depth, effective complexity, etc.

Some of these measures have been proposed to study different characteristics of a given system [23] (or string of symbols, the data x that the system has or produces). Among all these measures, we think that the one that best captures the very idea of the complexity of an entity is effective complexity, ℰ(x), introduced by Gell-Mann and Lloyd [24,25]. In brief, the effective complexity of an entity is “the length of a highly compressed description of its regularities” [25]. The idea is simple, elegant, and profound: if we split the algorithmic information content of some string x into two components, one with its regularities (related to the Kolmogorov complexity K) and the other with its random features (related to its entropy H), the effective complexity of x will be the algorithmic information content of its regularities only.

A perceptive reader will have noticed one very important aspect of the theory developed by Gell-Mann and Lloyd: the effective complexity of an entity depends on context and subjectivity. We will give a naïve example to motivate the analysis of this aspect of the theory. Imagine we are studying a particular system, e.g., a living organism. What, then, is its complexity? There is no doubt that we should be more specific and mention exactly which characteristic or feature we want to study using this concept, and what set of data we have in order to do so. Not only that, but to be precise, we must have a theory that explains the data (even though this point may sound obvious, it plays a very important role in this theory, as we shall see below). In what follows we will define effective complexity and emergence. For more details about the theory used and the results behind it, the reader should consult references [24–30].

4. Effective Complexity and Emergence

In order to introduce the effective complexity of an entity [24,25], we will define the total information, Σ. However, first imagine we have to find a good explanation (a theory) for given data x, i.e., a finite binary string. For a good guess at the probability distribution that produces x, we make two assumptions:

- (1) The explanation should be simple, which implies a small Kolmogorov complexity, i.e., as mentioned before, K(P) should be small. It is worth emphasizing that the Kolmogorov complexity of a string is closely related to the length of its shortest possible description in some fixed universal description language. For more details on this subject, the reader can see [26].

- (2) The explanation should select some outcomes over others, and of course x should be among those selected. Then, the entropy H(P) of a non-trivial distribution should be small. Notice that throughout this work we assume that H(P) is finite (for specific details on the technical aspects of this section, the reader will find [28] an excellent reference).

The total information, Σ, will be the sum of the ensemble’s entropy H and the Kolmogorov complexity K of the ensemble P:

$$\Sigma(P) = H(P) + K(P)$$

Now, a good theory requires that the previously defined total information be as small as possible. This means (as in Lemma 3 in [28]) that the total information should be close to the Kolmogorov complexity of x, exceeding it by no more than a small parameter Δ, i.e.,

$$\Sigma(P) \le K(x) + \Delta$$

Clearly, there should be a requirement to choose the best theory from the ones satisfying the last inequality. Following M. Gell-Mann and S. Lloyd, we will say that the best theory is the simplest theory, which in terms of this discussion means the ensemble P with the minimal Kolmogorov complexity K(P).
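The selection rule just described can be sketched with toy numbers. Since Kolmogorov complexity is not computable, the values of K(P), H(P) and K(x) below are simply assumed for illustration, and the names `Theory`, `total_information` and `best_theory` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Theory:
    name: str
    k_bits: float   # assumed value standing in for K(P), in bits
    h_bits: float   # assumed value standing in for H(P), in bits

def total_information(theory):
    """Total information of a candidate ensemble: H(P) + K(P)."""
    return theory.h_bits + theory.k_bits

def best_theory(theories, k_x, delta):
    """Among theories whose total information is at most k_x + delta,
    return the one with the smallest K(P); return None if there is none."""
    good = [t for t in theories if total_information(t) <= k_x + delta]
    if not good:
        return None
    return min(good, key=lambda t: t.k_bits)

# Hypothetical candidate explanations for a string x with an assumed K(x) of 20 bits.
candidates = [
    Theory("memorize x", k_bits=20.0, h_bits=0.0),        # total 20: good, but not simple
    Theory("rule plus noise", k_bits=6.0, h_bits=14.0),   # total 20: good and simple
    Theory("anything goes", k_bits=1.0, h_bits=30.0),     # total 31: simple, but not good
]

chosen = best_theory(candidates, k_x=20.0, delta=2.0)
```

In this toy setting the chosen theory is "rule plus noise": it passes the total-information test and, among the theories that pass, it has the smallest assumed K(P).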

We will introduce a control parameter λ, which can describe the different ways a system is coupled to its environment or the characteristics of the system itself. The control parameter will necessarily be related to the theory used to explain the phenomena. Examples of control parameters are the Rayleigh number in a Bénard convection cell, the diffusion ratio in Turing pattern formation, or the number of connected cells in a neural network.

Effective complexity λ

The effective complexity of a string x is then defined as the infimum of

where λ is the previously mentioned control parameter (say the temperature, the number of interacting elements, the diffusion parameter, etc.), ![Entropy 16 04489f2]() is a subset that defines the constraints on x, Δ defines the space of good theories for x, and x is λ-typical, i.e., x satisfies

Emergent property, ![Entropy 16 04489f3]()

A property, ![Entropy 16 04489f4](), of an entity will be emergent at λc if its effective complexity measure, for this particular property characterized by x, presents a discontinuity at λc such that

with σ being a small positive parameter and

5. Discussion and Conclusions

In this work, we have introduced a novel concept related to the very idea of an emergent property, and shown how it can be quantitatively described. We devoted some attention to motivating the discussion of the role a given theory plays in explaining phenomena (any discussion related to emergence should address this issue). Another point that arises in our presentation, which is also of particular importance for understanding how the topic depends on subjectivity and context, is how to distinguish the regular features of the entity (i.e., the regularities to be studied or described) from the incidental or random ones. It is worth mentioning here that the Kolmogorov complexity does not tackle this issue. A very simple and important problem that the Kolmogorov complexity faces in this context is a string of random zeros and ones (for example, from a coin-tossing experiment). The Kolmogorov complexity in this case is very large, but certainly the string is not complex at all. The effective complexity of this experiment will be substantially smaller, matching our intuitive idea of how complex this string should be. As previously mentioned, thanks to the splitting of random and regular features, the effective complexity not only becomes a powerful tool to describe an entity, but can also be used to estimate how good a theory is for a given ensemble P. We think that this last remark is an important one that touches on some philosophical ground, since it provides a quantitative method to differentiate physical theories.
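The coin-tossing remark can be illustrated numerically, with caveats. The sketch below uses the compressed length from Python's `zlib` as a crude upper-bound proxy for Kolmogorov complexity, which is an assumption of this example and not the measure discussed in the text. It also uses a seeded pseudo-random generator as a stand-in for real coin tosses, and such a sequence strictly has a short description (the seed plus the generator).

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data, a rough upper-bound proxy for K(x)."""
    return len(zlib.compress(data, 9))

n = 4096
rng = random.Random(0)   # fixed seed so that the run is repeatable

# Stand-in for coin tosses: each byte packs 8 pseudo-random bits.
coin_tosses = bytes(rng.getrandbits(8) for _ in range(n))

# A fully regular string of the same length.
periodic = b"01" * (n // 2)

size_random = compressed_size(coin_tosses)
size_regular = compressed_size(periodic)
```

The random-looking string barely compresses while the periodic one shrinks to a small fraction of its length, so a compression-based proxy rates the coin tosses as the more "complex" of the two, which is the counter-intuitive behavior that effective complexity is meant to avoid.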

Under this framework, the concept of emergence appears naturally. A given property of an entity (described through a string, or data, x) will be emergent if the information content of its regularities increases abnormally, as described above, for a given set of the control parameters. It is straightforward to extend our definition and its possible applications using different characteristics of the effective complexity, for example the continuity properties of the function, its derivatives, etc.
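A minimal sketch of how such an abnormal increase might be looked for numerically, assuming the effective complexity has already been estimated on a grid of control-parameter values. The function name `find_jumps`, the toy data and the jump threshold are all hypothetical, and a finite grid can only suggest a discontinuity, not establish one.

```python
def find_jumps(lambdas, complexities, threshold):
    """Return the midpoints between neighbouring grid values of the control
    parameter where the estimated complexity changes by more than threshold."""
    if len(lambdas) != len(complexities):
        raise ValueError("lambdas and complexities must have the same length")
    jumps = []
    for i in range(len(lambdas) - 1):
        if abs(complexities[i + 1] - complexities[i]) > threshold:
            jumps.append(0.5 * (lambdas[i] + lambdas[i + 1]))
    return jumps

# Toy data (illustrative only): a slowly varying complexity with one abrupt increase.
lambdas = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
complexities = [10.0, 10.5, 11.0, 40.0, 40.5, 41.0]

candidate_lambda_c = find_jumps(lambdas, complexities, threshold=5.0)
```

With these toy values the only flagged location lies between 1.0 and 1.5. Refining the grid around it and checking that the jump does not shrink would be the natural next step before calling it a candidate for λc.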

The epistemological point of view of the definition presented here is clear. Its consequences and possible future direction of research will be communicated elsewhere.

We would like to conclude by quoting a philosophical remark, very much in line with our results, by Carl G. Hempel and Paul Oppenheim [31]: “Emergence of a characteristic is not an ontological trait inherent in some phenomena; rather it is indicative of the scope of our knowledge at a given time; thus it has no absolute, but a relative character; and what is emergent with respect to the theories available today may lose its emergent status tomorrow.”
