Image Fusion Based on the \({\Delta ^{ - 1}} - T{V_0}\) Energy Function

Abstract

1. Introduction

- The \(\Delta^{-1}\) term within the fusion energy function is proposed to remove the block effect in multi-spectral images without affecting the spatial information.

- To improve the image fusion accuracy, an alternative minimization algorithm using a non-convex \(L_0\) regularization term is proposed.

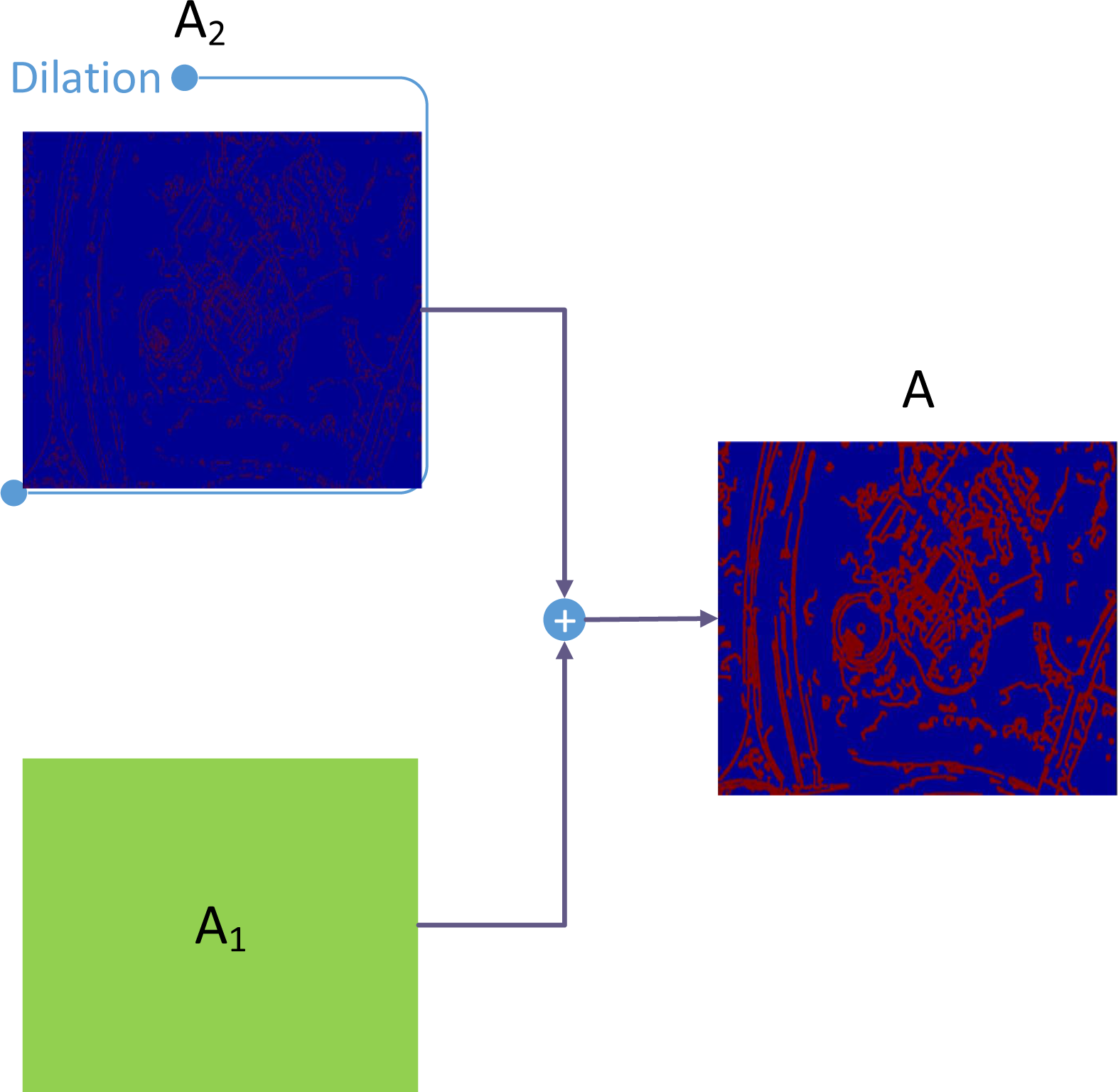

2. Computational Method

2.1. Flowchart of the Proposed Algorithm

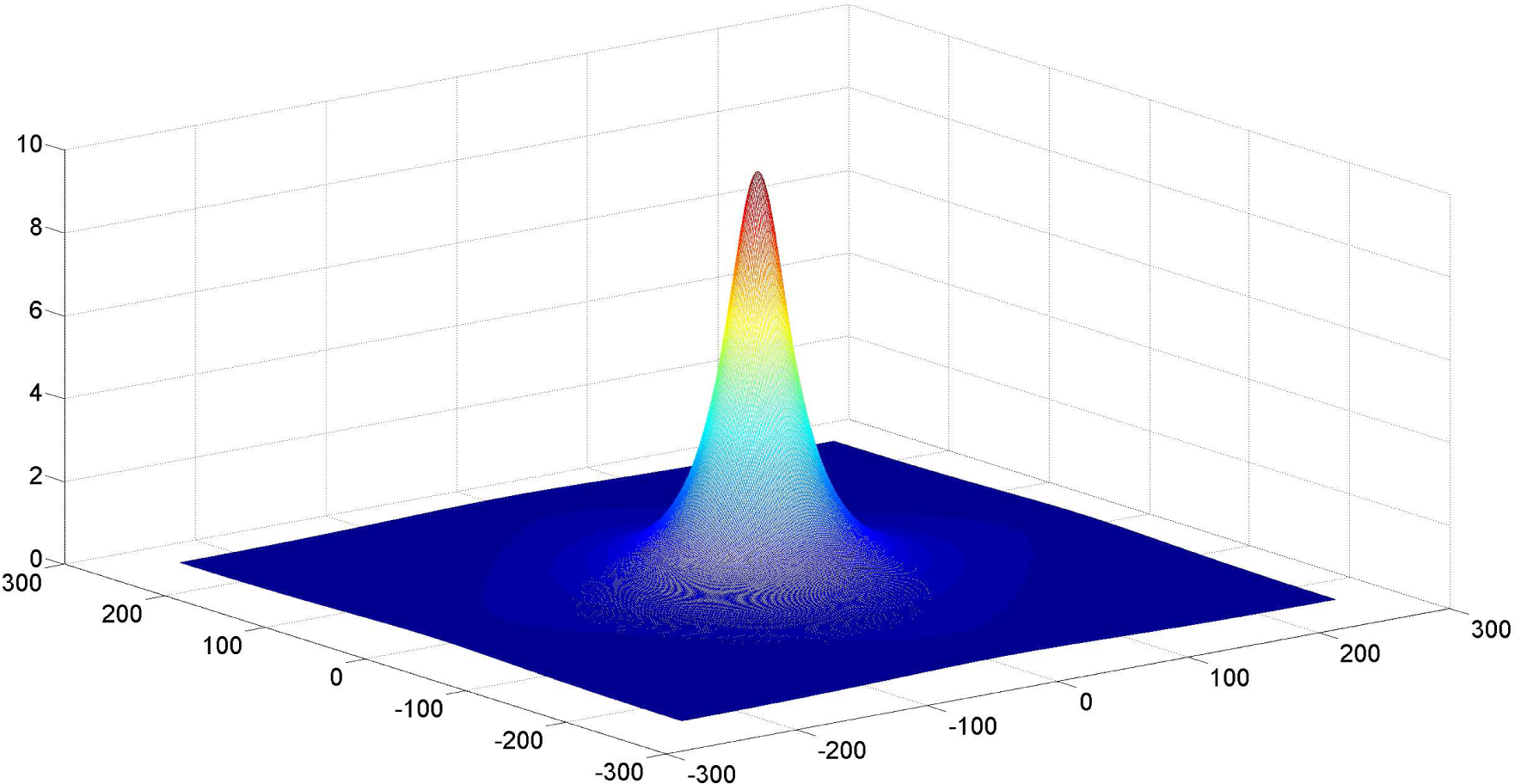

2.2. Inverse Laplace Transform \(\Delta^{-1}\)

2.3. Functional Form

2.4. Alternative Minimization Algorithm

- rgb2ihs indicates the transformation from RGB (red, green, blue) space to IHS (intensity, hue, saturation) space.

- ihs2rgb indicates the transformation from IHS space to RGB space.

- ι is a small positive constant.
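The two colour-space helpers named above can be sketched as follows. This is a minimal illustration only: it assumes a linear IHS model in which the intensity is the mean of the three bands, and the matrix `_RGB_TO_IHS` is a choice made for this example, not a definition taken from the paper. The function returns intensity plus two chromatic components (from which hue and saturation could be derived), and the inverse matrix is computed numerically rather than hard-coded.

```python
import numpy as np

_SQRT2 = np.sqrt(2.0)

# Assumed linear IHS model (illustrative, not the paper's definition):
# row 0 -> intensity I, rows 1-2 -> chromatic components v1, v2.
_RGB_TO_IHS = np.array([
    [1.0 / 3.0, 1.0 / 3.0, 1.0 / 3.0],
    [-_SQRT2 / 6.0, -_SQRT2 / 6.0, 2.0 * _SQRT2 / 6.0],
    [1.0 / _SQRT2, -1.0 / _SQRT2, 0.0],
])
# Inverse computed numerically so the pair is consistent by construction.
_IHS_TO_RGB = np.linalg.inv(_RGB_TO_IHS)


def rgb2ihs(rgb):
    """Map an (H, W, 3) RGB array to (I, v1, v2) under the assumed linear model."""
    rgb = np.asarray(rgb, dtype=float)
    return rgb @ _RGB_TO_IHS.T


def ihs2rgb(ihs):
    """Map an (H, W, 3) array of (I, v1, v2) back to RGB."""
    ihs = np.asarray(ihs, dtype=float)
    return ihs @ _IHS_TO_RGB.T
```

Under this model, an IHS-style fusion step would replace channel 0 of the `rgb2ihs` output with a fused intensity image and then call `ihs2rgb`; how that fused intensity is obtained is outside the scope of this sketch.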

2.5. The Selection of the Key Parameter λ

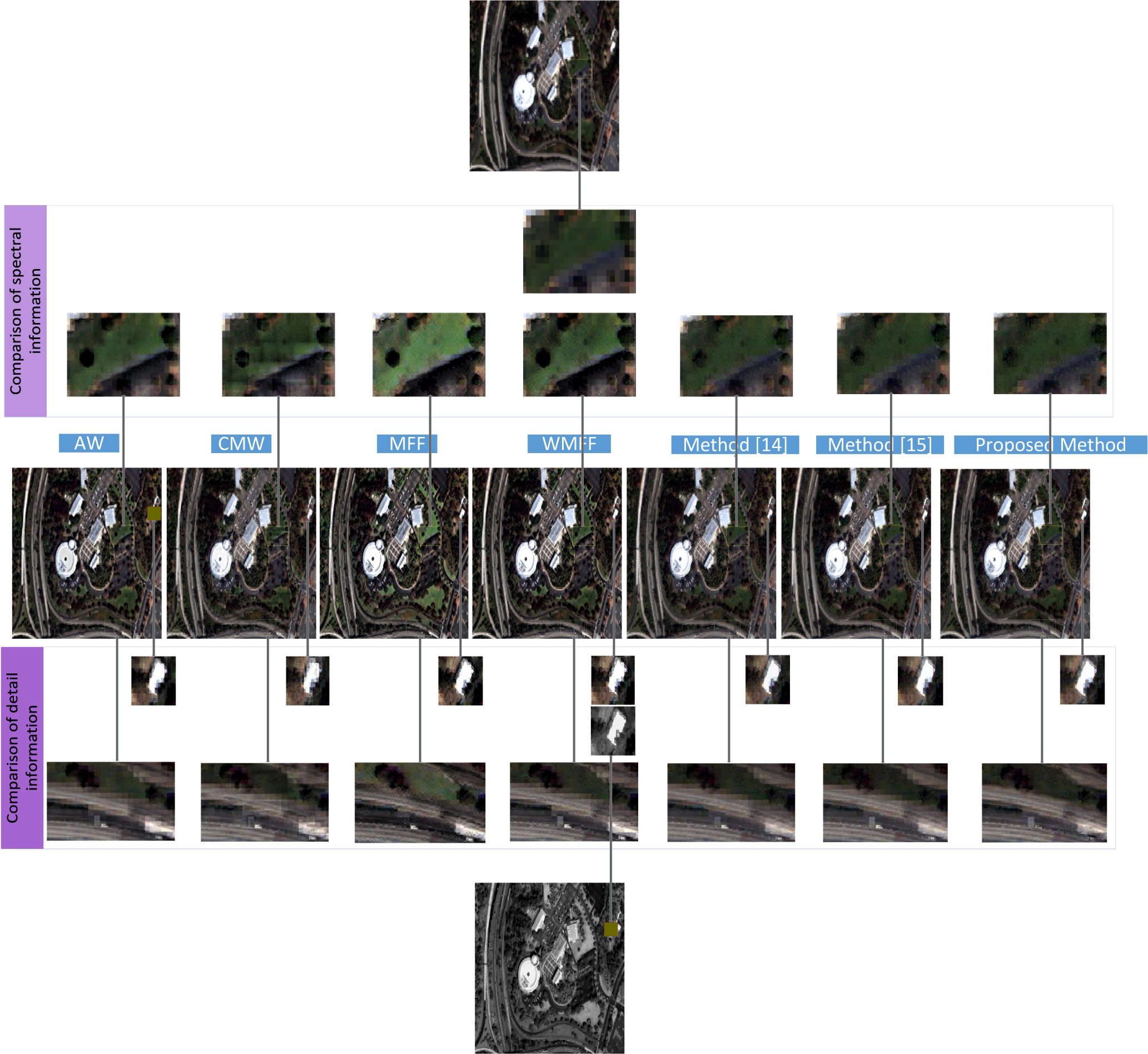

3. Experimental Results

4. Conclusion

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Nichol, J.; Wong, M.S. Satellite remote sensing for detailed landslides inventories using change detection and image fusion. Int. J. Remote Sens. 2005, 26, 1913–1926.

- Schowengerdt, R.A. The nature of remote sensing. In Remote Sensing: Models and Methods for Image Processing; Academic Press: New York, NY, USA, 2006; pp. 1–44.

- Bulanon, D.M.; Burks, T.F.; Alchanatis, V. Image fusion of visible and thermal images for fruit detection. Biosyst. Eng. 2009, 103, 12–22.

- Fischer, M.A.; Nanz, D.; Hany, T.; Reiner, C.S.; Stolzmann, P.; Donati, O.F.; Breitenstein, S.; Schneider, P.; Weishaupt, D.; Schulthess, G.K.; et al. Diagnostic accuracy of whole-body MRI/DWI image fusion for detection of malignant tumours: A comparison with PET/CT. Mol. Imaging 2011, 21, 246–255.

- Shettigara, V.K. A generalized component substitution technique for spatial enhancement of multispectral images using a higher resolution data set. Photogramm. Eng. Remote Sens. 1992, 58, 561–566.

- Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Bayram, I.; Selesnick, I.W. A dual-tree rational-dilation complex wavelet transform. IEEE Trans. Signal Process. 2011, 59, 6251–6256.

- Demirel, H.; Anbarjafari, G. Image resolution enhancement by using discrete and stationary wavelet decomposition. IEEE Trans. Signal Process. 2011, 20, 1458–1460.

- You, X.G.; Du, L.; Cheung, Y.M.; Chen, Q.H. A blind watermarking scheme using new nontensor product wavelet filter banks. IEEE Trans. Signal Process. 2010, 19, 3271–3284.

- Núñez, J.; Otazu, X.; Fors, O.; Prades, A.; Pala, V.; Arbiol, R. Multiresolution based image fusion with additive wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 1999, 32, 1204–1211.

- Li, H.; Manjunath, B.S.; Mitra, S.K. Multisensor image fusion using the wavelet transform. Graph. Model. Image Process. 1995, 57, 235–245.

- Scheunders, P. A multivalued image wavelet representation based on multiscale fundamental forms. IEEE Trans. Image Process. 2002, 10, 568–575.

- Chen, T.; Guo, R.S.; Peng, S.L. Image fusion using weighted multiscale fundamental form. Proceedings of IEEE International Conference on Image Processing, 24–27 October 2004; pp. 3319–3322.

- Xie, Q.W.; He, J.C.; Long, Q.; Mita, S.; Chen, X.; Jiang, A. Image fusion based on TV − L1 function. Proceedings of the 2013 International Conference on Wavelet Analysis and Pattern Recognition, Tianjin, China, 14–17 July 2013; pp. 173–177.

- Xie, Q.W.; Long, Q.; Mita, S.; Chen, X.; Jiang, A. Image fusion based on TV − L1 – convex constrained algorithm. Proceedings of the 2013 International Conference on Wireless Communications & Signal Processing, Hangzhou, China, 24–26 October 2013; pp. 1–5.

- Xie, Q.W.; Long, Q.; Mita, S.; Guo, C.Z.; Jiang, A. Image fusion based on multi-objective optimization. Int. J. Wavelets Multiresolut. Inf. Process. 2014, 12, 1450017.

- Saeedi, J.; Faez, K. Infrared and visible image fusion using fuzzy logic and population-based optimization. Appl. Soft Comput. 2012, 12, 1041–1054.

- Saeedi, J.; Faez, K. A new pan-sharpening method using multi-objective particle swarm optimization and the shiftable contourlet transform. ISPRS J. Photogramm. Remote Sens. 2011, 66, 365–381.

- Lacewell, C.W.; Gebril, M.; Buaba, R.; Homaifar, A. Optimization of image fusion using genetic algorithms and discrete wavelet transform. Proceedings of IEEE conference on Aerospace and Electronics, Fairborn, OH, USA, 14–16 July 2010; pp. 116–121.

- Smith, A.R. Color gamut transform pairs. ACM Comput. Graph. (SIGGRAPH) 1978, 12, 12–19.

- Osher, S.J.; Sole, A.; Vese, L.A. Image decomposition and restoration using total variation minimization and the \(H^{-1}\) norm. Multiscale Model. Simul. 2003, 1, 349–370.

- Aujol, J.; Gilboa, G. Constrained and SNR-based solutions for TV-Hilbert space image denoising. J. Math. Imaging Vis. 2006, 26, 217–237.

- Xie, Q.W.; Liu, Z.; Long, Q.; Mita, S.; Jiang, A. Remote sensing image fusion through kernel estimation based on energy minimization. Proceedings of International IEEE Conference on Intelligent Transportation Systems, Qingdao, China, 8–11 October 2014.

- Krishnan, D.; Tay, T.; Fergus, R. Blind deconvolution using a normalized sparsity measure. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 20–25 June 2011; pp. 233–240.

- Ochs, P.; Dosovitskiy, A.; Brox, T.; Pock, T. An iterated l1 algorithm for non-smooth non-convex optimization in computer vision. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1759–1766.

- Fu, S.; Zhang, C. Adaptive non-convex total variation regularisation for image restoration. Electron. Lett. 2010, 46, 907–908.

- Li, X.; Lu, C.W.; Xu, Y.; Jia, J.Y. Image smoothing via L0 gradient minimization. ACM Trans. Graph. (SIGGRAPH Asia) 2011, 30, 174.

- Wang, Y.; Yang, J.; Yin, W.; Zhang, Y. A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 2011, 1, 248–272.

- Xydeas, C.S.; Petrovic, V. Objective image fusion performance measure. Electron. Lett. 2000, 36, 308–309.

- Li, S.; Kwok, J.T.; Wang, Y. Combination of images with diverse focuses using the spatial frequency. Inf. Fusion 2001, 2, 169–176.

- Zheng, Y.; Essock, E.A.; Hansen, B.C.; Huan, A.M. A new metric based on extend spatial frequency and its application to DWT based fusion algorithms. Inf. Fusion 2007, 8, 177–192.

| Fusion method | CM (red channel) | CM (green channel) | CM (blue channel) | \(Q^{AB/F}\) | SF |
|---|---|---|---|---|---|
| AW | 0.9341 | 0.9345 | 0.9402 | 0.4109 | 0.0642 |
| CMW | 0.9426 | 0.9443 | 0.9501 | 0.3491 | 0.0640 |
| MFF | 0.8281 | 0.8255 | 0.8497 | 0.3700 | 0.0810 |
| WMFF | 0.9268 | 0.9265 | 0.9353 | 0.4304 | 0.0738 |
| Method [14] | 0.9503 | 0.9528 | 0.9558 | 0.4311 | 0.0768 |
| Method [15] | 0.9502 | 0.9527 | 0.9555 | 0.4157 | 0.0776 |
| Proposed method | 0.9505 | 0.9534 | 0.9570 | 0.4485 | 0.0842 |

| Fusion method | CM (red channel) | CM (green channel) | CM (blue channel) | \(Q^{AB/F}\) | SF |
|---|---|---|---|---|---|
| CMW | 0.8835 | 0.8704 | 0.8616 | 0.3576 | 0.1129 |
| MFF | 0.8321 | 0.8130 | 0.7980 | 0.3542 | 0.0959 |
| WMFF | 0.8920 | 0.8745 | 0.8704 | 0.4075 | 0.0941 |
| Method [14] | 0.8921 | 0.8850 | 0.8743 | 0.4105 | 0.1360 |
| Method [15] | 0.8921 | 0.8847 | 0.8746 | 0.4073 | 0.1372 |
| Proposed method | 0.8927 | 0.8849 | 0.8751 | 0.4260 | 0.1478 |
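For readers unfamiliar with the SF column, the sketch below shows the classical spatial-frequency measure, SF = sqrt(RF² + CF²), where RF and CF are the root-mean-square horizontal and vertical first differences of the image. It assumes a single-channel image with intensities scaled to [0, 1]; whether the tables use exactly this variant or an extended one is not stated in this section, so the function `spatial_frequency` should be read as an illustration rather than the evaluation code behind the reported numbers.

```python
import numpy as np


def spatial_frequency(image):
    """Classical spatial frequency of a 2-D grayscale image.

    RF^2 and CF^2 are the sums of squared horizontal / vertical first
    differences, each divided by the total number of pixels (M * N).
    """
    f = np.asarray(image, dtype=float)
    if f.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    n_pixels = f.size
    row_freq_sq = np.sum(np.diff(f, axis=1) ** 2) / n_pixels  # along rows
    col_freq_sq = np.sum(np.diff(f, axis=0) ** 2) / n_pixels  # along columns
    return float(np.sqrt(row_freq_sq + col_freq_sq))
```

A larger value indicates more high-frequency detail in the image; a constant image gives zero.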

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Xie, Q.; Ma, C.; Guo, C.; John, V.; Mita, S.; Long, Q. Image Fusion Based on the \({\Delta ^{ - 1}} - T{V_0}\) Energy Function. Entropy 2014, 16, 6099-6115. https://doi.org/10.3390/e16116099