Journal Description

Computers

Computers is an international, scientific, peer-reviewed, open access journal of computer science, including computer and network architecture and computer–human interaction as its main foci, published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), dblp, Inspec, Ei Compendex, and other databases.

- Journal Rank: JCR - Q2 (Computer Science, Interdisciplinary Applications) / CiteScore - Q1 (Computer Science (miscellaneous))

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 16.3 days after submission; acceptance to publication is undertaken in 3.8 days (median values for papers published in this journal in the first half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Journal Cluster of Artificial Intelligence: AI, AI in Medicine, Algorithms, BDCC, MAKE, MTI, Stats, Virtual Worlds and Computers.

Impact Factor: 4.2 (2024); 5-Year Impact Factor: 3.5 (2024)

Latest Articles

DEVS Closure Under Coupling, Universality, and Uniqueness: Enabling Simulation and Software Interoperability from a System-Theoretic Foundation

Computers 2025, 14(12), 514; https://doi.org/10.3390/computers14120514 (registering DOI) - 24 Nov 2025

Abstract

►

Show Figures

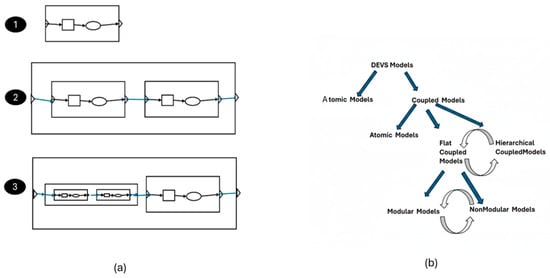

This article explores the foundational mechanisms of the Discrete Event System Specification (DEVS) theory—closure under coupling, universality, and uniqueness—and their critical role in enabling interoperability through modular, hierarchical simulation frameworks. Closure under coupling empowers modelers to compose interconnected models, both atomic and coupled,

[...] Read more.

This article explores the foundational mechanisms of Discrete Event System Specification (DEVS) theory (closure under coupling, universality, and uniqueness) and their critical role in enabling interoperability through modular, hierarchical simulation frameworks. Closure under coupling empowers modelers to compose interconnected models, both atomic and coupled, into unified systems without departing from the DEVS formalism. We show how this modular approach supports the scalable and flexible construction of complex simulation architectures on a firm system-theoretic foundation. We also show that the transformation from non-modular to modular, hierarchical structures brings a major benefit: existing non-modular models can be accommodated simply by wrapping them in a DEVS-compliant format. DEVS theory therefore simplifies model maintenance, integration, and extension, promoting interoperability and reuse. Additionally, we demonstrate how DEVS universality and uniqueness guarantee that any system with discrete event interfaces can be structurally represented in the DEVS formalism, ensuring consistency across heterogeneous platforms. We propose that these mechanisms can collectively streamline simulator design and implementation and thereby advance simulation interoperability.
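As a rough illustration of closure under coupling, the sketch below gives an atomic model and a coupled model the same four-method interface, so a coupled model can be nested wherever an atomic one is expected. The class names `Processor` and `Pipeline`, the method names, the series-only coupling, and the tie-breaking rule are all assumptions made for this example; ports, confluent transitions, and the rest of the formalism are omitted, and this is not the authors' construction.

```python
import math

INF = math.inf


class Processor:
    """Atomic model (hypothetical): holds one job for `delay` time units, then emits it."""

    def __init__(self, delay):
        self.delay = delay
        self.job = None
        self.remaining = INF

    def time_advance(self):
        return self.remaining

    def output(self):
        return self.job

    def internal(self):
        self.job, self.remaining = None, INF

    def external(self, elapsed, job):
        if self.job is None:
            self.job, self.remaining = job, self.delay
        else:
            self.remaining -= elapsed  # busy: drop the new job, keep the schedule


class Pipeline:
    """Coupled model (series connection) exposing the same interface as an atomic model."""

    def __init__(self, *parts):
        self.parts = list(parts)
        self.elapsed = [0.0] * len(parts)  # time since each part's last transition

    def _imminent(self):
        remaining = [p.time_advance() - e for p, e in zip(self.parts, self.elapsed)]
        # Select rule (an assumption): on ties the downstream part fires first.
        idx = max(range(len(remaining)), key=lambda i: (-remaining[i], i))
        return idx, remaining[idx]

    def time_advance(self):
        return self._imminent()[1]

    def output(self):
        idx, _ = self._imminent()
        # Only the last part is connected to the coupled model's output.
        return self.parts[idx].output() if idx == len(self.parts) - 1 else None

    def internal(self):
        idx, dt = self._imminent()
        y = self.parts[idx].output()
        self.parts[idx].internal()
        self.elapsed = [e + dt for e in self.elapsed]
        self.elapsed[idx] = 0.0
        nxt = idx + 1
        if nxt < len(self.parts) and y is not None:
            self.parts[nxt].external(self.elapsed[nxt], y)
            self.elapsed[nxt] = 0.0

    def external(self, elapsed, job):
        self.elapsed = [e + elapsed for e in self.elapsed]
        self.parts[0].external(self.elapsed[0], job)
        self.elapsed[0] = 0.0


def run(model, job):
    """Inject one job at t = 0 and collect (time, output) pairs until the model is passive."""
    model.external(0.0, job)
    t, outputs = 0.0, []
    while model.time_advance() < INF:
        t += model.time_advance()
        y = model.output()
        model.internal()
        if y is not None:
            outputs.append((t, y))
    return outputs
```

Because `run` only relies on the shared interface, it accepts `Processor(2.0)`, `Pipeline(Processor(2.0), Processor(3.0))`, or a pipeline nested inside another pipeline without modification.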

Open Access Article

Effectiveness Evaluation Method for Hybrid Defense of Moving Target Defense and Cyber Deception

by

Fangbo Hou, Fangrun Hou, Xiaodong Zang, Ziyang Hua, Zhang Liu and Zhe Wu

Computers 2025, 14(12), 513; https://doi.org/10.3390/computers14120513 - 24 Nov 2025

Abstract

Moving Target Defense (MTD) has been proposed as a dynamic defense strategy to address the static and isomorphic vulnerabilities of networks. Recent research in MTD has focused on enhancing its effectiveness by combining it with cyber deception techniques. However, there is limited research on evaluating and quantifying this hybrid defense framework. Existing studies on MTD evaluation often overlook the deployment of deception, which can expand the potential attack surface and introduce additional costs. Moreover, a unified model that simultaneously measures security, reliability, and defense cost is lacking. We propose a novel hybrid defense effectiveness evaluation method that integrates queuing and evolutionary game theories to tackle these challenges. The proposed method quantifies security, reliability, and defense cost. Additionally, we construct an evolutionary game model of MTD and deception, jointly optimizing triggering and deployment strategies to minimize the attack success rate. Furthermore, we introduce a hybrid strategy selection algorithm to evaluate the impact of various strategy combinations on security, resource consumption, and availability. Simulation and experimental results demonstrate that the proposed approach can accurately evaluate and guide the configuration of hybrid defenses, and that hybrid defense can effectively reduce the attack success rate and unnecessary overhead while maintaining Quality of Service (QoS).

(This article belongs to the Section ICT Infrastructures for Cybersecurity)

Open Access Review

The Latest Diagnostic Imaging Technologies and AI: Applications for Melanoma Surveillance Toward Precision Oncology

by

Alessandro Valenti, Fabio Valenti, Stefano Giuliani, Simona di Martino, Luca Neroni, Cristina Sorino, Pietro Sollena, Flora Desiderio, Fulvia Elia, Maria Teresa Maccallini, Michelangelo Russillo, Italia Falcone and Antonino Guerrisi

Computers 2025, 14(12), 512; https://doi.org/10.3390/computers14120512 - 24 Nov 2025

Abstract

In recent years, the medical field has witnessed the rapid expansion and refinement of omics and imaging technologies, which have profoundly transformed patient surveillance and monitoring strategies, with stage-adapted protocols and cross-sectional imaging important in high-risk follow-up. In the melanoma context, diagnostic imaging

[...] Read more.

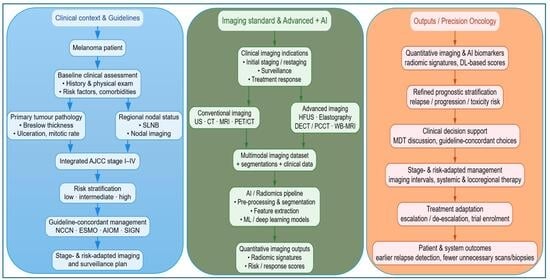

In recent years, the medical field has witnessed the rapid expansion and refinement of omics and imaging technologies, which have profoundly transformed patient surveillance and monitoring strategies, with stage-adapted protocols and cross-sectional imaging important in high-risk follow-up. In the melanoma context, diagnostic imaging plays a pivotal role in disease staging, follow-up and evaluation of therapeutic response. Moreover, the emergence of Artificial Intelligence (AI) has further driven the transition toward precision medicine, emphasizing the complexity and individuality of each patient: AI/Radiomics pipelines are increasingly supporting lesion characterization and response prediction within clinical workflows. Consequently, it is essential to emphasize the significant potential of quantitative imaging techniques and radiomic applications, as well as the role of AI in improving diagnostic accuracy and enabling personalized oncologic treatment. Early evidence demonstrates increased sensitivity and specificity, along with a reduction in unnecessary biopsies and imaging procedures, within selected care approaches. In this review, we will outline the current clinical guidelines for the management of melanoma patients and use them as a framework to explore and evaluate advanced imaging approaches and their potential contributions. Specifically, we compare the recommendations of major societies such as NCCN, which advocates more intensive imaging for stages IIB–IV; ESMO and AIOM, which recommend symptom-driven surveillance; and SIGN, which discourages routine imaging in the absence of clinical suspicion. Furthermore, we will describe the latest imaging technologies and the integration of AI-based tools for developing predictive models to actively support therapeutic decision-making and patient care. 
The conclusions will focus on the prospective role of novel imaging modalities in advancing precision oncology, improving patient outcomes and optimizing the allocation of clinical resources. Overall, the current evidence supports a stage-adapted surveillance strategy (ultrasound ± elastography for lymph node regions, targeted brain MRI in high-risk patients, selective use of DECT or total-body MRI) combined with rigorously validated AI-based decision support systems to personalize follow-up, streamline workflows and optimize resource utilization.

(This article belongs to the Special Issue Applications of Machine Learning and Artificial Intelligence for Healthcare)

Open Access Article

Image-Based Threat Detection and Explainability Investigation Using Incremental Learning and Grad-CAM with YOLOv8

by

Zeynel Kutlu and Bülent Gürsel Emiroğlu

Computers 2025, 14(12), 511; https://doi.org/10.3390/computers14120511 - 24 Nov 2025

Abstract

Real-world threat detection systems face critical challenges in adapting to evolving operational conditions while providing transparent decision making. Traditional deep learning models suffer from catastrophic forgetting during continual learning and lack interpretability in security-critical deployments. This study proposes a distributed edge–cloud framework integrating

[...] Read more.

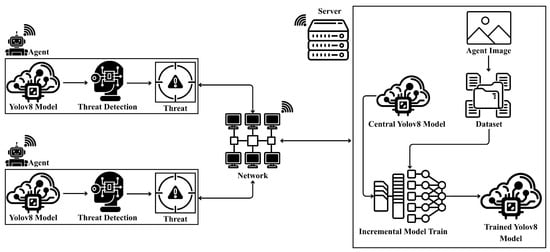

Real-world threat detection systems face critical challenges in adapting to evolving operational conditions while providing transparent decision making. Traditional deep learning models suffer from catastrophic forgetting during continual learning and lack interpretability in security-critical deployments. This study proposes a distributed edge–cloud framework integrating YOLOv8 object detection with incremental learning and Gradient-weighted Class Activation Mapping (Grad-CAM) for adaptive, interpretable threat detection. The framework employs distributed edge agents for inference on unlabeled surveillance data, with a central server validating detections through class verification and localization quality assessment (IoU ≥ 0.5). A lightweight YOLOv8-nano model (3.2 M parameters) was incrementally trained over five rounds using sequential fine tuning with weight inheritance, progressively incorporating verified samples from an unlabeled pool. Experiments on a 5064 image weapon detection dataset (pistol and knife classes) demonstrated substantial improvements: F1-score increased from 0.45 to 0.83, mAP@0.5 improved from 0.518 to 0.886 and minority class F1-score rose 196% without explicit resampling. Incremental learning achieved a 74% training time reduction compared to one-shot training while maintaining competitive accuracy. Grad-CAM analysis revealed progressive attention refinement quantified through the proposed Heatmap Focus Score, reaching 92.5% and exceeding one-shot-trained models. The framework provides a scalable, memory-efficient solution for continual threat detection with superior interpretability in dynamic security environments. The integration of Grad-CAM visualizations with detection outputs enables operator accountability by establishing auditable decision records in deployed systems.
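The localization check mentioned in the abstract (IoU ≥ 0.5 combined with class verification) can be sketched as follows. The `(x1, y1, x2, y2)` box format, the function names `iou` and `is_verified`, and the use of a single reference box are assumptions for illustration; the listing does not describe how the central server obtains its reference.

```python
def iou(box_a, box_b):
    """Intersection over Union for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_verified(pred_box, ref_box, pred_cls, ref_cls, threshold=0.5):
    """Accept a detection only if the class matches and the boxes overlap enough."""
    return pred_cls == ref_cls and iou(pred_box, ref_box) >= threshold
```

For example, `is_verified((0, 0, 10, 10), (0, 0, 10, 8), "knife", "knife")` accepts the detection because the two boxes have an IoU of 0.8.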

(This article belongs to the Special Issue Deep Learning and Explainable Artificial Intelligence (2nd Edition))

Open Access Article

IoT-Enabled Indoor Real-Time Tracking Using UWB for Smart Warehouse Management

by

Bahareh Masoudi, Nazila Razi and Javad Rezazadeh

Computers 2025, 14(12), 510; https://doi.org/10.3390/computers14120510 - 24 Nov 2025

Abstract

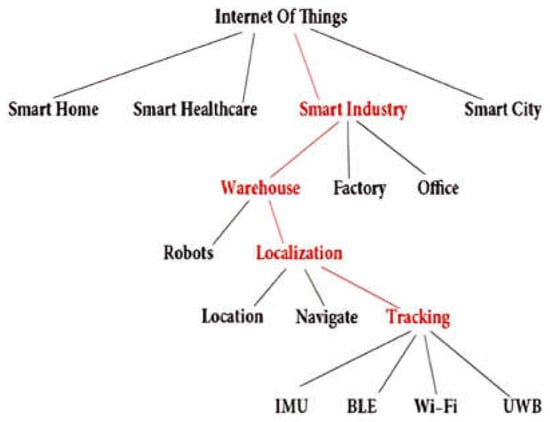

The Internet of Things (IoT) is transforming industrial operations, particularly under Industry 4.0, by enabling real-time connectivity and automation. Accurate indoor localization is critical for warehouse management, where GPS-based solutions are ineffective due to signal obstruction. This paper presents a smart indoor positioning

[...] Read more.

The Internet of Things (IoT) is transforming industrial operations, particularly under Industry 4.0, by enabling real-time connectivity and automation. Accurate indoor localization is critical for warehouse management, where GPS-based solutions are ineffective due to signal obstruction. This paper presents a smart indoor positioning system (IPS) integrating Ultra-Wideband (UWB) sensors with Long Short-Term Memory (LSTM) neural networks and Kalman filtering, employing a tailored data fusion sequence and parameter optimization for real-time object tracking. The system was deployed in a 54 m² warehouse section on forklifts equipped with UWB modules and QR scanners. Experimental evaluation considered accuracy, reliability, and noise resilience in cluttered industrial conditions. Results, validated with RMSE, MAE, and standard deviation, demonstrate that the hybrid Kalman–LSTM model improves localization accuracy by up to 4% over baseline methods, outperforming conventional sensor fusion approaches. Comparative analysis with standard benchmarks highlights the system’s robustness under interference and its scalability for larger warehouse operations. These findings confirm that combining temporal pattern learning with advanced sensor fusion significantly enhances tracking precision. This research contributes a reproducible and adaptable framework for intelligent warehouse management, offering practical benefits aligned with Industry 4.0 objectives.
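To give a feel for the Kalman filtering component only, here is a minimal one-dimensional sketch that smooths noisy position readings under a static-state model. The function name `kalman_1d` and the noise parameters `q` and `r` are assumptions chosen for illustration; the paper's state model, its LSTM stage, and its fusion sequence are not described in this listing and are not reproduced here.

```python
def kalman_1d(measurements, q=1e-3, r=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a constant-position model.

    q: process noise variance (assumed), r: measurement noise variance (assumed),
    x0, p0: initial state estimate and its variance.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed static, so only the uncertainty grows.
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates
```

A tracked forklift would need at least a two-dimensional position-velocity state; the scalar version is kept deliberately small to show the predict and update steps.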

(This article belongs to the Special Issue Advanced Localization Solutions in IoT Smart Industry: Innovation, Challenges, and Applications)

Open Access Article

Classification of Drowsiness and Alertness States Using EEG Signals to Enhance Road Safety: A Comparative Analysis of Machine Learning Algorithms and Ensemble Techniques

by

Masoud Sistaninezhad, Saman Rajebi, Siamak Pedrammehr, Arian Shajari, Hussain Mohammed Dipu Kabir, Thuong Hoang, Stefan Greuter and Houshyar Asadi

Computers 2025, 14(12), 509; https://doi.org/10.3390/computers14120509 - 24 Nov 2025

Abstract

Drowsy driving is a major contributor to road accidents, as reduced vigilance degrades situational awareness and reaction control. Reliable assessment of alertness versus drowsiness can therefore support accident prevention. Key gaps remain in physiology-based detection, including robust identification of microsleep and transient vigilance shifts, sensitivity to fatigue-related changes, and resilience to motion-related signal artifacts; practical sensing solutions are also needed. Using Electroencephalogram (EEG) recordings from the MIT-BIH Polysomnography Database (18 records; >80 h of clinically annotated data), we framed wakefulness–drowsiness discrimination as a binary classification task. From each 30 s segment, we extracted 61 handcrafted features spanning linear, nonlinear, and frequency descriptors designed to be largely robust to signal-quality variations. Three classifiers were evaluated—k-Nearest Neighbors (KNN), Support Vector Machine (SVM), and Decision Tree (DT)—alongside a DT-based bagging ensemble. KNN achieved 99% training and 80.4% test accuracy; SVM reached 80.0% and 78.8%; and DT obtained 79.8% and 78.3%. Data standardization did not improve performance. The ensemble attained 100% training and 84.7% test accuracy. While these results indicate strong discriminative capability, the training–test gap suggests overfitting and underscores the need for validation on larger, more diverse cohorts to ensure generalizability. Overall, the findings demonstrate the potential of machine learning to identify vigilance states from EEG. We present an interpretable EEG-based classifier built on clinically scored polysomnography and discuss translation considerations; external validation in driving contexts is reserved for future work.

(This article belongs to the Special Issue AI for Humans and Humans for AI (AI4HnH4AI))

Open Access Article

NewsSumm: The World’s Largest Human-Annotated Multi-Document News Summarization Dataset for Indian English

by

Manish Motghare, Megha Agarwal and Avinash Agrawal

Computers 2025, 14(12), 508; https://doi.org/10.3390/computers14120508 - 23 Nov 2025

Abstract

The rapid growth of digital journalism has heightened the need for reliable multi-document summarization (MDS) systems, particularly in underrepresented, low-resource, and culturally distinct contexts. However, current progress is hindered by a lack of large-scale, high-quality non-Western datasets. Existing benchmarks—such as CNN/DailyMail, XSum, and

[...] Read more.

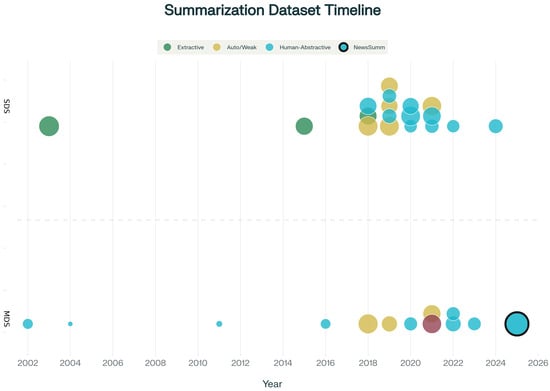

The rapid growth of digital journalism has heightened the need for reliable multi-document summarization (MDS) systems, particularly in underrepresented, low-resource, and culturally distinct contexts. However, current progress is hindered by a lack of large-scale, high-quality non-Western datasets. Existing benchmarks—such as CNN/DailyMail, XSum, and MultiNews—are limited by language, regional focus, or reliance on noisy, auto-generated summaries. We introduce NewsSumm, the largest human-annotated MDS dataset for Indian English, curated by over 14,000 expert annotators through the Suvidha Foundation. Spanning 36 Indian English newspapers from 2000 to 2025 and covering more than 20 topical categories, NewsSumm includes over 317,498 articles paired with factually accurate, professionally written abstractive summaries. We detail its robust collection, annotation, and quality control pipelines, and present extensive statistical, linguistic, and temporal analyses that underscore its scale and diversity. To establish benchmarks, we evaluate PEGASUS, BART, and T5 models on NewsSumm, reporting aggregate and category-specific ROUGE scores, as well as factual consistency metrics. All NewsSumm dataset materials are openly released via Zenodo. NewsSumm offers a foundational resource for advancing research in summarization, factuality, timeline synthesis, and domain adaptation for Indian English and other low-resource language settings.
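The ROUGE scores mentioned in the abstract measure n-gram overlap between a generated summary and a reference. The sketch below computes a simplified ROUGE-1 (unigram) precision, recall, and F1. The function name `rouge1` and the lowercase whitespace tokenization are assumptions; standard ROUGE tooling applies its own tokenization and optional stemming, so its numbers will differ.

```python
from collections import Counter


def rouge1(candidate, reference):
    """Simplified ROUGE-1: clipped unigram overlap between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # per-token minimum of the two counts
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}
```

With the candidate "the cat sat" and the reference "the cat sat on the mat", every candidate token is matched (precision 1.0) but only half of the reference is covered (recall 0.5).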

(This article belongs to the Special Issue Natural Language Processing (NLP) and Large Language Modelling (2nd Edition))

Open Access Article

Formal Analysis of Bakery-Based Mutual Exclusion Algorithms

by

Libero Nigro

Computers 2025, 14(12), 507; https://doi.org/10.3390/computers14120507 - 23 Nov 2025

Abstract

Lamport’s Bakery algorithm (LBA) is a general and elegant solution to the mutual exclusion (ME) problem posed by Dijkstra in 1965. Its correctness is usually argued by intuitive reasoning. LBA rests on an unbounded number of tickets, which prevents correctness assessment by model checking. Several variants have been proposed in the literature to bound the number of tickets used. This paper is based on a formal method centered on Uppaal for reasoning about general shared-memory ME algorithms. Where feasible, a model can be verified by the exhaustive model checker (MC) and/or by the statistical model checker (SMC) through stochastic simulations. To overcome the scalability problems of SMC, a model can be reduced to actors and simulated in Java. The paper formalizes LBA and demonstrates, through simulations, that it is correct with atomic and non-atomic memory registers. Then, some representative variants with bounded tickets are studied; some prove to be correct with atomic registers, while others are confirmed correct under atomic or non-atomic registers.
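For readers unfamiliar with the algorithm, the following is a minimal thread-based sketch of the classic Bakery entry and exit protocol. It is an illustration only, not the Uppaal or actor model from the paper: the function name `run_bakery`, the shared counter used to expose violations, and the `time.sleep(0)` yields in the busy-wait loops are assumptions of this example, and it relies on the interpreter executing list reads and writes in program order.

```python
import threading
import time


def run_bakery(n_threads=3, iterations=100):
    """Run n_threads workers that increment a shared counter under Bakery locking."""
    choosing = [False] * n_threads
    number = [0] * n_threads  # tickets; they can grow without bound under contention
    shared = {"counter": 0}

    def lock(i):
        # Doorway: announce intent and take a ticket larger than any seen so far.
        choosing[i] = True
        number[i] = 1 + max(number)
        choosing[i] = False
        for j in range(n_threads):
            while choosing[j]:
                time.sleep(0)  # yield while thread j is still picking a ticket
            # Wait for every thread holding a smaller (ticket, id) pair.
            while number[j] != 0 and (number[j], j) < (number[i], i):
                time.sleep(0)

    def unlock(i):
        number[i] = 0

    def worker(i):
        for _ in range(iterations):
            lock(i)
            value = shared["counter"]  # deliberately non-atomic read-modify-write
            time.sleep(0)
            shared["counter"] = value + 1
            unlock(i)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["counter"]
```

If mutual exclusion holds, the returned counter equals `n_threads * iterations`; a lost update would make it smaller. A passing run is evidence rather than proof, which is why the paper turns to model checking.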

Open Access Article

Evaluating Deployment of Deep Learning Model for Early Cyberthreat Detection in On-Premise Scenario Using Machine Learning Operations Framework

by

Andrej Ralbovský, Ivan Kotuliak and Dennis Sobolev

Computers 2025, 14(12), 506; https://doi.org/10.3390/computers14120506 - 23 Nov 2025

Abstract

Modern on-premises threat detection increasingly relies on deep learning over network and system logs, yet organizations must balance infrastructure and resource constraints with maintainability and performance. We investigate how adopting MLOps influences deployment and runtime behavior of a recurrent-neural-network–based detector for malicious event

[...] Read more.

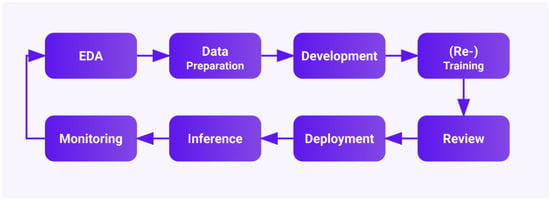

Modern on-premises threat detection increasingly relies on deep learning over network and system logs, yet organizations must balance infrastructure and resource constraints with maintainability and performance. We investigate how adopting MLOps influences the deployment and runtime behavior of a recurrent-neural-network–based detector for malicious event sequences. Our investigation includes a survey of modern open-source platforms to select a suitable candidate, its implementation on a two-node setup with a CPU-centric control server and a GPU worker, and a performance evaluation of a containerized MLOps-integrated setup vs. bare metal. For evaluation, we use four scenarios that cross the deployment model (bare metal vs. containerized) with two different versions of the software stack, using a sizable training corpus and a held-out inference subset representative of operational traffic. For training and inference, we measured execution time, CPU and RAM utilization, and peak GPU memory to find notable patterns or correlations providing insights for organizations adopting the on-premises-first approach. Our findings show that MLOps can be adopted even in resource-constrained environments without inherent performance penalties; thus, platform choice should be guided by operational concerns (reproducibility, scheduling, tracking), while performance tuning should prioritize pinning and validating the software stack, which has a surprisingly large impact on resource utilization and the execution process. Our study offers a reproducible blueprint for on-premises cyber-analytics and clarifies where optimization yields the greatest return.

(This article belongs to the Special Issue Using New Technologies in Cyber Security Solutions (3rd Edition))

Open Access Feature Paper Review

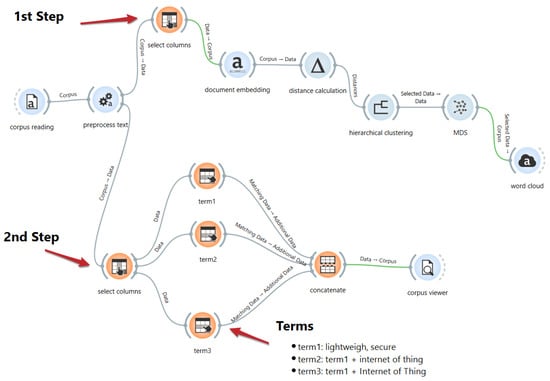

Lightweight Encryption Algorithms for IoT

by

Cláudio Silva, Nelson Tenório and Jorge Bernardino

Computers 2025, 14(12), 505; https://doi.org/10.3390/computers14120505 - 21 Nov 2025

Abstract

The exponential growth of the Internet of Things (IoT) has increased the demand for robust security solutions that are tailored to devices with limited resources. This paper presents a systematic review of recent literature on lightweight encryption algorithms designed to meet this challenge. Through an analysis of 22 distinct ciphers, the study identifies the main algorithms proposed and catalogues the key metrics used for their evaluation. The most common performance criteria are execution speed, memory usage, and energy consumption, while security is predominantly assessed using techniques such as differential and linear cryptanalysis, alongside statistical tests such as the avalanche effect. However, the most critical finding is the profound lack of standardized frameworks for both performance benchmarking and security validation. This methodological fragmentation severely hinders objective, cross-study comparisons, making evidence-based algorithm selection a significant challenge and impeding the development of verifiably secure IoT systems.
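The avalanche effect named among the statistical tests can be measured by flipping each input bit in turn and counting how many output bits change; a ratio near 0.5 is the usual target. The sketch below is a generic harness under stated assumptions: the names `bit_diff`, `avalanche_ratio`, and `sha256_stand_in` are hypothetical, and SHA-256 from the standard library is used purely as a stand-in primitive, since it is neither a lightweight cipher nor one of the 22 algorithms reviewed.

```python
import hashlib


def bit_diff(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def avalanche_ratio(func, message: bytes) -> float:
    """Average fraction of output bits that flip when one input bit is flipped."""
    if not message:
        raise ValueError("message must not be empty")
    base = func(message)
    total_bits = len(base) * 8
    flipped, trials = 0, 0
    for byte_idx in range(len(message)):
        for bit in range(8):
            mutated = bytearray(message)
            mutated[byte_idx] ^= 1 << bit  # flip exactly one input bit
            flipped += bit_diff(base, func(bytes(mutated)))
            trials += 1
    return flipped / (trials * total_bits)


def sha256_stand_in(data: bytes) -> bytes:
    """Stand-in primitive for the demo; replace with the cipher under test."""
    return hashlib.sha256(data).digest()
```

Passing the identity function instead shows the opposite extreme: each input flip changes exactly one output bit, so the ratio is `1 / (8 * len(message))`.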

Open Access Article

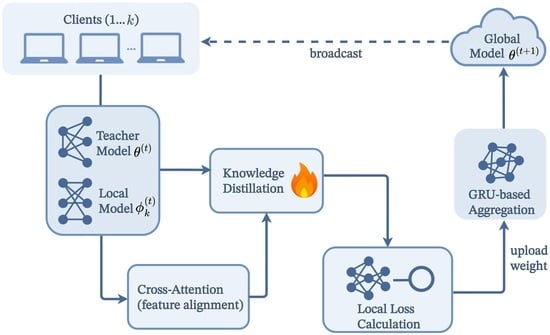

pFedKA: Personalized Federated Learning via Knowledge Distillation with Dual Attention Mechanism

by

Yuanhao Jin, Kaiqi Zhang, Chao Ma, Xinxin Cheng, Luogang Zhang and Hongguo Zhang

Computers 2025, 14(12), 504; https://doi.org/10.3390/computers14120504 - 21 Nov 2025

Abstract

Federated learning in heterogeneous data scenarios faces two key challenges. First, the conflict between global models and local personalization complicates knowledge transfer and leads to feature misalignment, hindering effective personalization for clients. Second, the lack of dynamic adaptation in standard federated learning makes it difficult to handle highly heterogeneous and changing client data, reducing the global model’s generalization ability. To address these issues, this paper proposes pFedKA, a personalized federated learning framework integrating knowledge distillation and a dual-attention mechanism. On the client-side, a cross-attention module dynamically aligns global and local feature spaces using adaptive temperature coefficients to mitigate feature misalignment. On the server-side, a Gated Recurrent Unit-based attention network adaptively adjusts aggregation weights using cross-round historical states, providing more robust aggregation than static averaging in heterogeneous settings. Experimental results on CIFAR-10, CIFAR-100, and Shakespeare datasets demonstrate that pFedKA converges faster and with greater stability in heterogeneous scenarios. Furthermore, it significantly improves personalization accuracy compared to state-of-the-art personalized federated learning methods. Additionally, we demonstrate privacy guarantees by integrating pFedKA with DP-SGD, showing comparable privacy protection to FedAvg while maintaining high personalization accuracy.
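The knowledge distillation ingredient can be illustrated with the standard temperature-scaled formulation: teacher and student logits are softened with a temperature, and the student is penalized by the KL divergence between the two distributions. This is a generic sketch with a fixed temperature, not pFedKA's adaptive coefficients or attention modules; the function names and the temperature-squared scaling (a common convention) are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl
```

A higher temperature flattens both distributions, which exposes more of the teacher's relative preferences among non-target classes to the student.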

(This article belongs to the Special Issue Mobile Fog and Edge Computing)

Open Access Article

MacHa: Multi-Aspect Controllable Text Generation Based on a Hamiltonian System

by

Delong Xu, Min Lin and Yurong Wang

Computers 2025, 14(12), 503; https://doi.org/10.3390/computers14120503 - 21 Nov 2025

Abstract

Multi-aspect controllable text generation can be viewed as an extension and combination of controllable text generation tasks. It requires generating fluent text while controlling multiple attributes at once (e.g., negative sentiment together with an environmental-protection topic). Current research either estimates compact latent spaces for multiple attributes, which reduces interference between attributes but makes it difficult to control the balance among them, or controls that balance at the cost of complex searches during decoding. To address these issues, we propose a new method called MacHa, which trains an attribute latent space using multiple loss functions and establishes a mapping between the attribute latent space and attributes in sentences using a VAE network. An energy model based on the Hamiltonian function is defined in the latent space to control the balance between multiple attributes. Subsequently, to reduce the complexity of the decoding process, we draw samples using the RL sampling method and send them to the VAE decoder to generate the final text. The experimental results show that, after balancing multiple attributes, MacHa generates text with higher accuracy than the baseline models and decodes quickly.

(This article belongs to the Special Issue Natural Language Processing (NLP) and Large Language Modelling (2nd Edition))

Open Access Article

Survey on Monocular Metric Depth Estimation

by

Jiuling Zhang, Yurong Wu and Huilong Jiang

Computers 2025, 14(11), 502; https://doi.org/10.3390/computers14110502 - 20 Nov 2025

Abstract

Monocular metric depth estimation (MMDE) aims to generate depth maps with an absolute metric scale from a single RGB image, which enables accurate spatial understanding, 3D reconstruction, and autonomous navigation. Unlike conventional monocular depth estimation that predicts only relative depth, MMDE maintains geometric consistency across frames and supports reliable integration with visual SLAM, high-precision 3D modeling, and novel view synthesis. This survey provides a comprehensive review of MMDE, tracing its evolution from geometry-based formulations to modern learning-based frameworks. The discussion emphasizes the importance of datasets, distinguishing metric datasets that supply absolute ground-truth depth from relative datasets that facilitate ordinal or normalized depth learning. Representative datasets, including KITTI, NYU-Depth, ApolloScape, and TartanAir, are analyzed with respect to scene composition, sensor modality, and intended application domain. Methodological progress is examined across several dimensions, including model architecture design, domain generalization, structural detail preservation, and the integration of synthetic data that complements real-world captures. Recent advances in patch-based inference, generative modeling, and loss design are compared to reveal their respective advantages and limitations. By summarizing the current landscape and outlining open research challenges, this work establishes a clear reference framework that supports future studies and facilitates the deployment of MMDE in real-world vision systems requiring precise and robust metric depth estimation.

Open Access Article

AutoQALLMs: Automating Web Application Testing Using Large Language Models (LLMs) and Selenium

by

Sindhupriya Mallipeddi, Muhammad Yaqoob, Javed Ali Khan, Tahir Mehmood, Alexios Mylonas and Nikolaos Pitropakis

Computers 2025, 14(11), 501; https://doi.org/10.3390/computers14110501 - 18 Nov 2025

Abstract

Modern web applications change frequently in response to user and market needs, making their testing challenging. Manual testing and automation methods often struggle to keep up with these changes. We propose an automated testing framework, AutoQALLMs, that utilises various LLMs (Large Language Models), including GPT-4, Claude, and Grok, alongside Selenium WebDriver, BeautifulSoup, and regular expressions. This framework enables one-click testing, where users provide a URL as input and receive test results as output, thus eliminating the need for human intervention. It extracts HTML (Hypertext Markup Language) elements from the webpage and uses the LLM APIs to generate Selenium-based test scripts. Regular expressions enhance the clarity and maintainability of these scripts. The scripts are executed automatically, and the results, such as pass/fail status and error details, are displayed to the tester. This streamlined input–output process forms the core of the AutoQALLMs framework. We evaluated the framework on 30 websites. The results show that the system drastically reduces the time needed to create test cases, achieves broad test coverage (96%) with the Claude 4.5 LLM, which is competitive with manual scripts (98%), and allows for rapid regeneration of tests in response to changes in webpage structure. Feedback from software testing experts confirmed that the proposed AutoQALLMs method for automated web application testing enables faster regression testing, reduces manual effort, and maintains reliable test execution. However, some limitations remain in handling complex page changes and validation. Although Claude 4.5 achieved slightly higher test coverage in the comparative evaluation, GPT-4 was selected as the default model for AutoQALLMs due to its cost-efficiency, reproducibility, and stable script generation across diverse websites. Future improvements may focus on increasing accuracy, adding self-healing techniques, and expanding to more complex testing scenarios.

Full article

(This article belongs to the Special Issue Best Practices, Challenges and Opportunities in Software Engineering)

Open Access Article

Deep Learning-Based Citrus Canker and Huanglongbing Disease Detection Using Leaf Images

by

Maryjose Devora-Guadarrama, Benjamín Luna-Benoso, Antonio Alarcón-Paredes, Jose Cruz Martínez-Perales and Úrsula Samantha Morales-Rodríguez

Computers 2025, 14(11), 500; https://doi.org/10.3390/computers14110500 - 17 Nov 2025

Abstract

Early detection of plant diseases is key to ensuring food production, reducing economic losses, minimizing the use of agrochemicals, and maintaining the sustainability of the agricultural sector. Citrus plants, an important source of vitamin C, fiber, and antioxidants, are among the world’s most significant fruit crops but face threats such as canker and Huanglongbing (HLB), incurable diseases that require management strategies to mitigate their impact. Manual diagnosis, although common, is imprecise, slow, and costly; therefore, efficient alternatives are emerging to identify diseases from early stages using Artificial Intelligence techniques. In this study, we evaluated four deep learning models, specifically the convolutional neural networks DenseNet121, ResNet50, EfficientNetB0, and MobileNetV2, to detect canker and HLB in citrus leaf images. We applied preprocessing and data-augmentation techniques; transfer learning via selective fine-tuning; stratified k-fold cross-validation; regularization methods such as dropout and weight decay; and hyperparameter-optimization techniques. The models were evaluated by the loss value and by metrics derived from the confusion matrix, including accuracy, recall, and F1-score. The best-performing model was EfficientNetB0, which achieved an average accuracy of 99.88% and the lowest loss value of 0.0058 using cross-entropy as the loss function. Since EfficientNetB0 is a lightweight model, the results show that lightweight models can achieve favorable performance compared to robust models and can therefore be useful for disease detection in the agricultural sector using portable devices or drones for field monitoring. The high accuracy obtained is mainly because only two diseases were considered; consequently, these results may not hold for a database that includes a larger number of diseases.

Full article

(This article belongs to the Section AI-Driven Innovations)

Open Access Article

Machine Learning Models for Subsurface Pressure Prediction: A Data Mining Approach

by

Muhammad Raiees Amjad, Rohan Benjamin Varghese and Tehmina Amjad

Computers 2025, 14(11), 499; https://doi.org/10.3390/computers14110499 - 17 Nov 2025

Abstract

Precise pore pressure prediction is essential for safe and effective drilling; however, the nonlinear and heterogeneous nature of the subsurface strata makes it extremely challenging. Conventional physics-based methods are not capable of handling this nonlinearity and variation. Recently, machine learning (ML) methods have been deployed by researchers to enhance prediction performance. These methods are often highly domain-specific and produce good results for the data they are trained on but struggle to generalize to unseen data. This study introduces a Hybrid Meta-Ensemble (HME), a meta-model framework, as a novel data mining approach that applies ML methods and ensemble learning to well log data for pore pressure prediction. The study first trains five baseline models, namely a Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Deep Feedforward Neural Network (DFNN), Random Forest (RF), and Extreme Gradient Boost (XGBoost), to capture sequential and nonlinear relationships for pore pressure prediction. The stacked predictions are further improved through a meta learner that adaptively reweights them according to subsurface heterogeneity, effectively strengthening the ability of ensembles to generalize across diverse geological settings. The experimentation is performed on well log data from four wells located in the Potwar Basin, which is one of Pakistan’s principal oil- and gas-producing regions. The proposed HME achieved an R² value of 0.93, outperforming the individual base models. Using the HME approach, the model effectively captures rock heterogeneity by learning optimal nonlinear interactions among the base models, leading to more accurate pressure predictions. Results show that integrating deep learning with robust meta learning substantially improves the accuracy of pore pressure prediction.

Full article

(This article belongs to the Special Issue Recent Advances in Data Mining: Methods, Trends, and Emerging Applications)

Open Access Article

An Embedded Convolutional Neural Network Model for Potato Plant Disease Classification

by

Laila Hammam, Hany Ayad Bastawrous, Hani Ghali and Gamal A. Ebrahim

Computers 2025, 14(11), 498; https://doi.org/10.3390/computers14110498 - 16 Nov 2025

Abstract

Globally, potatoes are one of the major crops that significantly contribute to food security; hence, the field of machine learning has opened the gate for many advances in plant disease detection. For real-time agricultural applications, real-time data processing has been found to be challenging because of the limitations and constraints imposed by hardware platforms. However, such challenges can be handled by deploying simple and optimized AI models that meet the need for accurate data classification while taking hardware resource limitations into consideration. Hence, the purpose of this study is to implement a customized and optimized convolutional neural network model for deployment on hardware platforms to classify both potato early blight and potato late blight diseases. Lastly, a thorough comparison between the embedded and PC simulation implementations was conducted for three models: the implemented CNN model, VGG16, and ResNet50. Raspberry Pi 3 was chosen for the embedded implementation in the intermediate stage and NVIDIA Jetson Nano was chosen for the final stage. The suggested model significantly outperformed both the VGG16 and ResNet50 CNNs, as evidenced by the inference time, number of FLOPs, and CPU data usage, with an accuracy of 95% on predicting unseen data.

Full article

Open Access Review

Arabic Natural Language Processing (NLP): A Comprehensive Review of Challenges, Techniques, and Emerging Trends

by

Abdulaziz M. Alayba

Computers 2025, 14(11), 497; https://doi.org/10.3390/computers14110497 - 15 Nov 2025

Abstract

Arabic natural language processing (NLP) has garnered significant attention in recent years due to the growing demand for automated text and Arabic-based intelligent systems, in addition to digital transformation in the Arab world. However, the unique linguistic characteristics of Arabic, including its rich morphology, diverse dialects, and complex syntax, pose significant challenges to NLP researchers. This paper provides a comprehensive review of the main linguistic challenges inherent in Arabic NLP, such as morphological complexity, diacritics and orthography issues, ambiguity, and dataset limitations. Furthermore, it surveys the major computational techniques employed in tokenisation and normalisation, named entity recognition, part-of-speech tagging, sentiment analysis, text classification, summarisation, question answering, and machine translation. In addition, it discusses the rapid rise of large language models and their transformative impact on Arabic NLP.

Full article

(This article belongs to the Special Issue Natural Language Processing (NLP) and Large Language Modelling (2nd Edition))

Open Access Article

Recognizing Cattle Behaviours by Spatio-Temporal Reasoning Between Key Body Parts and Environmental Context

by

Fangzheng Qi, Zhenjie Hou, En Lin, Xing Li, Jiuzhen Liang and Wenguang Zhang

Computers 2025, 14(11), 496; https://doi.org/10.3390/computers14110496 - 13 Nov 2025

Abstract

The accurate recognition of cattle behaviours is crucial for improving animal welfare and production efficiency in precision livestock farming. However, existing methods pay limited attention to recognising behaviours under occlusion or those involving subtle interactions between cattle and environmental objects in group farming scenarios. To address this limitation, we propose a novel spatio-temporal feature extraction network that explicitly models the associative relationships between key body parts of cattle and environmental factors, thereby enabling precise behaviour recognition. Specifically, the proposed approach first employs a spatio-temporal perception network to extract discriminative motion features of key body parts. Subsequently, a spatio-temporal relation integration module with metric learning is introduced to adaptively quantify the association strength between cattle features and environmental elements. Finally, a spatio-temporal enhancement network is utilised to further optimise the learned interaction representations. Experimental results on a public cattle behaviour dataset demonstrate that our method achieves a state-of-the-art mean average precision (mAP) of 87.19%, outperforming the advanced SlowFast model by 6.01 percentage points. Ablation studies further confirm the synergistic effectiveness of each module, particularly in recognising behaviours that rely on environmental interactions, such as drinking and grooming. This study provides a practical and reliable solution for intelligent cattle behaviour monitoring and highlights the significance of relational reasoning in understanding animal behaviours within complex environments.

Full article

(This article belongs to the Topic AI, Deep Learning, and Machine Learning in Veterinary Science Imaging)

Open Access Article

GrowMore: Adaptive Tablet-Based Intervention for Education and Cognitive Rehabilitation in Children with Mild-to-Moderate Intellectual Disabilities

by

Abdullah, Nida Hafeez, Kinza Sardar, Fatima Uroosa, Zulaikha Fatima, Rolando Quintero Téllez and José Luis Oropeza Rodríguez

Computers 2025, 14(11), 495; https://doi.org/10.3390/computers14110495 - 13 Nov 2025

Abstract

Providing equitable, high-quality education to all children, including those with intellectual disabilities (ID), remains a critical global challenge. Traditional learning environments often fail to address the unique cognitive needs of children with mild and moderate ID. In response, this study explores the potential of tablet-based game applications to enhance educational outcomes through an interactive, engaging, and accessible digital platform. The proposed solution, GrowMore, is a tablet-based educational game specifically designed for children aged 8 to 12 with mild intellectual disabilities. The application integrates adaptive learning strategies, vibrant visuals, and interactive feedback mechanisms to foster improvements in object recognition, color identification, and counting skills. Additionally, the system supports cognitive rehabilitation by enhancing attention, working memory, and problem-solving abilities, which caregivers reported transferring to daily functional tasks. The system’s usability was rigorously evaluated using quality standards, focusing on effectiveness, efficiency, and user satisfaction. Experimental results demonstrate that approximately 88% of participants were able to correctly identify learning elements after engaging with the application, with notable improvements in attention span and learning retention. Informal interviews with parents further validated the positive cognitive, behavioral, and rehabilitative impact of the application. These findings underscore the value of digital game-based learning tools in special education and highlight the need for continued development of inclusive educational technologies.

Full article

(This article belongs to the Special Issue Advances in Game-Based Learning, Gamification in Education and Serious Games)

Topics

Topic in

Applied Sciences, Computers, Electronics, JSAN, Technologies

Emerging AI+X Technologies and Applications

Topic Editors: Byung-Seo Kim, Hyunsik Ahn, Kyu-Tae Lee

Deadline: 31 December 2025

Topic in

AI, Computers, Electronics, Information, MAKE, Signals

Recent Advances in Label Distribution Learning

Topic Editors: Xin Geng, Ning Xu, Liangxiao Jiang

Deadline: 31 January 2026

Topic in

AI, Computers, Education Sciences, Societies, Future Internet, Technologies

AI Trends in Teacher and Student Training

Topic Editors: José Fernández-Cerero, Marta Montenegro-Rueda

Deadline: 11 March 2026

Topic in

Applied Sciences, Computers, JSAN, Technologies, BDCC, Sensors, Telecom, Electronics

Electronic Communications, IOT and Big Data, 2nd Volume

Topic Editors: Teen-Hang Meen, Charles Tijus, Cheng-Chien Kuo, Kuei-Shu Hsu, Jih-Fu Tu

Deadline: 31 March 2026

Special Issues

Special Issue in

Computers

Emerging Trends in Network Security and Applied Cryptography

Guest Editors: Hai Liu, Feng Tian

Deadline: 30 November 2025

Special Issue in

Computers

Advances in Failure Detection and Diagnostic Strategies: Enhancing Reliability and Safety

Guest Editor: Rafael Palacios

Deadline: 31 December 2025

Special Issue in

Computers

Wireless Sensor Networks in IoT

Guest Editors: Shaolin Liao, Jinxin Li, Tiaojie Xiao

Deadline: 31 December 2025

Special Issue in

Computers

Transformative Approaches in Education: Harnessing AI, Augmented Reality, and Virtual Reality for Innovative Teaching and Learning

Guest Editor: Stamatios Papadakis

Deadline: 31 December 2025