Detection of the Altitude and On-the-Ground Objects Using 77-GHz FMCW Radar Onboard Small Drones

Abstract

1. Introduction

1.1. Drone Sensor Systems

- GPS (Global Positioning System) sensors are used to detect the geographical position of the vehicle by evaluating signals received from multiple satellites.

- An IMU (Inertial Measurement Unit) uses gyroscopes, accelerometers, and magnetometers to estimate the linear velocity and attitude of the vehicle.

- A compass is another type of sensor that uses the magnetic field of the Earth to obtain the orientation of the vehicle.

- A barometric pressure sensor estimates the altitude of the vehicle from the atmospheric pressure.

- Pulse radars emit short pulses and then wait for a longer interval so that echoes from more distant objects can arrive before the next pulse is sent. The distance to an object is determined from the time delay between the transmitted and received pulses.

- Continuous wave (CW) radars radiate radio signals continuously at a constant frequency. Reception of a signal at the frequency of interest indicates the presence of an object. Since objects in motion cause a Doppler frequency shift in the received signal, the radial velocity of the object can be measured. The distance to the object, however, cannot be determined by this method alone, because the signal is transmitted continuously and does not change over time.

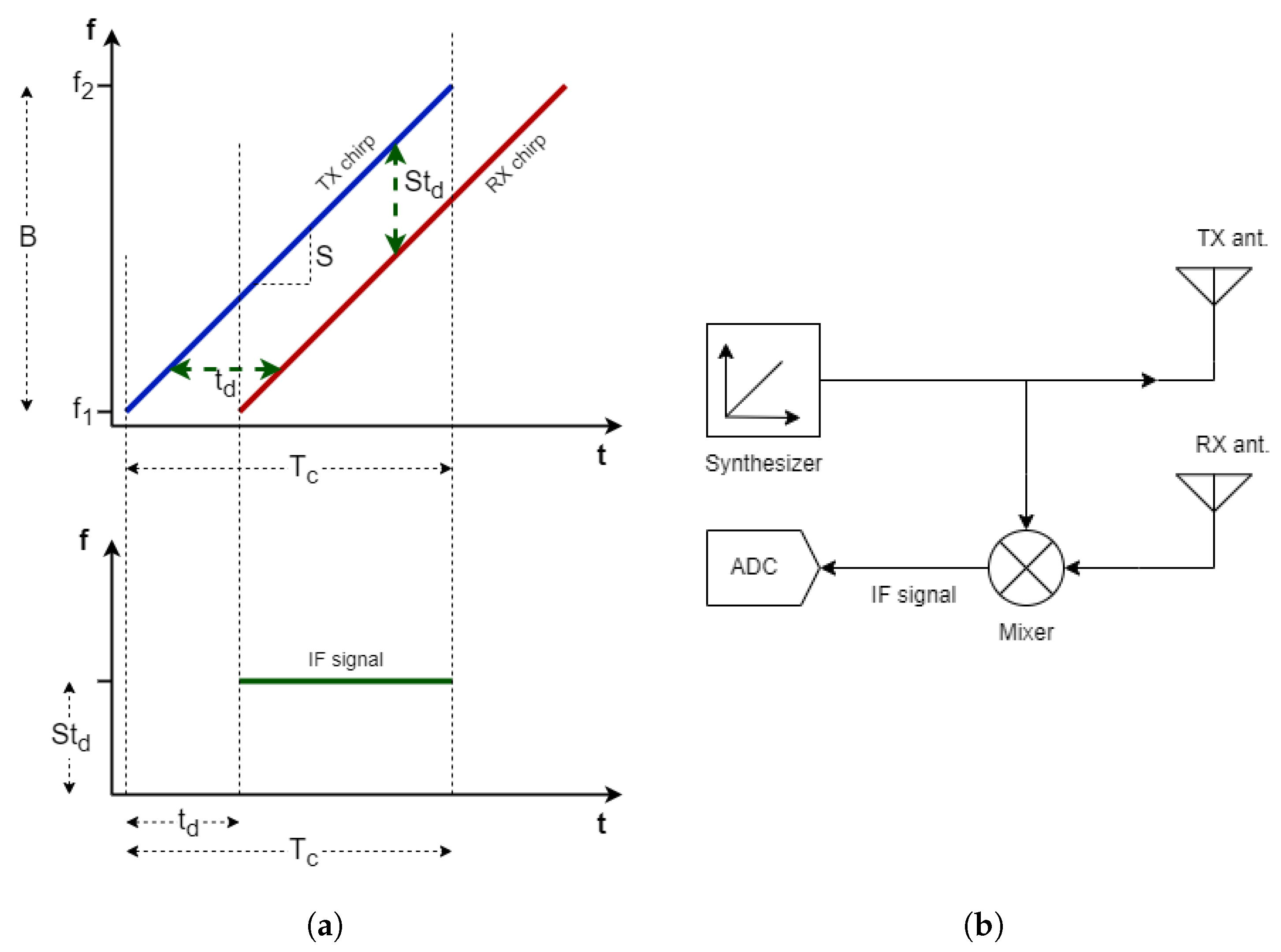

- Frequency modulated continuous wave (FMCW) radars modulate the frequency of the transmitted signal over time, which allows the distance to objects to be measured. The velocity of objects can also be determined by comparing successive chirps or by using a triangular modulation scheme.
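
The three radar principles listed above can be illustrated numerically. The following is a minimal Python sketch, not taken from the paper: the function names and the example inputs (a 100 ns echo delay, a 1 kHz Doppler shift at a 77 GHz carrier, and a 1 MHz beat frequency with a 50 MHz/µs chirp slope) are assumptions chosen only for illustration, and the formulas are the standard monostatic relations.

```python
C = 299_792_458.0  # speed of light in m/s


def pulse_range(delay_s: float) -> float:
    """Pulse radar: range from the round-trip delay of an echo."""
    return C * delay_s / 2.0


def doppler_radial_velocity(doppler_hz: float, carrier_hz: float) -> float:
    """CW radar: radial velocity from the Doppler shift of the carrier."""
    return doppler_hz * C / (2.0 * carrier_hz)


def fmcw_range(beat_hz: float, slope_hz_per_s: float) -> float:
    """FMCW radar: range from the beat frequency of a linear chirp."""
    return C * beat_hz / (2.0 * slope_hz_per_s)


if __name__ == "__main__":
    # Illustrative inputs only (assumed, not measured values).
    print(f"pulse range:     {pulse_range(100e-9):.2f} m")
    print(f"radial velocity: {doppler_radial_velocity(1e3, 77e9):.2f} m/s")
    print(f"FMCW range:      {fmcw_range(1e6, 50e12):.2f} m")
```

Note that the CW function has no range counterpart: with a constant transmit frequency there is no delay-dependent quantity to measure, which is the limitation the FMCW chirp slope removes.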

1.2. FMCW Radars and Applications

2. FMCW Radar System

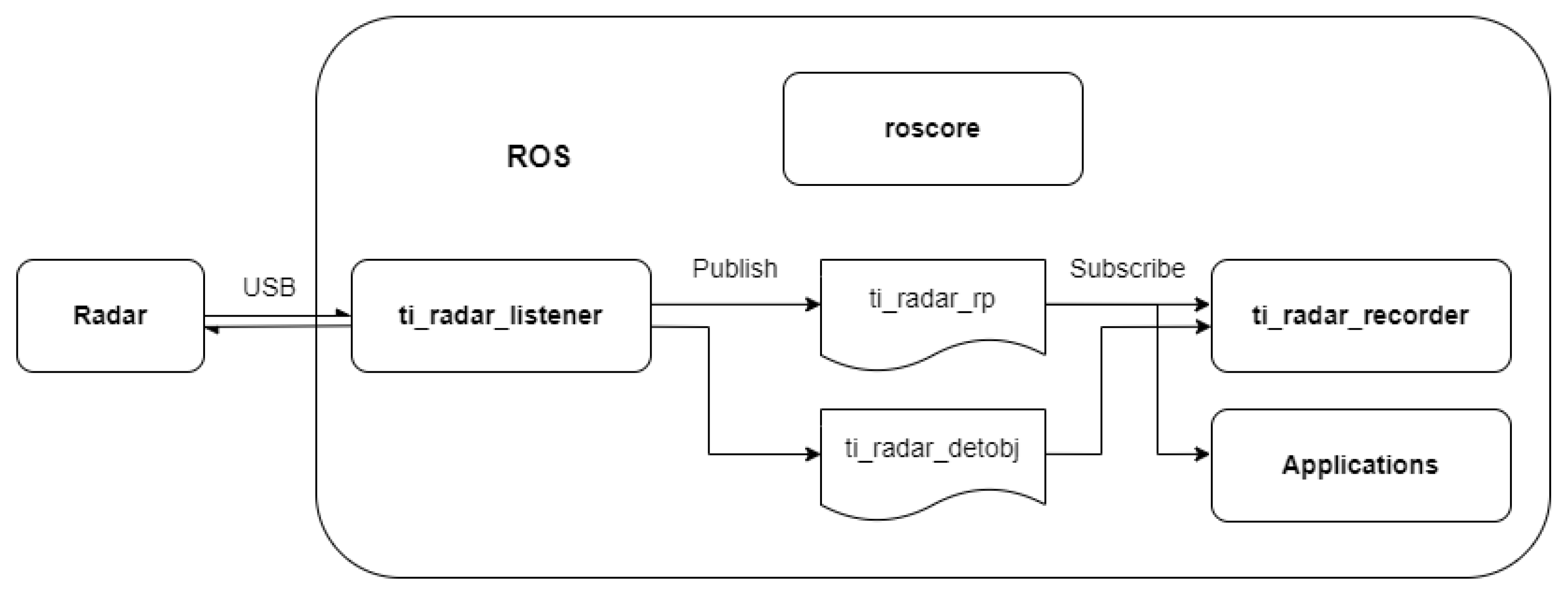

2.1. Radar System Architecture

- extracting radar parameters from a configuration file,

- configuring radar parameters,

- starting/stopping radar measurements,

- publishing received radar data to ROS,

- postprocessing the radar data,

- storing the data for later processing.

2.2. FMCW Radar Theory

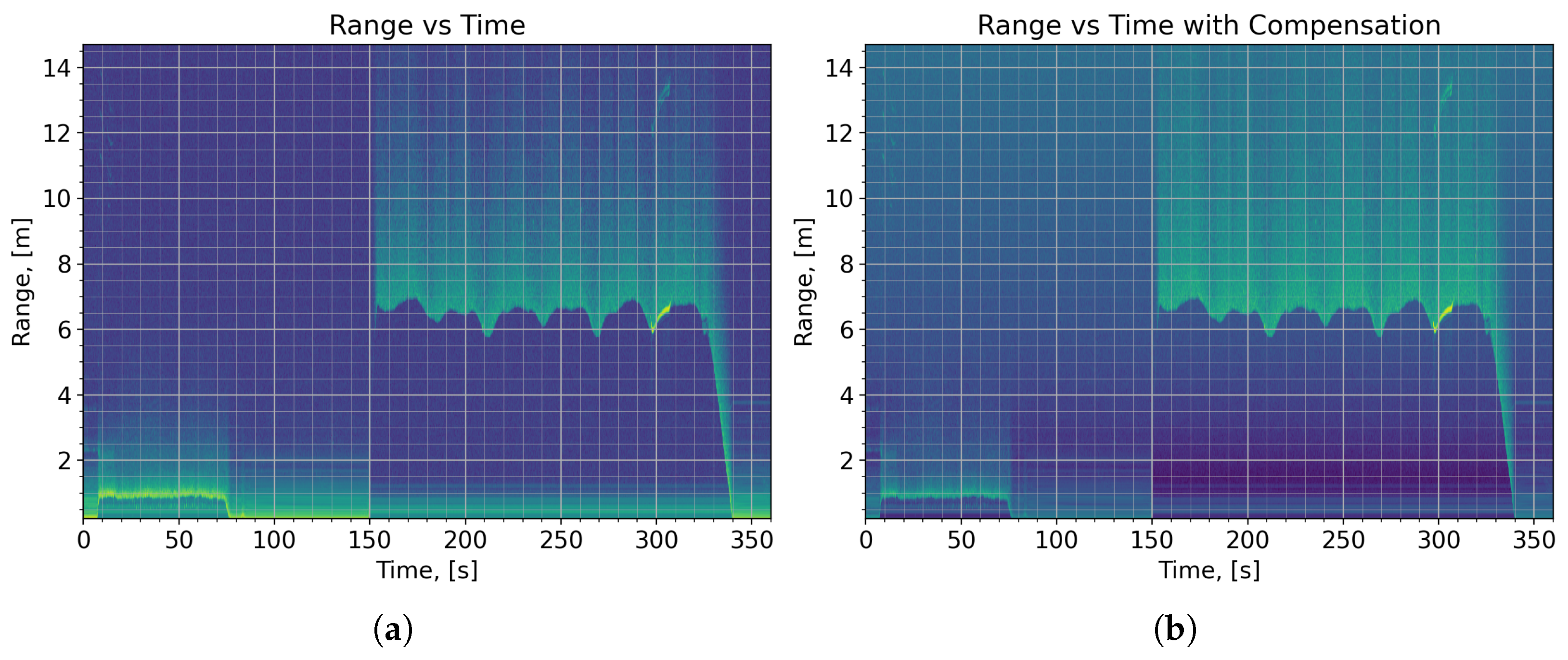

2.3. Postprocessing

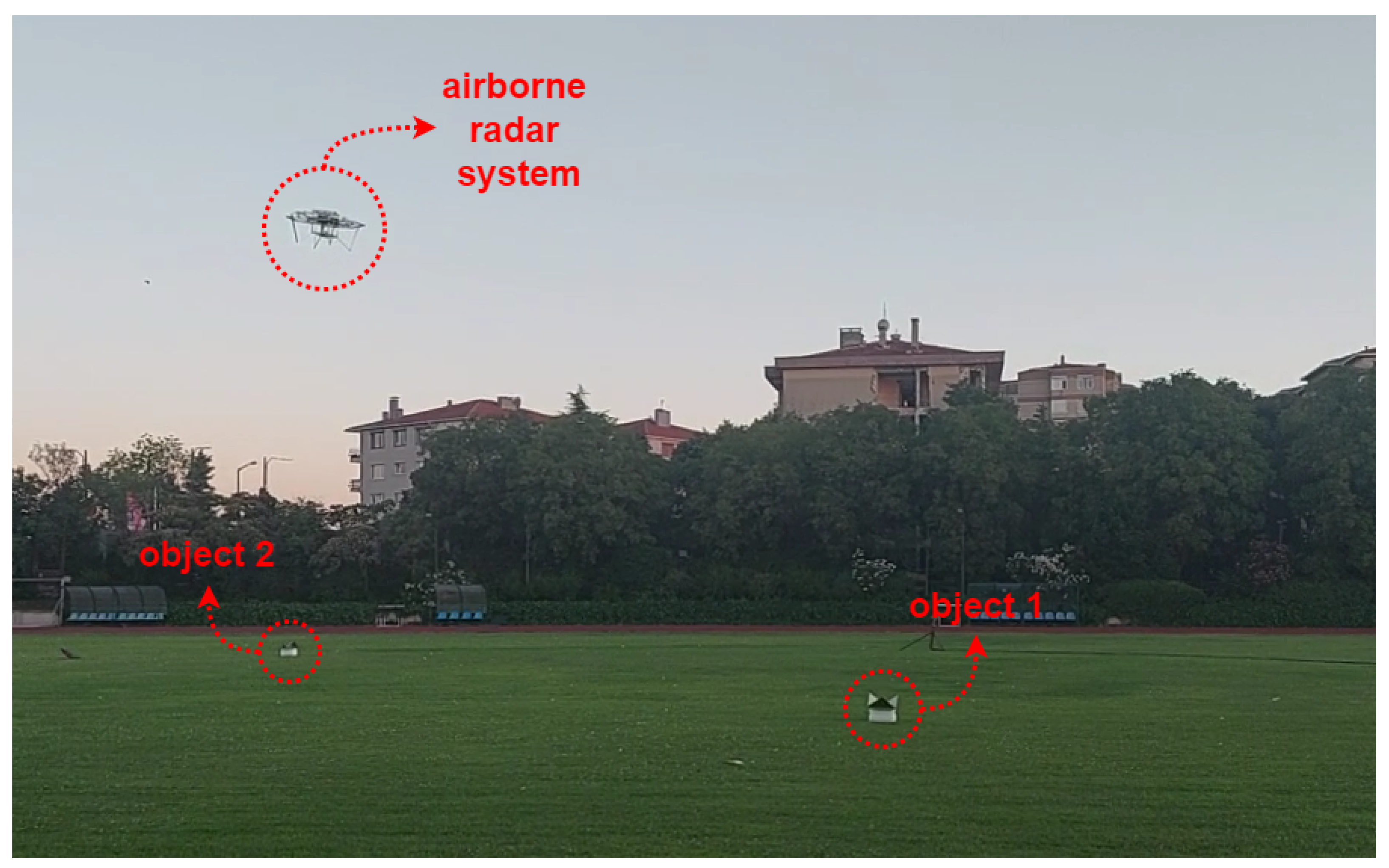

3. Experimental Results

3.1. Results and Discussion

3.2. Future Work

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Radar Parameter | Value |
|---|---|
| Radar Configuration | MIMO (3 TX/4 RX) |
| Modulation Scheme | Sawtooth |
| Start Frequency | 77 GHz |
| Useful Bandwidth | 3.4 GHz |
| Frame Period | 100 ms |
| Range Resolution | 0.044 m |
| Max. Unambiguous Range | 14.68 m |
| Velocity Resolution | 0.62 m/s |
| Max. Radial Velocity | 4.94 m/s |
| Samples per Chirp | 416 |
| Num. of Range Bins | 512 |
| Range Bin Width | 0.036 m |
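
Two of the radar parameters above can be cross-checked from the others. The sketch below is an illustration rather than the authors' calculation: it uses the standard relation between range resolution and sweep bandwidth, and it assumes that the 416 samples per chirp are zero-padded to the 512 range bins, which would explain why the bin width is smaller than the range resolution. The function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz: float) -> float:
    """Range resolution of a linear chirp: c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def padded_bin_width(resolution_m: float, n_samples: int, n_fft: int) -> float:
    """Range bin spacing when n_samples are zero-padded to an n_fft-point FFT.

    Assumption: zero-padding is the reason the bin count exceeds the sample count.
    """
    return resolution_m * n_samples / n_fft


if __name__ == "__main__":
    res = range_resolution(3.4e9)            # useful bandwidth from the table
    width = padded_bin_width(res, 416, 512)  # samples per chirp, range bins
    print(f"range resolution: {res:.3f} m")
    print(f"range bin width:  {width:.3f} m")
```

Under these assumptions the computed values round to the 0.044 m and 0.036 m listed in the table.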

| Radar Budget Term | Symbol | Value |
|---|---|---|
| Transmit Power | | +12 dBm |
| TX Antenna Gain | | +10.5 dBi |
| RCS | | +39.9 dBsm |
| RX Antenna Gain | | +10.5 dBi |
| Range | R | 7 m |
| Wavelength | | 3.9 mm |
| Received Power | | −42.1 dBm |
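
The received power in this budget can be reproduced from the other terms. The sketch below assumes the standard monostatic radar equation in decibel form with no additional system or atmospheric losses; the function and parameter names are hypothetical and not taken from the paper.

```python
import math


def received_power_dbm(pt_dbm: float, gt_dbi: float, gr_dbi: float,
                       rcs_dbsm: float, wavelength_m: float,
                       range_m: float) -> float:
    """Monostatic radar equation in dB form (assumed lossless):

    Pr = Pt + Gt + Gr + RCS + 20*log10(lambda) - 30*log10(4*pi) - 40*log10(R)
    """
    return (pt_dbm + gt_dbi + gr_dbi + rcs_dbsm
            + 20.0 * math.log10(wavelength_m)
            - 30.0 * math.log10(4.0 * math.pi)
            - 40.0 * math.log10(range_m))


if __name__ == "__main__":
    # Values from the radar budget table.
    pr = received_power_dbm(pt_dbm=12.0, gt_dbi=10.5, gr_dbi=10.5,
                            rcs_dbsm=39.9, wavelength_m=3.9e-3, range_m=7.0)
    print(f"received power: {pr:.1f} dBm")
```

With the tabulated inputs, this evaluates to approximately −42.1 dBm, in agreement with the listed received power.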

| Parameter | Value |
|---|---|
| Total Weight | 4.7 kg |
| Radar System Weight | ≤1.2 kg |
| Total Horizontal Distance | ∼178 m |
| Horizontal Velocity | ∼2.2 m/s |
| Takeoff Duration | 6 s |
| Preparation Duration | 10 s |
| Travel Duration | 82 s |
| Position Hold Duration | 80 s |
| Landing Duration | 13 s |
| Total Flight Duration | 191 s |
| Target Altitude | 7.0 m |
| Radar-Estimated Altitude | ∼5.7–7.2 m |
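
The flight figures above are internally consistent, as a short check shows. This sketch assumes that the horizontal distance is covered entirely during the travel phase; the variable names are illustrative only.

```python
# Phase durations in seconds, taken from the flight parameter table.
phases_s = {
    "takeoff": 6,
    "preparation": 10,
    "travel": 82,
    "position_hold": 80,
    "landing": 13,
}

total_s = sum(phases_s.values())

# Assumption: all horizontal motion happens during the travel phase.
horizontal_distance_m = 178.0
mean_speed_m_s = horizontal_distance_m / phases_s["travel"]

# Deviation of the radar altitude estimates from the 7.0 m target.
target_altitude_m = 7.0
low_m, high_m = 5.7, 7.2
deviation_m = (low_m - target_altitude_m, high_m - target_altitude_m)

if __name__ == "__main__":
    print(f"total flight duration: {total_s} s")
    print(f"mean horizontal speed: {mean_speed_m_s:.1f} m/s")
    print(f"altitude deviation:    {deviation_m[0]:+.1f} m to {deviation_m[1]:+.1f} m")
```

The summed phases give the 191 s total, and the mean speed rounds to the ∼2.2 m/s listed in the table.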

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Başpınar, Ö.O.; Omuz, B.; Öncü, A. Detection of the Altitude and On-the-Ground Objects Using 77-GHz FMCW Radar Onboard Small Drones. Drones 2023, 7, 86. https://doi.org/10.3390/drones7020086
