UASea: A Data Acquisition Toolbox for Improving Marine Habitat Mapping

Abstract

1. Introduction

2. Materials and Methods

2.1. UASea Toolbox

2.1.1. Weather Forecast Datasets

2.1.2. Ruleset

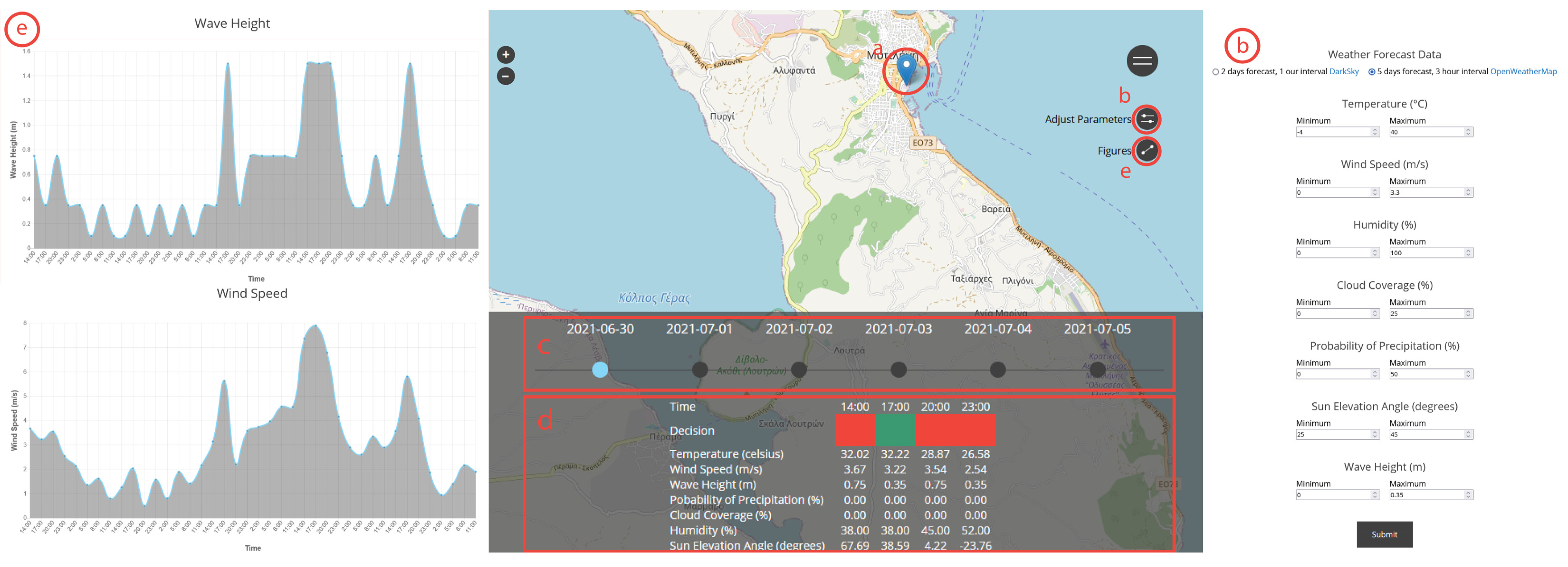

2.2. UASea Screens

2.3. Validation

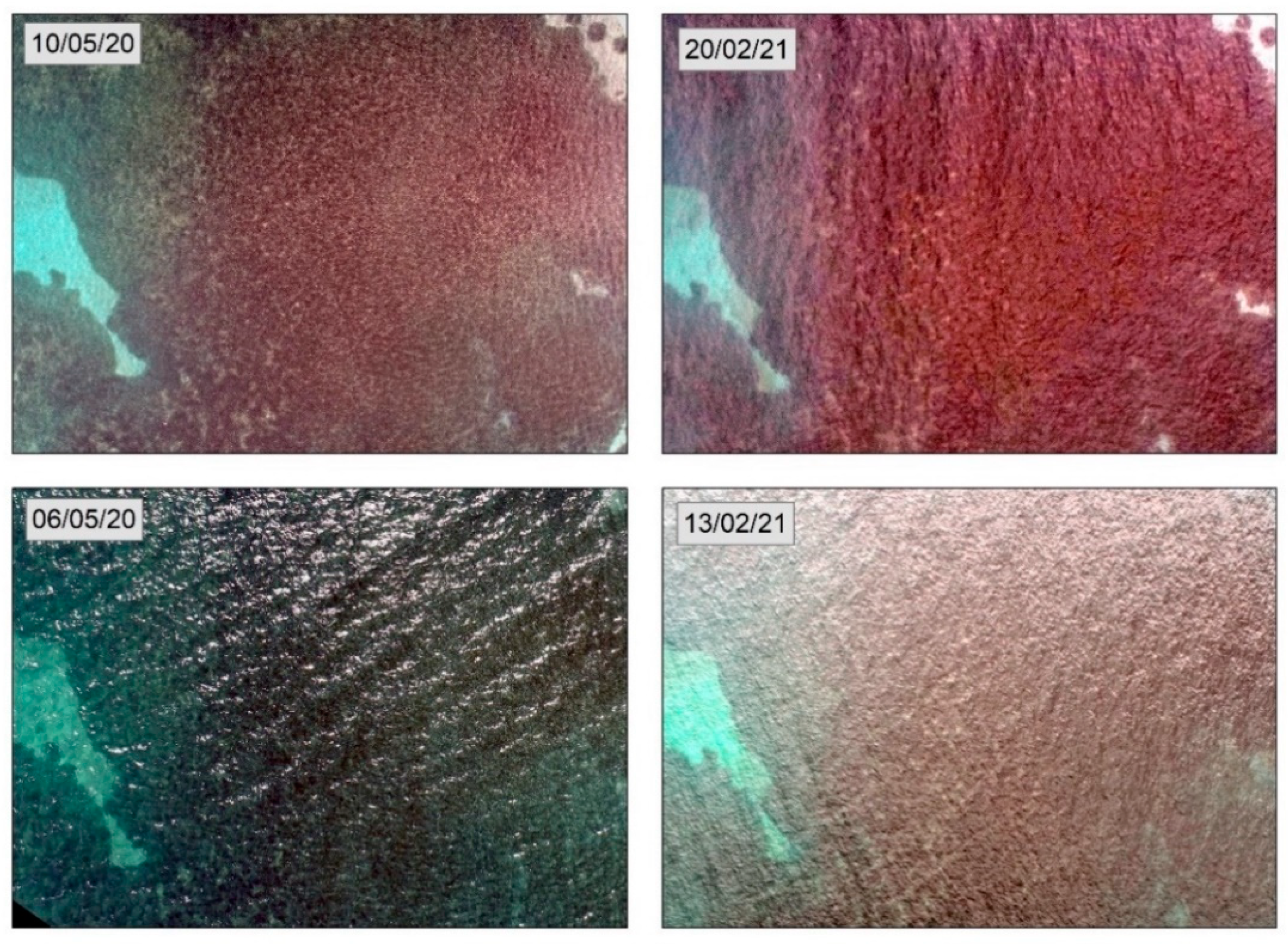

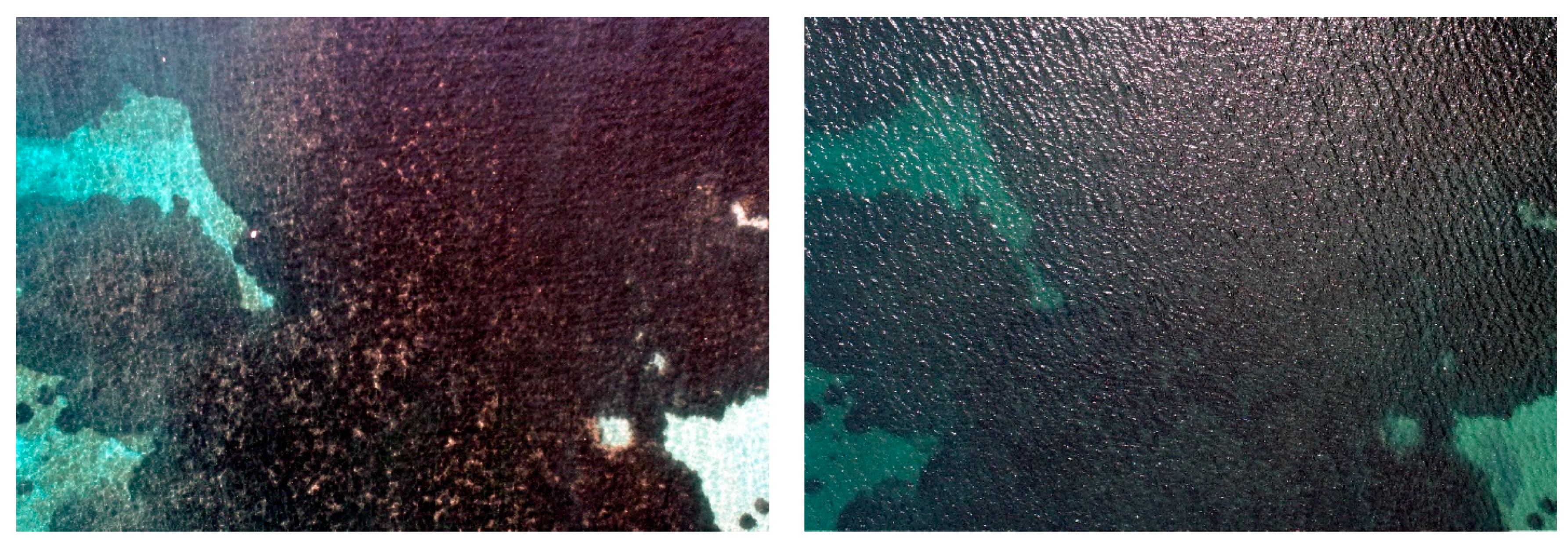

2.3.1. Image Quality Estimations (IQE)

2.3.2. Sunglint Detection

2.3.3. Turbidity Levels

2.3.4. Image Texture

2.3.5. Image Naturalness

2.3.6. Correlation Analysis

3. Results

3.1. UAS Surveys

3.2. Validation Results

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Variables | Thresholds |
|---|---|
| Temperature (degrees Celsius) | −4–40 |
| Humidity (%) | 0–50 |
| Cloud cover (%) | 0–25 |
| Probability of precipitation (%) | 0–50 |
| Wind speed (m/s) | 0–3.3 |
| Wave height (m) | 0–0.35 |
| Sun elevation angle (degrees) | 25–45 |
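
The thresholds in the table above can be read as a simple range check on a weather forecast. The sketch below is only an illustration and is not the toolbox's own code: the dictionary keys, the function names, the inclusive bounds, and the rule that every variable must pass are all assumptions made for this example.

```python
# Thresholds copied from the table above as (lower, upper) bounds.
# Treating both bounds as inclusive is an assumption of this sketch.
THRESHOLDS = {
    "temperature_c": (-4.0, 40.0),
    "humidity_pct": (0.0, 50.0),
    "cloud_cover_pct": (0.0, 25.0),
    "precipitation_probability_pct": (0.0, 50.0),
    "wind_speed_ms": (0.0, 3.3),
    "wave_height_m": (0.0, 0.35),
    "sun_elevation_deg": (25.0, 45.0),
}


def failed_variables(forecast):
    """Return the names of variables that are missing or outside their range."""
    failed = []
    for name, (low, high) in THRESHOLDS.items():
        value = forecast.get(name)
        if value is None or not (low <= value <= high):
            failed.append(name)
    return failed


def is_suitable(forecast):
    """Hypothetical rule: conditions are suitable only if every variable passes."""
    return not failed_variables(forecast)


# Hypothetical forecast values, chosen only to exercise the check.
calm_morning = {
    "temperature_c": 22.0,
    "humidity_pct": 40.0,
    "cloud_cover_pct": 10.0,
    "precipitation_probability_pct": 5.0,
    "wind_speed_ms": 2.0,
    "wave_height_m": 0.2,
    "sun_elevation_deg": 30.0,
}
windy_morning = dict(calm_morning, wind_speed_ms=5.0)

print(is_suitable(calm_morning))
print(failed_variables(windy_morning))
```

Returning the list of failing variables, rather than a single flag, makes it easy to report which condition rules out a given time slot.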

| Image | x1 | x2 | x3 | x4 | x1 Sort | x2 Sort | x3 Sort | x4 Sort | Overall Estimates |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.02 | 0.13 | 2.76 | 4.40 | 2 | 3 | 4 | 3 | 12 |
| 2 | 0.31 | 0.22 | 2.74 | 3.93 | 7 | 10 | 2 | 2 | 21 |
| 3 | 0.18 | 0.21 | 2.70 | 11.92 | 5 | 9 | 1 | 7 | 22 |
| 4 | 0.01 | 0.36 | 2.78 | 3.34 | 1 | 18 | 5 | 1 | 25 |
| 5 | 1.88 | 0.13 | 2.81 | 11.88 | 14 | 4 | 6 | 6 | 30 |
| 6 | 0.08 | 0.85 | 2.75 | 16.40 | 3 | 20 | 3 | 9 | 35 |
| 7 | 0.40 | 0.02 | 2.98 | 23.67 | 8 | 1 | 18 | 12 | 39 |
| 8 | 1.40 | 0.17 | 2.86 | 22.72 | 13 | 5 | 11 | 11 | 40 |
| 9 | 1.38 | 0.28 | 2.83 | 22.08 | 11 | 14 | 8 | 10 | 43 |
| 10 | 3.31 | 0.12 | 2.93 | 14.98 | 19 | 2 | 14 | 8 | 43 |
| 11 | 2.56 | 0.33 | 2.82 | 11.77 | 16 | 17 | 7 | 5 | 45 |
| 12 | 0.88 | 0.25 | 2.83 | 27.88 | 9 | 13 | 9 | 14 | 45 |
| 13 | 2.09 | 0.19 | 2.86 | 33.30 | 15 | 8 | 10 | 18 | 51 |
| 14 | 0.27 | 0.25 | 2.96 | 30.19 | 6 | 12 | 17 | 17 | 52 |
| 15 | 1.21 | 0.23 | 2.88 | 34.21 | 10 | 11 | 12 | 19 | 52 |
| 16 | 0.09 | 0.41 | 3.00 | 25.02 | 4 | 19 | 19 | 13 | 55 |
| 17 | 3.56 | 0.30 | 2.96 | 7.22 | 20 | 15 | 16 | 4 | 55 |
| 18 | 2.59 | 0.17 | 2.90 | 35.67 | 17 | 6 | 13 | 20 | 56 |
| 19 | 1.39 | 0.31 | 2.95 | 28.23 | 12 | 16 | 15 | 15 | 58 |
| 20 | 2.81 | 0.18 | 3.06 | 28.85 | 18 | 7 | 20 | 16 | 61 |
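
In the table above, each "Sort" column holds the ascending rank of an image within the corresponding x column, and the "Overall Estimates" column equals the sum of the four ranks (for image 1, 2 + 3 + 4 + 3 = 12). The sketch below illustrates that rank-sum calculation under stated assumptions: the function names are hypothetical, and ties are broken by input order, which may differ from the table wherever the displayed values are rounded (for example, the two x2 values of 0.13).

```python
def ordinal_ranks(values):
    """Rank values in ascending order starting at 1.

    Ties are broken by original position (Python's sort is stable);
    this tie rule is an assumption of the sketch.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        ranks[index] = rank
    return ranks


def overall_estimates(columns):
    """Sum the per-column ranks for each image (one list of values per column)."""
    per_column_ranks = [ordinal_ranks(column) for column in columns]
    return [sum(image_ranks) for image_ranks in zip(*per_column_ranks)]


# x1 and x4 values of images 1-3 from the table. With only three images the
# ranks run from 1 to 3, so they differ from the 20-image ranks in the table.
x1 = [0.02, 0.31, 0.18]
x4 = [4.40, 3.93, 11.92]

print(ordinal_ranks(x1))
print(overall_estimates([x1, x4]))

# Consistency check against the published ranks of image 1.
image_1_ranks = (2, 3, 4, 3)
print(sum(image_1_ranks))
```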

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Doukari, M.; Batsaris, M.; Topouzelis, K. UASea: A Data Acquisition Toolbox for Improving Marine Habitat Mapping. Drones 2021, 5, 73. https://doi.org/10.3390/drones5030073