A Human-Computer Control System Based on Intelligent Recognition of Eye Movements and Its Application in Wheelchair Driving

Abstract

1. Introduction

2. HCI Control System of Wheelchairs

2.1. System Overview

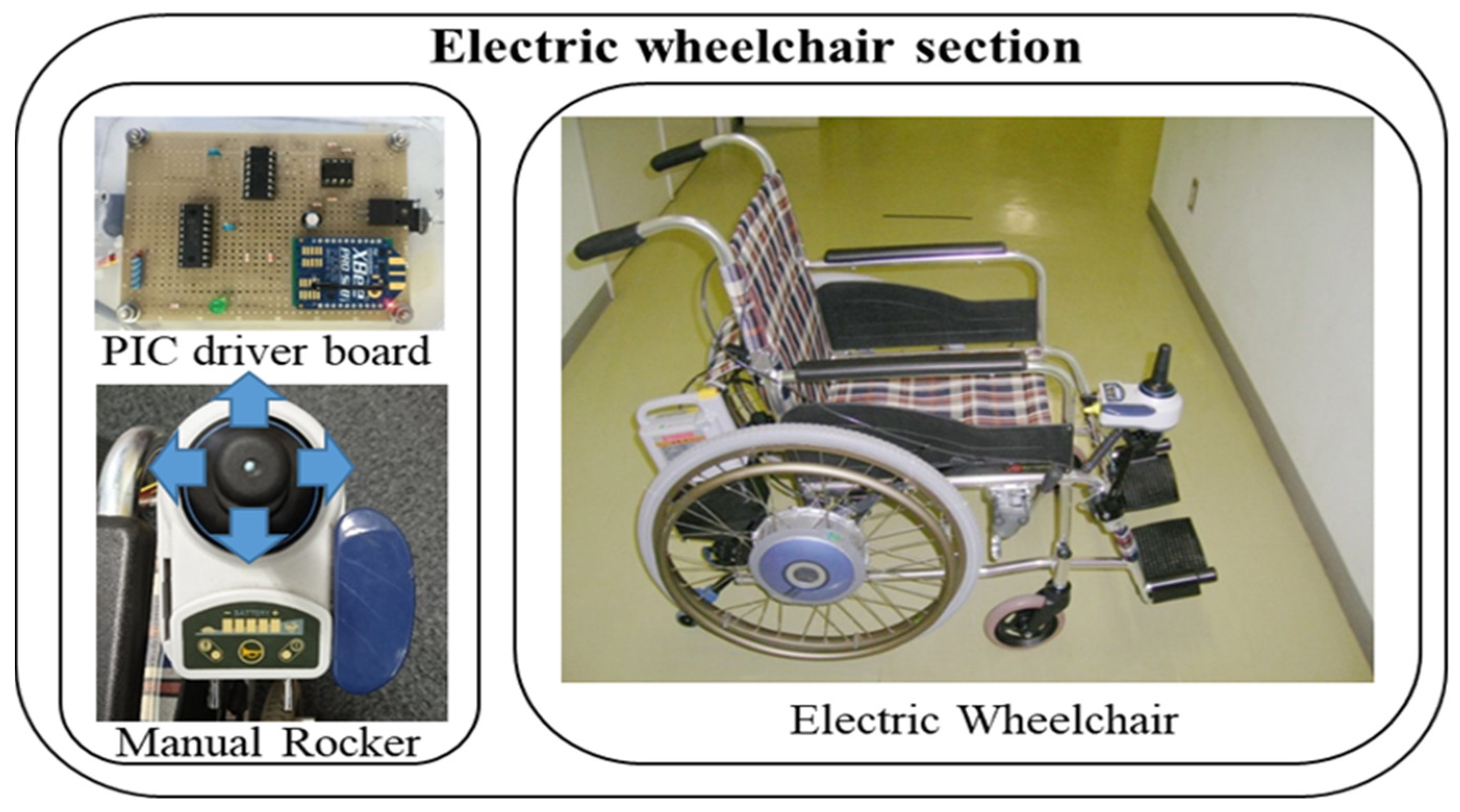

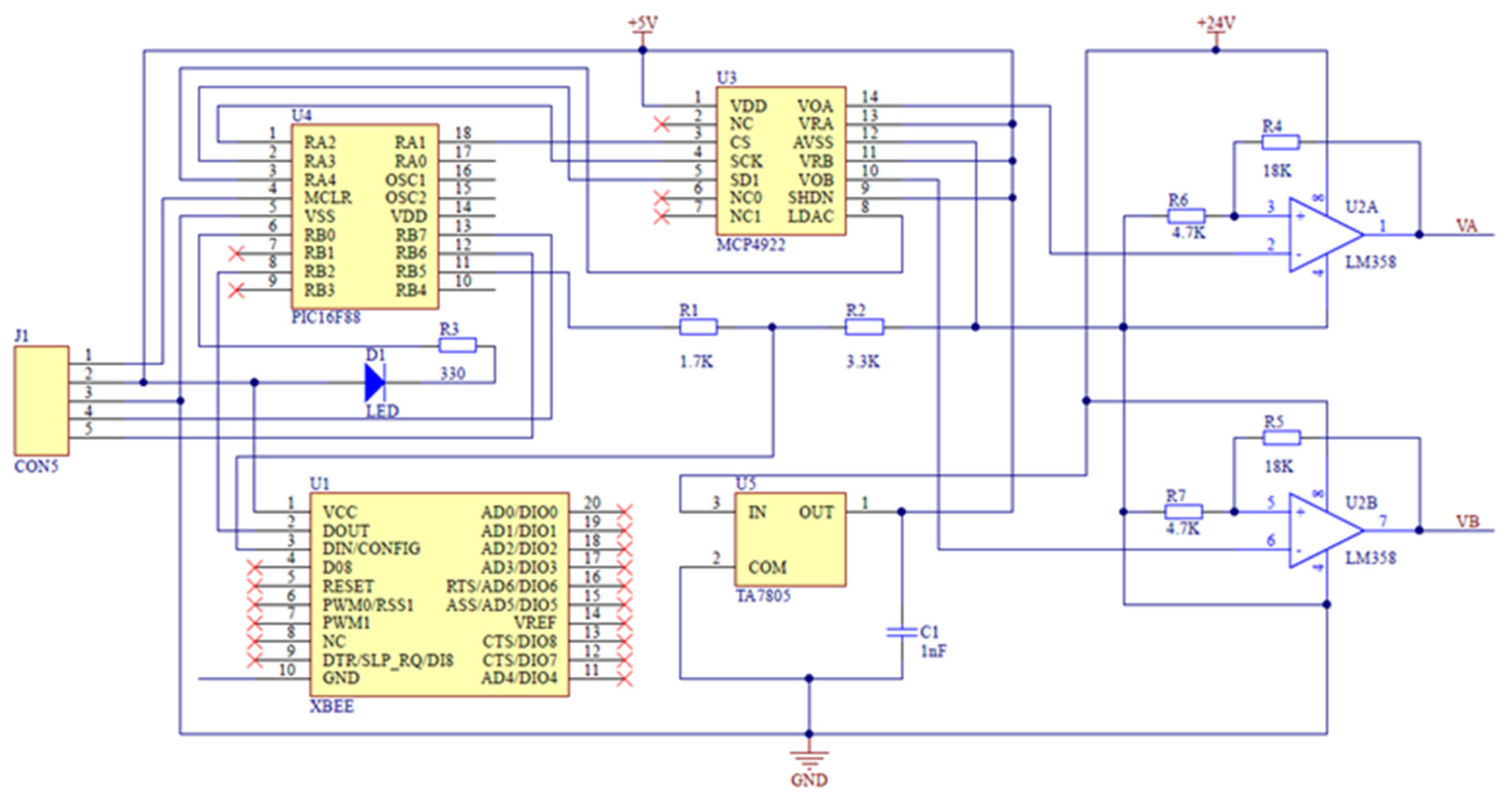

2.2. Hardware Systems for Wheelchairs

3. Eye Movement Recognition Methods

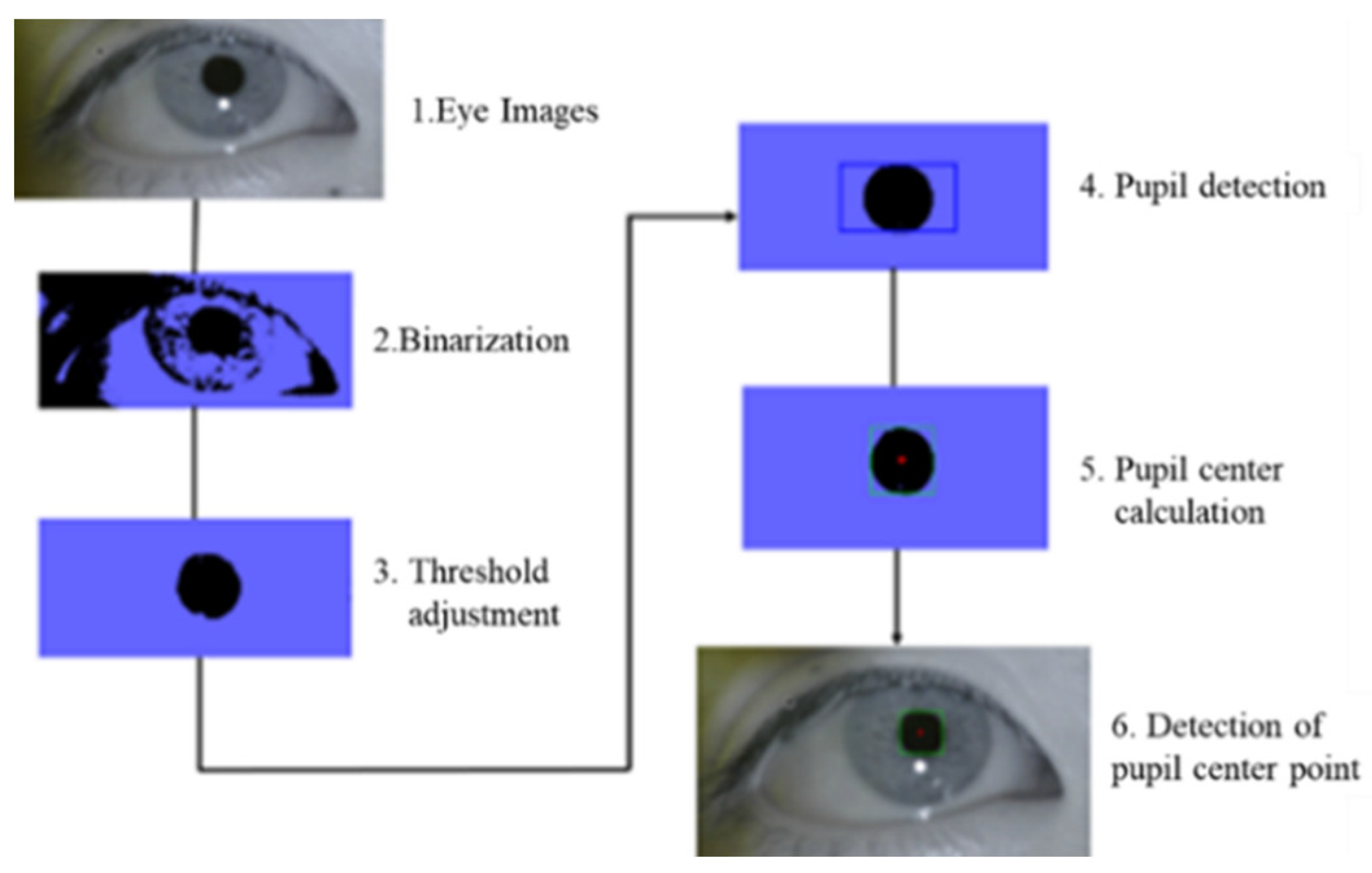

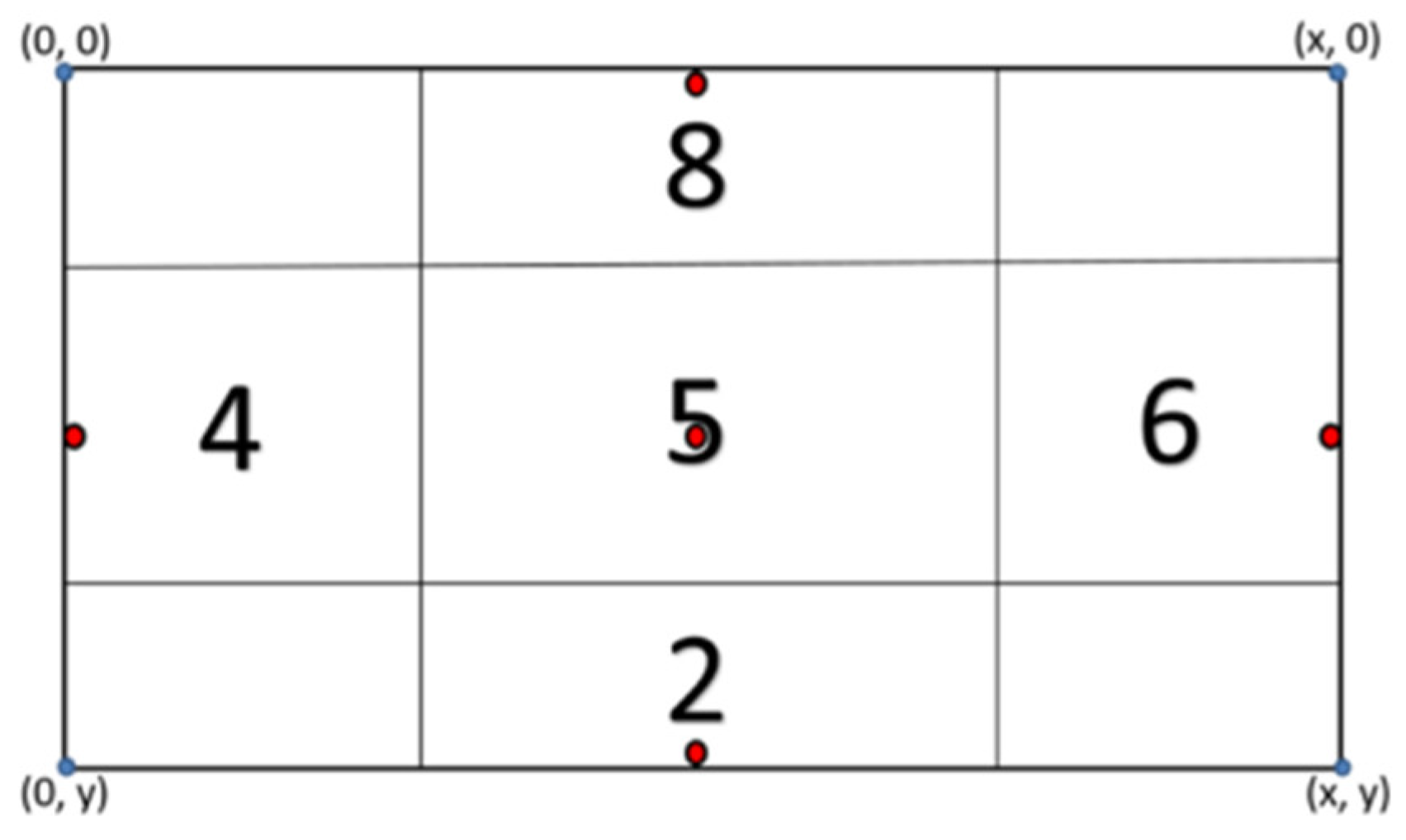

3.1. Eye Gaze Detection Method

3.2. Eye Blink Detection Method

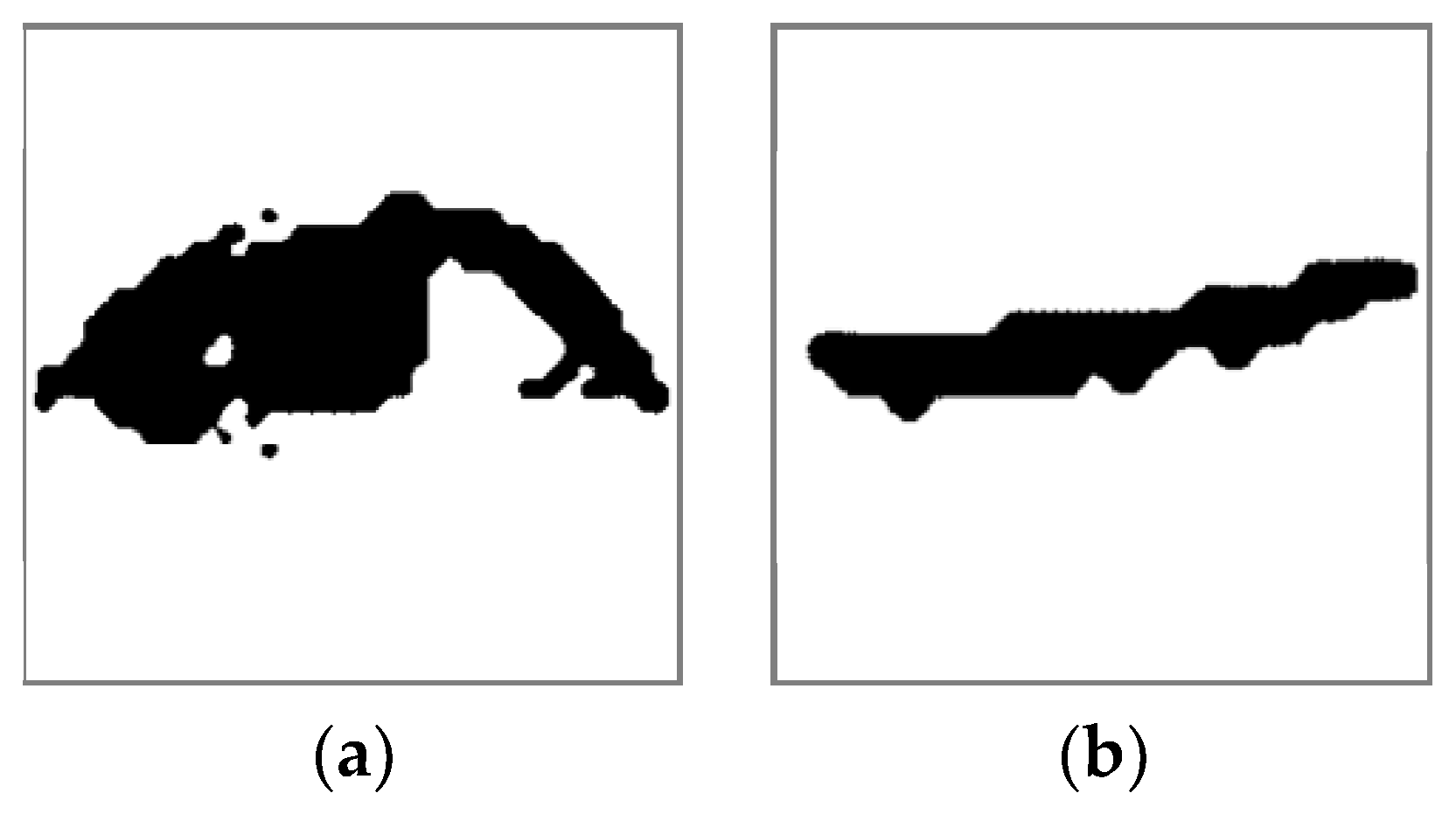

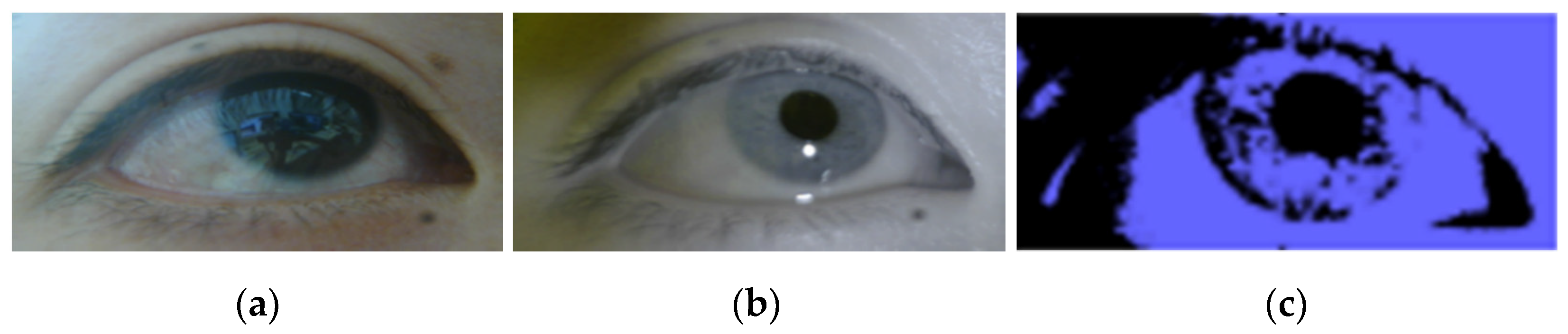

3.2.1. Pixel Ratio

3.2.2. Support Vector Machine

A. Margin Maximization

B. Mapping of Feature Space
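Headings A and B name the two standard ingredients of a support vector machine. For reference, their usual textbook form is given below. This is a sketch of the general technique, not the authors' stated formulation: the soft-margin objective, the penalty C and the radial basis function (RBF) kernel with width γ are assumptions, and the labels y_i ∈ {−1, +1} stand for the two classes (for example, blink versus no blink). Minimizing the norm of w is what maximizes the margin, and the feature-space mapping φ enters only through inner products, which the kernel K replaces.

```latex
% A. Margin maximization (soft-margin primal; C > 0 trades margin width against slack)
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;\; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{N} \xi_i
\quad \text{s.t.} \quad y_i\bigl(\mathbf{w}^{\top}\phi(\mathbf{x}_i) + b\bigr) \ge 1 - \xi_i,\;\; \xi_i \ge 0,\;\; i = 1,\dots,N

% Width of the margin between the two supporting hyperplanes
\text{margin} = \frac{2}{\lVert \mathbf{w} \rVert}

% B. Mapping of feature space through a kernel (phi is never computed explicitly);
% alpha_i are the dual variables, 0 <= alpha_i <= C
K(\mathbf{x}_i, \mathbf{x}_j) = \phi(\mathbf{x}_i)^{\top}\phi(\mathbf{x}_j),
\qquad
f(\mathbf{x}) = \operatorname{sign}\Bigl(\sum_{i=1}^{N} \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b\Bigr)

% Assumed example kernel: radial basis function with width parameter gamma > 0
K(\mathbf{x}, \mathbf{x}') = \exp\bigl(-\gamma \lVert \mathbf{x} - \mathbf{x}' \rVert^{2}\bigr)
```

Only the training samples with nonzero α_i (the support vectors) contribute to the decision function f.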

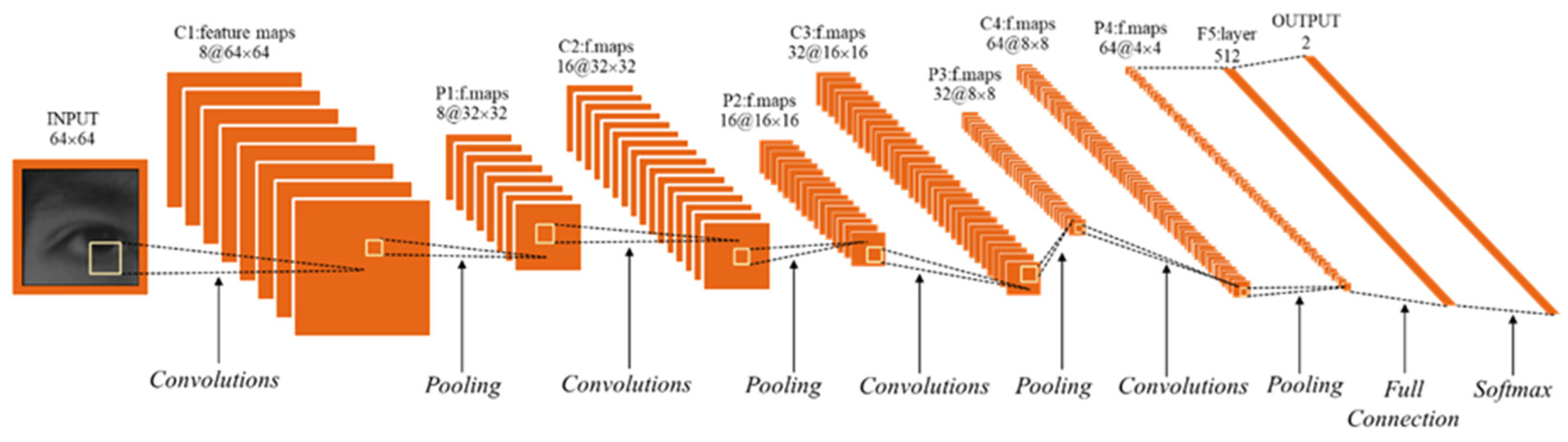

3.2.3. Convolutional Neural Network

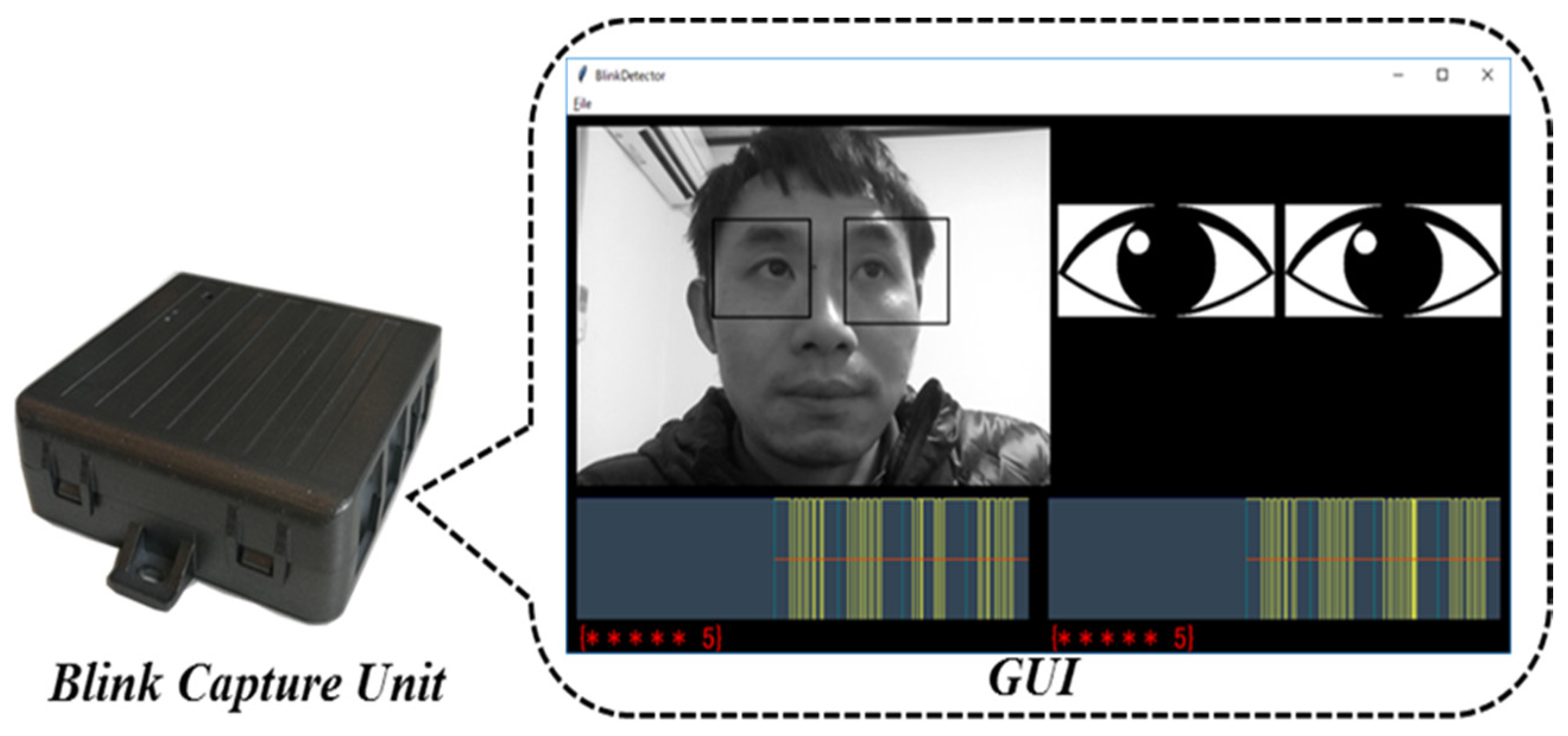

3.2.4. The Eye Blink Detection Device and Its GUI

4. Results and Discussion

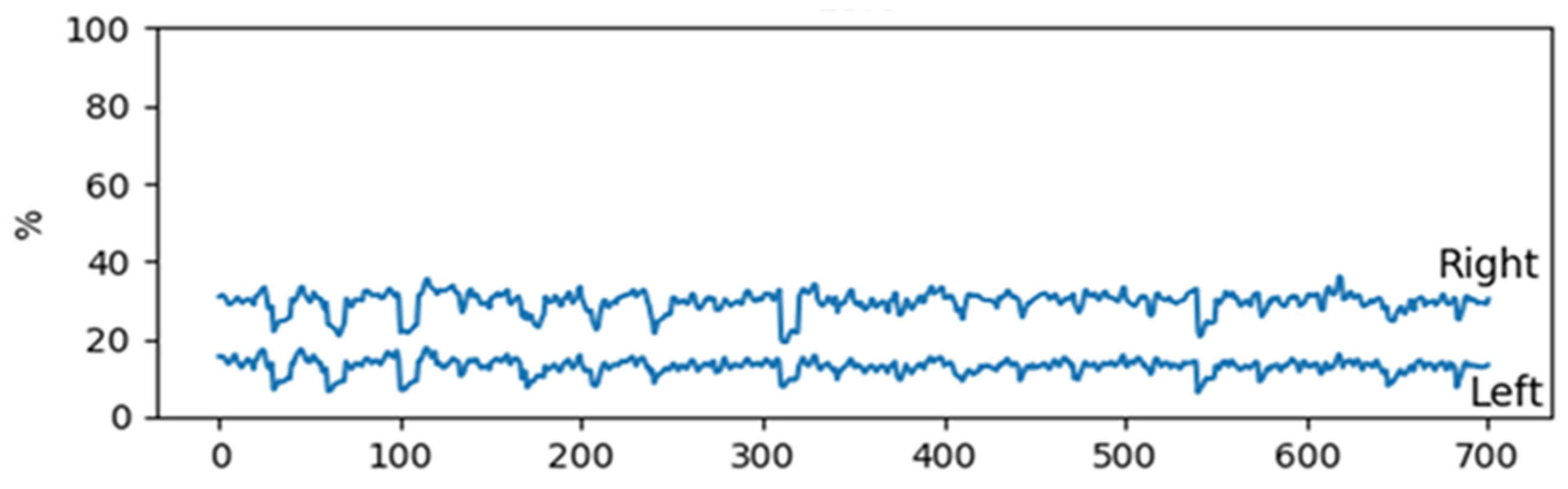

4.1. Results of Eye Gaze Direction Recognition Experiments

4.2. Eye Blink Recognition Experimental Results

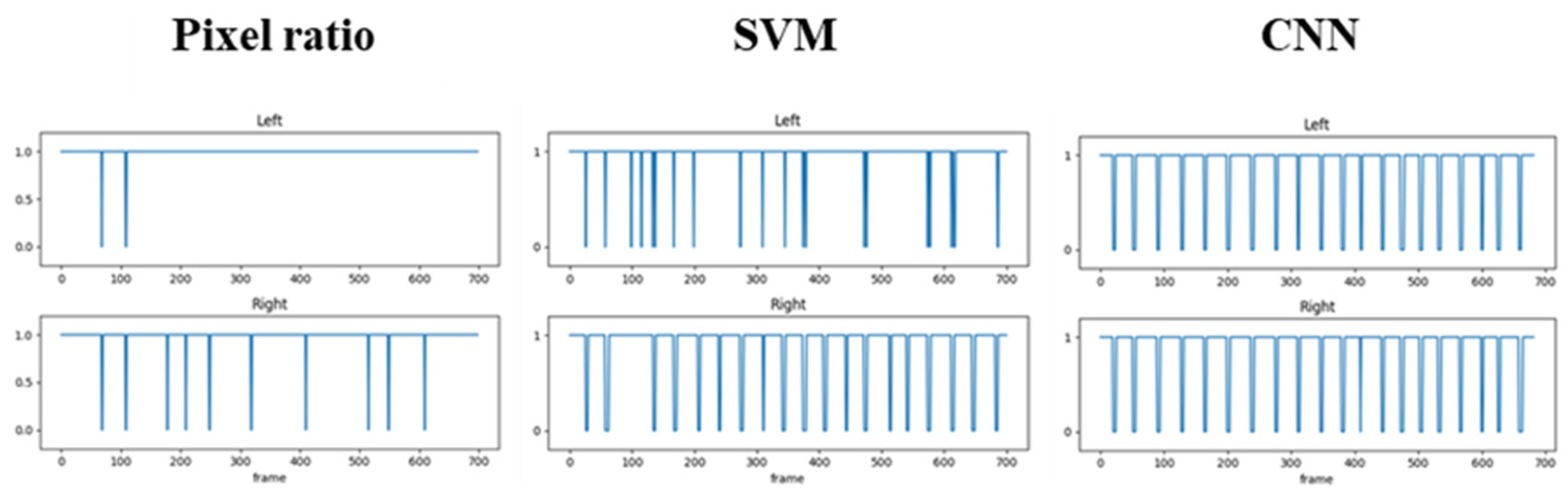

- A.

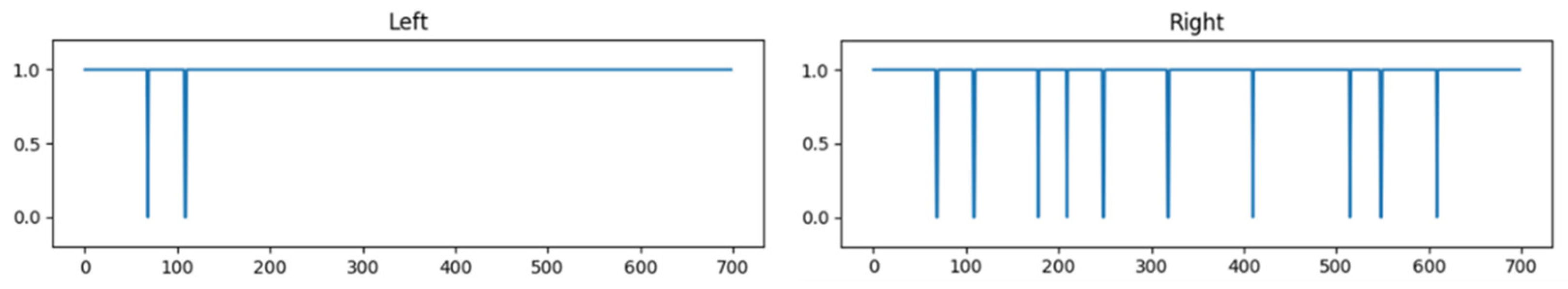

- Pixel Ratio

B. Support Vector Machine

- C.

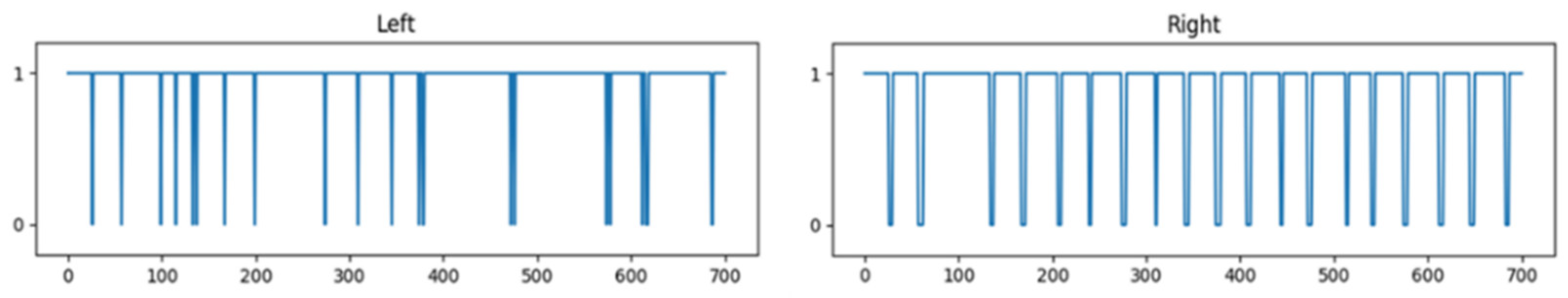

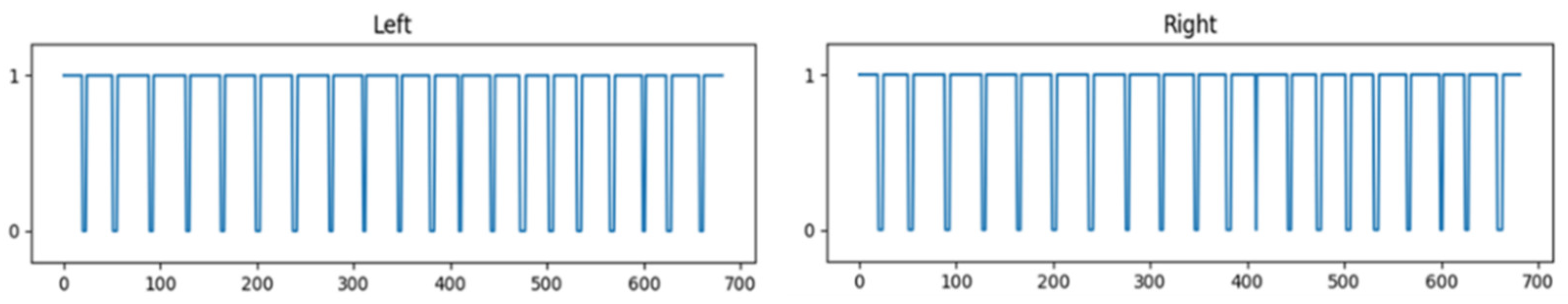

- Convolutional Neural Network

D. Comparative Discussion of Results

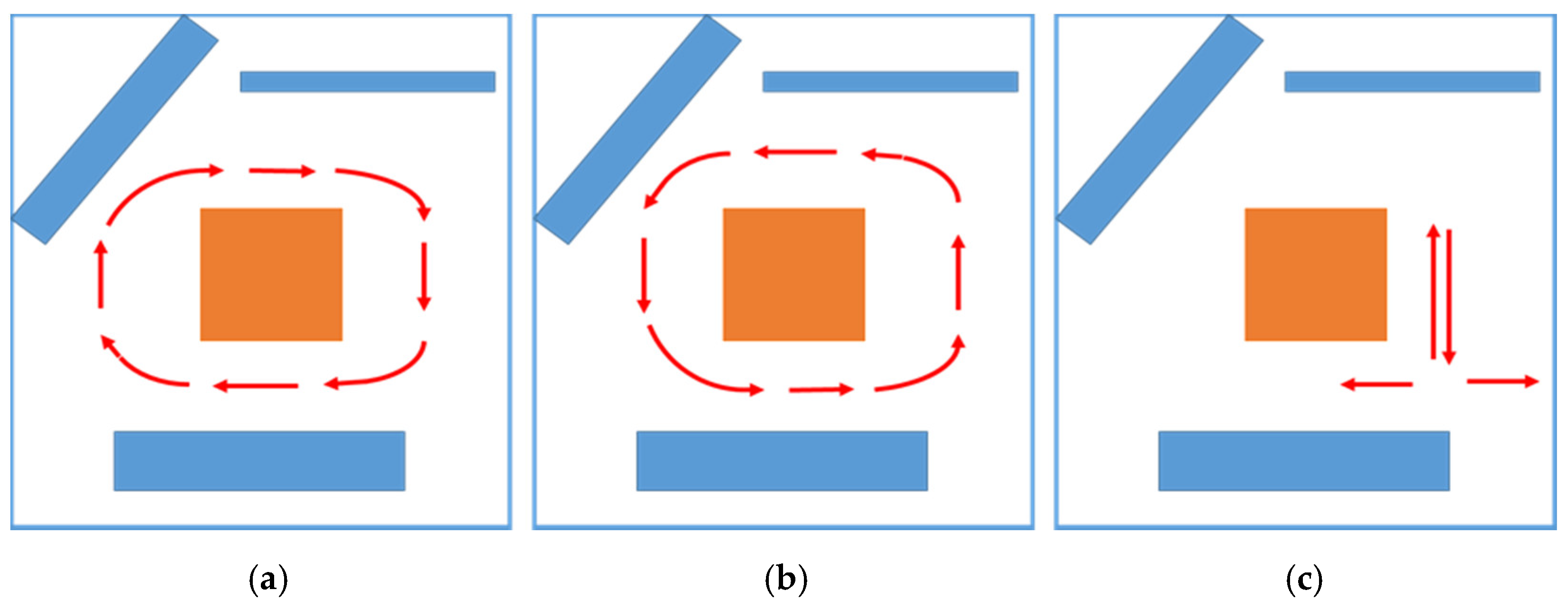

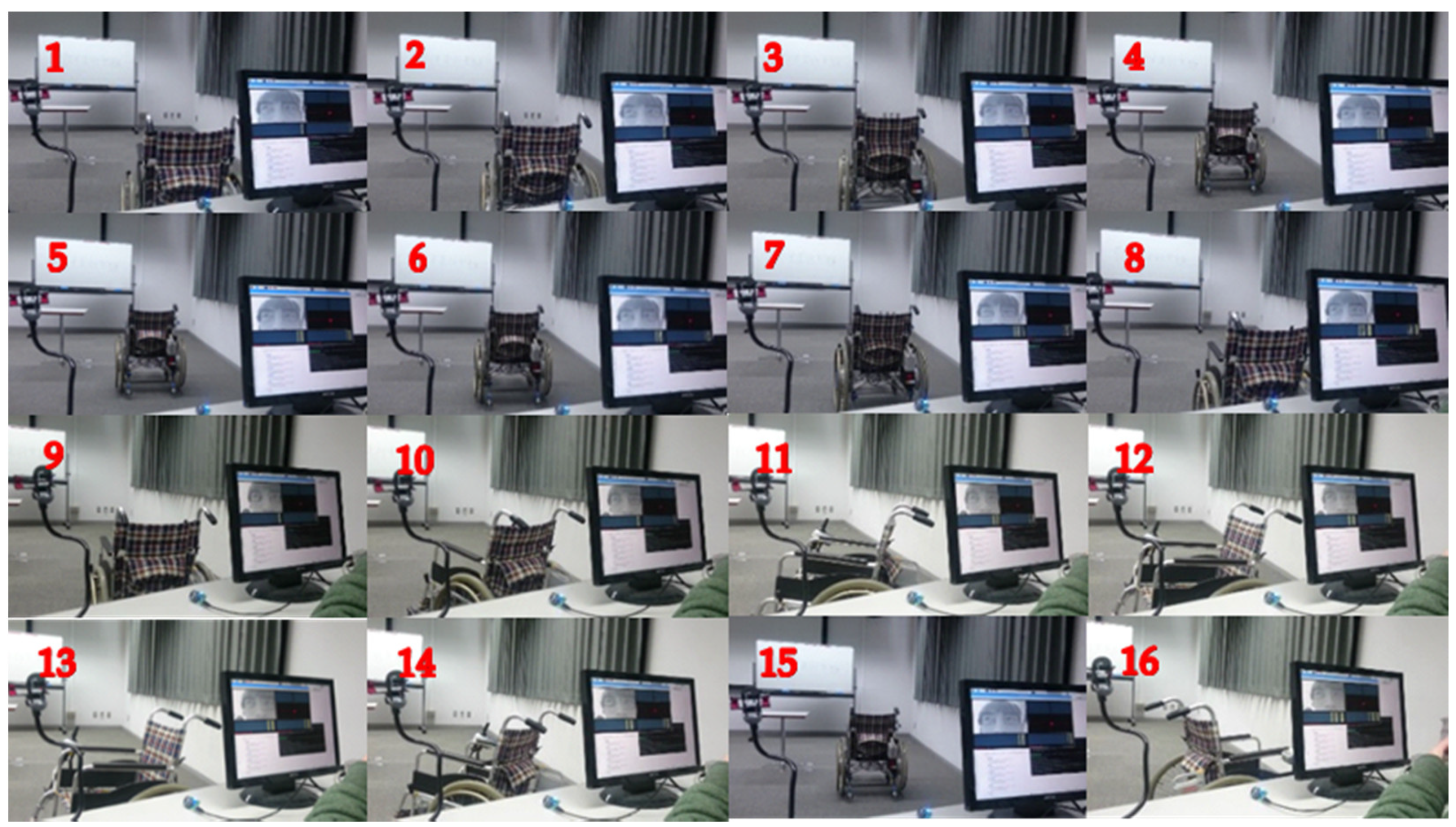

4.3. Drive Experiment Results and Discussion

A. Drive Experiment Results

B. Discussion of Results

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer Name | Layer Type | Related Parameters |
|---|---|---|
| Conv1_1 | Convolution | 3 × 3, 8, ReLU, stride 1 |
| Conv1_2 | Convolution | 3 × 3, 8, ReLU, stride 1 |
| Pool1 | Pooling | 2 × 2, 8, max pool, stride 2 |
| Conv2_1 | Convolution | 3 × 3, 16, ReLU, stride 1 |
| Conv2_2 | Convolution | 3 × 3, 16, ReLU, stride 1 |
| Pool2 | Pooling | 2 × 2, 16, max pool, stride 2 |
| Conv3_1 | Convolution | 3 × 3, 32, ReLU, stride 1 |
| Conv3_2 | Convolution | 3 × 3, 32, ReLU, stride 1 |
| Pool3 | Pooling | 2 × 2, 32, max pool, stride 2 |
| Conv4_1 | Convolution | 3 × 3, 64, ReLU, stride 1 |
| Conv4_2 | Convolution | 3 × 3, 64, ReLU, stride 1 |
| Pool4 | Pooling | 2 × 2, 64, max pool, stride 2 |
| Fuc1 | Fully connected | 512, sigmoid |
| Drop | Dropout | dropout ratio 0.5 |
| Fuc2 | Fully connected | 2, softmax |
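The layer table can be sanity-checked with a short shape and parameter calculator. This is a sketch, not the authors' code: the input size (a hypothetical 64 × 64 grayscale eye image), "same" padding for the 3 × 3 convolutions, and the helper names `Conv`, `Pool`, `Dense` and `summarize` are all assumptions, since the table does not state them.

```python
from dataclasses import dataclass


@dataclass
class Conv:
    """3 x 3 convolution, stride 1, ReLU (activation does not affect shapes)."""
    name: str
    out_channels: int
    kernel: int = 3


@dataclass
class Pool:
    """2 x 2 max pooling with stride 2 (no trainable parameters)."""
    name: str
    size: int = 2
    stride: int = 2


@dataclass
class Dense:
    """Fully connected layer (activation does not affect shapes)."""
    name: str
    units: int


# Layer stack transcribed from the table. Dropout has no weights and does not
# change the shape, so it is left out of this calculation.
LAYERS = [
    Conv("Conv1_1", 8), Conv("Conv1_2", 8), Pool("Pool1"),
    Conv("Conv2_1", 16), Conv("Conv2_2", 16), Pool("Pool2"),
    Conv("Conv3_1", 32), Conv("Conv3_2", 32), Pool("Pool3"),
    Conv("Conv4_1", 64), Conv("Conv4_2", 64), Pool("Pool4"),
    Dense("Fuc1", 512), Dense("Fuc2", 2),
]


def summarize(height, width, channels):
    """Return (name, output shape, trainable parameters) for every layer.

    Assumes 'same' padding for the convolutions (spatial size unchanged at
    stride 1) and unpadded pooling (spatial size halved, rounded down).
    """
    rows = []
    flat = None  # length of the flattened vector feeding the next dense layer
    for layer in LAYERS:
        if isinstance(layer, Conv):
            # k*k*C_in*C_out weights plus one bias per output channel
            params = layer.kernel ** 2 * channels * layer.out_channels + layer.out_channels
            channels = layer.out_channels
            rows.append((layer.name, (height, width, channels), params))
        elif isinstance(layer, Pool):
            height = (height - layer.size) // layer.stride + 1
            width = (width - layer.size) // layer.stride + 1
            rows.append((layer.name, (height, width, channels), 0))
        else:
            if flat is None:  # the first dense layer flattens the last feature map
                flat = height * width * channels
            params = flat * layer.units + layer.units
            flat = layer.units
            rows.append((layer.name, (layer.units,), params))
    return rows


if __name__ == "__main__":
    # Hypothetical input: a 64 x 64 single-channel (grayscale) eye image.
    table = summarize(64, 64, 1)
    for name, shape, params in table:
        print(f"{name:8s} {str(shape):14s} {params:>8d}")
    print("total trainable parameters:", sum(p for _, _, p in table))
```

With the assumed 64 × 64 × 1 input the feature map shrinks to 4 × 4 × 64 after Pool4, and the total comes to 599,290 trainable parameters, of which Fuc1 alone holds 524,800 (about 88%). That concentration in the first fully connected layer fits the table's placement of the 0.5 dropout directly after Fuc1; a different input size would change these figures.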

| Method | Detected | Undetected | False Positives |
|---|---|---|---|
| Pixel ratio | 28 | 72 | 0 |
| SVM | 74 | 26 | 11 |
| CNN | 99 | 1 | 0 |
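The counts in the table can be turned into rates with simple arithmetic. The sketch below assumes each method was evaluated on the same set of actual blinks (detected + undetected = 100 in every row) and treats false positives as non-blink events reported as blinks; the precision values it prints are derived here and are not reported in the table.

```python
# Counts transcribed from the table: (detected, undetected, false positives).
COUNTS = {
    "Pixel ratio": (28, 72, 0),
    "SVM": (74, 26, 11),
    "CNN": (99, 1, 0),
}


def blink_metrics(detected, undetected, false_pos):
    """Return (detection rate, precision) from raw counts.

    Detection rate = detected / actual blinks (i.e. recall).
    Precision = detected / all events the method reported as blinks.
    """
    actual_blinks = detected + undetected
    recall = detected / actual_blinks
    reported = detected + false_pos
    precision = detected / reported if reported else 0.0
    return recall, precision


metrics = {name: blink_metrics(*counts) for name, counts in COUNTS.items()}

for name, (recall, precision) in metrics.items():
    print(f"{name:12s} detection rate {recall:.2f}  precision {precision:.3f}")
```

Under these assumptions the CNN reaches a 0.99 detection rate with no false positives, while the SVM's 11 false positives lower its precision to about 0.87 (74/85), even though its detection rate of 0.74 is far above the pixel-ratio method's 0.28.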

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, W.; Cao, J.; Ishikawa, K.; Ju, D. A Human-Computer Control System Based on Intelligent Recognition of Eye Movements and Its Application in Wheelchair Driving. Multimodal Technol. Interact. 2021, 5, 50. https://doi.org/10.3390/mti5090050
