An Agent-Based Model to Reproduce the Boolean Logic Behaviour of Neuronal Self-Organised Communities through Pulse Delay Modulation and Generation of Logic Gates

Abstract

1. Introduction

2. Modelling Aspects

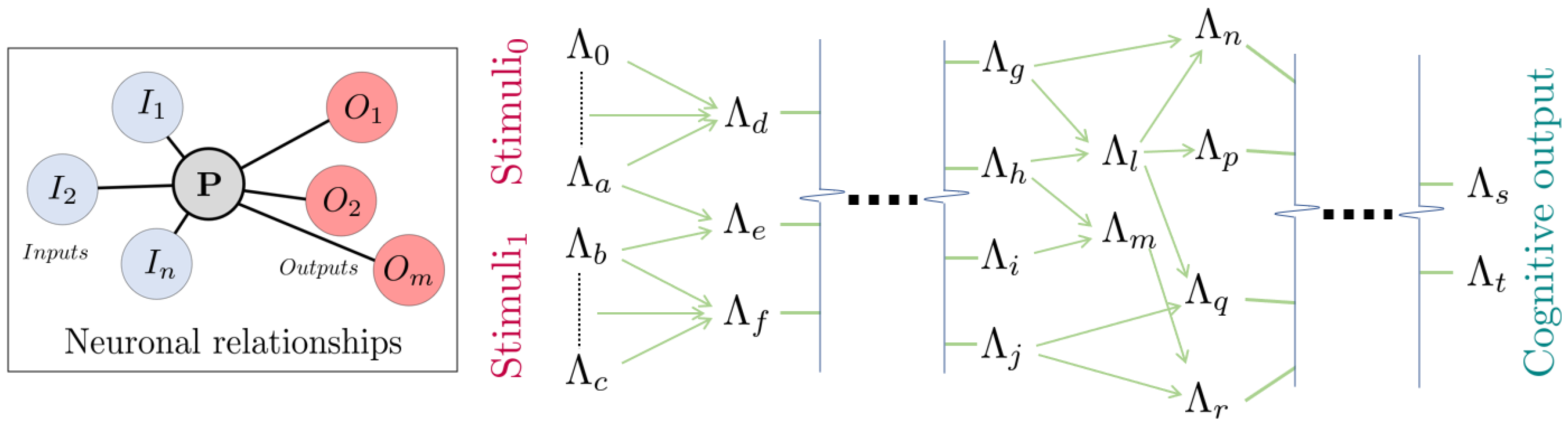

2.1. Proposed Neuronal Model and Communitarian Interactions

2.1.1. The McCulloch–Pitts Neuron Model
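
As a point of reference for this subsection, the following is a minimal sketch of the classical McCulloch–Pitts threshold unit and of how fixed weights and thresholds give AND, OR and NOT behaviour. It illustrates only the textbook 1943 formulation, not the modified, delay-based model developed later in this paper. The function names (`mp_neuron`, `and_gate`, `or_gate`, `not_gate`) and the particular weight and threshold values are assumptions chosen for illustration.

```python
from itertools import product


def mp_neuron(inputs, weights, threshold):
    """Classical threshold unit: output 1 if the weighted sum of the
    binary inputs reaches the threshold, otherwise 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Illustrative (assumed) parameter choices; other values also work.
def and_gate(a, b):
    # Both excitatory inputs must be active to reach threshold 2.
    return mp_neuron((a, b), (1, 1), 2)


def or_gate(a, b):
    # A single active excitatory input already reaches threshold 1.
    return mp_neuron((a, b), (1, 1), 1)


def not_gate(a):
    # One inhibitory input (weight -1) with threshold 0:
    # the unit fires only when the input is silent.
    return mp_neuron((a,), (-1,), 0)


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
    for a in (0, 1):
        print(a, "NOT:", not_gate(a))
```

In this sketch, excitation and inhibition are represented only by the sign of a weight, so the unit has no notion of timing; any pulse-delay behaviour would need additional state that is not modelled here.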

2.1.2. Inhibition and Excitation

2.1.3. The Plastic Remodelling Process

2.1.4. Migration

2.2. Metastability

2.3. Backpropagation

2.4. Neuroplasticity

2.5. Biological and Artificial Neural Networks

3. Methodology

3.1. Creation of Logic Gates with Neurons: Modification of the McCulloch–Pitts Boolean Model

3.1.1. AND Gate

3.1.2. OR Gate

3.1.3. NOT Gate

4. Results

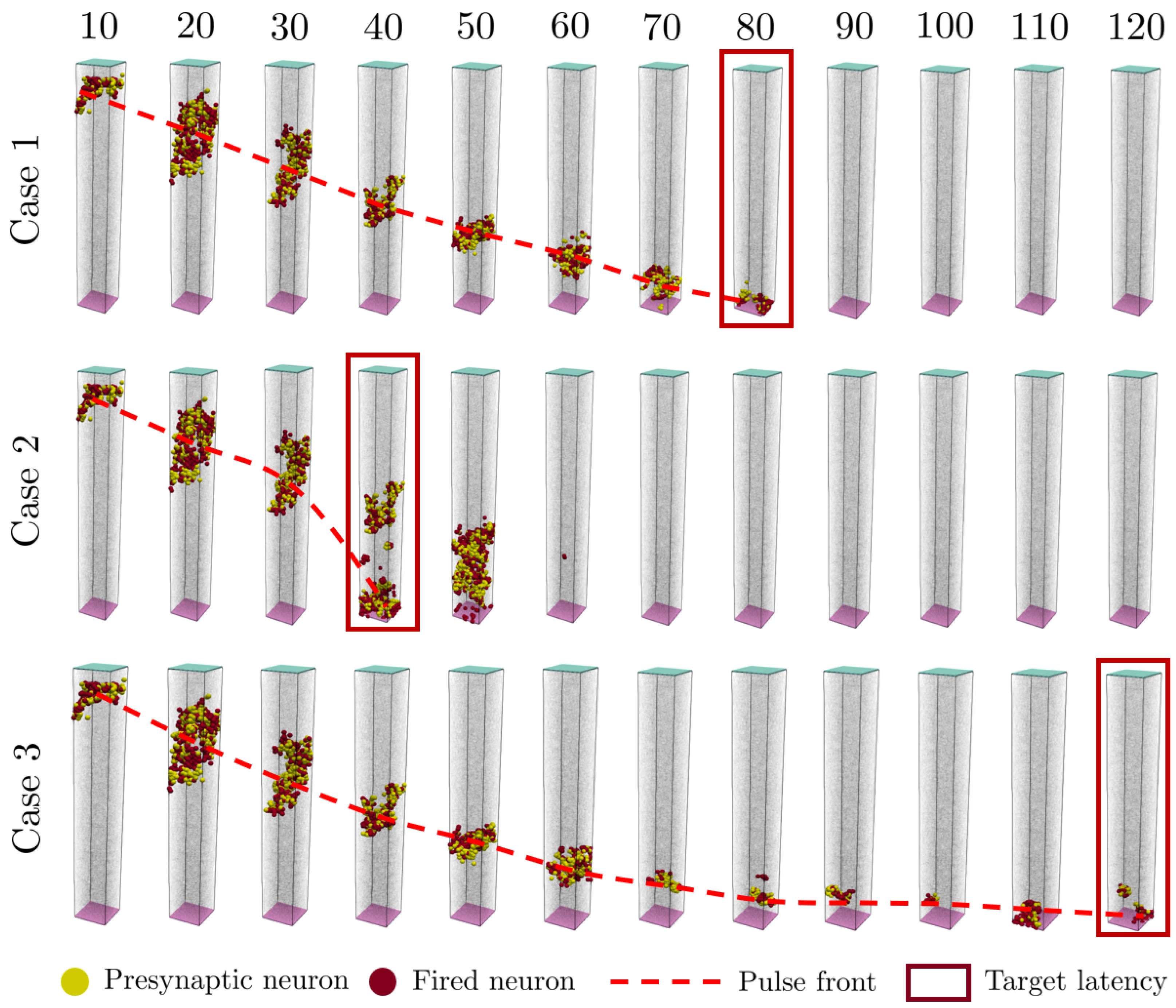

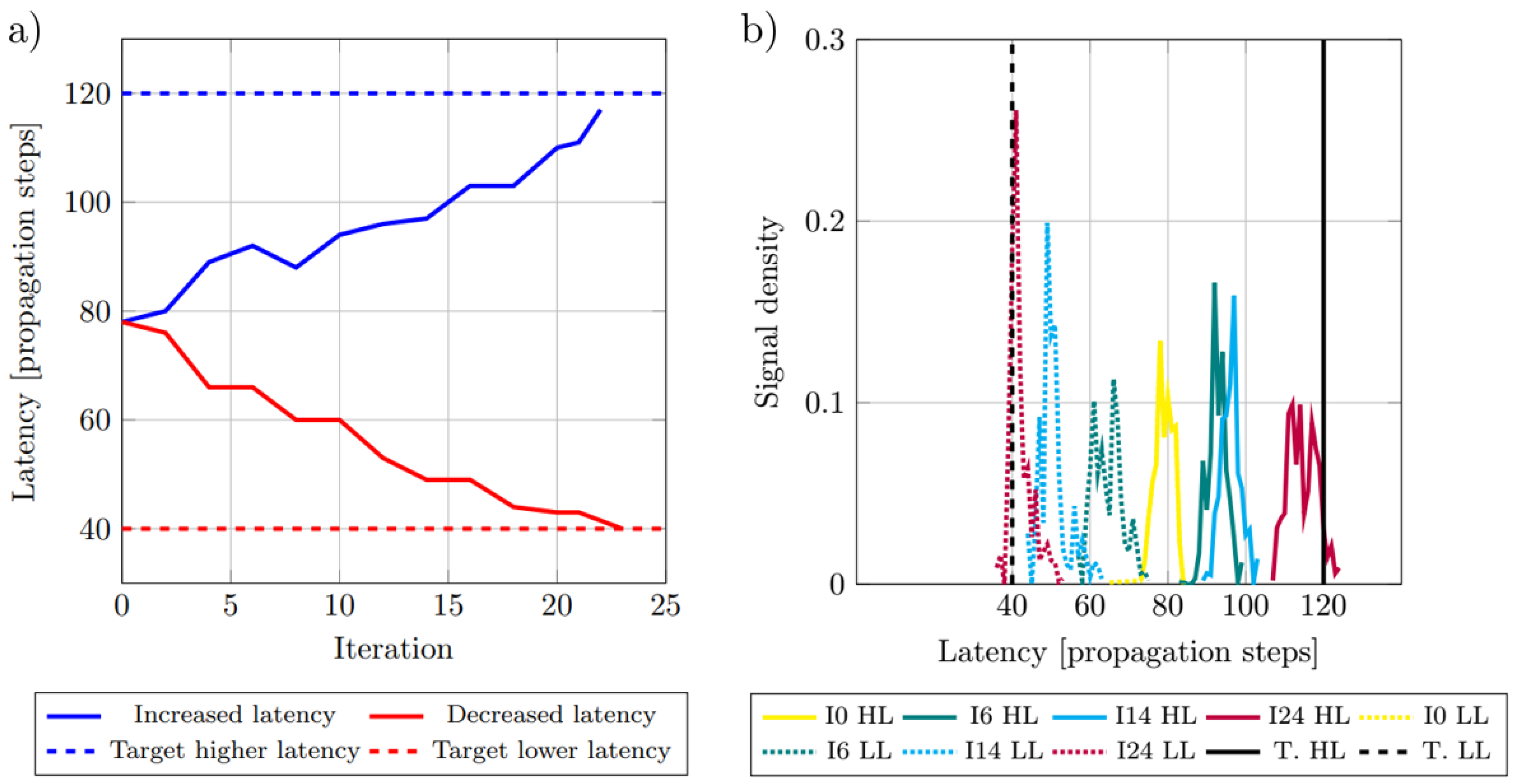

Decrease and Increase in Pulse Latency

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Irastorza-Valera, L.; Benítez, J.M.; Montáns, F.J.; Saucedo-Mora, L. An Agent-Based Model to Reproduce the Boolean Logic Behaviour of Neuronal Self-Organised Communities through Pulse Delay Modulation and Generation of Logic Gates. Biomimetics 2024, 9, 101. https://doi.org/10.3390/biomimetics9020101
