Control Synergies for Rapid Stabilization and Enlarged Region of Attraction for a Model of Hopping

Abstract

1. Introduction

2. Biological Relevance and Novelty

3. Model

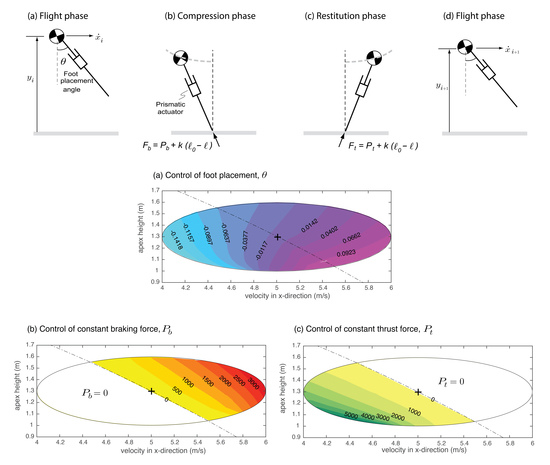

3.1. Running Model

3.2. Equations of Motion

4. Methods

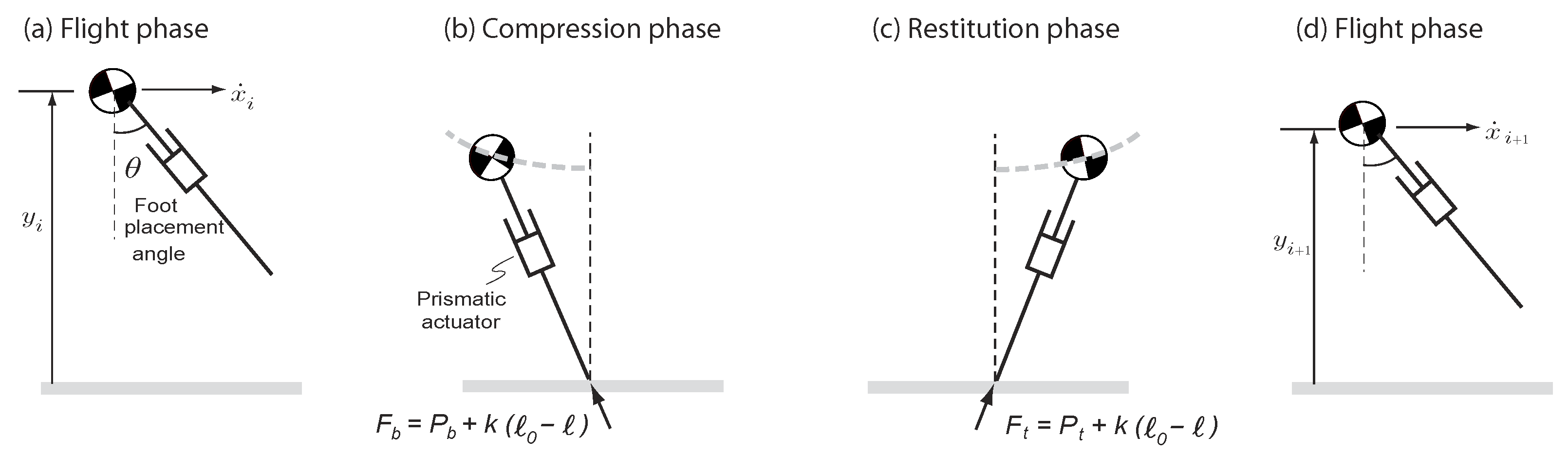

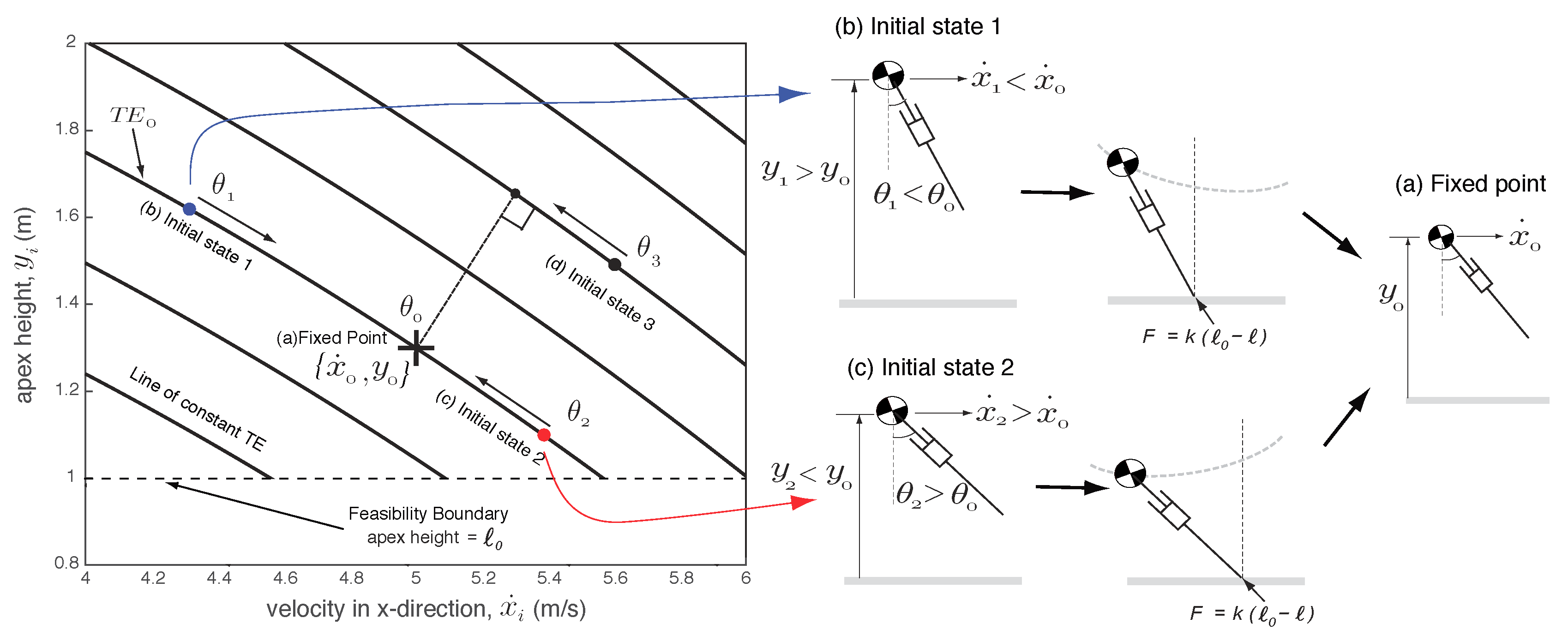

4.1. Step-to-Step Analysis Using Poincaré Map

4.2. Control Synergies for Enlarging the Region of Attraction

4.2.1. Key Ideas Behind Control Synergies

4.2.2. Exponential Convergence Using Orbital Control Lyapunov Function

4.2.3. Region of Attraction

| Algorithm 1: ROA() |
|---|
| Input: fixed point |
| Output: Initial conditions ’s |
| 1: FIND() such that intersects |
| 2: foreach do // is a small positive number |
| 3: COMPUTE(’s) on the level set . |
| 4: FIND( for each by solving the optimization problem described by Equation (10). |
| 5: if then |
| 6: continue |
| 7: else |
| 8: break |
| 9: end |
| 10: end |
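Algorithm 1 has a simple structure: grow a Lyapunov level set in small increments, check at sampled points on that level set whether some admissible control achieves exponential decrease, and stop at the first point where it does not. The minimal Python sketch below illustrates only that loop structure. Everything concrete in it is an assumption for illustration: `step_map` is a hypothetical two-state stand-in for the hopper's step-to-step (Poincaré) map, the quadratic `lyap`, the decay rate `alpha`, the control bound `u_max` and the increment `dc` are made up, and the decrease condition is assumed to take the form V(x_next) <= (1 - alpha) V(x). Because the single control enters one coordinate additively and V is quadratic, the per-point optimization (Equation (10) in the source) is replaced here by a closed-form clipped minimizer. The sketch uses one control rather than the paper's control synergies.

```python
import math

# HYPOTHETICAL step-to-step (Poincare) map: x is the deviation from the fixed
# point at one apex and u is a bounded control. Not the paper's hopper model.
def step_map(x, u):
    x1, x2 = x
    return (0.8 * x1 + 0.2 * x2, 1.2 * x2 + 0.3 * x1 ** 2 + u)

def lyap(x):
    """Assumed quadratic Lyapunov candidate V(x) = x1^2 + x2^2."""
    return x[0] ** 2 + x[1] ** 2

def best_next_value(x, u_max):
    """Minimize V(step_map(x, u)) over |u| <= u_max.

    u only shifts the second coordinate, so the minimizer is the negated
    drift -(1.2*x2 + 0.3*x1^2) clipped to [-u_max, u_max].
    """
    drift = 1.2 * x[1] + 0.3 * x[0] ** 2
    u = max(-u_max, min(u_max, -drift))
    return lyap(step_map(x, u))

def estimate_roa(alpha=0.2, u_max=0.5, dc=0.05, n_samples=64, c_max=100.0):
    """Grow the level set V(x) = c by dc until some sampled point cannot
    satisfy V(x_next) <= (1 - alpha) * V(x); return the last feasible c."""
    c_ok, c = 0.0, dc
    while c <= c_max:
        r = math.sqrt(c)  # V(x) = c is a circle of radius sqrt(c)
        for k in range(n_samples):
            th = 2.0 * math.pi * k / n_samples
            x = (r * math.cos(th), r * math.sin(th))
            if best_next_value(x, u_max) > (1.0 - alpha) * c:
                return c_ok  # exponential-decrease condition violated: stop
        c_ok = c
        c += dc
    return c_ok

print(estimate_roa())            # level-set estimate with |u| <= 0.5
print(estimate_roa(u_max=1.0))   # larger control bound
```

Since enlarging `u_max` can only lower the minimized V at every sampled point, a larger control bound can never shrink this estimate. With `u_max=0.0` the uncontrolled map already fails the decrease test on the smallest level set, and the sketch returns `0.0`.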

4.2.4. Using Optimization for Exponential Stabilization

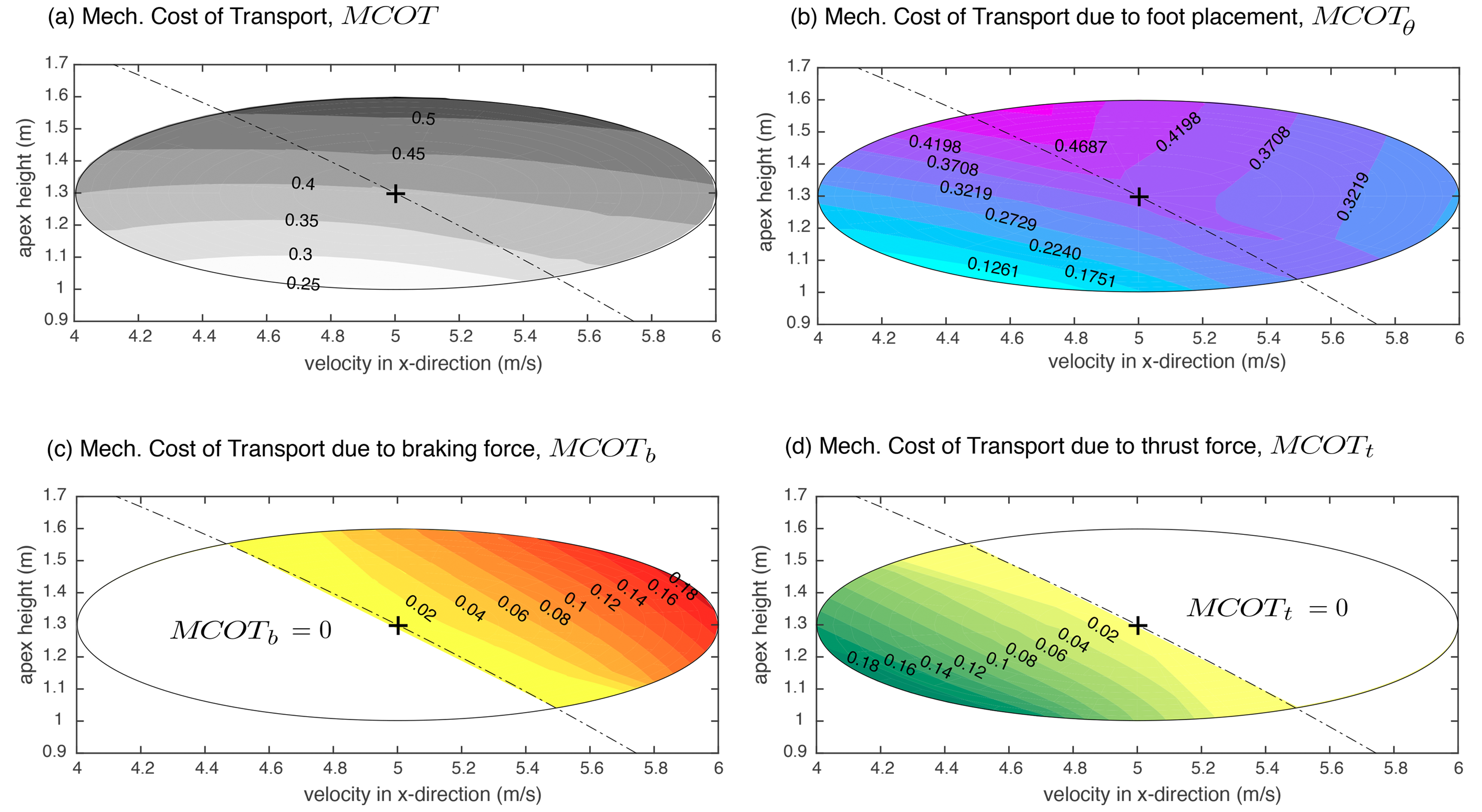

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zamani, A.; Bhounsule, P.A. Control Synergies for Rapid Stabilization and Enlarged Region of Attraction for a Model of Hopping. Biomimetics 2018, 3, 25. https://doi.org/10.3390/biomimetics3030025