End-to-End Depth-Guided Relighting Using Lightweight Deep Learning-Based Method

Abstract

1. Introduction

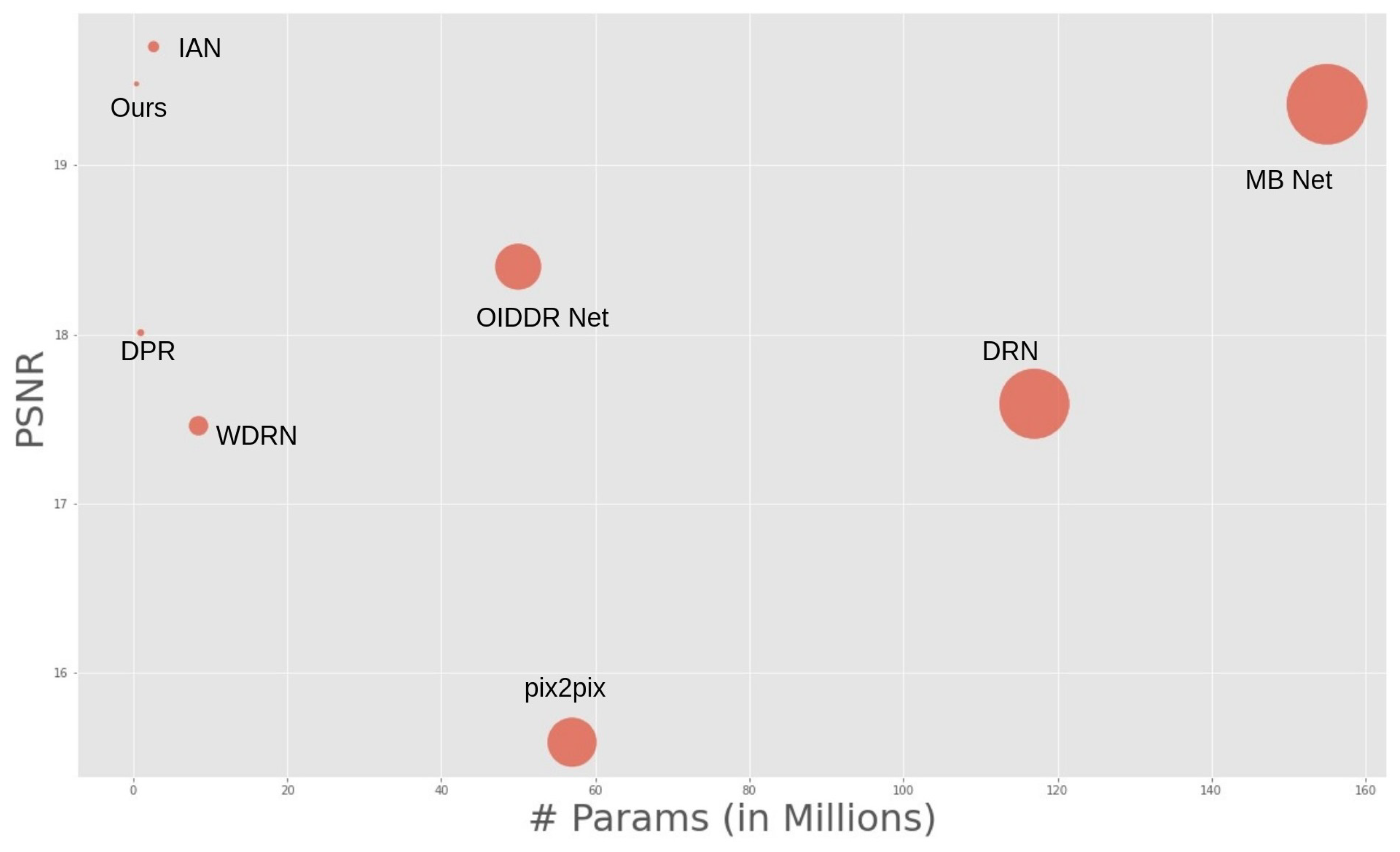

- We propose a new U-Net-based architecture with fewer parameters. To our knowledge, it is the smallest model among existing image-relighting methods that achieves competitive performance.

- We propose Res2Net-Squeezed blocks, a modified version of the Res2Net block [12] that implicitly enlarges the receptive field and therefore captures and retains more information about the image.

- We introduce a depth-guided stream whose features are merged with the RGB features of the same spatial size and then progressively upsampled to produce the target (relit) image.

- We design a bi-modal depth-guided model that extracts features from the depth and RGB images in two separate streams and implicitly enlarges the receptive field through the Res2Net-Squeezed blocks. Extensive experiments and a comparative analysis show that the proposed method outperforms most of the compared methods and remains competitive with the best-performing one while maintaining high computational efficiency.
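To make the receptive-field argument concrete, the following minimal sketch computes the receptive field of each output subset in the original Res2Net hierarchical split [12]. It illustrates the mechanism only and is not the Res2Net-Squeezed block itself: it assumes stride-1, undilated k×k convolutions, ignores the surrounding 1×1 convolutions (which do not enlarge the receptive field), and the helper name is hypothetical. The scale used in this network is not stated here.

```python
from typing import List


def res2net_receptive_fields(scale: int, kernel: int = 3) -> List[int]:
    """Largest receptive field (side length in pixels) of each output subset y_i
    of a Res2Net-style hierarchical split with `scale` subsets.

    y_1 = x_1 is passed through unchanged, y_2 = K_2(x_2), and
    y_i = K_i(x_i + y_{i-1}) for i > 2, so y_i sits behind (i - 1) stacked
    kxk convolutions. Assumes stride 1 and no dilation.
    """
    return [1 + (i - 1) * (kernel - 1) for i in range(1, scale + 1)]


# Usage: four subsets with 3x3 kernels.
fields = res2net_receptive_fields(4)  # [1, 3, 5, 7]
```

With four subsets, one block mixes features whose receptive fields span 1, 3, 5, and 7 pixels, whereas a single plain 3×3 convolution covers 3.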

2. Related Work

3. Approach and Proposed Network

3.1. Task Definition

3.2. Detail of the Architecture

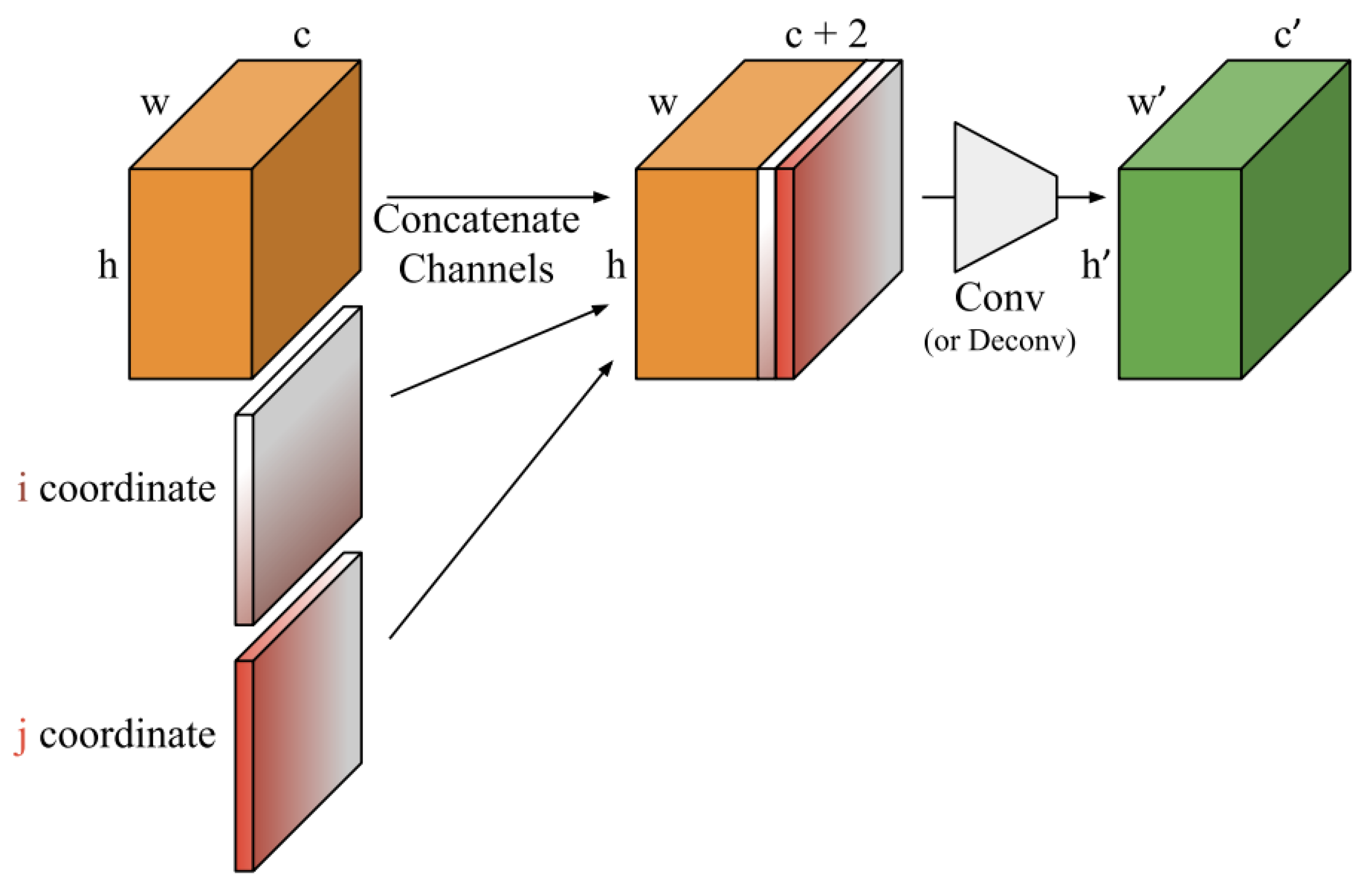
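The merge-and-upsample step of the depth-guided stream can be sketched on plain nested lists. This is a minimal sketch, not the authors' implementation: it assumes the merge is channel concatenation of equally sized RGB and depth feature maps and that each upsampling step is 2× nearest-neighbour; the actual network may use a different fusion operator or learned upsampling, and all names below are hypothetical.

```python
from typing import List

# Feature maps as nested lists: channels x height x width (hypothetical layout).
FeatureMap = List[List[List[float]]]


def concat_channels(rgb: FeatureMap, depth: FeatureMap) -> FeatureMap:
    """Merge RGB and depth features of the same spatial size along the channel axis.

    Assumption: the merge is concatenation; the paper's operator may differ.
    """
    if (len(rgb[0]), len(rgb[0][0])) != (len(depth[0]), len(depth[0][0])):
        raise ValueError("RGB and depth features must share height and width")
    return [[row[:] for row in ch] for ch in rgb + depth]


def upsample2x_nearest(feat: FeatureMap) -> FeatureMap:
    """Double height and width by repeating each value in a 2x2 block.

    Assumption: nearest-neighbour upsampling stands in for whatever
    (possibly learned) upsampling the network actually uses.
    """
    out = []
    for ch in feat:
        rows = []
        for row in ch:
            wide = [v for v in row for _ in range(2)]  # repeat along width
            rows.append(wide)
            rows.append(wide[:])  # repeat along height
        out.append(rows)
    return out


# Usage: one 2x2 RGB channel and one 2x2 depth channel -> two 4x4 channels.
rgb_feat = [[[1.0, 2.0], [3.0, 4.0]]]
depth_feat = [[[5.0, 6.0], [7.0, 8.0]]]
fused = upsample2x_nearest(concat_channels(rgb_feat, depth_feat))
```

Applying `upsample2x_nearest` repeatedly, interleaved with further merges at each resolution, mirrors the progressive upsampling described in the contributions.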

3.3. Coordinate Convolution Layer

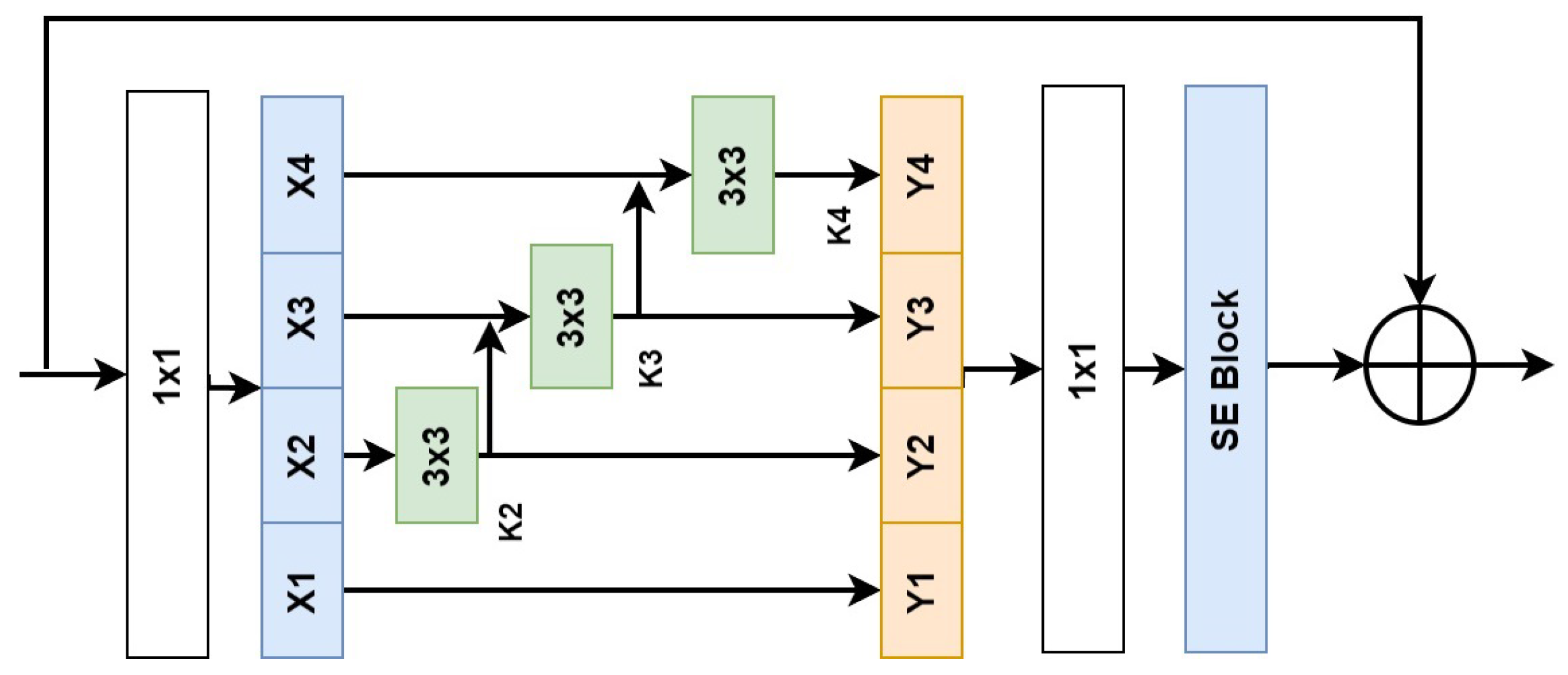
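A CoordConv layer [42] gives a convolution access to absolute position by concatenating coordinate channels to its input before convolving. The sketch below shows only that concatenation step, assuming coordinates normalised to [−1, 1] as in the original CoordConv formulation; the subsequent convolution and the layer's placement in this network are omitted, and the function names are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width


def _linspace(n: int) -> List[float]:
    """n evenly spaced values from -1 to 1 (a single position maps to 0)."""
    if n == 1:
        return [0.0]
    return [-1.0 + 2.0 * k / (n - 1) for k in range(n)]


def add_coord_channels(feat: FeatureMap) -> FeatureMap:
    """Append a row-coordinate and a column-coordinate channel to `feat`."""
    height, width = len(feat[0]), len(feat[0][0])
    ys, xs = _linspace(height), _linspace(width)
    row_coords = [[ys[r]] * width for r in range(height)]  # varies down the rows
    col_coords = [xs[:] for _ in range(height)]           # varies across columns
    return [[row[:] for row in ch] for ch in feat] + [row_coords, col_coords]


# Usage: a single-channel 2x3 map gains two coordinate channels (3 in total);
# an ordinary convolution would then be applied to the result.
augmented = add_coord_channels([[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]])
```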

3.4. Res2Net-Squeezed

3.5. Squeeze-and-Excitation
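The Squeeze-and-Excitation mechanism [43] rescales each channel by a gate computed from global context. The sketch below follows the original SE formulation (global average pooling, a two-layer bottleneck with ReLU and sigmoid, then channel-wise scaling) with biases omitted and the weights passed in explicitly. The reduction ratio r and the weight values are hypothetical, and how SE is wired into the Res2Net-Squeezed block is not shown.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width
Matrix = List[List[float]]


def _matvec(w: Matrix, v: List[float]) -> List[float]:
    """Dense layer without bias: returns w @ v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def squeeze_excite(feat: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """SE-style channel recalibration with biases omitted.

    w1 is (C // r) x C and w2 is C x (C // r), where r is the reduction ratio.
    """
    # Squeeze: global average pooling -> one descriptor per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    # Excitation: FC (reduce) -> ReLU -> FC (expand) -> sigmoid gate per channel.
    hidden = [max(0.0, h) for h in _matvec(w1, z)]
    gates = [1.0 / (1.0 + math.exp(-a)) for a in _matvec(w2, hidden)]
    # Scale: multiply every value in channel c by its gate.
    return [[[v * g for v in row] for row in ch] for ch, g in zip(feat, gates)]


# Usage: two 1x2 channels, reduction to one hidden unit.
# The weights keep channel 0 and suppress channel 1.
x = [[[1.0, 3.0]], [[2.0, 2.0]]]
y = squeeze_excite(x, w1=[[1.0, 0.0]], w2=[[100.0], [-100.0]])
```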

4. Experimental Setup

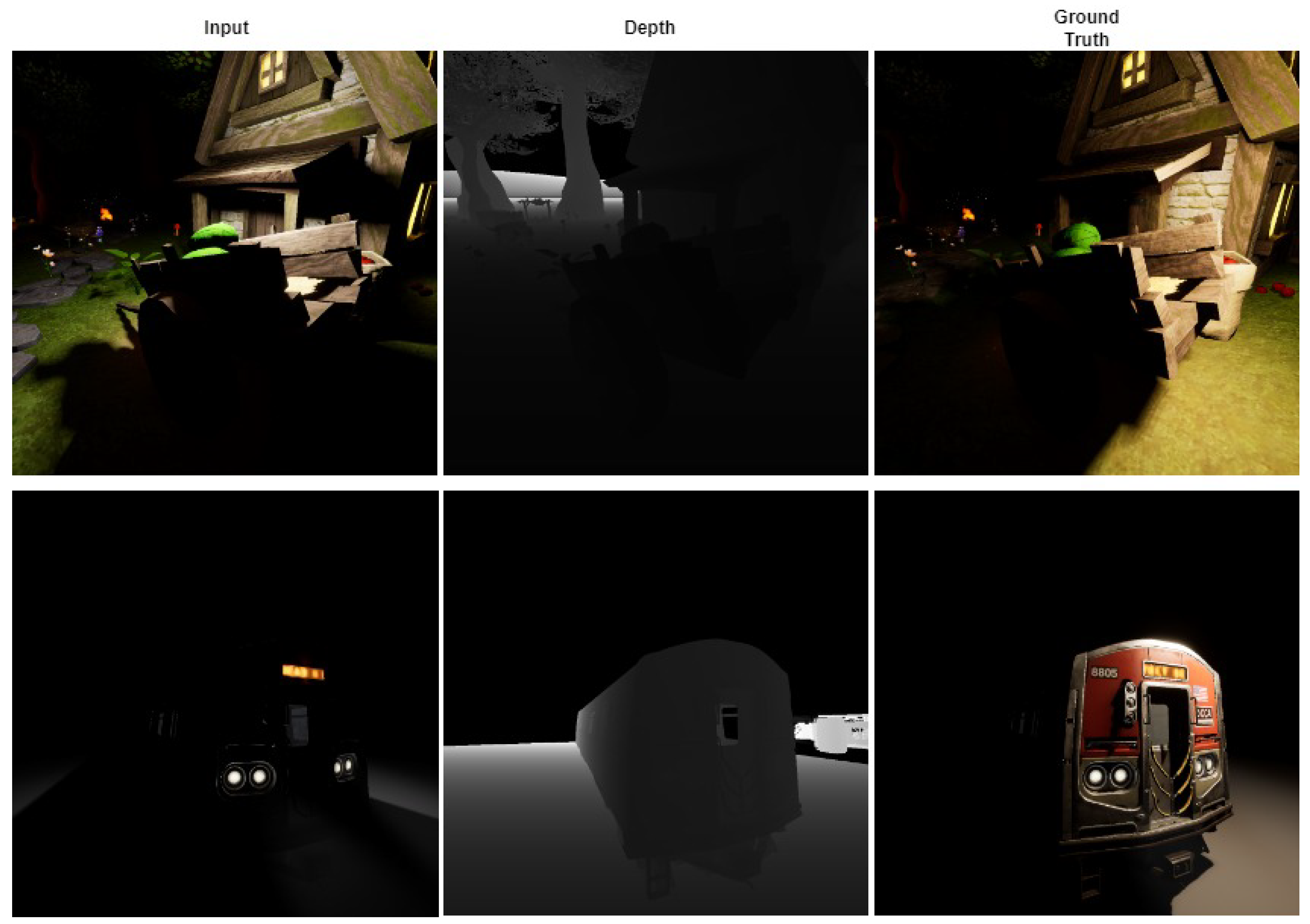

4.1. Dataset

4.2. Data Augmentation

4.3. Loss Function

4.4. Training

4.5. Ablation Study

4.5.1. Experiments on Residual Strategy

4.5.2. Experiments on Coordinate Convolutional Layer

4.6. Results and Comparison with State-of-the-Art Methods

4.6.1. Comparison for Evaluation Metrics

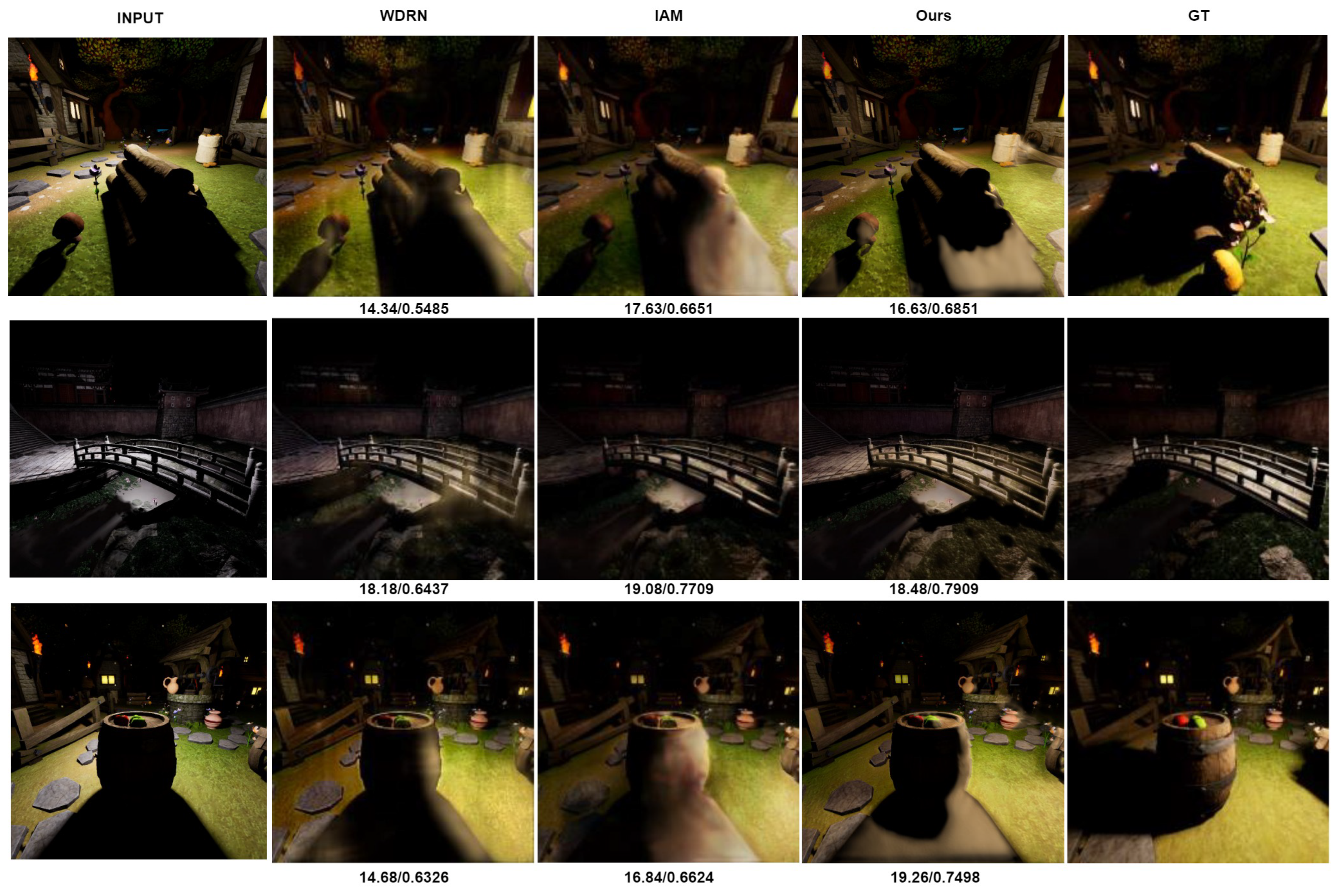

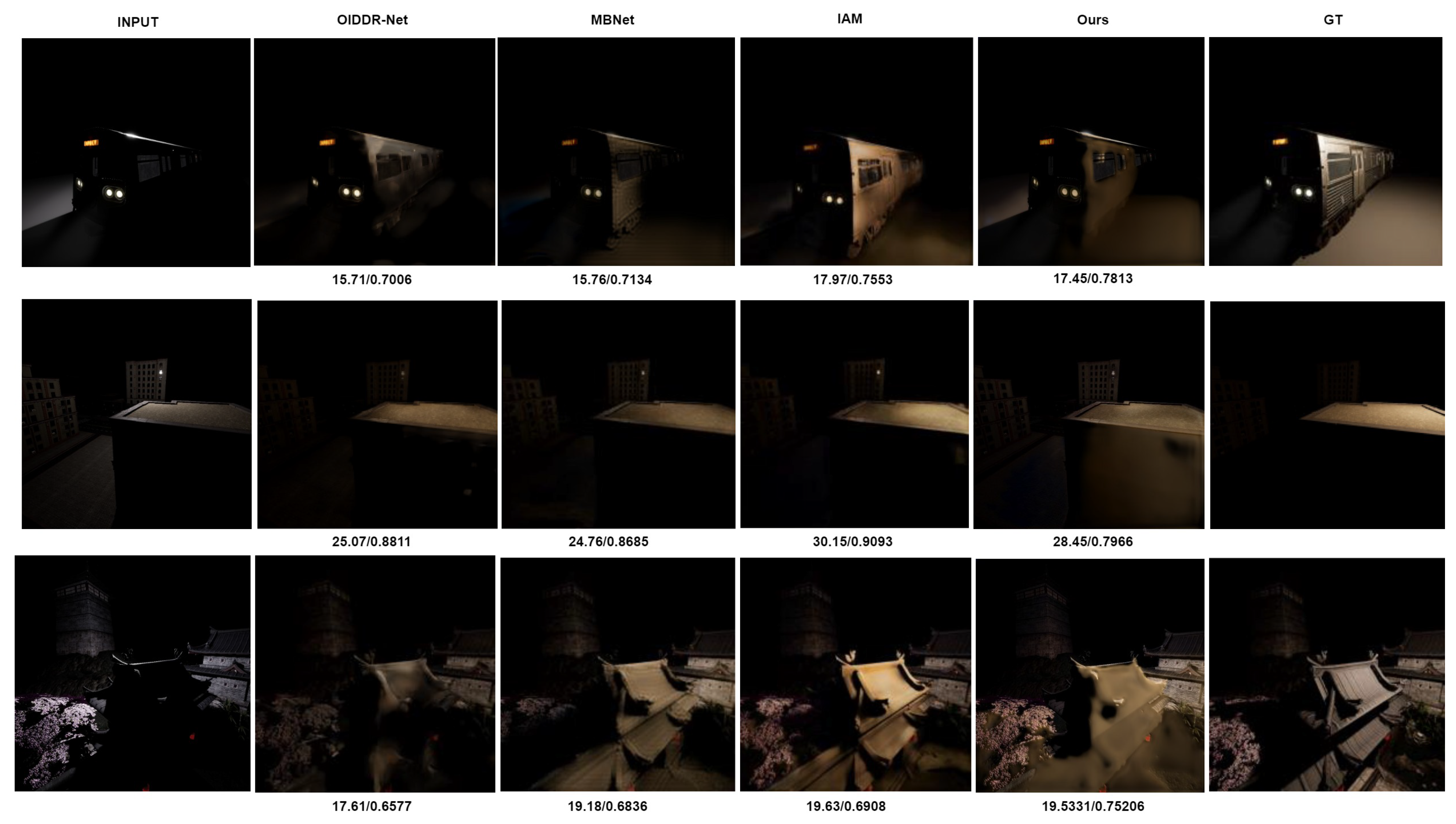

4.6.2. Comparison for Qualitative Results

4.6.3. Comparison for Model Size
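One reason Res2Net-style blocks keep a model small can be checked with simple arithmetic. The sketch below counts weights only in the hierarchical k×k stage of a Res2Net-style block [12] and compares it with one full-width convolution. It ignores the 1×1 convolutions, biases, and normalisation layers, and its helper names are hypothetical, so it does not reproduce the parameter counts compared in this section.

```python
def conv_weights(c_in: int, c_out: int, kernel: int = 3, bias: bool = False) -> int:
    """Number of learnable parameters in a 2D convolution layer."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def res2net_stage_weights(channels: int, scale: int, kernel: int = 3) -> int:
    """Weights in the hierarchical kxk stage of a Res2Net-style block.

    The channels are split into `scale` groups of width channels // scale;
    the first group is an identity, and each of the other (scale - 1)
    groups has its own width-to-width kxk convolution (no bias).
    """
    width = channels // scale
    return (scale - 1) * conv_weights(width, width, kernel)


plain = conv_weights(64, 64)                 # one full-width 3x3 convolution
hierarchical = res2net_stage_weights(64, 4)  # 4 groups of 16 channels
```

For 64 channels split into 4 groups of 16, the hierarchical stage uses 3 × 9 × 16 × 16 = 6,912 weights, compared with 9 × 64 × 64 = 36,864 for one 64-to-64 3×3 convolution.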

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Puthussery, D.; Panikkasseril Sethumadhavan, H.; Kuriakose, M.; Charangatt Victor, J. WDRN: A Wavelet Decomposed RelightNet for Image Relighting. arXiv 2020, arXiv:2009.06678.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep Retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Wang, L.; Liu, Z.; Siu, W.; Lun, D.P.K. Lightening network for low-light image enhancement. IEEE Trans. Image Process. 2020, 29, 7984–7996.

- Hu, X.; Zhu, L.; Fu, C.; Qin, J.; Heng, P. Direction-aware spatial context features for shadow detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7454–7462.

- Le, H.; Samaras, D. Shadow removal via shadow image decomposition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8578–8587.

- El Helou, M.; Zhou, R.; Barthas, J.; Süsstrunk, S. VIDIT: Virtual image dataset for illumination transfer. arXiv 2020, arXiv:2005.05460.

- Xu, Z.; Sunkavalli, K.; Hadap, S.; Ramamoorthi, R. Deep image-based relighting from optimal sparse samples. ACM Trans. Graph. (TOG) 2018, 37, 126.

- El Helou, M.; Zhou, R.; Süsstrunk, S.; Timofte, R.; Afifi, M.; Brown, M.S.; Xu, K.; Cai, H.; Liu, Y.; Wang, L.W.; et al. AIM 2020: Scene relighting and illumination estimation challenge. In Proceedings of the European Conference on Computer Vision Workshops (ECCVW), Online, 23–28 August 2020.

- El Helou, M.; Zhou, R.; Süsstrunk, S.; Timofte, R. NTIRE 2021 depth guided image relighting challenge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–21 June 2021.

- Yang, H.H.; Chen, W.T.; Kuo, S.Y. S3Net: A single stream structure for depth guided image relighting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–21 June 2021; pp. 276–283.

- Nathan, S.; Beham, M.P. LightNet: Deep Learning Based Illumination Estimation from Virtual Images. In Computer Vision—ECCV 2020 Workshops. ECCV 2020; Lecture Notes in Computer Science; Bartoli, A., Fusiello, A., Eds.; Springer: Cham, Switzerland, 2020; Volume 12537.

- Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2Net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662.

- Zhang, X.; Barron, J.T.; Tsai, Y.-T.; Pandey, R.; Zhang, X.; Ng, R.; Jacobs, D.E. Portrait shadow manipulation. ACM Trans. Graph. 2020, 39, 78–81.

- Yazdani, A.; Guo, T.; Monga, V. Physically inspired dense fusion networks for relighting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021.

- Niemeyer, M.; Geiger, A. GIRAFFE: Representing scenes as compositional generative neural feature fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Nagano, K.; Luo, H.; Wang, Z.; Seo, J.; Xing, J.; Hu, L.; Wei, L.; Li, H. Deep face normalization. ACM Trans. Graph. 2019, 6, 183.

- Srinivasan, P.P.; Deng, B.; Zhang, X.; Tancik, M.; Mildenhall, B.; Barron, J.T. NeRV: Neural reflectance and visibility fields for relighting and view synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Li, Z.; Xu, Z.; Ramamoorthi, R.; Sunkavalli, K.; Chandraker, M. Learning to reconstruct shape and spatially-varying reflectance from a single image. ACM Trans. Graph. 2018, 37, 269.

- Basri, R.; Jacobs, D.W. Lambertian reflectance and linear subspaces. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 218–233.

- Ding, B.; Long, C.; Zhang, L.; Xiao, C. ARGAN: Attentive recurrent generative adversarial network for shadow detection and removal. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 405–421.

- Nestmeyer, T.; Lalonde, J.-F.; Matthews, I.; Lehrmann, A. Learning physics-guided face relighting under directional light. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Liu, S.; Do, M.N. Inverse rendering and relighting from multiple color plus depth images. IEEE Trans. Image Process. 2017, 26, 4951–4961.

- Philip, J.; Gharbi, M.; Zhou, T.; Efros, A.A.; Drettakis, G. Multi-view relighting using a geometry-aware network. ACM Trans. Graph. 2019, 38, 78–81.

- Qiu, D.; Zeng, J.; Ke, Z.; Sun, W.; Yang, C. Towards geometry guided neural relighting with flash photography. arXiv 2020, arXiv:2008.05157.

- Yu, Y.; Meka, A.; Elgharib, M.; Seidel, H.-P.; Theobalt, C.; Smith, W.A. Self-supervised outdoor scene relighting. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

- Zhang, Y.; Tsang, I.W.; Luo, Y.; Hu, C.-H.; Lu, X.; Yu, X. Copy and paste GAN: Face hallucination from shaded thumbnails. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Ren, P.; Dong, Y.; Lin, S.; Tong, X.; Guo, B. Image based relighting using neural networks. ACM Trans. Graph. 2015, 34, 111.

- Imageworks, S.P. Physically-Based Shading Models in Film and Game Production. 2010. Available online: https://renderwonk.com/publications/s2010-shading-course/hoffman/s2010_physically_based_shading_hoffman_a_notes.pdf (accessed on 19 July 2023).

- Green, R. Spherical harmonic lighting: The gritty details. In Proceedings of the Archives of the Game Developers Conference, San Jose, CA, USA, April 2003.

- Karsch, K.; Hedau, V.; Forsyth, D.; Hoiem, D. Rendering synthetic objects into legacy photographs. ACM Trans. Graph. 2011, 30, 1–12.

- Wang, J.; Dong, Y.; Tong, X.; Lin, Z.; Guo, B. Kernel Nyström method for light transport. ACM Trans. Graph. 2009, 29.

- Han, Z.; Tian, J.; Qu, L.; Tang, Y. A new intrinsic-lighting color space for daytime outdoor images. IEEE Trans. Image Process. 2017, 26, 1031–1039.

- Gafton, P.; Maraz, E. 2D image relighting with image-to-image translation. arXiv 2020, arXiv:2006.07816.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Pang, Y.; Zhang, L.; Zhao, X.; Lu, H. Hierarchical dynamic filtering network for RGB-D salient object detection. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020.

- Yang, H.H.; Chen, W.T.; Luo, H.L.; Kuo, S.Y. Multi-modal bifurcated network for depth guided image relighting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–21 June 2021; pp. 260–267.

- Kansal, P.; Nathan, S. Insta Net: Recurrent Residual Network for Instagram Filter Removal. In Proceedings of the Thirteenth Indian Conference on Computer Vision, Graphics and Image Processing (ICVGIP ’22), Gandhinagar, India, 8–10 December 2022; pp. 1–7.

- Kansal, P.; Devanathan, S. EyeNet: Attention Based Convolutional Encoder-Decoder Network for Eye Region Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3688–3693.

- Nathan, S.; Kansal, P. SkeletonNetV2: A Dense Channel Attention Blocks for Skeleton Extraction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2142–2149.

- Liu, R.; Lehman, J.; Molino, P.; Such, F.P.; Frank, E.; Sergeev, A.; Yosinski, J. An intriguing failing of convolutional neural networks and the CoordConv solution. Adv. Neural Inf. Process. Syst. 2018, 9605–9616.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss Functions for Image Restoration with Neural Networks. IEEE Trans. Comput. Imaging 2017, 3, 47–57.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

- Wang, L.-W.; Siu, W.-C.; Liu, Z.-S.; Li, C.-T.; Lun, D.P. Deep relighting networks for image light source manipulation. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020.

- Zhan, F.; Yu, Y.; Zhang, C.; Wu, R.; Hu, W.; Lu, S.; Ma, F.; Xie, X.; Shao, L. GMLight: Lighting estimation via geometric distribution approximation. IEEE Trans. Image Process. 2022, 31, 2268–2278.

- Zhu, Z.L.; Li, Z.; Zhang, R.X.; Guo, C.L.; Cheng, M.M. Designing an illumination-aware network for deep image relighting. IEEE Trans. Image Process. 2022, 31, 5396–5411.

- Zhou, H.; Hadap, S.; Sunkavalli, K.; Jacobs, D.W. Deep single-image portrait relighting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2019.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. IEEE Conf. Comput. Vis. Pattern Recognit. 2018, 586–595.

| Variant | SSIM | PSNR (dB) |
|---|---|---|
| w/o residual learning | 0.6734 | 16.88 |
| Vanilla residual block [46] | 0.6801 | 17.34 |
| Vanilla Res2Net [12] | 0.7008 | 18.09 |
| Res2Net-Squeezed block | 0.7185 | 19.48 |

| Variant | SSIM | PSNR (dB) |
|---|---|---|
| w/o Coordinate Conv layer | 0.6878 | 16.88 |
| w/ Coordinate Conv layer | 0.7185 | 19.48 |

| Method | SSIM | PSNR (dB) | LPIPS |
|---|---|---|---|
| pix2pix [36] | 0.489 | 15.59 | 0.4827 |
| DRN [48] | 0.6151 | 17.59 | 0.392 |
| WDRN [1] | 0.6442 | 17.46 | 0.3299 |
| DPR [51] | 0.6389 | 18.01 | 0.3599 |
| OIDDR-Net [49] | 0.7039 | 18.4 | 0.2837 |
| S3Net [10] | 0.7022 | 19.24 | - |
| MBNet [38] | 0.7175 | 19.36 | 0.2928 |
| IAM [50] | 0.7234 | 19.7 | 0.2755 |
| Ours | 0.7185 | 19.48 | 0.2831 |
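PSNR is conventionally defined as 10·log10(MAX²/MSE), where MSE is the mean squared error between the relit output and the ground-truth image and MAX is the peak pixel value. A minimal sketch on flattened pixel lists follows; the peak value (255 for 8-bit images, 1.0 for normalised ones) and how scores are averaged over channels and test images are assumptions, since the evaluation protocol is not described in this section.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized flat images."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Usage on images normalised to [0, 1]: an MSE of 0.01 gives about 20 dB.
score = psnr([0.1, 0.1], [0.0, 0.0], max_value=1.0)
```

Because PSNR is logarithmic in MSE, each 1 dB gain corresponds to roughly a 21% reduction in mean squared error.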

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nathan, S.; Kansal, P. End-to-End Depth-Guided Relighting Using Lightweight Deep Learning-Based Method. J. Imaging 2023, 9, 175. https://doi.org/10.3390/jimaging9090175


Nathan, Sabari, and Priya Kansal. 2023. "End-to-End Depth-Guided Relighting Using Lightweight Deep Learning-Based Method" Journal of Imaging 9, no. 9: 175. https://doi.org/10.3390/jimaging9090175