1. Introduction

Malaria, a widespread disease, is induced by the Plasmodium parasite and is transmitted to humans via bites from infected female Anopheles mosquitoes. In the year 2019, there were approximately 229 million reported cases of malaria globally, leading to 409,000 fatalities. Significantly, 94% of both malaria cases and associated deaths were concentrated in Africa, with children below the age of five identified as the most-susceptible demographic, constituting 67% of the total malaria-related deaths worldwide.

Although methods for the clinical diagnosis of malaria, such as molecular diagnostics with Real-Time Polymerase Chain Reaction (RT-PCR) [1], have been proposed in recent years, microscopy is the most-appropriate method for the detection of malaria in the field [2] and for blood diseases or infections in general. Such conditions are detected by analyzing blood cells on peripheral blood slides under a light microscope. Thus, in addition to the diagnosis of malaria infection [3,4,5], examples include the detection of leukemia [6,7,8,9] and the counting of blood cells [10,11,12,13,14].

Malaria, a disease caused by parasites belonging to the genus Plasmodium, manifests in humans through the invasion of Red Blood Cells (RBCs). Transmission occurs via the bites of infected female Anopheles mosquitoes, commonly known as “malaria vectors”. There are five main types of parasites responsible for human malaria: P. falciparum (P.f.), P. vivax (P.v.), P. ovale (P.o.), P. malariae (P.m.), and P. knowlesi (P.k.), with the first two posing the greatest threat [15,16].

The life stages of these parasites within the human host include the ring, trophozoite, schizont, and gametocyte phases. Human malaria, as defined by the World Health Organization (WHO), is considered a preventable and treatable condition if diagnosed promptly. Failure to address the disease promptly may lead to severe complications such as disseminated intravascular thrombosis, tissue necrosis, and splenic hypertrophy [16,17,18,19].

Nevertheless, the symptoms induced by malaria often closely resemble those associated with diseases such as viral hepatitis, dengue fever, and leptospirosis, thereby complicating the diagnostic process [2,20]. Several diagnostic methods have been developed to overcome this problem. However, the currently available diagnostic tools often neglect or fail to distinguish between non-falciparum types [2]. In addition, several factors complicate the identification of these species. For example, parasitemia is typically very low in P.v.- and P.m.-infected individuals [21]; P.v. and P.o. are characterized by the slow development of some of their sporozoites (early stage of schizonts), forming hypnozoites, which are difficult to detect [22]. Infections with non-falciparum species are often asymptomatic, which makes their detection even more difficult because infected people do not seek treatment at a health facility due to the lack of symptoms. These factors underscore the need to keep infectious diseases under control, especially in underdeveloped countries where medical centers are either not nearby or unable to handle many patients [16].

Manual microscopy of Peripheral Blood Smears (PBSs) has several advantages for malaria diagnosis, including low cost, portability, specificity, and sensitivity [23]. However, there are many problems associated with this method. Examples include technical skills in slide preparation; lysis of red blood cells and related changes in parasite morphology (leading to errors in species identification); quality and illumination of the microscope; the staining procedure; the competence and care of the microscopist; and finally, the level of parasitemia [2].

Moreover, the manual process is typically laborious and time-intensive, and incorrect diagnoses may result in unwarranted drug administration, with potential exposure to associated side effects or severe disease progression.

Further problems for this type of analysis are caused by the fact that, in many cases, only microscopy or rapid tests are available as diagnostic tools. Several studies have shown consistent errors in Plasmodium species identification by microscopists, such as missed P.o. infections with low parasite densities, P.f.-infected specimens misidentified as P.m., and P.o. slides misidentified as P.v., which could lead to ineffective treatment administration and increase the risk of severe malaria. Furthermore, it is common to fail to distinguish early trophozoites of P.v. from those of P.f., especially when parasitemia is low, as well as P.m. from other Plasmodium species using a microscopic method [24]. The similar morphologies of the malaria species can also cause mixed infections to be misdiagnosed [2]. These events can also lead to a worsening of the clinical picture.

Accurate and timely malaria diagnosis is crucial for effective treatment and preventing severe complications. While traditional methods like microscopy remain the gold standard, recent developments in deep learning, specifically deep Convolutional Neural Networks (CNNs), have shown promising results in malaria cell image analysis.

Several studies have explored the application of deep CNNs in malaria diagnosis at the single-cell level, emphasizing the importance of accurately identifying whether a cell is infected with the malaria parasite [25,26,27,28,29,30].

Despite the advances produced by these methods, the use of datasets composed of images presenting monocentric cells represents an overly ideal scenario in which salient and highly discriminating features can be extracted from the images. This is valid only under the assumption that pathologists take crops manually or that detection systems provide perfect crops. However, this assumption does not hold in real-world application scenarios because the systems are fully automated, and therefore, the crops cannot always be accurate or perfectly centered [31,32,33,34,35].

Other challenges exist, such as discriminating between different Plasmodium species and managing the complexities associated with low parasitemia levels and asymptomatic infections. Consequently, the exact localization of parasites within cells, obtained through precise bounding box detection, could offer valuable insights for in-depth studies and detailed diagnosis [5,36,37]. Therefore, integrating deep learning techniques with object detection capabilities becomes essential in this context. This integration allows accurate classification of infected cells and precise localization of parasites within these cells, providing comprehensive information for detailed analysis and diagnosis.

These challenges motivated this work. Its objective was to devise a methodology named the Parasite Attention Module (PAM), which was incorporated into the You Only Look Once (YOLO) architecture. This methodology was designed to automatically detect malaria parasites, addressing the limitations associated with the prevailing gold-standard microscopy technique. Specifically, the main contributions of this research are summarized as follows: (i) the development of a novel Transformer- and attention-based object-detection architecture based on the latest version of YOLO for malaria parasite detection; (ii) the investigation and extension of the proposal to the four different species for mixed or intra-species detection; (iii) the evaluation on two different datasets, including intra-dataset experimentations, based on the different species.

The rest of this article is organized as follows. First, the related work is presented in Section 2, and then, the materials and methods are described in Section 3. The proposed architecture is described in Section 4, while the experiments and results are presented in Section 5, along with a discussion of every investigation. Finally, the conclusions are drawn in Section 6.

2. Literature Review

In recent years, the field of computer vision has proposed various Computer-Aided Diagnosis (CAD) solutions aimed at automating the detection of malaria parasites. These endeavors seek to alleviate the challenges associated with manual analysis, offering a more-reliable and standardized interpretation of blood samples. This automation, in turn, can potentially mitigate diagnostic costs [37,38].

Before the emergence of deep learning techniques, malaria parasite detection in images relied on classical methods involving multiple steps: image preprocessing, object detection or segmentation, feature extraction, and classification. Techniques like mathematical morphology for preprocessing and segmentation [31,32], along with handcrafted features [33,34], have been used to train machine learning classifiers. The landscape of computer vision approaches for malaria parasite detection underwent a significant transformation with the introduction of AlexNet’s Convolutional Neural Network (CNN) [39], marking a paradigm shift.

Various deep learning approaches have been proposed as alternatives to classical methods for this task, as evidenced by numerous studies published in the last decade [5,25,30,36,37,40].

In the context of deep learning approaches, existing works on malaria can be divided into two categories. Works that perform classification on images containing single cells aim to identify the most-appropriate classifier to discriminate between parasitized and healthy cells by proposing custom CNN architectures or using off-the-shelf CNNs [25,26,27,28,29,30,41]. Additionally, Rajaraman et al. explored the performance of deep neural ensembles [30]. These methods typically use the NIH [29] dataset as a reference. More recently, Sengar et al. examined the use of vision Transformers on the same dataset [42].

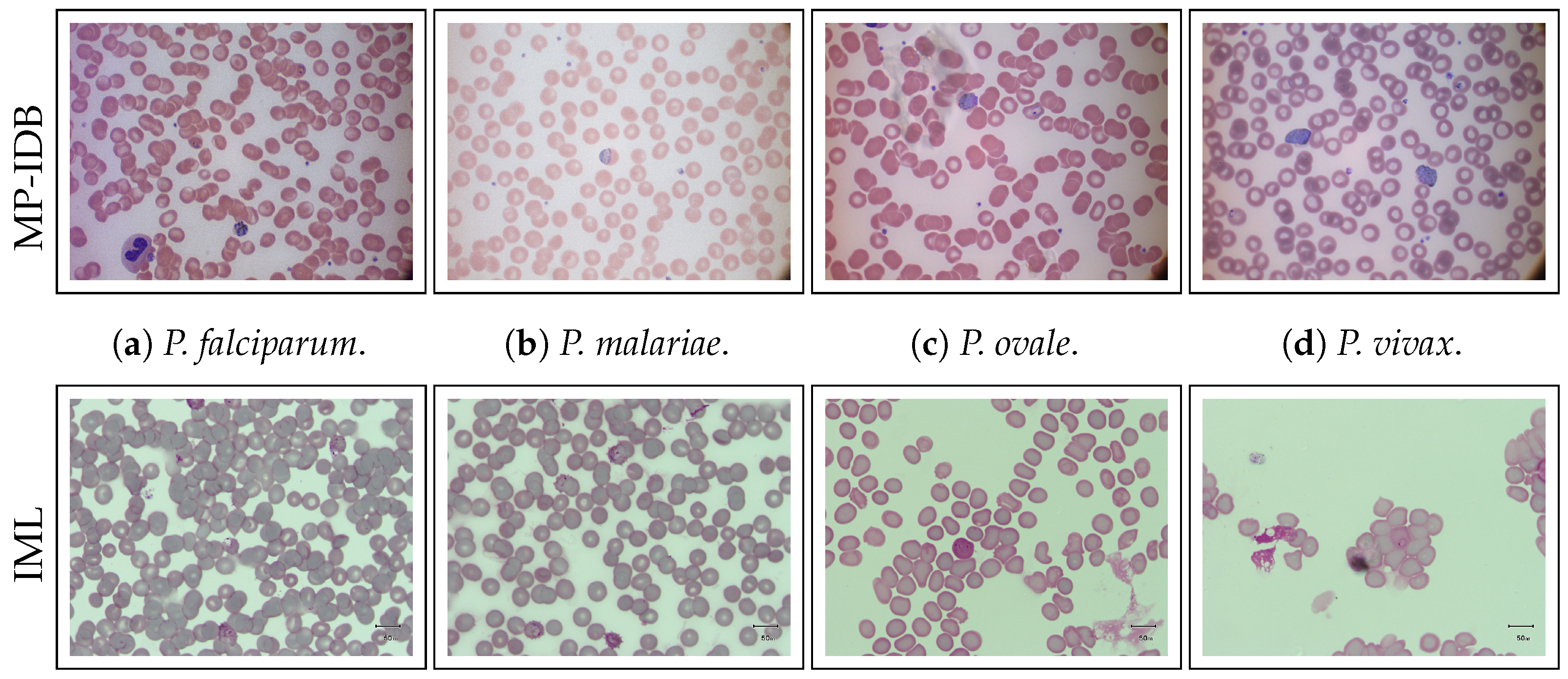

On the other hand, works proposing full pipelines typically perform parasite detection on whole images and rely on several existing datasets, such as BBC041 [36], MP-IDB [43,44], IML [40], or M5 [37].

Arshad et al. proposed a dataset containing P. vivax malaria species in four life cycle stages. The authors presented a deep-learning-based life cycle stage classification, where the ResNet-50v2 network was selected for single-stage multi-class classification [40].

Sultani et al. collected a new malaria image dataset with multiple microscopes and magnifications using thin-blood smear slides. They obtained two variations of the dataset, one from Low-Cost Microscopes (LCMs) and another from High-Cost Microscopes (HCMs), aiming to replicate the challenges associated with real-world image acquisition in resource-limited environments. To address the malaria detection task, the authors used several object detectors. In addition, they also discussed the issue of microscope domain adaptation tasks and tested some off-the-shelf domain adaptation methods. The optimal performance emerged through the application of ranking combined with triplet loss, with the HCM serving as the source domain and the LCM as the target domain [37].

Since malaria parasites consistently target erythrocytes, automated malaria detection systems must analyze these cells to determine infection and classify the associated life stages. Much of the existing literature addresses only the classification problem without considering the detection problem. Additionally, considerable emphasis has been placed on developing mobile devices to facilitate cost-effective and rapid malaria diagnosis, particularly in underdeveloped regions where access to more-expensive laboratory facilities is limited [34].

Regarding dataset utilization, some studies have employed the same datasets as utilized in this investigation. As of the current writing, a limited number of studies have used MP-IDB [5,44,45], whereas IML has only been utilized by its proposers [40]. Maity et al. implemented a semantic segmentation technique followed by the application of a Capsule Network (CapsNet) for the categorization of P.f. rings [5], whereas Rahman et al. conducted a comparative evaluation involving various off-the-shelf networks for binary classification purposes [45].

The principal distinctions between our study and the existing state-of-the-art methodologies stem from deploying a detector with a dual objective: identifying distinct types of malaria-infected RBCs and discriminating various life stages within a unified framework.

Compared to the works defined so far, this work aimed to provide a lightweight and effective method to detect malaria parasites of any species and life stage.

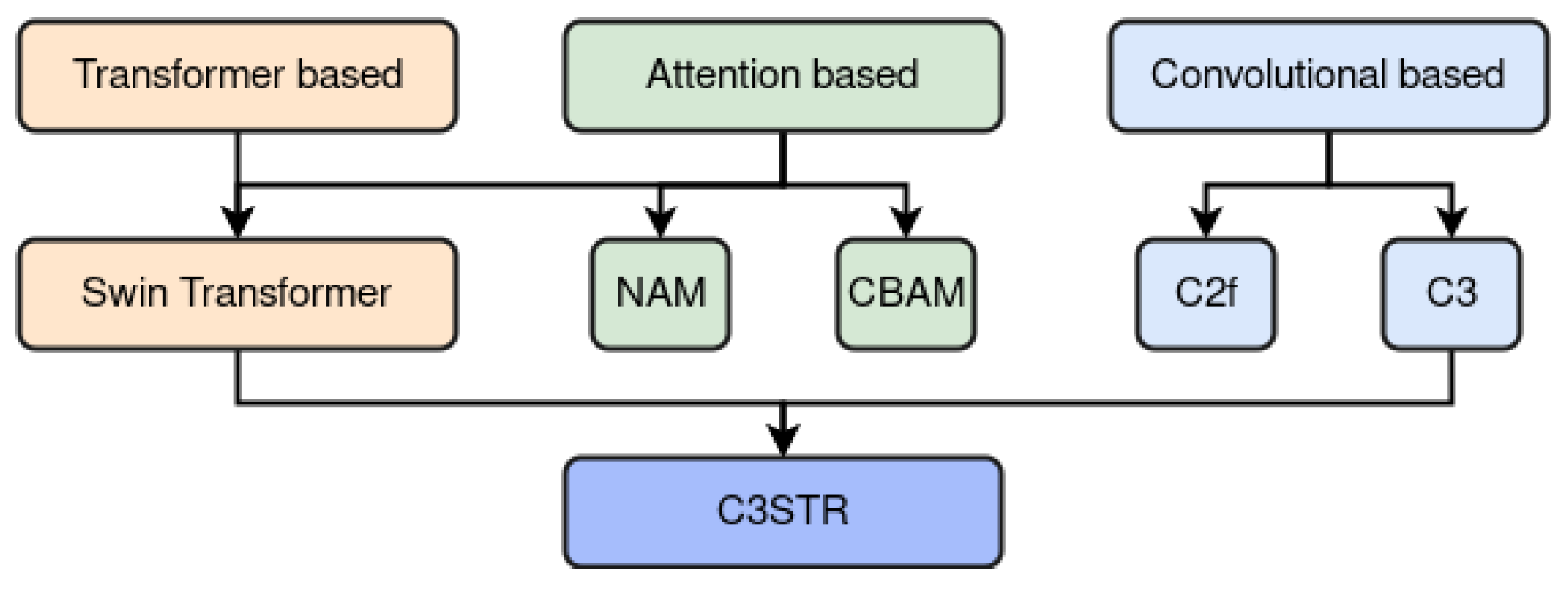

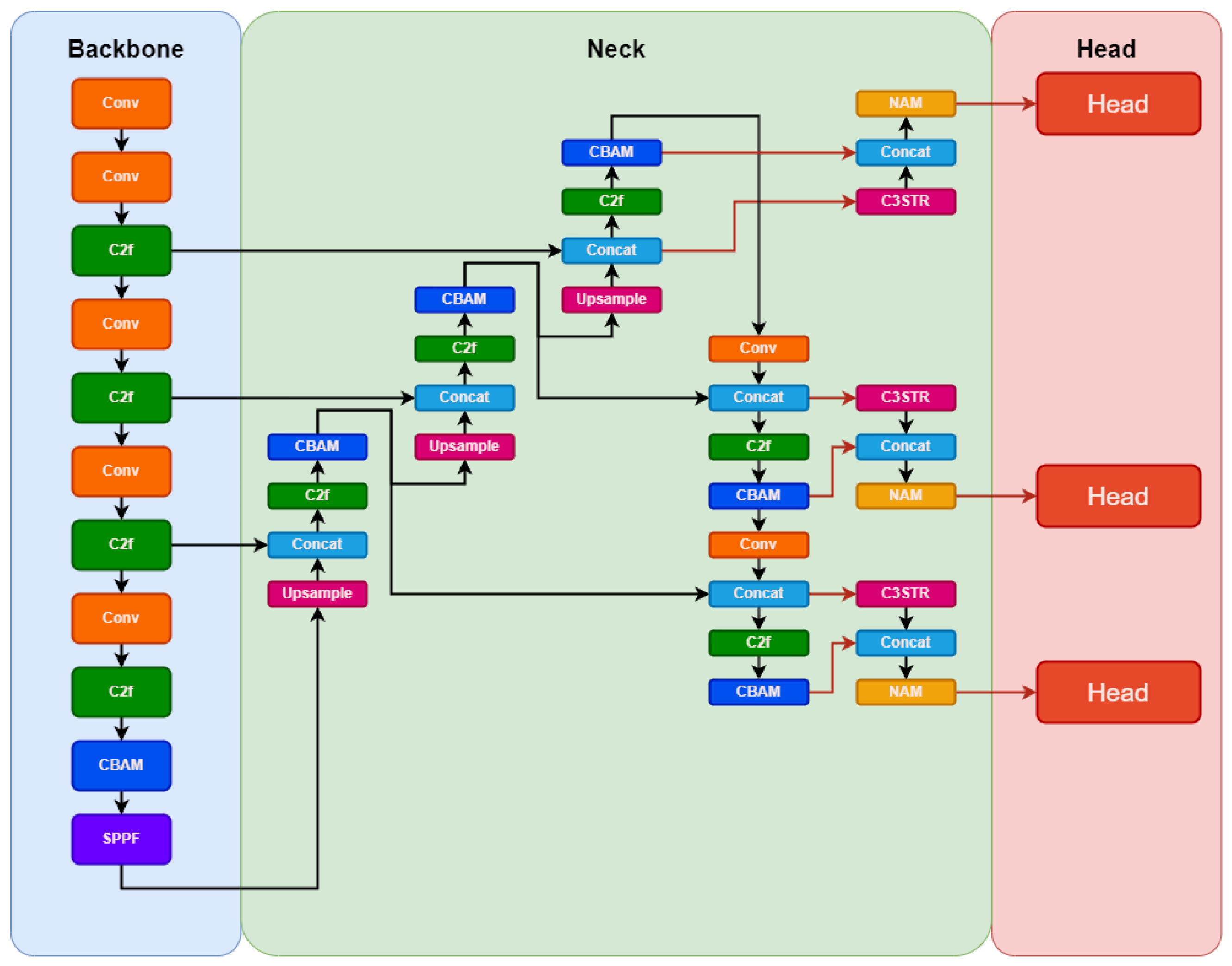

4. The Proposed Network: YOLO-PAM

In addition to proposing an efficient and precise malaria-parasite-detection system, this study aimed to overcome the limitations of existing state-of-the-art methods. Our objective was threefold. First, we aimed to achieve the speed and compactness typical of one-stage detectors while maintaining high accuracy without the need for a secondary stage, i.e., the classification stage. Second, we integrated Transformer models, steering clear of an end-to-end DETR framework to avoid excessive complexity and sluggish convergence. Last, we tackled the detection of parasites of varying sizes, from small to large, within a unified system, eliminating the need for an additional specialized subsystem.

Our methodology primarily focused on enhancing the efficiency and accuracy of the medium-sized one-stage detector, YOLOv5m6. Selected for its characteristics, this model comprised 35.7 million trainable parameters and was pre-trained on images sized at

pixels. Modifying its final layer equipped it to effectively detect all four phases of the malaria life cycle. This model balances network depth and parameter count, making it highly suitable for low-end computational resources and mobile devices [

66].

Our proposed YOLO-PAM model aimed for a lighter approach compared to YOLOv5m6. To achieve this, we adopted the same fundamental concepts of YOLOv5m6 for the YOLOv8m architecture. We also reduced the model’s width to 3/4 of its original, resulting in fewer filters used and enabling faster training and inference. The architectural proposal is illustrated in Figure 3.
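To make the effect of the 3/4 width reduction concrete, the following minimal sketch scales a set of per-layer filter counts by a width factor and rounds the result up to a hardware-friendly multiple. The base filter counts, the divisor of 8, and the function name `scale_channels` are illustrative assumptions, not values taken from the YOLO-PAM configuration.

```python
import math


def scale_channels(base_channels: int, width: float = 0.75, divisor: int = 8) -> int:
    """Scale a filter count by `width`, rounding up to a multiple of `divisor`."""
    return int(math.ceil(base_channels * width / divisor) * divisor)


# Hypothetical full-width filter counts for four backbone stages (assumption).
base = [64, 128, 256, 512]
scaled = [scale_channels(c) for c in base]
print(scaled)  # [48, 96, 192, 384]
```

Because the weight count of a convolution grows with the product of its input and output channels, scaling both by 0.75 leaves roughly 0.75 × 0.75 ≈ 56% of that layer's weights, which is where most of the saving would come from under these assumptions.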

A key contribution involved strategically integrating multiple CBAM attention modules within vital components of the baseline architecture, such as the backbone and neck, influencing the prediction heads. This enhancement builds upon prior research [58], demonstrating the efficacy of these modules in improving classification and detection tasks. We made specific modifications to the original YOLOv8 architecture:

1. We excluded prediction heads designed for large objects, retaining those tailored for medium-sized ones. This decision aimed to prevent unnecessary computational overhead associated with handling excessively large objects and directed the architectural focus towards the precise dimensions of the objects in the images, specifically those of small and medium size in terms of pixel count.

2. Moreover, an additional head was incorporated to use features from the lower layers of the model’s backbone. These layers offer less-refined features, but possess higher resolution, a critical aspect for the precise detection of smaller objects. Leveraging lower backbone layers allows the extraction of high-resolution features, essential for discriminating objects occupying minimal pixels. Higher layers excel in discerning medium-sized objects, but might lose the details of smaller objects due to the reduced feature map resolution caused by the convolution.

3. A further contribution entailed the integration of features extracted from the C3STR layers with those acquired from the C3 layers immediately followed by the CBAM layers. Subsequently, a NAM module was applied to introduce further attention to the resulting feature maps. This procedure endowed the prediction heads with the most refined features the model could generate, a detail highlighted by the orange arrows in the schematic representation illustrated in Figure 3.
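The trade-off between feature refinement and resolution described in the second modification can be illustrated with simple arithmetic. The sketch below assumes a 640-pixel input and the strides 4, 8, 16, and 32 that are typical of YOLO-style feature pyramids; neither value is stated in this section, and the level names are only labels.

```python
def grid_size(image_size: int, stride: int) -> int:
    """Side length of the feature map produced at a given stride."""
    return image_size // stride


def cells_covered(object_pixels: int, stride: int) -> float:
    """How many feature-map cells an object of the given side length spans."""
    return object_pixels / stride


IMAGE_SIZE = 640  # assumed input resolution
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}  # assumed pyramid strides

for level, stride in STRIDES.items():
    # A hypothetical 16-pixel-wide parasite is used as the example object.
    print(level, grid_size(IMAGE_SIZE, stride), cells_covered(16, stride))
```

Under these assumptions, a 16-pixel object spans four cells at stride 4 but only half a cell at stride 32, which is consistent with the motivation for adding a head on lower backbone layers and dropping the head aimed at large objects.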

In contrast to alternative strategies that integrate Transformers and attention mechanisms by substituting the last C3 layer with Transformer Blocks [65,67], our approach diverged significantly. Our objective was to preserve the distinctive locally specialized features intrinsic to CNNs while incorporating global features extracted through vision Transformers. This approach involved merging these two feature types within the model heads, enabling the retention of the nuanced advantages associated with global and local features. In summary, our strategy involved integrating three different attention mechanisms (CBAM, NAM, and C3STR), aiming to leverage their respective advantages.
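For reference, the attention computed by a CBAM block can be written compactly. The formulation below follows the standard definition of CBAM (sequential channel and spatial attention); how YOLO-PAM configures the shared MLP or the convolution kernel is not stated in this section, so the 7 × 7 kernel shown is the default of the original CBAM formulation rather than a confirmed setting of this work.

```latex
% F: input feature map, \otimes: element-wise multiplication, \sigma: sigmoid
F'  = M_c(F) \otimes F, \qquad
F'' = M_s(F') \otimes F'

% Channel attention: a shared MLP applied to average- and max-pooled descriptors
M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big)

% Spatial attention: a convolution over channel-wise pooled maps
M_s(F) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F);\, \mathrm{MaxPool}(F)])\big)
```

The channel term reweights which feature channels matter, and the spatial term reweights where in the map to look, which is why such blocks are attractive for small, localized targets such as parasites.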

5. Experimental Results

This section delineates the conducted experimental evaluation, starting with a comprehensive overview of the experimental setup detailed in Section 5.1. Additionally, it provides details regarding dataset splits and the implemented data augmentation, aiming to offer a comprehensive understanding of the experimental design. Subsequent sections are dedicated to specific aspects of the evaluation: Section 5.2 delves into the ablation study, while Section 5.3 and Section 5.4, respectively, present the results on MP-IDB and IML. Furthermore, Section 5.5 furnishes an overview of the qualitative results, and lastly, Section 5.6 undertakes a comparative analysis between our proposed architecture and the state-of-the-art methods.

5.1. Experimental Setup

The experiments were performed on a workstation with the following hardware specifications: an Intel(R) Core(TM) i9-8950HK @ 2.90 GHz CPU, 32 GB RAM, and an NVIDIA GTX1050 Ti GPU with 4 GB memory. We used the PyTorch implementation of YOLOv8 (available at: https://github.com/ultralytics/ultralytics (accessed on 28 November 2023)), developed by Ultralytics LLC, and YOLOAir’s implementation of C3STR (available at: https://github.com/iscyy/yoloair (accessed on 28 November 2023)). The backbones used were ResNet-50 pretrained on ImageNet with the FPN and Darknet53 for YOLO. All YOLO-based networks were initialized using pre-trained weights on the COCO2017 dataset [55]. Adam served as the optimizer, configured with a learning rate of 0.001 and a momentum of 0.9. Each model underwent training for 100 epochs, employing a batch size of 4.
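The training hyperparameters listed above can be collected in one place, as in the following sketch. The class `TrainConfig`, the helper `iterations_per_epoch`, and the image count of 1000 are hypothetical and serve only to show how the reported settings relate to one another; they are not part of the Ultralytics API or of the authors' code.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text; field names are illustrative."""
    optimizer: str = "Adam"
    learning_rate: float = 0.001
    momentum: float = 0.9  # with Adam this is commonly mapped to beta1 (assumption)
    epochs: int = 100
    batch_size: int = 4
    pretrained_on: str = "COCO2017"


def iterations_per_epoch(num_train_images: int, batch_size: int) -> int:
    """Number of optimizer steps per epoch (ceiling division)."""
    return -(-num_train_images // batch_size)


cfg = TrainConfig()
print(asdict(cfg))
# 1000 training images is a made-up figure used only for the arithmetic.
print(iterations_per_epoch(1000, cfg.batch_size))  # 250
```

A batch size of 4 is small, which is plausible given the 4 GB of GPU memory reported for the workstation.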

Datasets’ split: For MP-IDB, each parasite class was allocated a split of 60% for training, 20% for validation, and 20% for testing. The original splits proposed by the authors were retained for IML [40]. Detailed information regarding the dimensions of the parasites can be found in Table 1.
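A per-class 60/20/20 split of the kind described for MP-IDB could be implemented along the following lines. This is a minimal sketch: the function name, the fixed seed, and the synthetic sample identifiers are assumptions, and the authors' actual splitting procedure is not described beyond the percentages.

```python
import random
from collections import defaultdict


def split_per_class(samples, train=0.6, val=0.2, seed=0):
    """Split (image_id, class_label) pairs into train/val/test within each class."""
    by_class = defaultdict(list)
    for image_id, label in samples:
        by_class[label].append(image_id)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_class):
        ids = sorted(by_class[label])
        rng.shuffle(ids)
        n_train = round(len(ids) * train)
        n_val = round(len(ids) * val)
        splits["train"] += ids[:n_train]
        splits["val"] += ids[n_train:n_train + n_val]
        splits["test"] += ids[n_train + n_val:]  # remainder goes to test
    return splits


# Synthetic example: 10 images for each of two classes (made-up identifiers).
samples = [(f"{label}_{i}", label) for label in ("P.f.", "P.m.") for i in range(10)]
splits = split_per_class(samples)
print({name: len(ids) for name, ids in splits.items()})
```

Splitting within each class, rather than over the pooled images, keeps the class proportions the same in all three subsets.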

Data augmentation: We generated 35 distinct augmented samples from each original sample for every species. This augmentation strategy aimed to enhance the diversity of the training data, address data imbalance issues, bolster the models against potential object rotations, and enable targeted generalization capabilities. We chose a milder augmentation approach due to the vulnerability of certain parasites. Specific augmentation techniques, such as shearing, were observed to have the potential to adversely affect parasites, with notable implications for those of smaller dimensions [44]. Table 2 shows the applied augmentations.

5.2. Ablation Study

Table 3 presents the results of the ablation study conducted on the P.f. split of the MP-IDB dataset, specifically chosen due to its diverse representation of different life stages and the presence of parasites of varying sizes, ranging from small to large. The objective of these experiments was to systematically evaluate the impact of various modifications on the detection performance. Four different configurations were tested. The baseline method (YOLOv8m) achieved an AP of 78.9%. When incorporating CBAM alone, the performance improved marginally to 79.6%. By focusing solely on the modifications in the backbone architecture (C3), the AP score increased to 81.2%. The most-substantial improvement was observed when both the CBAM and C3 modifications were integrated (CBAM + C3), resulting in an AP score of 83.6%. The table thus shows the contribution of each modification and indicates that the combined enhancements yield the largest gain in malaria parasite detection accuracy.
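The gains implied by the ablation figures can be checked with a few lines of arithmetic. The AP values below are those quoted in the text; the dictionary keys are only labels for the four configurations.

```python
# AP (%) on the P.f. split of MP-IDB, as reported in the ablation study.
ablation_ap = {
    "YOLOv8m (baseline)": 78.9,
    "CBAM": 79.6,
    "C3": 81.2,
    "CBAM + C3": 83.6,
}

baseline = ablation_ap["YOLOv8m (baseline)"]
# Absolute improvement over the baseline, in percentage points.
gains = {name: round(ap - baseline, 1) for name, ap in ablation_ap.items()}
sum_of_individual_gains = round(gains["CBAM"] + gains["C3"], 1)

print(gains)
print(sum_of_individual_gains)
```

On these figures, the combined configuration improves on the baseline by 4.7 points, more than the 3.0 points obtained by adding the two individual gains, which suggests that the two modifications complement each other rather than overlap.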

5.3. Experimental Results on MP-IDB

Table 4 presents a detailed quantitative assessment of malaria parasite detection performance across four species (P.f., P.m., P.o., and P.v.) within MP-IDB. The evaluation employed multiple detection methods: Faster R-CNN, RetinaNet, FCOS, YOLOv8m, and the proposed YOLO-PAM.

In the P.f. class, YOLO-PAM achieved an AP of 83.6%, outperforming the other methods, including YOLOv8m (78.9%). It also surpassed Zedda et al.’s [44] method by 3.7% in the AP. In the P.m. category, YOLO-PAM achieved an AP of 93.6%, surpassing the baseline YOLOv8m’s AP of 78.8%. Similarly, in the P.o. class, YOLO-PAM achieved an AP of 94.4%, compared with YOLOv8m’s AP of 89.7%. In the P.v. category, YOLO-PAM attained an AP of 87.2%, compared with YOLOv8m’s AP of 85.9%.

Notably, YOLO-PAM consistently outperformed other methods across all parasite species, as evidenced by the bolded entries in the table. These numerical results underscore the effectiveness of the proposed YOLO-PAM in malaria parasite detection, emphasizing its accuracy and robustness in identifying parasites of varying sizes within different species.

5.4. Experimental Results on IML

Table 5 provides a detailed analysis of the malaria parasite detection performance across multiple methods within the IML dataset [40].

In the context of the overall AP, YOLO-PAM emerged as the most-effective method, achieving a remarkable AP of 59.9%, outperforming other methods such as the FRCNN (27.9%), RetinaNet (24.2%), FCOS (7.2%), and even the baseline, YOLOv8m (56.2%). YOLO-PAM’s superior performance is further highlighted by its excellent AP score of 91.8%, indicating its ability to accurately detect parasites with a high IoU threshold.
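Since AP scores depend on the IoU threshold used to decide whether a predicted box matches the ground truth, a short sketch of that matching criterion may help. The box format `(x1, y1, x2, y2)`, the function names, and the example coordinates are illustrative assumptions.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, gt, threshold=0.5):
    """A prediction counts as correct if its IoU with the ground truth meets the threshold."""
    return iou(pred, gt) >= threshold


ground_truth = (10, 10, 50, 50)
loose_pred = (20, 20, 60, 60)   # overlaps, but poorly aligned
tight_pred = (12, 12, 52, 52)   # nearly aligned with the ground truth

print(round(iou(ground_truth, loose_pred), 3))  # 0.391
print(round(iou(ground_truth, tight_pred), 3))  # 0.822
```

The loosely aligned prediction would be rejected at a threshold of 0.5, while the tightly aligned one would be accepted at 0.5 and 0.75 but not at 0.9, which is why AP averaged over several thresholds is lower than AP at a single lenient threshold.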

Moreover, regarding specific AP scores, YOLO-PAM excelled across different object scales. It achieved the highest AP (medium-sized objects) at 60.0%, demonstrating its precision in detecting parasites of medium sizes. Additionally, YOLO-PAM achieved a substantial AP (large-sized objects) score of 65.0%, underscoring its capability in accurately identifying larger parasites. These results emphasize YOLO-PAM’s versatility and accuracy across various object sizes, making it a robust and reliable choice for malaria parasite detection tasks within the IML dataset. It is important to note that IML does not provide any small parasites.
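The size-specific AP scores presuppose a rule for assigning each object to a scale. The sketch below uses the COCO convention (area below 32² pixels is small, below 96² is medium, otherwise large); whether the thresholds in Table 1 match this convention is an assumption, as is the function name.

```python
def size_bucket(width: float, height: float) -> str:
    """Assign a bounding box to a COCO-style size category by its pixel area."""
    area = width * height
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


# Hypothetical box sizes in pixels.
print(size_bucket(20, 20))    # small
print(size_bucket(50, 50))    # medium
print(size_bucket(100, 100))  # large
```

Under this convention, the absence of small parasites in IML means that only the medium and large AP scores are defined for that dataset.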

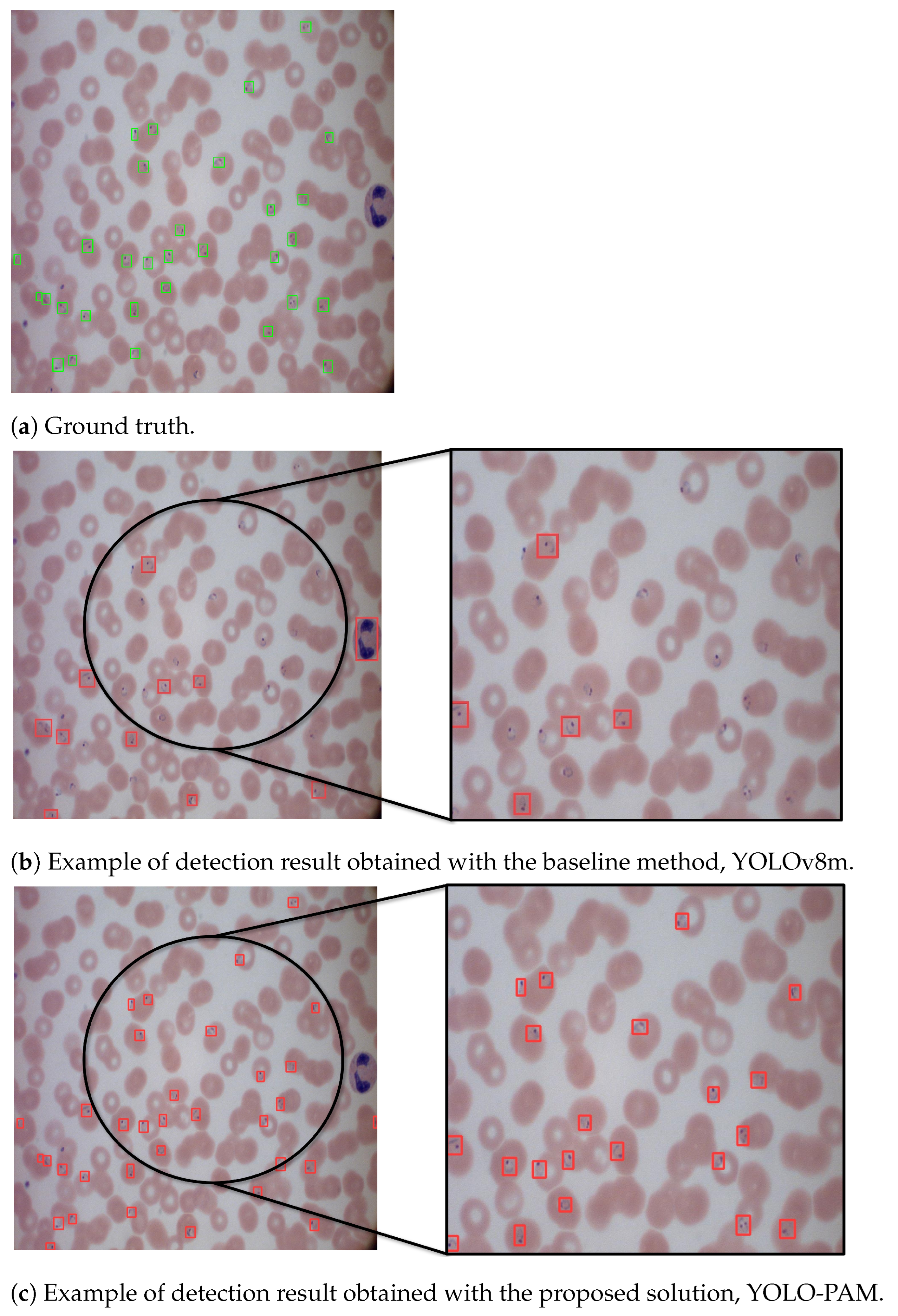

5.5. Qualitative Analysis

Figure 4 shows the predicted bounding boxes generated by the proposed architecture. As can be seen, it demonstrated a high degree of agreement with the ground truth, showing outstanding improvements over the baseline results obtained with YOLOv8. Moreover, YOLO-PAM outperformed the detectors adopted for comparison, as shown by the numerical data presented in Table 4 and Table 5.

5.6. System Comparison

In this section, we compare YOLO-PAM with some works present in the literature that employed the same datasets as the object of this study.

Regarding the MP-IDB dataset, Rahman et al. conducted binary classification on individual cells segmented from MP-IDB using the watershed transform. Diverging from their approach, we focused exclusively on parasite detection, omitting healthy RBCs. Thus, a direct comparison was precluded. Nevertheless, the authors achieved an 85.18% binary classification accuracy using a fine-tuned VGG-19 specialized in discriminating healthy single RBCs from infected counterparts [45].

Maity et al. built a comprehensive system capable of segmenting infected RBCs using a multilayer feedforward Artificial Neural Network applied to full-sized images. They subsequently employed a CapsNet for the classification of the obtained crops. Their reported correct classification of 885 P. falciparum rings out of 927 yielded a classification accuracy of 95.46%. However, the authors did not extend the classification to gametocytes, trophozoites, or schizonts [5].

Zedda et al. used a modified version of YOLOv5 for parasite detection, reporting an 84.6% mean average precision on MP-IDB [44].

Concerning the IML dataset, Arshad et al. implemented a framework involving a segmentation step followed by multi-stage classification using off-the-shelf CNNs. Two segmentation methodologies were tested, yielding 89.33% precision with the morphological approach and 82.42% with the U-Net method [40].

The proposed YOLO-PAM approach offers several advantages compared to existing state-of-the-art methods. First, in the critical scenario of malaria parasite detection, where swift and accurate identification is paramount for timely diagnosis, YOLO’s efficiency in providing real-time results stands as a significant advantage. Furthermore, YOLO-PAM’s reduced parameter count, compared to other architectures, strikes a balance between accuracy and speed, which is particularly beneficial in resource-constrained settings where prompt and precise detections are imperative for timely intervention.

Table 6 compares parameter counts and inference times between YOLO-PAM and other architectures, showing improved results with fewer parameters and inference times comparable to those of the reference baseline.

Second, YOLO-PAM provides a unified framework for end-to-end detection, allowing simultaneous predictions of bounding boxes and class probabilities for multiple parasite types and stages within an image.

Third, the unified architecture of YOLO-PAM, considering the entire image in a single forward pass, streamlines the detection process and enhances the model’s ability to capture spatial dependencies effectively.

By leveraging these advantages, this work aimed to contribute significantly to the field of malaria diagnosis by providing a robust and efficient solution for automated parasite detection.

6. Conclusions

In summary, this study’s experimental results and analysis demonstrated the effectiveness and superiority of the proposed malaria-parasite-detection method, YOLO-PAM, across multiple datasets and parasite species. The ablation study systematically assessed the impact of various modifications on the detection performance. Notably, integrating both CBAM and C3STR modifications significantly enhanced the accuracy, highlighting the importance of these combined enhancements.

When evaluated on MP-IDB, YOLO-PAM consistently outperformed existing methods across all four parasite species. Notably, within the P.f. class, YOLO-PAM achieved a remarkable Average Precision (AP) of 83.6%, surpassing both the baseline YOLOv8m and the previously established state-of-the-art detection method [44]. Similarly, in the P.m. and P.o. categories, YOLO-PAM exhibited high performance, demonstrating its precision in detecting parasites of varying sizes within these species.

Furthermore, the evaluation of the IML dataset reinforced YOLO-PAM’s superiority. With an overall AP of 59.9%, it outperformed the FRCNN, RetinaNet, FCOS, and the baseline, YOLOv8m, demonstrating its accuracy in detecting malaria parasites even in challenging scenarios.

YOLO-PAM exhibited precision for small objects, as observed in the P.f. class, and for medium- and large-sized parasites, underscoring its versatility across different object scales.

In conclusion, YOLO-PAM presents a robust and reliable solution for malaria parasite detection, addressing the limitations of existing methods and demonstrating superior performance across diverse datasets and parasite species. Its accuracy, versatility, and reliability make it a valuable contribution to malaria research and healthcare, promising significant advancements in malaria diagnosis and ultimately contributing to the global efforts to combat this infectious disease.

Several potential directions for future research can be outlined. The primary goal is to enhance the current approach to accurately detect all malaria parasite species simultaneously. Additionally, building upon the encouraging results within the intra-dataset context, the approach will be tailored to a cross-dataset framework to enhance its resilience to potential environmental variations between the source and target data. Finally, a long-term objective is to expand the approach to encompass a multi-magnification image representation of the same blood smear, enabling more-precise detection of malaria parasites across varying magnifications.