Multi-View Optical Image Fusion and Reconstruction for Defogging without a Prior In-Plane

Abstract

1. Introduction

2. Theory

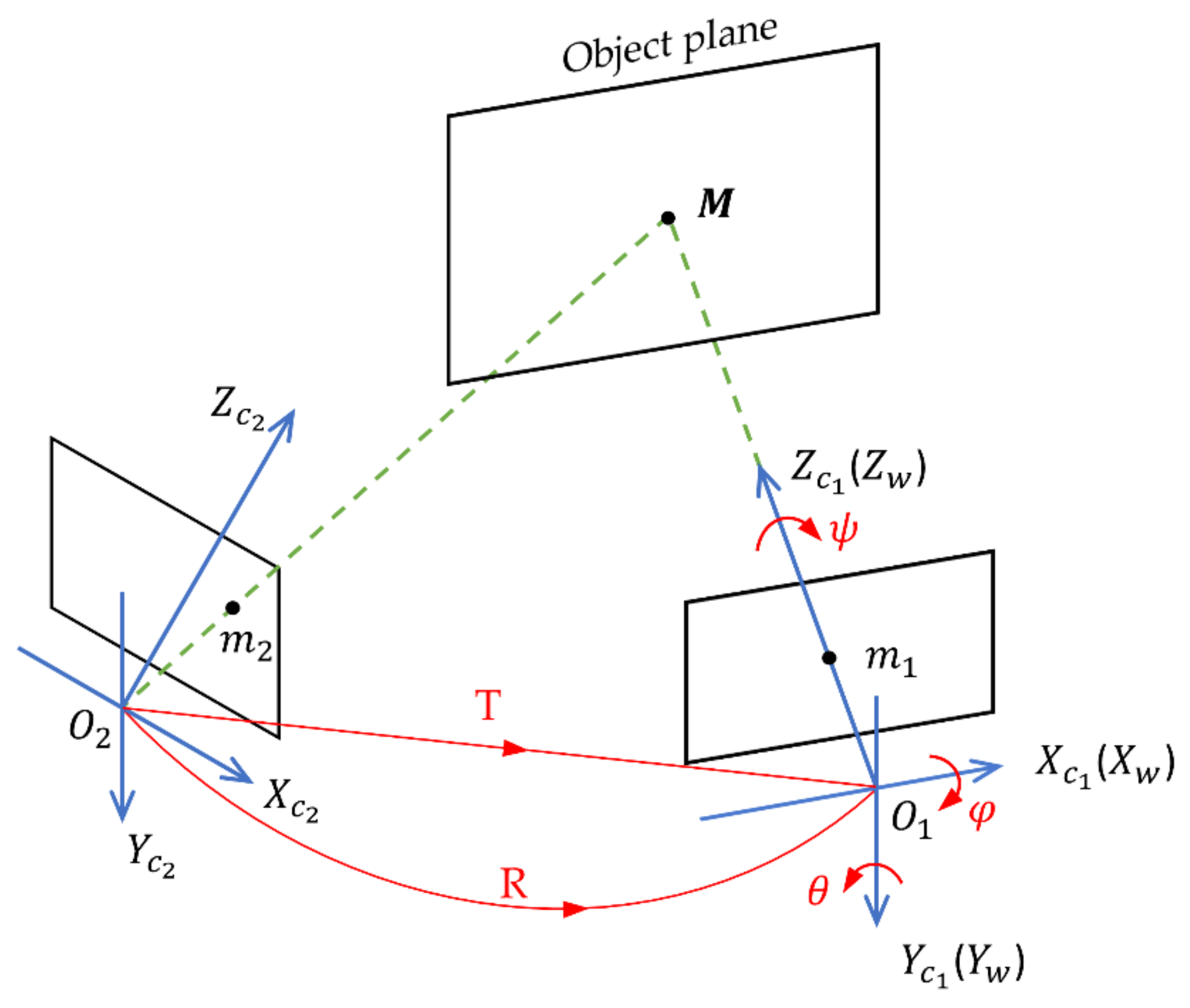

2.1. Projective Geometry

2.2. Multiple Views Motion Estimation

3. Experiment and Results

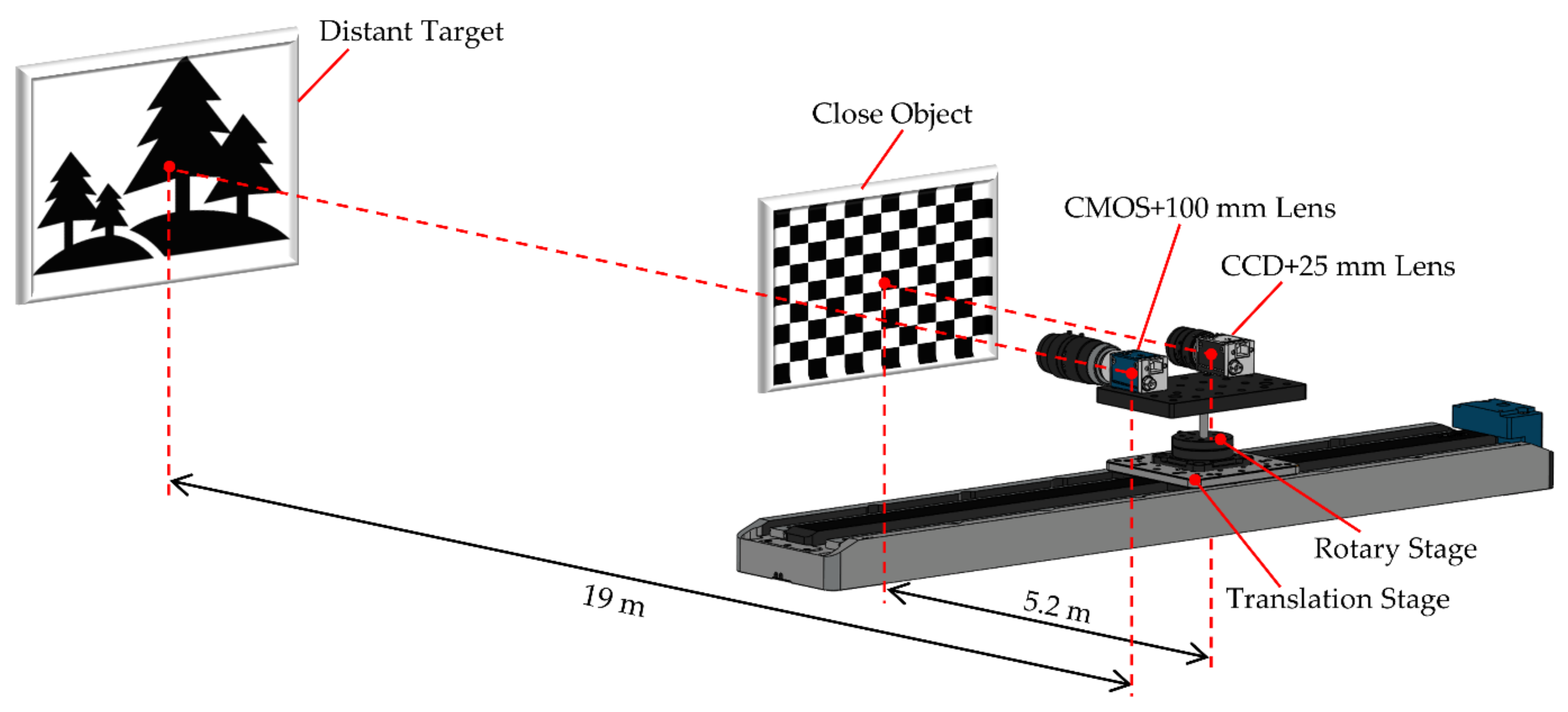

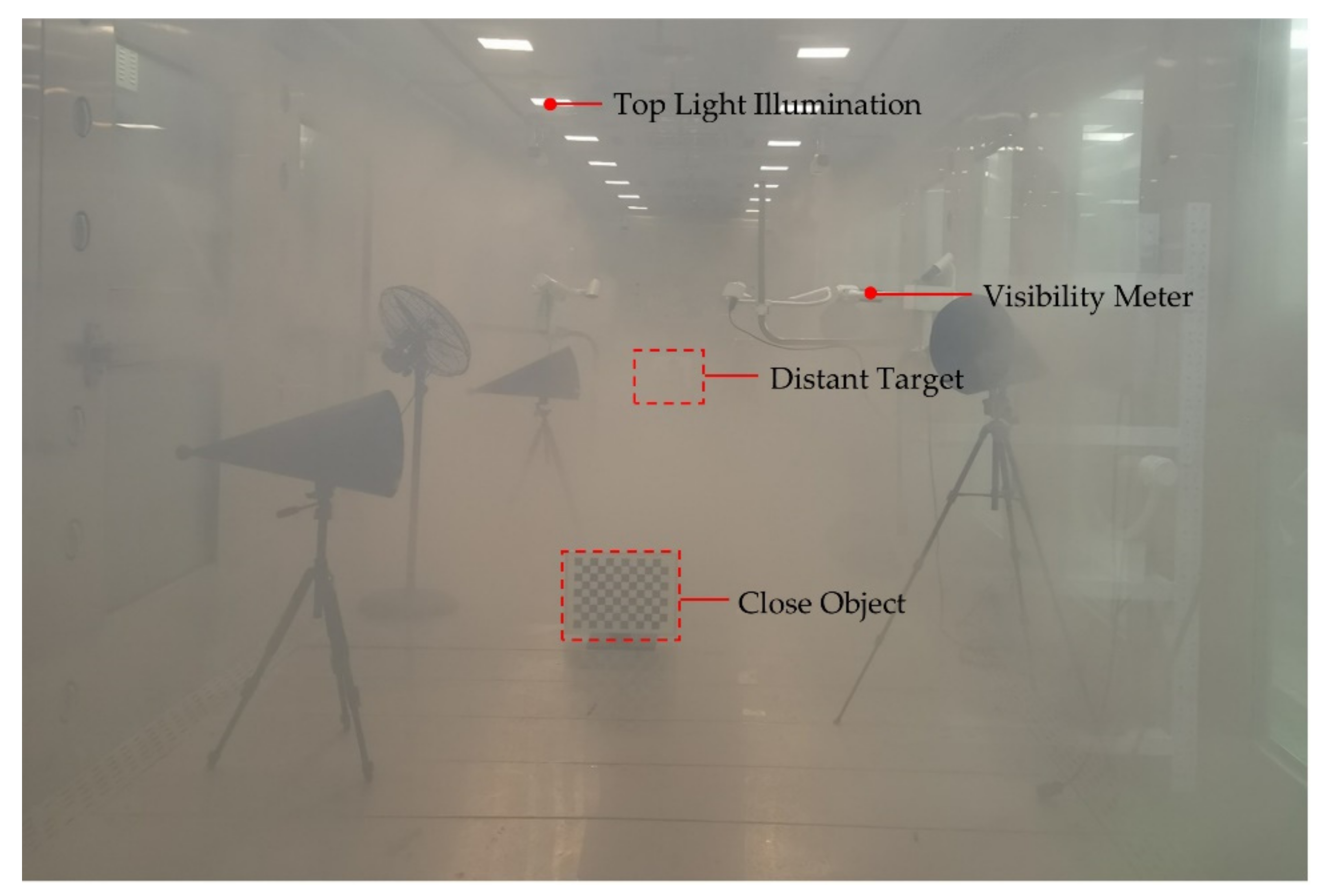

3.1. Experimental Setup

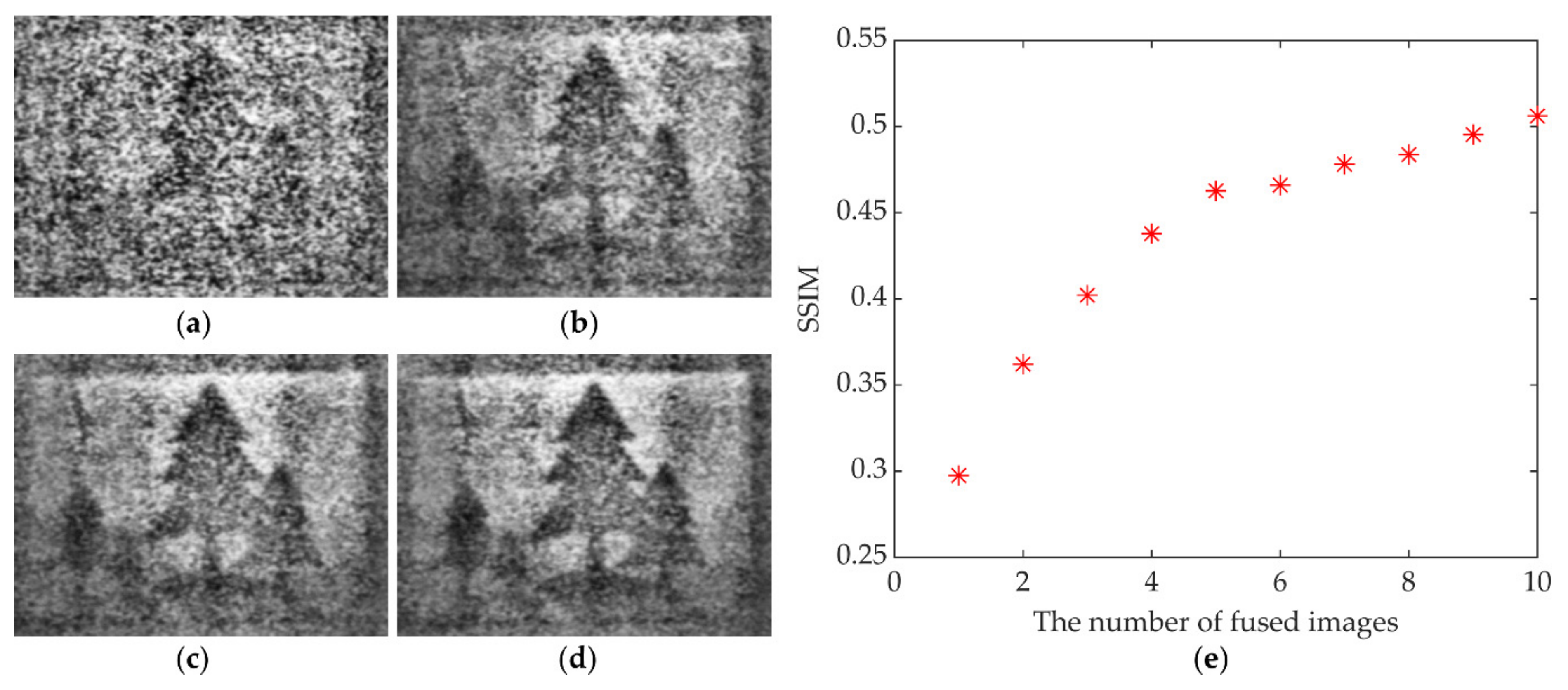

3.2. Image Acquisition and Multi-View Image Fusion

3.3. Image Defogging

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Treibitz, T.; Schechner, Y.Y. Recovery limits in pointwise degradation. In Proceedings of the 2009 IEEE International Conference on Computational Photography (ICCP 2009), San Francisco, CA, USA, 16–17 April 2009; pp. 1–8.

- Treibitz, T.; Schechner, Y.Y. Polarization: Beneficial for visibility enhancement? In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Vols 1–4 (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 525–532.

- Schock, M.; Le Mignant, D.; Chanan, G.A.; Wizinowich, P.L. Atmospheric turbulence characterization with the Keck adaptive optics systems. In Proceedings of the Conference on Adaptive Optical System Technologies II, Waikoloa, HI, USA, 22–26 August 2002; pp. 813–824.

- Beckers, J. Adaptive optics for astronomy: Principles, performance, and applications. Annu. Rev. Astron. Astrophys. 1993, 31, 13–62.

- Stein, K.; Gonglewski, J.D. Optics in Atmospheric Propagation and Adaptive Systems XIII. In Proceedings of the SPIE–The International Society for Optical Engineering, Toulouse, France, 20–21 September 2010; Volume 7828.

- Toselli, I.; Gladysz, S. Adaptive optics correction of scintillation for oceanic turbulence-affected laser beams. In Proceedings of the Conference on Environmental Effects on Light Propagation and Adaptive Systems, Berlin, Germany, 12–13 September 2018.

- Fan, T.H.; Li, C.L.; Ma, X.; Chen, Z.; Zhang, X.; Chen, L. An Improved Single Image Defogging Method Based on Retinex; IEEE: Manhattan, NY, USA, 2017; pp. 410–413.

- Parihar, A.S.; Singh, K. A Study on Retinex Based Method for Image Enhancement; IEEE: Manhattan, NY, USA, 2018; pp. 619–624.

- Cai, B.L.; Xu, X.M.; Jia, K.; Qing, C.M.; Tao, D.C. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- He, K.M.; Sun, J.; Tang, X.O. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Kim, J.Y.; Kim, L.S.; Hwang, S.H. An advanced contrast enhancement using partially overlapped sub-block histogram equalization. IEEE Trans. Circuits Syst. Video Technol. 2001, 11, 475–484.

- Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–128.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 2347–2354.

- Rahman, Z.U.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the Image Processing, Lausanne, Switzerland, 19 September 1996.

- Ren, W.Q.; Liu, S.; Zhang, H.; Pan, J.S.; Cao, X.C.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Computer Vision – ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9906, pp. 154–169.

- Wang, F.J.; Zhang, B.J.; Zhang, C.P.; Yan, W.R.; Zhao, Z.Y.; Wang, M. Low-light image joint enhancement optimization algorithm based on frame accumulation and multi-scale Retinex. Ad Hoc Netw. 2021, 113, 102398.

- Li, G.; Tang, H.Y.; Kim, D.; Gao, J.; Lin, L. Employment of frame accumulation and shaped function for upgrading low-light-level image detection sensitivity. Opt. Lett. 2012, 37, 1361–1363.

- Zhang, B.J.; Zhang, C.C.; Li, G.; Lin, L.; Zhang, C.P.; Wang, P.M.; Yan, W.R. Multispectral heterogeneity detection based on frame accumulation and deep learning. IEEE Access 2019, 7, 29277–29284.

- Zhang, T.; Shao, C.; Wang, X. Atmospheric scattering-based multiple images fog removal. In Proceedings of the 2011 4th International Congress on Image and Signal Processing (CISP 2011), Shanghai, China, 15–17 October 2011; pp. 108–112.

- Zeng, H.R.; Sun, H.Y.; Zhang, T.H. High Dynamic Range Image Acquisition Based on Multiplex Cameras. Young Scientists Forum 2017. SPIE 2018, 10710, 107100N.

- Cao, L.K.; Ling, J.; Xiao, X.H. Study on the influence of image noise on monocular feature-based visual SLAM based on FFDNet. Sensors 2020, 20, 18.

- Li, C.; Dong, H.X.; Quan, Q. A mismatching eliminating method based on camera motion information. In Proceedings of the 2015 34th Chinese Control Conference, Hangzhou, China, 28–30 July 2015; pp. 4835–4840.

- Valenzuela, W.; Soto, J.E.; Zarkesh-Ha, P.; Figueroa, M. Face recognition on a smart image sensor using local gradients. Sensors 2021, 21, 25.

- Yan, S.M.; Sun, M.J.; Chen, W.; Li, L.J. Illumination calibration for computational ghost imaging. Photonics 2021, 8, 8.

- Malis, E.; Vargas, M. Deeper Understanding of the Homography Decomposition for Vision-Based Control; INRIA: Rocquencourt, France, 2007; p. 90.

- Cheng, Z.; Zhang, L. An aerial image mosaic method based on UAV position and attitude information. Acta Geod. Cartogr. Sin. 2016, 45, 698–705.

- Vaish, V. Synthetic Aperture Imaging Using Dense Camera Arrays; Stanford University: Stanford, CA, USA, 2007.

- Land, E.H. An alternative technique for the computation of the designator in the retinex theory of color vision. Proc. Natl. Acad. Sci. USA 1986, 83, 3078–3080.

- Jiang, X.F.; Tao, C.K. Advanced multi scale retinex algorithm for color image enhancement. In Proceedings of the International Symposium on Photoelectronic Detection and Imaging: Technology and Applications 2007, Beijing, China, 9–12 September 2007; Volume 6625, p. 662514.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

| Viewpoint | Position Parameters (x, y, z) |
|---|---|
| 01 | (0, 0, 0) |
| 02 | (62.50, 0, 0) |
| 03 | (125.0, 0, 0) |
| 04 | (187.5, 0, 0) |
| 05 | (250.0, 0, 0) |
| 06 | (312.5, 0, 0) |
| 07 | (375.0, 0, 0) |
| 08 | (437.5, 0, 0) |
| 09 | (500.0, 0, 0) |
| 10 | (525.0, 0, 0) |

| Viewpoint | Direction Parameters (ϕ, θ, ψ) / degree |
|---|---|
| 01 | (0, 0, 0) |
| 02 | (−0.0090, −0.0019, −0.0101) |
| 03 | (−0.0171, 0.0044, −0.0425) |
| 04 | (−0.0340, 0.0072, −0.0123) |
| 05 | (−0.0500, 0.0093, −0.0655) |
| 06 | (−0.0450, −2.0028, −0.0587) |
| 07 | (−0.0476, −2.0353, 0.0548) |
| 08 | (−0.0464, −2.0359, 0.0623) |
| 09 | (−0.0371, −2.0440, 0.2273) |
| 10 | (−0.0210, −2.0484, 0.0882) |
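
The first table appears to list each viewpoint's position (x, y, z) and the second its direction parameters (ϕ, θ, ψ) in degrees, with viewpoint 01 as the reference. As a minimal sketch of how such pose parameters could be used in a projective-geometry registration step, the Python/NumPy code below turns one viewpoint's pose into a plane-induced homography relative to viewpoint 01. This is not the authors' implementation, and several pieces are assumptions not stated in the source: the Euler-angle convention R = Rz(ψ)Ry(θ)Rx(ϕ), that the angles give the camera-to-world orientation, the intrinsic matrix `K`, the plane normal `n` and depth `d`, and the unit of the positions. The helper names are hypothetical.

```python
import numpy as np


def rotation_from_euler_deg(phi, theta, psi):
    """Orientation from (phi, theta, psi) in degrees.

    ASSUMED convention (not stated in the source): rotations about x, y, z
    by phi, theta, psi, composed as R = Rz(psi) @ Ry(theta) @ Rx(phi).
    """
    a, b, c = np.radians([phi, theta, psi])
    rx = np.array([[1.0, 0.0, 0.0],
                   [0.0, np.cos(a), -np.sin(a)],
                   [0.0, np.sin(a), np.cos(a)]])
    ry = np.array([[np.cos(b), 0.0, np.sin(b)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(b), 0.0, np.cos(b)]])
    rz = np.array([[np.cos(c), -np.sin(c), 0.0],
                   [np.sin(c), np.cos(c), 0.0],
                   [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def relative_pose(position, direction_deg):
    """Pose of viewpoint i relative to viewpoint 01 (taken as the world frame).

    ASSUMES R_i is the camera-to-world rotation and `position` is the camera
    centre C_i, so a point X1 in viewpoint-01 coordinates maps to
    X_i = R_i^T X1 - R_i^T C_i, i.e. R = R_i^T and t = -R_i^T C_i.
    """
    r_i = rotation_from_euler_deg(*direction_deg)
    c_i = np.asarray(position, dtype=float)
    return r_i.T, -r_i.T @ c_i


def plane_homography(K, R, t, n, d):
    """Homography induced by the plane n^T X1 = d (viewpoint-01 frame).

    For points on that plane X_i = (R + t n^T / d) X1, hence
    H = K (R + t n^T / d) K^{-1}, normalised so that H[2, 2] = 1.
    """
    h = K @ (R + np.outer(t, n) / d) @ np.linalg.inv(K)
    return h / h[2, 2]


def warp_point(H, u, v):
    """Map pixel (u, v) through H using homogeneous coordinates."""
    x = H @ np.array([u, v, 1.0])
    return x[0] / x[2], x[1] / x[2]


# HYPOTHETICAL intrinsics and scene plane: not values from the paper.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 512.0],
              [0.0, 0.0, 1.0]])
n = np.array([0.0, 0.0, 1.0])  # fronto-parallel plane
d = 5000.0                     # plane depth, same unit as the positions

# Viewpoint 02 pose as listed in the tables.
R, t = relative_pose((62.50, 0, 0), (-0.0090, -0.0019, -0.0101))
H = plane_homography(K, R, t, n, d)
u, v = warp_point(H, 640.0, 512.0)
print(f"viewpoint 02: ({u:.2f}, {v:.2f})")
```

Under these assumptions a pure x-translation b shifts pixels on the plane by the disparity f·b/d (12.5 px for the hypothetical f = 1000 px and d = 5000), and for viewpoint 02 the small rotation adds only a sub-pixel correction; with the real intrinsics and scene depth the numbers would differ. The plus sign in H follows from writing the plane as nᵀX₁ = d; texts that write it as nᵀX + d = 0 obtain a minus sign instead.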

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, Y.; Liu, Y.; Liu, H.; Shui, Y.; Zhao, G.; Chu, J.; Situ, G.; Li, Z.; Zhou, J.; Liang, H. Multi-View Optical Image Fusion and Reconstruction for Defogging without a Prior In-Plane. Photonics 2021, 8, 454. https://doi.org/10.3390/photonics8100454

Huang Y, Liu Y, Liu H, Shui Y, Zhao G, Chu J, Situ G, Li Z, Zhou J, Liang H. Multi-View Optical Image Fusion and Reconstruction for Defogging without a Prior In-Plane. Photonics. 2021; 8(10):454. https://doi.org/10.3390/photonics8100454

Chicago/Turabian Style: Huang, Yuru, Yikun Liu, Haishan Liu, Yuyang Shui, Guanwen Zhao, Jinhua Chu, Guohai Situ, Zhibing Li, Jianying Zhou, and Haowen Liang. 2021. "Multi-View Optical Image Fusion and Reconstruction for Defogging without a Prior In-Plane" Photonics 8, no. 10: 454. https://doi.org/10.3390/photonics8100454