Hierarchical Deep LSTM for Fault Detection and Diagnosis for a Chemical Process

Abstract

1. Introduction

- A novel hierarchical structure was developed that combines passive fault detection and diagnosis (FDD) with active FDD to improve the detection and classification accuracy for incipient faults.

- A pseudo-random binary signal (PRBS) was designed to improve the observability of the incipient faults.

- The LSTM model was optimized with respect to the data horizon for better classification of faults.

- The proposed method was compared comprehensively with several other methods to demonstrate its efficacy for both regular and incipient faults.
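To make the notion of a data horizon concrete, the following is a minimal sketch of how a horizon of consecutive samples could be stacked into one LSTM input sequence. The function name `make_windows` and the choice to label each window with the class of its most recent sample are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

def make_windows(X, y, horizon):
    """Stack `horizon` consecutive samples into one input sequence.

    X: (n_samples, n_features) array of process measurements.
    y: (n_samples,) array of class labels.
    Assumption: each window takes the label of its most recent sample.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    if horizon < 1 or horizon > len(X):
        raise ValueError("horizon must be between 1 and the number of samples")
    n_windows = len(X) - horizon + 1
    # Window k covers samples k .. k + horizon - 1
    windows = np.stack([X[k:k + horizon] for k in range(n_windows)])
    labels = y[horizon - 1:]
    return windows, labels

# Toy data: 10 samples, 2 variables, class changes at sample 5
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0] * 5 + [1] * 5)
windows, labels = make_windows(X, y, horizon=4)
print(windows.shape, labels.shape)  # (7, 4, 2) (7,)
```

Optimizing with respect to the horizon then amounts to repeating training and validation for several values of `horizon` and keeping the one with the best validation accuracy.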

2. Preliminaries

2.1. Recurrent Neural Networks (RNNs)

2.2. Long Short-Term Memory (LSTM) Units

2.3. Deep LSTM Supervised Autoencoder Neural Network (DLSTM-SAE NN)

2.4. Model Structure and Specifications

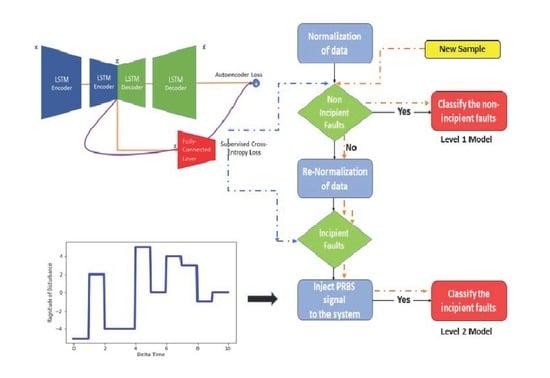

3. Hierarchical Structure

Training procedure:

1. The training data are mean-centered and normalized.

2. The faults are classified into two groups: group 1 contains the easily distinguishable faults, and group 2 contains the faults that are difficult to distinguish, namely the incipient faults together with the normal-operation class.

3. A Deep LSTM-SAE model, denoted M1, is designed to either identify the individual faults of group 1 or assign a sample to group 2, treating all group 2 classes as a single class.

4. The data for group 2 identified in the previous step are mean-centered and re-normalized.

5. A neural network model, denoted M2, is designed specifically for group 2.

6. For faults that M2 does not identify accurately, a PRBS is designed and injected at locations in the system that are informative about these faults.

Testing procedure:

1. The data for the test sample are mean-centered and normalized as in step 1 of the training procedure.

2. The sample is classified as belonging either to group 1 (easy-to-observe faults) or to group 2 (difficult-to-identify faults).

3. If the sample is in group 1, it is classified accordingly by model M1. If it is in group 2, it is re-normalized according to the re-normalization in step 4 of the training procedure.

4. If the sample is in group 2, it is classified by model M2 from step 5 of the training procedure.

5. If the sample is not identified accurately by the model for group 2, PRBS signals are injected as specified in step 6 of the training procedure, and the corresponding faults are diagnosed from the resulting data.
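The passive part of the procedure above (normalize, classify with M1, re-normalize, classify with M2) can be sketched as follows. This is a minimal illustration under stated assumptions: `fit_scaler`, `scale`, `hierarchical_predict`, and the `GROUP2` label are hypothetical names; `m1` and `m2` are toy stand-ins for the trained models M1 and M2; and the re-normalization is assumed to be applied to the raw sample using statistics of the group 2 training data. The active PRBS step is not covered.

```python
import numpy as np

GROUP2 = -1  # hypothetical label M1 returns for "some group 2 class"

def fit_scaler(X):
    """Return the (mean, std) used to mean-center and normalize X."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # guard against constant variables
    return mean, std

def scale(x, scaler):
    """Apply a previously fitted (mean, std) pair."""
    mean, std = scaler
    return (x - mean) / std

def hierarchical_predict(x, scaler_all, scaler_g2, m1, m2):
    """Classify one raw sample with the two-level scheme."""
    label = m1(scale(x, scaler_all))   # normalize, then group-level decision
    if label != GROUP2:
        return label                   # group 1: M1's answer is final
    return m2(scale(x, scaler_g2))     # group 2: re-normalize, then M2

# Toy training data; the group membership is assumed known from training
X_train = np.array([[0.0, 1.0], [0.2, 1.1], [0.1, 0.9], [5.0, 1.0], [-5.0, 1.0]])
in_group2 = np.array([True, True, True, False, False])
scaler_all = fit_scaler(X_train)
scaler_g2 = fit_scaler(X_train[in_group2])

# Toy stand-ins for M1 and M2 (simple thresholds, not neural networks)
def m1(z):
    if abs(z[0]) < 1.0:
        return GROUP2
    return "fault_high" if z[0] > 0 else "fault_low"

def m2(z):
    return "normal" if z[0] < 0 else "incipient_fault"

print(hierarchical_predict(np.array([5.0, 1.0]), scaler_all, scaler_g2, m1, m2))
print(hierarchical_predict(np.array([0.2, 1.0]), scaler_all, scaler_g2, m1, m2))
```

The point of the second scaler is that differences among group 2 samples, which are tiny on the scale of the full data set, become visible once the data are rescaled with group 2 statistics only.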

Design: Pseudo-Random Binary Signal (PRBS)

- Reduce input move sizes (to reduce wear and tear on actuators).

- Reduce input and output amplitudes, power, or variance.

- Keep the experimental time short to prevent losses.
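As an illustration of how these design goals map onto signal parameters, the sketch below generates a PRBS from a linear-feedback shift register. It is a generic construction, not the design used in the paper: the function name `prbs`, the 7-bit register, and the tap positions (7, 6) are assumptions chosen because they give a maximum-length sequence of period 127. The `amplitude` argument bounds the input move size, `hold` sets the minimum switching time, and `n_samples` sets the experiment length.

```python
import numpy as np

def prbs(n_bits, taps, n_samples, hold=1, amplitude=1.0, seed=1):
    """Generate a PRBS from a Fibonacci linear-feedback shift register.

    n_bits: register length.
    taps: 1-indexed register stages XORed to form the feedback bit.
    hold: number of samples each bit is held (minimum switching time).
    amplitude: the signal switches between -amplitude and +amplitude.
    seed: non-zero initial register content.
    """
    if seed <= 0 or seed >= 2 ** n_bits:
        raise ValueError("seed must be a non-zero value that fits the register")
    state = [(seed >> i) & 1 for i in range(n_bits)]
    n_needed = -(-n_samples // hold)  # ceiling division
    bits = []
    for _ in range(n_needed):
        bits.append(state[-1])             # output the last stage
        feedback = 0
        for t in taps:
            feedback ^= state[t - 1]
        state = [feedback] + state[:-1]    # shift and insert the feedback bit
    signal = amplitude * (2 * np.repeat(bits, hold) - 1)
    return signal[:n_samples]

# Two full periods of the 7-bit sequence at unit amplitude
u = prbs(7, (7, 6), n_samples=254)
print(np.array_equal(u[:127], u[127:]))  # True: the sequence repeats after 127 bits

# A gentler excitation: half amplitude, each level held for 5 samples
u_small = prbs(7, (7, 6), n_samples=10, hold=5, amplitude=0.5)
print(u_small)
```

Lowering `amplitude` and shortening `n_samples` makes the test friendlier to the plant, at the cost of less excitation for distinguishing the faults, so in practice the two have to be traded off.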

4. Results

5. Discussions

- Fault Detection Rate (FDR): FDR is the probability that abnormal conditions are correctly detected, which makes it an important criterion for comparing the detection efficiency of different methods. A very high FDR is desirable.

- False Alarm Rate (FAR):
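For reference, the two rates are commonly written per class as shown below. These are the standard definitions and are stated here as an assumption, since the formulas do not appear in this section. For class i, TP_i counts samples of class i predicted as i, FN_i counts samples of class i predicted as another class, FP_i counts samples of other classes predicted as i, and TN_i counts samples of other classes predicted as other classes.

```latex
\mathrm{FDR}_i = \frac{TP_i}{TP_i + FN_i},
\qquad
\mathrm{FAR}_i = \frac{FP_i}{FP_i + TN_i}
```

Under these definitions a good classifier has an FDR close to 1 and a FAR close to 0 for every class.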

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| FDD | Fault Detection and Diagnosis |

| PCA | Principal Component Analysis |

| DPCA | Dynamic Principal Component Analysis |

| DNN | Deep Neural Network |

| NN | Neural Network |

| RNN | Recurrent Neural Network |

| TEP | Tennessee Eastman Process |

| LSTM | Long Short-Term Memory |

| GRU | Gated Recurrent Units |

| DLSTM-SAE NN | Deep LSTM Supervised Autoencoder Neural Network |

| DSAE-NN | Deep Supervised Autoencoder Neural Network |

| PRBS | Pseudo-Random Binary Signal |

| FDR | Fault Detection Rate |

| FAR | False Alarm Rate |

| GAN | Generative Adversarial Network |

| DSN | Deep Stacked Network |

| SAE | Stacked Autoencoder |

| OCSVM | One-Class SVM |

| SVM | Support Vector Machines |

| DL | Deep Learning |

| CNN | Convolutional Neural Network |


| Variable Name | Variable Number | Units | Variable Name | Variable Number | Units |

|---|---|---|---|---|---|

| A feed (stream 1) | XMEAS (1) | kscmh | Reactor cooling water outlet temperature | XMEAS (21) | °C |

| D feed (stream 2) | XMEAS (2) | kg h−1 | Separator cooling water outlet temperature | XMEAS (22) | °C |

| E feed (stream 3) | XMEAS (3) | kg h−1 | Feed %A | XMEAS (23) | mol% |

| A and C feed (stream 4) | XMEAS (4) | kscmh | Feed %B | XMEAS (24) | mol% |

| Recycle flow (stream 8) | XMEAS (5) | kscmh | Feed %C | XMEAS (25) | mol% |

| Reactor feed rate (stream 6) | XMEAS (6) | kscmh | Feed %D | XMEAS (26) | mol% |

| Reactor pressure | XMEAS (7) | kPa gauge | Feed %E | XMEAS (27) | mol% |

| Reactor level | XMEAS (8) | % | Feed %F | XMEAS (28) | mol% |

| Reactor temperature | XMEAS (9) | °C | Purge %A | XMEAS (29) | mol% |

| Purge rate (stream 9) | XMEAS (10) | kscmh | Purge %B | XMEAS (30) | mol% |

| Product separator temperature | XMEAS (11) | °C | Purge %C | XMEAS (31) | mol% |

| Product separator level | XMEAS (12) | % | Purge %D | XMEAS (32) | mol% |

| Product separator pressure | XMEAS (13) | kPa gauge | Purge %E | XMEAS (33) | mol% |

| Product separator underflow (stream 10) | XMEAS (14) | m3 h−1 | Purge %F | XMEAS (34) | mol% |

| Stripper level | XMEAS (15) | % | Purge %G | XMEAS (35) | mol% |

| Stripper pressure | XMEAS (16) | kPa gauge | Purge %H | XMEAS (36) | mol% |

| Stripper underflow (stream 11) | XMEAS (17) | m3 h−1 | Product %D | XMEAS (37) | mol% |

| Stripper temperature | XMEAS (18) | °C | Product %E | XMEAS (38) | mol% |

| Stripper steam flow | XMEAS (19) | kg h−1 | Product %F | XMEAS (39) | mol% |

| Compressor Work | XMEAS (20) | kW | Product %G | XMEAS (40) | mol% |

| D feed flow | XMV (1) | kg h−1 | Product %H | XMEAS (41) | mol% |

| E feed flow | XMV (2) | kg h−1 | A feed flow | XMV (3) | kscmh |

| A + C feed flow | XMV (4) | kscmh | Compressor recycle valve | XMV (5) | % |

| Purge valve | XMV (6) | % | Separator pot liquid flow | XMV (7) | m3 h−1 |

| Stripper liquid product flow | XMV (8) | m3 h−1 | Stripper steam valve | XMV (9) | % |

| Reactor cooling water flow | XMV (10) | m3 h−1 | Condenser cooling water flow | XMV (11) | m3 h−1 |

| Fault | Description | Type |

|---|---|---|

| IDV(1) | A/C feed ratio, B composition constant (stream 4) | step |

| IDV(2) | B composition, A/C ratio constant (stream 4) | step |

| IDV(3) | D feed temperature | step |

| IDV(4) | Reactor cooling water inlet temperature | step |

| IDV(5) | Condenser cooling water inlet temperature (stream 2) | step |

| IDV(6) | A feed loss (stream 1) | step |

| IDV(7) | C header pressure loss, reduced availability (stream 4) | step |

| IDV(8) | A, B, C feed composition (stream 4) | random variation |

| IDV(9) | D feed temperature | random variation |

| IDV(10) | C feed temperature (stream 4) | random variation |

| IDV(11) | Reactor cooling water inlet temperature | random variation |

| IDV(12) | Condenser cooling water inlet temperature | random variation |

| IDV(13) | Reaction kinetics | slow drift |

| IDV(14) | Reactor cooling water | valve sticking |

| IDV(15) | Condenser cooling water valve | stiction |

| IDV(16) | Deviations of heat transfer within stripper | random variation |

| IDV(17) | Deviations of heat transfer within reactor | random variation |

| IDV(18) | Deviations of heat transfer within condenser | random variation |

| IDV(19) | Recycle valve of compressor, underflow stripper and steam valve stripper | stiction |

| IDV(20) | unknown | random variation |

| Fault | PCA (15 comp.) | DPCA (22 comp.) | ICA (9 comp.) | DL (2017) | DL (2017) | DL (2018) | DL (2018) | DL (2019) | Proposed DL | ||

|---|---|---|---|---|---|---|---|---|---|---|---|

| SPE | AO | SAE-NN | DSN | GAN | OCSVM | CNN | Deep LSTM-SAE | ||||

| 1 | 99.2% | 99.8% | 99% | 100% | 100% | 77.6% | 90.8% | 99.62% | 99.5% | 91.39% | 100% |

| 2 | 98% | 98.6% | 98% | 98% | 98% | 85% | 89.6% | 98.5% | 98.5% | 87.96% | 100% |

| 4 | 4.4% | 96.2% | 26% | 61% | 84% | 56.6% | 47.6% | 56.25% | 50.37% | 99.73% | 100% |

| 5 | 22.5% | 25.4% | 36% | 100% | 100% | 76% | 31.6% | 32.37% | 30.5% | 90.35% | 100% |

| 6 | 98.9% | 100% | 100% | 100% | 100% | 82.8% | 91.6% | 100% | 100% | 91.5% | 100% |

| 7 | 91.5% | 100% | 100% | 99% | 100% | 80.6% | 91% | 99.99% | 99.62% | 91.55% | 100% |

| 8 | 96.6% | 97.6% | 98% | 97% | 97% | 83% | 90.2% | 97.87% | 97.37% | 82.95% | 100% |

| 10 | 33.4% | 34.1% | 55% | 78% | 82% | 75.3% | 63.2% | 50.87% | 53.25% | 70.05% | 42.8% |

| 11 | 20.6% | 64.4% | 48% | 52% | 70% | 75.9% | 54.2% | 58% | 54.75% | 60.16% | 100% |

| 12 | 97.1% | 97.5% | 99% | 99% | 100% | 83.3% | 87.8% | 98.75% | 98.63% | 85.56% | 100% |

| 13 | 94% | 95.5% | 94% | 94% | 95% | 83.3% | 85.5% | 95% | 94.87% | 46.92% | 100% |

| 14 | 84.2% | 100% | 100% | 100% | 100% | 77.8% | 89% | 100% | 100% | 88.88% | 100% |

| 16 | 16.6% | 24.5% | 49% | 71% | 78% | 78.3% | 74.8% | 34.37% | 36.37% | 66.84% | 100% |

| 17 | 74.1% | 89.2% | 82% | 89% | 94% | 78% | 83.3% | 91.12% | 87.25% | 77.11% | 100% |

| 18 | 88.7% | 89.9% | 90% | 90% | 90% | 83.3% | 82.4% | 90.37% | 90.12% | 82.74% | 100% |

| 19 | 0.4% | 12.7% | 3% | 69% | 80% | 67.7% | 52.4% | 11.8% | 3.75% | 70.87% | 40.4% |

| 20 | 29.9% | 45% | 53% | 87% | 91% | 77.1% | 44.1% | 58.37% | 52.75% | 72.88% | 100% |

| Average | 61.77% | 74.72% | 72.35% | 87.29% | 91.70% | 77.7% | 76.84% | 74.04% | 62.78% | 85.47% | 93.13% |

| Fault | SAE-NN (DL, 2017) | DSN (DL, 2017) | GAN (DL, 2018) | OCSVM (DL, 2018) | CNN (DL, 2019) | Optimized LSTM (DL, 2018) | LSTM with attention (DL, 2021) | Deep LSTM-SAE (proposed DL) |

|---|---|---|---|---|---|---|---|---|

| 1 | 77.6% | 90.8% | 99.62% | 99.5% | 91.39% | 68% | 100% | 100% |

| 2 | 85% | 89.6% | 98.5% | 98.5% | 87.96% | 78% | 89% | 100% |

| 3 | 79.4% | 14.4% | 10.375% | 7.62% | 50.59% | 45% | 94% | 81.58% |

| 4 | 56.6% | 47.6% | 56.25% | 50.37% | 99.73% | 75% | 99% | 100% |

| 5 | 76% | 31.6% | 32.37% | 30.5% | 90.35% | 45% | 94% | 100% |

| 6 | 82.8% | 91.6% | 100% | 100% | 91.5% | 75% | 100% | 100% |

| 7 | 80.6% | 91% | 99.99% | 99.62% | 91.55% | 89% | 100% | 100% |

| 8 | 83% | 90.2% | 97.87% | 97.37% | 82.95% | 100% | 99% | 100% |

| 9 | 50.6% | 16.3% | 8.625% | 7.125% | 49.53% | 89% | 81% | 99.38% |

| 10 | 75.3% | 63.2% | 50.87% | 53.25% | 70.05% | 71% | 99% | 42.84% |

| 11 | 75.9% | 54.2% | 58% | 54.75% | 60.16% | 67% | 88% | 100% |

| 12 | 83.3% | 87.8% | 98.75% | 98.63% | 85.56% | 77% | 99% | 100% |

| 13 | 83.3% | 85.5% | 95% | 94.87% | 46.92% | 83% | 89% | 100% |

| 14 | 77.8% | 89% | 100% | 100% | 88.88% | 56% | 99% | 100% |

| 15 | 55.5% | 26.7% | 12.5% | 14% | 43.54% | 89% | 22% | 100% |

| 16 | 78.3% | 74.8% | 34.37% | 36.37% | 66.84% | 99% | 31% | 100% |

| 17 | 78% | 83.3% | 91.12% | 87.25% | 77.11% | 0% | 97% | 100% |

| 18 | 83.3% | 82.4% | 90.37% | 90.12% | 82.74% | 89% | 95% | 100% |

| 19 | 67.7% | 52.4% | 11.8% | 3.75% | 70.87% | 20% | 97% | 40.4% |

| 20 | 77.1% | 44.1% | 58.37% | 52.75% | 72.88% | 88% | 85% | 100% |

| Average | 75.355% | 65.32% | 64.51% | 62.78% | 79.84% | 70.15% | 87.85% | 93.23% |

| Faults | Non-Hierarchical DL NN | Hierarchical DL NN (No PRBS) | Hierarchical DL NN + PRBS Addition for Fault 15 | Hierarchical DL NN + PRBS Addition for Fault 15 and Fault 9 |

|---|---|---|---|---|

| Fault 3 | 36% | 42% | 88.7% | 81.5% |

| Fault 9 | 32% | 18% | 38.4% | 99.3% |

| Fault 15 | 12% | 30% | 99.4% | 100% |

| Normal Operation | 18% | 25% | 100% | 98.1% |

| Average of all other Faults | 85% | 87% | 93.1% | 93.1% |

| Averaged Test Accuracy | 73.4% | 75.90% | 90.9% | 93.4% |

| | Counts of predicted label i | Counts of predicted label other than i |
|---|---|---|
| Counts of real label i | TPi | FNi |
| Counts of real label other than i | FPi | TNi |
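The four counts in this table can be computed per class from arrays of real and predicted labels, as in the minimal sketch below. The function name `per_class_counts` and the toy label arrays are assumptions for illustration, and the two rates use the standard definitions FDR = TP / (TP + FN) and FAR = FP / (FP + TN).

```python
import numpy as np

def per_class_counts(y_true, y_pred, label):
    """Return (TP, FN, FP, TN) for one class in a multi-class problem."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    real_i = y_true == label
    pred_i = y_pred == label
    tp = int(np.sum(real_i & pred_i))    # real i, predicted i
    fn = int(np.sum(real_i & ~pred_i))   # real i, predicted other
    fp = int(np.sum(~real_i & pred_i))   # real other, predicted i
    tn = int(np.sum(~real_i & ~pred_i))  # real other, predicted other
    return tp, fn, fp, tn

# Toy labels for a three-class problem
y_true = [0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 2, 2, 0]

tp, fn, fp, tn = per_class_counts(y_true, y_pred, label=1)
fdr = tp / (tp + fn)
far = fp / (fp + tn)
print(tp, fn, fp, tn)  # 2 1 1 4
print(round(fdr, 3), round(far, 3))  # 0.667 0.2
```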

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Agarwal, P.; Gonzalez, J.I.M.; Elkamel, A.; Budman, H. Hierarchical Deep LSTM for Fault Detection and Diagnosis for a Chemical Process. Processes 2022, 10, 2557. https://doi.org/10.3390/pr10122557
