The mean, standard deviation, skewness, and kurtosis of each observed indicator are provided in Table 3 and Table 4, which report these descriptive statistics separately for each covariate (gender and ethnicity). Non-normal indicators may bias estimates of model fit, model parameters, and standard errors in structural equation model analyses [41]. Among the original indicator variables, several were highly skewed and kurtotic. Based on the criteria of skewness > 2.0 and kurtosis > 7.0 [42], variables such as “Web Links Viewed” and “Assessments Began” were considered moderately non-normal. Log transformations were applied to reduce this non-normality [41]. After the log transformations, the skewness and kurtosis indices of all variables were within the acceptable range. In addition, maximum likelihood estimation with robust standard errors (MLR) was used to attenuate the remaining effects of non-normality [43].
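The screening and transformation step described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the data are hypothetical, the kurtosis cutoff is assumed to refer to excess kurtosis (normal = 0), and the transformation is assumed to be log(x + 1) so that zero counts remain defined.

```python
import math

def central_moment(values, order):
    """Population central moment of the given order."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)

def skewness(values):
    """Moment-based skewness: m3 / m2^1.5."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5

def excess_kurtosis(values):
    """Moment-based kurtosis minus 3 (assumption: the cutoff is on this scale)."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3.0

def is_nonnormal(values, skew_cutoff=2.0, kurtosis_cutoff=7.0):
    """Flag a variable that exceeds either cutoff quoted in the text."""
    return (abs(skewness(values)) > skew_cutoff
            or abs(excess_kurtosis(values)) > kurtosis_cutoff)

def log_transform(values):
    """Assumed transformation: natural log of (x + 1)."""
    return [math.log(v + 1.0) for v in values]

# Hypothetical usage counts, invented for illustration only.
web_links_viewed = [1] * 30 + [10] * 15 + [100] * 5
transformed = log_transform(web_links_viewed)

print(is_nonnormal(web_links_viewed), is_nonnormal(transformed))
```

With these invented counts, the raw variable is flagged by the skewness cutoff and the transformed variable is not.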

Because SAT scores and the measures of course management tool use were on different scales, the standard errors of the variables ranged from approximately one or two units to several hundred units. This disparity in magnitude led to a non-convergence problem. To resolve the issue, the SAT scores were standardized to a scale comparable to that of the other indicator variables.
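One common way to bring a variable onto a comparable scale is z-score standardization, sketched below. The text does not say which rescaling was used, so z-scoring is an assumption here (dividing by a constant such as 100 is another option), and the scores are hypothetical.

```python
import statistics

def standardize(values):
    """Return z-scores: subtract the mean, divide by the sample standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]

# Hypothetical SAT math scores, invented for illustration only.
sat_math = [480, 520, 560, 600, 640, 700]
sat_math_z = standardize(sat_math)

print([round(z, 2) for z in sat_math_z])
```

After standardization the variable has mean 0 and standard deviation 1, so its variance is of the same order as that of the log-transformed usage indicators.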

3.1. Measurement Model

Confirmatory factor analysis (CFA) was used to examine the underlying dimensionality of the observed indicators. According to the theoretical framework, these indicators should reflect two theoretically derived latent factors.

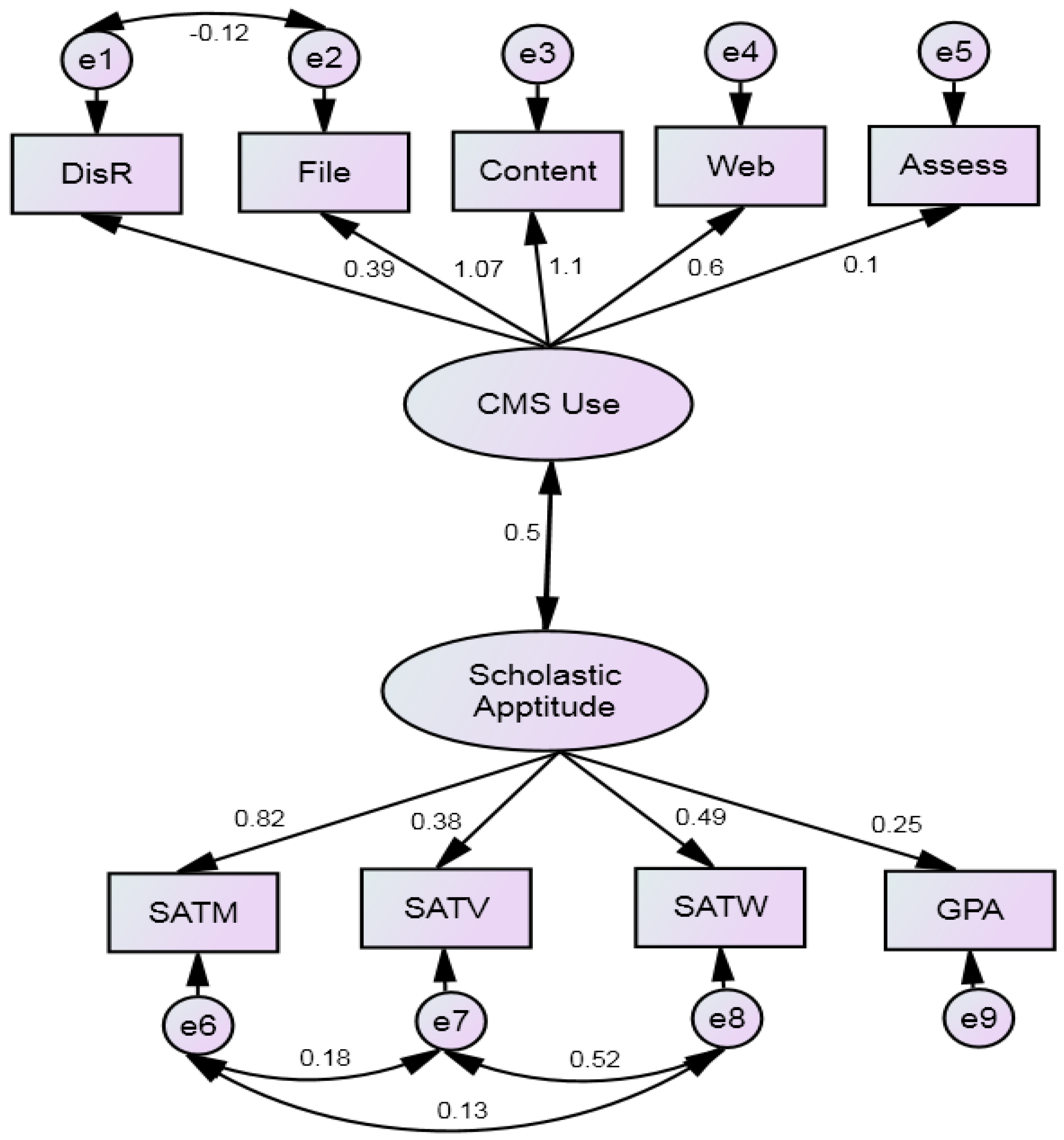

Figure 1 shows the path diagram of the measurement model. SAT Math, SAT Verbal, and SAT Writing are subtests of one examination, so these variables were allowed to correlate with one another. The correlations are represented by the double-headed arrows connecting SATM, SATV, and SATW in Figure 1. “Discussion” was connected with “File” because students had to read course files before they discussed the file content on Blackboard Vista.

Figure 1.

Confirmatory factor model.


The RMSEA for the two-factor model was 0.07, indicating acceptable model fit [47], with a 90% confidence interval of 0.06 to 0.08. The CFI was 0.97, the TLI was 0.95, and the SRMR was 0.06. The standardized loadings, standard errors, p-values, and the correlation between the two latent constructs are shown in Table 5. The loading of “Assessment” was approximately 0.1, because “Assessment” had a smaller mean than the other indicators (Table 5).

Table 5.

Loadings of CFA model.


| | Loading | S.E. | Est./S.E. | p-Value |
|---|---|---|---|---|
| CMS Use | | | | |
| Discussion | 0.394 | 0.063 | 6.271 | <0.001 |
| Content | 1.100 | 0.027 | 40.956 | <0.001 |
| File | 1.066 | 0.028 | 38.096 | <0.001 |
| Web | 0.601 | 0.060 | 9.976 | <0.001 |
| Assessment | 0.097 | 0.043 | 2.269 | 0.023 |
| Academic Aptitude | | | | |
| GPA | 0.245 | 0.042 | 5.839 | <0.001 |
| SAT Verbal | 0.384 | 0.082 | 4.682 | <0.001 |
| SAT Math | 0.821 | 0.095 | 8.648 | <0.001 |
| SAT Writing | 0.487 | 0.094 | 5.155 | <0.001 |
| CMS Use with Academic Aptitude a | 0.497 | 0.067 | 7.397 | <0.001 |
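The Est./S.E. and p-Value columns of Table 5 are related through a standard normal (Wald z) test. The sketch below shows that relationship, assuming two-tailed p-values; the function names are illustrative and are not taken from Mplus.

```python
import math

def wald_z(estimate, std_error):
    """Ratio of an estimate to its standard error (the Est./S.E. column)."""
    return estimate / std_error

def two_tailed_p(z):
    """Two-tailed p-value under the standard normal: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2.0))

# "Assessment" row of Table 5: loading 0.097, S.E. 0.043, reported Est./S.E. 2.269.
z_from_rounded = wald_z(0.097, 0.043)  # differs slightly from 2.269 due to rounding
p_reported_z = two_tailed_p(2.269)

print(round(z_from_rounded, 3), round(p_reported_z, 3))
```

Recomputing the ratio from the rounded table entries gives a value slightly below the reported 2.269, which is expected because the published estimate and standard error are rounded to three decimals. The reported ratio reproduces the tabled p-value of 0.023.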

3.2. Structural Model

Additional observed variables were included in the structural model: (1) the dependent variable, academic outcome, represented by students’ final grades; and (2) two covariates, gender and ethnicity. The structural model made it possible to investigate gender and ethnicity differences in computer technology use while controlling for scholastic aptitude. However, the initial model did not achieve good fit (RMSEA = 0.09, CFI = 0.89, TLI = 0.86, SRMR = 0.08). The modification indices given by the Mplus software [48] indicated that two additional parameters needed to be estimated: (1) “Male–SAT Mathematic” and (2) “SAT Mathematic–Computer Use”. After these two paths were added to the original model, the model achieved better fit (Figure 2): CFI = 0.97, TLI = 0.95, SRMR = 0.05, and RMSEA = 0.06, with a 90% confidence interval for RMSEA of 0.05 to 0.07.

The two additional parameters were selected on the basis of the modification indices given by Mplus, so there is a risk that the resulting model may not generalize to other samples. The modified model nevertheless fit better and differed significantly from the original model on the chi-square change test (p < 0.05). Moreover, previous research supports the theoretical appropriateness of the modified model. Since the 1960s, male students have consistently scored higher than female students on the SAT mathematics subtest, by an average of 46 points [49]. It is therefore likely that a relationship between gender and SAT math score exists in the current data. Previous studies also justify the second added parameter, “SAT Mathematic–Computer Use.” Mathematical ability has frequently been linked to better performance in the use and mastery of computer knowledge among U.S. subjects [50,51,52,53]. Most of these studies used SAT mathematics scores to measure mathematical ability, because the SAT has long been accepted as a valid measure of it. These studies provide theoretical support for the association between mathematical ability (represented by SAT mathematics score) and computer use in this study. In other words, the study found that female students were at a disadvantage on SAT mathematics scores.
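The chi-square change test mentioned above can be sketched as follows. The text does not report which form of the test was computed or the underlying chi-square values. Because MLR chi-squares cannot be differenced directly, this sketch assumes the Satorra-Bentler scaled difference test, and every input value below is hypothetical. The p-value helper covers only even degrees of freedom, which is enough for the two added parameters.

```python
import math

def scaled_chi_square_difference(t0, c0, d0, t1, c1, d1):
    """Satorra-Bentler scaled difference test.

    t0, c0, d0: MLR chi-square, scaling correction factor, and df of the
                nested (more restrictive) model.
    t1, c1, d1: the same quantities for the less restrictive model.
    Returns the scaled difference statistic and its degrees of freedom.
    """
    cd = (d0 * c0 - d1 * c1) / (d0 - d1)   # difference-test scaling correction
    trd = (t0 * c0 - t1 * c1) / cd         # scaled chi-square difference
    return trd, d0 - d1

def chi_square_sf_even_df(x, df):
    """Upper-tail chi-square probability, closed form valid for even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total

# Hypothetical fit values, invented for illustration (not from the study).
trd, df_diff = scaled_chi_square_difference(
    t0=250.0, c0=1.20, d0=40,   # original model
    t1=180.0, c1=1.18, d1=38,   # modified model with two added paths
)
p_value = chi_square_sf_even_df(trd, df_diff)
print(round(trd, 2), df_diff, p_value < 0.05)
```

A significant result indicates that freeing the two parameters improves fit beyond what chance would produce, although, as noted above, it does not address whether the data-driven modifications will replicate in other samples.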

Figure 2.

Path diagram for structural model with final grade (alternative model). Note: Gender was coded 0 = Female and 1 = Male, Ethnicity was coded 0 = Caucasian, 1 = African American & Hispanic (AH), 2 = Asian American (AA).


Table 6 provides estimates of the structural relationships depicted in Figure 2. Standardized coefficients (β) can be interpreted as estimates of effect size: values less than 0.10 can be considered “small” effects, values larger than 0.30 “medium” effects, and values larger than 0.50 “large” effects [47].

Table 6.

Effects of gender/ethnicity on computer use and academic achievement.


| | Estimate (β) | S.E. | Estimate/S.E. |
|---|---|---|---|
| CMS Use on a | | | |
| Gender (Male = 1) | 0.420 | 0.076 | 5.562 * |
| African American & Hispanic | 0.153 | 0.182 | 0.841 |
| Asian | 0.459 | 0.111 | 4.147 * |
| Scholastic Aptitude on | | | |
| Gender (Male = 1) | −0.178 | 0.082 | −2.184 * |
| African American & Hispanic | −0.388 | 0.198 | −1.961 * |
| Asian | 0.118 | 0.155 | 0.758 |
| CMS Use by b | | | |
| SAT Mathematic | 0.221 | 0.027 | 8.246 * |
| SAT Mathematic on | | | |
| Gender (Male = 1) | 0.678 | 0.053 | 12.721 * |
| Final Grade on | | | |
| CMS Use | 0.068 | 0.028 | 2.450 * |
| Scholastic Aptitude | 0.944 | 0.039 | 24.259 * |

Male students had a significantly higher mean level of CMS use than female students (β = 0.42, p < 0.001). In terms of ethnicity, Asian students had a significantly higher mean level of use of the Blackboard CMS than Caucasian students (β = 0.46, p < 0.001). There was no significant difference between the African American and Hispanic group and Caucasian students. Correspondingly, the study found that Asian students had the highest final scores among all ethnic groups. A causal relationship between academic achievement and CMS use cannot be inferred from these results; however, the association warrants further exploration. Further, Caucasian, African American, and Hispanic students used the CMS at approximately the same frequency, but Caucasian students had higher final scores than African American and Hispanic students. One possible explanation is that how students use the CMS, rather than how often they use it, is associated with their academic achievement. Green compared Caucasian and African American students’ use of Web 2.0 applications for academic purposes and found that Caucasian students were more likely than their African American peers to use the applications for academic purposes [54].

The model indicated that male students had significantly lower scholastic aptitude than their female peers (β = −0.178, p < 0.05). This finding resonates with recent literature. Many researchers have found that the pattern of gender inequality in U.S. college entrance and achievement has changed [55,56]. Chee, Pino, and Smith analyzed survey data collected from students at a medium-size state university in the Southeast. Their results indicated that women were more likely than men to hold a positive academic attitude and also tended to have higher GPAs.

In addition, the amount of involvement with the Blackboard CMS was significantly related to students’ academic outcome. Variation in final grades was well explained by the two latent constructs. Computer use had a significant, small direct association with students’ academic outcome (β = 0.07, p < 0.05), whereas scholastic aptitude had a significant, large direct association (β = 0.94, p < 0.001). In other words, students’ academic outcome was mainly associated with their scholastic ability rather than with their use of the CMS.

Because differences in students’ use of the Blackboard CMS were found by gender and ethnicity, and such differences were associated with students’ academic outcomes, educators may want to explore how students use the different functions embedded in the CMS. Such research might reveal which specific function has the strongest association with students’ learning outcomes. To address this question in the present study, tests of equivalence were conducted on the specific functions embedded in the CMS, with a focus on significant direct associations of gender and ethnicity with the indicator variables. The results showed that Hispanic and African American students appeared to use the discussion function more often than their Caucasian and Asian peers, yet they had the lowest final course grades. This negative relationship between use of the discussion function and final grade is inconsistent with existing research. Abbott et al. [57] suggested that students’ level of classroom involvement and interaction should be positively related to their academic achievement. One possible reason is that students did not use the “Discussion” platform in the way faculty expected. Burbules and Callister [42] noted that technology can be used well or poorly; its advantages depend on how the technology is used, by whom, and for what purposes. In addition, students’ learning success was associated not with the technology itself but with the quality of its use for learning purposes [58].

The correlation between CMS use and scholastic aptitude was significant. Students with higher scholastic aptitude tended to use the CMS for course work more frequently, controlling for gender and ethnicity. Equivalently, more frequent CMS use was associated with higher scholastic aptitude. The high inter-correlation suggests that other factors may influence both latent factors. For example, socioeconomic status may be a common cause of both computer use [59] and scholastic aptitude [60]. In addition, the imperfect representation of scholastic aptitude may contribute to the high correlation. In this study, scholastic aptitude was represented by two kinds of variables: SAT scores and GPA. Extensive research has sought to determine which factors accurately predict a student’s learning ability in college. Past studies have examined the relationship of SAT, ACT, and high school or current GPA to academic success [61,62,63]. However, these measures, which capture only previous performance, are not sufficient to represent a student’s scholastic aptitude. Other potential factors worth exploring include achievement motivation, cognitive development, and personality characteristics [64,65].