An interactive E-Learning Portal in Pediatric Endocrinology: Practical Experience

Abstract

1. Introduction

2. Experimental Section

2.1. Plan

2.2. Do

| Competence | Question | Assessment |

|---|---|---|

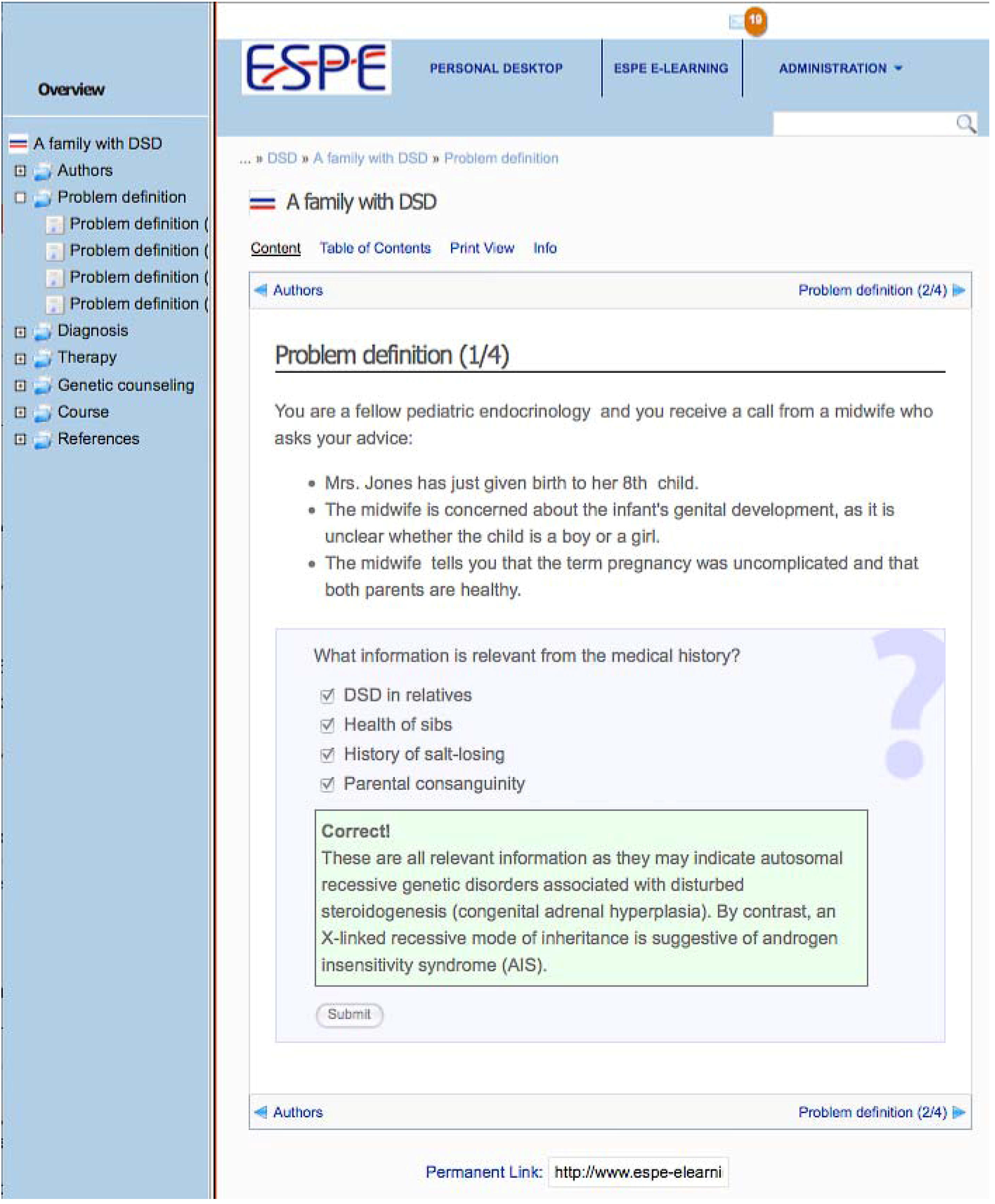

| You are a fellow pediatric endocrinologist and you receive a call from a pediatrician who asks your advice: Mrs. Johnson has just given birth to her second child. The pediatrician is concerned about the infant’s genital development, as it is unclear whether the child is a boy or a girl. | ||

| Medical expert | What information do you need from the pediatrician? | Multiple-choice question |

| Medical expert | What information is relevant to provide to the pediatrician? | Open question, provide correct items |

| Collaborator | What is your advice to the pediatrician? | Open question, feedback provided by expert |

| Communicator | What is your advice to the pediatrician to tell the parents? What to tell family and friends? | Open question, feedback provided by expert |

| The genitalia of the otherwise healthy infant indeed look very ambiguous. | ||

| Medical expert | What information regarding the physical examination are you specifically interested in? What further information needs to be collected and what tests need to be performed after the initial physical examination? | Multiple-choice question |

| The karyotype is 46,XX; based on hormonal and ultrasound investigations you diagnose congenital adrenal hyperplasia. | ||

| Medical Expert | Deficiency of which enzyme is most likely responsible? | Multiple-choice question |

| Both parents are very relieved that they have a girl and the mother says: “From the beginning, I had the feeling that I had a daughter!” | ||

| Communicator | You discuss this condition with the infant’s parents. What do you say? | Open question, feedback provided by expert |

2.3. Check

| Pilot | Time period | Subject | Items studied | Participants |
|---|---|---|---|---|
| Pilot 1 | March 2011 | User experience and quality | 3 cases, 3 chapters | 9 experts, 1 fellows, 9 residents, 6 medical students, total 35 |
| Pilot 2 | August 2011 | Interaction | 2 cases | 3 experts, 8 fellows, 3 residents, total 14 |
| Pilot 3 | October 2011 | Use for medical students | 2 cases | 4 medical students |
| Pilot 4 | November 2011 | Use for regional pediatricians | 2 cases | 9 pediatricians, 1 fellow |
| Pilot 5 | January 2012 | Use for masterclass | 2 cases | 9 experts, 1 fellow |

2.3.1. Pilot 1

2.3.2. Pilot 2

2.3.3. Pilot 3

2.3.4. Pilot 4

2.3.5. Pilot 5

3. Results and Discussion

3.1. Evaluation of Usability and Time Spent

| Evaluation by subgroup | N | Content (scale 1–10), mean | Content (scale 1–10), sd | Illustrations (scale 1–10), mean | Illustrations (scale 1–10), sd | Effectiveness of learning, mean | Effectiveness of learning, sd | Time spent (min), mean | Time spent (min), sd |
|---|---|---|---|---|---|---|---|---|---|
| Expert | 30 | 8.3 | 1.7 | 7.1 | 2.2 | 8.2 | 1.0 | 41 | 37 |
| Fellow | 35 | 8.6 | 1.5 | 7.0 | 2.4 | 8.3 | 0.9 | 56 | 40 |
| Medical student | 20 | 8.0 | 0.9 | 7.2 | 1.5 | 7.8 | 1.2 | 37 | 18 |
| Regional pediatrician | 20 | 8.4 | 0.9 | 7.4 | 1.7 | 8.2 | 0.8 | 19 | 8 |
| Resident | 22 | 8.3 | 1.4 | 7.2 | 1.6 | 7.9 | 1.3 | 53 | 45 |
| Total | 127 | 8.4 | 1.4 | 7.1 | 1.9 | 8.1 | 1.1 | 43 | 36 |
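As a rough consistency check on the table above, the Total row can be compared with an N-weighted average of the subgroup means. The sketch below is illustrative only: it assumes every participant in a subgroup answered every item, which the table does not state, and the helper name `weighted_mean` is hypothetical. Because the published means are rounded to one decimal, small differences from the Total row are to be expected.

```python
# Subgroup rows copied from the evaluation table:
# (subgroup, N, mean content score, mean time spent in minutes)
rows = [
    ("Expert", 30, 8.3, 41),
    ("Fellow", 35, 8.6, 56),
    ("Medical student", 20, 8.0, 37),
    ("Regional pediatrician", 20, 8.4, 19),
    ("Resident", 22, 8.3, 53),
]


def weighted_mean(values, weights):
    """Average of `values`, each weighted by the matching entry in `weights`."""
    total_weight = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Assumption: subgroup N is the number of respondents for every item.
n = [r[1] for r in rows]
content = weighted_mean([r[2] for r in rows], n)
time_spent = weighted_mean([r[3] for r in rows], n)

print(sum(n), round(content, 2), round(time_spent, 1))
```

Under these assumptions, the subgroup sizes add up to the reported total of 127, and the weighted means come out close to the Total row for content (8.4) and time spent (43 min).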

3.2. Personalized Feedback

3.3. Different Target Groups and Didactical Possibilities

4. Discussion

5. Conclusions

Acknowledgment

Conflicts of Interest


© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Kranenburg-van Koppen, L.J.C.; Grijpink-van den Biggelaar, K.; Drop, S.L.S. An interactive E-Learning Portal in Pediatric Endocrinology: Practical Experience. Pharmacy 2013, 1, 160-171. https://doi.org/10.3390/pharmacy1020160
