Autonomous Point Cloud Acquisition of Unknown Indoor Scenes

Abstract

1. Introduction

2. Methodology

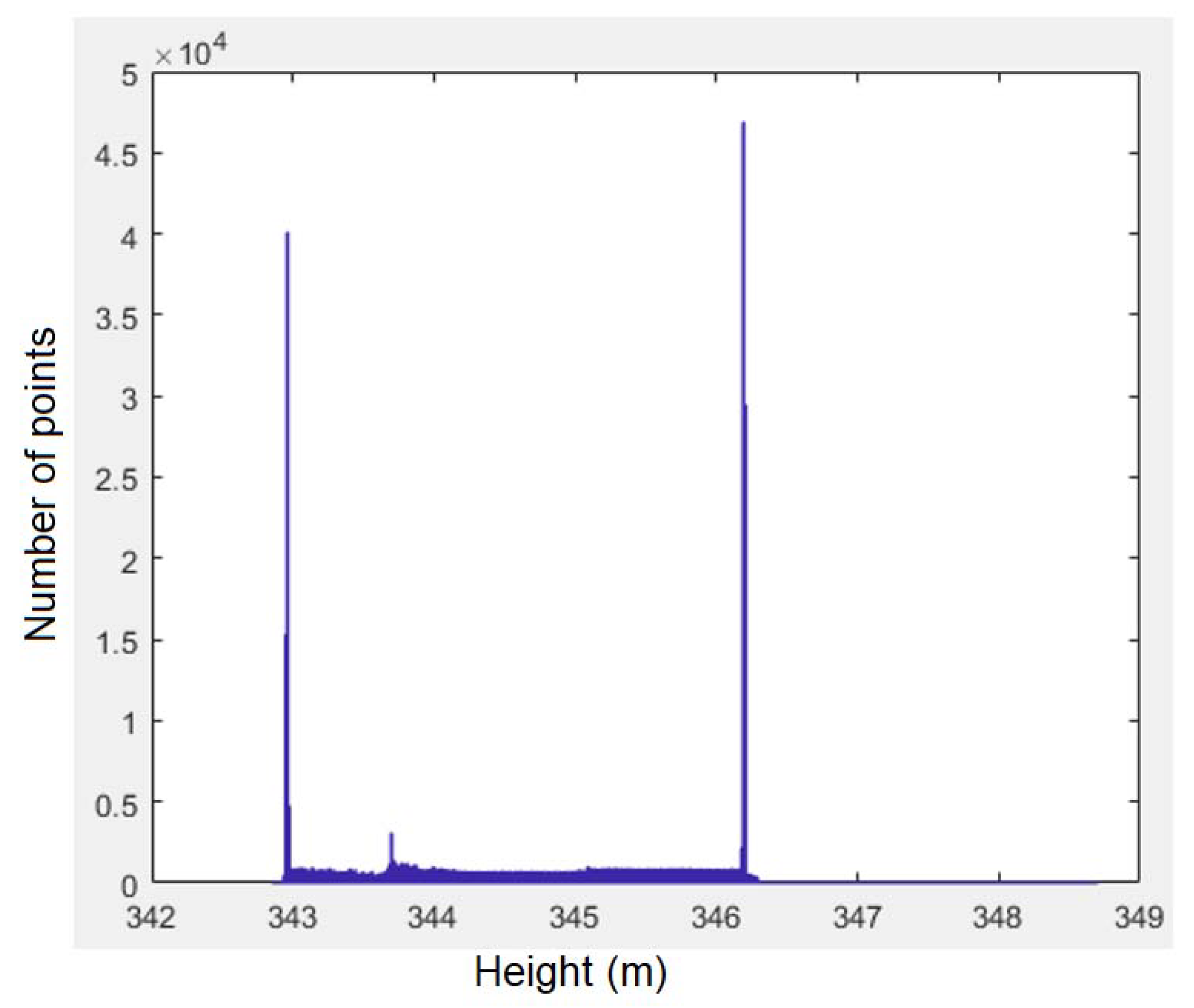

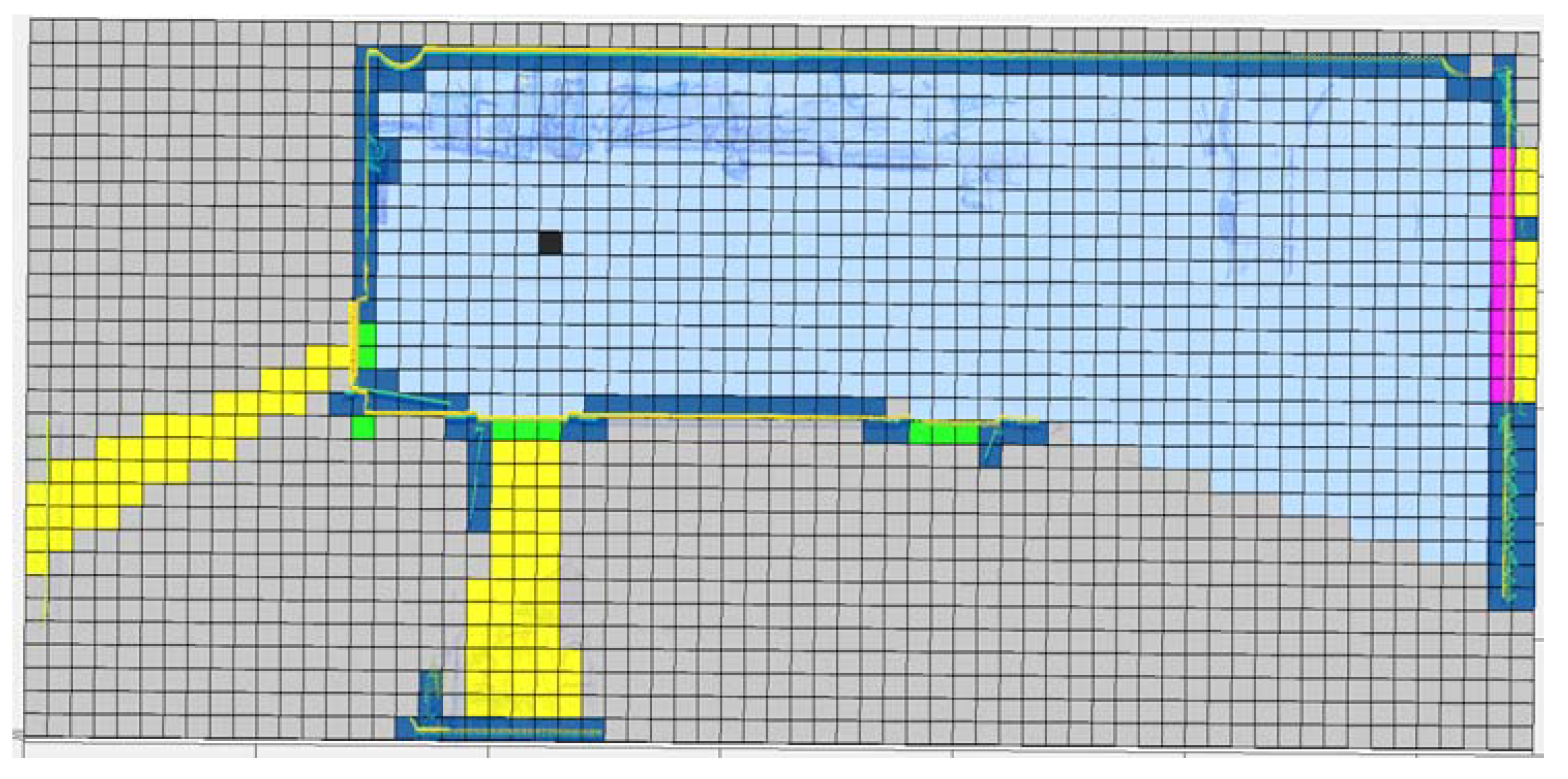

2.1. Data Collection and Pre-processing

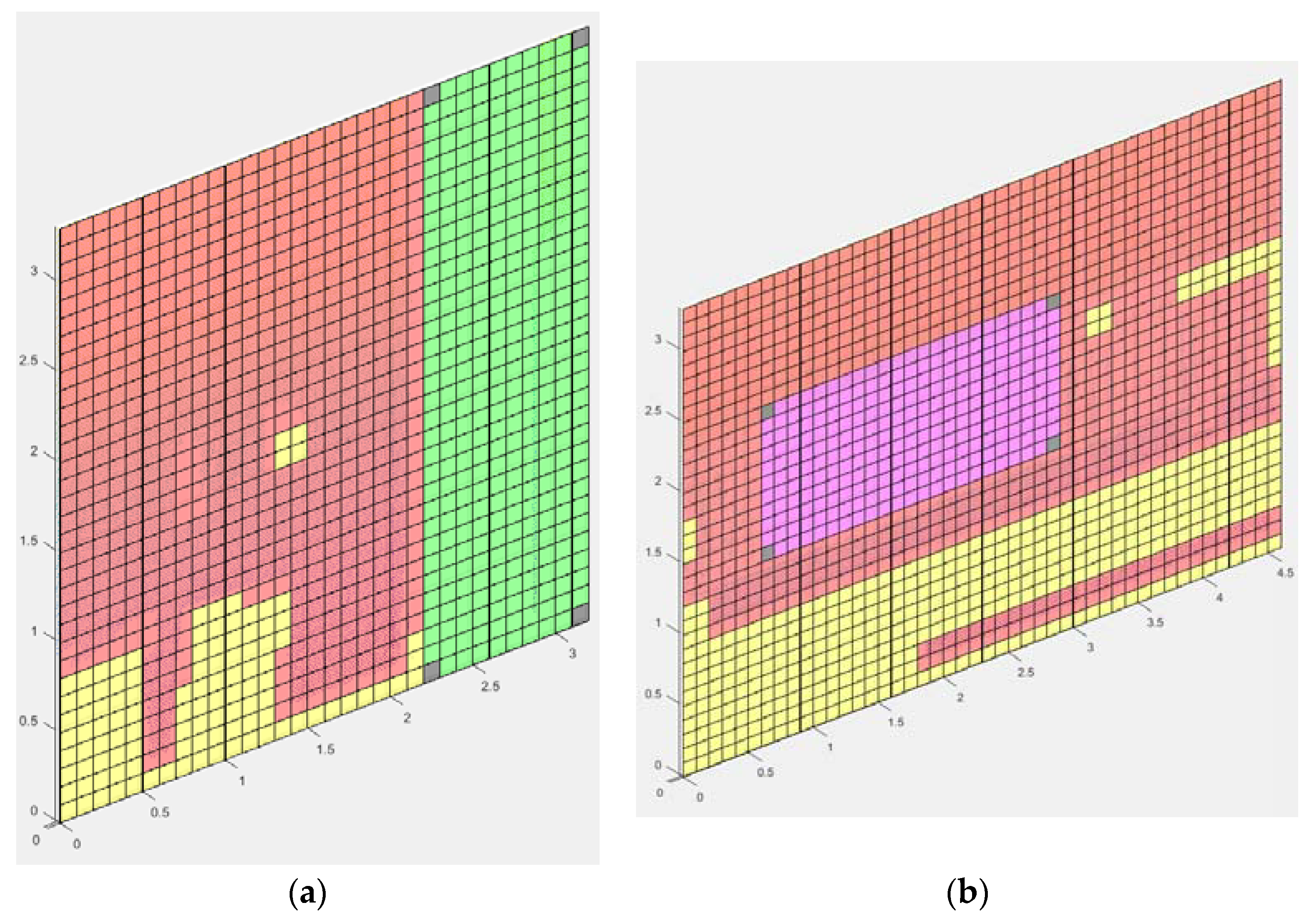

- Empty: Voxels with a direct line of sight (LOS) from the scanner position and no points inside.

- Occupied: Voxels containing a minimum number of points.

- Occluded: Voxels without a direct LOS from the scanner position and with no points inside; because they cannot be observed, their emptiness is not assured.

- NBV (next best view): A unique empty voxel that is the optimum next scanning position.

- Window: Empty voxels that belong to a window in the room.

- Door: Empty voxels that belong to a door in the room.

- Out: Empty voxels with a direct LOS from the scanner position and through a window or door voxel.
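The seven labels above can be sketched as a small enumeration, together with a helper that assigns the three base labels (Empty, Occupied, Occluded) from a point count and a line-of-sight flag. This is only a minimal illustration: the names `VoxelLabel` and `basic_label`, the default threshold of 5 points, and the choice to treat a voxel holding fewer points than the threshold as if it held none are all assumptions, not part of the original method. The Window, Door, Out and NBV labels depend on later processing steps and are not assigned here.

```python
from enum import Enum


class VoxelLabel(Enum):
    """Voxel classes listed in the text (names are illustrative)."""
    EMPTY = "empty"
    OCCUPIED = "occupied"
    OCCLUDED = "occluded"
    NBV = "nbv"
    WINDOW = "window"
    DOOR = "door"
    OUT = "out"


def basic_label(n_points: int, has_los: bool, min_points: int = 5) -> VoxelLabel:
    """Assign one of the three base labels to a single voxel.

    n_points   -- number of scan points falling inside the voxel
    has_los    -- True if the scanner position has a direct line of sight
    min_points -- hypothetical occupancy threshold (assumed value)
    """
    # Occupied: the voxel contains at least the minimum number of points.
    if n_points >= min_points:
        return VoxelLabel.OCCUPIED
    # Assumption: below the threshold, the voxel is treated as holding no points.
    # Empty: visible from the scanner, so its emptiness is confirmed.
    if has_los:
        return VoxelLabel.EMPTY
    # Occluded: not visible from the scanner, so its emptiness is not assured.
    return VoxelLabel.OCCLUDED
```

For example, `basic_label(0, has_los=False)` yields `VoxelLabel.OCCLUDED`, while the same voxel seen from the scanner would be `VoxelLabel.EMPTY`.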

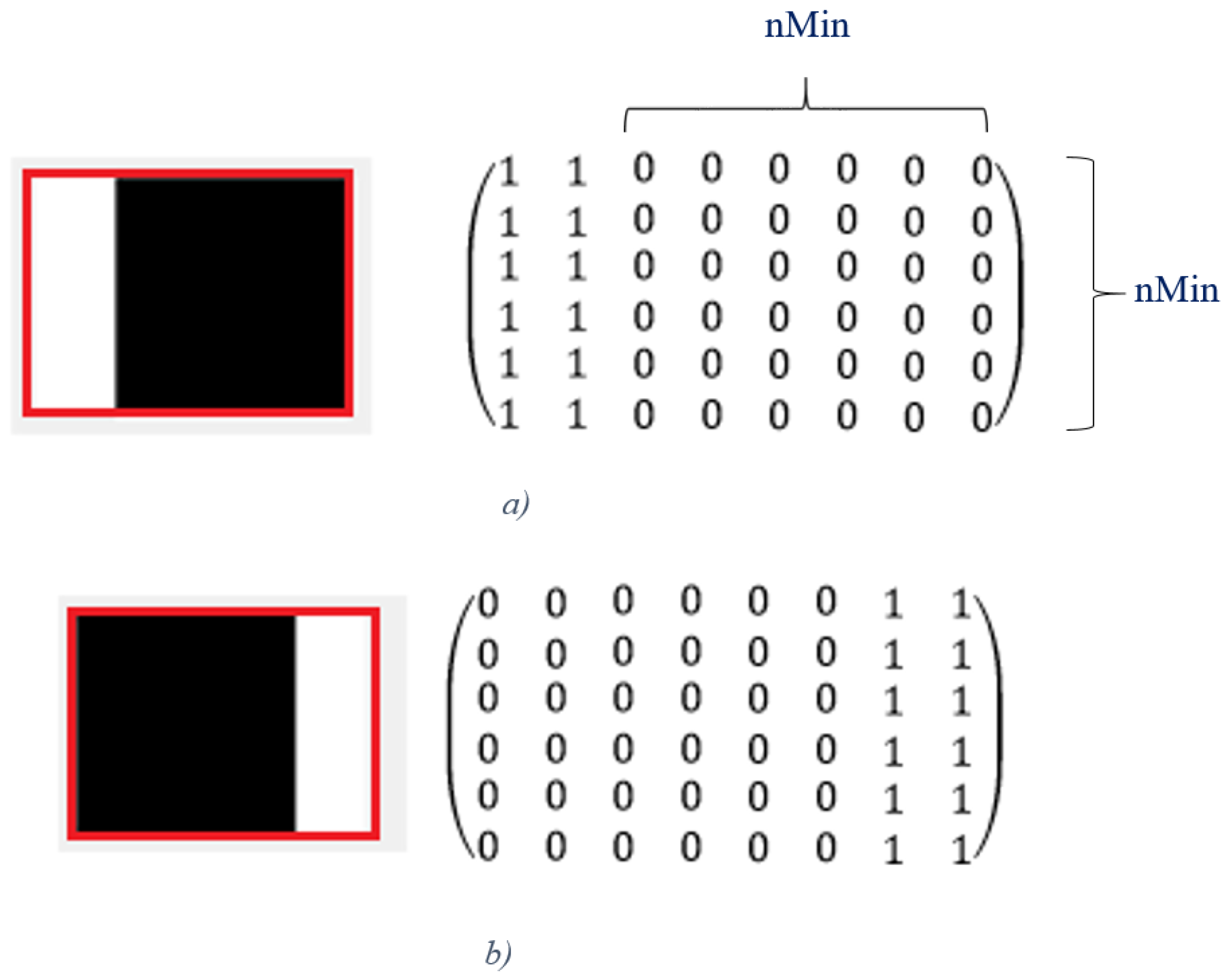

2.2. Voxel Classification

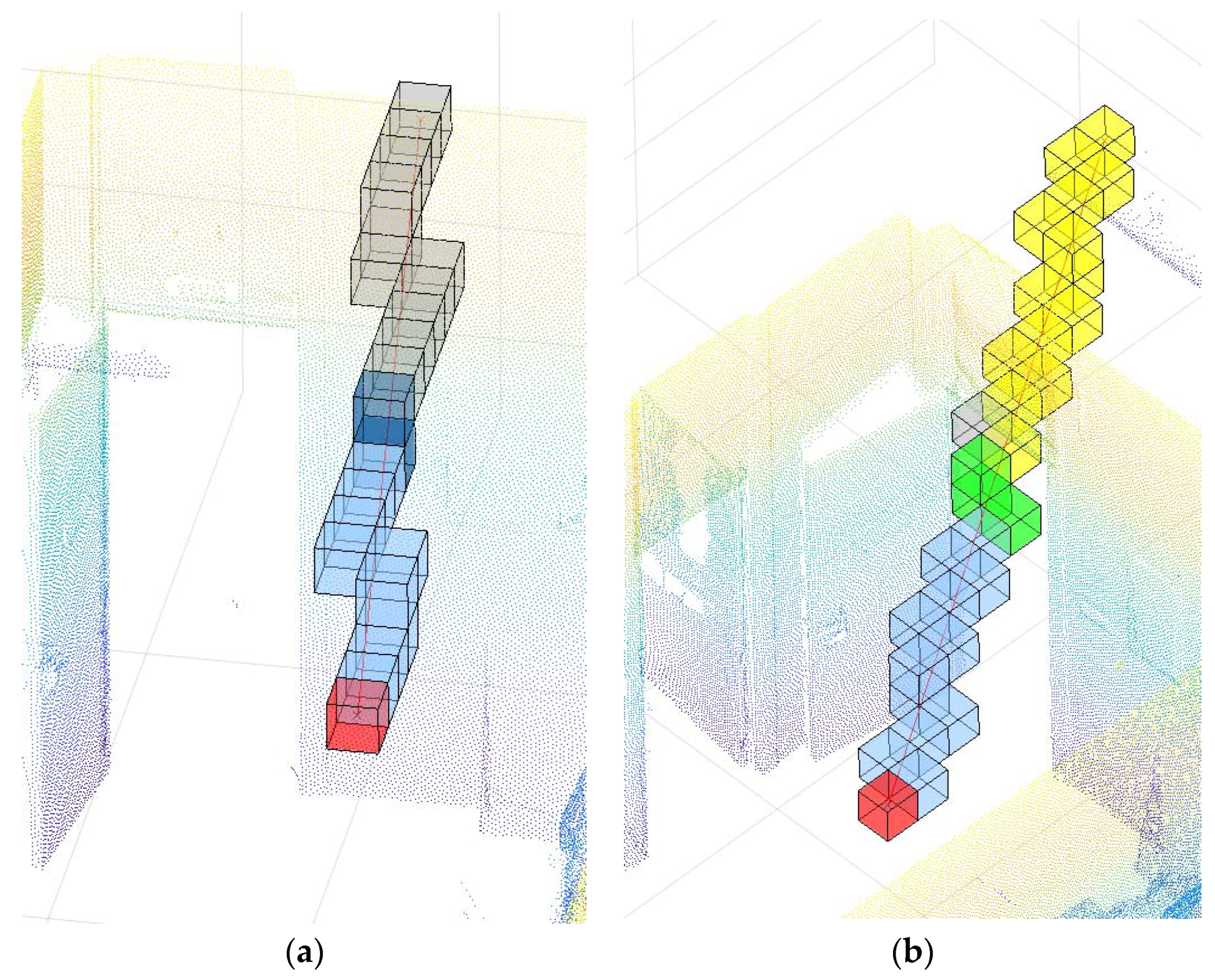

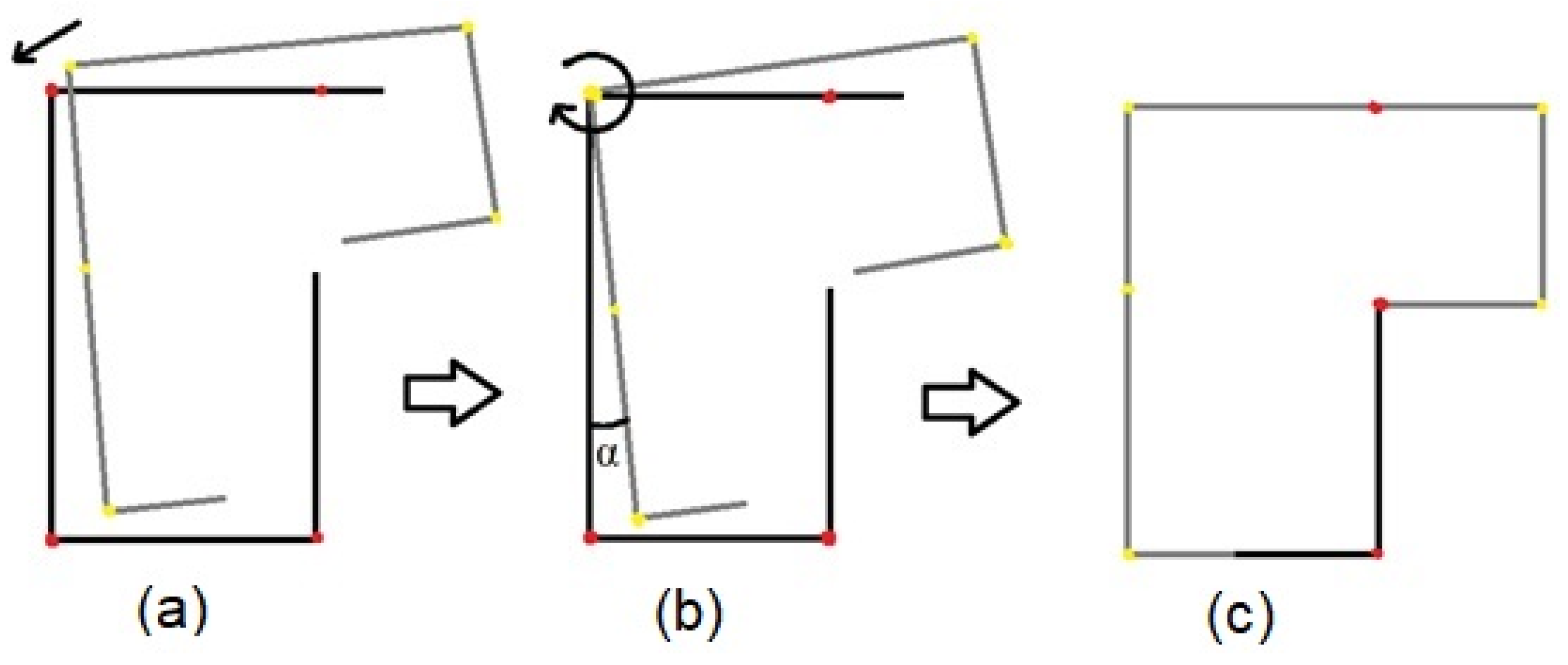

2.3. Next Best View

- Zero-point score if there was not a direct LOS between the NBV candidate and the objective occluded voxel.

- One-point score if there was a direct LOS between the NBV candidate and the objective occluded voxel.

- A score given by Equation (7) if there was only a semi-direct LOS between the NBV candidate and the objective occluded voxel; this score depends on the number of intermediate occluded voxels crossed.
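The three scoring rules above can be sketched as follows. Several points are assumptions made for illustration: that a ray blocked by an occupied voxel counts as "no direct LOS", that a ray crossing only occluded voxels counts as "semi-direct", and that a candidate's total score is the sum over its objective occluded voxels. Equation (7) is not reproduced in this section, so `placeholder_semi_direct` is a hypothetical stand-in that merely decreases with the number of occluded voxels crossed; it should be replaced by the actual expression.

```python
from typing import Callable, Iterable, Tuple


def placeholder_semi_direct(n_crossed: int) -> float:
    """HYPOTHETICAL stand-in for Equation (7); not the paper's formula."""
    return 1.0 / (1.0 + n_crossed)


def voxel_score(
    blocked: bool,
    n_occluded_crossed: int,
    semi_direct: Callable[[int], float] = placeholder_semi_direct,
) -> float:
    """Score one objective occluded voxel as seen from one NBV candidate.

    blocked            -- True if the ray is stopped before reaching the voxel
                          (assumed meaning of "no direct LOS")
    n_occluded_crossed -- number of intermediate occluded voxels on the ray
    semi_direct        -- function playing the role of Equation (7)
    """
    if blocked:
        return 0.0  # no direct LOS: zero points
    if n_occluded_crossed == 0:
        return 1.0  # direct LOS: one point
    return semi_direct(n_occluded_crossed)  # semi-direct LOS


def candidate_score(rays: Iterable[Tuple[bool, int]]) -> float:
    """Sum the per-voxel scores for one candidate (summation is an assumption)."""
    return sum(voxel_score(blocked, n) for blocked, n in rays)
```

Under these assumptions, the candidate with the highest `candidate_score` would be the natural choice for the next scanning position.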

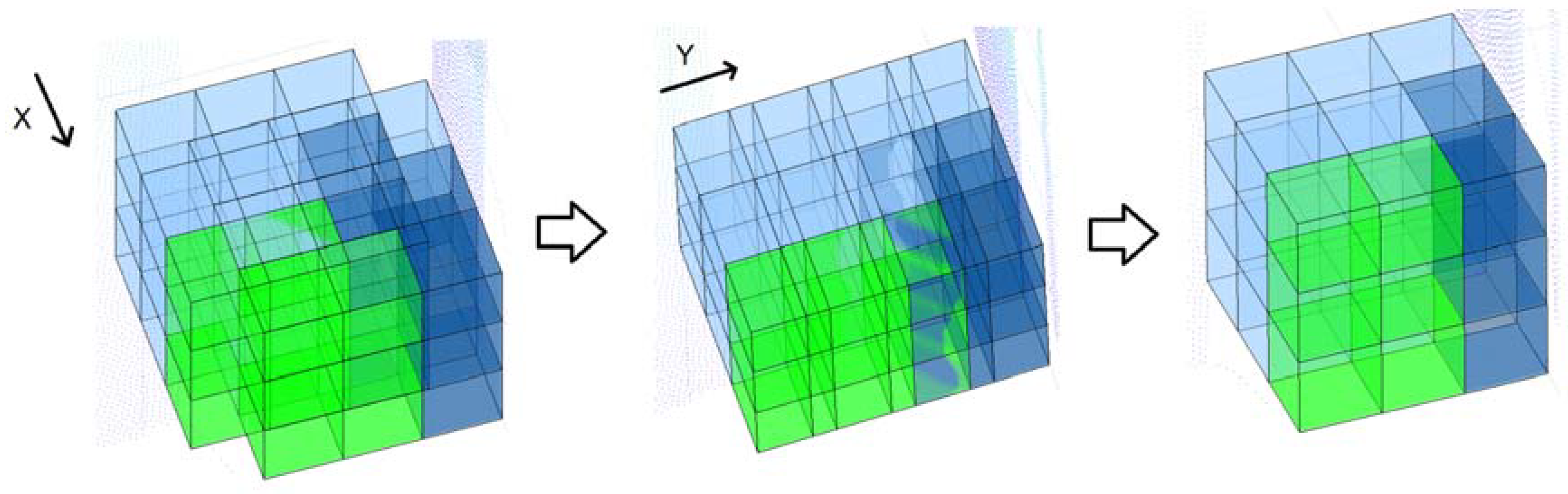

2.4. Scan Registration and Voxel Growing

3. Results and Discussion

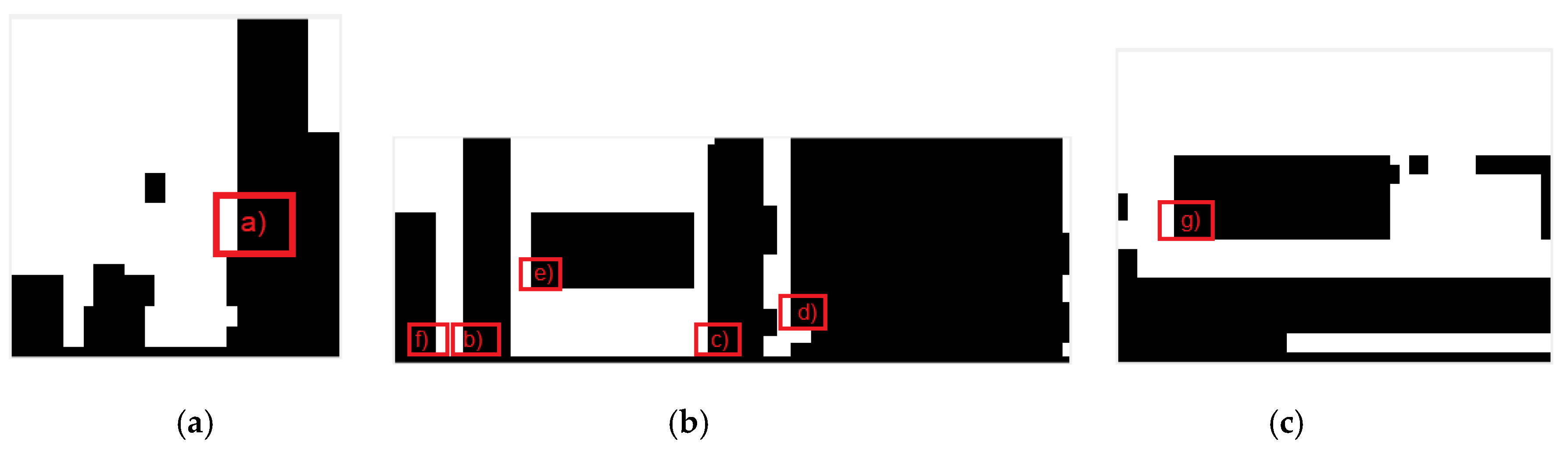

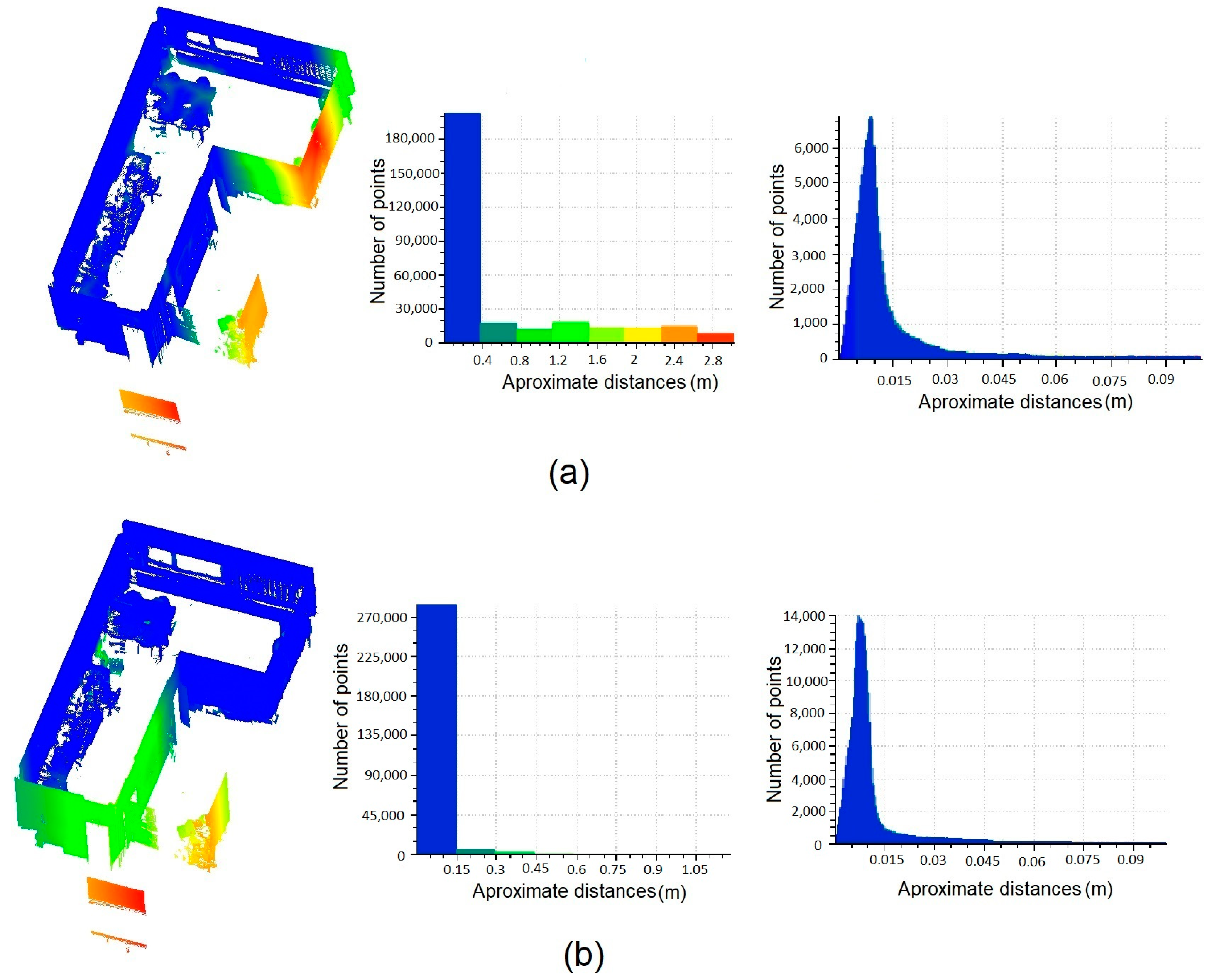

Case Study

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Scan Number | V. Occluded | V. Occupied | V. Empty | V. Door | V. Window | V. Out | Total Voxels | V. Matrix Dimension |
|---|---|---|---|---|---|---|---|---|
| First Scan | 55.69% | 7.03% | 34.16% | 0.31% | 0.14% | 2.67% | 34,255 | 65 × 32 × 17 |
| Second Scan | 43.49% | 10.54% | 42.14% | 0.27% | 0.13% | 3.44% | 34,255 | 65 × 32 × 17 |
| Third Scan | 42.35% | 11.76% | 42.15% | 0.27% | 0.13% | 3.34% | 34,255 | 65 × 32 × 17 |

| Scan Number | 5 Points | 10 Points | 20 Points | 30 Points | 40 Points |
|---|---|---|---|---|---|
| First Scan | 2782 | 2646 | 2408 | 2164 | 1946 |
| Second Scan | 4061 | 4060 | 3610 | 3394 | 3171 |
| Third Scan | 4319 | 4321 | 4030 | 3678 | 3483 |

| Scan Number | nV Occluded | Stop Percentage |
|---|---|---|
| First Scan | 549 | 3.5% |
| Second Scan | 65 | 0.35% |
| Third Scan | 18 | 0.09% |

| Pattern | var(nRowsBelow) | var(nRowsAbove) | var(nColumns) | Area (m²) | Door/Window |
|---|---|---|---|---|---|
| (a) | 0 | 0 | 2.0625 | 3.3 | True |
| (b) | 0 | 0 | 0 | 2.31 | True |
| (c) | 0.1429 | 0 | 3.1894 | 2.64 | True |
| (d) | 0 | 7.14 | 6.2476 | 13.2 | False |
| (e) | 0 | 0 | 0 | 2.64 | True |
| (f) | 0 | 0 | 0 | 1.32 | True |
| (g) | 0 | 0 | 0.1944 | 2.07 | True |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

González-de Santos, L.M.; Díaz-Vilariño, L.; Balado, J.; Martínez-Sánchez, J.; González-Jorge, H.; Sánchez-Rodríguez, A. Autonomous Point Cloud Acquisition of Unknown Indoor Scenes. ISPRS Int. J. Geo-Inf. 2018, 7, 250. https://doi.org/10.3390/ijgi7070250
