1. Introduction

Drug discovery often involves multiple stages, beginning with the identification of a biological target, followed by the parallel screening of thousands of compounds and, finally, the production of the new drug. This process is time-consuming, costly, and plagued with numerous difficulties. Virtual screening (VS) is a computational drug discovery technique that searches libraries of molecules, at reasonable cost and time, for the structures most likely to bind to a drug target. Virtual screening is classified into two types: structure-based approaches, such as ligand-protein docking, and ligand-based approaches, such as similarity searching, machine learning, and pharmacophore mapping [1,2,3,4,5]. Similarity searching is one of the most effective and widely used tools for ligand-based virtual screening (LBVS) because it requires only a single bioactive molecule, or reference structure, as the starting point of a database search. The fundamental concept underlying similarity searching is that structurally similar molecules are likely to exhibit similar physicochemical and biological properties. A similarity search compares the features of the reference (target) structure with those of each structure in the database, and the degree of resemblance between the two sets of features is used as the measure of their similarity. The database structures are then usually ranked in decreasing order of similarity to the reference. When discussing LBVS, various elements must be considered, including molecular representation and similarity coefficients, among others [6,7,8,9,10,11]. In cheminformatics, fingerprints are a crucial and widely used concept. Their primary goal is to create numerical representations of a molecule's structure or specific properties, allowing the comparison of two molecules to be quantified [1]. Molecular characteristics range from physicochemical attributes to structural features and can be encoded in various ways, referred to as molecular descriptors. A molecular descriptor aims to capture the most important features of the molecule. To compare the fingerprints of two chemicals, various similarity metrics can be used, such as the Euclidean distance, the Manhattan distance, and the Dice coefficient, but the Tanimoto coefficient is the most widely preferred [5].
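As a minimal sketch of the similarity measures named above, the following Python functions assume binary fingerprints stored as sets of on-bit indices (count-based descriptors would need the continuous forms of these measures). The function names and the toy molecules are hypothetical; only the formulas are standard. The last function ranks a database in decreasing order of Tanimoto similarity to the reference, as described earlier.

```python
import math

# Assumption: binary fingerprints are represented as sets of on-bit indices.


def tanimoto(a: set, b: set) -> float:
    """Tanimoto coefficient c / (a + b - c) for binary fingerprints."""
    common = len(a & b)
    union = len(a) + len(b) - common
    return common / union if union else 0.0


def dice(a: set, b: set) -> float:
    """Dice coefficient 2c / (a + b)."""
    total = len(a) + len(b)
    return 2 * len(a & b) / total if total else 0.0


def euclidean(a: set, b: set) -> float:
    """Euclidean distance; for 0/1 vectors its square is the number of differing bits."""
    return math.sqrt(len(a ^ b))


def manhattan(a: set, b: set) -> int:
    """Manhattan distance; for 0/1 vectors it equals the Hamming distance."""
    return len(a ^ b)


def rank_database(reference: set, database: dict) -> list:
    """Return database keys sorted by decreasing Tanimoto similarity to the reference."""
    scores = {name: tanimoto(reference, fp) for name, fp in database.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical toy data for illustration only.
reference = {1, 2, 3, 4}
database = {"mol_a": {1, 2, 9}, "mol_b": {7, 8}, "mol_c": {1, 2, 3, 4}}
print(rank_database(reference, database))  # ['mol_c', 'mol_a', 'mol_b']
```

Note that the Euclidean and Manhattan functions return distances, so lower values mean more similar molecules; they would have to be inverted or negated before being used to rank a database in the same way as the Tanimoto and Dice coefficients.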

In recent years, data fusion has gained acceptance as a method for improving the performance of existing ligand-based virtual screening systems. Data fusion is the process of integrating multiple data sources into a single source using fusion techniques, on the assumption that the fused result will be more valuable than any of the individual input sources. For example, combining multiple similarity coefficients has been found to be more effective than using the individual coefficients alone [1,4,12,13]. Dasarathy presented one of the best-known data fusion classification systems, which comprises the following five categories: (1) Data in-Data out, in which raw data are both the input and the output, and the outcomes are often more accurate or reliable; (2) Data in-Feature out, in which the fusion method uses the raw data from various sources to extract the features or characteristics that describe an object or class; (3) Feature in-Feature out, in which both the input and the output of the fusion procedure are features, used to enhance, refine, or create new features; (4) Feature in-Decision out, in which a set of decisions is produced from a collection of features received as input; and (5) Decision in-Decision out, also called decision fusion, in which the input decisions are fused to produce better or novel judgments [14].
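To illustrate decision-level (Decision in-Decision out) fusion of similarity coefficients, the sketch below combines per-molecule scores from several coefficients with two classic rules, CombSUM (sum of normalized scores) and CombMAX (maximum normalized score). The min-max normalization step, the function names, and the toy scores are assumptions for illustration, not the procedure of any cited study; distance measures would first have to be converted to similarities so that a higher value always means more similar.

```python
def min_max_normalise(scores: dict) -> dict:
    """Rescale one coefficient's scores to [0, 1] so different coefficients are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    span = hi - lo
    return {mol: (s - lo) / span if span else 0.0 for mol, s in scores.items()}


def fuse_rankings(score_sets: list, rule: str = "sum") -> list:
    """Fuse several {molecule: similarity} dicts (same keys) and return a fused ranking.

    rule="sum" implements CombSUM and rule="max" implements CombMAX
    (assumed fusion rules chosen for illustration).
    """
    if rule not in ("sum", "max"):
        raise ValueError("rule must be 'sum' (CombSUM) or 'max' (CombMAX)")
    normalised = [min_max_normalise(s) for s in score_sets]
    combine = sum if rule == "sum" else max
    fused = {mol: combine(s[mol] for s in normalised) for mol in normalised[0]}
    return sorted(fused, key=fused.get, reverse=True)


# Hypothetical scores from two coefficients for three database molecules.
tanimoto_scores = {"a": 0.9, "b": 0.5, "c": 0.1}
dice_scores = {"a": 0.2, "b": 0.8, "c": 0.1}
print(fuse_rankings([tanimoto_scores, dice_scores], rule="sum"))  # ['b', 'a', 'c']
```

In this toy case, molecule "b" is ranked second by the first coefficient alone but first after CombSUM fusion, because it scores well under both coefficients.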

Several efforts have been made to improve the retrieval effectiveness of similarity searching methods and of the ways in which similarity is calculated, generally concluding that the Tanimoto coefficient is the industry standard and outperforms other coefficients [15,16,17,18]. Some studies sought to adapt approaches from text document retrieval to molecular searching; for example, Abdo et al. took a Bayesian network originally used for document retrieval and modified it to serve as the retrieval model in cheminformatics [6]. Furthermore, Al Dabagh et al. applied concepts from quantum mechanics to improve molecular similarity searching and the ranking of chemical compounds in LBVS [9]. Other researchers, such as Ahmed et al., developed weighting approaches to increase the retrieval effectiveness of a Bayesian inference network, assigning greater weights to relevant fragments while removing unnecessary ones [19,20,21]. Some studies investigated data fusion and proposed merging similarity measurements by combining the screening results obtained with multiple similarity measures; Nasser et al. fused several descriptors by selecting the best features from each descriptor and merging them into a new descriptor [3,4,22]. Although the above methods outperform their predecessors, particularly for molecules with homogeneous active structural elements, such as the activity classes of the MDDR-DS2 dataset (as will be shown in Section 3.1), their performance is not satisfactory for structurally heterogeneous molecules, such as the activity classes of the MDDR-DS3 dataset (also described in Section 3.1).

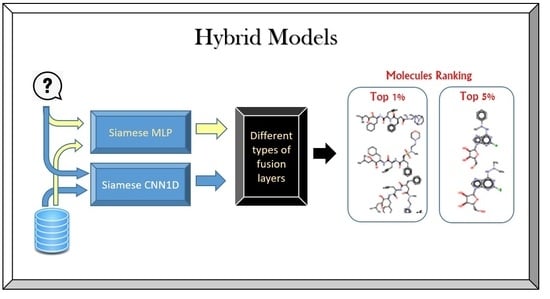

On the other hand, the Siamese network has been used for more complicated data samples, especially heterogeneous ones, and deep learning methods can be employed within a Siamese architecture, which deals efficiently with the vast volume of information stored in databases [23,24]. Altalib et al. employed several deep learning methods in a Siamese architecture, enhanced with two similarity measures and one fusion layer, to improve the retrieval effectiveness for structurally heterogeneous molecules. The first study employed four deep learning methods in the Siamese architecture: Gated Recurrent Unit (GRU), Long Short-Term Memory (LSTM), two-dimensional Convolutional Neural Network (CNN-2D), and one-dimensional Convolutional Neural Network (CNN-1D). The second study employed a Multilayer Perceptron (MLP) in the Siamese architecture [25,26]. The present study continues to improve the similarity retrieval effectiveness for structurally heterogeneous molecules by incorporating the two best previous models into one hybrid model. The rationale is that each method gives good results on some activity classes, so combining them in one hybrid model may improve retrieval recall. The main contributions of this study are as follows:

The Siamese architecture of the selected methods is enhanced with three similarity measures to further improve the measurement of similarity between molecules.

Several designs of a hybrid model are built from the two selected models. As mentioned above, each method gives good results on some activity classes, so combining them in one hybrid model may improve retrieval recall.

Compared to previous approaches, the proposed strategy yielded promising results in terms of overall performance, particularly when dealing with heterogeneous classes of molecules.
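The contributions above rest on a Siamese design in which one shared encoder embeds both molecules and several similarity measures compare the two embeddings. The NumPy sketch below illustrates only that structure: the single dense ReLU layer, the untrained random weights, the choice of cosine, Euclidean-based, and Manhattan-based similarities as the three measures, and the equal-weight averaging used as a fusion layer are all assumptions, not the trained SMLP or SCNN1D models.

```python
import numpy as np

rng = np.random.default_rng(0)
N_BITS, N_HIDDEN = 1024, 64

# Hypothetical, untrained shared encoder weights: BOTH branches of the
# Siamese network use these same parameters.
W = rng.normal(scale=0.1, size=(N_BITS, N_HIDDEN))
b = np.zeros(N_HIDDEN)


def encode(fp: np.ndarray) -> np.ndarray:
    """Shared branch: one dense layer with ReLU (placeholder for MLP/CNN1D)."""
    return np.maximum(fp @ W + b, 0.0)


def similarity_measures(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Compare two embeddings with three measures, each scaled so 1.0 means identical."""
    ex, ey = encode(x), encode(y)
    cosine = ex @ ey / (np.linalg.norm(ex) * np.linalg.norm(ey) + 1e-12)
    euclid = 1.0 / (1.0 + np.linalg.norm(ex - ey))
    manhat = 1.0 / (1.0 + np.abs(ex - ey).sum())
    return np.array([cosine, euclid, manhat])


def siamese_score(x: np.ndarray, y: np.ndarray, weights=(1 / 3, 1 / 3, 1 / 3)) -> float:
    """Fusion-layer stand-in (assumption): weighted average of the three measures."""
    return float(similarity_measures(x, y) @ np.asarray(weights))


# Hypothetical random binary fingerprints.
query = rng.integers(0, 2, size=N_BITS).astype(float)
candidate = rng.integers(0, 2, size=N_BITS).astype(float)
print(siamese_score(query, query), siamese_score(query, candidate))
```

Because the encoder weights are shared, the score is symmetric in its two inputs and an identical pair scores (almost exactly) 1.0. In a real model, the random weights would be replaced by weights learned from pairs of molecules; that training step is omitted here.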

4. Results and Discussion

The experimental outcomes of the ECFC-4 descriptor on the MDDR-DS1, MDDR-DS2, MDDR-DS3, and MUV data sets are provided in Tables 5 and 6, Tables 7 and 8, Tables 9 and 10, and Tables 11 and 12, respectively, for the 1% and 5% cut-offs. These tables record the results of the proposed hybrid Siamese similarity models alongside the benchmark Tanimoto similarity coefficient (TAN) and previous studies, namely Bayesian inference (BIN), quantum similarity search (SQB), and stack of deep belief networks (SDBN; MDDR datasets only), as well as two of our previously proposed Siamese architectures: the SMLP and SCNN1D similarity models. The hybrid Siamese similarity model with decision fusion using two similarity measures is called Hybrid-D-Max2; the hybrid model with decision fusion using three similarity measures is called Hybrid-D-Max3; the hybrid model with summation feature fusion is called Hybrid-F-Sum; and the hybrid model with max feature fusion is called Hybrid-F-Max. Each row in a table lists the recall values of one activity class at the given cut-off (top 1% or 5%), and the best recall rate in each row is shaded. The mean row gives the average over all activity classes, and the shaded-cells row gives, for each technique, the total number of activity classes in which it achieved the top value. The first column of each table lists the activity classes of the dataset. It is followed by four columns for the previous studies (TAN, BIN, SQB, and SDBN), then two columns for our two previously proposed Siamese models (SMLP and SCNN1D), and finally four columns for the hybrid models proposed in this study.
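The recall values and shaded-cell counts described above can be computed as in the following sketch. It assumes that recall is the percentage of a class's actives found in the top fraction of the ranked database, that the cut-off size is rounded to the nearest integer, and that every method tied for the best value in a row is shaded; the study's exact conventions (for instance, whether the reference structure is excluded from the active count) are not stated here, and the function names are hypothetical.

```python
def recall_at_cutoff(ranked_ids: list, active_ids: set, fraction: float) -> float:
    """Percentage of active molecules retrieved in the top `fraction` of a ranked list."""
    k = max(1, round(fraction * len(ranked_ids)))  # rounding convention is an assumption
    hits = sum(1 for mol in ranked_ids[:k] if mol in active_ids)
    return 100.0 * hits / len(active_ids)


def count_shaded_cells(table: dict) -> dict:
    """table maps activity class -> {method: recall}; count rows where each method is best.

    Assumption: when several methods tie for the best recall, all of them are counted.
    """
    methods = next(iter(table.values())).keys()
    counts = {m: 0 for m in methods}
    for row in table.values():
        best = max(row.values())
        for method, recall in row.items():
            if recall == best:
                counts[method] += 1
    return counts


# Hypothetical ranked database of 100 molecules containing four actives.
ranked = [f"mol{i}" for i in range(100)]
actives = {"mol0", "mol1", "mol50", "mol99"}
print(recall_at_cutoff(ranked, actives, 0.01), recall_at_cutoff(ranked, actives, 0.05))
# 25.0 50.0
```

The mean row of a table is then simply the average of these per-class recall values for each method.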

Figures 8 and 9, Figures 10 and 11, Figures 12 and 13, and Figures 14 and 15 compare the methods in terms of the average recall percentage of successfully retrieved compounds at the top 1% and 5% in MDDR-DS1, MDDR-DS2, MDDR-DS3, and MUV, respectively.

For MDDR-DS1 (structurally homogeneous and heterogeneous), the recall values at the 1% and 5% cut-offs recorded in Tables 5 and 6 show that the proposed hybrid Siamese similarity models were clearly superior to the benchmark studies (TAN, BIN, SQB, and SDBN) and to the two previously proposed methods selected for hybridization (Siamese SMLP and SCNN1D). Among the hybrid models, Hybrid-F-Max gives the best retrieval recall in Table 5 in terms of both the mean and the number of shaded cells, followed by Hybrid-F-Sum, Hybrid-D-Max2, and Hybrid-D-Max3 (by mean), then our previously proposed SCNN1D and SMLP, and finally SDBN, BIN, SQB, and TAN (by mean). The improvement percentages of the Hybrid-F-Max model in mean recall are 15.25, 21.09, 55.2, 48.28, 50.35, and 44.45 compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively. The improvement percentages of the Hybrid-F-Sum model are 9.23, 15.48, 52.03, 44.60, 46.82, and 40.50; those of the Hybrid-D-Max3 model are 0.41, 7.26, 47.37, 39.22, 41.65, and 34.72; and those of the Hybrid-D-Max2 model are 0.89, 7.71, 47.62, 39.52, 41.94, and 35.03, each compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively.

Figure 8 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 1% in MDDR-DS1. At the 5% cut-off, Hybrid-F-Max again gives the best retrieval recall in Table 6 in terms of both the mean and the number of shaded cells, followed by Hybrid-F-Sum, Hybrid-D-Max2, Hybrid-D-Max3, SCNN1D, and SMLP, and then SDBN, BIN, SQB, and TAN (by mean). Compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively, the improvement percentages are 15.50, 18.26, 49.49, 45.24, 48.42, and 40.69 for Hybrid-F-Max; 11.03, 13.94, 46.82, 42.35, 45.70, and 37.55 for Hybrid-F-Sum; 0.49, 3.74, 40.52, 35.51, 39.26, and 30.15 for Hybrid-D-Max3; and 0.68, 3.92, 40.63, 35.64, 39.38, and 30.28 for Hybrid-D-Max2. Finally, Figure 9 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 5% in MDDR-DS1.

Furthermore, for MDDR-DS2 (structurally homogeneous), the recall values recorded for the top 1% in Table 7 show that some of the proposed hybrid Siamese similarity models are superior to the benchmark TAN method and to previous studies. The hybrid Siamese model with max decision fusion and three similarity measures (Hybrid-D-Max3) gives the best retrieval recall in Table 7 in terms of the mean, followed by SCNN1D in terms of the mean and the number of shaded cells, and then by Hybrid-D-Max2, Hybrid-F-Max, Hybrid-F-Sum, SDBN, BIN, SQB, SMLP, and TAN. The improvement percentages of Hybrid-D-Max3 are 0.05, 7.42, 27.79, 6.34, 6.89, and 5.12 compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively. Compared to SMLP, TAN, BIN, SQB, and SDBN, respectively, the improvement percentages are 4.01, 25.13, 2.89, 3.46, and 1.62 for Hybrid-D-Max2; 3.74, 24.92, 2.62, 3.18, and 1.34 for Hybrid-F-Max; and 2.97, 24.32, 1.84, 2.41, and 0.56 for Hybrid-F-Sum.

Figure 10 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 1% in MDDR-DS2. However, the MDDR-DS2 recall values recorded for the 5% cut-off in Table 8 show that the BIN method gives the best retrieval recall in terms of both the mean and the number of shaded cells. The second best is SQB, followed by SDBN, Hybrid-D-Max3, SCNN1D, Hybrid-D-Max2, Hybrid-F-Max, Hybrid-F-Sum, SMLP, and finally TAN, in terms of the mean values. The improvement percentages of the Hybrid-D-Max3 model are 0.07, 5.36, and 16.24 compared to SCNN1D, SMLP, and TAN, respectively. Compared to SMLP and TAN, respectively, the improvement percentages are 3.09 and 4.22 for Hybrid-D-Max2, 2.51 and 13.71 for Hybrid-F-Max, and 1.83 and 13.11 for Hybrid-F-Sum.

Figure 11 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 5% in MDDR-DS2.

Moreover, for MDDR-DS3 (structurally heterogeneous), the recall values at the 1% and 5% cut-offs recorded in Tables 9 and 10 demonstrate that the proposed hybrid Siamese similarity models were superior to the benchmark TAN method and to the other previous studies (BIN, SQB, and SDBN), and that the two feature-fusion hybrids were also superior to the two previously proposed methods selected for hybridization (Siamese MLP and CNN1D). Among the hybrid models, the hybrid Siamese model with max feature fusion (Hybrid-F-Max) gives the best retrieval recall in Table 9 in terms of both the mean and the number of shaded cells, followed by the hybrid Siamese model with summation feature fusion (Hybrid-F-Sum) in terms of the mean, then SMLP, Hybrid-D-Max3, SCNN1D, and Hybrid-D-Max2, and finally SDBN, BIN, SQB, and TAN (by mean). Compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively, the improvement percentages are 17.03, 12.64, 70.72, 65.68, 68.44, and 50.80 for Hybrid-F-Max, and 14.62, 10.10, 69.87, 64.67, 67.52, and 49.37 for Hybrid-F-Sum. The improvement percentages of Hybrid-D-Max3 are 4.06, 66.15, 60.31, 63.51, and 43.11 compared to SCNN1D, TAN, BIN, SQB, and SDBN, respectively, and those of Hybrid-D-Max2 are 64.66, 58.57, 61.91, and 40.61 compared to TAN, BIN, SQB, and SDBN, respectively.

Figure 12 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 1% in MDDR-DS3. At the 5% cut-off, Hybrid-F-Max again gives the best retrieval recall in Table 10 in terms of both the mean and the number of shaded cells, followed by Hybrid-F-Sum (by mean), Hybrid-D-Max3, SMLP, SCNN1D, and Hybrid-D-Max2, and then SDBN, TAN, BIN, and SQB (by mean). Compared to SCNN1D, SMLP, TAN, BIN, SQB, and SDBN, respectively, the improvement percentages are 20.08, 16.35, 68.9, 69.00, 69.63, and 58.72 for Hybrid-F-Max; 14.62, 10.64, 66.78, 66.88, 67.56, and 55.91 for Hybrid-F-Sum; and 8.94, 4.69, 64.57, 64.67, 65.40, and 52.97 for Hybrid-D-Max3. The improvement percentages of Hybrid-D-Max2 are 60.67, 60.78, 61.59, and 47.79 compared to TAN, BIN, SQB, and SDBN, respectively. Finally, Figure 13 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 5% in MDDR-DS3.

Furthermore, for the MUV dataset, the recall values at the 1% cut-off recorded in Table 11 demonstrate that some of the proposed hybrid Siamese similarity models were superior to the benchmark TAN method, to the previous studies BIN and SQB, and to the previously proposed SCNN1D method, but not to the SMLP method. Among the hybrid models, the hybrid Siamese model with summation feature fusion (Hybrid-F-Sum) gives the best retrieval recall in Table 11 in terms of the mean, followed by SCNN1D, Hybrid-D-Max3, Hybrid-F-Max, BIN, Hybrid-D-Max2, SQB, and TAN. The improvement percentages of the Hybrid-F-Sum model are 3.05, 50.64, 19.97, and 55.95 compared to SCNN1D, SQB, BIN, and TAN, respectively. Compared to SQB, BIN, and TAN, respectively, the improvement percentages are 46.02, 12.48, and 51.83 for Hybrid-F-Max and 46.79, 13.74, and 52.52 for Hybrid-D-Max3. The improvement percentages of the Hybrid-D-Max2 model are 33.08 and 40.29 compared to SQB and TAN, respectively.

Figure 14 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 1% in MUV. At the 5% cut-off, Hybrid-F-Sum gives the best recall in Table 12 in terms of the mean, followed by Hybrid-F-Max (by mean), Hybrid-D-Max3, SCNN1D, and Hybrid-D-Max2, and then BIN, SQB, and TAN (by mean). Compared to SCNN1D, SQB, BIN, and TAN, respectively, the improvement percentages are 7.17, 34.07, 22.10, and 38.63 for Hybrid-F-Sum; 5.82, 33.11, 20.97, and 37.73 for Hybrid-F-Max; and 4.33, 32.05, 19.72, and 36.75 for Hybrid-D-Max3. The improvement percentages of the Hybrid-D-Max2 model are 17.61, 2.65, and 23.30 compared to SQB, BIN, and TAN, respectively.

Figure 15 compares the methods in terms of the average recall percentage of successfully retrieved compounds at the top 5% in MUV.

The second way to evaluate the proposed methods is a significance test; this study uses Kendall's W test (Kendall's coefficient of concordance). Tables 13, 14, 15, and 16 show the rankings of the hybrid Siamese similarity models (Hybrid-F-Max, Hybrid-F-Sum, Hybrid-D-Max3, and Hybrid-D-Max2) against the previous studies (TAN, BIN, SQB, Siamese MLP, and CNN1D), based on the Kendall W test results for MDDR-DS1, MDDR-DS2, MDDR-DS3, and MUV at the top 1% and top 5%.
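As a sketch of how Kendall's W can be computed for such a comparison, the code below treats each activity class as a judge that ranks the methods by recall (rank 1 = highest recall, ties averaged), then computes W = 12S / (m^2 (n^3 - n)), where m is the number of activity classes, n the number of methods, and S the sum of squared deviations of the methods' rank sums from their mean. The p-value uses the chi-square approximation m(n - 1)W with n - 1 degrees of freedom. Treating activity classes as judges, omitting the tie correction, and the helper names are assumptions; the chi-square tail is computed with the standard library so that no statistics package is needed.

```python
import math


def rank_descending(values: list) -> list:
    """Rank values so the largest gets rank 1; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        i = j + 1
    return ranks


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability for integer df.

    Uses the recurrence Q(s + 1, y) = Q(s, y) + y**s * exp(-y) / Gamma(s + 1)
    for the regularized upper incomplete gamma function, with y = x / 2.
    """
    y = x / 2.0
    if df % 2 == 0:
        q, s = math.exp(-y), 1.0  # Q(1, y)
    else:
        q, s = math.erfc(math.sqrt(y)), 0.5  # Q(1/2, y)
    while s < df / 2:
        q += y ** s * math.exp(-y) / math.gamma(s + 1)
        s += 1.0
    return q


def kendalls_w(recalls: list) -> tuple:
    """recalls[j][i] = recall of method i on activity class j. Returns (W, p, rank sums).

    Assumption: activity classes act as judges; no correction for ties is applied.
    """
    m, n = len(recalls), len(recalls[0])
    ranks = [rank_descending(row) for row in recalls]
    rank_sums = [sum(row[i] for row in ranks) for i in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((r - mean_sum) ** 2 for r in rank_sums)
    w = 12 * s / (m ** 2 * (n ** 3 - n))
    p = chi2_sf(m * (n - 1) * w, n - 1)
    return w, p, rank_sums


# Hypothetical recalls: three activity classes that all rank three methods the same way.
w, p, sums = kendalls_w([[40.0, 30.0, 10.0], [55.0, 20.0, 5.0], [70.0, 60.0, 50.0]])
print(w, sums)  # 1.0 [3.0, 6.0, 9.0]
```

With rank 1 assigned to the highest recall, the method with the smallest rank sum is the top-ranked one. In the toy example, perfect agreement gives W = 1 and p = exp(-3), which is just below 0.05.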

For all of the data sets, the Kendall W test at the top 1% gives significance (p) values below 0.05, which means that there is significant agreement among the activity classes in how they rank the methods, so the rankings involving the hybrid-enhanced Siamese similarity models are statistically significant in all cases at the top 1%. The overall ranking therefore indicates that the hybrid Siamese models with max feature fusion (Hybrid-F-Max) and summation feature fusion (Hybrid-F-Sum) are superior to the other methods and hold the top ranks in MDDR-DS1 (homogeneous and heterogeneous) and MDDR-DS3 (structurally heterogeneous). In MDDR-DS2 (structurally homogeneous), the hybrid Siamese model with max decision fusion and three similarity measures (Hybrid-D-Max3) holds the top rank. In the MUV dataset, Hybrid-F-Sum holds the top rank among all methods except SMLP.

The Kendall W results for the top 5% are similar: the significance (p) values are again below 0.05, so the rankings are statistically significant in all cases at the top 5%. The overall ranking again indicates that Hybrid-F-Max and Hybrid-F-Sum are superior to the other methods and hold the top ranks in MDDR-DS1 (homogeneous and heterogeneous) and MDDR-DS3 (structurally heterogeneous). In MDDR-DS2, BIN holds the top rank at the top 5%, and in the MUV dataset, the SMLP method holds the top rank, followed by Hybrid-F-Max.

Figures 16 and 17 show the rankings of the hybrid Siamese similarity models (Hybrid-D-Max2, Hybrid-D-Max3, Hybrid-F-Sum, and Hybrid-F-Max) against TAN, BIN, SQB, SDBN, Siamese MLP, and CNN1D, based on the Kendall W test results for MDDR-DS1, MDDR-DS2, MDDR-DS3, and MUV at the top 1% and 5%, respectively.

Lastly, according to the experimental results, the success of the proposed methods stems from three factors. (1) The Siamese network, which suits more complicated data samples, especially heterogeneous ones, and allows deep learning methods to be employed within the Siamese architecture, which deals efficiently with the vast volume of information stored in databases. (2) The enhancement of the Siamese architecture with several similarity measures: each similarity measure focuses on different properties, so using them together improves recall. (3) The incorporation of the two selected models into one hybrid model: each method gives good results on some activity classes, so combining them in one hybrid model improved retrieval recall. The two hybrid designs that use feature-level data fusion (Hybrid-F-Max and Hybrid-F-Sum) gave better results than the two designs that use decision-level data fusion (Hybrid-D-Max3 and Hybrid-D-Max2), because the former operate on the features themselves, combining them with the sum and max operations, which leads to improvements in recall. In contrast, the decision-fusion designs only select the maximum of the results produced by the two methods (SMLP and SCNN1D).
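The difference between the two fusion strategies described above can be sketched with NumPy. In the sketch, each of the two models (standing in for SMLP and SCNN1D) has already produced an embedding for the query and for a database molecule; feature fusion combines the two models' embeddings element-wise (sum or max) before a single similarity is computed, whereas decision fusion computes one similarity per model and keeps the maximum. The use of cosine similarity alone, the equal embedding sizes, and the function names are assumptions made for brevity; the study's decision-fusion models use two or three similarity measures.

```python
import numpy as np


def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(u @ v / denom) if denom else 0.0


def feature_fusion_score(model_a: tuple, model_b: tuple, rule: str = "max") -> float:
    """model_a, model_b: (query_embedding, database_embedding) pairs of equal length.

    Assumption: embeddings from both models share one size so they can be
    combined element-wise before a single similarity is computed.
    """
    if rule not in ("sum", "max"):
        raise ValueError("rule must be 'sum' or 'max'")
    combine = np.add if rule == "sum" else np.maximum
    fused_query = combine(model_a[0], model_b[0])
    fused_db = combine(model_a[1], model_b[1])
    return cosine(fused_query, fused_db)


def decision_fusion_score(model_a: tuple, model_b: tuple) -> float:
    """Score each model separately, then keep the larger similarity (max rule)."""
    return max(cosine(*model_a), cosine(*model_b))


# Hypothetical embeddings chosen to make the two strategies disagree.
model_a = (np.array([1.0, 0.0]), np.array([0.0, 1.0]))  # model A sees no similarity
model_b = (np.array([1.0, 1.0]), np.array([1.0, 1.0]))  # model B sees identical embeddings
print(decision_fusion_score(model_a, model_b))        # approximately 1.0
print(feature_fusion_score(model_a, model_b, "sum"))  # approximately 0.8
```

Decision fusion simply trusts whichever model is more confident, whereas feature fusion lets the two models' representations interact before the comparison, so its score can differ from either model's individual score.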

In addition, the proposed methods perform well on MDDR-DS3, MDDR-DS1, and MUV because these datasets contain heterogeneous molecule classes. On MDDR-DS2, the proposed methods did not consistently outperform the traditional methods (TAN, BIN, SQB, and SDBN), because this dataset contains only structurally homogeneous molecule classes; in particular, BIN, SQB, and SDBN outperformed all of the hybrid models at the 5% cut-off. However, some of the proposed methods achieved better results than the traditional methods at the top 1%.