1. Introduction

Robust orientation estimation is of great significance in robotics tasks such as motion control, autonomous navigation, and 3D mapping. Orientation can be obtained from onboard sensors such as wheel encoders, inertial measurement units (IMUs) [1,2,3,4], or cameras [5,6,7]. Among these solutions, visual methods [8,9,10,11] are effective, because cameras can conveniently capture informative images from which both orientation and position can be estimated. In the past decades, many simultaneous localization and mapping (SLAM) systems [12,13] and visual odometry (VO) methods [14,15] have been proposed. Payá et al. [5] proposed a global description method based on the Radon transform to estimate a robot's position and orientation with an onboard catadioptric vision sensor. These methods perform well in estimating orientation from captured images. However, they require the construction of local and global maps or loop detection to reduce drift error.

Most indoor environments contain many parallel and orthogonal lines and planes, a structure known as the Manhattan World (MW) [16]. Previous studies have exploited these structural regularities to estimate drift-free rotation without the complex techniques mentioned above, namely map reconstruction and loop closure [17,18,19]. 3D geometric structures can easily be computed with a camera that provides both depth information and a three-channel (red, green, and blue) color image, called an RGB-D camera. The RGB-D camera has therefore become a popular alternative to monocular and stereo cameras for rotation estimation, and estimation accuracy has been markedly improved by combining the MW assumption with an RGB-D camera [20,21,22,23]. However, a major disadvantage of these MW-based methods is that at least two lines or planes must be observed to track the MW axes, which is the minimal sample for 3 degrees of freedom (DoF). In practice, robots often encounter harsh environments in which no lines are present and only one plane is visible, so tracking the MW axes to estimate camera orientation fails. To address this issue, we select some frames as keyframes and exploit hybrid features (i.e., plane, line, and point) to compute the rotation matrix of each captured frame with respect to its reference keyframe, instead of directly aligning each frame with the global MW axes.

In this paper, we propose a robust and accurate approach for orientation estimation using RGB-D cameras. We detected and tracked the normal vectors of multiple planes from depth images, and we detected and matched the line and point features from color images. Then, by utilizing these hybrid features (i.e., plane, line, and point), we constructed a cost function to solve the rotation matrix of each captured frame. Meanwhile, we selected keyframes to reduce drift error and avoid directly aligning each frame with the global MW. Furthermore, we extracted the MW axes based on the normal vectors of orthogonal planes and the vanishing directions of parallel lines, and by aligning the current MW axes with the global MW axes, we refined the aforementioned rotation matrix of each keyframe and achieved drift-free orientation. Experiments showed that our proposed method produces lower drift error in a variety of indoor sequences compared to other state-of-the-art methods.

Our algorithm exploits hybrid features and adds a refinement step for keyframes, which can provide robust and accurate rotation estimation, even in harsh environments, as well as general indoor environments. The contributions of this work are as follows:

We exploited hybrid features (i.e., plane, line, and point), which provide reliable constraints for solving the rotation matrix in the majority of indoor environments.

We refined the keyframes' rotation matrices by aligning the currently extracted MW axes with the global MW axes, which achieves drift-free orientation estimation.

We evaluated our proposed approach on the ICL-NUIM and TUM RGB-D datasets, which showed robust and accurate performance.

2. Related Work

Pose estimation with VO or V-SLAM systems has been studied extensively for applications such as autonomous robots and augmented reality. Rotation estimation is usually considered a subproblem of pose estimation, which consists of rotational and translational components. Researchers have increasingly recognized that inaccurate rotation estimation is the main source of VO drift [19,20]. In the following discussion, we focus on rotation estimation methods that exploit structural regularities with RGB-D cameras. These methods use surface normals, vanishing points (vanishing directions), or mixed constraints to compute camera orientation.

Surface-normal vectors have been exploited to estimate camera orientation because their distribution on the unit sphere (also called the Gaussian sphere) is regular, concentrating around the normal vectors of the planes in the current environment, as shown in Figure 1. Straub et al. [21] introduced the Manhattan-Frame model in the surface-normal space and proposed a real-time maximum a posteriori (MAP) inference algorithm to estimate drift-free orientation. Zhou et al. [23] developed a mean-shift paradigm to extract and track planar modes in the surface-normal distribution on the unit sphere, and achieved drift-free behavior by registering the bundle of planar modes. Kim et al. [24] exploited and tracked orthogonal planar structures with an efficient SO(3)-constrained mean-shift algorithm to estimate drift-free rotation. These surface-normal-based methods provide stable and accurate rotation estimation as long as at least two orthogonal planes are observed.

The vanishing point (VP) of a line is obtained by intersecting the image plane with a ray that is parallel to the world line and passes through the camera center; it depends only on the direction of the line [25]. Two parallel lines determine a vanishing direction (VD), and a Euclidean 3D transformation affects a VD only through its rotation component; these geometric relationships are shown in Figure 2. For this reason, VPs and VDs have been widely used for rotation estimation. Bazin et al. [17] proposed a three-line random sample consensus (RANSAC) algorithm with the VP orthogonality constraint to estimate rotation. Elloumi et al. [26] proposed a real-time pipeline that estimates camera orientation from vanishing points for indoor navigation assistance on a smartphone. VP-based methods need a sufficient number of lines to estimate rotation, and their accuracy is strongly affected by line-segment noise.

Hybrid approaches use both surface normals obtained from the depth image and vanishing directions extracted from the RGB image to estimate rotation, which yields more robust performance. The method proposed by Kim et al. [22] exploited both line and plane primitives to handle the degenerate cases of surface-normal-based methods, achieving stable, accurate, and drift-free rotation estimation. Kim et al. [27] used only a single line and a single plane in RANSAC to estimate camera orientation; once the initial rotation estimate is found, it is refined by minimizing the average orthogonal distance from the endpoints of the lines parallel to the MW axes. Bazin et al. [17] introduced a related one-line RANSAC for situations where the horizon plane is known.

3. Proposed Method

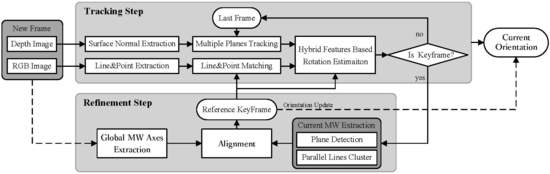

We propose a robust visual compass that exploits hybrid features (i.e., plane, line, and point) to estimate camera orientation from pairs of RGB and depth images. The proposed method has two main steps: (1) the rotation matrix of each frame with respect to its reference keyframe is estimated by tracking the hybrid features (tracking step); and (2) the initial rotation matrix of each keyframe is refined by aligning it with the global MW axes to achieve drift-free orientation (refinement step). An overview of the proposed method is shown in Figure 3.

3.1. Rotation Estimation with Hybrid Features

We simultaneously tracked multiple planes, lines, and points in the current environment and used these hybrid features to construct a cost function for estimating the current rotation matrix relative to the reference keyframe. This provides the camera rotation even in challenging scenes that lack rich texture or consistently visible lines.

3.1.1. Multiple-Plane Detection and Tracking

We detected multiple planes in the depth image with a fast plane extraction algorithm [28]. The algorithm first constructs an initial graph by uniformly dividing the point cloud of the depth image into a set of equally sized nodes in the image space. It then performs agglomerative hierarchical clustering on this graph to merge nodes belonging to the same plane. Finally, it refines the extracted planes using pixel-wise region growing. With this approach, we obtain planes $\pi_i = (\mathbf{n}_i, d_i)$, where $\mathbf{n}_i$ is the unit normal vector of the i-th plane, and $d_i$ is its distance to the origin of the camera coordinate system of the current frame.

We tracked the normal vector of each detected plane with a mean-shift algorithm [23] that operates on the density distribution of the initial nodes' normals on the Gaussian sphere, as shown in Figure 4. We computed the normal vector of each initial node by least-squares fitting, after preprocessing the depth image with a box filter to obtain stable normal vectors. Using the previous frame's tracked (or detected) normal vectors as initial values, we performed the mean-shift algorithm in the tangent plane of the Gaussian sphere to obtain the tracked results. Because parallel planes share the same normal vector, we grouped them into the same plane cluster for rotation estimation.
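The tangent-plane mean shift described above can be illustrated with a minimal NumPy sketch. It is not the implementation of [23]: the angular window, Gaussian bandwidth, and iteration limit are hypothetical parameters, and the input is assumed to be an array of unit node normals already computed from the box-filtered depth image. Each iteration maps the nearby normals onto the tangent plane at the current mode (logarithmic map), takes a kernel-weighted mean there, and maps the result back onto the sphere (exponential map).

```python
import numpy as np

def log_map(mode, normals):
    """Map unit vectors to tangent vectors at `mode` (vector length = geodesic angle)."""
    cos_t = np.clip(normals @ mode, -1.0, 1.0)
    theta = np.arccos(cos_t)
    perp = normals - np.outer(cos_t, mode)          # component orthogonal to the mode
    norm = np.linalg.norm(perp, axis=1)
    scale = np.where(norm > 1e-12, theta / np.maximum(norm, 1e-12), 0.0)
    return perp * scale[:, None]

def exp_map(mode, v):
    """Map a tangent vector at `mode` back onto the unit sphere."""
    theta = np.linalg.norm(v)
    if theta < 1e-12:
        return mode
    return np.cos(theta) * mode + np.sin(theta) * (v / theta)

def track_normal(normals, init, window_deg=20.0, bandwidth=0.1, max_iters=20):
    """Hypothetical tangent-plane mean shift: returns the density mode nearest `init`."""
    mode = np.asarray(init, float) / np.linalg.norm(init)
    for _ in range(max_iters):
        near = normals[normals @ mode > np.cos(np.deg2rad(window_deg))]
        if len(near) == 0:
            break                                    # no support: keep previous estimate
        t = log_map(mode, near)
        w = np.exp(-np.sum(t ** 2, axis=1) / (2.0 * bandwidth ** 2))   # Gaussian kernel
        shift = (w[:, None] * t).sum(axis=0) / w.sum()
        mode = exp_map(mode, shift)
        if np.linalg.norm(shift) < 1e-6:
            break
    return mode
```

Seeding `init` with the previous frame's normal keeps the search local, which is why only normals inside the angular window contribute to each update.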

If only plane primitives are available, the rotation matrix $\mathbf{R}$, which has three degrees of freedom, can be computed from at least two tracked normal vectors that are not parallel, because each normal vector imposes two independent constraints on $\mathbf{R}$:

$$\mathbf{n}_i^{k} = \mathbf{R}\,\mathbf{n}_i^{r}, \tag{1}$$

where $\mathbf{n}_i^{r}$ represents the i-th detected plane normal vector in the reference keyframe, and $\mathbf{n}_i^{k}$ represents the tracked result of the i-th plane in frame k.
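The counting argument above (two constraints per normal, three DoF) can be checked numerically. The following NumPy sketch is illustrative rather than the solver used in this work: it recovers the rotation from two non-parallel normal correspondences with an SVD-based least-squares (Kabsch) solution, and shows that a single normal leaves a rotation about that normal unconstrained. The helper names are hypothetical.

```python
import numpy as np

def rotation_from_vectors(src, dst, weights=None):
    """Least-squares R minimizing sum_i w_i ||dst_i - R src_i||^2 (Kabsch via SVD)."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    w = np.ones(len(src)) if weights is None else np.asarray(weights, float)
    H = (w[:, None] * dst).T @ src                   # sum_i w_i dst_i src_i^T
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])   # keep det(R) = +1
    return U @ D @ Vt

def rot_axis_angle(axis, angle):
    """Rodrigues' formula for a rotation of `angle` radians about `axis`."""
    k = np.asarray(axis, float) / np.linalg.norm(axis)
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * K @ K
```

With two non-parallel normals the correlation matrix has rank two, and the determinant correction resolves the remaining sign, so the rotation is unique; with one normal, any extra rotation about that normal fits equally well.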

3.1.2. Line and Point Detection and Matching

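We used the linear-time Line Segment Detector (LSD) [29] to extract 2D line segments from the color image, which yields the 2D line segment set $\mathcal{L} = \{\mathbf{l}_i\}$, where $\mathbf{l}_i = (\mathbf{a}_i, \mathbf{b}_i)$ is the i-th line segment with starting point $\mathbf{a}_i$ and ending point $\mathbf{b}_i$. The pixels belonging to line segment $\mathbf{l}_i$ are $\{\mathbf{x}_j\} \subset \Omega$, where $\Omega$ is the image domain. We then reconstructed the corresponding 3D point set $\{\mathbf{X}_j = \phi^{-1}(\mathbf{x}_j, z_j)\}$, where $z_j$ represents the depth value of pixel $\mathbf{x}_j$ in the color domain, and $\phi^{-1}$ is the inverse projection function of the pinhole camera model,

$$\phi^{-1}(\mathbf{x}, z) = \left(\frac{(u - c_x)\,z}{f_x},\ \frac{(v - c_y)\,z}{f_y},\ z\right)^{\top}, \quad \mathbf{x} = (u, v)^{\top},$$

with $f_x$ and $f_y$ being the focal lengths along the x and y axes, and $(c_x, c_y)$ being the principal point (the camera's center coordinates). Finally, RANSAC is used to fit 3D lines $L_i = (\mathbf{u}_i, \mathbf{p}_i)$, where $\mathbf{u}_i$ represents the i-th line's 3D direction, and $\mathbf{p}_i$ represents a point on this 3D line.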
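A minimal sketch of the back-projection and 3D line fitting steps, assuming a pinhole model with the intrinsics named above. The RANSAC threshold, iteration count, and the final principal-component refinement on the inliers are assumptions for illustration, not parameters reported in this work.

```python
import numpy as np

def back_project(u, v, z, fx, fy, cx, cy):
    """Pinhole inverse projection: pixel (u, v) with depth z -> 3D point in the camera frame."""
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1)

def ransac_line_3d(points, thresh=0.01, iters=200, seed=0):
    """Fit a 3D line (unit direction, point) with RANSAC, then refine it on the inliers."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(iters):
        i, j = rng.choice(len(points), 2, replace=False)
        d = points[j] - points[i]
        if np.linalg.norm(d) < 1e-9:
            continue                                  # degenerate sample
        d = d / np.linalg.norm(d)
        diff = points - points[i]
        dist = np.linalg.norm(diff - np.outer(diff @ d, d), axis=1)   # point-to-line distance
        inliers = dist < thresh
        if best is None or inliers.sum() > best.sum():
            best = inliers
    if best is None:
        raise ValueError("no valid RANSAC sample")
    P = points[best]
    centroid = P.mean(axis=0)
    _, _, Vt = np.linalg.svd(P - centroid)            # principal direction of the inliers
    return Vt[0], centroid, best
```

The returned direction is defined only up to sign, which matters later when directions are compared across frames.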

We matched the lines extracted from the current frame and the reference keyframe using the Line Band Descriptor (LBD) [30]. If only line primitives are available in the current environment, at least two pairs of matched, non-parallel lines are needed to estimate the rotation matrix:

$$\mathbf{u}_j^{k} = \mathbf{R}\,\mathbf{u}_j^{r}, \tag{2}$$

where $\mathbf{u}_j^{r}$ represents the j-th detected 3D line direction in the reference keyframe, and $\mathbf{u}_j^{k}$ represents the matching line direction in frame k.

In addition to the plane and line features, we extracted and matched Oriented FAST and Rotated BRIEF (ORB) features for point tracking, because these features are extremely fast to compute and match, and they are robust to camera auto-gain, auto-exposure, and illumination changes. We applied the epipolar constraint to filter the initial matches obtained from the ORB descriptors; the remaining matches provide reliable constraints for estimating the camera pose:

$$\left(\mathbf{K}^{-1}\tilde{\mathbf{x}}_j^{k}\right)^{\top} [\mathbf{t}]_{\times}\, \mathbf{R} \left(\mathbf{K}^{-1}\tilde{\mathbf{x}}_j^{r}\right) = 0, \tag{3}$$

where $\mathbf{x}_j^{r}$ represents the 2D position of the j-th detected ORB point feature in the reference keyframe, $\mathbf{x}_j^{k}$ represents the 2D position of the matching point in frame k, $\tilde{\cdot}$ denotes homogeneous coordinates, $\mathbf{K}$ is the camera intrinsic matrix, and $[\mathbf{t}]_{\times}$ is the skew-symmetric matrix of the translation $\mathbf{t}$. Note that the translation component $\mathbf{t}$ could be obtained by solving Equation (3), but we did not consider it, as our visual compass focuses on camera orientation.
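The epipolar filtering of ORB matches can be sketched as follows, assuming an essential-matrix hypothesis $\mathbf{E} = [\mathbf{t}]_{\times}\mathbf{R}$ is already available (in practice it would come from a robust estimator such as RANSAC). Matches are scored by the Sampson distance in normalized image coordinates; the threshold value and the function names are hypothetical.

```python
import numpy as np

def skew(t):
    """Skew-symmetric matrix [t]_x such that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0, -t[2], t[1]], [t[2], 0, -t[0]], [-t[1], t[0], 0]])

def epipolar_inliers(x_ref, x_cur, K, R, t, thresh=1e-3):
    """Boolean mask of matches consistent with x_cur^T [t]_x R x_ref = 0 (Sampson distance)."""
    Kinv = np.linalg.inv(K)
    a = np.c_[x_ref, np.ones(len(x_ref))] @ Kinv.T   # normalized homogeneous coords (ref)
    b = np.c_[x_cur, np.ones(len(x_cur))] @ Kinv.T   # normalized homogeneous coords (cur)
    E = skew(t) @ R
    Ea = a @ E.T                                     # rows: E a_i (epipolar lines in cur)
    Etb = b @ E                                      # rows: E^T b_i (epipolar lines in ref)
    num = np.sum(b * Ea, axis=1) ** 2
    den = Ea[:, 0] ** 2 + Ea[:, 1] ** 2 + Etb[:, 0] ** 2 + Etb[:, 1] ** 2
    return num / den < thresh ** 2
```

The Sampson distance is a first-order approximation of the squared reprojection distance to the epipolar lines, so the threshold is expressed in normalized (not pixel) units here.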

3.1.3. Robust Rotation Estimation

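We jointly used the tracked planes, lines, and points in the current environment to estimate the rotation matrix with respect to the reference keyframe. Rotation matrix $\mathbf{R}$ can be computed by minimizing the cost function in Equation (4), in which $N_i^{\pi}$ represents the number of pixels contained in the i-th tracked plane, $N_j^{l}$ represents the number of pixels contained in the j-th tracked line, and $\mathbf{P}_j^{r} = \phi^{-1}(\mathbf{x}_j^{r}, z_j^{r})$ and $\mathbf{P}_j^{k} = \phi^{-1}(\mathbf{x}_j^{k}, z_j^{k})$ are the 3D points back-projected from the matched ORB features with the inverse projection function $\phi^{-1}$.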

Cost function Equation (4) contains three parts, corresponding to the plane, line, and point constraints. In texture-less environments where few points are tracked, a stable and accurate rotation matrix can still be obtained by minimizing Equation (4) with the line and plane constraints jointly, while the point constraints keep the rotation estimate reliable in scenes with no consistent lines or with only one visible plane.
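The plane and line parts of the joint cost can be illustrated with a weighted least-squares sketch in which the pixel counts of the tracked planes and lines serve as weights. This assumes squared-residual terms of the form $\|\mathbf{n}_i^{k} - \mathbf{R}\mathbf{n}_i^{r}\|^2$ and $\|\mathbf{u}_j^{k} - \mathbf{R}\mathbf{u}_j^{r}\|^2$; the point term of Equation (4) is omitted because its exact form is not reproduced here. The sign alignment of matched line directions is an added assumption, since a 3D line direction is defined only up to sign. The function name is hypothetical.

```python
import numpy as np

def weighted_rotation(plane_ref, plane_cur, plane_px, line_ref, line_cur, line_px):
    """Sketch: minimize sum_i Np_i ||n_i^k - R n_i^r||^2 + sum_j Nl_j ||u_j^k - R u_j^r||^2."""
    line_ref = np.asarray(line_ref, float)
    line_cur = np.array(line_cur, float)              # copy, because signs may be flipped
    # Assumes a small inter-frame rotation, so true matches point roughly the same way.
    flip = np.sum(line_cur * line_ref, axis=1) < 0
    line_cur[flip] *= -1
    src = np.vstack([plane_ref, line_ref])
    dst = np.vstack([plane_cur, line_cur])
    w = np.concatenate([plane_px, line_px]).astype(float)   # pixel counts as weights
    H = (w[:, None] * dst).T @ src
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])  # proper rotation only
    return U @ D @ Vt
```

One plane plus one non-parallel line already constrains all three DoF, which is the situation the hybrid formulation is meant to cover.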

Keyframe Selection: The tracking step continuously provides the numbers of tracked planes, lines, and points in each frame. If only one plane is tracked, the number of normal vectors around this tracked normal on the Gaussian sphere is too low, and the number of tracked points is below a threshold, we rerun the fast plane extraction method and the Line Segment Detector (LSD) to redetect planes and lines in the current frame. If the number of redetected orthogonal planes and lines is greater than two, the frame is selected as a keyframe and undergoes the refinement step that follows.
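The keyframe-selection rule can be written as a small predicate. The threshold constants are hypothetical placeholders, since no values are given in this section, and the fields of the hypothetical `TrackingStats` record are illustrative.

```python
from dataclasses import dataclass

@dataclass
class TrackingStats:
    num_planes: int            # planes tracked in this frame
    normals_near_plane: int    # surface normals around the single tracked plane's mode
    num_points: int            # tracked ORB points

# Hypothetical thresholds: the text only says "too low" and "less than a threshold".
MIN_NORMAL_SUPPORT = 2000
MIN_POINTS = 50

def needs_redetection(s: TrackingStats) -> bool:
    """Trigger plane/line re-detection when tracking is weakly constrained."""
    return (s.num_planes == 1
            and s.normals_near_plane < MIN_NORMAL_SUPPORT
            and s.num_points < MIN_POINTS)

def is_keyframe(s: TrackingStats, redetected_orthogonal_features: int) -> bool:
    """Keyframe if re-detection was triggered and more than two orthogonal planes/lines were found."""
    return needs_redetection(s) and redetected_orthogonal_features > 2
```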

3.2. Drift-Free Orientation Estimation

The tracking step estimates the rotation matrix between the current frame and its reference keyframe, so the accuracy of the reference keyframe's orientation directly affects the accuracy of the current frame's rotation. To reduce drift error, we sought the global MW axes in the first frame and refined each keyframe's orientation by aligning its currently extracted MW axes with the global MW axes, which achieves drift-free rotation in MW scenes. Both the current and the global MW axes are sought from plane normal vectors and the vanishing directions of parallel lines. Plane normal vectors are obtained directly from the fast plane extraction method described above, and vanishing directions are extracted with the novel method proposed as follows.

3.2.1. Vanishing Direction Extraction

To extract accurate VDs, we need to cluster lines that are parallel in the real world. We used a simplified Expectation–Maximization (EM) clustering method to group image lines and compute their corresponding 3D direction vectors. The original EM algorithm iterates between the expectation and maximization steps. In our simplified algorithm, we skip the expectation step and instead roughly cluster the lines with the K-means method [31], using the Euclidean distance between the extracted lines' 3D directions, each treated as a 3D point. In the maximization step, the direction vectors are estimated by maximizing an objective function built from the posterior probabilities $p(\mathbf{v}_k \mid \mathbf{l}_i^{k})$, where $\mathbf{l}_i^{k}$ represents the i-th 2D line segment in the k-th initial cluster, $\mathbf{v}_k$ represents the VD of the k-th initial cluster to be optimized, and $p(\mathbf{v}_k \mid \mathbf{l}_i^{k})$ represents the posterior probability of the VD.
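The rough K-means grouping of line directions can be sketched in NumPy as follows. Two details are assumptions rather than statements from this work: directions are first canonicalized so that $\mathbf{u}$ and $-\mathbf{u}$ map to the same point (otherwise the Euclidean distance would split one parallel family into two clusters), and the centers are initialized by farthest-point sampling to make the result less sensitive to the random start.

```python
import numpy as np

def canonicalize(dirs):
    """Flip each direction so its largest-magnitude component is positive (u ~ -u)."""
    dirs = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)
    idx = np.argmax(np.abs(dirs), axis=1)
    sign = np.sign(dirs[np.arange(len(dirs)), idx])
    return dirs * sign[:, None]

def kmeans(X, k, iters=50, seed=0):
    """Plain K-means with Euclidean distance and farthest-point initialization."""
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[int(d2.argmax())])          # farthest point from chosen centers
    centers = np.array(centers)
    for _ in range(iters):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)                   # assign to nearest center
        new = np.array([X[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
                        for c in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers
```

The canonicalization is discontinuous for directions whose two largest components have nearly equal magnitude, which is one reason the clustering is only a rough initialization before the maximization step.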

Using Bayes' formula, the posterior probability of the VD is expressed as

$$p(\mathbf{v}_k \mid \mathbf{l}_i^{k}) = \frac{p(\mathbf{l}_i^{k} \mid \mathbf{v}_k)\, p(\mathbf{v}_k)}{p(\mathbf{l}_i^{k})},$$

where $p(\mathbf{l}_i^{k} \mid \mathbf{v}_k)$ represents the likelihood of the observed line given the VD, and the prior $p(\mathbf{v}_k)$ models our knowledge of the VD before the measurement is obtained. If nothing is known, $p(\mathbf{v}_k)$ is a uniform distribution with a constant value. Therefore, the VD can be obtained by maximizing the likelihood $p(\mathbf{l}_i^{k} \mid \mathbf{v}_k)$ (Equation (7)), which is defined in Equation (8) in terms of $\mathbf{K}$, the internal camera parameters. Equation (8) reflects the fact that the vanishing direction is perpendicular to the normal of the great circle determined by image line $\mathbf{l}_i^{k}$ and the camera's center of projection, as shown in Figure 2.

Maximizing the objective function in Equation (7) is equivalent to solving a weighted least-squares problem for each $\mathbf{v}_k$ (Equation (9)), where $\ell_i$ represents the length of the i-th line, and $\ell_{\max}^{k}$ represents the maximum line length in rough cluster k. The length coefficient is included because longer lines are more reliable. Solving Equation (9) yields the initial vanishing direction and the residual of each line. To obtain a more accurate vanishing direction, we discarded lines whose residual exceeded a threshold and solved the problem again; the remaining parallel lines were used to estimate the final vanishing directions. Figure 5 shows two results of parallel-line clustering obtained by the proposed method.
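One plausible form of this weighted least-squares step is sketched below. It assumes that each segment's residual is the dot product between the VD and the unit normal $\mathbf{g}_i$ of its great circle (the interpretation plane through the camera center and the segment), weighted by the segment length divided by the longest length in the cluster. Under that assumption, the minimizer with $\|\mathbf{v}_k\| = 1$ is the eigenvector of $\sum_i w_i \mathbf{g}_i \mathbf{g}_i^{\top}$ with the smallest eigenvalue. The symbol $\mathbf{g}_i$, the residual threshold, and the single re-solve are hypothetical choices.

```python
import numpy as np

def great_circle_normals(seg, K):
    """seg: (n, 4) pixel endpoints [ua, va, ub, vb] -> unit normals of the interpretation planes."""
    Kinv = np.linalg.inv(K)
    ones = np.ones(len(seg))
    a = np.c_[seg[:, 0:2], ones] @ Kinv.T     # back-projected ray of the first endpoint
    b = np.c_[seg[:, 2:4], ones] @ Kinv.T     # back-projected ray of the second endpoint
    g = np.cross(a, b)                        # normal of the plane through both rays
    return g / np.linalg.norm(g, axis=1, keepdims=True)

def vanishing_direction(seg, K, resid_thresh=0.05):
    """Length-weighted least-squares VD with one residual-based re-solve."""
    seg = np.asarray(seg, float)
    g = great_circle_normals(seg, K)
    length = np.linalg.norm(seg[:, 2:4] - seg[:, 0:2], axis=1)
    w = length / length.max()                 # longer segments are trusted more
    keep = np.ones(len(seg), dtype=bool)
    for _ in range(2):                        # initial solve, then re-solve on inliers
        M = (w[keep][:, None] * g[keep]).T @ g[keep]   # sum_i w_i g_i g_i^T
        _, vecs = np.linalg.eigh(M)           # eigenvalues in ascending order
        v = vecs[:, 0]                        # minimizes sum_i w_i (g_i . v)^2, ||v|| = 1
        keep = np.abs(g @ v) < resid_thresh
    return v, keep
```

The VD is recovered only up to sign, which is harmless because it is later compared with MW axes that share the same ambiguity.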

3.2.2. Global Manhattan World Seeking

The Manhattan World assumption holds in human-made indoor environments, and we sought the global MW axes from the plane normal vectors and the vanishing directions in the first frame. The MW axes can be expressed as the columns of a 3D rotation matrix $\mathbf{R}_g = [\mathbf{r}_1, \mathbf{r}_2, \mathbf{r}_3]$.

We first took the detected plane normal vectors and the vanishing directions of the parallel lines as candidate MW axes, which form a redundant set. Because most pixels in a frame typically belong to the planes and lines that determine the dominant MW axes, we selected the plane containing the largest number of pixels and set its normal vector as the first MW axis $\mathbf{r}_1$. The remaining two axes, $\mathbf{r}_2$ and $\mathbf{r}_3$, are determined from the orthogonality constraint with the first axis and the number of pixels belonging to the detected planes or parallel lines.

If three mutually orthogonal planes are detected in the first frame, we directly set their normal vectors as the MW axes. If there are only two orthogonal planes, the third axis is the vanishing direction of parallel lines that is orthogonal to both plane normal vectors. Similarly, if only one plane is detected in the first frame, we sought the remaining two MW axes from the vanishing directions of parallel lines that are orthogonal to the plane normal and to each other.
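The axis-selection logic for the first frame can be sketched as a greedy search over the candidate set. The angular tolerance that decides near-orthogonality is a hypothetical parameter, and the function returns `None` when no orthogonal triple is found, mirroring the case in which no global MW axes are available. The resulting axes are only approximately orthogonal.

```python
import numpy as np

def seek_mw_axes(plane_normals, plane_px, vds, vd_px, tol_deg=10.0):
    """Greedy MW-axis selection; returns a 3x3 matrix with columns r1, r2, r3, or None."""
    planes = np.asarray(plane_normals, float).reshape(-1, 3)
    cands = np.vstack([planes, np.asarray(vds, float).reshape(-1, 3)])
    cands /= np.linalg.norm(cands, axis=1, keepdims=True)
    support = np.concatenate([plane_px, vd_px]).astype(float)    # pixel counts
    tol = np.sin(np.deg2rad(tol_deg))        # |cos(angle)| below this => near-orthogonal
    picked = [cands[int(np.argmax(plane_px))]]                    # r1: plane with most pixels
    for i in np.argsort(-support):           # strongest remaining candidates first
        if len(picked) == 3:
            break
        if all(abs(cands[i] @ r) < tol for r in picked):          # skips parallel candidates
            picked.append(cands[i])
    return np.column_stack(picked) if len(picked) == 3 else None
```

Parallel planes (for example a floor normal and a ceiling normal pointing the opposite way) are skipped automatically, because they fail the orthogonality test against the axis they duplicate.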

The initial MW axes $\tilde{\mathbf{R}}_g = [\mathbf{r}_1, \mathbf{r}_2, \mathbf{r}_3]$ obtained in the previous step are not strictly orthogonal. We restored orthogonality by projecting them onto the closest matrix in SO(3). Each axis $\mathbf{r}_i$ is reweighted by a factor $\lambda_i$ determined by the number of pixels belonging to the planes or parallel lines that correspond to this axis. The final global MW axes $\mathbf{R}_g$ are obtained from the singular value decomposition (SVD) of the weighted matrix $\mathbf{M} = [\lambda_1\mathbf{r}_1, \lambda_2\mathbf{r}_2, \lambda_3\mathbf{r}_3]$, where factor $\lambda_i$ describes how certain the observation of a direction is.
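The weighted projection onto SO(3) can be sketched as follows: column i of the candidate matrix is scaled by its weight, and the closest rotation in the Frobenius norm is taken from the SVD. The determinant correction, which guarantees a proper rotation rather than a reflection, is a standard addition assumed here; the function name is hypothetical.

```python
import numpy as np

def orthogonalize_axes(axes, weights):
    """Closest rotation to M = [l1 r1, l2 r2, l3 r3], where the r_i are the columns of `axes`."""
    M = np.asarray(axes, float) * np.asarray(weights, float)[None, :]   # scale column i by l_i
    U, _, Vt = np.linalg.svd(M)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])           # enforce det = +1
    return U @ D @ Vt
```

Because heavily weighted columns dominate the correlation, axes supported by many pixels move less than weakly supported ones when orthogonality is restored.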

3.2.3. Keyframe Orientation Refinement

For each keyframe, we refined its rotation matrix by aligning the currently extracted MW axes with the global MW axes. We first used the fast plane extraction algorithm and the proposed vanishing direction extraction method to obtain the plane normal vectors and vanishing directions in the current keyframe. We then extracted the current MW axes $\mathbf{R}_c = [\mathbf{r}_1^{c}, \mathbf{r}_2^{c}, \mathbf{r}_3^{c}]$ with the same method used to extract the global MW axes. Finally, we determined the corresponding axis pairs from the Euclidean distance between the global MW axes and the current MW axes, with the two sets brought into a common frame by $\mathbf{R}_{kf}$, the rotation matrix obtained by the tracking step for the current keyframe. The refined rotation matrix $\mathbf{R}^{*}$ is computed by aligning the corresponding pairs $(\mathbf{r}_i^{g}, \mathbf{r}_i^{c})$, where $\mathbf{r}_i^{g}$ and $\mathbf{r}_i^{c}$ represent the i-th global MW axis and the corresponding currently extracted MW axis, respectively.
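A minimal sketch of the refinement step under an explicitly assumed convention: the tracked keyframe rotation $\mathbf{R}_{kf}$ maps vectors from the first (global) frame into the keyframe, so the global axes predicted in the keyframe are $\mathbf{R}_{kf}\mathbf{r}_i^{g}$. Each current axis is matched to the nearest predicted axis, treating opposite vectors as the same direction, and the refined rotation is the least-squares alignment of the matched pairs. The function name is hypothetical, and the sketch does not guard against two current axes matching the same global axis.

```python
import numpy as np

def refine_keyframe(Rg, Rc, R_kf):
    """Rg, Rc: columns are global / current MW axes. Returns argmin_R sum ||c_i - R g_i||^2."""
    pred = R_kf @ Rg                               # global axes predicted in the keyframe
    src, dst = [], []
    for j in range(3):
        c = Rc[:, j]
        d_pos = [np.linalg.norm(c - pred[:, i]) for i in range(3)]
        d_neg = [np.linalg.norm(c + pred[:, i]) for i in range(3)]
        i = int(np.argmin(np.minimum(d_pos, d_neg)))   # nearest axis, sign-agnostic
        sign = 1.0 if d_pos[i] <= d_neg[i] else -1.0   # flip c if it points the other way
        src.append(Rg[:, i])
        dst.append(sign * c)
    H = np.array(dst).T @ np.array(src)            # sum_i c_i g_i^T
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt
```

The tracked rotation is used only to establish correspondences; the refined rotation itself depends solely on the extracted axes, which is what removes the accumulated drift.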

4. Results

We evaluated the proposed approach on a synthetic dataset (ICL-NUIM [32]) and a real-world dataset (TUM RGB-D [33]), and in a pose-estimation application. All experiments were run on a desktop computer with an Intel Core i7 CPU and 16 GB of memory running Ubuntu 16.04.

The ICL-NUIM dataset is a collection of handheld RGB-D camera sequences in synthetically generated environments. The sequences were captured in a living room and an office room with perfect ground-truth poses, so the accuracy of a visual odometry or SLAM system can be fully quantified. Depth and RGB noise models were applied to the rendered images to simulate realistic sensor noise. Some sequences are captured in environments with low texture and only one visible plane, which makes it hard to estimate the rotation for every image in those sequences.

The TUM RGB-D dataset is a widely used benchmark for evaluating the accuracy of visual odometry and visual SLAM systems. It contains various indoor sequences captured with a Kinect RGB-D sensor. The sequences were recorded in real environments at a frame rate of 30 Hz, and their ground-truth trajectories were obtained from a high-accuracy motion-capture system. The TUM dataset is more challenging than the ICL-NUIM dataset because it contains blurred images and inaccurately aligned RGB-depth image pairs, which make rotation estimation difficult.

We compared our approach with two state-of-the-art MW-based methods, proposed by Zhou et al. [23] and Kim et al. [27], namely orthogonal-plane-based rotation estimation (OPRE) and 1P1L. OPRE estimates absolute, drift-free rotation by exploiting orthogonal planes in depth images. 1P1L estimates 3-DoF drift-free rotational motion from only a single line and a single plane in the Manhattan World. We used the average absolute rotation error (ARE), in degrees, over the entire sequence as the performance metric:

$$\mathrm{ARE} = \frac{1}{N}\sum_{i=1}^{N} \arccos\!\left(\frac{\operatorname{tr}\!\left(\mathbf{R}_i^{\top}\hat{\mathbf{R}}_i\right) - 1}{2}\right),$$

where $\operatorname{tr}(\cdot)$ denotes the trace of a matrix, $\hat{\mathbf{R}}_i$ and $\mathbf{R}_i$ represent the estimated and ground-truth rotation matrices of the i-th frame, respectively, and N is the number of frames in the tested sequence.
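The ARE metric can be computed directly from the rotation matrices. The sketch below assumes that the estimated and ground-truth rotations are already expressed in a common reference frame (aligning the trajectories is outside its scope), and clips the cosine to guard against rounding slightly outside [-1, 1]. The function name is hypothetical.

```python
import numpy as np

def average_rotation_error_deg(R_est, R_gt):
    """Mean geodesic angle, in degrees, between estimated and ground-truth rotations."""
    errs = []
    for Re, Rg in zip(R_est, R_gt):
        c = (np.trace(Rg.T @ Re) - 1.0) / 2.0        # cosine of the relative rotation angle
        errs.append(np.degrees(np.arccos(np.clip(c, -1.0, 1.0))))
    return float(np.mean(errs))
```

For two frames rotated by 1 and 3 degrees about a fixed axis relative to ground truth, the function returns an average of 2 degrees.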

4.1. Evaluation on Synthetic Dataset

We first tested the proposed algorithm on the ICL-NUIM dataset and measured the average ARE in degrees for each sequence; the results are shown in Table 1, where the smallest average ARE values are in bold. The proposed method is more accurate than the other two methods. For example, in ‘Office Room 0’, the average ARE of our method is 0.16 degrees, while those of 1P1L and OPRE are 0.37 and 0.18 degrees, respectively. Our method outperformed the others on all sequences of the ICL-NUIM benchmark. The main reason is that we jointly exploited plane, line, and point features, which allows camera orientation to be estimated even when the camera moves through scenes with no consistent lines or with only one visible plane, as illustrated in Figure 6. In ‘Living Room 0’, OPRE failed to estimate the rotation for the entire sequence because only one plane is visible in some frames; we marked this result as ‘×’ in Table 1. The last column of Table 1 shows the number of frames in each sequence.

The MW assumption holds well for the ICL-NUIM benchmark, and we used the refinement step to achieve drift-free rotation. To show the effect of the refinement step, we measured the ARE in degrees for all sequences with our method without the refinement step; these values correspond to the ‘No Refinement’ column in Table 1. We also recorded the ARE of each frame in the ‘Living Room 0’ sequence: the final rotation drift with and without refinement was 0.34 and 1.43 degrees, respectively, as shown in Figure 7. This demonstrates that the refinement step effectively reduces rotation drift.

4.2. Evaluation on Real-World Data

We compared the proposed algorithm with the other two methods on seven real-world TUM RGB-D sequences that contain structural regularities. The results are shown in Table 2, which lists the average ARE of rotation estimation with the smallest values in bold. Our method performed better in low-texture environments such as ‘fr3_struc_notex’ and ‘fr3_cabinet’ because it uses hybrid features to estimate orientation, as shown in Figure 8. Our method also provided more accurate rotation estimates in environments with imperfect MW structure, such as ‘fr3_nostruc_tex’ and ‘fr3_nostruc_notex’, whereas OPRE fails because it requires at least two orthogonal planes to estimate camera orientation.

The refinement results on the ‘fr3_cabinet’ sequence are shown in Figure 9. The final rotation drift with and without refinement was 1.30 and 1.62 degrees, respectively, which shows that the refinement step reduces drift error. The average ARE values of our algorithm with and without the refinement step were identical in sequences ‘fr3_nostruc_tex’ and ‘fr3_nostruc_notex’, because no valid global MW axes could be extracted from the first frame, so the refinement step was never performed.

4.3. Application to Pose Estimation

To further verify the practicality of the proposed visual compass, we applied it to pose estimation and recorded the estimated trajectories. A three-dimensional pose has six degrees of freedom (DoF): 3-DoF rotation and 3-DoF translation. Because the proposed visual compass provides accurate rotation estimates, the key to pose estimation is to estimate the translation component. We first detected and tracked ORB feature points to obtain point correspondences. Then, we recovered the 3-DoF translational motion between the images by solving

$$\mathbf{t}^{*} = \arg\min_{\mathbf{t}} \sum_{j} \left\| \mathbf{P}_j^{k} - \left(\mathbf{R}\,\mathbf{P}_j^{r} + \mathbf{t}\right) \right\|^2,$$

where $\mathbf{R}$ represents the rotation matrix between the reference image and the current image, obtained by the proposed visual compass, and the three-dimensional points $\mathbf{P}_j^{r}$ and $\mathbf{P}_j^{k}$ are as described for Equation (4).
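Assuming the translation cost is the sum of squared 3D residuals $\|\mathbf{P}_j^{k} - (\mathbf{R}\mathbf{P}_j^{r} + \mathbf{t})\|^2$ with the rotation held fixed, the minimizer has a closed form: the mean of $\mathbf{P}_j^{k} - \mathbf{R}\mathbf{P}_j^{r}$ over the matches. The sketch below omits the outlier handling that real ORB matches would require, and its function name is hypothetical.

```python
import numpy as np

def recover_translation(R, P_ref, P_cur):
    """With R fixed, argmin_t sum_j ||P_cur_j - (R P_ref_j + t)||^2 is the mean residual."""
    P_ref = np.asarray(P_ref, float)
    P_cur = np.asarray(P_cur, float)
    return (P_cur - P_ref @ R.T).mean(axis=0)    # rows of P_ref @ R.T are R P_ref_j
```

Fixing the rotation reduces pose estimation to a linear problem in three unknowns, which is why an accurate visual compass simplifies the translation step.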

We tested pose estimation on four sequences: ‘Living Room 2’, ‘Office Room 3’, ‘fr3_struc_tex’, and ‘fr3_nostruc_tex’. These sequences provide the ground-truth pose of each image; we measured the root mean squared error (RMSE) of the absolute translational error (ATE) and compared it with state-of-the-art approaches, namely ORB_SLAM [12], dense visual odometry (DVO) [15], and line-plane-based visual odometry (LPVO) [22]. The ATE RMSE comparison is shown in Table 3, where the smallest error for each sequence is in bold. The estimated trajectories are shown in Figure 10.