1. Introduction

The purpose of binary code similarity detection is to determine how similar two code fragments are using only binary executable files. Binary code similarity detection has a wide range of applications, such as bug searching [1,2], clone detection [3,4,5], malware clustering [6,7,8], malware genealogy tracking [9], patch generation [10,11] and software plagiarism detection [12,13,14]. However, determining the similarity of binary code is challenging because much of the program semantics, such as function names, variable names and data structures, is lost during compilation. In addition, the same source code can be compiled at different optimization levels and for different architectures (e.g., ARM, MIPS, x86-64), and the generated binary code can change significantly when a different compiler, different optimization options or a different CPU architecture is used. Despite these differences between executables generated from the same source code, a vulnerability found in a binary on one architecture may also exist on other architectures.

In fact, code reuse is widespread in industry. It may arise from programmers' inexperience or for other reasons, such as downloading source code directly from GitHub and other open-source platforms and modifying it. In this way, vulnerabilities in the original code persist in the reused code. When a vulnerability is discovered, security staff need to determine whether it exists in historical versions of the program and in other code. They need a tool that quickly determines code similarity in binary files to reduce the manual review effort [15]. Researchers began working on cross-architecture binary code similarity only recently.

Work on binary code similarity detection dates back to 1999. EXEDIFF [16] generates patches for DEC Alpha by comparing instruction sequences, and BMAT [17] propagates profile information from an older build to a newer build, greatly reducing the need for re-profiling. Over the next decade, many binary code similarity methods were proposed; these efforts progressed from syntactic to semantic similarity analysis and extended the applications to malware analysis. Among them are the heuristic method for comparing call graphs first proposed by Flake [18] in 2004 and his later work introducing the Small Primes Product (SPP) hash to identify similar basic blocks, both of which underlie the BinDiff plugin for IDA [19]. In 2005, Kruegel et al. [20] proposed a graph coloring technique for detecting malware variants. They grouped instructions with similar functions into 14 semantic types and used these types to color the interprocedural control flow graph; the method is robust to some obfuscation techniques. In 2008, Gao et al. [21] proposed BinHunt to identify the differences between two versions of the same program. The technique uses symbolic execution and a constraint solver to analyze the similarity of two basic blocks. In the last decade, a large number of binary code analysis methods have emerged, with work focusing on binary code search [1,2,3,22,23,24,25,26,27], most of which was proposed to search for vulnerabilities. Since 2015, work has focused on cross-architecture similarity analysis. Feng et al. [2] represented each binary function as a control flow graph with feature vectors, called the attributed control flow graph (ACFG), which is built from the raw features of the basic blocks in the binary code. They used these graphs in a graph matching algorithm to implement Genius, a cross-architecture bug search engine.

With the resurgence of neural networks, neural-network-based methods have flourished in binary code similarity detection since 2016. For example, in 2016, Lageman et al. [28] trained a neural network to determine whether two binaries were compiled from the same source code. In 2017, Xu et al. [29] proposed Gemini, a neural network model that takes ACFGs as input features and detects the similarity between two functions by calculating the distance between their embedding vectors. In 2020, Yu et al. [30] from Tencent's Keen Lab proposed OrderMatter, a similarity analysis model based on graph neural networks. This work directed research toward the overall structure of the CFG and the order of its nodes, providing new ideas for future research. αDiff [31] in 2018, Asm2Vec [3] in 2019 and DeepBinDiff [32] in 2020 have also made significant contributions to neural-network-based binary code similarity detection [30,33,34,35,36].

Despite the great achievements of current neural-network-based work, some important issues have not yet been considered. Most previous work uses pre-trained models to extract basic block features and then aggregates them with some kind of neural network. In this setting, during feature extraction the pre-trained semantic model cannot learn the global position of a basic block or the information in other blocks of the graph, and during feature aggregation the neural network sees only the already-embedded basic blocks. In addition, the same register may be operated on in multiple basic blocks of a control flow graph. When blocks are embedded before aggregation, it is difficult for a sequentially structured model to learn such connections, even when pre-training includes tasks such as neighbor prediction, because corpus pre-training is severed from the embedding of the graph as a whole. Running the two stages one after the other therefore loses a large amount of global information and information about block-to-block connections.

To solve the above problems, we propose Codeformer, an iterative GNN-nested Transformer neural network. Codeformer extracts the basic block features and the structural features of the CFG iteratively: with each iteration, a node obtains information about more distant neighbors, so the embedding of each basic block, and in turn the embedding of the whole function, becomes more accurate.

The contributions of this paper are as follows:

We propose a generic framework called Codeformer to learn the graph embeddings of CFGs, which learns the node information of basic blocks in functions as well as global information.

We propose, for the first time, an iterative network model for code similarity detection. Our experiments show that iterative models learn the features of binary code in greater depth.

We analyze how well Codeformer learns the structural features of function control flow graphs. Experiments show that updating graph nodes in each iteration enables a basic block to learn the structural features of nearby basic blocks and thus better represent the information of the whole graph.

We have evaluated the model using several datasets and the results show that our proposed model has better performance than previous methods and achieves state-of-the-art results.

2. Background

2.1. Graph Neural Network

Graph neural network (GNN) is a general term for algorithms that use neural networks to learn from graph-structured data, extracting and discovering features and patterns in it to serve graph learning tasks such as clustering, classification, prediction, segmentation and generation.

In binary code similarity detection, the CFG and its derivatives (ICFG, ACFG) can be extracted from binary code by disassembly. It has been shown that CFGs extracted from programs compiled from the same source code at different optimization levels have strongly similar graph structures. CFGs obtained from programs compiled for different architectures are also similar to some degree, and these similarities are sufficient for a neural network to learn. Therefore, we can study CFGs with graph neural networks to analyze the similarity of functions and programs across optimization levels and across architectures.

2.2. Attributed Control Flow Graph

Feng et al. [2] proposed a graph-embedding workflow called Genius. Given a binary function, Genius extracts its raw features in the form of an attributed control flow graph (ACFG). The ACFG is defined as a directed graph $g = \langle V, E, \phi \rangle$, where $V$ is the set of basic blocks, $E \subseteq V \times V$ is the set of edges between basic blocks and $\phi: V \rightarrow \Sigma$ is the labeling function, which maps a basic block in $V$ to a set of attributes in $\Sigma$.

The attribute set Σ contains two types of features: statistical features and structural features. The statistical features describe local properties within a basic block, and the structural features capture the position of the basic block in the CFG, as shown in Table 1.
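To make the triple ⟨V, E, φ⟩ concrete, the following is a minimal sketch of an ACFG container, assuming blocks are lists of instruction strings. The three attributes it computes (instruction count, call count and an offspring count as an example of a structural feature) are a hypothetical, illustrative subset chosen for this sketch; they are not the full attribute set of Table 1, and the call-mnemonic list is likewise an assumption.

```python
from dataclasses import dataclass, field

# Hypothetical mnemonic set used only to count call instructions in this sketch.
CALL_MNEMONICS = {"call", "bl", "blx", "jal"}


@dataclass
class ACFG:
    """Attributed CFG g = <V, E, phi>: blocks V, directed edges E, labeling function phi."""
    blocks: dict                              # block id -> list of instruction strings (V)
    edges: set = field(default_factory=set)   # (src, dst) pairs, a subset of V x V (E)

    def successors(self, v):
        return [dst for src, dst in self.edges if src == v]

    def offspring(self, v):
        """Number of distinct blocks reachable from v, excluding v itself."""
        seen, stack = set(), list(self.successors(v))
        while stack:
            w = stack.pop()
            if w not in seen and w != v:
                seen.add(w)
                stack.extend(self.successors(w))
        return len(seen)

    def phi(self, v):
        """Labeling function: map block v to a small, illustrative attribute dictionary."""
        insns = self.blocks[v]
        n_calls = sum(1 for insn in insns if insn.split()[0] in CALL_MNEMONICS)
        return {"n_instructions": len(insns),  # statistical (local) feature
                "n_calls": n_calls,            # statistical (local) feature
                "n_offspring": self.offspring(v)}  # structural (positional) feature


g = ACFG(blocks={0: ["push rbp", "call foo", "test eax, eax", "je 2"],
                 1: ["mov eax, 1"],
                 2: ["ret"]},
         edges={(0, 1), (0, 2), (1, 2)})
print(g.phi(0))
```

Keeping φ as a method rather than a precomputed table mirrors the definition of the labeling function; a real pipeline would precompute the vectors once per block.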

2.3. Transformer

In 2017, Vaswani et al. [37] at Google proposed the Transformer, a network based on the attention mechanism, for sequence modeling problems such as machine translation. The model can be trained in a highly parallel way, which improves both training speed and performance. The structure of the Transformer is not complicated: the model presented by Vaswani et al. [37] has a seq2seq encoder-decoder structure, as shown in Figure 1. The encoder consists of multiple layers, each with two sublayers. The first sublayer is a multi-head self-attention mechanism and the second is a simple position-wise fully connected feed-forward network. The decoder also consists of multiple layers. In addition to the two sublayers of each encoder layer, each decoder layer inserts a third sublayer that performs multi-head attention over the output of the encoder stack.

2.3.1. Attention

The attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, key, value and output are all vectors. The output is a weighted sum of values, where the weight assigned to each value is calculated by the compatibility function of the query with the corresponding key.
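The mapping described above can be sketched in plain Python for a single query. The compatibility function here is the scaled dot product q·k/√d_k used in [37]; the vectors in the usage line are made-up toy values.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Map a query and key-value pairs to a weighted sum of the values.

    Each weight comes from the compatibility of the query with the matching key,
    here the scaled dot product q.k / sqrt(d_k), normalised with a softmax.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return output, weights


out, w = attention([1.0, 0.0],
                   keys=[[1.0, 0.0], [0.0, 1.0]],
                   values=[[10.0, 0.0], [0.0, 10.0]])
```

The query matches the first key more closely, so the first value receives the larger weight and dominates the output.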

2.3.2. Multi-Head Attention

Multi-head attention runs several independent attention computations in parallel on the same input; this works like an ensemble and can help prevent overfitting. As described in [37], every head receives the same queries, keys and values (Q, K and V), but each head applies its own learned linear projections to them before computing attention. Each head is therefore responsible for a different representation subspace of the final output, allowing the model to learn more information.
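The sketch below illustrates multi-head self-attention in plain Python: every head sees the same input sequence, projects it with its own W_q, W_k and W_v, attends within its lower-dimensional subspace, and the concatenated head outputs pass through an output projection W_o. The weights are random placeholders standing in for learned parameters, and the toy input is an assumption for demonstration.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def attend(q, ks, vs):
    # Scaled dot-product attention for a single query.
    weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in ks])
    return [sum(w * v[j] for w, v in zip(weights, vs)) for j in range(len(vs[0]))]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(xs, n_heads, rng):
    """Self-attention over a sequence xs of d_model-dimensional vectors.

    Every head gets the same inputs but applies its own projections W_q, W_k, W_v
    (random placeholders here; learned in a real model).
    """
    d_model = len(xs[0])
    assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
    d_head = d_model // n_heads
    heads = []
    for _ in range(n_heads):
        Wq, Wk, Wv = (random_matrix(d_head, d_model, rng) for _ in range(3))
        qs = [matvec(Wq, x) for x in xs]
        ks = [matvec(Wk, x) for x in xs]
        vs = [matvec(Wv, x) for x in xs]
        heads.append([attend(q, ks, vs) for q in qs])
    Wo = random_matrix(d_model, d_model, rng)
    # Concatenate the head outputs at each position, then apply the output projection W_o.
    return [matvec(Wo, [val for head in heads for val in head[i]]) for i in range(len(xs))]


rng = random.Random(0)
xs = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.9], [0.4, 0.4, 0.4, 0.4]]
out = multi_head_self_attention(xs, n_heads=2, rng=rng)
```

Splitting d_model across heads keeps the total cost close to that of a single full-width head while letting each head specialise.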

2.4. Graphformers

In 2021, Yang et al. [38] proposed graphformers, a GNN-nested Transformer model. Its target is textual graph data, in which each node x of the graph G(x) is a sentence. The model combines a GNN with text encoding and has contributed to neighborhood prediction. Because the network is nested, text encoding and whole-graph information propagation can be performed iteratively; compared with a cascaded structure, information is therefore exchanged across nodes more thoroughly, yielding better representations.

However, the graphs handled by the model consist of one central node and five neighbor nodes, which does not match the complexity of real graphs; the model can only be applied to simple star graphs, whereas real graphs, directed or undirected, are considerably more complex. Put simply, if graphformers were applied to binary similarity detection, it could only embed basic blocks and could not handle the entire control flow graph. Moreover, the raw features of an ACFG, extracted by feature engineering, capture only basic information about a function; because of their low dimensionality, they do not represent the function adequately, and a model built on them is not effective.

3. Codeformer

As shown in previous work [39], a normalized analysis of binary assembly code shows that the instruction distribution follows Zipf's law, as natural language does. Zipf's law describes word frequency distributions: the number of occurrences of a word in a natural-language corpus is inversely proportional to its rank in the frequency table. Therefore, we can embed assembly instructions with a Transformer as we do natural language.
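A quick way to inspect this property on a token stream is a rank-frequency table: under an ideal Zipf distribution, rank × count is roughly constant. The sketch below is a minimal illustration; the token list in the usage line is made up and is not data from [39].

```python
from collections import Counter


def rank_frequency(tokens):
    """Return (rank, token, count) triples sorted by descending count."""
    counts = Counter(tokens)
    return [(rank, tok, c) for rank, (tok, c) in enumerate(counts.most_common(), start=1)]


def zipf_products(tokens):
    """Under an ideal Zipf distribution, rank * count is roughly constant."""
    return [rank * c for rank, _, c in rank_frequency(tokens)]


toy = ["mov"] * 6 + ["push"] * 3 + ["call"] * 2
print(rank_frequency(toy))
print(zipf_products(toy))
```

On real disassembly the products drift rather than staying exactly equal; the usual check is whether log(count) against log(rank) is close to a straight line with slope near -1.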

3.1. Workflow

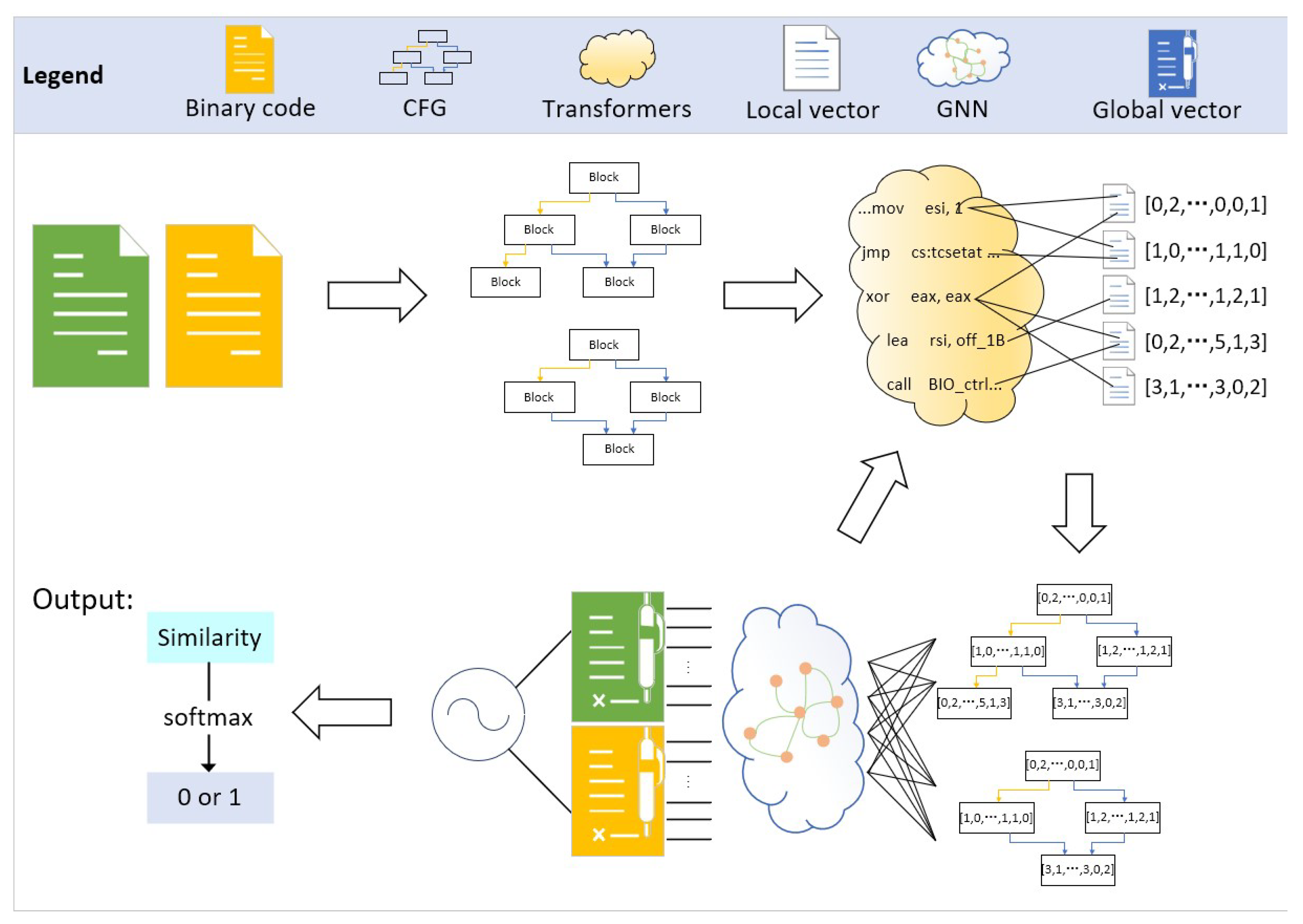

In this section, we describe the overall workflow of the model for detecting binary code similarity. Our work has two components: a CFG extraction script, and a neural network based on a Transformer and a GNN that computes binary code similarity. The CFG extraction script uses IDA to extract the CFGs of all functions. The neural network processes the control flow graphs of binary files, each of which represents the disassembly of one function. Each node in a graph holds the assembly instructions of one basic block, and all nodes and edges within the function form the graph G. The model alternates instruction embedding within blocks and graph embedding within functions to learn the embedding features of G. We expect the resulting embedding to capture both intra-block instruction information and inter-block relationships, so that whether two functions are similar can be predicted from the similarity of their graph embeddings.

The input is a binary file processed by the IDA script to obtain control flow graphs, and the study operates at basic block granularity. The overall workflow is shown in Figure 2. The Transformer treats all instructions within a basic block as a paragraph and embeds this paragraph into a vector. After all basic blocks of a pair of extracted control flow graphs have been embedded as feature vectors, the GNN updates these basic block features from a whole-graph view. This nested learning is performed several times. The aggregation function of the GNN then aggregates all nodes of the graph to obtain the graph's embedding vector. Finally, the similarity of the function pair is calculated with a similarity metric. We summarize the workflow of Codeformer in Algorithm 1.

| Algorithm 1 Calculation of CFG feature vectors. |
| Require: Control flow graph of the function G = 〈V,E〉 |
| Ensure: Hidden state of the control flow graph h_G |
| 1: for t = 1, …, T do |
| 2: for v ∈ V do |
| 3: for s ∈ v do |
| 4: h_s = TRM(s) |
| 5: end for |
| 6: h_v = MHA({h_s : s ∈ v}) |
| 7: end for |
| 8: h_V = BMP(h_V) |
| 9: end for |
| 10: h_G = BMA(h_V) |
| 11: return h_G |

3.2. Calculation of CFG Feature Vectors and Similarity

Algorithm 1 describes how Codeformer calculates the feature vector of a function; the input of the model is a control flow graph. In each iteration of the hidden-state update, the instructions inside each basic block are embedded one by one with the Transformer (TRM), and the multi-head attention network (MHA) then aggregates them into the hidden state of the basic block (h_v). After the hidden states of all basic blocks are obtained, the block message-passing (BMP) module updates the hidden state of each basic block based on its neighboring nodes. After the last encoding layer, the block message aggregation (BMA) module aggregates all basic blocks into the embedding of the graph (h_G). In Codeformer, the BMP module is implemented with a gated recurrent unit (GRU) and the BMA module with an MLP.
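The nested loop of Algorithm 1 can be sketched in plain Python to show its control flow. This is only a sketch under loud assumptions: every learned module is replaced with a hypothetical averaging stand-in (TRM becomes an average of token vectors per instruction, MHA a mean over instruction vectors, BMP an average of a block with its neighbours, BMA a mean over blocks); jumps are treated as undirected; and the previous BMP output is fed back into the next iteration as an extra input to MHA, which is our reading of the graph augmentation in Section 3.3.2 rather than something Algorithm 1 states. The helper names and the toy token embedding are illustrative only.

```python
def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def codeformer_forward(blocks, edges, embed_token, n_iterations=2):
    """Control flow of Algorithm 1 with simplified, hypothetical stand-ins for each module."""
    neighbours = {v: set() for v in blocks}
    for src, dst in edges:
        neighbours[src].add(dst)
        neighbours[dst].add(src)  # assumption: treat jump edges as undirected
    graph_state = None
    for _ in range(n_iterations):                              # line 1
        h_v = {}
        for v, instructions in blocks.items():                 # line 2
            # lines 3-5, TRM stand-in: average the token vectors of each instruction s
            h_s = [mean([embed_token(t) for t in s.split()]) for s in instructions]
            if graph_state is not None:
                h_s.append(graph_state[v])  # assumed graph augmentation from the previous BMP
            h_v[v] = mean(h_s)                                 # line 6, MHA stand-in
        # line 8, BMP stand-in: each block averages itself with its neighbours
        graph_state = {v: mean([h_v[v]] + [h_v[w] for w in neighbours[v]]) for v in blocks}
    return mean(list(graph_state.values()))                    # line 10, BMA stand-in


def toy_embed(token):
    # Hypothetical 2-d token embedding: (token length, constant 1.0).
    return [float(len(token)), 1.0]


h_G = codeformer_forward({0: ["mov eax"], 1: ["call printf"]}, {(0, 1)}, toy_embed)
```

Because BMP runs inside the outer loop, each extra iteration lets a block's state absorb information from one more hop of the CFG, which is the property the nested design relies on.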

Codeformer calculates the similarity of a function pair as the cosine similarity of the two functions' graph embeddings, as shown in Equation (10). It decides that the two functions are similar if their similarity exceeds a threshold. The choice of threshold is discussed in Section 5.3.1.
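The similarity decision can be sketched as a cosine similarity followed by a threshold test. The default threshold of 0.8 is taken from the accuracy evaluation in Section 4.6; treating a similarity exactly equal to the threshold as dissimilar is an assumption of this sketch.

```python
import math


def cosine_similarity(g1, g2):
    dot = sum(a * b for a, b in zip(g1, g2))
    norm = math.sqrt(sum(a * a for a in g1)) * math.sqrt(sum(b * b for b in g2))
    if norm == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / norm


def is_similar(g1, g2, threshold=0.8):
    """Predict 'similar' when the cosine similarity of the two graph embeddings exceeds the threshold."""
    return cosine_similarity(g1, g2) > threshold


print(is_similar([1.0, 1.0], [1.0, 0.9]), is_similar([1.0, 0.0], [0.0, 1.0]))
```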

3.3. GNN-Nested Transformer Neural Network

The neural network proposed in this paper improves on Microsoft's graphformers [38], a neural network that processes textual graphs. We take as input the control flow graph G = 〈V, E〉 of the binary code, where V is the set of vertices, each holding the intra-block information of one basic block, and E is the set of edges between nodes. The network treats the information within each block as a paragraph and embeds each paragraph into a fixed-dimensional vector; the GNN's update function does not change the vector dimension. Based on these embedding vectors and the adjacency matrix of the CFG, the GNN aggregates the CFG of the input function into a single fixed-dimensional vector. The entire network model is shown in Figure 3: multiple layers of nested Transformer (for text embedding) and GNN (for message passing and message aggregation) are executed iteratively. We use MPNN [40] to implement the GNN.

3.3.1. Model Structure

First, the function is disassembled to obtain its control flow graph. We treat each basic block as a paragraph, each assembly instruction as a sentence, and the operators and operands within the block as tokens. In layer l of the intra-block instruction embedding, we take the first instruction of each block as the aggregation center and use the multi-head attention network to obtain the feature vector of each basic block. The nodes of the CFG are then updated and aggregated by a message-passing network to generate the function embedding.
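The paragraph/sentence/token hierarchy can be sketched as a small tokenizer. It assumes Intel-style text where the opcode is followed by comma-separated operands, and it keeps each whole operand (such as a memory reference) as one token; both choices are assumptions for illustration, since the paper does not specify its normalization.

```python
def tokenize_instruction(instruction):
    """Split one assembly instruction (a 'sentence') into tokens: the opcode and its operands."""
    opcode, _, operands = instruction.strip().partition(" ")
    tokens = [opcode]
    for operand in operands.split(","):
        operand = operand.strip()
        if operand:
            tokens.append(operand)
    return tokens


def tokenize_block(block):
    """A basic block (a 'paragraph') becomes a list of token lists, one per instruction."""
    return [tokenize_instruction(insn) for insn in block if insn.strip()]


print(tokenize_block(["push rbp", "mov eax, [ebp+8]", "ret"]))
```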

3.3.2. Text Embedding in Transformer

We use the Transformer component to calculate the instruction embedding used for graph augmentation as follows:

In the equation above, LN is the layer-norm function, BMP is the block message-passing function that updates the embeddings of basic blocks, MLP is the multilayer perceptron, MPNN is the message-passing and update part of the message-passing network, and MHA is multi-head attention. In each layer, MHA calculates the embedding vector of each basic block, MPNN then updates the basic block embeddings, and the updated result is the input of the next embedding layer.
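Two ingredients named in this paragraph can be illustrated without reconstructing the missing equation. The sketch shows layer normalization (without learned gain and bias) applied in the post-LN residual pattern LN(x + sublayer(x)) used by the original Transformer [37], and a hypothetical `graph_augment` helper that prepends a block's graph-level state (for example, its previous BMP output) to the block's token sequence. That prepending step is our assumption of how graph augmentation works (in the style of graphformers), not a confirmed detail of Codeformer.

```python
import math


def layer_norm(x, eps=1e-5):
    """LN without learned gain/bias: zero mean and (almost) unit variance across the vector."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def add_and_norm(x, sublayer):
    """Post-LN residual wrapper: LN(x + sublayer(x)), applied around MHA or the MLP."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))])


def graph_augment(token_states, block_state):
    """Hypothetical helper: prepend the block's graph state as an extra 'token' so that
    attention over the block can read neighbourhood information."""
    return [block_state] + token_states


out = add_and_norm([1.0, 2.0, 3.0], lambda v: [0.0] * len(v))
seq = graph_augment([[1.0], [2.0]], [0.0])
```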

3.3.3. Graph Neural Network

We use a message-passing neural network (MPNN) for message passing and message aggregation on each CFG. In the block message-passing module, each node combines the features of its neighboring nodes and the corresponding edges into a message, and then updates itself from this message and its previous features; this is called message passing. In the block message aggregation module, all node features are aggregated into a single graph feature. The MPNN is formulated as follows:

$$m_v^{t+1} = \sum_{w \in N(v)} M_t\left(h_v^t, h_w^t, e_{vw}\right)$$

$$h_v^{t+1} = U_t\left(h_v^t, m_v^{t+1}\right)$$

$$g = R\left(\left\{ h_v^T \mid v \in G \right\}\right)$$

where $m_v^{t+1}$ denotes the message received by node $v$ in step $t$, $w \in N(v)$ is a neighbor of node $v$, $h_w^t$ is the hidden state of $w$ in step $t$ and $e_{vw}$ is the feature of the edge between $v$ and $w$. $M_t$ is an arbitrary message function, $U_t$ is an arbitrary update function and $R$ is an arbitrary aggregation function. $G$ denotes the full graph and $g$ is the embedding feature of the full graph.

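In Codeformer, we use an MLP as the message function $M_t$, a GRU as the update function $U_t$ and an MLP as the aggregation function $R$. In addition, the edge feature $e_{vw}$ does not appear in Equation (7), because the basic blocks are connected only by simple jump relationships and the edges have no feature vectors. The formula is as follows:

$$m_v^{t+1} = \sum_{w \in N(v)} \mathrm{MLP}\left(h_v^t, h_w^t\right)$$

$$h_v^{t+1} = \mathrm{GRU}\left(h_v^t, m_v^{t+1}\right)$$

$$g = \mathrm{MLP}\left(\left\{ h_v^T \mid v \in G \right\}\right)$$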
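The equations above can be sketched as one MPNN step in plain Python, with an MLP message function that takes no edge feature, a GRU update and an MLP readout. The weights are random placeholders rather than trained parameters, the message and readout MLPs are reduced to a single layer each, the readout sums node states before its MLP, and messages flow along successor lists; all of these are simplifying assumptions for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(*vectors):
    return [sum(t) for t in zip(*vectors)]


def rand_mat(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyMPNN:
    """One MPNN layer without edge features: M_t = MLP, U_t = GRU, R = MLP (random weights)."""

    def __init__(self, d, rng):
        self.d = d
        self.W_msg = rand_mat(d, 2 * d, rng)  # message MLP on [h_v ; h_w]
        self.Wz, self.Uz = rand_mat(d, d, rng), rand_mat(d, d, rng)
        self.Wr, self.Ur = rand_mat(d, d, rng), rand_mat(d, d, rng)
        self.Wh, self.Uh = rand_mat(d, d, rng), rand_mat(d, d, rng)
        self.W_out = rand_mat(d, d, rng)      # readout MLP

    def message(self, h_v, h_w):
        # M_t: ReLU(W [h_v ; h_w]); the edge feature e_vw is omitted.
        return [max(0.0, a) for a in matvec(self.W_msg, h_v + h_w)]

    def gru(self, h, m):
        # U_t: one standard GRU formulation with the message m as input and h as state.
        z = [sigmoid(a) for a in add(matvec(self.Wz, m), matvec(self.Uz, h))]
        r = [sigmoid(a) for a in add(matvec(self.Wr, m), matvec(self.Ur, h))]
        rh = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(a) for a in add(matvec(self.Wh, m), matvec(self.Uh, rh))]
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

    def step(self, h, neighbours):
        new_h = {}
        for v in h:
            m = [0.0] * self.d
            for w in neighbours[v]:
                m = add(m, self.message(h[v], h[w]))  # m_v = sum over w in N(v) of M(h_v, h_w)
            new_h[v] = self.gru(h[v], m)              # h_v' = U(h_v, m_v)
        return new_h

    def readout(self, h):
        # R: sum the final node states, then a one-layer MLP with tanh.
        return [math.tanh(a) for a in matvec(self.W_out, add(*h.values()))]


model = TinyMPNN(3, random.Random(0))
h0 = {0: [1.0, 0.0, 0.0], 1: [0.0, 1.0, 0.0], 2: [0.0, 0.0, 1.0]}
successors = {0: [1, 2], 1: [2], 2: []}
h1 = model.step(h0, successors)
g = model.readout(h1)
```

Because the GRU output is a convex combination of the old state and a tanh candidate, node states stay bounded across iterations, which is one practical reason to prefer a gated update over a plain sum.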

3.4. Model Training

Training Objectives: The goal of this work was to improve the accuracy and efficiency of binary code similarity detection while keeping the false-positive rate as low as possible. Given a pair of functions, Codeformer learns their embeddings and calculates their similarity to determine whether the two functions are homologous.

3.4.1. Siamese Network

A Siamese network uses two structurally identical sub-networks that are trained simultaneously and share their parameter weights. The network receives a function pair, embeds each function as a vector, and then calculates the cosine distance between the two output vectors. By learning from positive and negative samples, the network maps similar inputs closer together and pushes dissimilar inputs further apart.
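Weight sharing in a Siamese network amounts to applying one encoder object to both inputs. The sketch below makes that explicit; the bag-of-tokens encoder and its token vectors are hypothetical stand-ins for Codeformer's graph encoder.

```python
import math


def make_encoder(token_vectors):
    """Build ONE hypothetical bag-of-tokens encoder. Both Siamese branches call this same
    function object, so their parameters are shared by construction."""
    dim = len(next(iter(token_vectors.values())))

    def encode(tokens):
        vec = [0.0] * dim
        for t in tokens:
            for j, w in enumerate(token_vectors.get(t, [0.0] * dim)):
                vec[j] += w
        return vec

    return encode


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def siamese_similarity(encoder, f1, f2):
    # Branch 1 and branch 2 are the same encoder applied to each input.
    return cosine(encoder(f1), encoder(f2))


encoder = make_encoder({"mov": [1.0, 0.0], "call": [0.0, 1.0], "ret": [0.5, 0.5]})
```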

3.4.2. Loss Function

The goal of Codeformer is to detect whether a function pair is similar, and its loss function is the cosine similarity loss, which measures whether the two input vectors are similar. We define the control flow graph embedding g after BMA aggregation as the embedding of a function. Specifically, $g_1$ and $g_2$ are the final embeddings of the two functions in a pair, and $y \in \{1, -1\}$ is the ground-truth label, indicating that the two functions are similar or dissimilar, respectively. The loss of the i-th sample is shown in the following equation.
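The loss equation itself is not reproduced in this text. As an assumption, the sketch below implements one common cosine-based loss for labels y ∈ {1, −1}, the form used by PyTorch's `torch.nn.CosineEmbeddingLoss`: 1 − cos(g1, g2) for similar pairs and max(0, cos(g1, g2) − margin) for dissimilar pairs. It may differ from the exact loss Codeformer uses.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cosine_embedding_loss(g1, g2, y, margin=0.0):
    """Per-sample loss with labels y in {1, -1}.

    y = 1 pulls the pair together; y = -1 pushes its similarity below the margin.
    """
    c = cosine(g1, g2)
    if y == 1:
        return 1.0 - c
    if y == -1:
        return max(0.0, c - margin)
    raise ValueError("y must be 1 or -1")


def mean_loss(batch, margin=0.0):
    """Average loss over (g1, g2, y) triples."""
    return sum(cosine_embedding_loss(g1, g2, y, margin) for g1, g2, y in batch) / len(batch)
```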

4. Experiment

In this section, we evaluate the functionality and performance of Codeformer.

4.1. Research Questions

The basic idea of our study is to learn the semantic and structural information of the control flow graph, and then determine the similarity of the function pairs by comparing their cosine distances. In short, we set up experiments to answer the following questions.

RQ1: Is our proposed Codeformer effective in detecting binary code similarity?

RQ2: Does our proposed Codeformer learn the structural features of control flow graphs?

RQ3: What are the main factors that affect Codeformer?

4.2. Experimental Setup

Our experiments were deployed on a server with an Intel i7-10700 CPU running at 2.90 GHz, 64 GB of RAM and an Nvidia RTX3080 GPU with 10 GB of video memory. The operating system is Ubuntu 20.04, the entire network is implemented in PyTorch and the Python version is 3.8.13.

4.3. Datasets and Data Pre-Processing

We used IDA to process three datasets: OpenSSL, ClamAV and Curl, where ClamAV does not contain dynamic link libraries. We used all datasets for training and evaluation. The datasets cover two architectures, x86-64 and ARM, each with four optimization levels (O0 to O3). In Table 2, the third column shows the number of binaries for a particular architecture in the dataset, and the fourth column shows the number of functions. We cleaned the original data and removed all orphan nodes (graphs with only one node). Because such graphs carry no control-flow information, a graph neural network would learn only intra-node information from them, which is no different from using the Transformer alone; orphan nodes are therefore outside the learning scope of our model. In addition, we do not limit the input to a fixed size, so that the model can fully learn the information within each block; this setting reflects the diversity of binary code. However, it makes the dataset uneven: a function may consist of many basic blocks, and some basic blocks contain very long instruction sequences. The problem lies in the O(L²) time and space complexity of the Transformer when performing the semantic embedding of code in long basic blocks (L is the length of the basic block), which consumes excessive memory. For example, a basic block with 300 instructions requires approximately 5.4 GB of GPU memory to run the Transformer alone with a batch size of 1. Training two basic blocks of this size at the same time would already exceed the capacity of a typical GPU (10 GB for the RTX3080).
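The quadratic growth can be checked with a back-of-the-envelope count of the L × L attention-score matrices alone. The layer and head counts below (12 and 12) and the 4-byte values are hypothetical, and the estimate ignores weights, other activations and gradients; it shows the scaling, not the 5.4 GB figure above. Note also that L for the Transformer counts tokens, which exceeds the number of instructions in a block.

```python
def attention_score_bytes(seq_len, n_heads, n_layers, batch_size=1, bytes_per_value=4):
    """Memory for the L x L attention-score matrices alone (one per head, layer and sample).

    Ignores model weights, other activations, gradients and optimizer state.
    """
    return batch_size * n_layers * n_heads * seq_len * seq_len * bytes_per_value


for L in (100, 200, 300, 600):
    mib = attention_score_bytes(L, n_heads=12, n_layers=12) / 2**20
    print(f"L={L:4d}: {mib:8.1f} MiB")
```

Doubling L quadruples this term, which is why a few very long blocks can dominate GPU memory.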

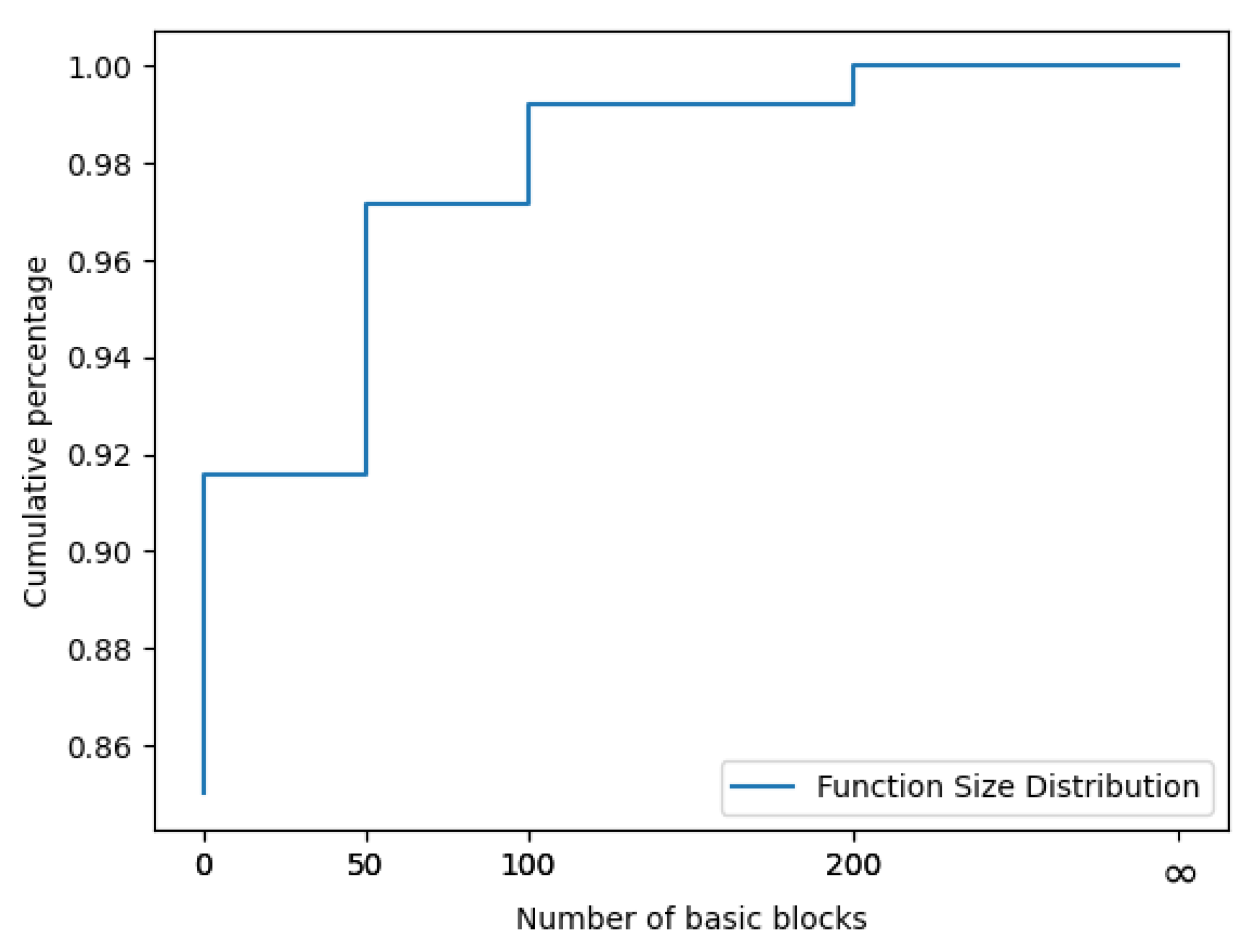

Feeding such data into the model can cause GPU memory overflow. Table 3 lists the distribution of the number of blocks per function, and Figure 4 shows the cumulative distribution of CFG size for 49,056 CFGs. Functions with fewer than 50 blocks account for 91.7% of all functions. Therefore, we exclude functions with oversized basic blocks from our datasets.
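The cleaning rules can be sketched as two small helpers: one computes a point of the cumulative distribution of CFG size, the other drops single-block graphs and oversized functions. Representing a CFG as a dictionary with a `"blocks"` list, and applying the 50-block mark from Figure 4 to the number of blocks per function, are assumptions for illustration.

```python
def cumulative_share(block_counts, limit):
    """Fraction of CFGs with fewer than `limit` basic blocks (one point of the CDF)."""
    return sum(1 for n in block_counts if n < limit) / len(block_counts)


def filter_cfgs(cfgs, max_blocks=50, min_blocks=2):
    """Drop single-block graphs (orphan nodes) and functions with max_blocks or more blocks."""
    return [g for g in cfgs if min_blocks <= len(g["blocks"]) < max_blocks]


cfgs = [{"blocks": [0]}, {"blocks": [0, 1]}, {"blocks": list(range(60))}]
kept = filter_cfgs(cfgs)
```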

We disassemble all the binaries to obtain their functions, many of which share the same name because they are compiled from the same source code for different architectures and at different optimization levels. For model learning, we organize the data into function pairs and label them according to function names.

We first take 50% of the pairs from homonymous functions as positive samples. Since homonymous functions are obtained by compiling the same source code at different optimization levels and for different architectures, we consider them similar and set their label to 1. We then randomly pair the remaining functions as negative samples and label them −1. If a random pairing yields two functions with the same name, they are re-paired, so no negative pair consists of homonymous functions. Finally, we randomly divide the function pairs into a training set, a test set and a validation set in the ratio 8:1:1. These function pairs are the inputs to the Siamese network.
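The pairing and splitting procedure can be sketched as follows. The sketch assumes an exact half-positive, half-negative split, represents each function as a `(name, cfg)` tuple, and samples with a seeded `random.Random`; these are illustrative choices, not the authors' script.

```python
import random
from collections import defaultdict


def make_pairs(functions, n_pairs, rng):
    """functions: list of (name, cfg). Half the pairs share a name (label 1),
    the rest are random pairs with different names (label -1)."""
    by_name = defaultdict(list)
    for name, cfg in functions:
        by_name[name].append(cfg)
    homonymous = [n for n, cfgs in by_name.items() if len(cfgs) >= 2]
    pairs = []
    for _ in range(n_pairs // 2):
        a, b = rng.sample(by_name[rng.choice(homonymous)], 2)
        pairs.append((a, b, 1))
    while len(pairs) < n_pairs:
        (n1, a), (n2, b) = rng.sample(functions, 2)
        if n1 == n2:
            continue  # same name: re-pair, so negatives never share a name
        pairs.append((a, b, -1))
    return pairs


def split_8_1_1(pairs, rng):
    """Shuffle, then split into training, test and validation sets in the ratio 8:1:1."""
    pairs = pairs[:]
    rng.shuffle(pairs)
    n_train, n_test = int(0.8 * len(pairs)), int(0.1 * len(pairs))
    return pairs[:n_train], pairs[n_train:n_train + n_test], pairs[n_train + n_test:]


funcs = [("f", "f@O0"), ("f", "f@O3"), ("g", "g@O0"), ("g", "g@arm"), ("h", "h@O1")]
rng = random.Random(42)
pairs = make_pairs(funcs, 20, rng)
train, test, val = split_8_1_1(pairs, rng)
```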

4.4. Evaluated Tasks

4.4.1. Binary Code Similarity Detection

In this task, we compared the performance of benchmark models and evaluated the ability of the models in detecting code similarity.

4.4.2. Evaluation of the Model’s Ability to Learn Graph Structure Information

This task aimed to evaluate the ability of our proposed Codeformer to learn the graph structure information of CFGs. We conducted comparison experiments with graphformers, a semantic learning model that aggregates only one paragraph.

4.4.3. Parameter Experiments

This task aimed to evaluate the main factors influencing the model, which we did by controlling variables. The controlled variables were the similarity threshold, the learning rate and whether iteration is used.

4.5. Benchmark Method

4.5.1. Gemini

Gemini, proposed by Xu et al. [29], uses a neural-network-based approach to compute the embedding vector of an ACFG, a graph structure whose nodes carry primitive features of basic blocks. These primitive features are extracted manually and used as the node information of the graph. For the Gemini evaluation, we obtained the ACFGs and fed them into the graph neural network for testing.

4.5.2. Asm2Vec

Asm2Vec proposes a representation learning model for assembly code. It takes assembly code as input and finds and incorporates the semantic relationships between the tokens that appear in it.

4.5.3. ASTERIA

This baseline extracts the binary code as an abstract syntax tree (AST) and uses a neural network to learn the semantic information of the function from the AST [41]. We implemented the method according to the official Asteria code and tested it.

4.5.4. OrderMatter

This baseline proposes semantic-aware neural networks to extract the semantic information of the binary code and adopts a convolutional neural network (CNN) on adjacency matrices to extract the order information. We replicated the method and tested it.

4.5.5. BinShot

This baseline tackles the problem of detecting code similarity with one-shot learning (a special case of few-shot learning) and adopts a weighted distance vector with binary cross-entropy as a loss function on top of BERT. We tested the model using BinShot’s official code.

4.6. Evaluation Metrics

We aim to train Codeformer as a conservative model: a good similarity detection method should make as few misjudgments as possible and, in particular, keep the false-positive rate low. Therefore, we used AUC (ROC) and accuracy (ACC) as evaluation metrics.

The receiver operating characteristic (ROC) curve and the area under the curve (AUC) are used to measure the performance of a classifier. The ROC curve shows how the decision threshold affects the performance of the model, which helps in choosing the best threshold. Let P and N denote positive and negative samples, and let T and F denote correctly and incorrectly classified samples, so that TP, FP, TN and FN are the numbers of true positives, false positives, true negatives and false negatives. The vertical axis of the ROC curve is the true positive rate (TPR) and the horizontal axis is the false positive rate (FPR), calculated as follows:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN}$$

AUC (ROC) is the area under the ROC curve within the unit square, so it takes values between 0 and 1; a random classifier scores 0.5, and a useful classifier scores between 0.5 and 1. The closer the AUC (ROC) is to 1, the better the prediction.
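Both quantities can be computed directly from similarity scores and ±1 labels. The sketch computes AUC through its rank interpretation (the probability that a randomly chosen positive pair scores higher than a randomly chosen negative pair, counting ties as one half), which equals the area under the ROC curve, and computes one (FPR, TPR) point of the curve for a given threshold. The scores in the tests are made-up values.

```python
def roc_auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative), ties counting 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y != 1]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def tpr_fpr(scores, labels, threshold):
    """One point of the ROC curve: predict 'similar' when score > threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s > threshold and y == 1)
    fn = sum(1 for s, y in zip(scores, labels) if s <= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s > threshold and y != 1)
    tn = sum(1 for s, y in zip(scores, labels) if s <= threshold and y != 1)
    return tp / (tp + fn), fp / (fp + tn)
```

Sweeping the threshold from high to low and plotting the resulting (FPR, TPR) points traces the ROC curve.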

Accuracy shows how accurately Codeformer classifies function pairs. In calculating accuracy, we set the similarity threshold to 0.8: function pairs with a similarity greater than 0.8 (80%) are judged similar, and those below 0.8 are judged dissimilar. ACC is calculated as follows:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$
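Accuracy at the 0.8 threshold reduces to counting correct predictions. Treating a similarity exactly equal to 0.8 as dissimilar is an assumption, since the text does not specify that case; the scores in the tests are made-up values.

```python
def accuracy(similarities, labels, threshold=0.8):
    """ACC = (TP + TN) / (TP + TN + FP + FN) for labels in {1, -1}."""
    predictions = [1 if s > threshold else -1 for s in similarities]
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)
```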