Intelligent Computation Offloading Mechanism with Content Cache in Mobile Edge Computing

Abstract

1. Introduction

- We focus on the computation offloading problem with content caching in mobile edge computing. Specifically, edge clouds act as service providers and user equipment (UEs) act as requestors. With the assistance of multiple edge clouds, this network architecture aims to improve the effectiveness of the computation offloading service.

- Considering the framework of communication, computation, and caching, we establish a computation offloading problem model solved with a deep Q-learning algorithm. Offloading decisions are modeled as actions in this approach, which results in an efficient decision action space. Furthermore, we apply deep Q-learning to select a reasonable strategy for the resource management problem.

- Extensive simulations validate the effectiveness of the proposed approach in ultra-dense networks. Numerical results demonstrate good performance as the number of iterations increases. Additionally, the impact of different parameters on this approach is analyzed.
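To make the idea of "offloading decisions as actions" concrete, the following is a minimal sketch under stated assumptions, not the authors' implementation. It uses a tabular Q-learning update in place of the deep network, and the action names (`local`, `sbs_edge`, `mbs_edge`), the state labels, and the learning-rate and discount values are all hypothetical.

```python
import random

# Hypothetical action set: where a UE's task is executed.
ACTIONS = ("local", "sbs_edge", "mbs_edge")


def choose_action(q_table, state, epsilon, rng):
    """Epsilon-greedy selection over offloading decisions."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)  # explore: random decision
    values = q_table.get(state, {})
    # exploit: decision with the highest estimated value (unseen ones count as 0)
    return max(ACTIONS, key=lambda a: values.get(a, 0.0))


def q_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)).
    """
    row = q_table.setdefault(state, {a: 0.0 for a in ACTIONS})
    next_row = q_table.get(next_state, {})
    best_next = max(next_row.get(a, 0.0) for a in ACTIONS)
    td_target = reward + gamma * best_next
    row[action] += alpha * (td_target - row[action])
    return row[action]


if __name__ == "__main__":
    q = {}
    rng = random.Random(0)
    # Assumed reward signal: offloading to the SBS edge cloud paid off once.
    q_update(q, "s0", "sbs_edge", reward=1.0, next_state="s1")
    print(choose_action(q, "s0", epsilon=0.0, rng=rng))
```

A deep Q-learning variant would replace the dictionary lookup with a neural network that maps a state to one value per offloading decision; the update target has the same form.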

2. Related Work

3. System Model

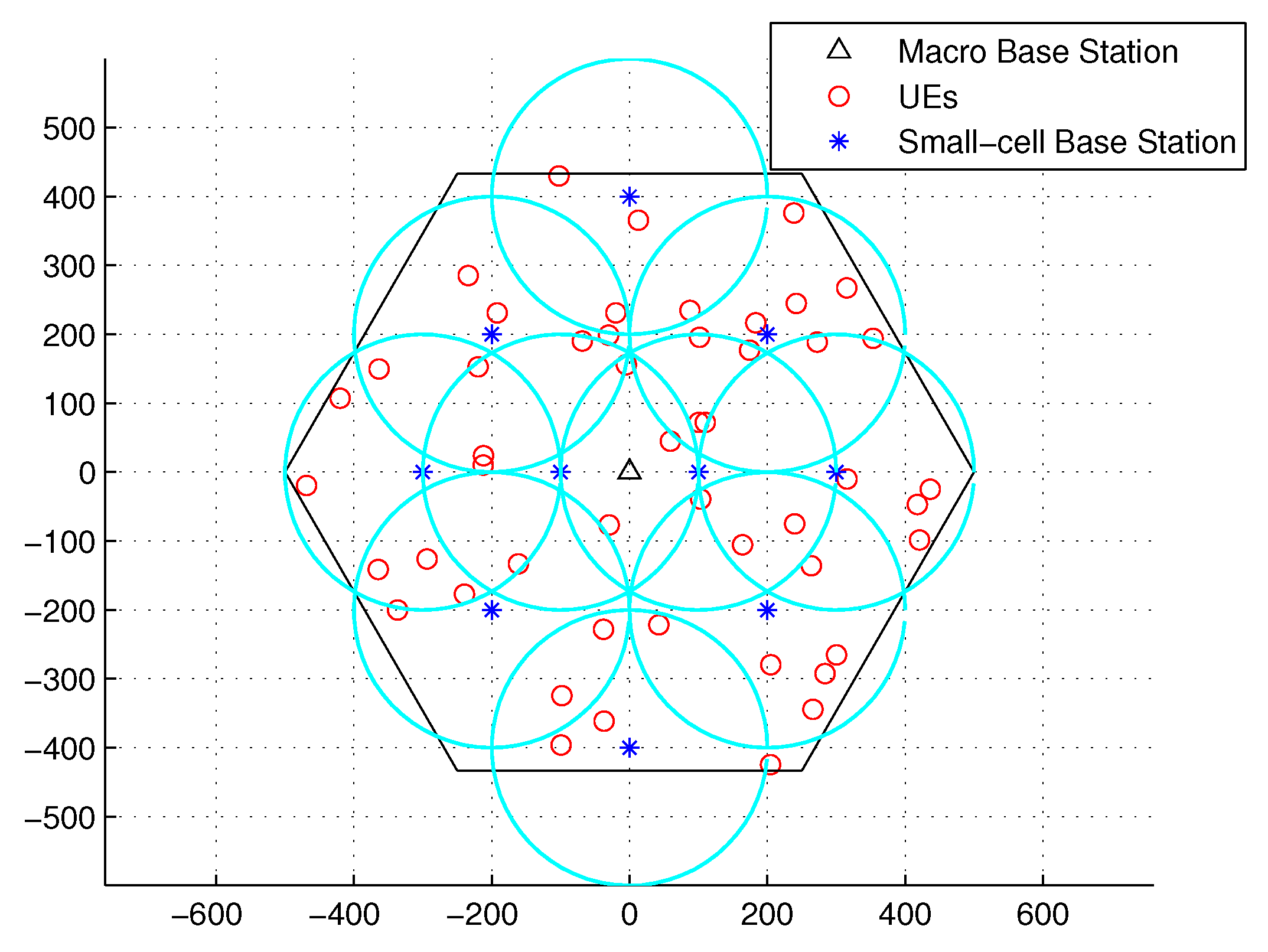

3.1. Architecture of Mobile Edge Computing

3.2. Communication Model

3.3. Computation Model

3.4. Caching Model

4. Deep Reinforcement Learning

4.1. Reinforcement Learning Algorithm

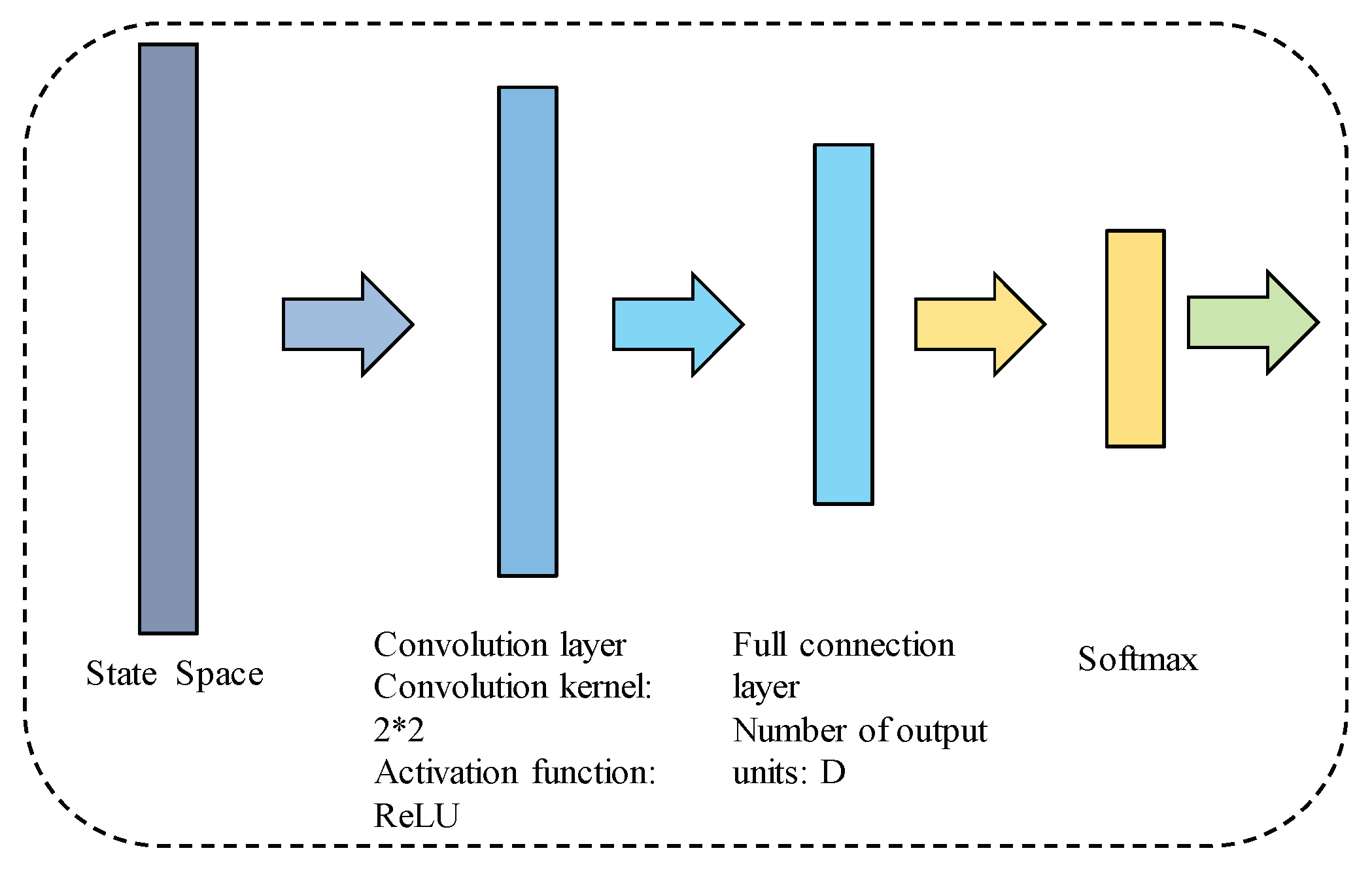

4.2. Deep Q-Network

Algorithm 1: Deep Q-learning Algorithm
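The steps of Algorithm 1 are not reproduced in this text. For orientation only, the standard deep Q-network formulation is sketched below; treating it as what Algorithm 1 follows is an assumption, and the symbols ($\theta$ for the online network weights, $\theta^{-}$ for the periodically copied target network weights, $\mathcal{D}$ for the experience replay buffer, $\gamma$ for the discount factor) are introduced here for illustration.

```latex
y_t = r_t + \gamma \max_{a'} Q\left(s_{t+1}, a'; \theta^{-}\right),
\qquad
L(\theta) = \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim \mathcal{D}}
\left[ \left( y_t - Q(s_t, a_t; \theta) \right)^2 \right]
```

In this formulation the agent samples mini-batches from $\mathcal{D}$, takes a gradient step on $L(\theta)$, and copies $\theta$ into $\theta^{-}$ at fixed intervals.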

5. Deep Q-Learning Aided Computation Offloading with Content Cache

6. Deep Q-Learning Aided Resource Management in Edge Cloud

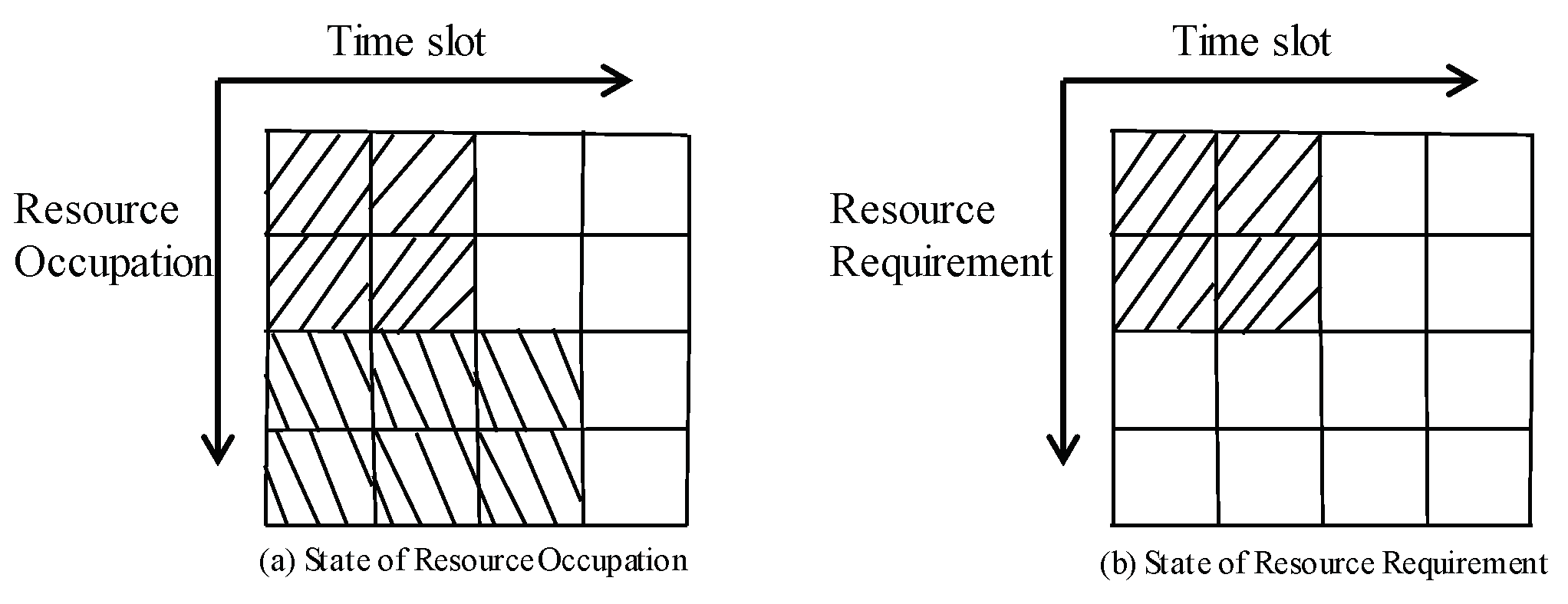

6.1. System Model and Task Model

6.2. Design of Task Schedule Strategy in Mobile Edge Cloud

7. Numerical Results

7.1. Parameter Setting

7.2. Simulation Result

8. Conclusions

Author Contributions

Funding

Conflicts of Interest


| System Parameters | Value Setting |
|---|---|
| The access fee charged by MBS edge cloud | [3, 6] units/bps |
| The access fee charged by SBS edge cloud | [1, 3] units/bps |
| The usage cost of spectrum paid by MBS edge cloud | [] units/Hz |
| The usage cost of spectrum paid by SBS edge cloud | [] units/Hz |
| The computation fee charged by MBS edge cloud | 0.8 units/J |
| The computation fee charged by SBS edge cloud | 0.4 units/J |
| The computation cost paid by MBS edge cloud | 0.2 units/J |
| The computation cost paid by SBS edge cloud | 0.1 units/J |
| The storage fee charged by MBS edge cloud | 20 units/byte |
| The storage fee charged by SBS edge cloud | 10 units/byte |
| The backhaul cost paid by MBS edge cloud | 0.2 units/bps |
| The backhaul cost paid by SBS edge cloud | 0.1 units/bps |
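As a rough illustration of how these settings might be carried into a simulation, the sketch below transcribes the table into a Python dictionary. The key names and the `computation_margin` helper are hypothetical; in particular, treating the margin as (fee − cost) × energy is an assumption made here, not a formula taken from the paper. Entries the table leaves empty are kept as `None` rather than guessed.

```python
# Values transcribed from the parameter table above.
# None marks entries whose value is empty in the table.
PARAMS = {
    "mbs": {
        "access_fee_range": (3, 6),   # units/bps
        "spectrum_cost_range": None,  # units/Hz, not given
        "computation_fee": 0.8,       # units/J
        "computation_cost": 0.2,      # units/J
        "storage_fee": 20,            # units/byte
        "backhaul_cost": 0.2,         # units/bps
    },
    "sbs": {
        "access_fee_range": (1, 3),   # units/bps
        "spectrum_cost_range": None,  # units/Hz, not given
        "computation_fee": 0.4,       # units/J
        "computation_cost": 0.1,      # units/J
        "storage_fee": 10,            # units/byte
        "backhaul_cost": 0.1,         # units/bps
    },
}


def computation_margin(tier, energy_joules):
    """Assumed margin model (not from the paper): (fee - cost) * energy."""
    p = PARAMS[tier]
    return (p["computation_fee"] - p["computation_cost"]) * energy_joules


if __name__ == "__main__":
    for tier in ("mbs", "sbs"):
        print(tier, round(computation_margin(tier, 10.0), 6))
```

Under this assumed model, the MBS edge cloud earns twice the per-joule margin of the SBS edge cloud (0.6 versus 0.3 units/J).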


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, F.; Fang, C.; Liu, M.; Li, N.; Sun, T. Intelligent Computation Offloading Mechanism with Content Cache in Mobile Edge Computing. Electronics 2023, 12, 1254. https://doi.org/10.3390/electronics12051254
