Ischemic Stroke Lesion Segmentation Using Mutation Model and Generative Adversarial Network

Abstract

1. Introduction

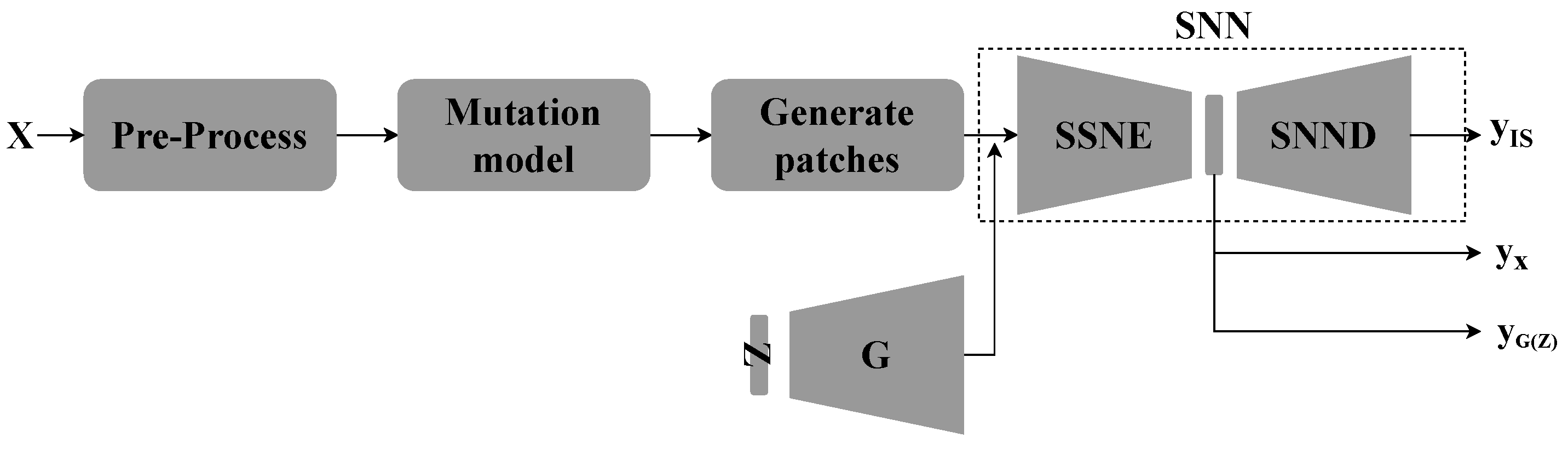

- A distance map is generated from the CTP image by computing the Euclidean distance between every two horizontally and vertically adjacent pixels.

- The distance map and the mutation model are used to produce new synthetic samples. A set of adjacent pixel locations forming a region is selected from one CTP image, and the values of the modalities at these locations are assigned to the corresponding modalities of a different sample while preserving the shape of the region.

- A semi-supervised GAN model is modified into a supervised GAN model so that the labels can be used to exploit the available knowledge and gain more meaningful information.

- A module shared between the segmentation network and the discriminator is used to reduce the complexity of the GAN model and to evaluate the proposed mutation method as an end-to-end model.
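The shared-module idea in the last item can be pictured with a toy forward pass. This is only a sketch under stated assumptions, not the architecture used in the paper: the class name `SharedSegDisc`, the hidden width, and the per-pixel linear layers are all hypothetical. It shows one trunk whose features feed both a per-pixel segmentation head and a single real/fake discriminator score, so the two tasks share parameters.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class SharedSegDisc:
    """Toy model: one shared trunk, a segmentation head and a discriminator head."""

    def __init__(self, in_channels=5, hidden=8):
        # Shared per-pixel linear layer (hypothetical stand-in for a shared encoder).
        self.w_shared = rng.normal(0.0, 0.1, (in_channels, hidden))
        # Task-specific heads that both read the shared features.
        self.w_seg = rng.normal(0.0, 0.1, (hidden, 1))
        self.w_disc = rng.normal(0.0, 0.1, (hidden, 1))

    def forward(self, x):
        """x has shape (H, W, C); returns (lesion map of shape (H, W), real-probability)."""
        feats = relu(x @ self.w_shared)            # (H, W, hidden), computed once
        seg = sigmoid(feats @ self.w_seg)[..., 0]  # per-pixel lesion probability
        pooled = feats.mean(axis=(0, 1))           # global average over pixels
        real_prob = float(sigmoid(pooled @ self.w_disc)[0])
        return seg, real_prob


model = SharedSegDisc()
seg_map, real_prob = model.forward(np.ones((4, 4, 5)))
print(seg_map.shape, real_prob)
```

Because the trunk is evaluated once per input, the discriminator adds only one small head on top of the segmentation network in this sketch.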

2. Literature Review

3. Materials and Methods

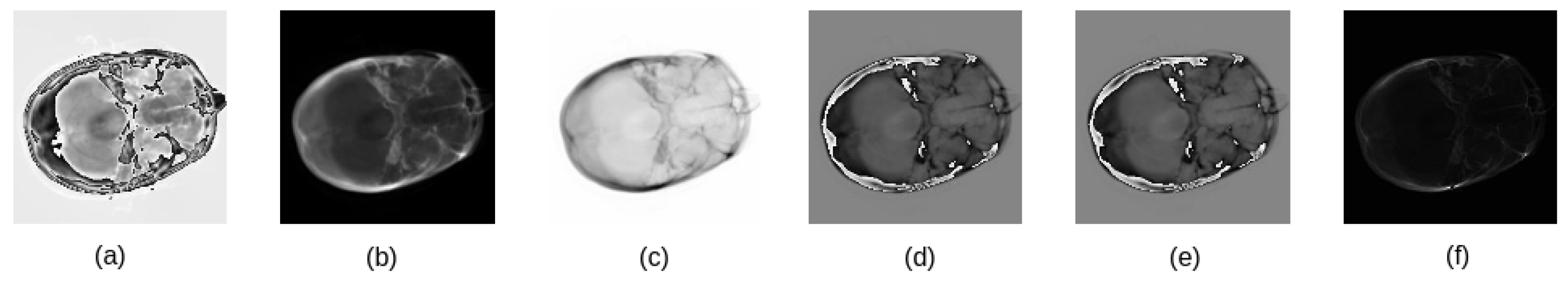

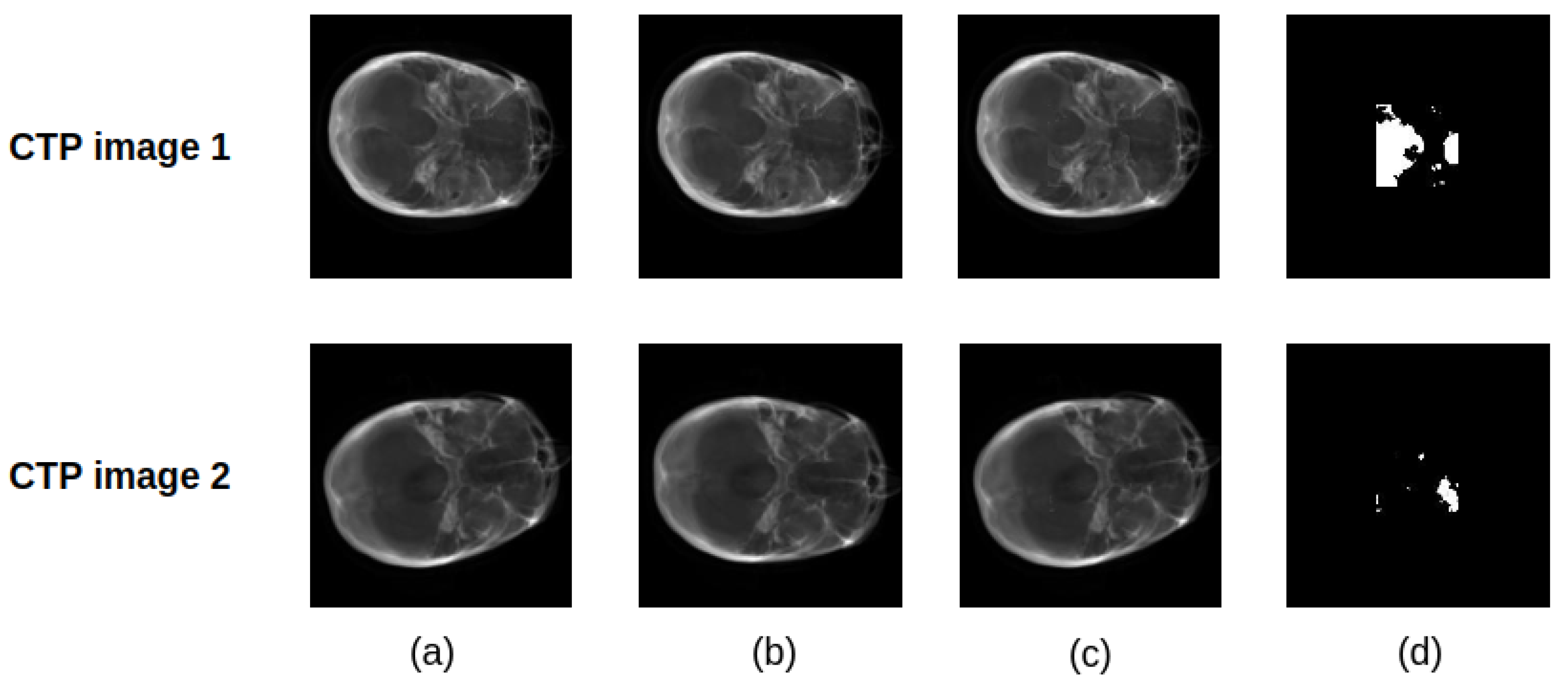

3.1. Data Pre-Processing

3.2. Mutation Model Using Distance Map

Algorithm 1 Generate synthetic data using mutation model

procedure Mutation_Model(data1, label1, data2, label2, center_point)

- ▷ Input data1: the first input, consisting of five modalities
- ▷ Input label1: the semantic label of the first input
- ▷ Input data2: the second input, consisting of five modalities
- ▷ Input label2: the semantic label of the second input
- ▷ Input center_point: the determined center point of the selected region
- Take the CTP modality from data1 and apply the distance map method, as illustrated in Algorithm 2
- Take the CTP modality from data2 and apply the distance map method, as illustrated in Algorithm 2
- Apply the rotation method, as illustrated in Algorithm 3
- Rotate by the resulting angle
- Select the adjacent locations, as illustrated in Algorithm 4
- Mutate the regions to generate the new input, as illustrated in Algorithm 5

end procedure

Algorithm 2 Distance map method

procedure Distance_Map_Method(ctp_image)

- ▷ Input ctp_image: CTP image
- Enhance the image as illustrated in Section 3.1
- Normalize to gray-scale as illustrated in Equation (2)
- Return the distance map

end procedure
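The distance map step can be sketched as follows. This is a minimal illustration under assumptions, since the equations are not available here: min-max scaling to [0, 255] stands in for the gray-scale normalization of Equation (2), the enhancement of Section 3.1 is skipped, and the horizontal and vertical neighbor differences are combined with a Euclidean norm. The function names are hypothetical.

```python
import numpy as np


def normalize_to_gray(img):
    """Min-max scale to [0, 255] (assumed stand-in for the paper's Equation (2))."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)
    return (img - lo) / (hi - lo) * 255.0


def distance_map(ctp_image):
    """Distance of each pixel to its right and lower neighbors, combined per pixel."""
    gray = normalize_to_gray(ctp_image)
    dx = np.zeros_like(gray)
    dy = np.zeros_like(gray)
    dx[:, :-1] = np.abs(gray[:, 1:] - gray[:, :-1])  # horizontal neighbors
    dy[:-1, :] = np.abs(gray[1:, :] - gray[:-1, :])  # vertical neighbors
    return np.sqrt(dx ** 2 + dy ** 2)


image = np.array([[0, 0, 10], [0, 0, 10], [0, 0, 10]])
print(distance_map(image))
```

Low values in the map mark pixels that resemble their neighbors, which is what makes thresholding the map a way to pick out homogeneous regions.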

Algorithm 3 Rotation method

procedure Rotation_Method(img1, img2)

- ▷ Input : Image of dimension
- ▷ Input : Image of dimension
- For a ← 1 to 90
  - For s ∈ [1, −1]
    - rotate by angle equals to a*s
    - a*s, if the is less than
    - a*s, if the is less than

end procedure
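The loop structure of Algorithm 3 (angles a from 1 to 90, signs s in {1, −1}) suggests an exhaustive search for the signed angle that best aligns two images. The sketch below is one possible reading, not the authors' implementation: the comparison criterion is lost in the text above, so mean squared error is used here as an assumption, and the nearest-neighbor rotation helper and all function names are hypothetical.

```python
import numpy as np


def rotate_nearest(img, angle_deg):
    """Rotate a 2D image about its center (nearest neighbor, same output shape)."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    ys, xs = np.mgrid[0:h, 0:w]
    y0, x0 = ys - cy, xs - cx
    # Inverse mapping: for every output pixel, find the source pixel.
    src_x = np.rint(np.cos(theta) * x0 + np.sin(theta) * y0 + cx).astype(int)
    src_y = np.rint(-np.sin(theta) * x0 + np.cos(theta) * y0 + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def best_rotation(img1, img2, max_angle=90):
    """Search signed angles a*s and keep the one minimizing MSE (assumed criterion)."""
    img1 = np.asarray(img1, dtype=np.float64)
    img2 = np.asarray(img2, dtype=np.float64)
    best_angle, best_err = 0, np.mean((img1 - img2) ** 2)
    for a in range(1, max_angle + 1):
        for s in (1, -1):
            err = np.mean((img1 - rotate_nearest(img2, a * s)) ** 2)
            if err < best_err:
                best_angle, best_err = a * s, err
    return best_angle, best_err


rng = np.random.default_rng(1)
moving = rng.random((31, 31))
fixed = rotate_nearest(moving, 30)
print(best_rotation(fixed, moving))
```

In practice an interpolating rotation from an image library would usually replace `rotate_nearest`; the helper is kept here only so the example has no dependencies beyond NumPy.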

Algorithm 4 Select adjacent locations from the distance map for mutation process

procedure Select_Locations_Method(img, center_point)

- ▷ Input img: image
- ▷ Input center_point: the determined center point (y, x) of the selected region
- ▷ L: length used for the cropped image
- ▷ T: threshold used to select adjacent locations
- Compute the distance map as illustrated in Algorithm 2
- Return the list of all locations whose distance values are less than T

end procedure
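A possible sketch of the location selection follows. It takes a precomputed distance map, limits the search to an L×L window around the center point, and keeps the locations whose distance is below T. Treating "adjacent" as 4-connectivity to the center (a flood fill) is an assumption of this sketch, as are the function and parameter names.

```python
from collections import deque

import numpy as np


def select_locations(dist_map, center_point, length, threshold):
    """Return (y, x) locations below `threshold`, connected to the center, inside the window."""
    h, w = dist_map.shape
    cy, cx = center_point
    half = length // 2
    # Cropping window of side `length` around the center, clipped to the image.
    y0, y1 = max(cy - half, 0), min(cy + half + 1, h)
    x0, x1 = max(cx - half, 0), min(cx + half + 1, w)
    below = dist_map < threshold
    if not below[cy, cx]:
        return []
    seen = {(cy, cx)}
    queue = deque([(cy, cx)])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = y0 <= ny < y1 and x0 <= nx < x1
            if inside and below[ny, nx] and (ny, nx) not in seen:
                seen.add((ny, nx))
                queue.append((ny, nx))
    return sorted(seen)


dist = np.zeros((5, 5))
dist[:, 3] = 10.0  # a high-distance column acts as a boundary
print(len(select_locations(dist, (2, 1), length=5, threshold=1.0)))
```

The flood fill keeps the selected region contiguous, which matches the stated goal of preserving the shape of the region when it is later transplanted.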

Algorithm 5 Mutate regions to generate new input

procedure Mutation_Method(modalities_1, modalities_2, locations, angle, center_point)

- ▷ Input : First input consists of five modalities with dimensions
- ▷ Input : Second input consists of five modalities with dimensions
- ▷ Input : The semantic label of the that its dimension
- ▷ Input : The semantic label of the that its dimension
- ▷ Input : Set of the adjacent locations used for mutation model
- ▷ Input : The rotation angle used to rotate images of the
- ▷ Input : The center points of the selected locations in the
- MIN(, )
- For i ← 1 to N
  - rotate by
  - IF i = 1
    - convert the CTP image to a single channel and normalize it using Equation (1) and Equation (2), respectively
    - return shifted center based on the new region in CTP image of the M1 and
  - END IF
  - append in axis zero
- END FOR
- , ,

end procedure
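The core of the mutation step, copying the values at the selected locations from one sample into the corresponding modalities and label of another, can be sketched as below. This is a simplified illustration under assumptions: the rotation and center-shift steps of Algorithm 5 are omitted, arrays are laid out as (modalities, height, width), and the function name `mutate` is hypothetical.

```python
import numpy as np


def mutate(modalities_1, label_1, modalities_2, label_2, locations):
    """Copy the region given by `locations` from sample 2 into a copy of sample 1.

    modalities_*: arrays of shape (M, H, W); label_*: arrays of shape (H, W);
    locations: list of (y, x) pairs. The inputs are left unchanged.
    """
    new_modalities = modalities_1.copy()
    new_label = label_1.copy()
    if len(locations) == 0:
        return new_modalities, new_label
    ys, xs = np.asarray(locations).T
    # The same locations are overwritten in every modality, so the region
    # keeps its shape and stays aligned across modalities and the label.
    new_modalities[:, ys, xs] = modalities_2[:, ys, xs]
    new_label[ys, xs] = label_2[ys, xs]
    return new_modalities, new_label


target = np.zeros((5, 4, 4))
target_label = np.zeros((4, 4), dtype=int)
source = np.ones((5, 4, 4))
source_label = np.ones((4, 4), dtype=int)
mutated, mutated_label = mutate(target, target_label, source, source_label, [(1, 1), (1, 2)])
print(mutated.sum(), mutated_label.sum())
```

Overwriting the label together with the modalities keeps the synthetic sample consistent, whether the transplanted region is normal tissue or a lesion.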

3.3. Supervised GAN model

3.3.1. Semi-Supervised GAN Model

3.3.2. Proposed Supervised GAN Model

4. Experiment Result

4.1. Experimental Settings

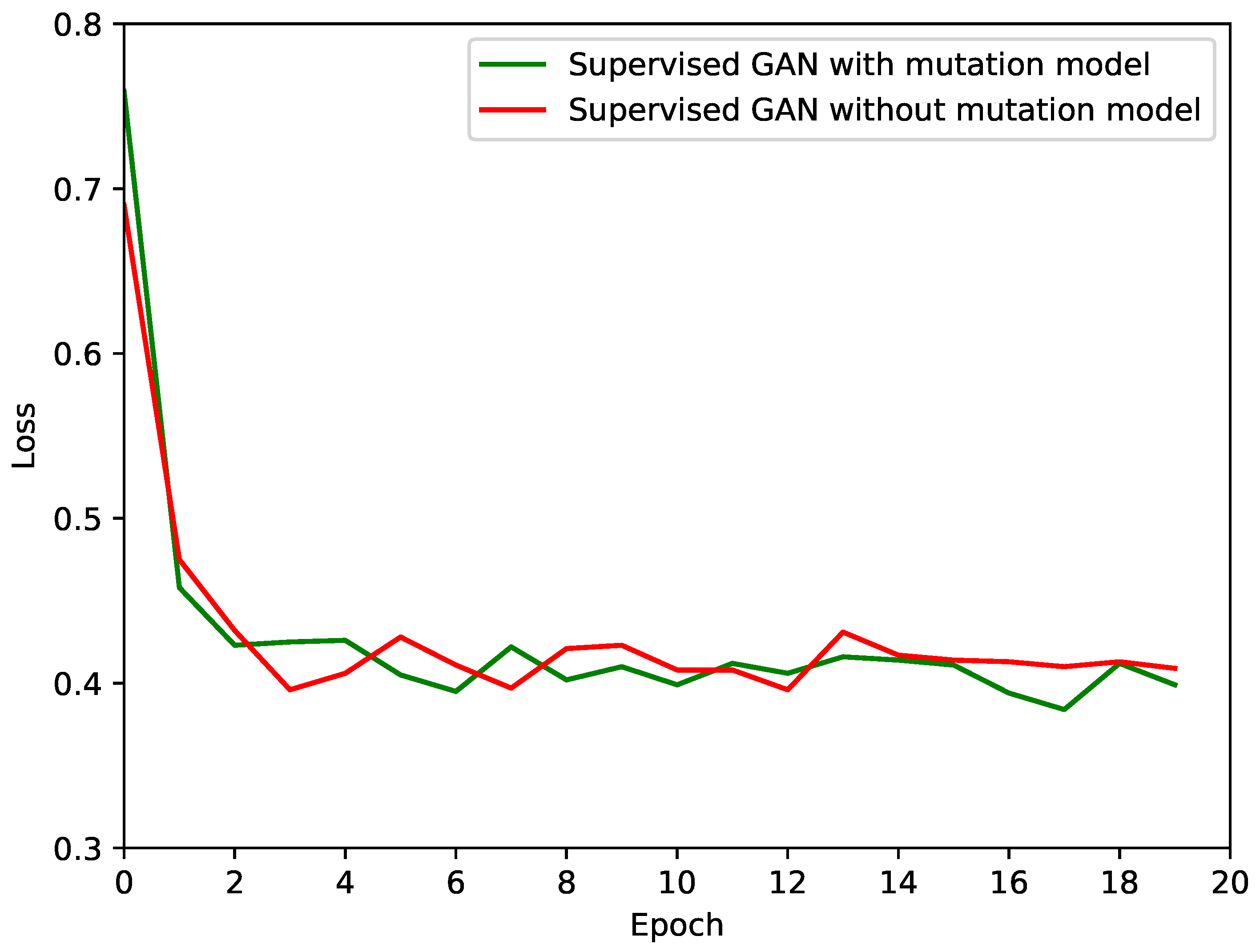

4.2. Results

5. Applications and Future Direction

5.1. Augmentation Technique

5.2. Feature Extraction

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Description |
|---|---|
| CT | Computed tomography |
| CTP | Computed tomography perfusion |
| DWI | Diffusion-weighted imaging |
| CBF | Cerebral blood flow |
| CBV | Cerebral blood volume |
| Tmax | Time-to-maximum flow |
| MTT | Mean transit time |
| OT | Semantic segmentation label for IS lesions |

References

1. Biniaz, A.; Abbasi, A. Fast FCM with spatial neighborhood information for Brain Mr image segmentation. J. Artif. Intell. Soft Comput. Res. 2013, 3, 15–25.
2. Shi, L.; Copot, C.; Vanlanduit, S. Evaluating Dropout Placements in Bayesian Regression Resnet. J. Artif. Intell. Soft Comput. Res. 2022, 12, 61–73.
3. Hakim, A.; Christensen, S.; Winzeck, S.; Lansberg, M.G.; Parsons, M.W.; Lucas, C.; Robben, D.; Wiest, R.; Reyes, M.; Zaharchuk, G. Predicting Infarct Core From Computed Tomography Perfusion in Acute Ischemia With Machine Learning: Lessons From the ISLES Challenge. Stroke 2021, 52, 2328–2337.
4. Chlap, P.; Min, H.; Vandenberg, N.; Dowling, J.; Holloway, L.; Haworth, A. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 2021, 65, 545–563.
5. Sharma, N.; Aggarwal, L.M. Automated medical image segmentation techniques. J. Med. Phys. 2010, 35, 3.
6. Pröve, P.L.; Jopp-van Well, E.; Stanczus, B.; Morlock, M.M.; Herrmann, J.; Groth, M.; Säring, D.; Auf der Mauer, M. Automated segmentation of the knee for age assessment in 3D MR images using convolutional neural networks. Int. J. Leg. Med. 2019, 133, 1191–1205.
7. Al-Haija, Q.A.; Smadi, M.; Al-Bataineh, O.M. Early Stage Diabetes Risk Prediction via Machine Learning. In Proceedings of the 13th International Conference on Soft Computing and Pattern Recognition (SoCPaR 2021); Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; Volume 417.
8. Mondal, A.; Dolz, J.; Desrosiers, C. Few-shot 3d multi-modal medical image segmentation using generative adversarial learning. arXiv 2018, arXiv:1810.12241.
9. Amin, J.; Sharif, M.; Yasmin, M.; Saba, T.; Anjum, M.; Fernandes, S. A new approach for brain tumor segmentation and classification based on score level fusion using transfer learning. J. Med. Syst. 2019, 43, 326.
10. Wang, G.; Song, T.; Dong, Q.; Cui, M.; Huang, N.; Zhang, S. Automatic ischemic stroke lesion segmentation from computed tomography perfusion images by image synthesis and attention-based deep neural networks. Med. Image Anal. 2020, 65, 101787.
11. Platscher, M.; Zopes, J.; Federau, C. Image translation for medical image generation: Ischemic stroke lesion segmentation. Biomed. Signal Process. Control 2022, 72, 103283.
12. Tureckova, A.; Rodríguez-Sánchez, A. ISLES challenge: U-shaped convolution neural network with dilated convolution for 3D stroke lesion segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 319–327.
13. Andersen, A.H.; Zhang, Z.; Avison, M.J.; Gash, D.M. Automated segmentation of multispectral brain MR images. J. Neurosci. Methods 2002, 122, 13–23.
14. Al-Haija, Q.A.; Smadi, M.; Al-Bataineh, O.M. Identifying Phasic dopamine releases using DarkNet-19 Convolutional Neural Network. In Proceedings of the 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 21–24 April 2021; pp. 1–5.
15. Diaz, O.; Kushibar, K.; Osuala, R.; Linardos, A.; Garrucho, L.; Igual, L.; Radeva, P.; Prior, F.; Gkontra, P.; Lekadir, K. Data preparation for artificial intelligence in medical imaging: A comprehensive guide to open-access platforms and tools. Phys. Med. 2021, 83, 25–37.
16. Rezaei, M.; Yang, H.; Meinel, C. Learning imbalanced semantic segmentation through cross-domain relations of multi-agent generative adversarial networks. In Proceedings of the Medical Imaging 2019: Computer-Aided Diagnosis, San Diego, CA, USA, 16–21 February 2019; p. 1095027.
17. Yang, H. Volumetric Adversarial Training for Ischemic Stroke Lesion Segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 343–351.
18. Shen, C.; Roth, H.R.; Hayashi, Y.; Oda, M.; Miyamoto, T.; Sato, G.; Mori, K. Cascaded fully convolutional network framework for dilated pancreatic duct segmentation. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 343–354.
19. Roy, S.; Meena, T.; Lim, S.J. Attention UW-Net: A fully connected model for automatic segmentation and annotation of chest X-ray. Comput. Biol. Med. 2022, 150, 106083.
20. Zhang, C.; Lu, J.; Hua, Q.; Li, C.; Wang, P. SAA-Net: U-shaped network with Scale-Axis-Attention for liver tumor segmentation. Biomed. Signal Process. Control 2022, 73, 103460.
21. Indraswari, R.; Kurita, T.; Arifin, A.Z.; Suciati, N.; Astuti, E.R. Multi-projection deep learning network for segmentation of 3D medical images. Pattern Recognit. Lett. 2019, 125, 791–797.
22. Hashemi, S.R.; Salehi, S.S.M.; Erdogmus, D.; Prabhu, S.P.; Warfield, S.K.; Gholipour, A. Asymmetric loss functions and deep densely-connected networks for highly-imbalanced medical image segmentation: Application to multiple sclerosis lesion detection. IEEE Access 2018, 7, 1721–1735.
23. Zhu, W.; Huang, Y.; Tang, H.; Qian, Z.; Du, N.; Fan, W.; Xie, X. Anatomynet: Deep 3d squeeze-and-excitation u-nets for fast and fully automated whole-volume anatomical segmentation. BioRxiv 2018, 392969.
24. Al-Haija, Q.A.; Adebanjo, A. Breast Cancer Diagnosis in Histopathological Images Using ResNet-50 Convolutional Neural Network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Vancouver, BC, Canada, 9–12 September 2020; pp. 1–7.
25. Rezaei, M.; Yang, H.; Meinel, C. Recurrent generative adversarial network for learning imbalanced medical image semantic segmentation. Multimed. Tools Appl. 2020, 79, 15329–15348.
26. Wouters, A.; Robben, D.; Christensen, S.; Marquering, H.A.; Roos, Y.B.; van Oostenbrugge, R.J.; van Zwam, W.H.; Dippel, D.W.; Majoie, C.B.; Schonewille, W.J.; et al. Prediction of stroke infarct growth rates by baseline perfusion imaging. Stroke 2022, 53, 569–577.
27. Ischemic Stroke Lesion Segmentation (ISLES-2018). Available online: www.isles-challenge.org (accessed on 21 June 2022).
28. Böhme, L.; Madesta, F.; Sentker, T.; Werner, R. Combining good old random forest and DeepLabv3+ for ISLES 2018 CT-based stroke segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 335–342.
29. Abulnaga, S.; Rubin, J. Ischemic stroke lesion segmentation in CT perfusion scans using pyramid pooling and focal loss. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 352–363.
30. Clerigues, A.; Valverde, S.; Bernal, J.; Freixenet, J.; Oliver, A.; Lladó, X. Acute ischemic stroke lesion core segmentation in CT perfusion images using fully convolutional neural networks. Comput. Biol. Med. 2019, 115, 103487.
31. Bertels, J.; Robben, D.; Vandermeulen, D.; Suetens, P. Contra-lateral information CNN for core lesion segmentation based on native CTP in acute stroke. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 263–270.
32. Rezaei, M.; Yang, H.; Meinel, C. voxel-GAN: Adversarial framework for learning imbalanced brain tumor segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 321–333.
33. Liu, P. Stroke lesion segmentation with 2D novel CNN pipeline and novel loss function. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 253–262.
34. Khare, S.K.; Gaikwad, N.B.; Bajaj, V. VHERS: A Novel Variational Mode Decomposition and Hilbert Transform-Based EEG Rhythm Separation for Automatic ADHD Detection. IEEE Trans. Instrum. Meas. 2022, 71, 4008310.
35. Khare, S.K.; Bajaj, V.; Acharya, U.R. SPWVD-CNN for automated detection of schizophrenia patients using EEG signals. IEEE Trans. Instrum. Meas. 2021, 70, 2507409.
36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.
37. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.
38. Liu, L.; Yang, S.; Meng, L.; Li, M.; Wang, J. Multi-scale deep convolutional neural network for stroke lesions segmentation on CT images. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16 September 2018; pp. 283–291.
39. Khalil, A.; Jarrah, M.; Al-Ayyoub, M.; Jararweh, Y. Text detection and script identification in natural scene images using deep learning. Comput. Electr. Eng. 2021, 91, 107043.
40. Chen, M.; Zheng, H.; Lu, C.; Tu, E.; Yang, J.; Kasabov, N. Accurate breast lesion segmentation by exploiting spatio-temporal information with deep recurrent and convolutional network. J. Ambient Intell. Humaniz. Comput. 2019, 1–9.
41. Zuluaga, M.A.; Hoyos, M.H.; Orkisz, M. Feature selection based on empirical-risk function to detect lesions in vascular computed tomography. IRBM 2014, 35, 244–254.
42. Garali, I.; Adel, M.; Bourennane, S.; Ceccaldi, M.; Guedj, E. Brain region of interest selection for 18FDG positrons emission tomography computer-aided image classification. IRBM 2016, 37, 23–30.
43. Balasubramanian, K.; Ananthamoorthy, N.P. Robust retinal blood vessel segmentation using convolutional neural network and support vector machine. J. Ambient Intell. Humaniz. Comput. 2021, 12, 3559–3569.
44. Cohen, M.E.; Pellot-Barakat, C.; Tacchella, J.M.; Lefort, M.; De Cesare, A.; Lebenberg, J.; Souedet, N.; Lucidarme, O.; Delzescaux, T.; Frouin, F. Quantitative evaluation of rigid and elastic registrations for abdominal perfusion imaging with X-ray computed tomography. IRBM 2013, 34, 283–286.
45. Jolivet, E.; Daguet, E.; Bousson, V.; Bergot, C.; Skalli, W.; Laredo, J.D. Variability of hip muscle volume determined by computed tomography. IRBM 2009, 30, 14–19.
46. Mohtasebi, M.; Bayat, M.; Ghadimi, S.; Moghaddam, H.A.; Wallois, F. Modeling of neonatal skull development using computed tomography images. IRBM 2021, 42, 19–27.
47. Talaat, F.M.; Gamel, S.A. RL based hyper-parameters optimization algorithm (ROA) for convolutional neural network. J. Ambient Intell. Humaniz. Comput. 2022, 1–11.


| Reference | Augmentation Method | Finding | Limitation |
|---|---|---|---|
| Wang et al., 2020 [10] | Synthesized pseudo-DWI module | The synthesized pseudo-DWI module uses the ISLES-2018 dataset to generate a synthetic dataset. | The DWI label is used to switch lesion regions with normal regions without considering other possible choices for switching regions. |
| Rezaei et al., 2019 [16] | General augmentation methods | General augmentation techniques are used to increase the training set. | Only traditional augmentation processes, such as flipping and adding Gaussian noise, are used. |
| Yang, 2018 [17] | - | The error of the discriminator is fed into the segmentation module for a second back-propagation. | The generator module is excluded, and a synthetic dataset is not provided. |
| Rezaei et al., 2018 [32] | Gaussian noise method | The Gaussian noise method is used to increase the training set. | Gaussian noise is the only augmentation used. |
| Liu, 2018 [33] | General augmentation methods and translation method | General augmentation methods are used to increase the dataset, and the generator is used to translate the CTP modality into the DWI modality. | Only traditional processes, such as flipping and scaling images, are used for augmentation. |
| Proposed model | Mutation model based on a distance map, integrated into the proposed GAN model | A mutation model based on a distance map randomly selects normal or damaged regions to generate a synthetic dataset and is integrated into the GAN model. A supervised GAN model is proposed to exploit the generator and gain information from labels. A shared network is used for segmentation and discrimination to reduce GAN complexity. | The proposed mutation model is not adaptive, and the proposed end-to-end model suffers from overfitting. |

| Model | Dice |
|---|---|
| Supervised GAN without integrated mutated model | 40.68% |
| Supervised GAN with integrated mutated model | 43.22% |
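For reference, the Dice score reported in this table is commonly computed on binary masks as twice the overlap divided by the total number of foreground pixels in both masks. The sketch below uses that standard definition; the function name and the convention of returning 1.0 when both masks are empty are assumptions of this example, not details taken from the paper.

```python
import numpy as np


def dice_score(pred, target):
    """Dice = 2 * |pred AND target| / (|pred| + |target|) on binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    total = pred.sum() + target.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return 2.0 * np.logical_and(pred, target).sum() / total


predicted = np.array([[1, 1], [0, 0]])
reference = np.array([[1, 0], [0, 0]])
print(round(dice_score(predicted, reference), 4))  # 2 * 1 / (2 + 1)
```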

| Model | Loss |
|---|---|
| Supervised GAN without mutated images | 42.99% |
| Supervised GAN with mutated images | 42.86% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ghnemat, R.; Khalil, A.; Abu Al-Haija, Q. Ischemic Stroke Lesion Segmentation Using Mutation Model and Generative Adversarial Network. Electronics 2023, 12, 590. https://doi.org/10.3390/electronics12030590