FMDL: Federated Mutual Distillation Learning for Defending Backdoor Attacks

Abstract

1. Introduction

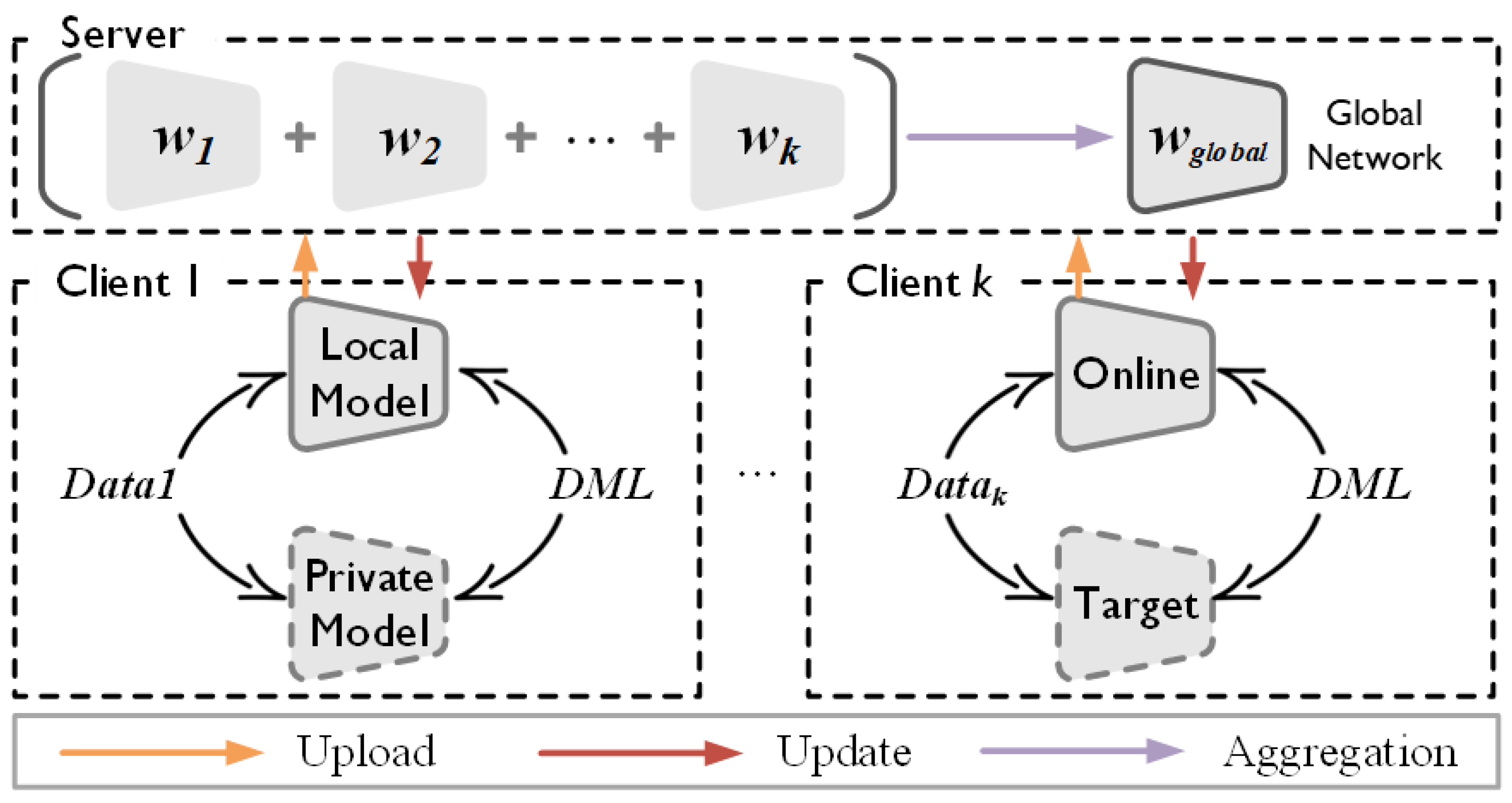

- We propose dual-model local updates for federated learning. A meme model transfers knowledge between the global model and the local models, and a personalized model serves as a private model tailored to each client's data and task. The two models learn from each other through deep mutual learning, which addresses the three types of heterogeneity and lets each client obtain a model personalized to its own requirements.

- We construct a clean teacher model through knowledge distillation to guide the training of the student model. The teacher is fine-tuned on a small clean subset of data so that it can defend against various types of backdoor attacks. This approach reduces the backdoor attack success rate to near the level of random guessing without significantly degrading clean accuracy, strengthening the privacy and security of the system.

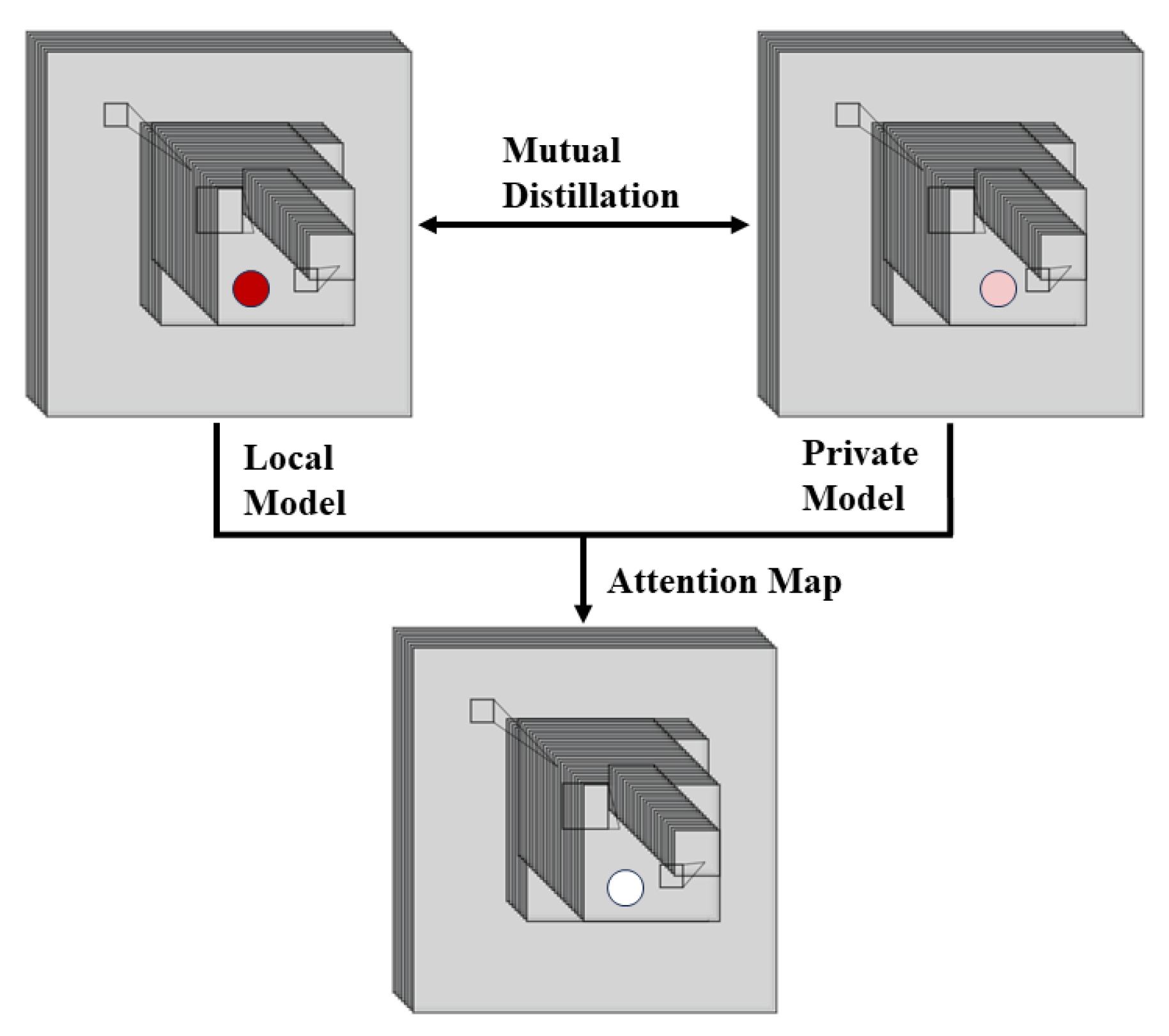

- To visualize defensive performance, we use attention maps as an evaluation criterion and define the distillation loss on the attention maps of the teacher and student models.

- We conduct experiments on multiple benchmark datasets to validate the effectiveness of FMDL in addressing heterogeneity and its robustness against backdoor attacks.

2. Related Work

2.1. Federated Learning

2.2. Heterogeneous Federated Learning

2.3. Knowledge Distillation

2.4. Backdoor Attacks and Defense

3. Federated Mutual Distillation Learning

3.1. Classical Distillation Methods
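Classical knowledge distillation trains a student to match both the ground-truth labels and the temperature-softened predictions of a teacher, using the quantities listed in the notation table (predictions p, temperature T, teacher logits z, cross-entropy and KL divergence). The following is a minimal Python sketch of the standard Hinton-style loss. The weighting name `alpha`, the T² scaling, and the default values are assumptions of this sketch, not FMDL's published settings.

```python
import math

def softmax(logits, T=1.0):
    """Temperature-scaled softmax: p_i = exp(z_i / T) / sum_j exp(z_j / T)."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(label, probs):
    """Cross-entropy of a probability vector against a hard integer label."""
    return -math.log(probs[label])

def kl_divergence(p, q):
    """D_KL(p || q) = sum_i p_i * log(p_i / q_i); zero-probability terms are skipped."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def kd_loss(student_logits, teacher_logits, label, T=4.0, alpha=0.5):
    """Assumed Hinton-style objective:
    alpha * CE(y, p_s) + (1 - alpha) * T^2 * KL(p_t^T || p_s^T)."""
    hard = cross_entropy(label, softmax(student_logits))
    soft = kl_divergence(softmax(teacher_logits, T), softmax(student_logits, T))
    return alpha * hard + (1 - alpha) * (T ** 2) * soft
```

When the student already reproduces the teacher's logits, the KL term vanishes and only the weighted hard-label cross-entropy remains; the T² factor keeps the soft-target gradients on a comparable scale as T grows.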

3.2. Federated Mutual Learning

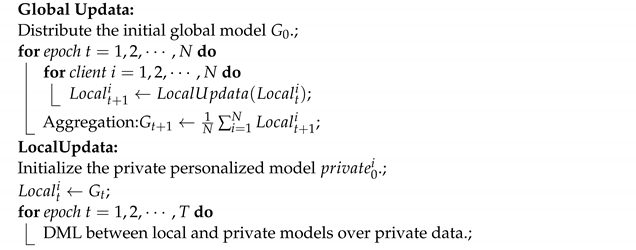

Algorithm 1: Federated Mutual Learning
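The dual-model update described in the contributions can be sketched in a few lines. This sketch assumes the deep mutual learning objectives commonly used in federated mutual learning: the personalized model minimizes alpha * CE(y, p_personal) + (1 - alpha) * KL(p_meme || p_personal), the meme model minimizes the symmetric objective with beta, and the server averages the meme models weighted by client data size (FedAvg). To keep the gradient in closed form, both models are tiny linear softmax classifiers on plain Python lists. Real clients would use DNNs whose architectures may differ, and all function names here are hypothetical.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def logits(W, x):
    """Linear classifier: one weight row per class."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mutual_step(W, x, y, peer_probs, weight, lr):
    """One SGD step on weight * CE(y, p) + (1 - weight) * KL(peer || p).
    Treating the peer's probabilities as constants, the gradient with
    respect to the logits is p - weight * onehot(y) - (1 - weight) * peer."""
    p = softmax(logits(W, x))
    grad_z = [p[k] - weight * (1.0 if k == y else 0.0) - (1 - weight) * peer_probs[k]
              for k in range(len(p))]
    # dL/dW[k][j] = grad_z[k] * x[j]
    return [[w - lr * g * xi for w, xi in zip(row, x)]
            for row, g in zip(W, grad_z)]

def local_update(personal, meme, data, alpha=0.5, beta=0.5, lr=0.1, epochs=5):
    """Client side: deep mutual learning between the private personalized
    model (weight alpha) and the shared meme model (weight beta)."""
    for _ in range(epochs):
        for x, y in data:
            # Both peers' predictions are taken before either model is updated.
            p_meme = softmax(logits(meme, x))
            p_pers = softmax(logits(personal, x))
            personal = mutual_step(personal, x, y, p_meme, alpha, lr)
            meme = mutual_step(meme, x, y, p_pers, beta, lr)
    return personal, meme

def fedavg(memes, sizes):
    """Server side: average the clients' meme models weighted by local data size."""
    total = sum(sizes)
    rows, cols = len(memes[0]), len(memes[0][0])
    return [[sum(m[r][c] * n for m, n in zip(memes, sizes)) / total
             for c in range(cols)]
            for r in range(rows)]
```

Under this assumed protocol, only the meme models are sent to the server; each client then initializes its next meme model from the aggregated result, while the personalized model never leaves the client.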

3.3. Attention Distillation Defense Methods
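The attention distillation defense fine-tunes the backdoored student under the guidance of the clean teacher by aligning their attention maps. The sketch below assumes the neural attention distillation (NAD) formulation. Each C × H × W activation is mapped to an H × W attention map (by default the channel mean of |a|^p with p = 2), both maps are L2-normalized, and the per-layer loss is the L2 distance between them. Activations are nested Python lists, and `beta` is a placeholder weight, not a value reported for FMDL.

```python
import math

def attention_map(activation, p=2, mode="mean"):
    """Map a C x H x W activation (nested lists) to an H x W attention map.
    mode="sum":  sum over channels of |a_c|^p
    mode="mean": the same sum divided by C."""
    C = len(activation)
    H, W = len(activation[0]), len(activation[0][0])
    att = [[sum(abs(activation[c][h][w]) ** p for c in range(C))
            for w in range(W)] for h in range(H)]
    if mode == "mean":
        att = [[v / C for v in row] for row in att]
    return att

def l2_normalize(att):
    """Flatten an attention map and divide it by its L2 norm."""
    flat = [v for row in att for v in row]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0  # guard against all-zero maps
    return [v / norm for v in flat]

def attention_distillation_loss(teacher_acts, student_acts, p=2):
    """Sum over layers of || A_T/||A_T||_2 - A_S/||A_S||_2 ||_2."""
    loss = 0.0
    for a_t, a_s in zip(teacher_acts, student_acts):
        t = l2_normalize(attention_map(a_t, p))
        s = l2_normalize(attention_map(a_s, p))
        loss += math.sqrt(sum((ti - si) ** 2 for ti, si in zip(t, s)))
    return loss

def student_objective(ce_loss, teacher_acts, student_acts, beta=1.0, p=2):
    """Cross-entropy on the clean subset plus beta times the attention term.
    beta=1.0 is only a placeholder, not a value reported for FMDL."""
    return ce_loss + beta * attention_distillation_loss(teacher_acts, student_acts, p)
```

Normalizing each map before comparing makes the loss depend on where the network attends rather than on the overall activation magnitude. This lets a teacher fine-tuned on a small clean subset pull the student's attention away from trigger regions.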

4. Experimental Evaluations

4.1. Experimental Setup

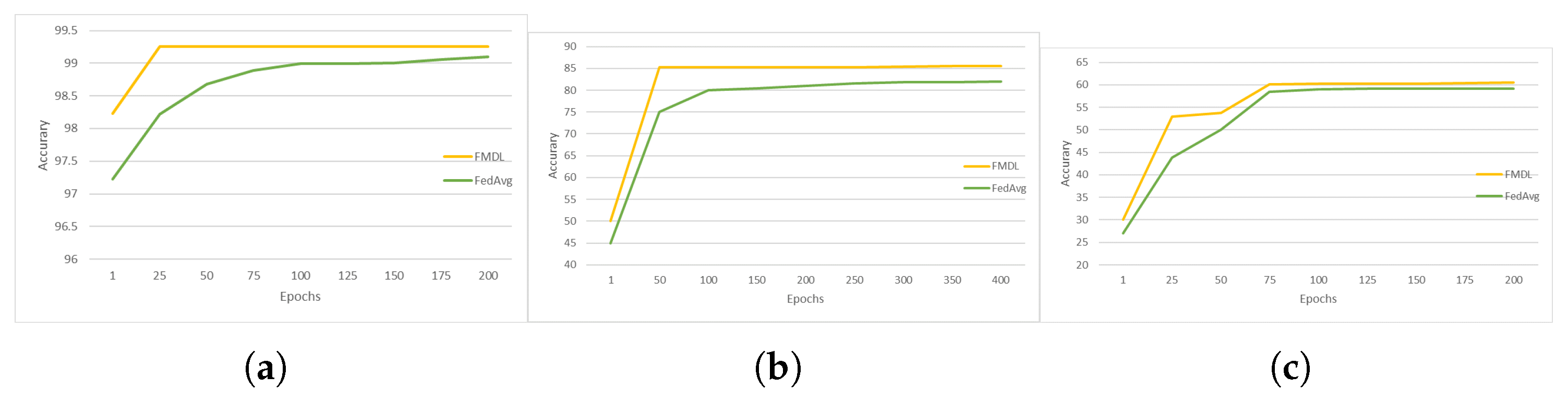

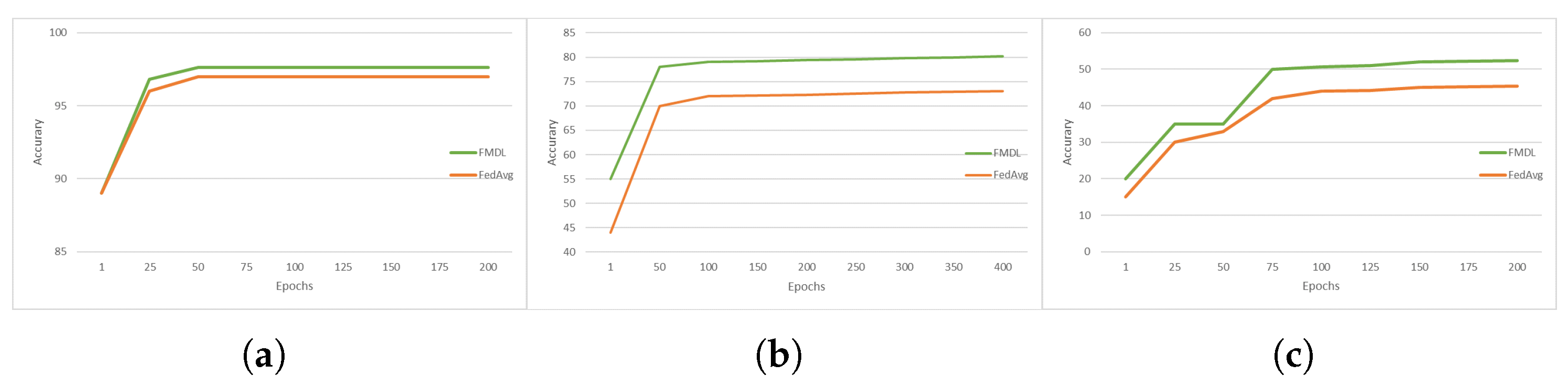

4.2. Comparison of FMDL and Traditional FL Performance

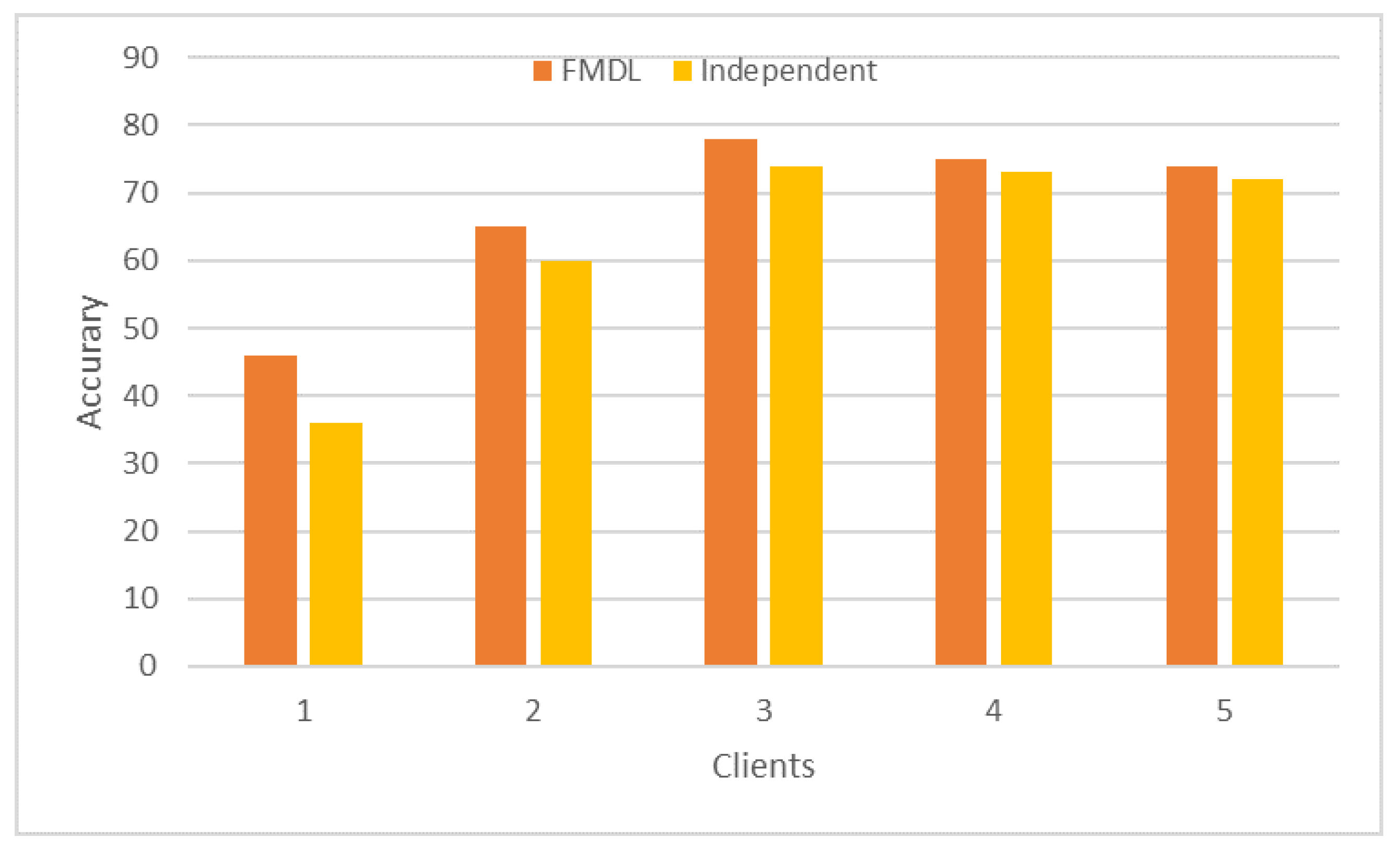

4.3. Performance of FMDL under Three Heterogeneous Settings

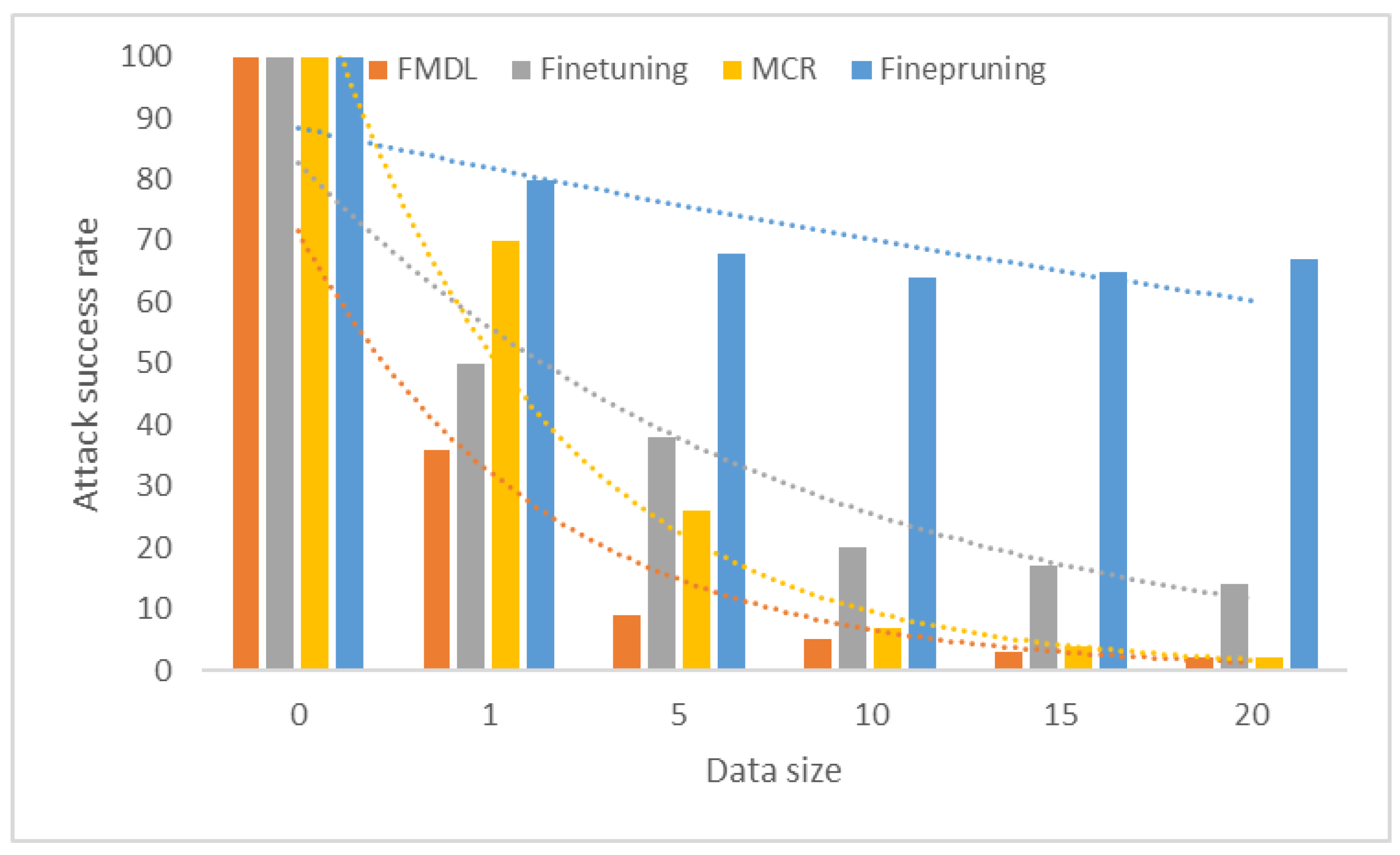

4.4. Effectiveness of FMDL in Defending Backdoors

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Symbols | Meaning |
|---|---|
| L | Loss function. |
| | Cross-entropy. |
| | Kullback-Leibler (KL) divergence. |
| p | The predictions of the model. |
| T | The temperature hyper-parameter. |
| z | The logits of the teacher model. |
| | The hyper-parameters that control the proportion of knowledge learned from the data versus from the other model. |
| M | A DNN model. |
| | The activation output at the l-th layer. |
| Z | An attention operator that maps an activation map to an attention representation. |
| | Reflects all activation regions. |
| | Amplifies the disparities between the backdoored neurons and the benign neurons by an order of p. |
| | Aligns the activation center of the backdoored neurons with that of the benign neurons by taking the mean over all activation regions. |
| | The dimensions of the channel, the height, and the width of the activation map. |
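The three attention-operator variants described in the notation table can be written explicitly. This assumes the attention functions of neural attention distillation and a notation of our own choosing: the layer-l activation is written $a^{l} \in \mathbb{R}^{C \times H \times W}$ with channels $a^{l}_{i}$, and each operator returns an $H \times W$ map computed element-wise over spatial positions.

$$
Z_{\mathrm{sum}}(a^{l}) = \sum_{i=1}^{C} \left| a^{l}_{i} \right|, \qquad
Z^{p}_{\mathrm{sum}}(a^{l}) = \sum_{i=1}^{C} \left| a^{l}_{i} \right|^{p}, \qquad
Z^{p}_{\mathrm{mean}}(a^{l}) = \frac{1}{C} \sum_{i=1}^{C} \left| a^{l}_{i} \right|^{p}
$$

As a worked example, suppose a backdoored neuron responds with $|a| = 2$ at some location and a benign neuron with $|a| = 1$. Their contributions differ by a factor of 2 under $Z_{\mathrm{sum}}$ and by a factor of $2^{2} = 4$ under $Z^{2}_{\mathrm{sum}}$, which is how the power $p$ amplifies the disparity. $Z^{p}_{\mathrm{mean}}$ keeps that amplification but divides by $C$, so maps from layers with different channel counts stay on a comparable scale.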

| Dataset | Training Samples | Test Samples | Classes | Model |
|---|---|---|---|---|
| MNIST | 60,000 | 10,000 | 10 | MLP |
| CIFAR-10 | 50,000 | 10,000 | 10 | CNN |
| CIFAR-100 | 50,000 | 10,000 | 100 | CNN |

| Backdoor Attack | BadNets | Trojan | Blend | Clean-Label | Signal | Refool |
|---|---|---|---|---|---|---|
| Dataset | CIFAR-10 | CIFAR-10 | CIFAR-10 | CIFAR-10 | CIFAR-10 | GTSRB |
| Model | WideResNet | WideResNet | WideResNet | WideResNet | WideResNet | WideResNet |
| Injection Rate | 0.1 | 0.05 | 0.1 | 0.08 | 0.08 | 0.08 |
| Trigger Type | Grid | Square | Random Noise | Grid+PGD Noise | Sinusoidal Signal | Reflection |
| Trigger Size | 3 × 3 | 3 × 3 | Full Image | 3 × 3 | Full Image | Full Image |
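The BadNets configuration in the table above (a 3 × 3 grid trigger at an injection rate of 0.1) can be illustrated with a minimal dirty-label poisoning sketch. It assumes single-channel images stored as nested lists, a checkerboard patch in the bottom-right corner, and target label 0. The trigger position, pattern, target class, and function names are hypothetical choices, not details taken from the paper. A clean-label attack such as CL would keep the original labels instead of relabeling.

```python
import random

def stamp_grid_trigger(image, size=3, value=1.0):
    """Return a copy of an H x W image with a size x size checkerboard
    ("grid") patch in the bottom-right corner; position and pattern are assumptions."""
    out = [row[:] for row in image]  # copy so the clean image is left untouched
    H, W = len(out), len(out[0])
    for i in range(size):
        for j in range(size):
            if (i + j) % 2 == 0:
                out[H - size + i][W - size + j] = value
    return out

def poison_dataset(samples, inject_rate=0.1, target_label=0, seed=0):
    """Stamp the trigger on a random inject_rate fraction of (image, label)
    pairs and relabel them to target_label (dirty-label, BadNets-style)."""
    rng = random.Random(seed)
    n_poison = int(round(inject_rate * len(samples)))
    chosen = set(rng.sample(range(len(samples)), n_poison))
    poisoned = []
    for idx, (img, label) in enumerate(samples):
        if idx in chosen:
            poisoned.append((stamp_grid_trigger(img), target_label))
        else:
            poisoned.append((img, label))
    return poisoned, chosen
```

For RGB inputs such as CIFAR-10, the same patch would be written into every channel. The returned index set makes it easy to check that exactly the requested fraction was poisoned.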

| Attention Function | ASR | ACC | ASR | ACC | ASR | ACC | ASR | ACC |
|---|---|---|---|---|---|---|---|---|
| Baseline | 100% | 85.86% | 100% | 85.86% | 100% | 85.86% | 100% | 85.86% |
| Epoch 5 | 12.28% | 81.50% | 4.60% | 81.30% | 6.89% | 81.46% | 4.21% | 81.55% |

| Backdoor Attack | BadNets | Trojan | Blend | CL | SIG | Refool |
|---|---|---|---|---|---|---|
| ASR before defense (%) | 100 | 100 | 99.97 | 99.21 | 99.91 | 95.16 |
| ACC before defense (%) | 85.65 | 81.24 | 84.95 | 84.95 | 84.36 | 82.38 |
| ASR after AD (%) | 4.77 | 19.63 | 4.04 | 9.18 | 2.52 | 3.18 |
| ACC after AD (%) | 81.17 | 79.16 | 81.68 | 80.34 | 81.95 | 80.73 |
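The ACC and ASR values in the tables above follow the usual backdoor-evaluation definitions, which can be stated as two small helpers. This sketch assumes that ASR is measured only on test samples whose true label differs from the target class. That is a common convention, but the excerpt does not confirm it. `predict` and `add_trigger` are hypothetical callables supplied by the caller.

```python
def accuracy(predict, clean_samples):
    """Clean accuracy (ACC, %): share of clean test samples classified correctly."""
    correct = sum(1 for x, y in clean_samples if predict(x) == y)
    return 100.0 * correct / len(clean_samples)

def attack_success_rate(predict, samples, add_trigger, target_label):
    """Attack success rate (ASR, %): share of triggered test samples, excluding
    those already in the target class, that the model assigns to target_label."""
    eligible = [x for x, y in samples if y != target_label]
    hits = sum(1 for x in eligible if predict(add_trigger(x)) == target_label)
    return 100.0 * hits / len(eligible)
```

A successful defense drives ASR toward zero while keeping ACC close to its pre-defense value, which is the trade-off the before/after rows report.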


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, H.; Zhu, W.; Sun, Z.; Cao, M.; Liu, W. FMDL: Federated Mutual Distillation Learning for Defending Backdoor Attacks. Electronics 2023, 12, 4838. https://doi.org/10.3390/electronics12234838
