1. Introduction

With the rapid development of modern manufacturing, automation technology is being used in an increasing number of scenarios. Because the environment inside cigarette factories is complex and dynamic, mechanical transportation and manual operation are no longer sufficient to meet production needs. Automated guided vehicles (AGVs) can improve efficiency and quality in cigarette factories, as they run autonomously according to a program and transfer items back and forth during cigarette production. However, obstacles such as pillars, packaging boxes, and other production equipment often hinder the movement of AGVs within cigarette factories. These obstacles can block AGV operation, and in severe cases an AGV may collide with equipment or employees, leading to serious accidents and production delays. Therefore, it is necessary to study an efficient AGV obstacle avoidance system for the complex and dynamic conditions of the tobacco industry.

AGV obstacle avoidance technologies have been explored and developed in different ways. Gonzalez et al. [1] introduced the obstacle avoidance techniques implemented in the intelligent vehicle literature and discussed their contributions, providing a comprehensive reference for studies in related fields. At present, sensor-based obstacle avoidance is the most commonly used technique. Sensors such as laser rangefinders, ultrasonic sensors, infrared sensors, and visual sensors detect the position, shape, and distance of objects around the AGV, and this information is used to decide whether the objects must be avoided. Collision avoidance is considered a key issue in mobile robot navigation. Toan Van Nguyen et al. [2] extracted long lines from 2D laser scans to detect static obstacles. Furthermore, they combined the Kalman filter with the global nearest neighbor (GNN) method to track the position and velocity of dynamic obstacles and to obtain the spatial information of the obstacles. Zheng et al. [3] used a speed estimation method based on polynomial fitting to ensure the flight safety of unmanned aerial vehicles. The position of each point scanned by LiDAR was estimated, and the distorted point clouds were corrected. A clustering algorithm based on relative distance and density (CBRDD) was then used to cluster the non-uniform density point clouds and obtain the position information of obstacles. Zhou et al. [4] selected the STM32F207 as the main control chip and used peripheral devices such as grayscale sensors, ultrasonic sensors, infrared sensors, cameras, and wireless modules, together with the μC/OS-II real-time operating system, to carry out AGV position data acquisition, trajectory tracking, voltage acquisition and conversion, RS232 serial communication, state data storage, I/O control, and AGV modeling. Liu et al. [5] proposed a machine-vision-based identification method for rice field stems to address the identification of turning positions in the visual navigation of rice planting machines. First, the distortion parameters were obtained through camera calibration and the original image was corrected; then the deviation of the average grayscale value of each image line was calculated using Python to determine whether obstacles appear. Binarization and morphological processing were applied to the obstacle image, and the image was scanned in the height direction to obtain the feature points for fitting the obstacle boundary line. Finally, the least squares method was used to fit the feature points and obtain the obstacle boundary line. A single sensor rarely gives ideal measurements for obstacle avoidance; in practical applications, other sensors are therefore often needed for compensation in order to achieve the best detection of the surrounding environment. Wang et al. [6] established a motion model for differential AGV navigation that adopts an inertial guidance method based on multiple sensors. A Kalman filtering multi-sensor scheme was built from encoders, gyroscopes, acceleration sensors, ultrasonic sensors, and infrared sensors, and a data fusion navigation and obstacle avoidance model and algorithm were proposed.

Obstacle avoidance based on path planning with environmental simulation uses preset paths to avoid obstacles. This method reduces the use of sensors and improves motion efficiency, but it requires accurate environmental modeling and path planning; otherwise, AGVs may collide with obstacles. Xu et al. [7] proposed an improved artificial potential field method for optimizing the obstacle avoidance path planning of robotic arms in three-dimensional space. The target can leave the local minimum point into which the algorithm falls, while avoiding obstacles and following a shorter feasible path along the repulsive equipotential surface for local optimization. Ma et al. [8] proposed a new method for planning the spraying path across multiple irregular and discontinuous working regions. An improved grid method is adopted to reduce the transition work path of irregular work areas on the basis of traditional grid methods, and the shortest total flight path between multiple discontinuous regions is designed using an improved ant colony algorithm. Wang et al. [9] proposed a path planning method based on an improved A* algorithm that accounts for the impact of scenic road conditions on route and road cost. The optimal scenic area route is planned by comprehensively evaluating the weight of each extension node in the grid scenic area. Firstly, the heuristic function of the A* algorithm is exponentially attenuated and weighted to improve the computational efficiency of the algorithm. Secondly, factors affecting road conditions are introduced into the evaluation function in order to increase the practicality of the A* algorithm. As a result, the improved A* algorithm effectively reduces computational time and road costs. Tao et al. [10] studied the AGV path planning problem for a single production line in a workshop. A mathematical model with the shortest transportation time as the objective function is established, and an improved particle swarm optimization (IPSO) algorithm is proposed to obtain the optimal path. To solve path planning problems, the researchers proposed an encoding method based on this algorithm, designed a crossover operation to update particle positions, and adopted a mutation mechanism to keep the algorithm from falling into local optima. Huang et al. [11] used a novel motion planning and tracking framework for automated vehicles, which relied on the artificial potential field elaborated resistance network approach. They provided several case studies to show the effectiveness of the proposed framework.

With the development of artificial intelligence technology, more researchers have applied it to the motion planning of automated vehicles [12,13]. For example, Akopov et al. [14,15] proposed a novel parallel real-coded genetic algorithm and combined it with fuzzy clustering to approximate the optimal parameters of a multiagent fuzzy transportation system. They demonstrated that the proposed method effectively improves the maneuverability of automated vehicles. Deep learning uses its feature-learning ability and accelerated training techniques to recognize and analyze objects in the environment, which helps AGVs avoid obstacles [16]. Target detection algorithms such as YOLO, Faster R-CNN, and SSD are widely used in AGV obstacle avoidance systems. These algorithms detect and recognize objects in real time, providing efficient and accurate obstacle avoidance solutions for AGVs. Yu [17] studied sonar image target detection based on deep learning and designed algorithms for improvement and functional implementation in response to the current shortcomings and needs of the field. First, various methods for acoustic image denoising based on multi-resolution tools were proposed; next, the natural image was divided into blocks at an appropriate rate according to the changes in the sampling matrix; finally, underwater natural images were measured. Su et al. [18] studied neural network and convolutional neural network algorithms in deep learning and constructed an object detection system based on these two algorithms to test treated sewage. Xiao et al. [19] optimized and validated an improved network that retains the original basic features of the YOLOv3 detection network, in the context of intestinal endoscopy. By comparing the hash values of the images, redundant images are filtered out, and concise final detection results are presented to the doctors. Wang et al. [20] proposed a smart city object detection algorithm that combines deep learning and feature extraction to handle occlusion in smart cities. An adaptive strategy optimizes the search window of the algorithm on the basis of the traditional SSD algorithm. A weighted correlation feature fusion method is combined with the algorithm according to changes in the target working conditions, which improves the accuracy of the objective function. Zhang et al. [21] proposed a deep learning-based method for detecting and tracking humans in track and field. Background subtraction based on an adaptive mixed Gaussian background model was used to detect targets. The video image was denoised and smoothed, and the holes in the foreground area were removed by morphological filtering. The connected areas of the binary image were analyzed to obtain their number and location. The area ratio and length-width ratio of the human body were used to classify and identify human bodies, completing the detection of human track and field targets. Building on the deep learning structure, the method combined the deep learning detection results with LK tracking. PN learning was used to modify the parameters of the stacked autoencoder, which avoids detection errors in deep learning, and the track and field tracking of humans was finally realized. Deep learning object detection algorithms are widely used in underwater image detection, medical image detection, smart city object detection, and other fields, and have significant advantages in universality and applicability.

In summary, to address the complex and dynamic environment inside the cigarette factory, this paper proposes an AGV obstacle avoidance system that combines the grid method with a deep learning algorithm. Deep learning serves as the object detection algorithm that detects obstacles for AGVs in real time. The grid method is suitable for many irregular and discontinuous working areas, as it properly handles scenes containing many obstacles and many restrictions.

The structure of this article is as follows: the background and research status are introduced in Section 1; the proposed AGV obstacle avoidance system is introduced in Section 2; the on-site test results are described in Section 3; and the conclusions are drawn in Section 4.

2. Materials and Methods

2.1. Working Principle of AGV Obstacle Avoidance System

The AGV obstacle avoidance system in this paper is mainly composed of a camera, a visual AI platform, a demonstration system, an AGV scheduling system, and an AGV car controller. The system uses a deep learning object detection algorithm to detect obstacles. At the same time, a grid-based path planning method generates a feasible path for the AGV, and the scheduling system controls the movement of the car to avoid obstacles. The AGV obstacle avoidance application architecture and data flow are shown in Figure 1. The detailed flow is as follows:

- (1) Video images of the AGV passage in the tobacco production workshop are obtained through cameras.

- (2) Once the images reach the visual AI basic platform, the object detection algorithm analyzes whether they contain obstacles, and the detected obstacles are pushed to the AGV scheduling system.

- (3) After the obstacle information is obtained, the grid method is used to plan the route, and the optimal route is pushed to the AGV.

- (4) The real-time position information of the AGV is pushed to the control module on the AGV through the RS232 interface. The AGV performs the operation corresponding to the control instructions, and the information is sent to the basic platform through the 5G network for real-time display of the AGV's position and video.

- (a) Camera module:

Image acquisition device (model: DS-2CD2646FWDA2-XZS2.7–12 mm): 2-million-pixel 1/2.7″ CMOS black-light-level cylindrical network camera; minimum illumination: 0.0005 lx @ (F1.0, AGC ON), 0 lx with IR; wide dynamic range: 120 dB; maximum image size: 2560 × 1440; 1 built-in microphone; minimum illumination, color: 0.005 lx, black and white: 0.0005 lx; maximum brightness discrimination level (grayscale level): no less than 11 levels; IP67 dust and water protection.

- (b) Visual AI basic platform module:

Computing power equipment (Huawei, Shenzhen, China, 2288XV5 12LFF): CPU: Xeon Silver 4208; graphics card: NVIDIA Tesla T4; memory: 128 GB; hard disk: 2 TB; network: dual-port 10 Gigabit Ethernet card.

- (c) AGV trolley module:

Brand: Rocla, Järvenpää, Finland; model: AWT16F S1400; guidance mode: laser; communication mode: 5G/Wi-Fi; transfer mode: fork type; walking speed: 0~2 m/s; minimum turning radius: 1800 mm; repetition accuracy: ±5 mm; safety protection: safety laser obstacle avoidance and safety contact.

Autonomous obstacle avoidance of the AGV is realized through the cooperation of these systems: the camera acquires scene information, the visual AI platform runs the detection algorithm, the demonstration system provides the visual display, and the AGV's own scheduling system and controller coordinate its movement.

2.2. Obstacle Detection

The system uses deep learning object detection algorithms to detect obstacles in real time. Object detection algorithms such as YOLO, Faster R-CNN, and SSD can detect and recognize objects in real time with high accuracy. The system uses the YOLO algorithm to detect obstacles in the environment, as shown in Figure 2. The YOLO algorithm divides the image into a grid of cells and detects objects in each cell. It can detect multiple objects in real time and provide accurate object information, such as position and size.
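The grid division can be illustrated with a minimal sketch. It assumes the 49-cell (7 × 7) grid with two boxes per cell described in the prediction workflow of this section, and the 2560 × 1440 maximum frame size of the camera; the function name and the clamping at the image border are illustrative choices, not details taken from the system itself.

```python
# Illustrative sketch only: S and B follow the 49-cell / two-box setup
# described in this section; the function name is hypothetical.
S = 7  # the image is divided into S x S = 49 grid cells
B = 2  # bounding boxes predicted by each cell


def responsible_cell(cx, cy, img_w, img_h, s=S):
    """Return (row, col) of the grid cell that contains an object's centre."""
    col = min(int(cx / img_w * s), s - 1)  # clamp centres on the right edge
    row = min(int(cy / img_h * s), s - 1)  # clamp centres on the bottom edge
    return row, col


# Number of candidate boxes produced for one image.
total_boxes = S * S * B
```

With these assumptions, an object centred in a 2560 × 1440 frame falls into cell (3, 3), and each frame yields 98 candidate boxes before filtering.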

The YOLO (You Only Look Once) algorithm is an object detection algorithm based on convolutional neural networks. The YOLO algorithm has advantages such as fast speed, high accuracy, and end-to-end training, compared to traditional object detection algorithms such as R-CNN.

The YOLO algorithm uses a single neural network to simultaneously predict the category and position information of multiple targets in the image. The prediction workflow is as follows:

Step 1. Feed the image into the network for processing.

Step 2. Divide the image into 49 grid cells (7 × 7); each cell predicts two bounding boxes.

Step 3. Multiply the class information predicted by each grid cell by the confidence information predicted by its bounding boxes to obtain, for each candidate box, the probability that it contains a specific object combined with the degree of position overlap, PrIOU:

$$\Pr(\mathrm{Class}_i \mid \mathrm{Object}) \times \Pr(\mathrm{Object}) \times \mathrm{IOU}^{\mathrm{truth}}_{\mathrm{pred}} = \Pr(\mathrm{Class}_i) \times \mathrm{IOU}^{\mathrm{truth}}_{\mathrm{pred}} \tag{1}$$

PrIOU is the value on the right-hand side of Equation (1).

Step 4. Sort the PrIOU values, remove those below the threshold, and then perform non-maximum suppression for each category.

Non-maximum suppression here means selecting the candidate box with the highest confidence and deleting any other candidate box whose overlapping area (IOU) with the current highest-scoring candidate box exceeds a certain threshold.
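Steps 3 and 4 can be sketched in a few lines of Python. This is a minimal illustration for a single category, not the implementation used on the visual AI platform: the box format `(x1, y1, x2, y2)`, the function names, and the threshold values 0.2 and 0.5 are all assumptions made for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_thr=0.2, iou_thr=0.5):
    """Return indices of the boxes kept for ONE category, best score first."""
    # Step 4a: drop low scores, then sort the remainder in descending order.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    kept = []
    while order:
        best = order.pop(0)
        kept.append(best)
        # Step 4b: delete candidates that overlap the current best too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thr]
    return kept


# Step 3: class-specific score = class probability x box confidence.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
class_prob = [0.9, 0.8, 0.7, 0.5]
box_conf = [0.9, 0.9, 0.8, 0.2]
scores = [p * c for p, c in zip(class_prob, box_conf)]
kept = nms(boxes, scores)
```

In this example the fourth box is removed by the score threshold and the second box is suppressed because it overlaps the highest-scoring box, so `kept` contains indices 0 and 2.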

2.3. Path Planning

In the system, grid-based path planning generates feasible paths for the AGVs. The layout of the cigarette factory is shown in Figure 3 (left). In Figure 3, the yellow box represents the AGV path, the black box represents the packaging car room, and the red box represents the AGV platform within the packaging unit. The packaging unit is shown in Figure 3 (right). If a unit is the target site, the AGV carries out its transportation task by following the AGV path to the platform of that unit. For path planning, the entire rolling car room was divided into four areas with a total of 16 grids based on the factory environment. The obstacle avoidance area diagram is shown in Figure 4. The A* algorithm was used to generate the shortest path from the current position of the AGV to the target position while accounting for obstacles and constraints in the grid map, as shown in Figure 5.

With good performance and accuracy, the A* (A Star) algorithm is commonly used for path finding and graph traversal.

The A* algorithm calculates the priority of each node using Formula (2):

$$f(n) = g(n) + h(n) \tag{2}$$

where f(n) is the comprehensive priority of node n; when choosing the next node to traverse, the node with the highest comprehensive priority (minimum value of f(n)) is always chosen. g(n) is the cost of the distance between node n and the starting point; h(n) is the estimated cost of the distance from node n to the endpoint, which is the heuristic function of the A* algorithm.

During operation, the A* algorithm repeatedly selects the node with the lowest value of f(n) (the highest priority) from the priority queue as the next node to traverse.

In addition, the A* algorithm uses two sets to represent the nodes to be traversed and the nodes that have already been traversed, which are commonly referred to as open_set and close_set.
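The procedure described above can be sketched as follows. This is a generic textbook A* on a 4-connected grid, not the planner deployed in the factory: the unit step cost, the Manhattan-distance heuristic, the convention that cells equal to 1 are blocked, and the 4 × 4 example map are assumptions chosen for illustration.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid; cells equal to 1 are blocked."""
    rows, cols = len(grid), len(grid[0])

    def h(node):  # Manhattan distance, admissible for 4-connected moves
        return abs(node[0] - goal[0]) + abs(node[1] - goal[1])

    open_set = [(h(start), 0, start)]  # priority queue of (f, g, node)
    close_set = set()                  # nodes that have been traversed
    g_cost = {start: 0}
    parent = {start: None}

    while open_set:
        _, g, node = heapq.heappop(open_set)  # lowest f(n) first
        if node in close_set:
            continue  # stale queue entry for an already expanded node
        if node == goal:
            path = []
            while node is not None:  # walk back through the parents
                path.append(node)
                node = parent[node]
            return path[::-1]
        close_set.add(node)
        r, c = node
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue  # outside the map or occupied by an obstacle
            new_g = g + 1  # g(n): cost from the start
            if new_g < g_cost.get((nr, nc), float("inf")):
                g_cost[(nr, nc)] = new_g
                parent[(nr, nc)] = node
                # f(n) = g(n) + h(n), as in Formula (2)
                heapq.heappush(open_set, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # the goal cannot be reached


# Hypothetical 4 x 4 map with three blocked cells.
grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
path = a_star(grid, (0, 0), (3, 3))
```

The returned path starts at `(0, 0)`, ends at `(3, 3)`, and visits seven cells, which is the minimum possible on this map. When obstacles cut the goal off completely, the function returns `None`.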

2.4. Obstacle Avoidance Control

The AGV scheduling system was used to adjust the speed and direction of the AGV. By communicating with the control module of the AGV, the scheduling system sends control instructions to the AGV, and controls parameters such as the movement and speed of the AGV. The AGV scheduling system can achieve the following aspects of control:

- (1) Motion control: the scheduling system controls the AGV's direction of travel (forward, backward, left turn, right turn, etc.) in order to carry out path planning and task scheduling.

- (2) Speed control: the scheduling system adjusts the driving speed of AGVs according to task requirements, for example by accelerating or decelerating.

- (3) Parking control: the scheduling system controls the stopping and starting of AGVs to carry out tasks such as pausing and resuming.

- (4) Emergency stop control: when an emergency occurs, such as an obstacle or a person in the path, the scheduling system promptly sends emergency stop instructions to avoid accidents.

Through the controls listed above, the scheduling system achieves remote and autonomous control of AGVs, enabling automated and intelligent logistics, which improves production and logistics efficiency and reduces labor and operational costs.

2.5. Rules of AGV Obstacle Avoidance System

2.5.1. Obstacle Discrimination Rules

In this design, the video obtained from the tobacco production workshop camera is streamed to the visual AI basic platform for obstacle identification. The obstacle identification rules are as follows:

- (1) Screen the suspicious obstacles detected by deep learning and discard detections in the pedestrian category;

- (2) Confirm the type of obstacle and obtain the coordinates Oi = {oi1, oi2,…, oim} of the corresponding obstacle, where i represents the large area monitored by the camera. The rules are as follows:

If the obstacle category is AGV, it is directly determined to be an obstacle.

If the obstacle category is other, calculate the obstacle's residence time. It is determined to be an obstacle if the residence time exceeds the threshold.
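The discrimination rules can be expressed as a short filter. The sketch below is illustrative only: the `Detection` record, the category labels, the use of a tracker ID to measure residence time, and the 5 s threshold are assumptions, since the threshold value and data structures are not specified here.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    track_id: int      # hypothetical tracker ID of the detected object
    category: str      # e.g. "agv", "pedestrian", "box"
    first_seen: float  # time (s) at which the object was first detected
    box: tuple         # (x1, y1, x2, y2) in image coordinates


DWELL_THRESHOLD_S = 5.0  # assumed value; the actual threshold is not stated


def confirmed_obstacles(detections, now, dwell_threshold=DWELL_THRESHOLD_S):
    """Drop pedestrians, accept AGVs at once, time-gate everything else."""
    obstacles = []
    for det in detections:
        if det.category == "pedestrian":
            continue  # rule (1): the pedestrian category is filtered out
        if det.category == "agv":
            obstacles.append(det)  # AGVs are obstacles immediately
        elif now - det.first_seen > dwell_threshold:
            obstacles.append(det)  # other objects must stay long enough
    return obstacles
```

Gating non-AGV objects on residence time keeps briefly visible items, such as a box being carried past the camera, from triggering a re-plan.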

2.5.2. Obstacle Positioning Rules

When a suspicious object is identified as an obstacle, it must be located so that its location information can be transmitted to the scheduling system for route planning. The rules for obstacle positioning are as follows:

- (1) Each camera monitors a large area, and each large area is preset with several small cells. The coordinates of the preset small cells are Ai = {aj1, aj2,…, ajn}, where i represents the large area monitored by the camera and j represents the preset number of small cells for each large area.

- (2) After confirming the category of the obstacles, convert the coordinates Oi = {oi1, oi2,…, oim} of the corresponding obstacles to areas Si = {si1, si2,…, sim}.

- (3) Convert the coordinates Ai = {aj1, aj2,…, ajn} of the small cells to areas Qi = {qj1, qj2,…, qjn}.

- (4) Use the area of the obstacles and the area of the small cells to obtain the proportion of each obstacle in each small cell. The formula is as follows:

- (5) Assign the obstacle to the small cell in which its proportion is largest, provided that this proportion is greater than the threshold α.
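One plausible reading of steps (4) and (5) is sketched below. The proportion is taken here to be the intersection area of the obstacle box and a small cell divided by the area of that cell; this definition, the axis-aligned `(x1, y1, x2, y2)` box format, the function names, and the value α = 0.3 are all assumptions, because the formula itself is not reproduced in this text.

```python
def box_area(b):
    """Area of an axis-aligned box (x1, y1, x2, y2); 0 if it is degenerate."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def overlap_ratio(obstacle, cell):
    """Fraction of the cell's area covered by the obstacle (assumed definition)."""
    inter = (max(obstacle[0], cell[0]), max(obstacle[1], cell[1]),
             min(obstacle[2], cell[2]), min(obstacle[3], cell[3]))
    cell_area = box_area(cell)
    return box_area(inter) / cell_area if cell_area > 0 else 0.0


def locate(obstacle, cells, alpha=0.3):
    """Index of the cell with the largest ratio, or None if it is not above alpha."""
    if not cells:
        return None
    ratios = [overlap_ratio(obstacle, c) for c in cells]
    best = max(range(len(cells)), key=lambda j: ratios[j])
    return best if ratios[best] > alpha else None


# Two hypothetical neighbouring cells and an obstacle lying mostly in the second.
cells = [(0, 0, 10, 10), (10, 0, 20, 10)]
cell_index = locate((8, 0, 18, 10), cells)
```

Here the obstacle covers 20% of the first cell and 80% of the second, so it is assigned to the cell at index 1. An obstacle that only grazes the cell corners stays below α and is not assigned to any cell.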

2.5.3. Regional Division and Obstacle Avoidance Routes

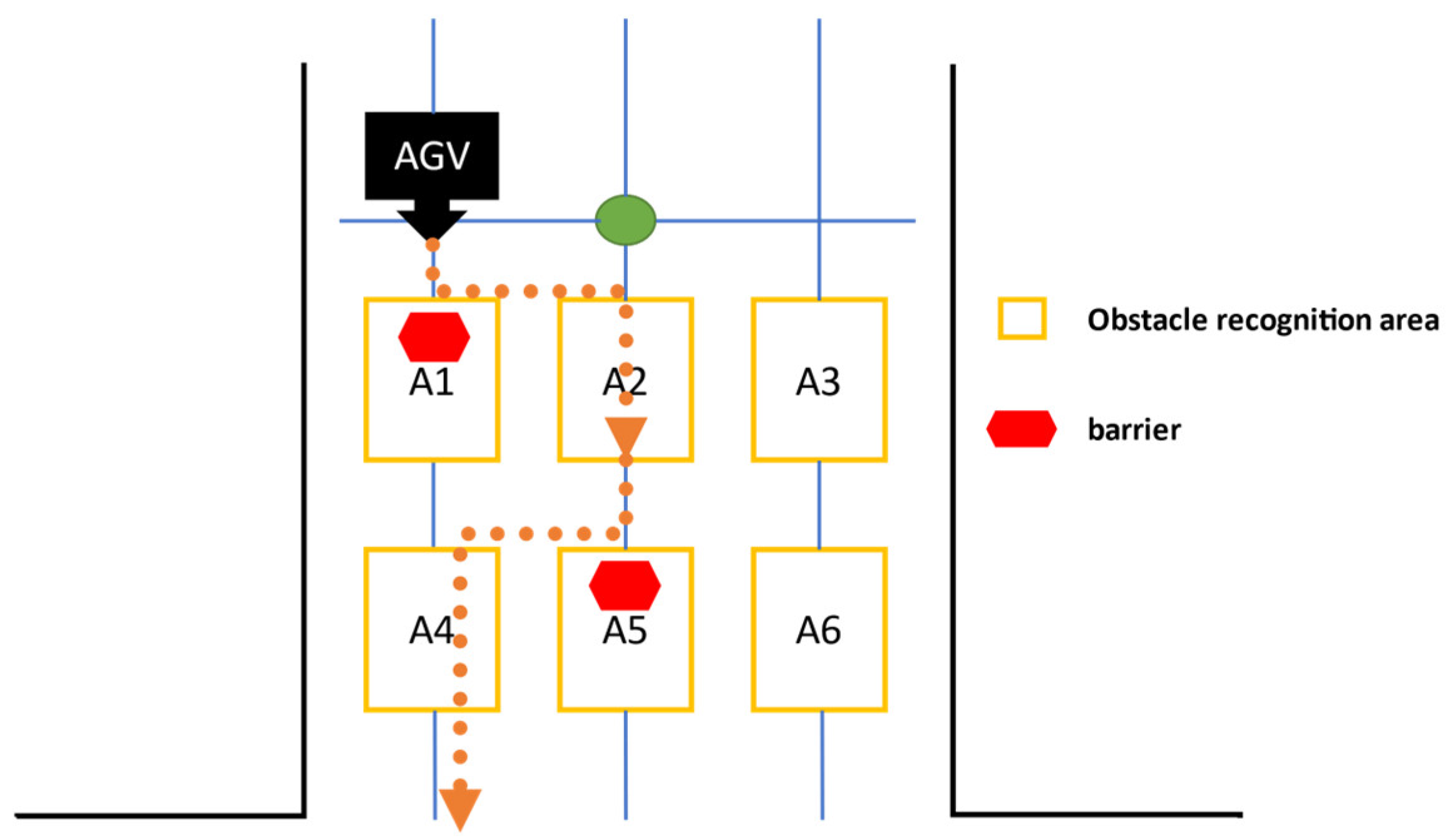

Using the grid method, the AGV obstacle avoidance area of the tobacco production workshop was divided into several large areas with several lines. The left and right sides are the main roads, and the obstacle avoidance line in the middle is shared by the up and down directions. Each area is further subdivided into several small cells that serve as obstacle avoidance areas. The obstacle avoidance route diagram is shown in Figure 6.

2.5.4. Obstacle Avoidance Rules

The AGV conditional automatic navigation obstacle avoidance mechanism defines obstacle avoidance rules for eight situations encountered in the actual production environment.

Obstacle avoidance rule 1: when there are obstacles on the main road and the target platform for AGV delivery is in the same horizontal area as the obstacles, the AGV continues along the main road until the infrared module of the car triggers a stop, and then waits for the obstacles to be removed, as shown in Figure 7.

Obstacle avoidance rule 2: when there are obstacles on the main road but the target platform is in the area in front of the obstacle area, the car follows the main road and delivers goods normally, as shown in Figure 8.

Obstacle avoidance rule 3: when there are obstacles on the main road and the target platform for AGV delivery is in the same area as the obstacles, the car follows the main route until the infrared module of the car triggers a stop, and then waits for the obstacles to be removed, as shown in Figure 9.

Obstacle avoidance rule 4: when there are obstacles on the main road and the target platform is behind the area where the obstacles are located, the car first turns into the obstacle avoidance route and then returns to the main road, as shown in Figure 10.

Obstacle avoidance rule 5: when there are obstacles on both the main road and the obstacle avoidance path, and the obstacles are in the same horizontal area, the car continues on the main road until the infrared module of the car triggers a stop, and then waits for the obstacles on the main road to be removed, as shown in Figure 11.

Obstacle avoidance rule 6: when there are obstacles on both the main road and the obstacle avoidance path, and the obstacles are not in the same horizontal area, the car continues to follow the obstacle avoidance route and then returns to the main route, as shown in Figure 12.

Obstacle avoidance rule 7: when there are obstacles on both the main road and the obstacle avoidance path, and the obstacles are not in the same horizontal area, the car follows the main route, as shown in Figure 13.

Obstacle avoidance rule 8: when there are obstacles on the main road and the target platform is not in that area (obstacles are in A1 or A4, and the target platform is not in Area A), the car follows the main route, as shown in Figure 14.

2.5.5. Rules for Avoidance of AGVs

The AGV conditional automatic navigation obstacle avoidance mechanism also defines avoidance rules for encounters with other AGVs in the actual production environment:

If AGVs travelling in both the up and down directions of the same area need to enter the obstacle avoidance line, the waiting AGV enters the obstacle avoidance line only after the AGV already on it has left, as shown in Figure 15.