A Fuzzy-Based Evaluation of E-Learning Acceptance and Effectiveness by Computer Science Students in Greece in the Period of COVID-19

Abstract

1. Introduction

2. Related Work

3. Evaluation

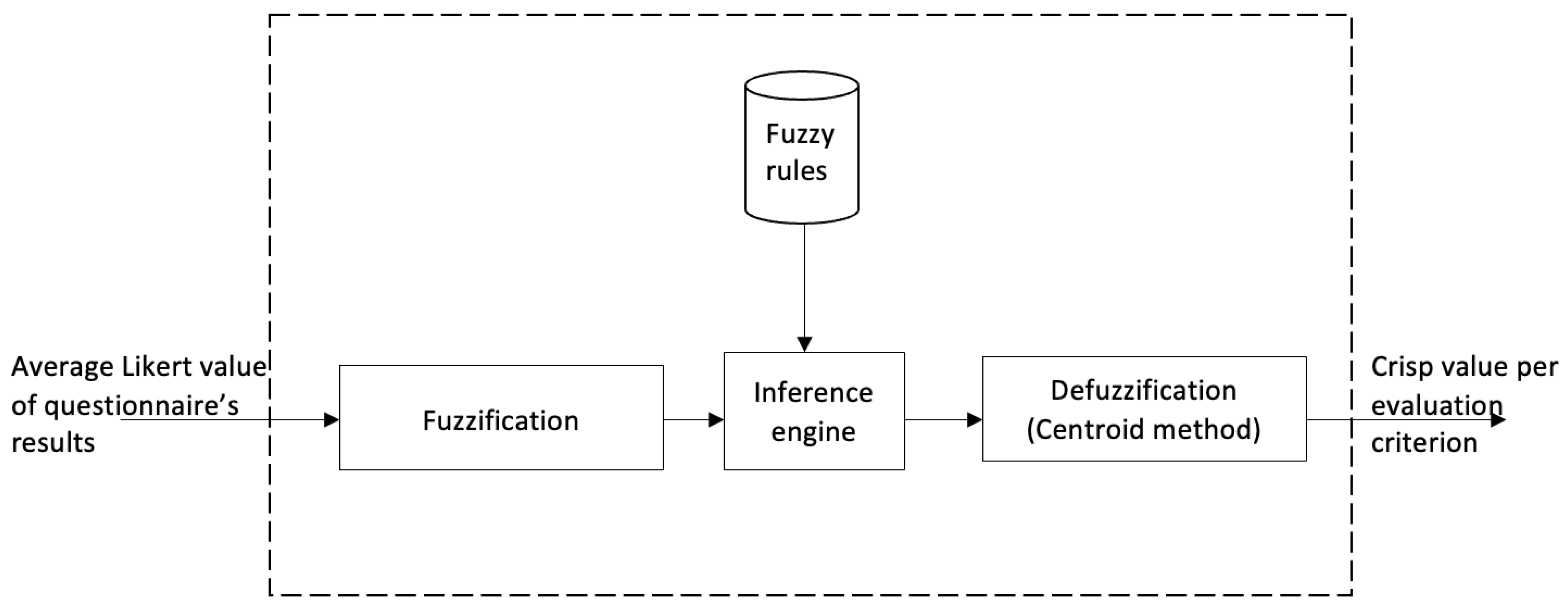

3.1. The Method

3.2. Criteria

- Acceptance: It examines how much the learners like e-learning programs, how willing they are to participate in such programs and how they feel during their enrolment in e-learning programs. This criterion is measured through questions Q1, Q2, Q3 and Q4 of stud_quest and through questions Q1, Q2 and Q3 of instructor_quest.

- Learning effectiveness: It examines how much e-learning improves learning outcomes. In particular, it concerns performance improvement, the learners’ fatigue during the learning process, the contribution of e-learning to the learners’ confidence and self-discipline, and its effect on the planning of courses and learning, and on education in general. This criterion is measured through questions Q5, Q6, Q7, Q8, Q9, Q10, Q11, Q12 and Q13 of stud_quest and through questions Q4, Q5, Q6, Q7, Q8 and Q9 of instructor_quest.

- Engagement: It examines how much e-learning improves the students’ engagement in the learning process and how focused they are while attending an online course. This criterion is measured through questions Q14, Q15, Q16, Q17, Q18 and Q19 of stud_quest and through questions Q10, Q11, Q12, Q13 and Q14 of instructor_quest.

- Socializing and interpersonal relationships in the educational community: It examines the immediacy and the quality of the interpersonal relationships among fellow students and among students and tutors. This criterion is measured through questions Q20, Q21, Q22, Q23 and Q24 of stud_quest and through questions Q15, Q16, Q17 and Q18 of instructor_quest.

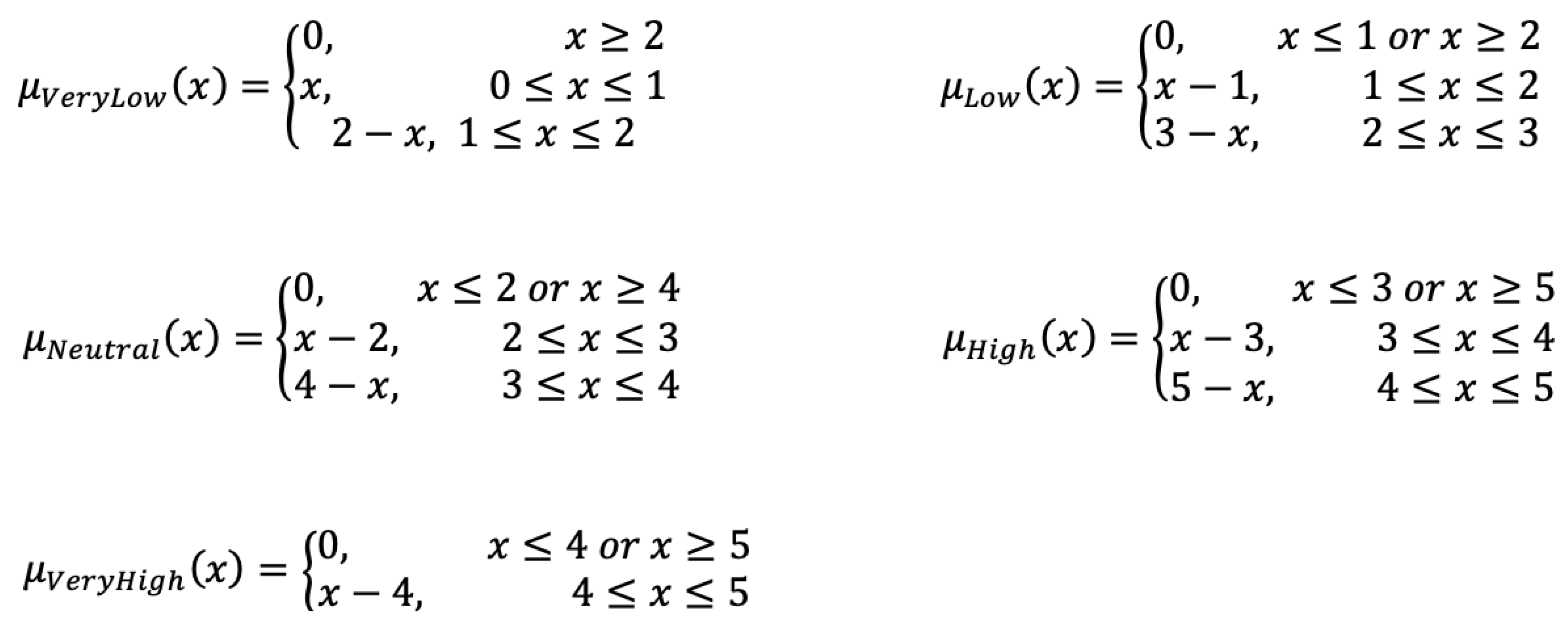

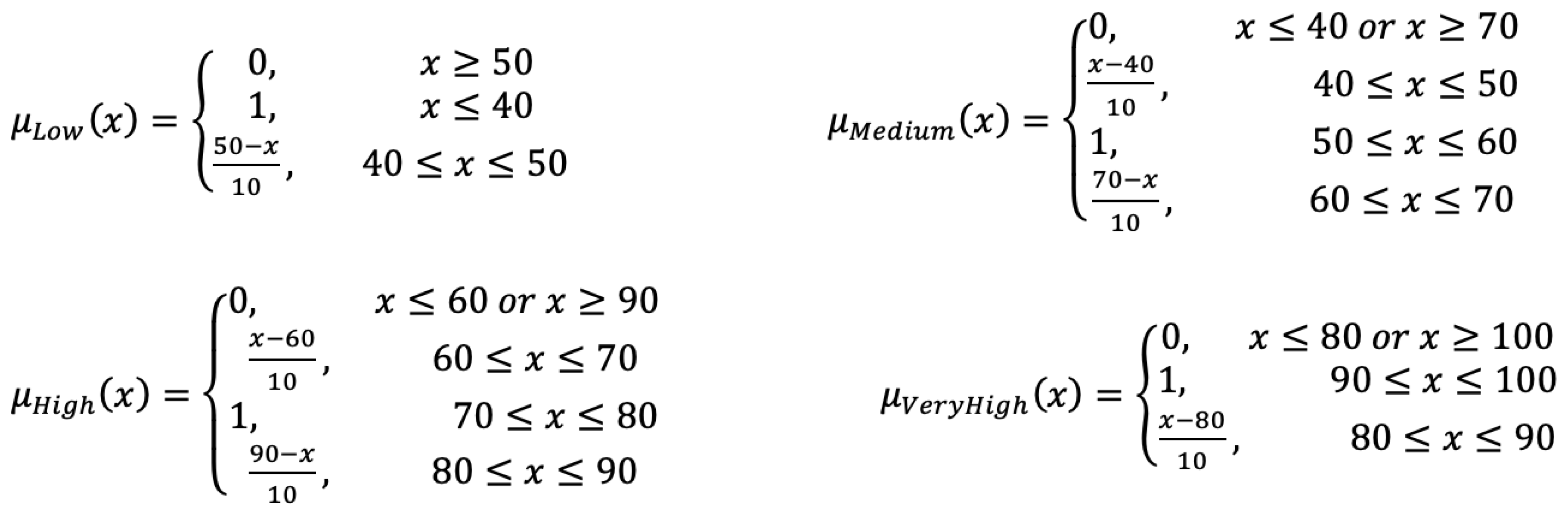

3.3. Fuzzy-Based Evaluation Mechanism

- For stud_quest, 19 fuzzy rules are used to estimate “acceptance”. The rules for questions Q1, Q2 and Q3 have the following format:
  - If Qi is “Very Low”, then “acceptance” is “Low”.
  - If Qi is “Low”, then “acceptance” is “Low”.
  - If Qi is “Neutral”, then “acceptance” is “Medium”.
  - If Qi is “High”, then “acceptance” is “High”.
  - If Qi is “Very High”, then “acceptance” is “Very High”.

- Furthermore, the fuzzy rules for Q4 are:
  - If Q4 is “Low”, then “acceptance” is “Low”.
  - If Q4 is “Medium”, then “acceptance” is “Medium”.
  - If Q4 is “High”, then “acceptance” is “High”.
  - If Q4 is “Very High”, then “acceptance” is “Very High”.

- For stud_quest, 45 fuzzy rules are used to estimate “learning effectiveness”. The rules for questions Q5 and Q8–Q13 have the following format:
  - If Qi is “Very Low”, then “learning effectiveness” is “Low”.
  - If Qi is “Low”, then “learning effectiveness” is “Low”.
  - If Qi is “Neutral”, then “learning effectiveness” is “Medium”.
  - If Qi is “High”, then “learning effectiveness” is “High”.
  - If Qi is “Very High”, then “learning effectiveness” is “Very High”.

- The rules for questions Q6 and Q7, which concern fatigue and for which a higher answer indicates lower learning effectiveness, have the following format:
  - If Qi is “Very Low”, then “learning effectiveness” is “Very High”.
  - If Qi is “Low”, then “learning effectiveness” is “High”.
  - If Qi is “Neutral”, then “learning effectiveness” is “Medium”.
  - If Qi is “High”, then “learning effectiveness” is “Low”.
  - If Qi is “Very High”, then “learning effectiveness” is “Low”.
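The two rule formats can be written as lookup tables. This is a minimal sketch, assuming that each rule has a single antecedent (so its firing strength equals the membership degree of that antecedent) and that rules sharing a consequent are combined with max, as in Mamdani inference; `DIRECT`, `INVERTED` and `fire_rules` are illustrative names, not taken from the paper. The example uses the fuzzified mean answer of stud_quest Q7 (0.47 “Neutral”, 0.53 “High”). The resulting strengths are the clipping levels of the output sets before aggregation and defuzzification, not the values reported in the result tables.

```python
# Direct format: a higher answer means a higher criterion degree.
DIRECT = {
    "Very Low": "Low",
    "Low": "Low",
    "Neutral": "Medium",
    "High": "High",
    "Very High": "Very High",
}

# Inverted format (e.g. Q6 and Q7 of stud_quest): a higher answer means a lower degree.
INVERTED = {
    "Very Low": "Very High",
    "Low": "High",
    "Neutral": "Medium",
    "High": "Low",
    "Very High": "Low",
}


def fire_rules(input_degrees, inverted=False):
    """Map input membership degrees to the firing strength of each output label.

    Each rule has one antecedent, so its strength is that antecedent's degree;
    rules with the same consequent are combined with max.
    """
    table = INVERTED if inverted else DIRECT
    strengths = {}
    for label, degree in input_degrees.items():
        if degree <= 0:
            continue  # a rule whose antecedent has zero membership does not fire
        output_label = table[label]
        strengths[output_label] = max(strengths.get(output_label, 0.0), degree)
    return strengths


# Q7 of stud_quest: 0.47 "Neutral" and 0.53 "High", inverted format.
print(fire_rules({"Neutral": 0.47, "High": 0.53}, inverted=True))  # {'Medium': 0.47, 'Low': 0.53}
```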

- For stud_quest, 30 fuzzy rules are used to estimate “engagement”. The rules for questions Q14, Q15 and Q17 have the following format:
  - If Qi is “Very Low”, then “engagement” is “Low”.
  - If Qi is “Low”, then “engagement” is “Low”.
  - If Qi is “Neutral”, then “engagement” is “Medium”.
  - If Qi is “High”, then “engagement” is “High”.
  - If Qi is “Very High”, then “engagement” is “Very High”.

- The rules for questions Q16, Q18 and Q19 have the following format:
  - If Qi is “Very Low”, then “engagement” is “Very High”.
  - If Qi is “Low”, then “engagement” is “High”.
  - If Qi is “Neutral”, then “engagement” is “Medium”.
  - If Qi is “High”, then “engagement” is “Low”.
  - If Qi is “Very High”, then “engagement” is “Low”.

- For stud_quest, 25 fuzzy rules are used to estimate “socializing & interpersonal relationships in the educational community”. The rules have the following format:
  - If Qi is “Very Low”, then “socializing & interpersonal relationships” is “Low”.
  - If Qi is “Low”, then “socializing & interpersonal relationships” is “Low”.
  - If Qi is “Neutral”, then “socializing & interpersonal relationships” is “Medium”.
  - If Qi is “High”, then “socializing & interpersonal relationships” is “High”.
  - If Qi is “Very High”, then “socializing & interpersonal relationships” is “Very High”.

- For instructor_quest, 15 fuzzy rules are used to estimate “acceptance”. The rules have the following format:
  - If Qi is “Very Low”, then “acceptance” is “Low”.
  - If Qi is “Low”, then “acceptance” is “Low”.
  - If Qi is “Neutral”, then “acceptance” is “Medium”.
  - If Qi is “High”, then “acceptance” is “High”.
  - If Qi is “Very High”, then “acceptance” is “Very High”.

- For instructor_quest, 30 fuzzy rules are used to estimate “learning effectiveness”. The rules for questions Q4 and Q6–Q9 have the following format:
  - If Qi is “Very Low”, then “learning effectiveness” is “Low”.
  - If Qi is “Low”, then “learning effectiveness” is “Low”.
  - If Qi is “Neutral”, then “learning effectiveness” is “Medium”.
  - If Qi is “High”, then “learning effectiveness” is “High”.
  - If Qi is “Very High”, then “learning effectiveness” is “Very High”.

- The rules for question Q5 have the following format:
  - If Qi is “Very Low”, then “learning effectiveness” is “Very High”.
  - If Qi is “Low”, then “learning effectiveness” is “High”.
  - If Qi is “Neutral”, then “learning effectiveness” is “Medium”.
  - If Qi is “High”, then “learning effectiveness” is “Low”.
  - If Qi is “Very High”, then “learning effectiveness” is “Low”.

- For instructor_quest, 25 fuzzy rules are used to estimate “engagement”. The rules for questions Q10–Q13 have the following format:
  - If Qi is “Very Low”, then “engagement” is “Low”.
  - If Qi is “Low”, then “engagement” is “Low”.
  - If Qi is “Neutral”, then “engagement” is “Medium”.
  - If Qi is “High”, then “engagement” is “High”.
  - If Qi is “Very High”, then “engagement” is “Very High”.

- The rules for question Q14 have the following format:
  - If Qi is “Very Low”, then “engagement” is “Very High”.
  - If Qi is “Low”, then “engagement” is “High”.
  - If Qi is “Neutral”, then “engagement” is “Medium”.
  - If Qi is “High”, then “engagement” is “Low”.
  - If Qi is “Very High”, then “engagement” is “Low”.

- For instructor_quest, 20 fuzzy rules are used to estimate “socializing & interpersonal relationships in the educational community”. The rules have the following format:
  - If Qi is “Very Low”, then “socializing & interpersonal relationships” is “Low”.
  - If Qi is “Low”, then “socializing & interpersonal relationships” is “Low”.
  - If Qi is “Neutral”, then “socializing & interpersonal relationships” is “Medium”.
  - If Qi is “High”, then “socializing & interpersonal relationships” is “High”.
  - If Qi is “Very High”, then “socializing & interpersonal relationships” is “Very High”.
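The rule counts stated above equal one rule per input label per question (for example, 9 questions × 5 labels = 45 rules for learning effectiveness in stud_quest). The rule numbers in the result tables (rules 22 and 23 for stud_quest Q5, rule 123 for instructor_quest Q1, rules 186 and 187 for instructor_quest Q14) are consistent with numbering the rules consecutively, first for stud_quest and then for instructor_quest. The sketch below assumes that numbering scheme; `questions`, `STUDENT`, `INSTRUCTOR` and `number_rules` are hypothetical names.

```python
LIKERT = ("Very Low", "Low", "Neutral", "High", "Very High")
Q4_LABELS = ("Low", "Medium", "High", "Very High")  # stud_quest Q4 has four rules


def questions(first, last):
    """Questions Q<first>..Q<last>, each with one rule per Likert label."""
    return [(f"Q{i}", LIKERT) for i in range(first, last + 1)]


# Question-to-criterion assignment of Section 3.2 (the encoding itself is hypothetical).
STUDENT = {
    "acceptance": questions(1, 3) + [("Q4", Q4_LABELS)],
    "learning effectiveness": questions(5, 13),
    "engagement": questions(14, 19),
    "socializing": questions(20, 24),
}
INSTRUCTOR = {
    "acceptance": questions(1, 3),
    "learning effectiveness": questions(4, 9),
    "engagement": questions(10, 14),
    "socializing": questions(15, 18),
}


def number_rules(base, start=1):
    """Number rules consecutively: criterion by criterion, question by question, label by label."""
    numbers = {}
    n = start
    for criterion, qs in base.items():
        for question, labels in qs:
            for label in labels:
                numbers[(criterion, question, label)] = n
                n += 1
    return numbers, n  # n is the next unused rule number


student_rules, next_number = number_rules(STUDENT)
instructor_rules, _ = number_rules(INSTRUCTOR, start=next_number)

# Rules per criterion for each questionnaire.
for name, base in (("stud_quest", STUDENT), ("instructor_quest", INSTRUCTOR)):
    print(name, {c: sum(len(labels) for _, labels in qs) for c, qs in base.items()})

print(student_rules[("learning effectiveness", "Q5", "Neutral")])  # 22
print(instructor_rules[("acceptance", "Q1", "High")])  # 123
```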

- Check which questions concern the criterion.

- For each question:

- 2.1

- Calculate the mean answers.

- 2.2

- Calculate the fuzzy sets they belong to.

- 2.3

- Apply the rules to estimate the degree of participants’ opinions for the criterion.

- 2.4

- If more than one rule is applied, aggregate the fuzzy rules’ results.

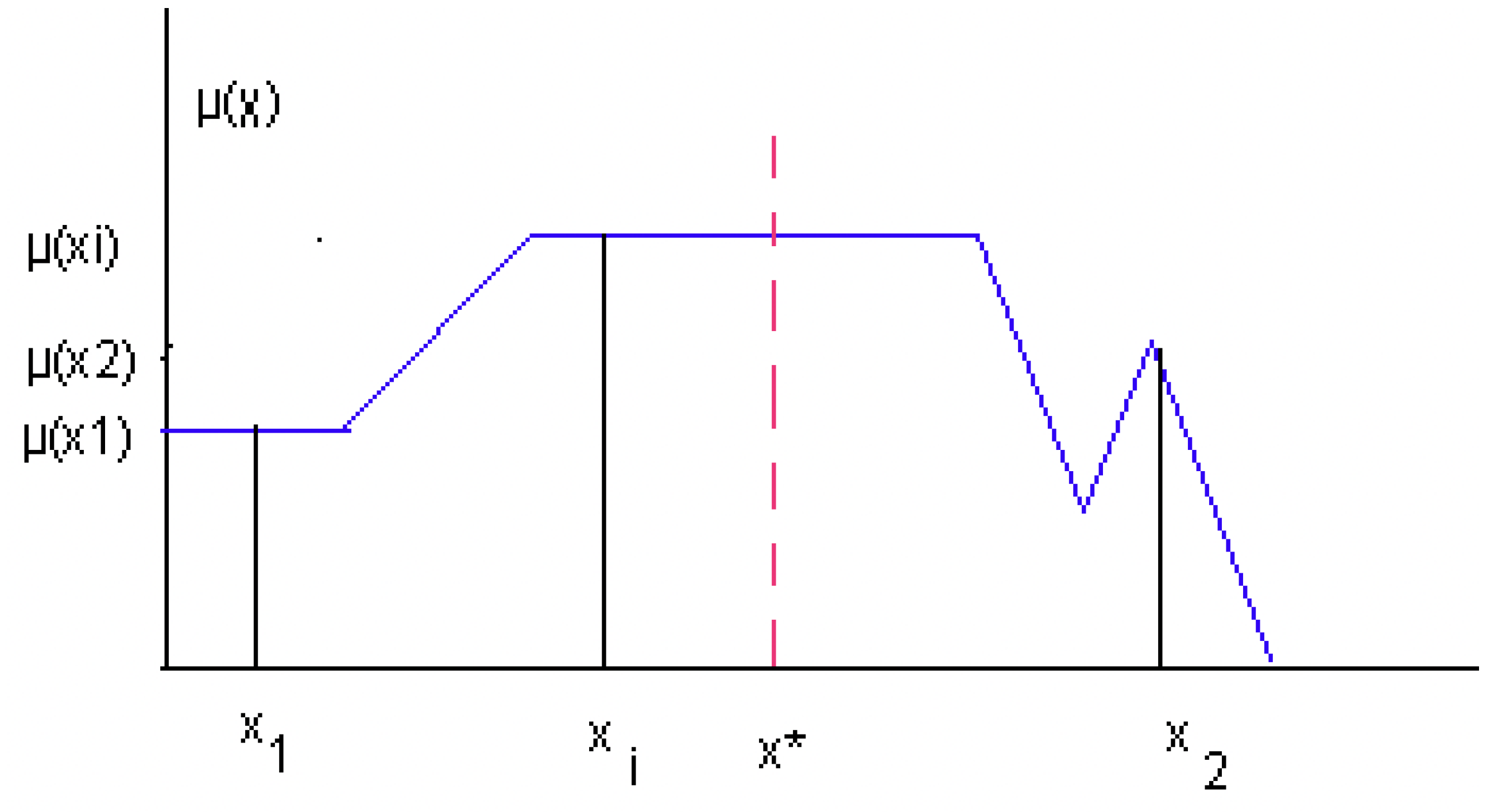

- Use the centroid method [48] to calculate and defuzzify the linguistic value, representing the criterion degree of estimation. The basic principle in the centroid defuzzification method is to find a crisp value x*, for which the total area of the membership function distribution used to represent the aggregated fuzzy rules is divided into two equal masses (Figure 5). The crisp value x* is calculated by the following formula (n is the number of sub-areas, into which the total area is divided, xi is a sample value of x and μ(xi) is the corresponding value of the membership function):
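Steps 2.3 to 3 can be sketched as a small Mamdani-style computation. This is a minimal sketch, not the authors' implementation: it assumes min for rule implication, max for aggregation, and the trapezoidal output partition Low (0, 0, 40, 50), Medium (40, 50, 60, 70), High (60, 70, 80, 90), Very High (80, 90, 90, 100) listed with the tables; `OUTPUT`, `trapezoid`, `aggregated_membership` and `centroid` are illustrative names. It samples n points on [0, 100] and applies the discrete centroid x* = Σ xi μ(xi) / Σ μ(xi). It does not attempt to reproduce the exact output fuzzy sets reported in the result tables.

```python
# Output partition on a 0-100 scale as trapezoids (a, b, c, d).
# Assumption: the first output partition listed with the tables is the one used.
OUTPUT = {
    "Low": (0, 0, 40, 50),
    "Medium": (40, 50, 60, 70),
    "High": (60, 70, 80, 90),
    "Very High": (80, 90, 90, 100),
}


def trapezoid(x, a, b, c, d):
    """Membership of x in the trapezoid (a, b, c, d), where a <= b <= c <= d."""
    if b <= x <= c:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if c < x < d:
        return (d - x) / (d - c)
    return 0.0


def aggregated_membership(x, strengths):
    """Clip each fired output set at its strength (min), then combine the sets with max."""
    return max(
        (min(s, trapezoid(x, *OUTPUT[label])) for label, s in strengths.items()),
        default=0.0,
    )


def centroid(strengths, n=1001, lo=0.0, hi=100.0):
    """Discrete centroid: sum(x_i * mu(x_i)) / sum(mu(x_i)) over n sample points."""
    xs = [lo + i * (hi - lo) / (n - 1) for i in range(n)]
    mus = [aggregated_membership(x, strengths) for x in xs]
    total = sum(mus)
    if total == 0:
        raise ValueError("no rule fired, so there is nothing to defuzzify")
    return sum(x * m for x, m in zip(xs, mus)) / total


# Firing strengths from the direct rule format for a mean answer of 3.88
# (0.12 "Neutral" -> "Medium", 0.88 "High" -> "High").
print(round(centroid({"Medium": 0.12, "High": 0.88}), 1))
```

Because the fired "High" set dominates, the crisp value falls inside the plateau of "High", pulled slightly toward "Medium" by the small "Medium" contribution.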

3.4. The Testbed

3.5. Reliability and Validity

3.6. Results and Discussion

- Acceptance:

- Learning effectiveness:

- Engagement:

- Socializing and interpersonal relationships in the educational community:

4. Discussion and Implications

- Students’ fatigue is higher when attending online courses than when attending face-to-face courses.

- E-learning increases students’ distraction.

- Students’ communication with their fellow students and their interpersonal relationships deteriorate during e-learning.

- A large percentage of university students (72%) experienced positive emotions during e-learning, which indicates a positive learning experience.

- E-learning encourages students to be more confident.

- E-learning increases students’ self-discipline.

- E-learning tools help students plan their courses.

- E-learning facilitates students’ participation in courses, as students miss fewer lessons.

- E-learning facilitates the teaching of computer programming and of lessons that include demonstrations of computer applications.

- E-learning does not affect the students’ performance positively or negatively.

- E-learning does not affect the students’ focus on the course positively or negatively.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. WHO Coronavirus Disease (COVID-19) Dashboard. Available online: https://covid19.who.int/ (accessed on 21 July 2022).

- World Health Organization. Coronavirus Disease (COVID-19) Advice for the Public. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/advice-forpublic (accessed on 29 April 2020).

- World Health Organization. Available online: https://www.who.int/ (accessed on 20 July 2022).

- UNESCO; Global Education Coalition. COVID-19 Education Response. Available online: https://en.unesco.org/covid19/educationresponse/globalcoalition (accessed on 28 May 2020).

- Dhawan, S. Online learning: A panacea in the time of COVID-19 crisis. J. Educ. Technol. Syst. 2020, 49, 5–22. [Google Scholar] [CrossRef]

- Radha, R.; Mahalakshmi, K.; Kumar, V.S.; Saravanakumar, A.R. E-Learning during lockdown of Covid-19 pandemic: A global perspective. Int. J. Control Autom. 2020, 13, 1088–1099. [Google Scholar]

- Toth-Stub, S. Countries Face an Online Education Learning Curve: The Coronavirus Pandemic has Pushed Education Systems Online, Testing Countries’ Abilities to Provide Quality Learning for All. Available online: https://www.usnews.com/news/best-countries/articles/2020-04-02/coronaviruspandemic-tests-countries-abilities-to-create-effective-online-education (accessed on 27 April 2020).

- Murgatroyd, S. COVID-19 and Online Learning. Alberta, Canada, 2020. Available online: https://www.researchgate.net/publication/339784057_COVID-19_and_Online_Learning (accessed on 23 December 2022).

- Maqableh, M.; Alia, M. Evaluation online learning of undergraduate students under lockdown amidst COVID-19 Pandemic: The online learning experience and students’ satisfaction. Child. Youth Serv. Rev. 2021, 128, 106160. [Google Scholar] [CrossRef]

- Pokhrel, S.; Chhetri, R. A literature review on impact of COVID-19 pandemic on teaching and learning. High. Educ. Future 2021, 8, 133–141. [Google Scholar] [CrossRef]

- Abdull Mutalib, A.A.; Jaafar, M.H. A systematic review of health sciences students’ online learning during the COVID-19 pandemic. BMC Med. Educ. 2022, 22, 524. [Google Scholar] [CrossRef]

- Alhammadi, S. The effect of the COVID-19 pandemic on learning quality and practices in higher education—Using deep and surface approaches. Educ. Sci. 2021, 11, 462. [Google Scholar] [CrossRef]

- García-Peñalvo, F.J.; Corell, A.; Rivero-Ortega, R.; Rodríguez-Conde, M.J.; Rodríguez-García, N. Impact of the COVID-19 on higher education: An experience-based approach. In Information Technology Trends for a Global and Interdisciplinary Research Community; García-Peñalvo, F.J., Ed.; IGI Global: Hershey, PA, USA, 2021; pp. 1–18. [Google Scholar]

- Aivazidi, M.; Michalakelis, C. Exploring Primary School Teachers’ Intention to Use E-Learning Tools during the COVID-19 Pandemic. Educ. Sci. 2021, 11, 695. [Google Scholar] [CrossRef]

- Alkhwaldi, A.F.; Abdulmuhsin, A.A. Crisis-centric distance learning model in Jordanian higher education sector: Factors influencing the continuous use of distance learning platforms during COVID-19 pandemic. J. Int. Educ. Bus. 2021, 15, 250–272. [Google Scholar] [CrossRef]

- Adedoyin, O.B.; Soykan, E. Covid-19 pandemic and online learning: The challenges and opportunities. Interact. Learn. Environ. 2020, 1–13. [Google Scholar] [CrossRef]

- Daniel, S.J. Education and the COVID-19 pandemic. Prospects 2020, 49, 91–96. [Google Scholar] [CrossRef]

- Maatuk, A.M.; Elberkawi, E.K.; Aljawarneh, S.; Rashaideh, H.; Alharbi, H. The COVID-19 pandemic and E-learning: Challenges and opportunities from the perspective of students and instructors. J. Comput. High. Educ. 2022, 34, 21–38. [Google Scholar] [CrossRef] [PubMed]

- Aguilera-Hermida, A.P. College students’ use and acceptance of emergency online learning due to COVID-19. Int. J. Educ. Res. Open 2020, 1, 100011. [Google Scholar] [CrossRef] [PubMed]

- Schrum, M.L.; Johnson, M.; Ghuy, M.; Gombolay, M.C. Four years in review: Statistical practices of Likert scales in human-robot interaction studies. In Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020. [Google Scholar]

- Chrysafiadi, K.; Virvou, M. Fuzzy Logic for Adaptive Instruction in an E-learning Environment for Computer Programming. IEEE Trans. Fuzzy Syst. 2015, 23, 164–177. [Google Scholar] [CrossRef]

- Bhardwaj, N.; Sharma, P. An advanced uncertainty measure using fuzzy soft sets: Application to decision-making problems. Big Data Min. Anal. 2021, 4, 94–103. [Google Scholar] [CrossRef]

- Iqbal, A.; Zhao, G.; Cheok, Q.; He, N. Estimation of Machining Sustainability Using Fuzzy Rule-Based System. Materials 2021, 14, 5473. [Google Scholar] [CrossRef]

- Yang, L.H.; Ye, F.F.; Liu, J.; Wang, Y.M.; Hu, H. An improved fuzzy rule-based system using evidential reasoning and subtractive clustering for environmental investment prediction. Fuzzy Sets Syst. 2021, 421, 44–61. [Google Scholar] [CrossRef]

- Chrysafiadi, K.; Papadimitriou, S.; Virvou, M. Cognitive-based adaptive scenarios in educational games using fuzzy reasoning. Knowl.-Based Syst. 2022, 250, 10911. [Google Scholar] [CrossRef]

- Sharma, A.; Alvi, I. Evaluating pre and post COVID-19 learning: An empirical study of learners’ perception in higher education. Educ. Inf. Technol. 2021, 26, 7015–7032. [Google Scholar] [CrossRef]

- Kapasia, N.; Paul, P.; Roy, A.; Saha, J.; Zaveri, A.; Mallick, R.; Barman, B.; Das, P.; Chouhan, P. Impact of lockdown on learning status of undergraduate and postgraduate students during COVID-19 pandemic in West Bengal, India. Child. Youth Serv. Rev. 2020, 116, 105194. [Google Scholar] [CrossRef]

- Chaturvedi, K.; Vishwakarma, D.K.; Singh, N. COVID-19 and its impact on education, social life and mental health of students: A survey. Child. Youth Serv. Rev. 2021, 121, 105866. [Google Scholar] [CrossRef]

- Elberkawi, E.K.; Maatuk, A.M.; Elharish, S.F.; Eltajoury, W.M. Online Learning during the COVID-19 Pandemic: Issues and Challenges. In Proceedings of the 2021 IEEE 1st International Maghreb Meeting of the Conference on Sciences and Techniques of Automatic Control and Computer Engineering MI-STA, Tripoli, Libya, 25–27 May 2021. [Google Scholar]

- García-Alberti, M.; Suárez, F.; Chiyón, I.; Mosquera Feijoo, J.C. Challenges and experiences of online evaluation in courses of civil engineering during the lockdown learning due to the COVID-19 pandemic. Educ. Sci. 2021, 11, 59. [Google Scholar] [CrossRef]

- Ilieva, G.; Yankova, T.; Klisarova-Belcheva, S.; Ivanova, S. Effects of COVID-19 pandemic on university students’ learning. Information 2021, 12, 163. [Google Scholar] [CrossRef]

- Cindy, N.; Fenwick, J.B., Jr. Experiences with online education during COVID-19. In Proceedings of the 2022 ACM Southeast Conference, Virtual, 18–20 April 2022. [Google Scholar]

- YeckehZaare, I.; Grot, G.; Dimovski, I.; Pollock, K.; Fox, E. Another Victim of COVID-19: Computer Science Education. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1, Providence, RI, USA, 3–5 March 2022. [Google Scholar]

- Roy, A.; Yee, M.; Perdue, M.; Stein, J.; Bell, A.; Carter, R.; Miyagawa, S. How COVID-19 Affected Computer Science MOOC Learner Behavior and Achievements: A Demographic Study. In Proceedings of the Ninth ACM Conference on Learning@Scale, New York, NY, USA, 1–3 June 2022. [Google Scholar]

- Rothkrantz, L. The impact of COVID-19 Epidemic on Teaching and Learning. In Proceedings of the 23rd International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 17–18 June 2022. [Google Scholar]

- Lacave, C.; Molina, A.I. The Impact of COVID-19 in Collaborative Programming. Understanding the Needs of Undergraduate Computer Science Students. Electronics 2021, 10, 1728. [Google Scholar] [CrossRef]

- Zadeh, L.A. Fuzzy Logic = Computing with Words. In Computing with Words in Information/Intelligent Systems 1; Studies in Fuzziness and Soft Computing; Zadeh, L.A., Kacprzyk, J., Eds.; Physica: Heidelberg, Germany, 1999. [Google Scholar]

- Gokmen, G.; Akinci, T.Ç.; Tektaş, M.; Onat, N.; Kocyigit, G.; Tektaş, N. Evaluation of student performance in laboratory applications using fuzzy logic. Procedia-Soc. Behav. Sci. 2010, 2, 902–909. [Google Scholar] [CrossRef]

- Izquierdo, N.V.; Lezama, O.B.P.; Dorta, R.G.; Viloria, A.; Deras, I.; Hernández-Fernández, L. Fuzzy logic applied to the performance evaluation. Honduran coffee sector case. In Proceedings of the International Conference on Swarm Intelligence, Shanghai, China, 17–22 June 2018. [Google Scholar]

- Derebew, B.; Thota, S.; Shanmugasundaram, P.; Asfetsami, T. Fuzzy logic decision support system for hospital employee performance evaluation with maple implementation. Arab. J. Basic Appl. Sci. 2021, 28, 73–79. [Google Scholar] [CrossRef]

- Lin, C.T.; Chiu, H.; Tseng, Y.H. Agility evaluation using fuzzy logic. Int. J. Prod. Econ. 2006, 101, 353–368. [Google Scholar] [CrossRef]

- Vivek, K.; Subbarao, K.V.; Routray, W.; Kamini, N.R.; Dash, K.K. Application of fuzzy logic in sensory evaluation of food products: A comprehensive study. Food Bioprocess Technol. 2020, 13, 1–29. [Google Scholar] [CrossRef]

- Idrees, M.; Riaz, M.T.; Waleed, A.; Paracha, Z.J.; Raza, H.A.; Khan, M.A.; Hashmi, W.S. Fuzzy logic based calculation and analysis of health index for power transformer installed in grid stations. In Proceedings of the 2019 International Symposium on Recent Advances in Electrical Engineering (RAEE), Islamabad, Pakistan, 28–29 August 2019. [Google Scholar]

- Mamdani, E.H.; Assilian, S. An Experiment in Linguistic Synthesis with a Fuzzy Logic Controller. Int. J. Man-Mach. Stud. 1975, 7, 1–13. [Google Scholar] [CrossRef]

- Izquierdo, S.; Izquierdo, L.R. Mamdani Fuzzy Systems for Modelling and Simulation: A Critical Assessment. 2017. Available online: http://dx.doi.org/10.2139/ssrn.2900827 (accessed on 23 December 2022).

- Hamam, A.; Georganas, N.D. A comparison of Mamdani and Sugeno fuzzy inference systems for evaluating the quality of experience of Hapto-Audio-Visual applications. In Proceedings of the 2008 IEEE International Workshop on Haptic Audio Visual Environments and Games, Ottawa, ON, Canada, 18–19 October 2008. [Google Scholar]

- Gilda, K.; Satarkar, S. Review of Fuzzy Systems through various jargons of technology. Int. J. Emerg. Technol. Innov. Res. 2020, 7, 260–264. [Google Scholar]

- Klir, G.; Yuan, B. Fuzzy Sets and Fuzzy Logic; Prentice Hall: Hoboken, NJ, USA, 1995; Volume 4. [Google Scholar]

- Pallant, J. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using IBM SPSS, 6th ed.; Open University Press: Maidenhead, UK, 2016. [Google Scholar]

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334. [Google Scholar] [CrossRef]

- Shrestha, N. Factor analysis as a tool for survey analysis. Am. J. Appl. Math. Stat. 2021, 9, 4–11. [Google Scholar] [CrossRef]

- Bacon, D.R.; Sauer, P.L.; Young, M. Composite reliability in structural equations modeling. Educ. Psychol. Meas. 1995, 55, 394–406. [Google Scholar] [CrossRef]

| No. | Question | Answers | | | | |
|---|---|---|---|---|---|---|
| Q1 | How did your opinion about e-learning change after your participation in e-learning programs during the COVID-19 pandemic? | 1—it got extremely worse | 2 | 3 | 4 | 5—significantly improved |
| Q2 | Would you like to participate in an e-learning program again? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q3 | Would you choose to attend an e-learning course instead of a face-to-face course? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q4 | Note how you felt while attending an online course. | | | | | |
| Q5 | How do you think distance learning has affected your performance? | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q6 | Evaluate your fatigue while attending an online course. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q7 | Evaluate your fatigue while attending an online course in relation to attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q8 | Did e-learning help you in the planning of the courses? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q9 | How confident were you while attending an online course? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q10 | How do you think distance learning has affected your confidence in your participation in the learning process? | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q11 | How self-disciplined were you while attending an online course? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q12 | How do you think distance learning has affected your self-discipline in your participation in the learning process? | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q13 | Evaluate the impact that distance learning has had on your education as a whole. | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q14 | Evaluate how focused you are when attending an online course. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q15 | Did e-learning help you to be more focused on the learning process? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q16 | Evaluate how much your attention is distracted while attending an online course. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q17 | Did e-learning contribute to your distraction from the educational process? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q18 | How many absences did you make during your participation in online courses? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q19 | Evaluate the absences you made during your participation in e-learning in relation to the absences you usually make while attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q20 | Evaluate the communication with your fellow students during e-learning. | 1—extremely bad | 2 | 3 | 4 | 5—excellent |
| Q21 | During your participation in e-learning, the communication with your fellow students: | 1—it got extremely worse | 2 | 3 | 4 | 5—significantly improved |
| Q22 | Was the communication with the tutor direct during the e-learning period? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q23 | Evaluate the immediacy in solving questions/problems during the e-learning period. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q24 | During your participation in e-learning, the communication with the instructor: | 1—it got extremely worse | 2 | 3 | 4 | 5—significantly improved |

| No. | Question | Answers | | | | |
|---|---|---|---|---|---|---|
| Q1 | How do you think that the learners’ opinion about e-learning has changed after their participation in e-learning programs during the COVID-19 pandemic? | 1—it got extremely worse | 2 | 3 | 4 | 5—significantly improved |
| Q2 | Do learners ask you to offer e-learning courses? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q3 | Evaluate the current requests for e-learning courses in relation to the corresponding requests before the COVID-19 pandemic. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q4 | How do you think distance learning has affected the learners’ performance? | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q5 | Evaluate the learners’ fatigue while attending an online course in relation to attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q6 | How confident do you think that the learners were while attending an online course in relation to attending a face-to-face course? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q7 | Evaluate how active the learners were while attending an online course in relation to attending a face-to-face course. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q8 | How do you think distance learning has affected learners’ self-discipline? | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q9 | Evaluate the impact that distance learning has had on the learning process as a whole. | 1—absolutely negative | 2 | 3 | 4 | 5—absolutely positive |
| Q10 | Evaluate the learners’ participation in learning activities while attending an online course in relation to attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q11 | Evaluate the learners’ participation in discussions while attending an online course in relation to attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q12 | Evaluate how focused the learners are when attending an online course. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q13 | Did e-learning help the learners to be more focused on the learning process? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q14 | Evaluate the absences that the learners made during their participation in e-learning courses in relation to the absences they usually make while attending a face-to-face course. | 1—extremely decreased | 2 | 3 | 4 | 5—extremely increased |
| Q15 | Was the communication with the learners direct during the e-learning period? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q16 | Evaluate the immediacy in solving questions/problems during the e-learning period. | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q17 | How willing were the learners to express their opinions and questions? | 1—not at all | 2 | 3 | 4 | 5—very much |
| Q18 | During e-learning courses, the communication between the instructor and the learners: | 1—it got extremely worse | 2 | 3 | 4 | 5—significantly improved |

| Linguistic Value | Fuzzy Partition |
|---|---|
| Very Low | (0, 1, 2) |
| Low | (1, 2, 3) |
| Neutral | (2, 3, 4) |
| High | (3, 4, 5) |
| Very High | (4, 5, 5) |
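Step 2.2 of the evaluation mechanism (finding the fuzzy sets a mean answer belongs to) can be checked numerically against this partition. A minimal sketch, assuming each triple (a, b, c) is a triangular membership function; `LIKERT`, `triangle` and `fuzzify` are illustrative names. It reproduces, for example, the membership vectors reported for the stud_quest mean answers 3.88 (Q1) and 1.82 (Q18).

```python
# Triangular partition (a, b, c) of the 1-5 Likert scale, taken from the table above.
LIKERT = {
    "Very Low": (0, 1, 2),
    "Low": (1, 2, 3),
    "Neutral": (2, 3, 4),
    "High": (3, 4, 5),
    "Very High": (4, 5, 5),
}


def triangle(x, a, b, c):
    """Membership of x in the triangle (a, b, c); b == c gives a right shoulder."""
    if x == b:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if b < x < c:
        return (c - x) / (c - b)
    return 0.0


def fuzzify(mean_answer):
    """Membership degrees of a mean answer in (Very Low, Low, Neutral, High, Very High)."""
    return tuple(round(triangle(mean_answer, *abc), 2) for abc in LIKERT.values())


print(fuzzify(3.88))  # (0.0, 0.0, 0.12, 0.88, 0.0)
print(fuzzify(1.82))  # (0.18, 0.82, 0.0, 0.0, 0.0)
```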

| Linguistic Value | Fuzzy Partition |
|---|---|
| Low | (0, 0, 40, 50) |
| Medium | (40, 50, 60, 70) |
| High | (60, 70, 80, 90) |
| Very High | (80, 90, 90, 100) |

| Linguistic Value | Fuzzy Partition |
|---|---|
| Low | (0, 0, 30, 50) |
| Medium | (30, 50, 60, 70) |
| High | (60, 70, 80, 90) |
| Very High | (80, 90, 100, 100) |

| Evaluation Criterion | Questions | Cronbach’s Alpha | AVE | CR |
|---|---|---|---|---|
| Acceptance | Q1–Q3 | 1.49 | 0.69 | 0.87 |
| Learning effectiveness | Q5–Q13 | 0.77 | 0.82 | 0.97 |
| Engagement | Q14–Q19 | 0.49 | 0.71 | 0.85 |
| Socializing and interpersonal relationships: with fellow students | Q20–Q21 | 0.80 | 0.85 | 0.83 |
| Socializing and interpersonal relationships: with instructors | Q22–Q24 | 0.72 | 0.78 | 0.82 |

| Evaluation Criterion | Questions | Cronbach’s Alpha | AVE | CR |
|---|---|---|---|---|
| Acceptance | Q1–Q3 | 1.21 | 0.63 | 0.84 |
| Learning effectiveness | Q4–Q9 | 0.85 | 0.63 | 0.91 |
| Engagement | Q10–Q14 | 0.54 | 0.59 | 0.72 |
| Interpersonal relationships | Q15–Q18 | 0.78 | 0.51 | 0.80 |
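The usual definitions of the indices in the two reliability tables are: Cronbach’s alpha = k/(k − 1) · (1 − Σ var(item i) / var(total score)) for k items [50]; composite reliability CR = (Σλi)² / ((Σλi)² + Σ(1 − λi²)) [52]; and AVE = Σλi² / k, where λi are standardized factor loadings. The sketch below implements these textbook formulas only. It is not the authors' procedure, which is not shown in this section, and the answers and loadings are hypothetical toy values, not the study's data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items holds one list of scores per question, same respondents in the same order."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


def composite_reliability(loadings):
    """CR from standardized loadings: (sum lam)^2 / ((sum lam)^2 + sum(1 - lam^2))."""
    s = sum(loadings)
    error_variance = sum(1 - lam * lam for lam in loadings)
    return s * s / (s * s + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


# Hypothetical toy data (not the study's): three questions answered by five respondents.
answers = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [3, 5, 2, 4, 1],
]
loadings = [0.80, 0.85, 0.75]  # hypothetical standardized loadings

print(round(cronbach_alpha(answers), 2))  # 0.94
print(round(composite_reliability(loadings), 2), round(average_variance_extracted(loadings), 2))  # 0.84 0.64
```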

| Question | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Acceptance Fuzzy Set | Acceptance Linguistic Value |
|---|---|---|---|---|---|---|
| Q1 | 3.88 | (0, 0, 0.12, 0.88, 0) | Neutral and High | 3 and 4 | (0, 0, 1, 0) | High |
| Q2 | 4.24 | (0, 0, 0, 0.76, 0.24) | High and Very High | 9 and 10 | (0, 0, 1, 0) | High |
| Q3 | 3.44 | (0, 0, 0.56, 0.44, 0) | Neutral and High | 13 and 14 | (0, 1, 0, 0) | Medium |

| Feeling | Count | Percentage |
|---|---|---|
| None | 5 | 6.25% |
| Sadness | 2 | 2.5% |
| Fear | 2 | 2.5% |
| Anger | 4 | 5% |
| Anxiety | 5 | 6.25% |
| Pleasure | 12 | 15% |
| Disappointment | 8 | 10% |
| Interest | 16 | 20% |
| Satisfaction | 14 | 17.5% |
| Excitement | 12 | 15% |

| Question | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Acceptance Fuzzy Set | Acceptance Linguistic Value |
|---|---|---|---|---|---|---|
| Q1 | 4 | (0, 0, 0, 1, 0) | High | 123 | (0, 0, 1, 0) | High |
| Q2 | 3.5 | (0, 0, 0.5, 0.5, 0) | Neutral and High | 127 and 128 | (0, 0.83, 0.17, 0) | Medium and High |
| Q3 | 4.8 | (0, 0, 0, 0.2, 0.8) | High and Very High | 133 and 134 | (0, 0, 0.358, 0.642) | High and Very High |

| Question | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Learning Effectiveness Fuzzy Set | Learning Effectiveness Linguistic Value |
|---|---|---|---|---|---|---|
| Q5 | 3.56 | (0, 0, 0.44, 0.56, 0) | Neutral and High | 22 and 23 | (0, 0.765, 0.235, 0) | Medium and High |
| Q6 | 2.76 | (0, 0.76, 0.24, 0, 0) | Low and Neutral | 26 and 27 | (0, 0.315, 0.685, 0) | Medium and High |
| Q7 | 3.53 | (0, 0, 0.47, 0.53, 0) | Neutral and High | 32 and 33 | (0.86, 0.14, 0, 0) | Low and Medium |
| Q8 | 3.88 | (0, 0, 0.12, 0.88, 0) | Neutral and High | 37 and 38 | (0, 0, 1, 0) | High |
| Q9 | 3.71 | (0, 0, 0.29, 0.71, 0) | Neutral and High | 42 and 43 | (0, 0.44, 0.56, 0) | Medium and High |
| Q10 | 3.82 | (0, 0, 0.18, 0.82, 0) | Neutral and High | 47 and 48 | (0, 0.59, 0.41, 0) | Medium and High |
| Q11 | 3.74 | (0, 0, 0.26, 0.74, 0) | Neutral and High | 52 and 53 | (0, 0.37, 0.63, 0) | Medium and High |
| Q12 | 3.94 | (0, 0, 0.06, 0.94, 0) | Neutral and High | 57 and 58 | (0, 0, 1, 0) | High |
| Q13 | 3.85 | (0, 0, 0.15, 0.85, 0) | Neutral and High | 62 and 63 | (0, 0.06, 0.94, 0) | Medium and High |

| Question | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Learning Effectiveness Fuzzy Set | Learning Effectiveness Linguistic Value |
|---|---|---|---|---|---|---|
| Q4 | 3.4 | (0, 0, 0.6, 0.4, 0) | Neutral and High | 137 and 138 | (0, 1, 0, 0) | Medium |
| Q5 | 3.3 | (0, 0, 0.7, 0.3, 0) | Neutral and High | 142 and 143 | (0.49, 0.51, 0, 0) | Low and Medium |
| Q6 | 3.7 | (0, 0, 0.3, 0.7, 0) | Neutral and High | 147 and 148 | (0, 0.46, 0.54, 0) | Medium and High |
| Q7 | 3.7 | (0, 0, 0.3, 0.7, 0) | Neutral and High | 152 and 153 | (0, 0.46, 0.54, 0) | Medium and High |
| Q8 | 3.9 | (0, 0, 0.1, 0.9, 0) | Neutral and High | 157 and 158 | (0, 0, 1, 0) | High |
| Q9 | 3.6 | (0, 0, 0.4, 0.6, 0) | Neutral and High | 162 and 163 | (0, 0.68, 0.32, 0) | Medium and High |

| Question (stud_quest) | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Engagement Fuzzy Set | Engagement Linguistic Value |
|---|---|---|---|---|---|---|
| Q14 | 3.15 | (0, 0, 0.85, 0.15, 0) | Neutral and High | 67 and 68 | (0, 1, 0, 0) | Medium |
| Q15 | 2.82 | (0, 0.18, 0.82, 0, 0) | Low and Neutral | 71 and 72 | (0.27, 0.73, 0, 0) | Low and Medium |
| Q16 | 3.53 | (0, 0, 0.47, 0.53, 0) | Neutral and High | 77 and 78 | (0.86, 0.14, 0, 0) | Low and Medium |
| Q17 | 2.94 | (0, 0.06, 0.94, 0, 0) | Low and Neutral | 81 and 82 | (0.03, 0.97, 0, 0) | Low and Medium |
| Q18 | 1.82 | (0.18, 0.82, 0, 0, 0) | Very Low and Low | 85 and 86 | (0, 0, 1, 0) | High |
| Q19 | 2.38 | (0, 0.62, 0.38, 0, 0) | Low and Neutral | 91 and 92 | (0, 0.64, 0.36, 0) | Medium and High |
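Every entry in the Rules column of these tables fits a regular numbering pattern: five consecutive rules per question, one for each Likert label from Very Low to Very High, with stud_quest question k using rules 5(k - 1) to 5(k - 1) + 4 and instructor_quest rules shifted by a further 120. This is an inference from the tabulated rows, not a scheme stated by the authors, so the hypothetical helper `rule_number` below refuses any question outside the rows shown (stud_quest Q5 to Q24, instructor_quest Q4 to Q18).

```python
LABELS = ("Very Low", "Low", "Neutral", "High", "Very High")

# Hypothetical offsets inferred from the Rules column, not taken from the paper.
OFFSETS = {"stud_quest": 0, "instructor_quest": 120}
# Questions whose rows were checked against the pattern.
OBSERVED_QUESTIONS = {"stud_quest": range(5, 25), "instructor_quest": range(4, 19)}


def rule_number(questionnaire, question, label):
    """Rule index for a (questionnaire, question, Likert label) triple."""
    if questionnaire not in OFFSETS:
        raise ValueError(f"unknown questionnaire: {questionnaire}")
    if question not in OBSERVED_QUESTIONS[questionnaire]:
        raise ValueError("pattern only checked against the questions in these tables")
    # LABELS.index raises ValueError for an unknown label.
    return OFFSETS[questionnaire] + 5 * (question - 1) + LABELS.index(label)


# Student Q16 has the linguistic value "Neutral and High".
print([rule_number("stud_quest", 16, label) for label in ("Neutral", "High")])  # [77, 78]
```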

| Question (instructor_quest) | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Engagement Fuzzy Set | Engagement Linguistic Value |
|---|---|---|---|---|---|---|
| Q10 | 3.5 | (0, 0, 0.5, 0.5, 0) | Neutral and High | 167 and 168 | (0, 0.88, 0.12, 0, 0) | Medium and High |
| Q11 | 3.5 | (0, 0, 0.5, 0.5, 0) | Neutral and High | 172 and 173 | (0, 0.88, 0.12, 0, 0) | Medium and High |
| Q12 | 3.2 | (0, 0, 0.8, 0.2, 0) | Neutral and High | 177 and 178 | (0, 1, 0, 0) | Medium |
| Q13 | 3 | (0, 0, 1, 0, 0) | Neutral | 182 | (0, 1, 0, 0) | Medium |
| Q14 | 2.3 | (0, 0.7, 0.3, 0, 0) | Low and Neutral | 186 and 187 | (0, 0.53, 0.47, 0) | Medium and High |

| Question (stud_quest) | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Socializing and Interpersonal Relationships Fuzzy Set | Socializing and Interpersonal Relationships Linguistic Value |
|---|---|---|---|---|---|---|
| Q20 | 2.68 | (0, 0.32, 0.68, 0, 0) | Low and Neutral | 96 and 97 | (0.52, 0.48, 0, 0) | Low and Medium |
| Q21 | 2.5 | (0, 0.5, 0.5, 0, 0) | Low and Neutral | 101 and 102 | (0.81, 0.19, 0, 0) | Low and Medium |
| Q22 | 3.62 | (0, 0, 0.38, 0.62, 0) | Neutral and High | 107 and 108 | (0, 0.64, 0.36, 0) | Medium and High |
| Q23 | 3.94 | (0, 0, 0.06, 0.94, 0) | Neutral and High | 112 and 113 | (0, 0.1, 0.9, 0) | Medium and High |
| Q24 | 3.03 | (0, 0, 0.97, 0.03, 0) | Neutral and High | 117 and 118 | (0, 1, 0, 0) | Medium |

| Question (instructor_quest) | Likert Value | Mean Answer Fuzzy Set | Mean Answer Linguistic Value | Rules | Socializing and Interpersonal Relationships Fuzzy Set | Socializing and Interpersonal Relationships Linguistic Value |
|---|---|---|---|---|---|---|
| Q15 | 3.7 | (0, 0, 0.3, 0.7, 0) | Neutral and High | 192 and 193 | (0, 0.46, 0.54, 0) | Medium and High |
| Q16 | 4 | (0, 0, 0, 1, 0) | High | 198 | (0, 0, 1, 0) | High |
| Q17 | 3.7 | (0, 0, 0.3, 0.7, 0) | Neutral and High | 202 and 203 | (0, 0.46, 0.54, 0) | Medium and High |
| Q18 | 3 | (0, 0, 1, 0, 0) | Neutral | 207 | (0, 1, 0, 0) | Medium |
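The per-question output fuzzy sets can also be condensed into a single vector per criterion. One simple, hypothetical way to do this is the element-wise mean of the output sets, sketched here for the instructors' socializing answers (Q15 to Q18). This is not necessarily how the paper combines per-question results; `mean_fuzzy_set` is an illustrative name, and the components are left positional because the tables only name the first three (Low, Medium, High).

```python
def mean_fuzzy_set(fuzzy_sets):
    """Element-wise mean of equal-length membership vectors (hypothetical summary)."""
    if not fuzzy_sets:
        raise ValueError("need at least one fuzzy set")
    length = len(fuzzy_sets[0])
    if any(len(fs) != length for fs in fuzzy_sets):
        raise ValueError("all fuzzy sets must have the same length")
    return tuple(sum(column) / len(fuzzy_sets) for column in zip(*fuzzy_sets))


# Output fuzzy sets for instructor_quest Q15-Q18 (socializing), copied from the table.
socializing_instructors = [
    (0, 0.46, 0.54, 0),
    (0, 0, 1, 0),
    (0, 0.46, 0.54, 0),
    (0, 1, 0, 0),
]
summary = mean_fuzzy_set(socializing_instructors)
print([round(x, 2) for x in summary])  # [0.0, 0.48, 0.52, 0.0]
```

The result is split almost evenly between Medium and High. Because each input vector sums to 1, their mean does too, so the summary remains a valid fuzzy partition.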

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chrysafiadi, K.; Virvou, M.; Tsihrintzis, G.A. A Fuzzy-Based Evaluation of E-Learning Acceptance and Effectiveness by Computer Science Students in Greece in the Period of COVID-19. Electronics 2023, 12, 428. https://doi.org/10.3390/electronics12020428
