1. Introduction

Multi-spectral remote sensing images contain multiple spectral bands, extending from visible light to thermal infrared, and therefore carry rich spatial and spectral information. The contours of land objects in these images are relatively clear, and the bands are correlated with one another. Together, these properties make land surface features easier to distinguish and provide rich data support for the change detection of land objects.

Remote sensing image change detection aims to determine, through quantitative analysis of a pair of remote sensing images acquired over the same region at two different times, whether the surface objects have changed during the intervening period. It reveals the unchanged regions, the changed regions, and the spectral characteristics of the surface objects in the two images. Remote sensing image change detection can effectively update the change information of surface vegetation, buildings and other surface features, and it plays an indispensable role in assessing the level of natural disasters, predicting their development trend, and monitoring land cover information [1,2,3].

Image change detection is an important research direction in computer vision and remote sensing. With the continuous progress of remote sensing technology and image-processing algorithms, detecting changes in images has become increasingly important. Ref. [4] systematically surveys image change detection algorithms; its overview of methods, techniques, and evaluation approaches is an important reference for research in this field. Ref. [5] covers the acquisition, preprocessing, feature extraction, classification and change detection of multispectral satellite images, and provides specialized techniques for multispectral image change detection. Ref. [6] is another book, published by Springer in 2012, that focuses on 2D image change detection methods and presents various techniques and algorithms for analyzing images, including pixel-based and object-based change detection methods. Finally, ref. [7] discusses the approaches and challenges of using multispectral and hyperspectral images in practical change detection applications. It explores the potential of these image types to detect changes in different environments and gives insight into limitations and future research directions. Together, these works cover a wide range of the techniques and methods used in image change detection and are of great significance for the further development of change detection algorithms.

In the field of traditional algorithms, Fung et al. [8] conducted in-depth research on threshold selection for the major change detection algorithms, including image differencing, principal component analysis, and post-classification comparison. Mas [9] used Landsat MultiSpectral Scanner (MSS) images to test image differencing, selective principal component analysis (PCA) [10,11], vegetation index differencing, unsupervised classification of multi-date data, post-classification comparison, and combinations of image enhancement and post-classification comparison. The accuracy of each algorithm was evaluated by calculating the Kappa coefficient, and the merits of the various algorithms in different environments were pointed out. Chen [12] proposed an object-based change detection algorithm. By exploiting the characteristics of remote sensing images with high spatial resolution, object-based change detection can reduce the influence of background information on the detected object changes and further improve the change detection result.
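The Kappa coefficient mentioned above compares the observed agreement between a change map and the reference labels with the agreement expected by chance. The following is a minimal sketch of Cohen's Kappa computed from a confusion matrix; the function name `cohen_kappa` and the example counts are illustrative assumptions and are not taken from the cited studies.

```python
def cohen_kappa(confusion):
    """Cohen's Kappa for a square confusion matrix.

    confusion[r][c] is the number of pixels whose reference class is r
    and whose predicted class is c.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")

    # Observed agreement: fraction of pixels on the diagonal.
    observed = sum(confusion[k][k] for k in range(n_classes)) / total

    # Chance agreement: sum over classes of (row total * column total) / total^2.
    expected = sum(
        sum(confusion[k]) * sum(row[k] for row in confusion)
        for k in range(n_classes)
    ) / (total * total)

    if expected == 1.0:
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


# Hypothetical two-class example (unchanged / changed), for illustration only.
example_confusion = [[40, 10],
                     [10, 40]]
example_kappa = cohen_kappa(example_confusion)  # approximately 0.6
```

With 80% observed agreement and 50% chance agreement, the example yields a Kappa of about 0.6, which shows how the coefficient discounts agreement that would occur by chance.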

With the rise of technologies like artificial intelligence and deep learning, in order to meet the challenges of high-dimensional data sets (higher spatial resolution and more spectral features), complex remote sensing image data structures (nonlinear and overlapping data) and high computational complexity brought by nonlinear optimization, supervised learning requires a large number of training samples, and the robustness of artificial intelligence-based remote sensing image detection models is difficult to guarantee. Zhang et al. [

13] proposed a change detection method based on a deep twinned semantic network framework. This supervised network uses triplet loss function [

14] for training, which can not only directly extract surface features from remote sensing images with multi-scale information, but also extract surface features from remote sensing images. Moreover, the features of inter-class separability and intra-class separability can be obtained from the learning of semantic relations to make the network more robust. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection (STAnet) proposed by Chen et al. [

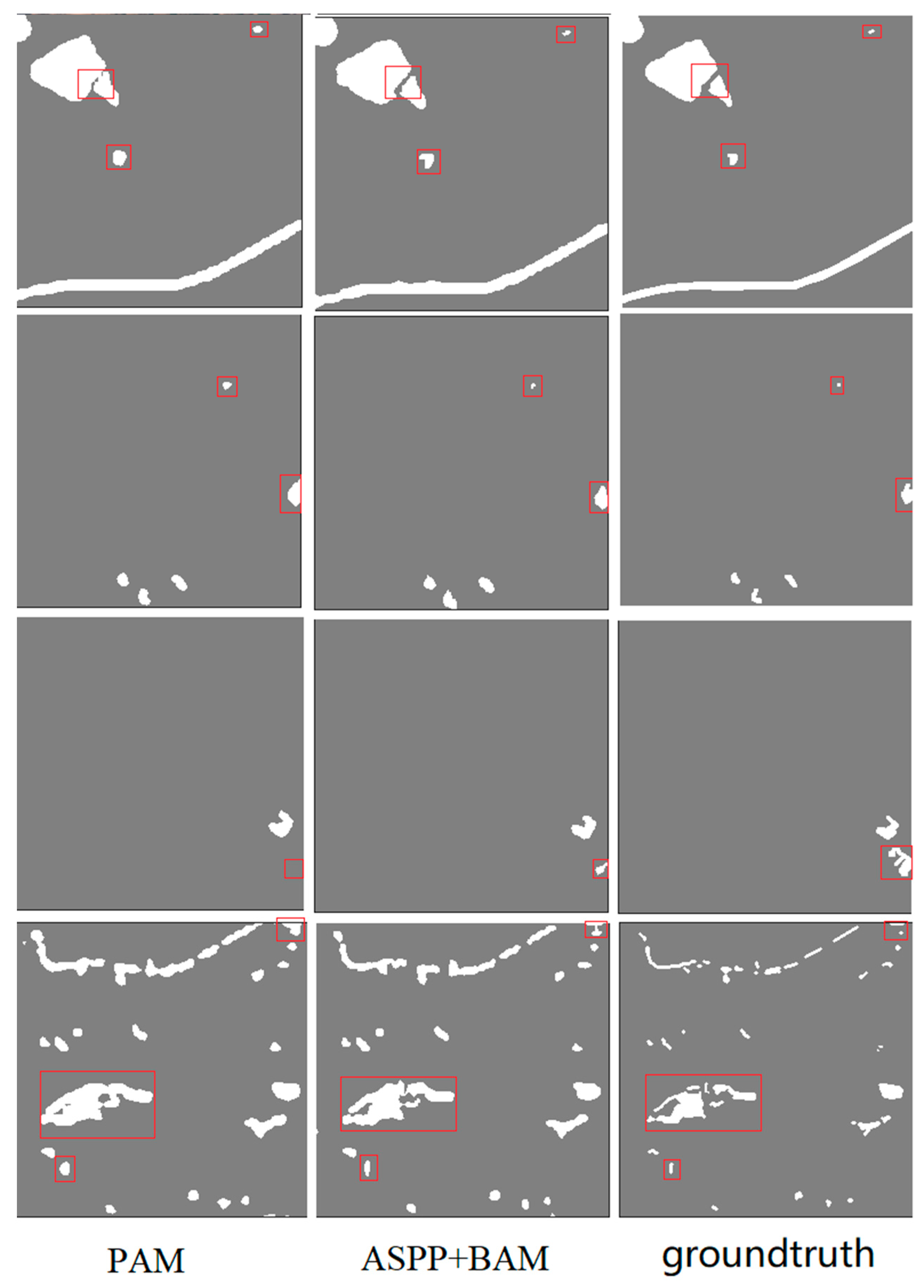

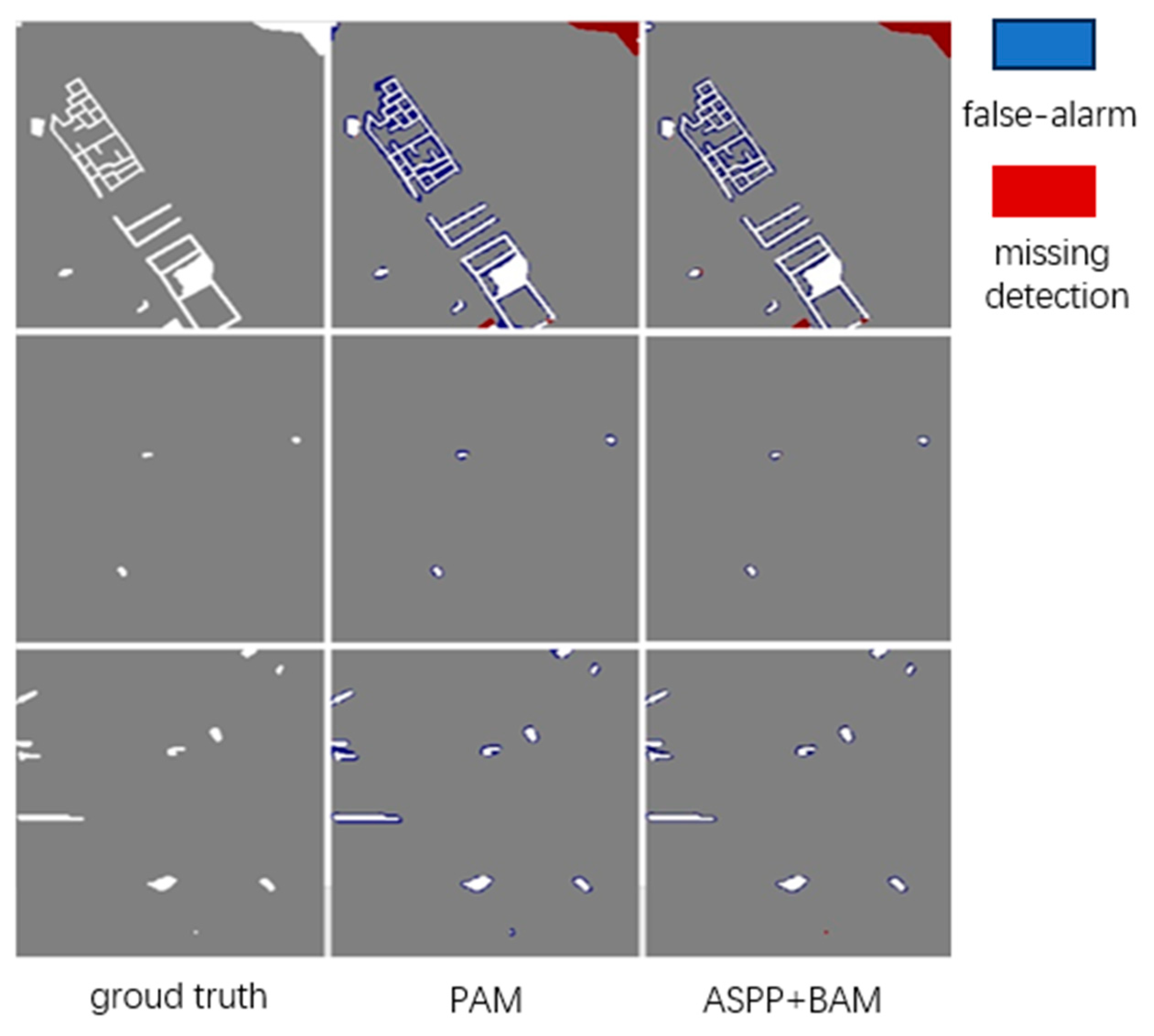

15] designed two kinds of self-attention modules, the basic space-time attention module (BAM) and the pyramidal space-time attention module (PAM). BAM learns to capture the spatio-temporal dependence between any two locations (noting the weights) and calculates the response of each location by weighting the sum of features at all locations in space-time. PAM embedded BAM into a pyramid structure to generate multi-scale representations of attention. However, PAM pyramid attention can combine the shallow information of different areas of the image to generate multi-scale attention feature expression, so that each pixel participates in the self-attention mechanism of sub-regions of different scales. This attention mechanism can effectively extract more fine-grained spatial information, but it is found in the experiment that this attention mechanism can improve the mutual correlation between pixels. As a result, the algorithm model pays more attention to the relationship between multiple pixels in a certain region in the generation of the difference feature map, rather than the single pixel judgment, which leads to the false alarm problem. This feature map, which combines global information and local cross-correlation information, improves the detection rate of changing pixels well, but the high cross-correlation between pixels also leads to the expansion of the contour and the reduction of the detail of the changing region. Therefore, the accuracy will be reduced.
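As a rough illustration of the weighted-sum response described for BAM, the sketch below computes scaled dot-product self-attention over a small set of locations. It is a simplification under stated assumptions: the raw features serve as queries, keys, and values (no learned projections), and the name `self_attention` is hypothetical; it is not the STANet implementation.

```python
import math


def self_attention(features):
    """Scaled dot-product self-attention over a list of feature vectors.

    features: list of N vectors, one per space-time location.
    Returns (responses, weights), where weights[n][m] is the attention
    that location n pays to location m.
    """
    dim = len(features[0])
    scale = math.sqrt(dim)
    responses, all_weights = [], []
    for query in features:
        # Similarity between this location and every location.
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in features]
        # Numerically stable softmax turns similarities into weights.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        norm = sum(exps)
        weights = [e / norm for e in exps]
        # Response: weighted sum of the features at all locations.
        response = [sum(w * value[c] for w, value in zip(weights, features))
                    for c in range(dim)]
        responses.append(response)
        all_weights.append(weights)
    return responses, all_weights


# Three hypothetical locations with two-channel features.
toy_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
toy_responses, toy_weights = self_attention(toy_features)
```

Because every response mixes the features of all locations, neighboring pixels end up with correlated responses, which is the effect the text associates with expanded contours.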

To solve these problems, this paper proposes a change detection algorithm for multi-spectral remote sensing images based on twin neural networks. A feature extraction network based on ResNet-18, similar to the one designed for STANet, is used to extract global information at different scales from the two-phase images. On this basis, an ASPP+BAM pyramid attention module, which combines atrous spatial pyramid pooling (ASPP) with BAM, is designed to retain the fine feature information of both large- and small-scale objects, reduce the attention the model pays to the cross-correlation between neighboring pixels, and help the model better understand images in which the changed region occupies a relatively small number of pixels. At the same time, we propose an adaptive threshold contrastive loss function, in which the threshold is produced by an adaptive threshold generation network. Because the adaptive threshold is obtained by convolving the feature maps, it avoids the problem that the fixed threshold of the baseline algorithm is not fine enough in the changed regions, and it alleviates the false alarm problem.

In summary, the main contributions of this paper include:

We designed a multi-level pyramid attention mechanism (ASPP+BAM) that reduces the attention the model pays to the cross-correlation between neighboring pixels. The model can therefore better understand images in which the changed region accounts for a relatively small proportion of pixels, which helps to address the false alarm problem.

To further alleviate the false alarm problem, we designed an adaptive threshold contrastive loss function. When the adaptive threshold is generated, the texture characteristics of the changed and unchanged regions are taken into account, so that the threshold can better delineate the boundary of the changed region, sharpen its edges, and reduce the expanded region.

2. Related Work

After many years of study and accumulation in the field of remote sensing image change detection, a large amount of original multi-band remote sensing image data with high spatial resolution is now available. Statistical analysis methods based on traditional mathematical theory perform poorly when processing such large volumes of highly complex remote sensing image data and cannot provide rapid and accurate change detection results in many application scenarios.

Deep neural networks can effectively solve the problem of multi-spectral image resolution matching. Xu et al. [16] proposed using an autoencoder to learn the feature correspondence between multi-temporal high-resolution images, determining the threshold between changed and unchanged pixels with Otsu's thresholding method (OTSU) [17], discarding isolated points, and marking the parts above the threshold as changed regions. Zhang et al. [18] designed a sparse autoencoder framework based on spatial resolution changes, according to the characteristics of remote sensing images. The autoencoders are stacked to learn, in an unsupervised manner, a feature representation from the local neighborhood of a given pixel. Gong et al. [19] proposed a deep belief network that generates change detection maps directly from two Synthetic Aperture Radar (SAR) images acquired at different times. Two years later, Gong [20] proposed a new framework for high-resolution remote sensing image change detection, which combines superpixel-based change feature extraction with hierarchical difference representation learned by neural networks. Liu et al. [21] proposed a dual-channel convolutional neural network model for SAR image change detection, which simultaneously extracts deep features from two SAR images acquired at different times. Zhan et al. [22] proposed a change detection method based on deep twin convolutional networks, in which the two branches share weights so that feature information is extracted directly from image pairs. Liu et al. [23] proposed an unsupervised deep convolutional coupling network for two different types of remote sensing images, optical images and SAR images, focusing on the complementary feature points of the two.
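Otsu's method, used above to separate changed from unchanged pixels, picks the threshold that maximizes the between-class variance of a histogram. The sketch below is a generic version of that criterion applied to integer-valued difference magnitudes; the function name `otsu_threshold` and the toy values are assumptions for illustration and do not reproduce the pipeline of Xu et al. [16].

```python
def otsu_threshold(values, levels=256):
    """Return the level t that maximizes between-class variance.

    values: iterable of integers in [0, levels).
    Pixels with value > t are treated as the upper (changed) class.
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_low, sum_low = 0, 0
    for t in range(levels):
        weight_low += hist[t]
        sum_low += t * hist[t]
        if weight_low == 0:
            continue  # lower class still empty
        weight_high = total - weight_low
        if weight_high == 0:
            break  # upper class empty from here on
        mean_low = sum_low / weight_low
        mean_high = (sum_all - sum_low) / weight_high
        # Between-class variance, up to a constant factor of 1 / total^2.
        between = weight_low * weight_high * (mean_low - mean_high) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


# Hypothetical difference magnitudes: three small values, three large ones.
toy_differences = [10, 11, 12, 200, 201, 202]
toy_threshold = otsu_threshold(toy_differences)
toy_change_mask = [v > toy_threshold for v in toy_differences]
```

For the toy input, the threshold falls between the two clusters, so the three large differences are marked as changed.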

However, a deep neural network that only extracts change information cannot handle deeper multi-scale and multi-level change characteristics. To generate better representations for objects of various sizes, STANet uses a neural network to extract global information and adds a self-attention module to capture spatio-temporal dependencies at different scales. Ding et al. [24] proposed a new two-branch end-to-end network. It introduces cross-layer connections plus a skip connection module guided by a spatial attention mechanism to aggregate multi-layer context information, which improves network performance. To dig deeper into multi-scale and multi-level features and improve detection accuracy, Li et al. [25] designed a pyramid attention layer using a spatial attention mechanism. Wang et al. [26] proposed a high-resolution feature difference attention network for change detection. In this network, a multi-resolution parallel structure makes comprehensive use of image information at different resolutions to reduce the loss of spatial information, and a difference attention module improves the sensitivity to difference information so as to preserve the change information of buildings.

3. Approach

In this paper, a change detection method for multispectral remote sensing images based on twin neural networks is proposed. A pre-trained deep residual network (ResNet-18), with the global pooling layer and the fully connected layer removed, is used to extract features from the two-phase remote sensing images. To capture semantic information at different scales and improve the ability of the algorithm to perceive and express the objects in the input image, we designed an atrous spatial pyramid pooling (ASPP) and self-attention module that further enhances the extracted feature maps. To make the threshold more sensitive to the remote sensing images in the data set and thereby improve the robustness of the model, we use an adaptive threshold generation network and apply the global decision threshold, generated in real time from the feature maps, to the contrastive loss function. Finally, the threshold segmentation method of the metric module is used to determine the changed region.

3.1. Feature Extraction Module

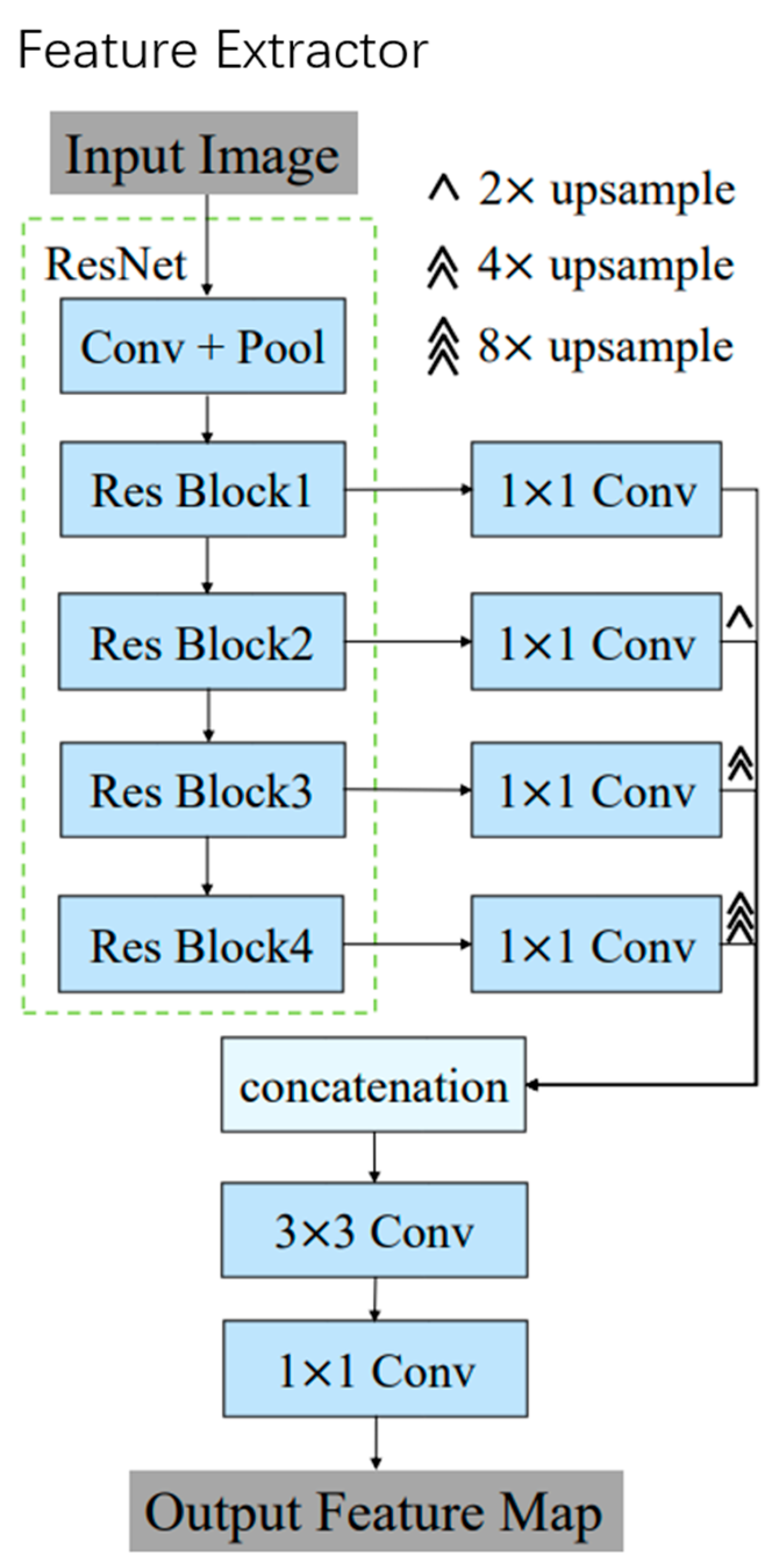

As shown in Figure 1, the feature extraction module applies a 1 × 1 convolution to the output feature map of each Block to obtain the corresponding feature map. The feature maps of the four blocks are then up-sampled to the size of the Block 1 feature map, and the four feature maps are concatenated. Finally, the feature map of the remote sensing image is obtained through a 3 × 3 convolution and a 1 × 1 convolution.
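To make the fusion step concrete, the following sketch tracks only the tensor shapes through the module. It assumes the standard ResNet-18 stage layout (output strides 4, 8, 16, 32 with 64, 128, 256, 512 channels), a 256 × 256 input patch, and a placeholder of 96 channels after each 1 × 1 convolution; none of these values are stated in the text, and the names `block_outputs` and `fuse` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureMap:
    channels: int
    height: int
    width: int


# (output stride, channels) of the four ResNet-18 blocks; assumed standard layout.
RESNET18_STAGES = [(4, 64), (8, 128), (16, 256), (32, 512)]


def block_outputs(height, width):
    """Shapes of the four block outputs for an input of the given size."""
    return [FeatureMap(channels, height // stride, width // stride)
            for stride, channels in RESNET18_STAGES]


def fuse(blocks, reduced_channels):
    """Shape after 1x1 reduction, up-sampling to Block 1 size, and concatenation."""
    target = blocks[0]
    # A 1x1 convolution changes only the channel count.
    reduced = [FeatureMap(reduced_channels, b.height, b.width) for b in blocks]
    # Up-sampling changes only the spatial size.
    upsampled = [FeatureMap(r.channels, target.height, target.width)
                 for r in reduced]
    # Concatenation adds the channel counts.
    return FeatureMap(sum(u.channels for u in upsampled),
                      target.height, target.width)


blocks = block_outputs(256, 256)
fused = fuse(blocks, reduced_channels=96)  # 96 is a placeholder value
```

Under these assumptions, the concatenated map keeps the Block 1 resolution (64 × 64) and has four times the reduced channel count; the final 3 × 3 and 1 × 1 convolutions would then compress the channels again.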

3.2. Attention Module Network Structure

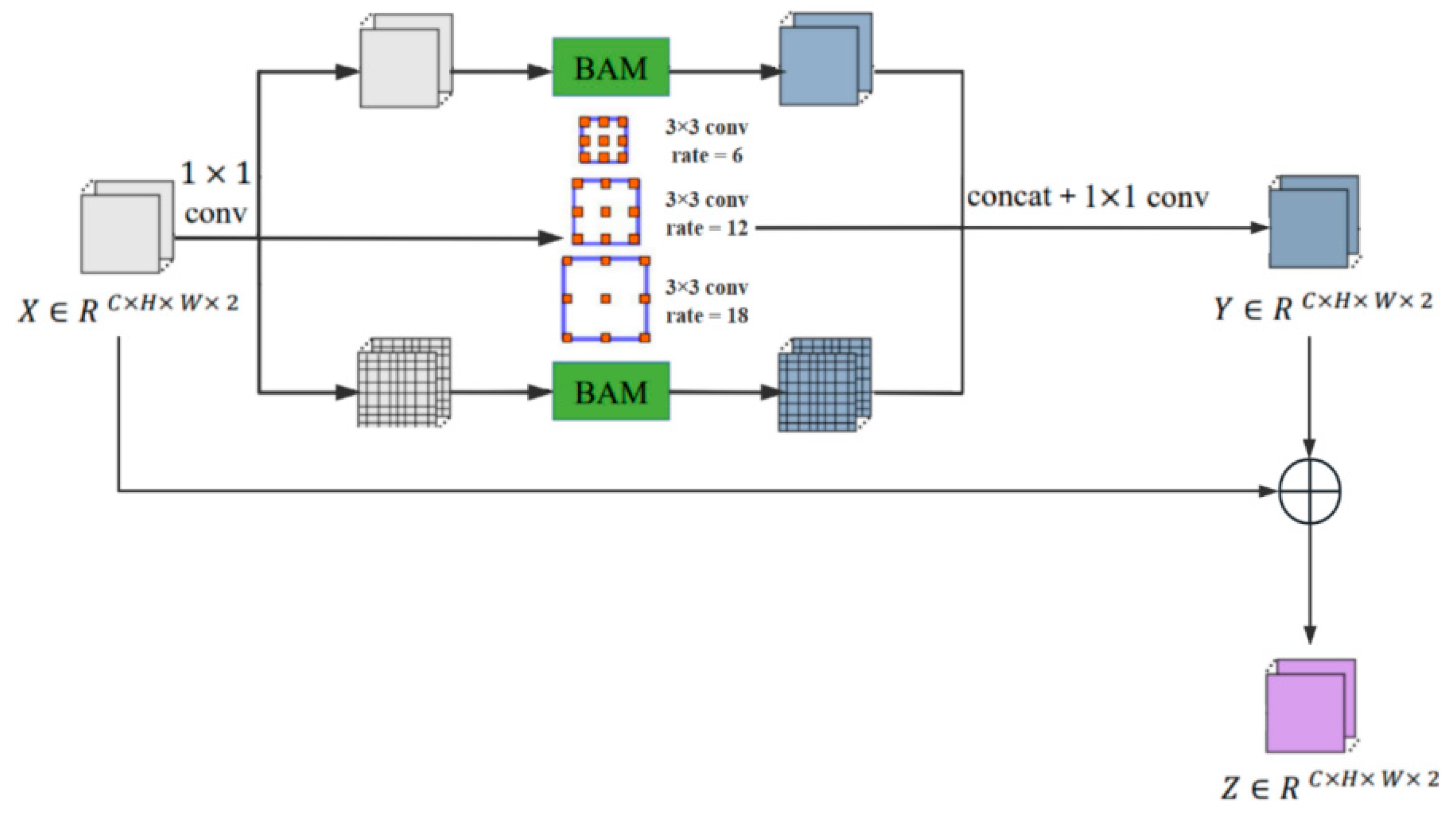

The attention module is designed to reduce the attention the model pays to the cross-correlation between neighboring pixels, so that the model can better understand images in which the changed region accounts for a relatively small proportion of pixels. On the premise of fully using all pixel information of the input features, the receptive field is enlarged and cross-region pixel correlation is captured through ASPP. The self-attention part includes a global self-attention branch and a branch with the smallest region scale, which focus as much as possible on the fine feature information of large-scale and small-scale objects, respectively. The ASPP part combines three atrous convolution branches. Together, these branches make maximum use of the input feature information, retain the refined feature information of large-scale and small-scale objects, and reduce the cross-correlation of pixels in adjacent areas. By using self-attention at only two scales, the ASPP+BAM pyramid attention module reduces the feature expression of medium-scale objects and the cross-correlation of adjacent pixels. Combined with the cross-region pixel correlation provided by ASPP, the output feature maps of these five branches are stacked, fused across channels, and connected to the input features through a residual connection. The module thus gains the ability to recognize multi-scale, fine-grained ground object information in remote sensing images while weakening the cross-correlation between adjacent pixels. This effectively alleviates the false alarm problem, in which recall is much higher than precision, and improves the overall performance of change detection. The network structure of the ASPP+BAM pyramid attention module is shown in Figure 2.
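The way an atrous (dilated) convolution enlarges the receptive field without adding weights can be illustrated in one dimension. The sketch below is a generic example; the dilation rates used by the ASPP branches are not given in the text, so the rates shown are hypothetical, as are the function names.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a kernel whose taps are spaced `dilation` apart."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, kernel, dilation):
    """Valid 1-D atrous cross-correlation (no padding, stride 1)."""
    span = effective_kernel_size(len(kernel), dilation)
    outputs = []
    for start in range(len(signal) - span + 1):
        # Each tap reads the input `dilation` positions after the previous one.
        outputs.append(sum(weight * signal[start + tap * dilation]
                           for tap, weight in enumerate(kernel)))
    return outputs


# Hypothetical dilation rates for three 3-tap branches.
hypothetical_rates = [6, 12, 18]
spans = [effective_kernel_size(3, rate) for rate in hypothetical_rates]

# A 3-tap kernel with dilation 2 reads positions s, s + 2 and s + 4.
toy_output = dilated_conv1d(list(range(10)), [1, 1, 1], dilation=2)
```

A 3-tap kernel with dilation 6 already spans 13 positions, which is how a few atrous branches can gather cross-region information while skipping the immediately adjacent pixels.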

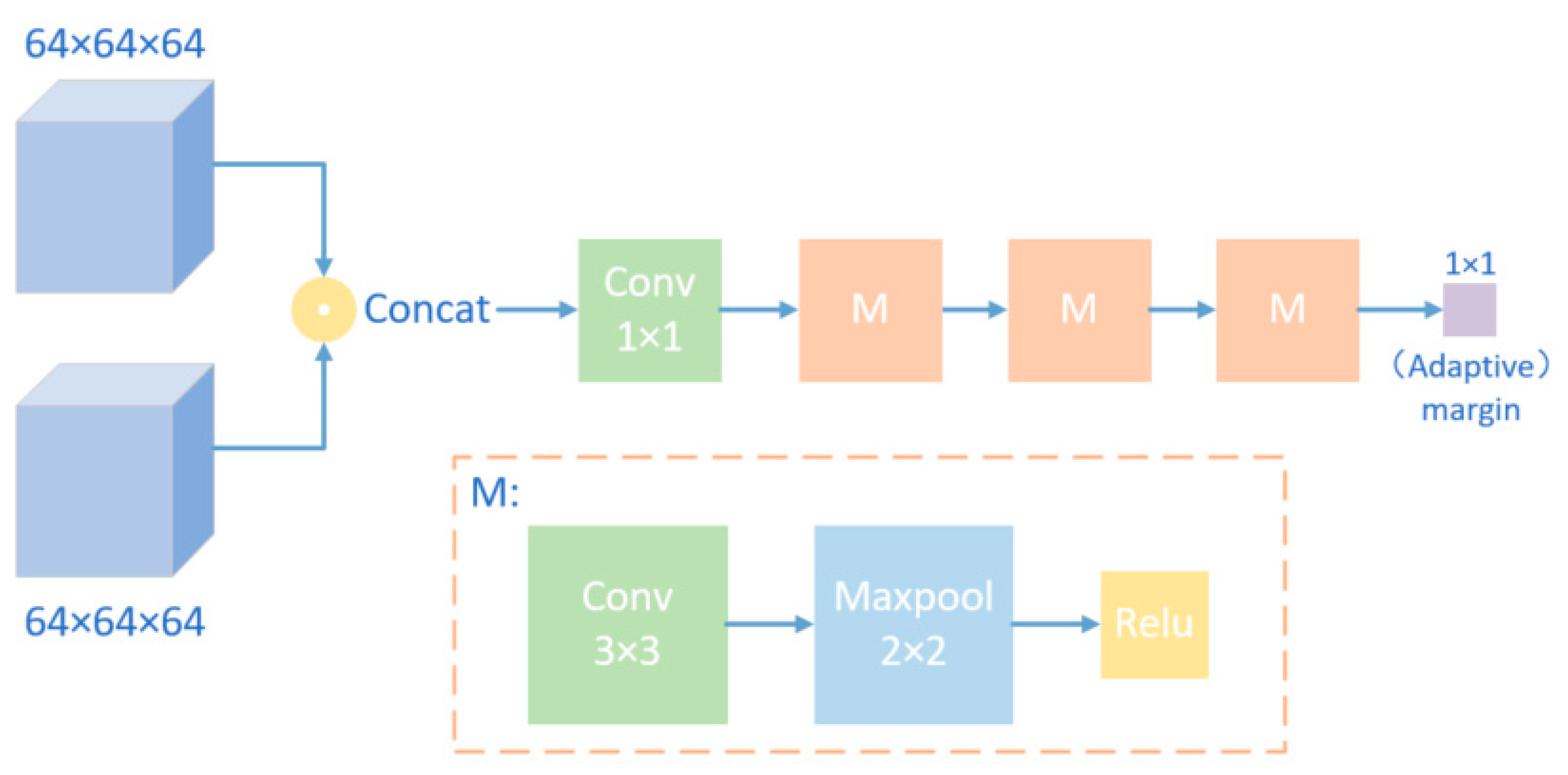

To address the serious false alarms that appear when the predicted change detection map is compared with the ground truth, we propose the adaptive threshold contrastive loss function. The adaptive threshold is obtained through the adaptive threshold generation network, as shown in Figure 3. This avoids the problem that the fixed threshold in the baseline algorithm does not delineate the changed region finely enough. The adaptive threshold is applied to the loss function, giving the optimized loss function shown in Formula (1). The adaptive threshold is updated together with the loss during training, which places a higher requirement on the distance map, enlarges the distance between changed pixel pairs, and thus alleviates the false alarm problem.

where b, i, and j index the b-th image pair in the batch and the pixel at row i and column j. The two counts in the formula are the numbers of unchanged and changed pixels, respectively, and can be calculated by the following formula.
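One way to read the description above is as a batch-balanced contrastive loss in the style of STANet [15], with the fixed margin replaced by the adaptive threshold. The sketch below makes that assumption explicit: unchanged pixels are penalized by their distance, changed pixels are penalized when their distance falls below the threshold, and each term is normalized by its own pixel count. The function name and argument layout are illustrative, and this is not necessarily the exact form of Formula (1).

```python
def adaptive_contrastive_loss(distance, label, threshold):
    """Batch-balanced contrastive loss with a given threshold (assumed form).

    distance[b][i][j]: non-negative feature distance of the pixel pair.
    label[b][i][j]: 1 for a changed pixel, 0 for an unchanged pixel.
    threshold: the (adaptive) margin that changed pairs should exceed.
    Returns (loss, n_unchanged, n_changed).
    """
    n_unchanged = n_changed = 0
    unchanged_term = changed_term = 0.0
    for dist_img, label_img in zip(distance, label):
        for dist_row, label_row in zip(dist_img, label_img):
            for d, m in zip(dist_row, label_row):
                if m == 1:
                    n_changed += 1
                    # Changed pairs are pushed beyond the threshold.
                    changed_term += max(0.0, threshold - d)
                else:
                    n_unchanged += 1
                    # Unchanged pairs are pulled towards zero distance.
                    unchanged_term += d
    loss = 0.0
    if n_unchanged:
        loss += 0.5 * unchanged_term / n_unchanged
    if n_changed:
        loss += 0.5 * changed_term / n_changed
    return loss, n_unchanged, n_changed


# One hypothetical 2 x 2 distance map and its labels.
toy_distance = [[[0.2, 1.5], [0.0, 3.0]]]
toy_label = [[[0, 1], [0, 1]]]
toy_loss, toy_n_unchanged, toy_n_changed = adaptive_contrastive_loss(
    toy_distance, toy_label, threshold=2.0)
stricter_loss, _, _ = adaptive_contrastive_loss(
    toy_distance, toy_label, threshold=3.0)
```

Raising the threshold increases the penalty on changed pairs whose distance is still small, which matches the statement that the threshold places a higher requirement on the distance map.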

The adaptive threshold generation network is a convolutional neural network that takes the two feature maps output by the ASPP+BAM pyramid attention module as input and outputs a threshold value. First, the feature maps obtained from the two-phase remote sensing images after feature extraction and pyramid attention processing are stacked. Channel fusion is then carried out through a convolutional layer, followed by three convolution-pooling-activation modules; each module contains a convolutional layer with a kernel size and stride of 2, a max pooling layer, and a ReLU activation function. Max pooling is used because we want the adaptive threshold derived from the feature maps to better reflect the texture features of the changed and unchanged regions, so that the generated threshold can better delineate the boundary of the changed region and thus alleviate the false alarm problem. The ReLU activation function is used because the distances in the distance map are non-negative, so the threshold should also be non-negative.
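The spatial reduction performed by the three modules can be traced with the usual output-size formula for convolution and pooling. The sketch below assumes a 64 × 64 input feature map and 2 × 2 max pooling with stride 2; neither value is stated in the text, and the function names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Output length of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def threshold_net_sizes(size, modules=3, pool_kernel=2, pool_stride=2):
    """Spatial size after each convolution-pooling-activation module."""
    sizes = [size]
    for _ in range(modules):
        # Convolution with kernel size 2 and stride 2, as described in the text.
        size = conv_output_size(size, kernel=2, stride=2)
        # Max pooling; the 2 x 2 window with stride 2 is an assumption.
        size = conv_output_size(size, kernel=pool_kernel, stride=pool_stride)
        # ReLU does not change the spatial size.
        sizes.append(size)
    return sizes


trace = threshold_net_sizes(64)
```

Under these assumptions each module shrinks the map by a factor of four per axis, so a 64 × 64 map collapses to a single value after three modules, which is consistent with the network producing one global threshold.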

4. Data Sets

In the change detection experiments, we used a publicly available change detection data set, the Onera Satellite Change Detection data set (OSCD) [27]. The OSCD data set was built using images from the Sentinel-2 satellites. The satellites capture images at resolutions between 10 m and 60 m in 13 bands between ultraviolet and short-wavelength infrared. Twenty-four regions of approximately 600 × 600 pixels at 10 m resolution, with various levels of urbanization and visible urban changes, were chosen worldwide. The images of all bands were cropped according to the chosen geographical coordinates, resulting in 26 images for each region, i.e., 13 bands for each of the two images in the image pair.

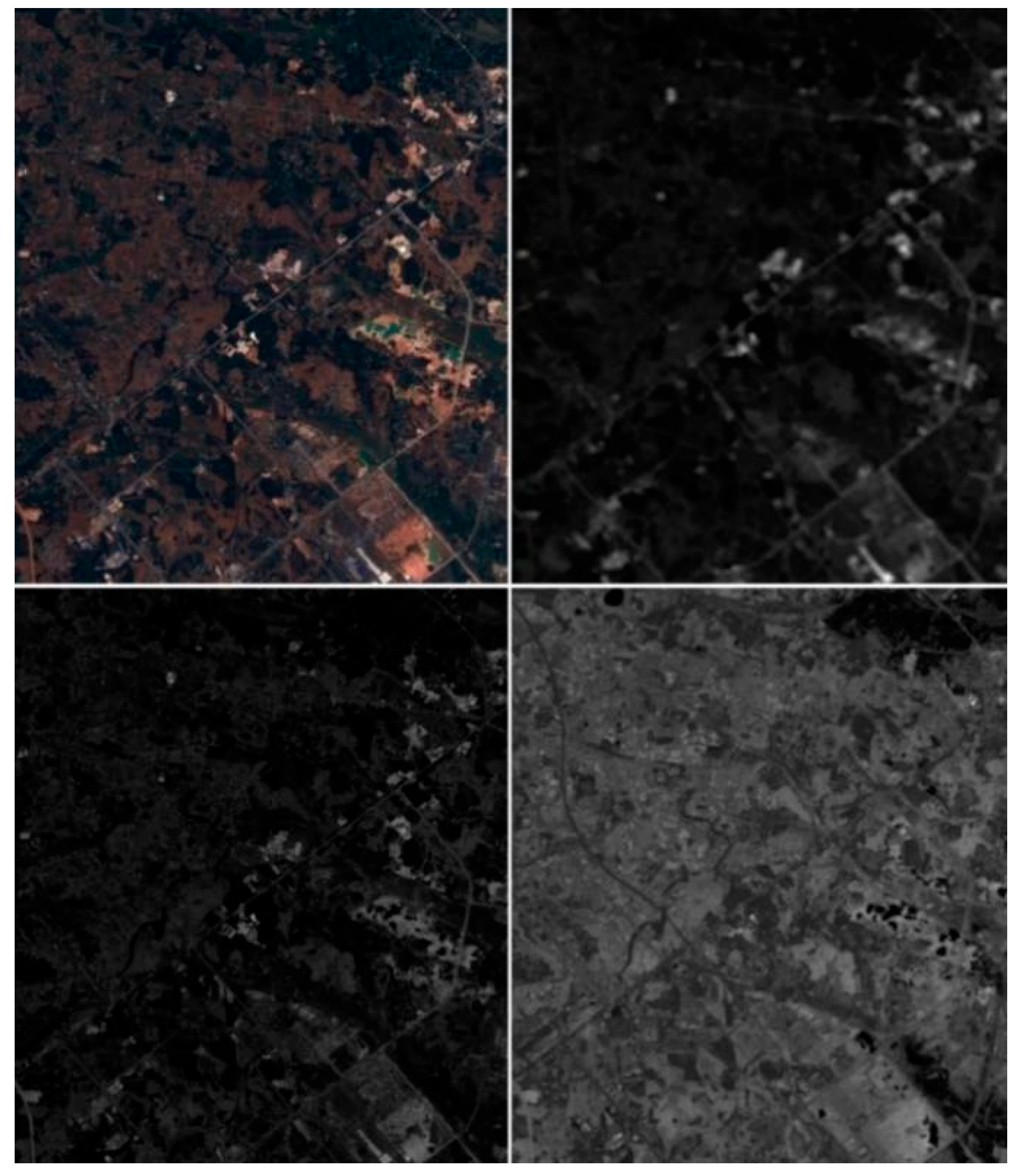

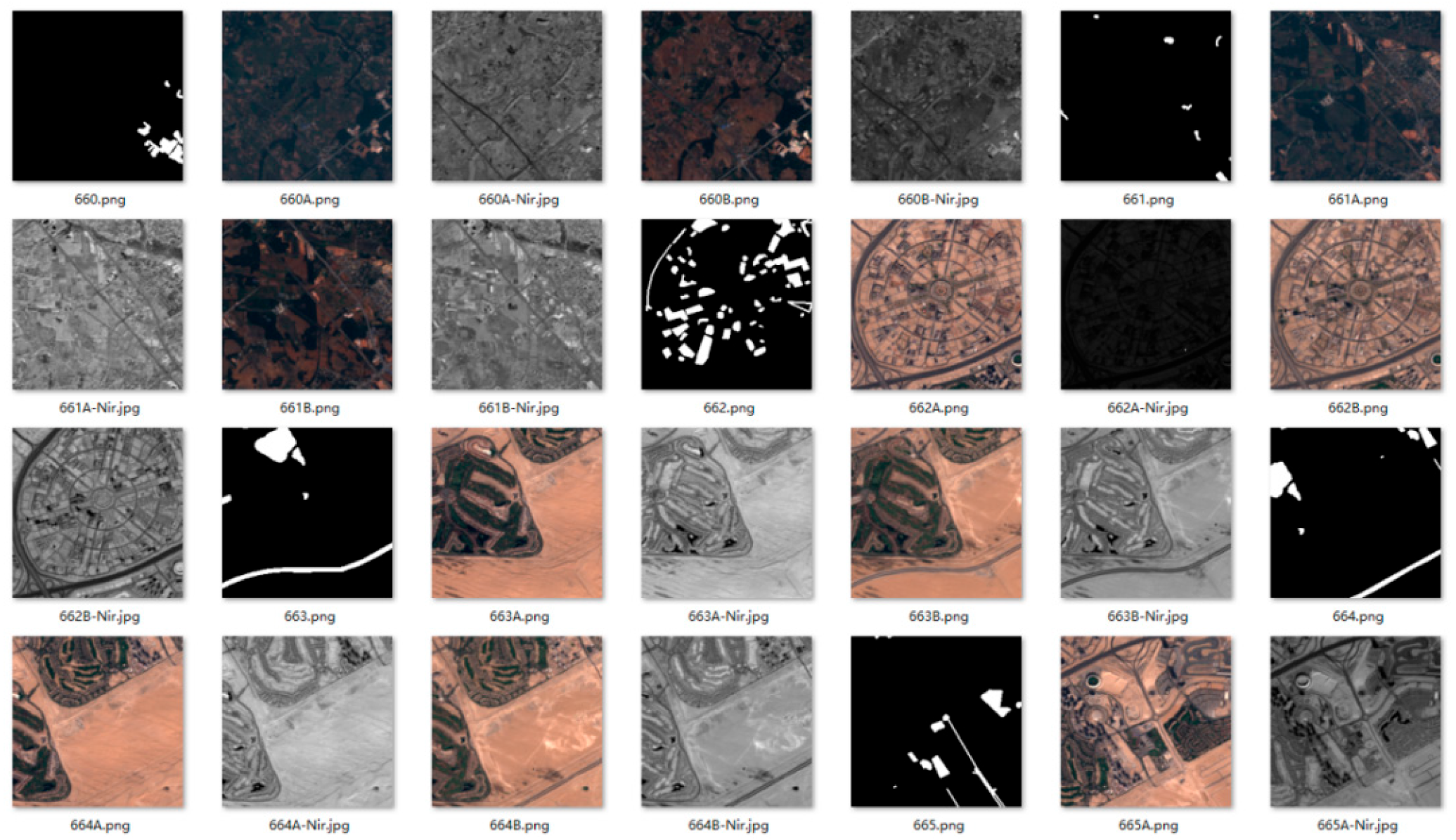

Figure 4 shows the visible light image of a certain area and the corresponding single-band OSCD remote sensing images for bands B1, B4, and B11.

The remote sensing images of this data set are mainly taken in urban areas, and the main labeled objects are urban roads and buildings, reflecting the evolution of the city, while natural changes (such as plant growth or tidal changes) are ignored. Since the OSCD data set provides pixel-level ground truth change labels for the changed regions, it supports a variety of complex supervised learning algorithms for learning, understanding, and solving many problems in the field of change detection. In the experiment, four bands of the remote sensing images were used: B02, the blue band centered at 490 nm with a ground resolution of 10 m; B03, the green band centered at 560 nm with a ground resolution of 10 m; B04, the red band centered at 665 nm with a ground resolution of 10 m; and B08, the band centered at 865 nm.

During model training, the data set is divided into a training set and a test set at a ratio of 7:3. Since the amount of data in the original data set is not enough to support training, the data set must be expanded through image augmentation during pre-processing.

First, for the multi-spectral remote sensing images taken at each geographical location, 256 × 256 patches are cropped from the images, and image augmentation is then carried out. The augmentation scheme includes three kinds of operations: rotation, flipping, and noise addition. In this paper, the image is rotated clockwise about its center by three angles, 90°, 180° and 270°, because other rotation angles would change the image size and require numerical padding. After augmentation of the training set, a total of 1680 patches of 256 × 256 pixels are obtained for network training, and the test set has 1200 patches of 256 × 256 pixels for testing the trained model.
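The rotation and flip operations can be sketched on a single-band patch stored as a nested list. This is a minimal illustration with hypothetical function names; noise addition is omitted, and in practice the same geometric transform would have to be applied to both images of a pair and to the change label.

```python
def rotate90_clockwise(patch):
    """Rotate a 2-D patch (list of rows) by 90 degrees clockwise."""
    return [list(column) for column in zip(*patch[::-1])]


def flip_horizontal(patch):
    """Mirror a 2-D patch left to right."""
    return [row[::-1] for row in patch]


def augment(patch):
    """Return the original patch, its three rotations, and a horizontal flip."""
    r90 = rotate90_clockwise(patch)
    r180 = rotate90_clockwise(r90)
    r270 = rotate90_clockwise(r180)
    return [patch, r90, r180, r270, flip_horizontal(patch)]


# A tiny 2 x 2 patch stands in for a 256 x 256 one.
toy_patch = [[1, 2],
             [3, 4]]
toy_variants = augment(toy_patch)
```

Rotations by multiples of 90° map a square patch onto itself, so the patch size is preserved and no padding values are needed, which is the reason given for restricting the angles.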

During data pre-processing, the cropped negative samples, i.e., category (a) two-phase patch pairs that contain no changed region, must be downsampled by removing some of them. At the same time, the ratio of the number of patches in (a), the set of two-phase patch pairs containing no changed region, to the combined number of patches in (b), the set containing a small amount of changed region, and (c), the set containing a large amount of changed region, must not exceed 3. In this way, the ratio of changed pixels to the total number of pixels in the remote sensing images can be kept above 1.5%, which is close to the normal proportion of changed regions in the OSCD training set. Meanwhile, the distribution of positive and negative samples becomes more uniform during training, and the probability of overfitting is reduced.
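The balancing rule can be sketched as follows, reading the constraint as "the number of patches without change is at most three times the number of patches with change". That reading, the random selection of which negatives to keep, and the function names are all assumptions made for illustration.

```python
import random


def downsample_negatives(negatives, positives, max_ratio=3, seed=0):
    """Keep at most max_ratio * len(positives) patches without change."""
    limit = max_ratio * len(positives)
    if len(negatives) <= limit:
        return list(negatives)
    # Random selection is an assumption; the text does not say how
    # the removed negative samples are chosen.
    rng = random.Random(seed)
    return rng.sample(list(negatives), limit)


def changed_pixel_ratio(label_patches):
    """Fraction of changed pixels (label 1) over all label patches."""
    changed = sum(sum(sum(row) for row in patch) for patch in label_patches)
    total = sum(len(patch) * len(patch[0]) for patch in label_patches)
    return changed / total


# Hypothetical patch identifiers: 100 without change, 10 with change.
toy_negatives = list(range(100))
toy_positives = list(range(100, 110))
kept_negatives = downsample_negatives(toy_negatives, toy_positives)

# Two tiny hypothetical label patches with one changed pixel in total.
toy_ratio = changed_pixel_ratio([[[0, 0], [0, 1]], [[0, 0], [0, 0]]])
```

After downsampling, `changed_pixel_ratio` can be evaluated on the retained label patches to check the result against the 1.5% target mentioned in the text.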

Figure 5 shows the patch data set for remote sensing image change detection generated by the above operations.