Generative Adversarial Network Models for Augmenting Digit and Character Datasets Embedded in Standard Markings on Ship Bodies

Abstract

:1. Introduction

2. Related Work

3. Dataset and Data Augmentation Methods

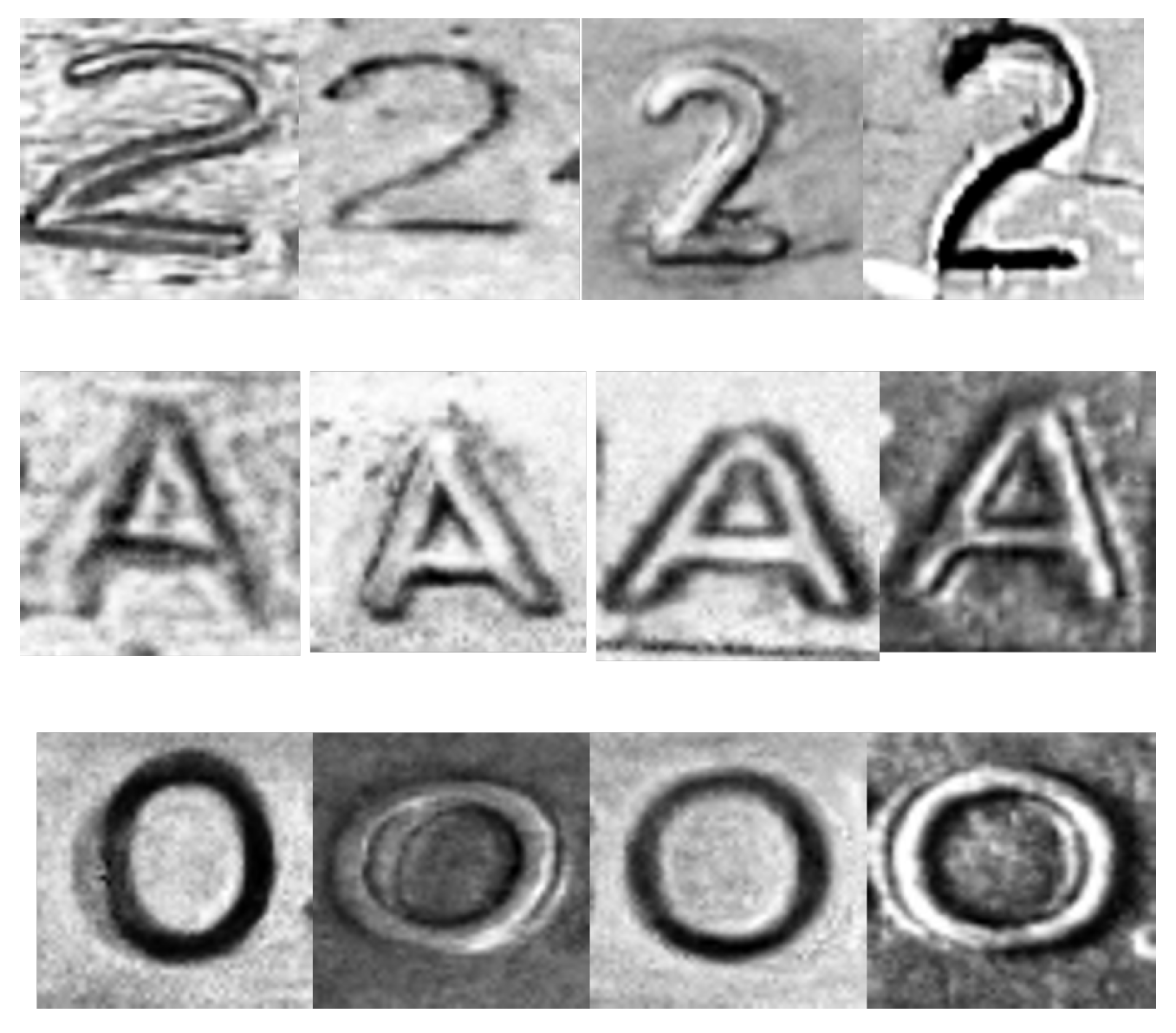

3.1. Datasets

3.2. State-of-the-Art GANs

- GAN [22]: GAN is a fundamental model in which a generator and discriminator are trained in an adversarial manner. The generator aims to produce synthetic samples, while the discriminator distinguishes between real and fake samples. GANs have demonstrated their ability to generate realistic data across various domains.

- Auxiliary Classifier GAN (AC-GAN) [23]: AC-GAN extends the conditional GAN framework by having the discriminator predict the class label of an image instead of receiving it as input. This approach stabilizes training, allows the generation of large, high-quality images, and promotes a latent space representation independent of the class label.

- Boundary-Seeking GAN (BGAN) [24]: BGAN focuses on learning the manifold boundary of the real data distribution by minimizing the classification error of the discriminator near the decision boundary. This encourages the generator to generate samples that lie on the data manifold, resulting in higher-quality and more realistic generated samples.

- Boundary Equilibrium GAN (BEGAN) [25]: BEGAN optimizes a lower bound of the Wasserstein distance using an autoencoder as the discriminator. It maintains equilibrium between generator and discriminator using an additional hyperparameter.

- Deep Convolutional GAN (DCGAN) [26]: DCGAN utilizes CNNs as the generator and discriminator. It introduces architectural constraints to ensure the stable training of CNN-based GANs and demonstrates competitive performance in image classification tasks.

- Wasserstein Generative Adversarial Network (WGAN) [15]: WGAN utilizes the Wasserstein distance to measure the discrepancy between real and generated data distributions. It introduces a critic network and focuses on optimizing the Wasserstein distance for stable training.

- WGAN with GP (WGAN-GP) [27]: WGAN-GP proposes a GP to enforce the Lipschitz constraint in the discriminator, replacing the weight clipping used in WGAN. This penalty improves stability, prevents issues such as mode collapse, and eliminates the need for batch normalization.

- Wasserstein divergence (WGANDIV) [28]: WGANDIV approximates Wasserstein divergence; exhibits stability in training, including progressive growing training; and has demonstrated superior quantitative and qualitative results.

- Deep Regret Analytic GAN (DRAGAN) [29]: DRAGAN applies a GP similar to WGAN-GP but with a focus on real data manifold. Even though DRAGAN is similar to WGAN-GP, it exhibits slightly less stability compared with WGAN-GP.

- Energy-based GAN (EBGAN) [30]: EBGAN models the discriminator as an energy function that assigns low energies to regions near the data manifold. It focuses on capturing regions close to the data distribution.

- FisherGAN [31]: FisherGAN introduces GAN loss based on the Fisher information matrix, maximizing Fisher information to encourage diverse and high-quality sample generation. It improves mode coverage and sample quality, enhancing the performance of GANs in generating realistic and varied data.

- InfoGAN [32]: InfoGAN extends the GAN framework by introducing an additional latent variable that captures the interpretable factors of variation in the generated data. By maximizing the mutual information between this latent variable and the generated samples, InfoGAN enables explicit control over specific attributes of the generated data. It promotes disentangled representations and targeted generation.

- Least-squares GAN (LSGAN) [33]: LSGAN addresses the vanishing gradient problem using the least-squares (L2) loss function instead of cross-entropy. It stabilizes the training process and produces visuals that closely resemble real data.

- MMGAN and Non-Saturating GAN (NSGAN) [34]: NSGAN simultaneously trains the generator (G) and discriminator (D) models. The objective is to maximize the probability of D making a mistake. NSGAN differs from MMGAN in its generator loss. Furthermore, the output of G can be interpreted as a probability.

- RELATIVISTIC GAN (REL-GAN) [35]: It introduces a relativistic discriminator that compares real and generated samples in a balanced manner by considering their relative ordering. This approach reduces bias toward either real or fake samples, resulting in improved training stability and generation quality.

- SGAN [36]: SGAN maintains statistical independence between multiple adversarial pairs, addresses limitations in representational capability, and exhibits improved stability and performance compared with standard methods. SGAN is suitable for various applications and produces a single generator. Future extensions can explore diversity between pairs and consider multiplayer game theory.

3.3. Evaluation Metrics

4. Results and Evaluation

5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Western Central Atlantic Fishery Commission. The Marking and Identification of Fishing Vessels; Food and Agriculture Organization of the United Nations: Rome, Italy, 2017. [Google Scholar]

- Joseph, A.; Dalaklis, D. The international convention for the safety of life at sea: Highlighting interrelations of measures towards effective risk mitigation. J. Int. Marit. Saf. Environ. Aff. Shipp. 2021, 5, 1–11. [Google Scholar] [CrossRef]

- IMO. International Convention for the Safety of Life at Sea: Consolidated Text of the 1974 SOLAS Convention, the 1978 SOLAS Protocol, the 1981 and 1983 SOLAS Amendments; IMO: London, UK, 1986. [Google Scholar]

- Wawrzyniak, N.; Hyla, T.; Bodus-Olkowska, I. Vessel identification based on automatic hull inscriptions recognition. PLoS ONE 2022, 17, e0270575. [Google Scholar] [CrossRef]

- Wei, K.; Li, T.; Huang, F.; Chen, J.; He, Z. Cancer classification with data augmentation based on generative adversarial networks. Front. Comput. Sci. 2022, 16, 1–11. [Google Scholar] [CrossRef]

- Kiyoiti dos Santos Tanaka, F.H.; Aranha, C. Data Augmentation Using GANs. arXiv 2019, arXiv:1904.09135. [Google Scholar]

- Wickramaratne, S.D.; Mahmud, M.S. Conditional-GAN based data augmentation for deep learning task classifier improvement using fNIRS data. Front. Big Data 2021, 4, 659146. [Google Scholar] [CrossRef] [PubMed]

- Moon, S.; Lee, J.; Lee, J.; Oh, A.R.; Nam, D.; Yoo, W. A Study on the Improvement of Fine-grained Ship Classification through Data Augmentation Using Generative Adversarial Networks. In Proceedings of the 2021 International Conference on Information and Communication Technology Convergence (ICTC), Jeju-do, Republic of Korea, 20–22 October 2021; pp. 1230–1232. [Google Scholar] [CrossRef]

- Shin, H.C.; Lee, K.I.; Lee, C.E. Data Augmentation Method of Object Detection for Deep Learning in Maritime Image. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Republic of Korea, 19–22 February 2020; pp. 463–466. [Google Scholar] [CrossRef]

- Suo, Z.; Zhao, Y.; Chen, S.; Hu, Y. BoxPaste: An Effective Data Augmentation Method for SAR Ship Detection. Remote Sens. 2022, 14, 5761. [Google Scholar] [CrossRef]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9759–9768. [Google Scholar]

- Kang, M.; Leng, X.; Lin, Z.; Ji, K. A modified faster R-CNN based on CFAR algorithm for SAR ship detection. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 19–21 May 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A Densely Connected End-to-End Neural Network for Multiscale and Multiscene SAR Ship Detection. IEEE Access 2018, 6, 20881–20892. [Google Scholar] [CrossRef]

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 214–223. [Google Scholar]

- You, A.; Kim, J.K.; Ryu, I.H.; Yoo, T.K. Application of generative adversarial networks (GAN) for ophthalmology image domains: A survey. Eye Vis. 2022, 9, 1–19. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Escorcia-Gutierrez, J.; Gamarra, M.; Beleño, K.; Soto, C.; Mansour, R.F. Intelligent deep learning-enabled autonomous small ship detection and classification model. Comput. Electr. Eng. 2022, 100, 107871. [Google Scholar] [CrossRef]

- Xu, Y.; Wang, X.; Wang, K.; Shi, J.; Sun, W. Underwater sonar image classification using generative adversarial network and convolutional neural network. IET Image Process. 2020, 14, 2819–2825. [Google Scholar] [CrossRef]

- Starynska, A.; Easton, R.L., Jr.; Messinger, D. Methods of data augmentation for palimpsest character recognition with deep neural network. In Proceedings of the 4th International Workshop on Historical Document Imaging and Processing, Kyoto, Japan, 10–11 November 2017; pp. 54–58. [Google Scholar]

- Wang, J.; Yu, L.; Zhang, W.; Gong, Y.; Xu, Y.; Wang, B.; Zhang, P.; Zhang, D. Irgan: A minimax game for unifying generative and discriminative information retrieval models. In Proceedings of the 40th International ACM SIGIR conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 515–524. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Odena, A.; Olah, C.; Shlens, J. Conditional image synthesis with auxiliary classifier gans. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 2642–2651. [Google Scholar]

- Devon Hjelm, R.; Jacob, A.P.; Che, T.; Trischler, A.; Cho, K.; Bengio, Y. Boundary-Seeking Generative Adversarial Networks. arXiv 2017, arXiv:1702.08431. [Google Scholar]

- Berthelot, D.; Schumm, T.; Metz, L. Began: Boundary equilibrium generative adversarial networks. arXiv 2017, arXiv:1703.10717. [Google Scholar]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A. Improved Training of Wasserstein GANs. arXiv 2017, arXiv:1704.00028. [Google Scholar]

- Wu, J.; Huang, Z.; Thoma, J.; Acharya, D.; Van Gool, L. Wasserstein divergence for gans. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 653–668. [Google Scholar]

- Kodali, N.; Abernethy, J.; Hays, J.; Kira, Z. On convergence and stability of gans. arXiv 2017, arXiv:1705.07215. [Google Scholar]

- Zhao, J.; Mathieu, M.; LeCun, Y. Energy-based generative adversarial network. arXiv 2016, arXiv:1609.03126. [Google Scholar]

- Mroueh, Y.; Sercu, T. Fisher GAN. arXiv 2017, arXiv:1705.09675. [Google Scholar]

- Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. arXiv 2016, arXiv:1606.03657. [Google Scholar]

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Paul Smolley, S. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802. [Google Scholar]

- Goodfellow, I. Nips 2016 tutorial: Generative adversarial networks. arXiv 2016, arXiv:1701.00160. [Google Scholar]

- Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. arXiv 2018, arXiv:1807.00734. [Google Scholar]

- Chavdarova, T.; Fleuret, F. Sgan: An alternative training of generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9407–9415. [Google Scholar]

- Borji, A. Pros and Cons of GAN Evaluation Measures. CoRR 2018, 179, 41–65. [Google Scholar] [CrossRef]

- Denton, E.L.; Chintala, S.; Fergus, R. Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks. arXiv 2015, arXiv:1506.05751. [Google Scholar]

- Shmelkov, K.; Schmid, C.; Alahari, K. How good is my GAN? In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 213–229. [Google Scholar]

- Gerhard, H.E.; Wichmann, F.A.; Bethge, M. How sensitive is the human visual system to the local statistics of natural images? PLoS Comput. Biol. 2013, 9, e1002873. [Google Scholar] [CrossRef]

- Zhu, X.; Vondrick, C.; Fowlkes, C.C.; Ramanan, D. Do we need more training data? Int. J. Comput. Vis. 2016, 119, 76–92. [Google Scholar] [CrossRef]

- Sajjadi, M.S.; Bachem, O.; Lucic, M.; Bousquet, O.; Gelly, S. Assessing generative models via precision and recall. arXiv 2018, arXiv:1806.00035. [Google Scholar]

- Barratt, S.; Sharma, R. A note on the inception score. arXiv 2018, arXiv:1801.01973. [Google Scholar]

- Lucic, M.; Kurach, K.; Michalski, M.; Gelly, S.; Bousquet, O. Are GANs created equal? A large-scale study. arXiv 2018, arXiv:1711.10337. [Google Scholar]

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. arXiv 2017, arXiv:1706.08500. [Google Scholar]

| GAN | A | C | D | E | I | L | M | N | O | P | R | S | T |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ACGAN | 412.8 | 482.6 | 469.5 | 496.6 | 414.3 | 442.6 | 453.5 | 455.1 | 419.7 | 481.0 | 407.9 | 570.5 | 470.2 |

| BGAN | 299.8 | 298.2 | 242.0 | 229.1 | 311.1 | 296.9 | 272.1 | 294.7 | 312.5 | 340.5 | 268.3 | 287.6 | 297.3 |

| BEGAN | 402.6 | 434.3 | 569.3 | 398.9 | 365.9 | 315.4 | 453.2 | 438.6 | 328.1 | 304.8 | 249.2 | 465.0 | 320.9 |

| DRAGAN | 375.5 | 472.6 | 416.4 | 442.0 | 512.7 | 450.8 | 485.5 | 469.1 | 430.9 | 437.1 | 472.9 | 467.9 | 462.7 |

| EBGAN | 581.3 | 434.9 | 470.3 | 400.4 | 457.3 | 384.8 | 434.6 | 408.9 | 383.9 | 401.7 | 381.0 | 514.4 | 436.1 |

| F-GAN | 525.7 | 499.1 | 441.7 | 497.1 | 462.5 | 530.0 | 553.3 | 498.6 | 447.2 | 491.7 | 502.6 | 551.3 | 526.2 |

| GAN | 242.6 | 316.3 | 258.4 | 218.0 | 316.8 | 273.8 | 280.0 | 260.9 | 300.5 | 232.9 | 245.8 | 295.7 | 229.4 |

| INFOGAN | 479.1 | 481.7 | 443.7 | 493.0 | 529.9 | 515.2 | 522.9 | 484.5 | 438.9 | 449.9 | 492.8 | 505.5 | 527.6 |

| LSGAN | 393.5 | 409.8 | 415.1 | 411.6 | 393.8 | 361.1 | 377.9 | 400.8 | 419.6 | 356.8 | 384.4 | 472.1 | 463.4 |

| MMGAN | 455.9 | 486.2 | 419.2 | 408.4 | 538.5 | 495.5 | 526.4 | 464.5 | 466.2 | 410.1 | 486.2 | 488.6 | 512.5 |

| NSGAN | 449.1 | 478.7 | 416.0 | 408.9 | 519.7 | 453.6 | 486.6 | 459.4 | 432.4 | 436.9 | 463.1 | 485.8 | 478.1 |

| REL-GAN | 300.1 | 359.1 | 383.4 | 393.7 | 368.4 | 385.2 | 376.5 | 432.8 | 434.5 | 391.9 | 373.8 | 468.1 | 421.3 |

| SGAN | 350.4 | 407.9 | 424.3 | 419.5 | 365.6 | 372.8 | 411.3 | 381.8 | 349.6 | 429.4 | 406.2 | 493.2 | 389.0 |

| WGAN | 380.3 | 313.5 | 349.5 | 258.5 | 293.2 | 288.5 | 369.9 | 332.6 | 324.9 | 249.7 | 368.6 | 337.1 | 391.5 |

| WGAN-GP | 261.7 | 271.8 | 188.6 | 197.8 | 268.6 | 236.5 | 247.8 | 294.2 | 230.0 | 211.2 | 258.8 | 307.8 | 290.3 |

| WGANDIV | 231.6 | 224.8 | 210.2 | 215.8 | 279.1 | 241.0 | 252.1 | 245.5 | 283.3 | 213.4 | 255.0 | 290.4 | 285.4 |

| GAN | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| ACGAN | 352.2 | 442.7 | 350.9 | 339.7 | 381.0 | 419.9 | 400.543 | 383.9 | 320.8 | 387.5 |

| BGAN | 250.4 | 278.6 | 282.0 | 269.1 | 314.9 | 220.4 | 286.6 | 293.3 | 292.9 | 291.5 |

| BEGAN | 312.9 | 379.8 | 423.1 | 367.5 | 365.9 | 368.1 | 404.4 | 463.9 | 318.1 | 346.7 |

| DRAGAN | 320.5 | 355.6 | 394.0 | 377.4 | 375.83 | 357.3 | 359.8 | 357.4 | 383.4 | 368.3 |

| EBGAN | 321.8 | 370.4 | 390.0 | 377.4 | 373.4 | 351.3 | 443.6 | 411.7 | 398.0 | 373.9 |

| FISHERGAN | 417.3 | 502.9 | 487.3 | 477.9 | 519.3 | 395.5 | 421.6 | 408.1 | 465.4 | 522.7 |

| GAN | 273.9 | 251.1 | 262.2 | 262.1 | 281.3 | 266.8 | 253.0 | 284.1 | 286.1 | 263.2 |

| INFOGAN | 301.1 | 363.6 | 393.2 | 412.8 | 405.5 | 406.5 | 349.7 | 390.9 | 412.8 | 386.4 |

| LSGAN | 327.6 | 372.2 | 291.4 | 339.0 | 356.4 | 339.4 | 355.3 | 349.4 | 366.8 | 395.7 |

| MMGAN | 438.4 | 339.8 | 351.8 | 349.0 | 366.3 | 390.6 | 322.3 | 344.1 | 368.5 | 412.3 |

| NSGAN | 307.5 | 401.4 | 400.2 | 418.8 | 390.7 | 419.8 | 330.4 | 373.6 | 388.6 | 402.7 |

| REL-GAN | 333.1 | 356.1 | 286.6 | 340.3 | 321.5 | 335.1 | 411.5 | 384.3 | 394.4 | 414.6 |

| SGAN | 322.6 | 389.7 | 342.3 | 362.1 | 374.5 | 374.3 | 389.7 | 356.5 | 370.7 | 391.5 |

| WGAN | 247.8 | 305.2 | 318.4 | 353.0 | 342.0 | 339.9 | 383.0 | 383.0 | 395.4 | 330.6 |

| WGAN-GP | 224.0 | 305.0 | 236.9 | 229.3 | 246.9 | 240.3 | 231.2 | 256.0 | 262.3 | 289.5 |

| WGANDIV | 235.6 | 289.5 | 239.9 | 223.2 | 358.9 | 220.7 | 256.3 | 270.6 | 239.5 | 299.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdulraheem, A.; Suleiman, J.T.; Jung, I.Y. Generative Adversarial Network Models for Augmenting Digit and Character Datasets Embedded in Standard Markings on Ship Bodies. Electronics 2023, 12, 3668. https://doi.org/10.3390/electronics12173668

Abdulraheem A, Suleiman JT, Jung IY. Generative Adversarial Network Models for Augmenting Digit and Character Datasets Embedded in Standard Markings on Ship Bodies. Electronics. 2023; 12(17):3668. https://doi.org/10.3390/electronics12173668

Chicago/Turabian StyleAbdulraheem, Abdulkabir, Jamiu T. Suleiman, and Im Y. Jung. 2023. "Generative Adversarial Network Models for Augmenting Digit and Character Datasets Embedded in Standard Markings on Ship Bodies" Electronics 12, no. 17: 3668. https://doi.org/10.3390/electronics12173668