Ellipse Detection with Applications of Convolutional Neural Network in Industrial Images

Abstract

1. Introduction

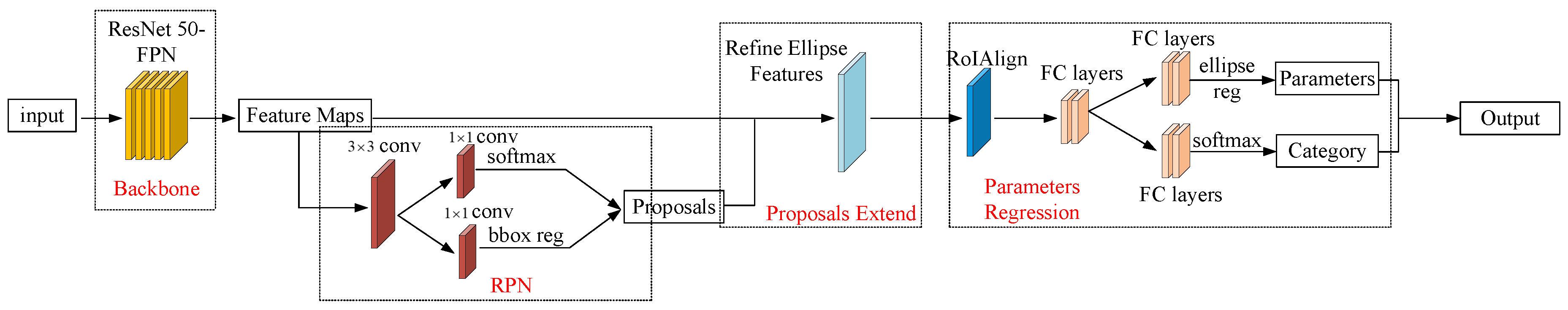

- We propose a CNN-based method for elliptical object detection and apply it to specialized components, such as the hinge pins used in the metallurgical industry. With the proposed method, the elliptical shape of the hinge pins can be detected accurately in images.

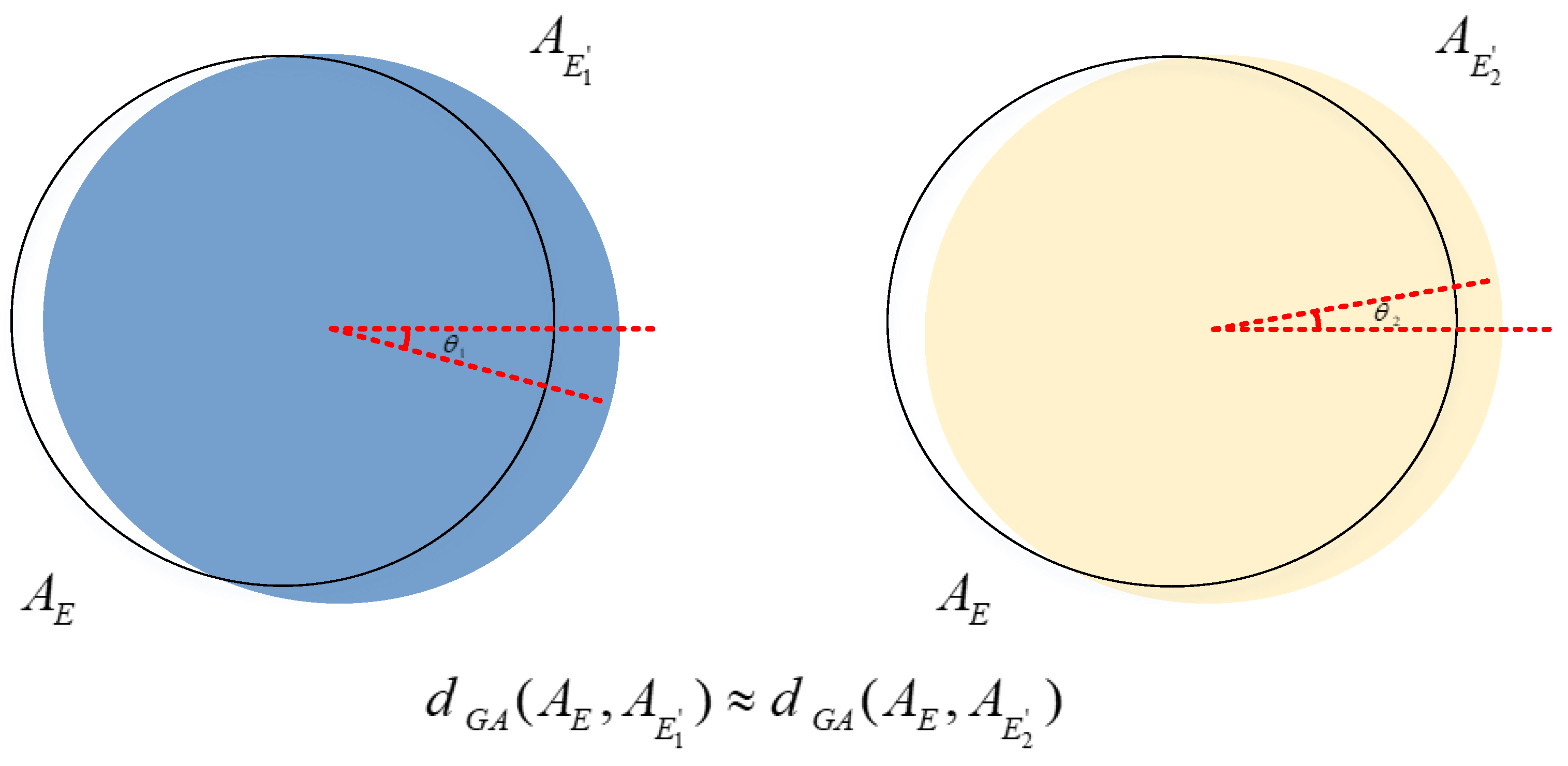

- We employ an extended proposal operation to address the loss of the rotation-angle direction of the ellipse. In addition, a Gaussian angle distance function and a smooth loss function are combined to form the loss function for the ellipse parameter regression task.

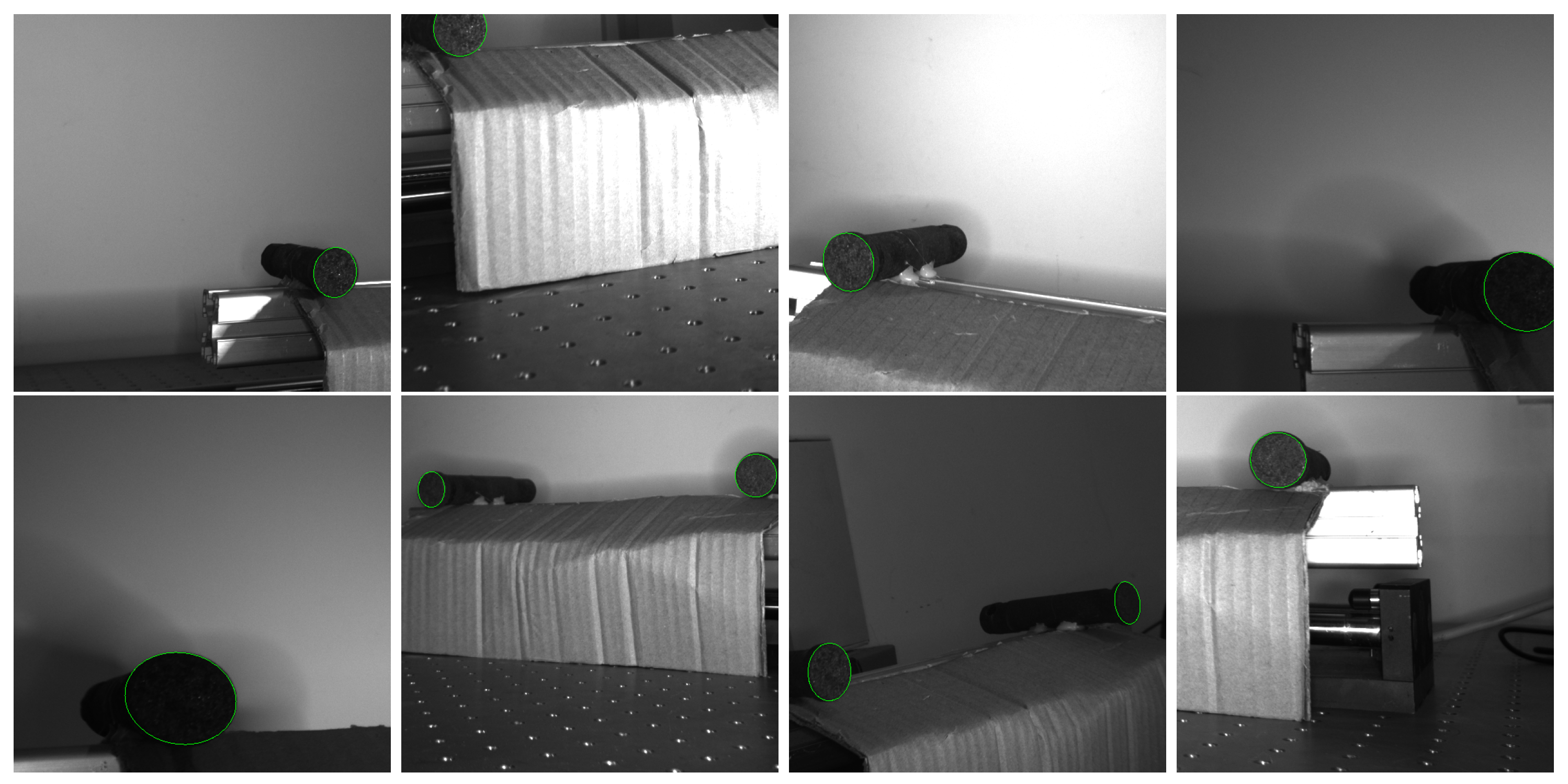

- We create a small labeled dataset of hinge pins and use it for the experiments in this study. We validate the accuracy and robustness of our method by comparing it with traditional methods and other CNN-based approaches.
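The angle issue in the second contribution can be illustrated with a small Python sketch. It is not the authors' implementation: it assumes a hypothetical parameterization (cx, cy, a, b, θ) with center (cx, cy), semi-axes a and b, and rotation θ in radians. Under this parameterization the same ellipse is described by θ and θ + π, and also by swapping a and b while adding π/2, so a naive regression error on θ can penalize two identical ellipses. The helper names `canonicalize`, `smooth_l1`, and `ellipse_loss`, the weight `angle_weight`, and the use of 1 − cos(2Δθ) as the angle term are all illustrative assumptions; the paper's Gaussian angle distance is not reproduced here.

```python
import math


def canonicalize(cx, cy, a, b, theta):
    """Return a unique description of an ellipse with a >= b and theta in [0, pi).

    Hypothetical parameterization: center (cx, cy), semi-axes a and b, and
    rotation theta (radians) of the a-axis. For a circle (a == b) the angle
    carries no information and is simply reduced modulo pi.
    """
    if a < b:
        # Swapping the semi-axes describes the same ellipse rotated by a quarter turn.
        a, b = b, a
        theta += math.pi / 2
    # A half turn maps an ellipse onto itself, so theta is only defined modulo pi.
    return cx, cy, a, b, theta % math.pi


def smooth_l1(x, beta=1.0):
    """Smooth L1 penalty: quadratic for |x| < beta, linear beyond."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def ellipse_loss(pred, target, angle_weight=1.0):
    """Hypothetical combined loss: smooth L1 on (cx, cy, a, b) plus a pi-periodic angle term."""
    p = canonicalize(*pred)
    t = canonicalize(*target)
    geometry = sum(smooth_l1(pv - tv) for pv, tv in zip(p[:4], t[:4]))
    # 1 - cos(2 * dtheta) is 0 for equivalent orientations and peaks at 2.0 for 90 degrees,
    # so theta and theta + pi are never treated as different.
    angle = 1.0 - math.cos(2.0 * (p[4] - t[4]))
    return geometry + angle_weight * angle


# The same ellipse written three ways.
e1 = (50.0, 40.0, 20.0, 10.0, 0.3)
e2 = (50.0, 40.0, 20.0, 10.0, 0.3 + math.pi)       # rotated by a half turn
e3 = (50.0, 40.0, 10.0, 20.0, 0.3 - math.pi / 2)   # semi-axes swapped
print(abs(e1[4] - e2[4]))                           # naive angle error, about pi
print(ellipse_loss(e1, e2), ellipse_loss(e1, e3))   # both close to 0
```

Because the angle term is π-periodic, it does not need θ to be canonicalized; canonicalization matters mainly for the axis lengths, which would otherwise be compared crosswise when a and b are swapped.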

2. Review of Related Work

2.1. CNN-Based Object Detection Methods

2.2. Loss Functions

3. Proposed Method

3.1. Ellipse Regression

3.2. Improved Loss Function

- Minimality: $d(E_1, E_2) = 0$ when $E_1 = E_2$; that is, the distance between two identical ellipses is zero.

- Symmetry: $d(E_1, E_2) = d(E_2, E_1)$; swapping $E_1$ and $E_2$ does not change the distance between them.

- Triangle Inequality: $d(E_1, E_3) \le d(E_1, E_2) + d(E_2, E_3)$; for any third ellipse $E_3$, the distances between the three ellipses satisfy the triangle inequality.

- Similarity Invariance: $d(T(E_1), T(E_2)) = d(E_1, E_2)$, where $T$ is a similarity transformation. If both ellipses undergo the same similarity transformation in the image (rotation, translation, or scaling), their distance should remain unchanged.
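These properties can be checked numerically for a candidate distance. The Python sketch below is an illustration under stated assumptions, not the paper's Gaussian angle distance, whose formula is not reproduced in this section. It models an ellipse (cx, cy, a, b, θ) as a 2D Gaussian with mean at the center and covariance R(θ) diag(a², b²) R(θ)ᵀ (the a² versus a²/4 scaling is a convention choice) and uses the closed-form 2-Wasserstein distance between Gaussians. All function names are hypothetical. This distance satisfies minimality, symmetry, and the triangle inequality and is invariant to rotation and translation, but it grows linearly under uniform scaling, so full similarity invariance would require some normalization.

```python
import numpy as np


def ellipse_to_gaussian(cx, cy, a, b, theta):
    """Assumed mapping: mean = center, covariance = R(theta) diag(a^2, b^2) R(theta)^T."""
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    cov = rot @ np.diag([a * a, b * b]) @ rot.T
    return np.array([cx, cy], dtype=float), cov


def spd_sqrt(m):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(m)
    return v @ np.diag(np.sqrt(np.clip(w, 0.0, None))) @ v.T


def w2_distance(e1, e2):
    """2-Wasserstein distance between the Gaussians representing two ellipses."""
    m1, s1 = ellipse_to_gaussian(*e1)
    m2, s2 = ellipse_to_gaussian(*e2)
    r2 = spd_sqrt(s2)
    cross = spd_sqrt(r2 @ s1 @ r2)
    d2 = np.sum((m1 - m2) ** 2) + np.trace(s1 + s2 - 2.0 * cross)
    # Clip tiny negative values caused by floating-point round-off.
    return float(np.sqrt(max(d2, 0.0)))


def similarity(e, angle, scale, tx, ty):
    """Rotate an ellipse about the origin, scale it uniformly, then translate it."""
    cx, cy, a, b, theta = e
    c, s = np.cos(angle), np.sin(angle)
    ncx = scale * (c * cx - s * cy) + tx
    ncy = scale * (s * cx + c * cy) + ty
    return ncx, ncy, scale * a, scale * b, theta + angle
```

For example, with `e1 = (0.0, 0.0, 20.0, 10.0, 0.0)` and `e2 = (5.0, 3.0, 18.0, 12.0, 0.4)`, applying the same `similarity(..., 0.7, 1.0, 3.0, -2.0)` to both leaves `w2_distance` unchanged, whereas a scale factor of 2 doubles it.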

4. Experimental Results

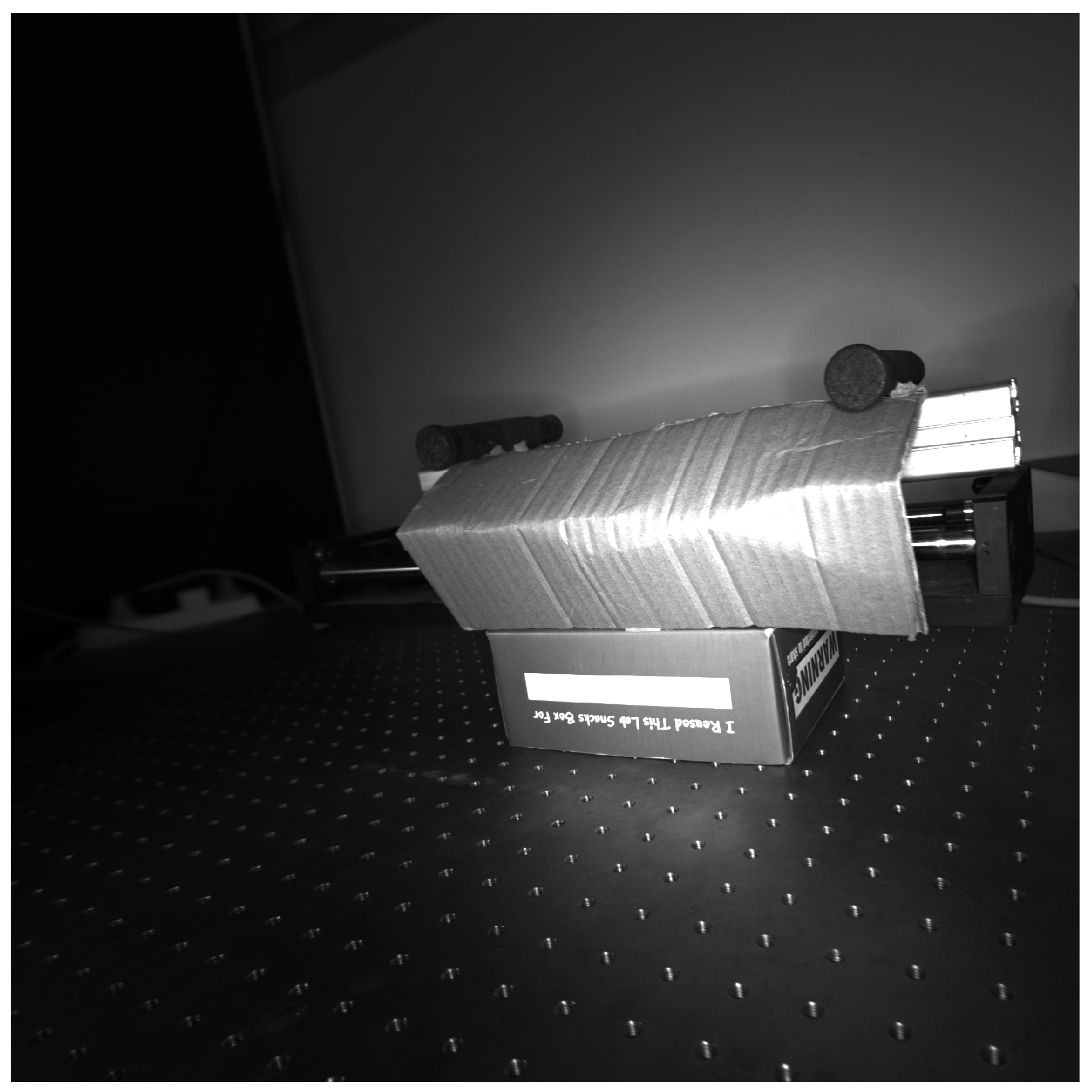

4.1. Hinge Pins Dataset

4.2. Experimental Setup

4.3. Performance

4.3.1. Module Validation and Comparative Experiments

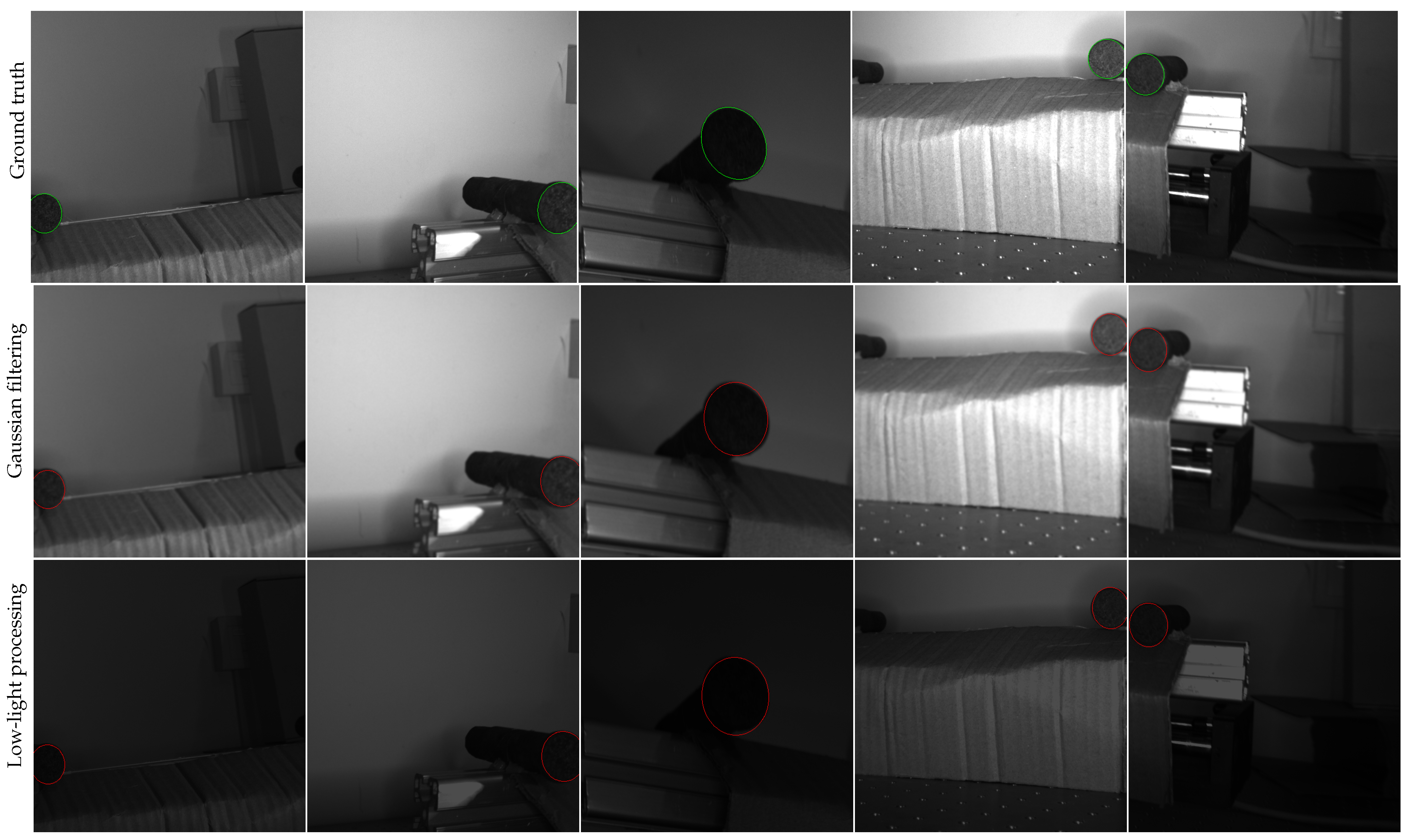

4.3.2. Visualization Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| E | L | MeanIOU | AP | AP | AP | AP | AP | AP | F-1 | Reliability |
|---|---|---|---|---|---|---|---|---|---|---|
| - | - | 88.12 | 77.49 | 90.38 | 36.24 | 27.10 | 29.72 | 29.45 | 51.04 | 50.76 |
| ✓ | - | 88.71 | 80.28 | 90.18 | 49.25 | 33.19 | 39.33 | 36.21 | 60.24 | 60.12 |
| - | ✓ | 88.76 | 80.39 | 90.37 | 49.82 | 34.40 | 40.36 | 37.55 | 59.80 | 58.31 |
| ✓ | ✓ | 89.54 | 80.93 | 90.51 | 51.76 | 36.79 | 46.45 | 37.19 | 64.59 | 64.05 |
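Because the subscripts of the six AP columns were lost, only MeanIOU, F-1, and Reliability have unambiguous headers. The Python sketch below uses only numbers copied from the ablation table above and computes each configuration's gain over the first row, which matches the Mask R-CNN baseline in the comparison table. The names `ABLATION` and `gains_over_baseline` are illustrative, and reading E and L as the two proposed modules (extended proposal and improved loss) is an assumption.

```python
# Ablation rows keyed by (E enabled, L enabled); values are (MeanIOU, F-1, Reliability).
ABLATION = {
    (False, False): (88.12, 51.04, 50.76),
    (True, False): (88.71, 60.24, 60.12),
    (False, True): (88.76, 59.80, 58.31),
    (True, True): (89.54, 64.59, 64.05),
}
METRICS = ("MeanIOU", "F-1", "Reliability")


def gains_over_baseline(table):
    """Return each non-baseline configuration's gain (in points) over the (False, False) row."""
    base = table[(False, False)]
    return {
        cfg: {m: round(v - b, 2) for m, v, b in zip(METRICS, row, base)}
        for cfg, row in table.items()
        if cfg != (False, False)
    }


for cfg, gain in gains_over_baseline(ABLATION).items():
    print(cfg, gain)
```

With both modules enabled, F-1 rises by 13.55 points and Reliability by 13.29 points over the baseline. The combined gain is smaller than the sum of the single-module gains (9.20 + 8.76 = 17.96 for F-1), so the two contributions are not simply additive.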

| Methods | MeanIOU | AP | AP | AP | AP | AP | AP | F-1 | Reliability |
|---|---|---|---|---|---|---|---|---|---|
| Mask R-CNN (baseline) | 88.12 | 77.49 | 90.38 | 36.24 | 27.10 | 29.72 | 29.45 | 51.04 | 50.76 |
| Mask R-CNN (Gau) | 88.34 | 76.44 | 81.43 | 49.28 | 32.17 | 39.32 | 33.73 | 51.38 | 48.08 |
| Mask R-CNN (KLD) | 85.45 | 73.25 | 80.64 | 45.33 | 29.54 | 37.73 | 30.28 | 50.95 | 46.29 |
| Ellipse R-CNN | 86.99 | 80.39 | 89.38 | 45.50 | 33.59 | 45.50 | 34.61 | 53.70 | 51.92 |
| Our method | 89.54 | 80.93 | 90.51 | 51.76 | 36.79 | 46.45 | 37.19 | 64.59 | 64.05 |
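To quantify the lead in the comparison table, the Python sketch below finds, for each metric with an unambiguous header, the strongest competing method and the proposed method's margin over it (higher is better for all three). The numbers are copied from the table above; `RESULTS` and `margin_over_best_competitor` are illustrative names.

```python
# Values are (MeanIOU, F-1, Reliability), copied from the comparison table.
RESULTS = {
    "Mask R-CNN (baseline)": (88.12, 51.04, 50.76),
    "Mask R-CNN (Gau)": (88.34, 51.38, 48.08),
    "Mask R-CNN (KLD)": (85.45, 50.95, 46.29),
    "Ellipse R-CNN": (86.99, 53.70, 51.92),
    "Our method": (89.54, 64.59, 64.05),
}
METRICS = ("MeanIOU", "F-1", "Reliability")


def margin_over_best_competitor(results, ours="Our method"):
    """For each metric, return (best competing method, margin of `ours` over it in points)."""
    margins = {}
    for i, metric in enumerate(METRICS):
        rivals = {name: row[i] for name, row in results.items() if name != ours}
        best = max(rivals, key=rivals.get)
        margins[metric] = (best, round(results[ours][i] - rivals[best], 2))
    return margins


print(margin_over_best_competitor(RESULTS))
```

The proposed method leads on all three metrics, with the smallest margin on MeanIOU (1.20 points over Mask R-CNN (Gau)) and the largest on Reliability (12.13 points over Ellipse R-CNN).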

| Methods | Position Error | Radii Error | Angle Error (°) |
|---|---|---|---|
| Mask R-CNN (baseline) | 3.02 | 2.37 | 26.18 |
| Mask R-CNN (Gau) | 3.05 | 2.30 | 26.27 |
| Ellipse R-CNN | 2.30 | 2.26 | 26.21 |
| Our method | 2.40 | 2.14 | 24.69 |
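The error table can be read column by column. The Python sketch below, using only the values above, reports the method with the smallest value in each column, assuming that lower is better for every error; the units of position and radii error are not stated in the table. `ERRORS` and `best_per_column` are illustrative names.

```python
# Values are (Position Error, Radii Error, Angle Error in degrees), copied from the table.
ERRORS = {
    "Mask R-CNN (baseline)": (3.02, 2.37, 26.18),
    "Mask R-CNN (Gau)": (3.05, 2.30, 26.27),
    "Ellipse R-CNN": (2.30, 2.26, 26.21),
    "Our method": (2.40, 2.14, 24.69),
}
COLUMNS = ("Position Error", "Radii Error", "Angle Error (deg)")


def best_per_column(table):
    """Return, for each error column, the method with the smallest (best) value."""
    return {
        col: min(table, key=lambda name: table[name][i])
        for i, col in enumerate(COLUMNS)
    }


print(best_per_column(ERRORS))
```

Ellipse R-CNN has the lowest position error (2.30 versus 2.40 for the proposed method), while the proposed method has the lowest radii and angle errors.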

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, K.; Lu, Y.; Bai, R.; Xu, K.; Peng, T.; Tai, Y.; Zhang, Z. Ellipse Detection with Applications of Convolutional Neural Network in Industrial Images. Electronics 2023, 12, 3431. https://doi.org/10.3390/electronics12163431
