Depth Image Denoising Algorithm Based on Fractional Calculus

Abstract

1. Introduction

2. Fractional Calculus Operator Denoising Theory and Method

2.1. Effect of Fractional Integration on Signal and Model Construction
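Section 2.1 concerns how fractional integration acts on a signal. As an illustration only, the sketch below uses the Grünwald-Letnikov (G-L) discretization with unit step. In that form a negative order v (the V values in the result table are all negative) gives a fractional integral of order -v. The function names `gl_weights` and `fractional_integral_1d`, the window length `n`, the edge handling, and the normalization of the weights to sum 1 are assumptions for this example, not details taken from the paper.

```python
import numpy as np

# Sketch under stated assumptions; not the authors' implementation.


def gl_weights(v: float, n: int) -> np.ndarray:
    """First n Grünwald-Letnikov weights w_k = (-1)^k * binom(v, k).

    Uses the recursion w_0 = 1, w_k = w_{k-1} * (k - 1 - v) / k.
    For v < 0 the weights describe a fractional integral of order -v.
    """
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        w[k] = w[k - 1] * (k - 1 - v) / k
    return w


def fractional_integral_1d(signal: np.ndarray, v: float, n: int) -> np.ndarray:
    """Causal G-L fractional integral with unit step and n taps.

    Assumptions for this sketch: the weights are normalized to sum to 1, so a
    constant signal passes through unchanged, and the left edge is handled
    by repeating the first sample.
    """
    w = gl_weights(v, n)
    w = w / w.sum()
    x = np.asarray(signal, dtype=float)
    padded = np.concatenate([np.full(n - 1, x[0]), x])
    lags = np.arange(n)
    out = np.empty_like(x)
    for i in range(len(x)):
        # out[i] = sum_k w[k] * x[i - k]; sample x[j] sits at padded[j + n - 1]
        out[i] = np.dot(w, padded[i + n - 1 - lags])
    return out


print(gl_weights(-0.3, 4))  # first four weights for v = -0.3
```

For v = -0.3 the first four weights are 1, 0.3, 0.195 and 0.1495: all positive and decaying, so the operator is a weighted average of past samples, that is, a low-pass smoother. For 0 < v < 1 every weight after w_0 is negative, which is why fractional derivatives sharpen edges and texture rather than smoothing them.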

2.2. Construction Based on Fractional Integral Operator

2.3. Fractional Integral Denoising Operator and Convolution Template for Constructing Depth Images
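Section 2.3 builds a two-dimensional convolution template from the fractional integral operator. One construction common in fractional-calculus image processing places the G-L weights along eight directions around the center pixel. The sketch below follows that pattern under stated assumptions: the center weight is 8·w_0, the pixel k steps away along each direction gets w_k, the template is normalized to unit sum, and borders use edge replication. The paper's own template may be sized or weighted differently, and `fractional_integral_mask` and `filter2d` are hypothetical names.

```python
import numpy as np

# Sketch of an eight-direction fractional integral template; an assumption,
# not necessarily the template used in the paper.


def gl_weights(v: float, n: int) -> np.ndarray:
    """Grünwald-Letnikov weights w_0..w_{n-1} for order v (v < 0: integral)."""
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        w[k] = w[k - 1] * (k - 1 - v) / k
    return w


def fractional_integral_mask(v: float, n: int = 3) -> np.ndarray:
    """(2n-1) x (2n-1) eight-direction fractional integral template.

    Center = 8 * w_0 (one w_0 per direction); the pixel k steps from the
    center along each of the 8 directions gets w_k; other cells stay 0.
    The template is normalized to sum to 1.
    """
    w = gl_weights(v, n)
    c = n - 1
    mask = np.zeros((2 * n - 1, 2 * n - 1))
    mask[c, c] = 8.0 * w[0]
    directions = [(-1, -1), (-1, 0), (-1, 1), (0, -1),
                  (0, 1), (1, -1), (1, 0), (1, 1)]
    for dy, dx in directions:
        for k in range(1, n):
            mask[c + k * dy, c + k * dx] = w[k]
    return mask / mask.sum()


def filter2d(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Apply a point-symmetric mask with edge replication.

    Because the mask is symmetric under a 180-degree rotation, this
    correlation is identical to convolution.
    """
    r = mask.shape[0] // 2
    padded = np.pad(np.asarray(image, dtype=float), r, mode="edge")
    rows, cols = image.shape
    out = np.zeros((rows, cols))
    for dy in range(mask.shape[0]):
        for dx in range(mask.shape[1]):
            out += mask[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return out


print(np.round(fractional_integral_mask(-0.3, n=2), 4))
```

With n = 3 and v = -0.3 the unnormalized template sums to 8 + 8(0.3) + 8(0.195) = 11.96, so the center keeps a weight of 8/11.96 ≈ 0.669 and the remainder is spread over the 16 directional neighbors.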

2.4. Fractional Integral Operator Denoising Algorithm Flow for Depth Images
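Section 2.4 describes the algorithm flow. The result table reports a best order V for each dataset, so one plausible flow for simulated data, where a clean reference exists, is to filter with several candidate orders and keep the result with the highest PSNR. The sketch below assumes exactly that flow, a 5 × 5 template (n = 3), and a PSNR peak equal to the reference maximum. All function names are hypothetical and it is not claimed to reproduce the authors' procedure.

```python
import numpy as np

# Sketch of a "sweep v, keep best PSNR" flow; an assumption, not the paper's code.


def gl_weights(v, n):
    """Grünwald-Letnikov weights w_0..w_{n-1} (v < 0: fractional integral)."""
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        w[k] = w[k - 1] * (k - 1 - v) / k
    return w


def fractional_integral_mask(v, n=3):
    """Eight-direction template: center 8*w_0, w_k at distance k, sum 1."""
    w = gl_weights(v, n)
    c = n - 1
    mask = np.zeros((2 * n - 1, 2 * n - 1))
    mask[c, c] = 8.0 * w[0]
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            for k in range(1, n):
                mask[c + k * dy, c + k * dx] = w[k]
    return mask / mask.sum()


def filter2d(image, mask):
    """Apply a point-symmetric mask with edge replication."""
    r = mask.shape[0] // 2
    padded = np.pad(np.asarray(image, dtype=float), r, mode="edge")
    rows, cols = image.shape
    out = np.zeros((rows, cols))
    for dy in range(mask.shape[0]):
        for dx in range(mask.shape[1]):
            out += mask[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return out


def psnr(reference, test, peak):
    """PSNR in dB; inf when the images are identical."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)


def denoise_with_best_order(noisy, reference, orders, n=3, peak=None):
    """Filter with every candidate order v; return (best_v, best_psnr, image)."""
    if peak is None:
        peak = float(np.max(reference))  # assumption: peak = reference maximum
    best = None
    for v in orders:
        result = filter2d(noisy, fractional_integral_mask(v, n))
        score = psnr(reference, result, peak)
        if best is None or score > best[1]:
            best = (v, score, result)
    return best


# Usage on a synthetic depth ramp (values in mm) with additive Gaussian noise.
rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:64, 0:64]
clean = 1000.0 + 10.0 * xx + 5.0 * yy
noisy = clean + rng.normal(0.0, 20.0, clean.shape)
v_best, psnr_best, denoised = denoise_with_best_order(
    noisy, clean, [-0.1, -0.2, -0.3, -0.4, -0.5])
print(f"best v = {v_best}, PSNR = {psnr_best:.2f} dB, "
      f"noisy PSNR = {psnr(clean, noisy, clean.max()):.2f} dB")
```

The sweep needs a clean reference, so it only applies to simulated data. With this template, more negative orders move weight away from the center pixel and therefore smooth more strongly.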

3. Experimental Results and Analysis

3.1. Evaluation Method of Depth Image Denoising Effect
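Section 3.1 evaluates denoising quality, and the result tables report PSNR in dB, defined as PSNR = 10·log10(peak² / MSE). This excerpt does not state which peak value the authors use for depth data (for example, the largest depth in the reference or the full range of a 16-bit sensor), so `peak` is an explicit argument in the sketch below. The option to exclude zero-valued (missing) reference pixels is likewise an assumption, and `depth_psnr` is a hypothetical name.

```python
import numpy as np

# Sketch: PSNR for depth maps. `peak` and `ignore_zero` are assumptions;
# the excerpt does not specify how the authors chose them.


def depth_psnr(reference, test, peak, ignore_zero=False):
    """PSNR in dB: 10 * log10(peak^2 / MSE).

    If ignore_zero is True, pixels where the reference depth is 0
    (commonly used to mark missing measurements) are left out of the MSE.
    Returns inf when the images agree on every evaluated pixel.
    """
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    if ref.shape != tst.shape:
        raise ValueError("reference and test must have the same shape")
    if ignore_zero:
        keep = ref != 0
        ref, tst = ref[keep], tst[keep]
    if ref.size == 0:
        raise ValueError("no pixels to evaluate")
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


ref = np.array([[0, 10], [10, 10]], dtype=np.uint16)
test = np.array([[5, 11], [10, 10]], dtype=np.uint16)
print(depth_psnr(ref, test, peak=255.0))
print(depth_psnr(ref, test, peak=255.0, ignore_zero=True))
```

Converting to float64 before subtracting matters for 16-bit depth maps: subtracting two `uint16` arrays directly would wrap around instead of producing negative differences.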

3.2. Simulated Experiment and Analysis of Depth Image

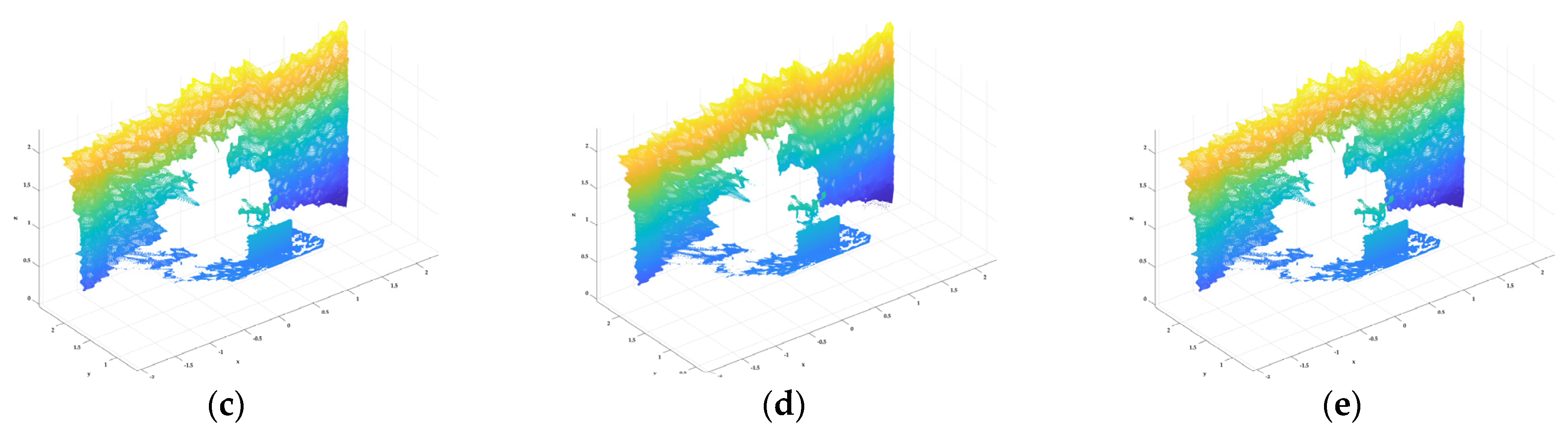

4. Laboratory Field Depth Image Denoising Effect

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset Number | V (best) | PSNR, Fractional Integral Denoising, Best (dB) | PSNR, Noisy Image (dB) | PSNR, Median Filter Denoising (dB) |
|---|---|---|---|---|
| 05989 | −0.3 | 52.992 | 47.307 | 37.480 |
| 03236 | −0.3 | 52.070 | 47.599 | 36.107 |
| 03528 | −0.4 | 53.847 | 50.094 | 37.855 |
| 02350 | −0.3 | 57.391 | 54.611 | 36.309 |
| 09860 | −0.4 | 51.289 | 43.457 | 37.326 |
| 08343 | −0.3 | 50.870 | 46.934 | 32.112 |
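The per-dataset gains in the table above can be recomputed directly from its columns. The snippet below copies the table values and computes the PSNR gain of fractional integral denoising over the noisy input and over median filtering.

```python
# Values copied from the table above:
# (dataset, V (best), PSNR fractional integral, PSNR noisy, PSNR median filter), PSNR in dB.
rows = [
    ("05989", -0.3, 52.992, 47.307, 37.480),
    ("03236", -0.3, 52.070, 47.599, 36.107),
    ("03528", -0.4, 53.847, 50.094, 37.855),
    ("02350", -0.3, 57.391, 54.611, 36.309),
    ("09860", -0.4, 51.289, 43.457, 37.326),
    ("08343", -0.3, 50.870, 46.934, 32.112),
]

# Gain of the fractional integral result over each baseline, per dataset.
gain_vs_noisy = [frac - noisy for _, _, frac, noisy, _ in rows]
gain_vs_median = [frac - median for _, _, frac, _, median in rows]

for (name, v, *_), g_n, g_m in zip(rows, gain_vs_noisy, gain_vs_median):
    print(f"{name} (V = {v}): +{g_n:.3f} dB vs noisy, +{g_m:.3f} dB vs median filter")

print(f"mean gain: {sum(gain_vs_noisy) / len(rows):.3f} dB vs noisy, "
      f"{sum(gain_vs_median) / len(rows):.3f} dB vs median filter")
```

Averaged over the six datasets, the gain is about 4.74 dB over the noisy input and about 16.88 dB over the median filter; the largest gain over the noisy input (7.832 dB) is on dataset 09860.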

| Data Set | PSNR (dB) | Valid Points (Before Outlier Removal) | Valid Points (After Statistical Outlier Removal) |
|---|---|---|---|
| Original depth image | 100.00 | 854,500 | 831,126 |
| Median filter denoising | 34.708 | 855,799 | 831,126 |
| Fractional integral denoising | 53.764 | 854,575 | 841,309 |
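The table above counts valid points before and after statistical outlier removal. The sketch below shows a common formulation of that filter, the same idea as the Point Cloud Library's `StatisticalOutlierRemoval`: for each point, take the mean distance to its k nearest neighbors, then discard points whose value exceeds the global mean plus a multiple of the global standard deviation. The neighbor count `k`, the multiplier `std_ratio`, treating zero depth as invalid, and the brute-force distance computation are assumptions for illustration; the excerpt gives neither the parameters nor how depth pixels were converted to points.

```python
import numpy as np

# Sketch of statistical outlier removal (SOR); k, std_ratio and the
# zero-means-missing convention are assumptions, not the paper's settings.


def count_valid_pixels(depth):
    """Number of depth pixels carrying a measurement (0 assumed to mean no data)."""
    return int(np.count_nonzero(depth))


def statistical_outlier_removal(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbors exceeds
    (global mean + std_ratio * global std) of that statistic.

    Brute-force O(N^2) distances: suitable for small point sets only.
    Returns (kept_points, keep_mask).
    """
    pts = np.asarray(points, dtype=float)
    if not 0 < k < len(pts):
        raise ValueError("k must be between 1 and len(points) - 1")
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)          # a point is not its own neighbor
    knn = np.sort(dist, axis=1)[:, :k]      # k smallest distances per point
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    keep = mean_knn <= threshold
    return pts[keep], keep


# Usage: a dense cluster in the unit cube plus one distant stray point.
rng = np.random.default_rng(1)
cloud = np.vstack([rng.uniform(0.0, 1.0, size=(200, 3)), [[50.0, 50.0, 50.0]]])
kept, mask = statistical_outlier_removal(cloud, k=8, std_ratio=1.0)
print(len(cloud), "->", len(kept))
```

A larger `std_ratio` keeps more points; for full-resolution depth images (hundreds of thousands of points, as in the table) a k-d tree neighbor search would replace the brute-force distance matrix.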

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, T.; Wang, C.; Liu, X. Depth Image Denoising Algorithm Based on Fractional Calculus. Electronics 2022, 11, 1910. https://doi.org/10.3390/electronics11121910


Chicago/Turabian style: Huang, Tingsheng, Chunyang Wang, and Xuelian Liu. 2022. "Depth Image Denoising Algorithm Based on Fractional Calculus." Electronics 11, no. 12: 1910. https://doi.org/10.3390/electronics11121910