1. Introduction

According to a 2021 report from the National Spinal Cord Injury Statistical Center, 290,000 patients in the United States are physically paralyzed owing to acquired spinal cord damage or degenerative neurological diseases such as amyotrophic lateral sclerosis (ALS) [1]. Several studies have developed human–computer interaction (HCI) tools that use bio-signals to help paralyzed patients communicate.

Among various bio-signals, electrooculograms (EOGs) have garnered the attention of researchers because eye movements are one of the few communication methods available to ALS patients at the later stages. Muscular controllability decreases as the disease becomes more severe; however, the muscles related to eye movements continue to operate even in the later stages of ALS. Utilizing gaze movements is therefore a common communication method for patients with ALS [2]. The usual way to track eye movements is to use cameras and image recognition techniques. Recently, however, EOG devices have been studied for tracking gaze movements. The advantages of EOG-based eye-tracking are the low cost of the devices and the ease of use, because they can detect eye movements even when the eyes are closed.

The main challenge in utilizing EOG-based eye-tracking devices is the instability of the EOG, because the signals are often contaminated with noise and artifacts [3]. EOG-based gaze recognition has been widely used to estimate the directions of instantaneous eye movements. The main applications of EOG-based eye-tracking are controlling wheelchairs [4,5] or game interfaces [6,7] in two to four directions; in addition, keyboard-typing systems have been developed using four- to eight-directional eye movements [8,9].

In 2007, Tsai et al. demonstrated that EOG signals can be utilized to write letters directly by moving the gaze in the shape of a letter [10]. Heuristic features were derived from directional changes in the EOG signals to recognize 10 Arabic numerals and four mathematical symbols. The results indicated that 75.5% believability and 72.1% dependability were achieved.

Lee et al. proposed a method to recognize the 26 eye-written letters of the English alphabet using dynamic time warping (DTW), obtaining 87.38% accuracy [11]. Fang et al. recognized 12 basic patterns of eye-written Japanese katakana, achieving 86.5% accuracy by utilizing a neural network and a hidden Markov model [12]. Chang et al. achieved the highest accuracy of 95.93% in recognizing eye-written letters by combining a support vector machine (SVM) and dynamic positional warping [13].
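As a rough illustration of the template-matching idea behind DTW-based recognition, the following minimal sketch computes the classic DTW distance between two one-dimensional sequences and assigns a signal the label of its nearest template. It is not the implementation used in [11]; the function names `dtw_distance` and `classify_by_template`, the absolute-difference cost, and the toy templates are assumptions made for illustration only.

```python
import math


def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    # cost[i][j] holds the minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local cost (assumed: absolute difference)
            cost[i][j] = d + min(cost[i - 1][j],      # a[i-1] also matched b[j-2]... step in a
                                 cost[i][j - 1],      # step in b
                                 cost[i - 1][j - 1])  # step in both
    return cost[n][m]


def classify_by_template(signal, templates):
    """Return the label whose template has the smallest DTW distance to signal."""
    return min(templates, key=lambda label: dtw_distance(signal, templates[label]))


# Hypothetical toy templates: a rising and a falling trace.
templates = {"rise": [0, 1, 2, 3], "fall": [3, 2, 1, 0]}
print(classify_by_template([0, 0, 1, 2, 2, 3], templates))  # prints "rise"
```

Because DTW allows samples to be repeated during alignment, the slower trace `[0, 0, 1, 2, 2, 3]` still matches the rising template exactly, which is why this family of methods tolerates variations in writing speed.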

One of the major issues in recognizing eye-written characters is the instability of EOG signals [3]. EOG is often contaminated with crosstalk, drift, and other artifacts related to body status and movements, which cause the misrecognition of eye-written patterns. Several methods exist for removing noise and artifacts from EOG, including band-pass and median filters, along with wavelet transforms to extract saccades [11,12,13,14,15,16]. However, it is very difficult to extract eye-movement signals accurately because eye movements over a short distance are easily hidden by the artifacts. Moreover, the recognition accuracy can be reduced if the EOG signals are distorted when noise is removed.
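To make the median-filtering step mentioned above concrete, here is a minimal sketch of a sliding-window median filter written with the Python standard library only. The function name `median_filter`, the default window length, and the edge handling (the window simply shrinks at the boundaries) are assumptions for illustration and are not taken from the cited studies.

```python
from statistics import median


def median_filter(signal, window=5):
    """Sliding-window median filter; the window shrinks at the signal edges."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    n = len(signal)
    filtered = []
    for i in range(n):
        lo = max(0, i - half)          # clip the window at the left edge
        hi = min(n, i + half + 1)      # clip the window at the right edge
        filtered.append(median(signal[lo:hi]))
    return filtered


# A one-sample spike is suppressed, while a step change is left in place.
spike = median_filter([0, 0, 0, 9, 0, 0, 0], window=3)
step = median_filter([0, 0, 0, 1, 1, 1], window=3)
```

In this toy case the isolated spike disappears and the step edge is kept, which hints at why median filters are a common choice when abrupt, saccade-like transitions need to be preserved. A window that is too long can still flatten short eye movements, in line with the distortion risk noted above.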

Over the past decade, the application of deep neural networks (DNNs) has become a popular approach to manage these preprocessing issues [17]. The raw data are used directly after minimal processing, and noise is expected to be removed or ignored by the convolutions. One of the limitations of DNNs is that they require a large amount of data to correctly recognize complicated signals. For example, a DNN achieved a top-5 image classification accuracy of 98.8%; however, it should be noted that there were 14 million images in the dataset [18,19]. Obtaining a large dataset is often infeasible, especially when the data must be measured from human participants, as with eye-written characters. Measuring EOG signals from many participants is often difficult because of research budgets, participants’ conditions, or privacy issues.

This study presents a method that utilizes a Siamese network structure [20,21] to address the limitation of a small dataset. The Siamese network was developed to recognize images by comparing two inputs. The network does not learn to classify; instead, it is trained to recognize whether two given images belong to the same group. With the Siamese network, a classification problem over multiple groups is thus simplified into a binary classification. Because the simpler network requires less data for training, it is suitable for problems with a limited amount of training data.
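The reduction from multi-class to binary classification can be sketched as follows. This is only an illustration of the pairing idea, not the network proposed in this paper: `make_pairs`, `classify`, and `toy_similarity` are hypothetical names, and `toy_similarity` is a hand-written stand-in for the similarity score that a trained Siamese network would output.

```python
from itertools import combinations


def make_pairs(samples, labels):
    """Turn a labeled dataset into (x1, x2, same) pairs for binary training."""
    pairs = []
    for i, j in combinations(range(len(samples)), 2):
        same = int(labels[i] == labels[j])  # 1 if both belong to the same group
        pairs.append((samples[i], samples[j], same))
    return pairs


def classify(query, references, similarity):
    """Assign the label of the reference that is most similar to the query."""
    best_label, best_score = None, float("-inf")
    for ref, label in references:
        score = similarity(query, ref)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def toy_similarity(a, b):
    # Placeholder (assumption): negative squared distance instead of a trained network.
    return -sum((x - y) ** 2 for x, y in zip(a, b))


samples = [[0.0, 0.1], [0.1, 0.0], [1.0, 0.9], [0.9, 1.0]]
labels = ["a", "a", "b", "b"]
pairs = make_pairs(samples, labels)  # 4 samples yield 6 pairs
print(classify([0.8, 1.1], list(zip(samples, labels)), toy_similarity))  # prints "b"
```

Note that n labeled samples yield n(n-1)/2 pairs, so even a small dataset provides many binary training examples. A class is recognized at test time by comparing the query against labeled references rather than through a fixed output layer.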

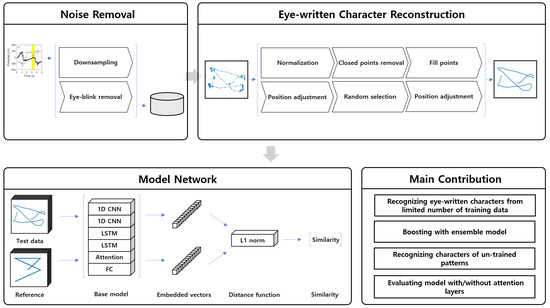

The main contribution of this paper is a methodology for recognizing eye-written characters that overcomes the data size issue. A neural network structure was proposed by employing the concept of the Siamese network, and a boosting methodology was suggested to enhance the performance of the network. The proposed method was validated while reducing the amount of training data.

This paper is organized as follows:

Section 2 presents the methodology for preprocessing and classifying eye-written characters;

Section 3 presents the experimental results that evaluate the proposed method from different perspectives; and

Section 4 summarizes the current study and outlines further research issues.

4. Conclusions and Outlooks

This study proposed a method with a Siamese network and an attention mechanism to classify eye-written characters with a limited set of training data. The proposed method achieved 92.78% accuracy with a train–test ratio limited to 2:1. This result is notable because previous studies used 90–94.4% of the data for training and tested on the rest. The recognition accuracy of the proposed method remained at approximately 80% even when the model was trained with 50 samples and tested with 490 samples. Moreover, this study showed the possibility of classifying an untrained pattern by utilizing the Siamese network.

One of the limitations of this study is that a single dataset of Arabic numerals was utilized to validate the proposed method. Although this is the only public database to the best of our knowledge, this issue should be addressed in a future study by collaborating with other research groups.

Another limitation is that the study was conducted in a writer-dependent manner, which does not guarantee the performance when the method is tested on a new user’s data. Although it is assumed that the proposed method may achieve high performance in writer-independent validation because the training–test ratio was lowered to 1:10, experimental results will be required to verify this assumption.

In future studies, the validity of the proposed method should be examined with other time-series signals such as online handwritten characters, electrocardiograms, and electromyograms. In addition, this study can be extended by calculating the similarities for untrained patterns. The performance of the Siamese network is expected to improve as the variation in the training patterns increases, because it learns to calculate the difference between two images across various classes. We plan to study how the accuracy changes with the number of classes and training patterns in the near future.