1. Introduction

The motivation for this paper came from the question of whether we, as researchers of decision-making, would recommend that managers decide “straight from the gut” [1] or “don’t trust your gut” [2]. Accordingly, the purpose of this paper is to explore the effects of non-conscious vs. conscious ways of making decisions in a dynamic decision-making task. More specifically, we initially wanted to ask whether humans perform better when they have substantial time available to think about a dynamic problem or when time for deliberate consideration is limited. The time available for a decision can be limited because decisions have to be made immediately or because individuals are distracted during their deliberation time.

In light of some recent findings in psychology, and according to some anecdotal evidence, non-conscious forms of decision-making appear to be more effective than deliberate forms more often than proponents of rational problem solving would expect. However, these findings were mostly obtained with static, single tasks. Therefore, in accordance with the theme of this special issue on dynamic decision-making in controlled experiments, we wanted to investigate the effectiveness of deliberate vs. non-conscious cognitive processing on decision-making in a dynamic context and to derive first insights into dynamic decision-making that could subsequently be used for advanced studies.

To this end, the remainder of this paper is organized in four sections. In the second section, we review the state of the (mostly psychological) literature on deliberate vs. non-conscious decision-making. We also briefly explain the characteristics of dynamic tasks and point out that most experiments on the deliberate/non-conscious distinction employ static, single decision tasks. In the third section, we present the design of an experiment intended to provide preliminary insights into the effects of non-conscious cognitive processing in environments with high dynamic complexity. In the fourth section, we present and discuss the results of this experiment. The paper closes with a section that discusses implications for further research, formulating a framework for advanced studies in this domain and concrete recommendations for future experiments.

2. Literature Review

2.1. Evidence in Favor of Non-Conscious Deliberation When Making Decisions

Over the last decades, non-conscious cognitive processing of information and subsequent decision-making have received substantial attention in psychological research [3,4,5,6,7,8,9,10,11]. While authors use different terminology (“intuitive”, “unconscious”, “recognition-primed”, “deliberation without attention”, or “thinking without thinking”), they all describe the same phenomenon: making decisions without conscious consideration of what the best decision would be. In this paper, we use the term “non-conscious cognitive processing in decision-making” to refer to this phenomenon. In this sense, the decision itself is expressed consciously, but the cognitive processes preceding it are not consciously directed at the decision to be made. Note that such decision-making is neither “magical” nor “random”; rather, people acquire the ability to make non-conscious decisions through years of engagement with a subject [9].

Wilson [6] illustrates the crucial role that non-conscious cognitive processes play with the example of a man who lost his sense of proprioception (i.e., the sense of the relative position of body parts). With a great deal of effort, the patient had to replace non-conscious proprioception with conscious control of his body. Whenever his concentration lapsed, he would lose control over his body and end up “in a heap of tangled limbs on the floor”.

On the basis of experimental as well as real-world purchase decisions, Dijksterhuis and colleagues [12,13] conclude that non-conscious thinkers are better able to make the best choice among complex products, whereas conscious thinkers are better able to make the best choice among simple products. Popular books such as Blink [14] maintain that expert decision-making is often instantaneous and difficult to access by conscious processes and that, in specific circumstances, it leads to high-quality decisions.

A classic example of expert decision-making, reported by Simon as early as 1957, already relates to this debate: a chess player deciding on the next move, an activity that is thought to involve a highly analytical approach. Expert chess players indicate that good moves usually come to mind after a few seconds of looking at the board, after which considerable time is spent verifying that the move does not have hidden weaknesses [15]. Thus, although a candidate decision comes to mind almost immediately, an analytical and time-consuming process is used to check this first available option; this analysis may reveal that the first option is not beneficial and should be replaced by another move. Simon [15] also describes how, in stressful situations, intuitive decisions may be based on “primitive urges” such as the need to reduce embarrassment or guilt. Research on heuristics and biases [16,17,18,19] shows convincingly that human judgment is biased in systematic ways.

Gigerenzer and colleagues argue that the same simplifying heuristics that are responsible for cognitive biases are the cognitive mechanisms “that make us smart” [20]. In their view, we are able to survive in a complex world precisely because these heuristics allow us to make fast decisions that are frugal, in the sense that they require little information as input. Thus, Gigerenzer assumes that non-conscious cognitive processing is a basic characteristic and success factor of human beings. Building on these claims, Mortensen et al. [21] argue that decision-makers should be advised to rely on non-conscious information processing when making difficult decisions.

In summary, despite all the biases and heuristics related to human decision-making and all the claims by proponents of rational decision-making, there is evidence that non-conscious processing of information is effective for decision-making on some occasions. This paper tries to shed additional light on this question in the context of dynamic tasks, which differ from the static, single tasks mostly used in decision-making experiments.

2.2. Decision-Making in Dynamic Environments

Individual decision-making in dynamic systems differs from decision-making in static tasks, which are mostly used when non-conscious processing in decision-making is studied. We characterize dynamic decision-making by three features:

A series of decisions has to be made, not just one (repeated decision-making);

The system’s state changes over time based on past decisions; and

There is some element of time pressure involved, although not all individuals subjectively experience it to the same degree.

Thus, the current state of a dynamic decision-making environment depends on the past system states and the decisions that have been made in the past.
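To make the first two features concrete, the definition can be written as a discrete-time state equation. The notation is ours and purely illustrative: x_t denotes the system state in period t, u_t the decision taken in that period, and f an unspecified transition rule. The composed map F_t shows that the current state depends on the initial state and on the entire history of past decisions; the third feature, time pressure, is not captured by this formalism.

```latex
x_{t+1} = f(x_t, u_t), \qquad t = 0, 1, \dots, T-1 \\
x_t = F_t(x_0, u_0, \dots, u_{t-1}), \qquad
F_1 = f, \qquad
F_{t+1}(x_0, u_0, \dots, u_t) = f\bigl(F_t(x_0, u_0, \dots, u_{t-1}),\, u_t\bigr)
```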

The distinction between static and dynamic decision-making is related to the different types of complexity that people experience in decision situations. In this regard, detail complexity and dynamic complexity can be differentiated [22,23]. Detail complexity can be further divided into three sub-components: the number of elements in a system, the number of connections between elements, and the types of functional relations between elements [24]. Dynamic complexity comprises the variability of a system’s behavior over time and the variability of a system’s structure (assuming that a system can remain the same system when its structure changes, as long as its underlying goal set is not substantially modified; cf. [25]). An important proposition for this study is that dynamic decision-making involves effects of dynamic complexity that do not exist in static decision-making situations. Static and dynamic decision-making might therefore differ in their characteristics and success strategies because of the effects of dynamic complexity in the latter.

In several fields of expertise, decision-makers confronted with time pressure and uncertainty typically carry out the first course of action that comes to mind. This idea is similar to Simon’s assertion that “intuition and judgment—at least good judgment—are simply analyses frozen into habit” [15] (p. 63). This process, also termed “recognition-primed” decision-making, actually consists of a range of strategies. In its simplest form, the strategy comes down to sizing up the situation and responding with the first option identified. If the situation is not clear, the decision-maker may supplement this strategy by mentally simulating the events leading up to the situation. In a situation that is changing continuously, a different kind of simulation may be applied as well: the proposed plan of action is mentally simulated to see whether unacceptable unintended consequences arise. In a review study, Klein [26] considers the conditions under which recognition-primed decision-making appears to be appropriate: when the decision-maker has considerable expertise relevant to the situation at hand and is under time pressure, and when there is uncertainty and/or goals are ill-defined. Recognition-primed decision-making is less likely to be used for combinatorial problems, when a justification for the decision is required, and when the views of different stakeholders have to be taken into account.

We understand dynamic decision-making as falling into the first category: we study individual decision-making, stakeholders are not considered, and there is no need to explain (or justify) decisions. By definition, dynamic tasks involve a degree of time pressure and uncertainty about the system’s future, both of which stem from the dynamic complexity of the situation and the difficulties people have in estimating developments over time [27]. Thus, it seems conceivable that, for individuals confronted with dynamic problems, non-conscious processing or recognition-primed decision-making is the preferred approach [28,29], in contrast to the more “classical” assumption that conscious reasoning and critical deliberation are beneficial in such cases [30,31]. Although the empirical evidence from the static tasks usually employed does not unanimously support either view [32,33], for now we consider the arguments in favor of non-conscious decision-making strong enough to serve as the basis for our study of dynamic decision-making.

In summary, in dynamic situations, decision-makers experience not only the effects of detail complexity, as frequently happens in static tasks; dynamic complexity also plays an important role and might hamper effective decision-making. Thus, the initial assumption of this research is that non-conscious cognitive processing of information is advantageous for decision-making in dynamic tasks. In a pilot study, we want to explore whether the assumed superiority of non-conscious decision-making in static tasks also holds for dynamic tasks or whether a thorough consideration of the situation is required.

3. Experimental Section

We explore whether non-conscious processing of the situation is superior to deliberate consideration in dynamic decision-making by means of a pilot laboratory experiment with three experimental groups. In Group 1, subjects have to start making decisions right after the task has been introduced to them (“immediate condition”). Group 2 represents conscious decision-making (“consideration condition”): subjects in this group are given three minutes to contemplate the task before they are allowed to start working on it. Group 3 tests non-conscious decision-making (“distracted condition”): subjects in this group are also not allowed to start using the simulator immediately but have to wait for three minutes. However, in contrast to the consideration condition, they are occupied with another cognitive task during this time (in our case, solving simple Sudoku puzzles). All subjects work individually, and communication with other participants is not allowed. A timetable of the experiment, which resulted from two trial runs of the experiment with colleagues and students, is depicted in Table 1.

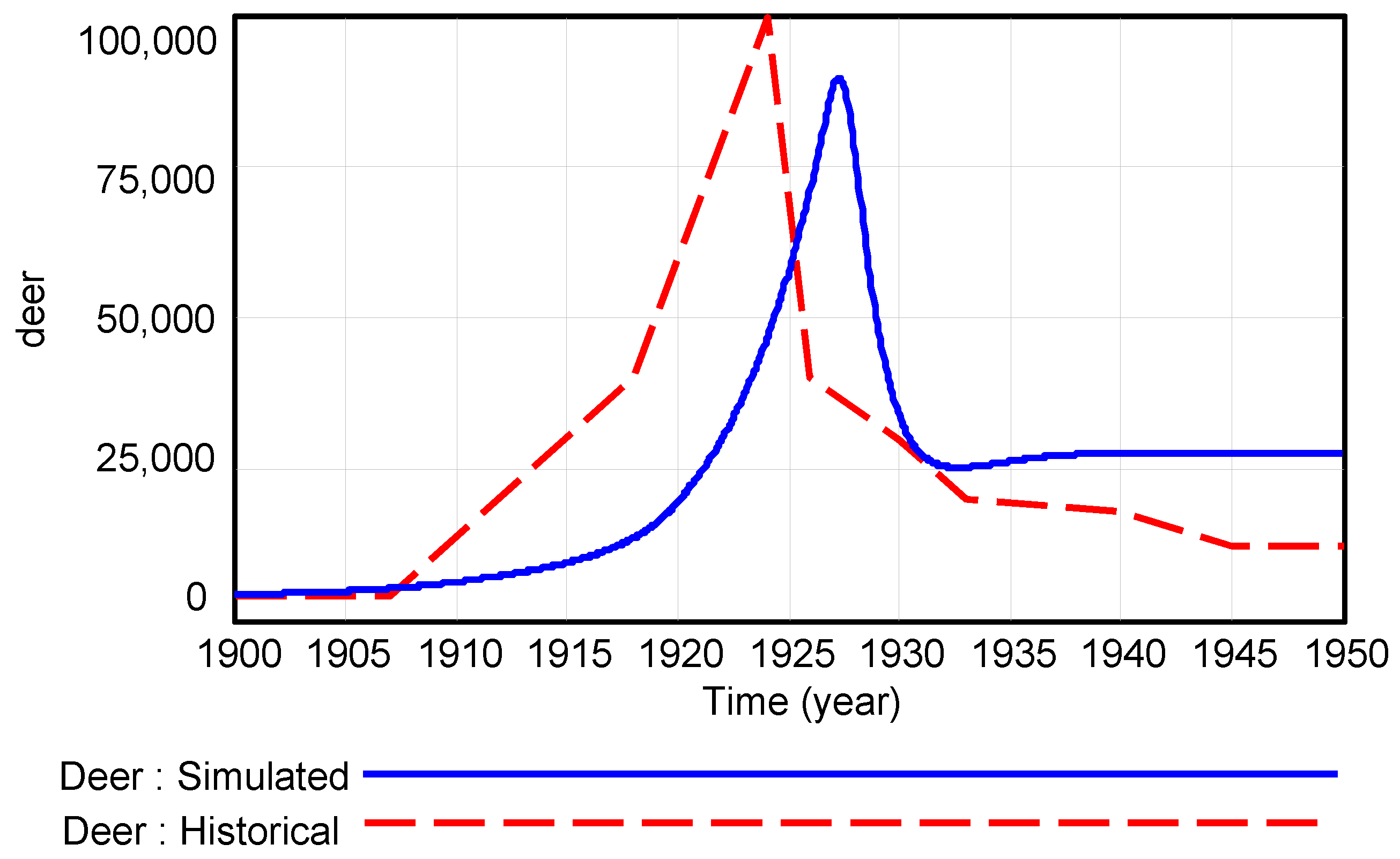

We use a computer simulation as the dynamic task in the experiment [34]; it is based on the Kaibab Plateau system dynamics model, which is well documented in the literature [35]. Figure 1 depicts the overshoot-and-collapse behavior of the deer population represented in the model (for a structural explanation of overshoot and collapse, cf. [22]). The model is based on real events that took place on the Kaibab Plateau in Arizona between 1900 and 1950. Accordingly, the figure also shows estimated historical data; differences from the simulation outcomes are due to estimations (in the model as well as in the real data) and to factors not considered in the model, for instance volatile weather conditions. The Kaibab model has been used in a variety of educational settings, for example, to explain dynamic complexity resulting from feedback loops [36,37,38] and unintended consequences of human policies in ecological systems [39]. In this case, the human interference with nature was the near-extinction of the deer’s predators (cougars, wolves, and coyotes) between 1907 and 1920.

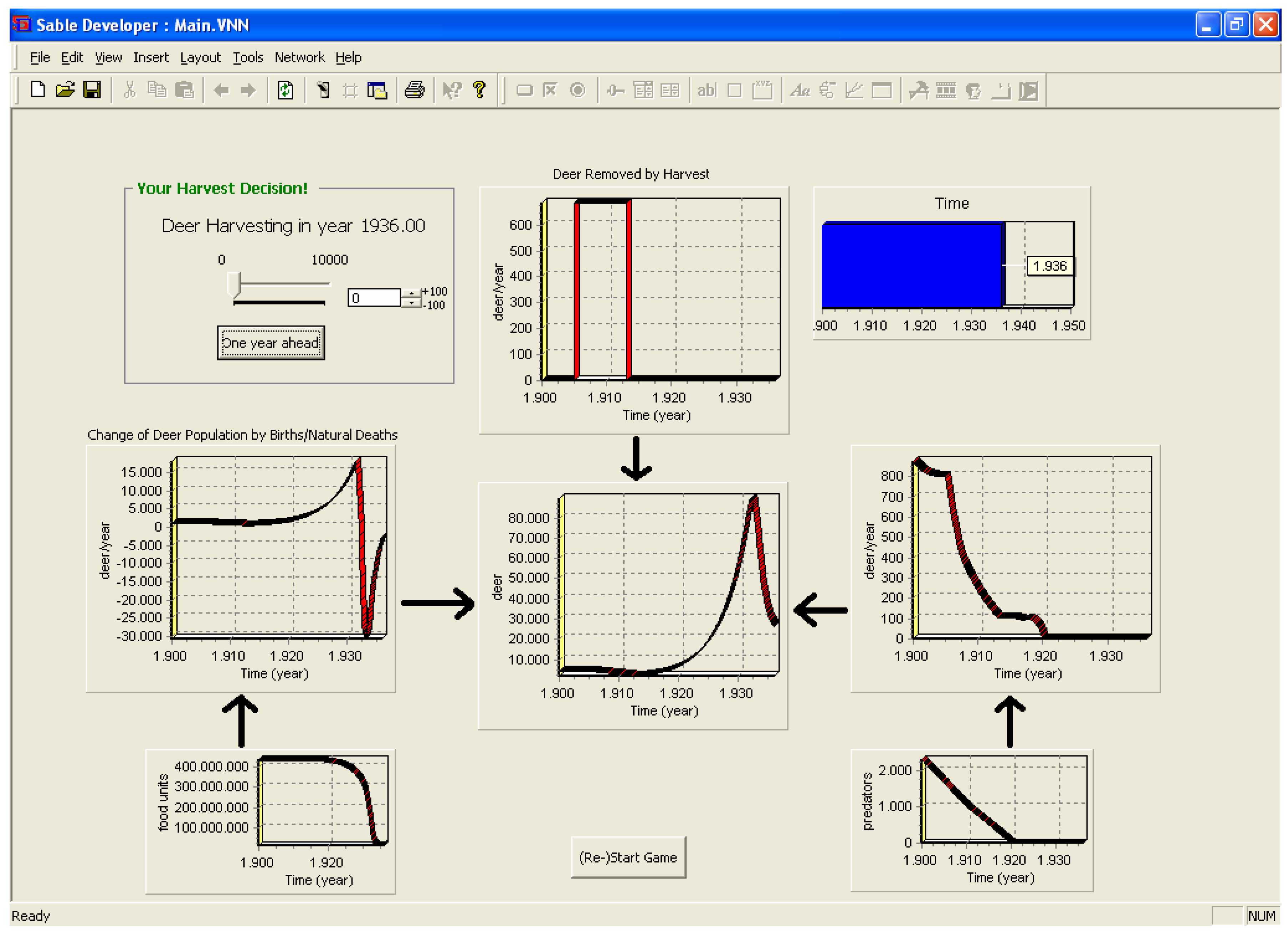

In the simulation, the model is used with one extension: users can decide, for each simulated year, on a “harvest rate” of deer (meaning either the hunting of deer or their transfer to other regions). By sensibly controlling the number of deer, it is possible to avoid the enormous increase in the deer population and its subsequent collapse due to insufficient food. The deer harvest rate is the only decision users have to make. The user interface of the simulator is depicted in Figure 2. The model was developed using Vensim [40]; the user interface is programmed in Sable [41] (for another version of a Kaibab simulator, see [42]; in that simulator, subjects can control the number of predators as well). The goal for subjects is to stabilize the deer population at the highest possible level over the entire simulation run.

Subjects were students in a course of the bachelor program in business administration at a Dutch university. They were told that participating in the experiment was part of the course; however, it was made clear that performance in the experiment would not influence grading. Subjects’ performance was assessed with a score that combines the stability of the population with its size, aggregated over all 50 simulated years: the score is the average number of deer divided by the standard deviation of the population around that average. This score is in line with the actual goals of nature reserve authorities. On the one hand, they want to maintain a reasonably high number of individuals of a species to secure genetic diversity and to hedge the population against infrequent but not impossible extreme natural conditions (e.g., very harsh winters). On the other hand, a stable number of individuals of a species minimizes amplifying negative effects on other species (e.g., in a predator-prey situation).
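The performance score described above can be sketched in a few lines of Python. This is an illustrative reading of the text, not the authors’ code: the function name `performance_score` is hypothetical, and we assume the population standard deviation (`statistics.pstdev`) rather than the sample standard deviation, since the text does not specify which was used. The handling of a zero standard deviation is likewise our own design choice.

```python
from statistics import fmean, pstdev


def performance_score(deer_by_year):
    """Average deer population divided by its standard deviation.

    Assumption: the population standard deviation (pstdev) is used; the text
    does not say whether a sample or population SD was applied.
    """
    mean = fmean(deer_by_year)
    sd = pstdev(deer_by_year)
    if sd == 0:
        # A perfectly constant population: unbounded score if deer survive,
        # zero if the population is extinct throughout.
        return float("inf") if mean > 0 else 0.0
    return mean / sd
```

For instance, a population hovering between 10,000 and 10,100 deer scores about 201, whereas one swinging between 5000 and 15,100 scores about 2, although both have the same mean of 10,050.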

By definition, dynamic tasks take a certain period of time to complete because several decisions have to be made and effects only occur after some time. Thus, we expect our results to be affected in two ways:

Differences between the experimental groups are expected to be rather small. During the 50 simulation rounds that subjects play per experimental run, there is some time for non-conscious processing of information while working on the task in all experimental conditions. For instance, subjects in the distraction condition are not distracted while actually working with the simulation; subjects in the immediate condition have some time for non-conscious cognitive processing; and subjects in the consideration condition have some additional time for non-conscious cognitive processing. We return to this point in the final section of this paper as a systematic issue of dynamic decision-making research on non-conscious decisions.

Following the reasoning of the first point, we assume that differences between groups will become smaller in the course of the experiment. In particular, differences in experimental run 2 should be much smaller (or even non-existent) than in experimental run 1.

4. Results and Discussion

A total of 120 subjects took part in the experiment, which was conducted in 2008. After 18 cases were eliminated due to missing or incomplete data, the group sizes were as follows: immediate condition n = 41, consideration condition n = 30, distraction condition n = 31. In total, 58 subjects were male and 44 were female; subjects’ ages ranged from 19 to 30, with an average of about 21. No subject reported having known the Kaibab Plateau task before. Since subjects were randomly assigned to a time slot, and time slots were randomly allocated to an experimental condition, we assume that the experimental groups were randomly composed. We also assume that the groups were equivalent with regard to the purpose of our experiment: all subjects were second-year Business Administration students, and there were no statistically significant differences in age or gender distribution between groups. At the end of the experiment, subjects were asked about the perceived difficulty of the task, the time pressure they experienced, and their estimation of the realism of the task. As with the demographic data, none of these items showed significant differences between experimental groups.

In terms of average deer population, subjects’ results are far from optimal. As Sterman [43] indicates, a deer population of 32,000 can be sustained without substantial fluctuations. In comparison, the average population size over all subjects in the experiment is 9433 for the first run and 11,505 for the second run. Although the average size of the deer population increases significantly from run 1 to run 2, this increase is accompanied by a significantly larger standard deviation. In other words, while subjects manage to increase the average population size, fluctuations around the mean increase as well, an indication of the common overshoot-and-collapse behavior of the system. Accordingly, the performance score (mean/standard deviation) does not differ significantly between the first and the second run over all subjects.

Table 2 and Table 3 show the results of an analysis of variance for the first and the second run, respectively. We tested the performance score for between-group differences among the three experimental groups. For both runs, within-group differences are much larger than between-group differences, and there are no statistically significant differences between the three experimental groups in the performance score.
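For readers less familiar with the analysis of variance, the quantity behind the comparison of within-group and between-group differences is the F ratio. The following Python sketch computes a one-way ANOVA F statistic from scratch; it is a textbook illustration, not the authors’ analysis script, and the function name `one_way_anova_f` is hypothetical. In practice, `scipy.stats.f_oneway` returns the same F statistic together with a p-value.

```python
from statistics import fmean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    groups: a list of samples, e.g. performance scores per experimental condition.
    """
    values = [x for g in groups for x in g]
    grand_mean = fmean(values)
    # Variation of group means around the grand mean (between groups).
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean (within groups).
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

A small F indicates that the group means differ little relative to the variation within groups; a non-significant F, as reported here for both runs, corresponds to this situation.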

In the knowledge test conducted at the end of the experiment, subjects had to answer twelve questions concerning their understanding and recollection of the task (shown in the appendix). Each correct answer was coded as one point, leading to a maximum of twelve points. An analysis of variance of the test results indicates differences between groups. To find out which groups differed from each other and in which direction, we ran t-tests, which are summarized in Table 4.
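The text does not state which t-test variant was used. As one common option, the Python sketch below computes Welch’s t statistic, which does not assume equal variances in the two groups, together with the Welch-Satterthwaite degrees of freedom. The function name `welch_t` is hypothetical; `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and also returns a p-value.

```python
from math import sqrt
from statistics import fmean, variance


def welch_t(a, b):
    """Return Welch's t statistic and degrees of freedom for samples a and b."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)  # squared standard error of the mean of b
    t = (fmean(a) - fmean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

The sign of t gives the direction of the difference: with the immediate group’s knowledge scores passed as `a`, a positive t means that this group scored higher.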

Between the distraction and the consideration group, no statistically significant differences can be found. However, there are significant differences between the immediate and the distraction group and between the immediate and the consideration group. In both cases, subjects in the immediate group scored better in the knowledge test than subjects in the other group.

A qualitative inspection of subjects’ results indicates that overshoot-and-collapse behavior occurs least often in the distraction group (since effects are probably largest in run 1, only this run is considered): 7 out of 31 subjects (22.6%) showed the typical boom-and-bust cycle. By comparison, 10 out of 30 subjects (33.3%) in the consideration group and 15 out of 41 (36.6%) in the immediate group showed a collapse.
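The classification of trajectories was made by qualitative inspection. To make such a classification reproducible, a simple rule could be used instead; the Python sketch below shows one hypothetical rule (a trajectory counts as overshoot-and-collapse if it rises above its starting value and later falls to at most half of its peak). The function names and the 50% threshold are arbitrary assumptions, not the criterion applied in this study. The helper `share_percent` reproduces the reported percentages from the counts.

```python
def shows_overshoot_and_collapse(series, drop_fraction=0.5):
    """Hypothetical rule: rise above the start, then fall to <= drop_fraction * peak."""
    peak = max(series)
    peak_index = series.index(peak)  # first occurrence of the maximum
    after_peak = series[peak_index + 1:]
    rose = peak > series[0]
    collapsed = bool(after_peak) and min(after_peak) <= drop_fraction * peak
    return rose and collapsed


def share_percent(count, total):
    """Percentage of subjects, rounded to one decimal place."""
    return round(100 * count / total, 1)


print(share_percent(7, 31), share_percent(10, 30), share_percent(15, 41))
# prints: 22.6 33.3 36.6
```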

5. Conclusions

The results of our experiment justify a variety of further evaluations. Most strikingly, no differences in task performance between groups could be found. Although this result might simply reflect a fact (i.e., there are no differences between conscious and non-conscious decision-making in dynamic tasks), we assume, based on the apparent differences for other types of tasks and on the theoretical considerations presented in the literature section, that it is more likely an artifact of our experimental design and of characteristics of the task we used. Therefore, this section discusses explanations for our findings, resulting in a framework for future research and possible changes to experimental designs.

5.1. A Framework for Researching Non-Conscious Dynamic Decision Making

Since subjects’ performance does not significantly increase from run 1 to run 2, no learning seems to have taken place: while subjects achieve a higher average deer population in the second run, the standard deviation increases as well. We hypothesize that subjects are not able to comprehend the structure of the system and that they can neither deduce nor control the corresponding behavior mode (overshoot-and-collapse).

Thus, concerning the absence of group differences in task performance, we assume that the experimental setting did not differentiate sufficiently between groups. First, the 3-min time span for consideration or distraction might simply be too short compared to the total duration of the experiment and the complexity of the task. Second, the nature of dynamic tasks makes it difficult to prevent subjects from processing task information, either consciously or non-consciously, during the game run, since a dynamic task by definition takes a while (and, during this time, experimental groups can no longer be treated differently). We therefore identify a systematic issue for experimental research on non-conscious decision-making in dynamic tasks.

Another point to consider is the complexity of the task. Although the task is structurally not trivial (it comprises more than a dozen feedback loops; two integration structures, namely deer and food; and some non-linear relationships, for instance, the food regeneration time), participants in two pre-tests considered it understandable. However, there is obviously a substantial gap between knowing what one should do in principle and actually doing it by controlling a dynamic system that is not open to detailed inspection (only behavior graphs and the basic system structure are shown).

Based on these general insights from our exploratory experiment and literature review, we infer that the effects of different decision-making styles (non-conscious vs. deliberate) might be strongly moderated by task characteristics, most notably the structural complexity of the dynamic system used in the task. Future research in this domain should therefore take task characteristics and system complexity explicitly into account. We summarize this inference in the conceptual model shown in Figure 3 and formulate the following propositions for future studies:

Decision-making style (non-conscious vs. deliberate) affects performance in a dynamic decision-making task

Due to the nature of dynamic decision-making, the effect of decision-making style on performance in a dynamic decision-making task is relatively small between experimental groups (systematic issue)

The effect of decision-making style on performance in a dynamic decision-making task is strongly moderated by task characteristics

Among the task characteristics that strongly moderate the effect of decision-making style on performance in a dynamic decision-making task, structural complexity of the underlying system plays an important role

5.2. Adjustments to Experimental Design in Potential Subsequent Studies

In the analysis of our data, we found one significant difference between experimental groups, namely in the knowledge test. The direction of the difference (the immediate group scored better than both the consideration and the distraction group) is counter-intuitive: the group that had the least opportunity to cognitively process information about the task (either consciously or non-consciously) scored best. We can only explain this by a shorter overall assessment time (subjects being more concentrated and motivated) and a shorter interval between reading the instructions and filling out the test questionnaire. Since some of the questions merely test the recollection of facts, a shorter time span might aid in recalling these facts. In subsequent research, the timing of the knowledge questions should be harmonized between groups.

Mitigating the systematic issue of non-conscious processing during periods of instruction (which inevitably blurs the differences between experimental groups) is more difficult. One suggestion in this regard would be that subjects in the consideration group have to verbally explain all their decisions and that subjects in the distraction group must solve a brief, unrelated “distraction puzzle” before making their decisions.

The last improvement point for future experimental designs concerns the performance score that we used. We gave subjects a verbal goal (“stabilize the deer population on the highest possible level”); however, we did not explain in detail how their performance was measured, and we did not display the performance score explicitly during the simulation. Related to this, we assume that making the simulation more “transparent” (by providing information about the system structure) might help subjects achieve a better result. However, we question whether this would be more realistic and whether it would interfere with the experimental setting, because subjects would then need time to explore the system, during which non-conscious processing of information could take place in all groups. Thus, it would add to the systematic issue of ongoing non-conscious processing in dynamic decision-making.

In summary, we believe that we address a relevant question. Extending the existing research on non-conscious decision-making to dynamic tasks is worthwhile, since most decision settings in reality are dynamic and research shows that individuals deal poorly with dynamics. However, further attempts have to be made to investigate the effects of conscious vs. non-conscious decision-making with an experimental research design. Such subsequent experiments could also include non-student subjects, such as decision-makers in companies, and examine the effects of making decisions in groups (including communication) on decision quality.