Aligning Predictor-Criterion Bandwidths: Specific Abilities as Predictors of Specific Performance

Abstract

1. Introduction

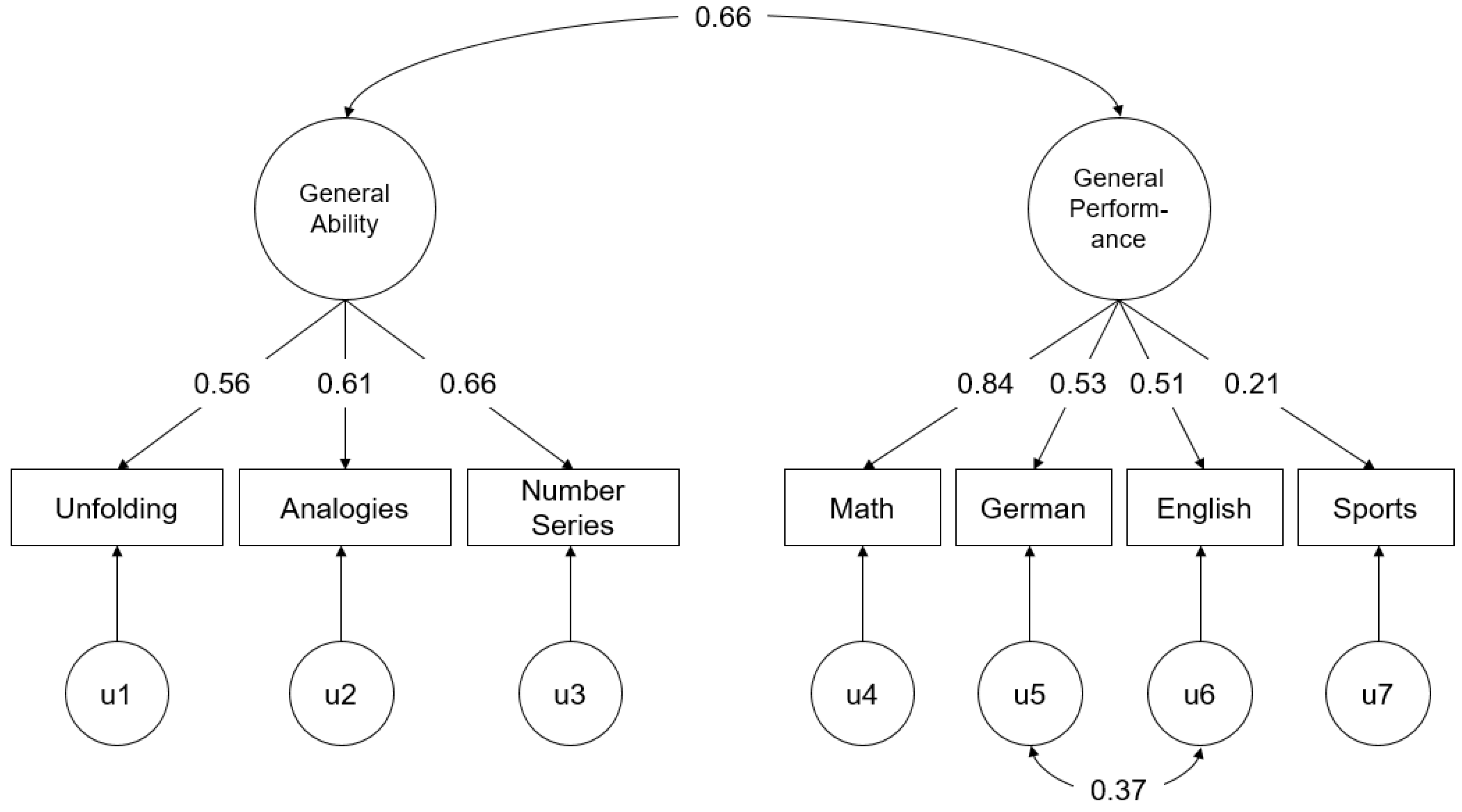

2. Materials and Methods

Analytic Strategy

3. Results

4. Discussion

Limitations and Future Research Directions

Conflicts of Interest

Appendix A

| Subset | R² | Unfolding (U) | Analogies (A) | Number Series (N) |
|---|---|---|---|---|
| General Academic Performance | | | | |
| General Ability (G) | 0.138 | 0.001 | 0.019 | 0.002 |
| G,U | 0.139 | | 0.019 | 0.008 |
| G,A | 0.157 | 0.000 | | 0.001 |
| G,N | 0.140 | 0.007 | 0.018 | |
| G,U,A | 0.158 | | | 0.026 |
| G,U,N | 0.147 | | 0.037 | |
| G,A,N | 0.158 | 0.026 | | |
| G,U,A,N | 0.184 | | | |
| Math Performance | | | | |
| G | 0.180 | 0.000 | 0.009 | 0.001 |
| G,U | 0.180 | | 0.010 | 0.002 |
| G,A | 0.189 | 0.001 | | 0.000 |
| G,N | 0.181 | 0.001 | 0.008 | |
| G,U,A | 0.190 | | | 0.029 |
| G,U,N | 0.182 | | 0.037 | |
| G,A,N | 0.189 | 0.030 | | |
| G,U,A,N | 0.219 | | | |
| German Performance | | | | |
| G | 0.068 | 0.001 | 0.008 | 0.006 |
| G,U | 0.069 | | 0.010 | 0.006 |
| G,A | 0.076 | 0.004 | | 0.002 |
| G,N | 0.074 | 0.001 | 0.003 | |
| G,U,A | 0.079 | | | 0.005 |
| G,U,N | 0.075 | | 0.009 | |
| G,A,N | 0.078 | 0.006 | | |
| G,U,A,N | 0.084 | | | |
| English Performance | | | | |
| G | 0.063 | 0.006 | 0.020 | 0.000 |
| G,U | 0.069 | | 0.016 | 0.007 |
| G,A | 0.083 | 0.001 | | 0.004 |
| G,N | 0.063 | 0.013 | 0.024 | |
| G,U,A | 0.084 | | | 0.016 |
| G,U,N | 0.076 | | 0.024 | |
| G,A,N | 0.087 | 0.013 | | |
| G,U,A,N | 0.100 | | | |
| Sports Performance | | | | |
| G | 0.001 | 0.003 | 0.004 | 0.000 |
| G,U | 0.003 | | 0.002 | 0.001 |
| G,A | 0.005 | 0.001 | | 0.002 |
| G,N | 0.001 | 0.003 | 0.006 | |
| G,U,A | 0.006 | | | 0.005 |
| G,U,N | 0.004 | | 0.007 | |
| G,A,N | 0.007 | 0.004 | | |
| G,U,A,N | 0.011 | | | |
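
The specific-ability columns in the table above can be read as the gain in R² when that ability is added to the predictor subset named in the first column. The following is a minimal sketch of that arithmetic for General Academic Performance, not the author's analysis code: the dictionary layout and the helper `incremental_r2` are hypothetical, and only the R² values are taken from the table. Because the published R² values are rounded to three decimals, a recomputed difference can deviate from the reported one by 0.001 (for example, adding U to G,A gives 0.001 here against a reported 0.000).

```python
# R² values for General Academic Performance, copied from the table above.
# Keys are predictor subsets: G = general ability, U = Unfolding,
# A = Analogies, N = Number Series.
r2 = {
    frozenset("G"): 0.138,
    frozenset("GU"): 0.139,
    frozenset("GA"): 0.157,
    frozenset("GN"): 0.140,
    frozenset("GUA"): 0.158,
    frozenset("GUN"): 0.147,
    frozenset("GAN"): 0.158,
    frozenset("GUAN"): 0.184,
}


def incremental_r2(subset: str, added: str) -> float:
    """Hypothetical helper: R²(subset + added) - R²(subset), rounded to 3 decimals."""
    base = frozenset(subset)
    return round(r2[base | {added}] - r2[base], 3)


# Print the gain for every ability that is not yet in the subset.
for subset in ("G", "GU", "GA", "GN", "GUA", "GUN", "GAN"):
    for ability in "UAN":
        if ability not in subset:
            print(f"{subset} + {ability}: {incremental_r2(subset, ability):.3f}")
```

The same lookup could be repeated for the other four criteria by swapping in their R² column.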


1 These results are supplemented by a hierarchical regression analysis showing the incremental contribution (over general ability) of each specific ability by itself, in a pair, and in a triplet (see Appendix A, Table A1).

| Variable | 1. | 2. | 3. | 4. | 5. | 6. | 7. | 8. | 9. |
|---|---|---|---|---|---|---|---|---|---|
| 1. General Performance | -- | | | | | | | | |
| 2. Math | 0.787 | -- | | | | | | | |
| 3. German | 0.775 | 0.439 | -- | | | | | | |
| 4. English | 0.757 | 0.427 | 0.542 | -- | | | | | |
| 5. Sports | 0.442 | 0.183 | 0.156 | 0.108 | -- | | | | |
| 6. General Ability | 0.372 | 0.424 | 0.261 | 0.251 | 0.029 | -- | | | |
| 7. Unfolding | 0.252 | 0.310 | 0.213 | 0.132 | −0.014 | 0.732 | -- | | |
| 8. Analogies | 0.348 | 0.348 | 0.236 | 0.272 | 0.066 | 0.652 | 0.321 | -- | |
| 9. Number Series | 0.300 | 0.349 | 0.185 | 0.212 | 0.033 | 0.865 | 0.397 | 0.392 | -- |
| M | 4.127 | 3.808 | 3.913 | 3.735 | 5.050 | 0.000 | 0.000 | 0.000 | 0.000 |
| SD | 0.666 | 1.153 | 0.937 | 0.940 | 0.718 | 0.950 | 0.913 | 0.887 | 0.933 |
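
With a single predictor, R² equals the squared zero-order correlation, so row 6 of the correlation table can be checked against the general-ability-only rows (G) of the Appendix A table. The sketch below is an illustrative consistency check under that textbook identity, not part of the reported analysis; the variable names are hypothetical and the correlations are copied from the table above.

```python
# Zero-order correlations between General Ability and each criterion,
# copied from row 6 of the correlation table above.
r_general_ability = {
    "General Performance": 0.372,
    "Math": 0.424,
    "German": 0.261,
    "English": 0.251,
    "Sports": 0.029,
}

# With one predictor, R² is simply the squared correlation.
r2_single = {name: round(r ** 2, 3) for name, r in r_general_ability.items()}

for name, value in r2_single.items():
    print(f"{name}: R² = {value:.3f}")
```

The squared values agree, to three decimals, with the R² reported for G alone in Appendix A (0.138, 0.180, 0.068, 0.063, and 0.001).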

| Variable | General Performance | Math (res) | German (res) | English (res) | Sports (res) |
|---|---|---|---|---|---|
| General Ability | -- | 0.19 | 0.00 | 0.02 | −0.16 |
| | | (0.24) | (0.13) | (0.12) | (0.15) |
| Unfolding | −0.06 | 0.02 | 0.07 | −0.09 | −0.10 |
| | (0.12) | (0.08) | (0.06) | (0.07) | (0.07) |
| Analogies | 0.12 | −0.02 | −0.01 | 0.08 | 0.01 |
| | (0.12) | (0.10) | (0.07) | (0.06) | (0.08) |
| Number Series | −0.09 | 0.04 | −0.05 | 0.02 | −0.09 |
| | (0.19) | (0.10) | (0.07) | (0.07) | (0.08) |

| Metric | General Ability | Unfolding | Analogies | Number Series |
|---|---|---|---|---|
| General Performance | | | | |
| r | 0.372 (0.232, 0.497) a, b | 0.252 (0.121, 0.382) a | 0.348 (0.223, 0.472) | 0.300 (0.155, 0.431) b |
| b | −1.745 (−3.303, 0.014) a, b, c | 0.814 (0.113, 1.450) a | 0.732 (0.171, 1.258) b | 1.163 (0.090, 2.083) c |
| B | −2.489 (−4.658, 0.018) a, b, c | 1.115 (0.166, 1.964) a | 0.976 (0.235, 1.684) b | 1.628 (0.131, 2.869) c |
| Raw weight | 0.037 (0.020, 0.068) | 0.029 (0.006, 0.070) | 0.075 (0.025, 0.151) | 0.043 (0.012, 0.094) |
| Scaled weight | 20.109% | 15.761% | 40.761% | 23.370% |
| Math Performance | | | | |
| r | 0.424 (0.302, 0.545) a, b | 0.310 (0.175, 0.436) a | 0.348 (0.222, 0.472) | 0.349 (0.216, 0.480) b |
| b | −3.133 (−5.604, −0.360) a, b, c | 1.521 (0.380, 2.512) a, d | 1.262 (0.344, 2.063) a, e | 2.130 (0.453, 3.656) c, d, e |
| B | −2.580 (−4.690, −0.295) a, b, c | 1.204 (0.311, 2.036) a, d | 0.971 (0.269, 1.587) a, e | 1.722 (0.360, 2.942) c, d, e |
| Raw weight | 0.047 (0.028, 0.082) | 0.046 (0.014, 0.099) | 0.067 (0.022, 0.141) | 0.058 (0.021, 0.119) |
| Scaled weight | 21.560% | 21.101% | 30.734% | 26.606% |
| German Performance | | | | |
| r | 0.261 (0.132, 0.382) a | 0.213 (0.077, 0.350) | 0.236 (0.101, 0.350) | 0.185 (0.052, 0.319) a |
| b | −1.021 (−3.478, 1.376) | 0.566 (−0.478, 1.621) | 0.496 (−0.277, 1.287) | 0.681 (−0.780, 2.094) |
| B | −1.035 (−3.396, 1.478) | 0.551 (−0.487, 1.566) | 0.469 (−0.253, 1.222) | 0.678 (−0.760, 2.074) |
| Raw weight | 0.017 (0.008, 0.042) | 0.022 (0.003, 0.074) | 0.032 (0.005, 0.081) | 0.013 (0.002, 0.047) |
| Scaled weight | 20.238% | 26.190% | 38.095% | 15.476% |
| English Performance | | | | |
| r | 0.251 (0.114, 0.387) a | 0.132 (0.001, 0.273) a | 0.272 (0.135, 0.391) | 0.212 (0.074, 0.360) |
| b | −1.907 (−4.060, 0.475) | 0.817 (−0.160, 1.755) | 0.828 (0.081, 1.565) | 1.268 (−0.093, 2.554) |
| B | −1.927 (−4.169, 0.470) | 0.793 (−0.153, 1.708) | 0.781 (0.069, 1.464) | 1.258 (−0.089, 2.546) |
| Raw weight | 0.019 (0.008, 0.045) | 0.006 (0.001, 0.038) | 0.051 (0.010, 0.115) | 0.024 (0.004, 0.069) |
| Scaled weight | 19.000% | 6.000% | 51.000% | 24.000% |
| Sports Performance | | | | |
| r | 0.029 (−0.102, 0.159) | −0.014 (−0.145, 0.116) | 0.066 (−0.072, 0.198) | 0.033 (−0.105, 0.164) |
| b | −0.921 (−2.675, 0.882) | 0.352 (−0.401, 1.110) | 0.345 (−0.206, 0.912) | 0.571 (−0.496, 1.648) |
| B | −1.217 (−3.620, 1.131) | 0.447 (−0.521, 1.476) | 0.426 (−0.259, 1.106) | 0.742 (−0.657, 2.180) |
| Raw weight | 0.002 (0.001, 0.020) | 0.000 (0.000, 0.020) | 0.006 (0.000, 0.041) | 0.003 (0.000, 0.029) |
| Scaled weight | 18.182% | 0.000% | 54.545% | 27.273% |
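
Two rows of the table above follow arithmetically from other reported values, which the sketch below illustrates for General Performance. It rests on two assumptions that the numbers appear to support but that are not stated in the text shown here: that the raw weights partition the model R², so that scaled weights are percentage shares of their sum, and that B is the standardized counterpart of b, obtained by rescaling with the standard deviations from the correlation table. All variable names are hypothetical.

```python
# Raw relative weights for General Performance, copied from the table above.
raw_weights = {
    "General Ability": 0.037,
    "Unfolding": 0.029,
    "Analogies": 0.075,
    "Number Series": 0.043,
}

# Assumption: raw weights sum to the model R²; scaled weights are
# each predictor's percentage share of that total.
total_r2 = sum(raw_weights.values())
scaled = {name: round(100 * w / total_r2, 3) for name, w in raw_weights.items()}

# Assumption: B = b * SD(predictor) / SD(criterion).
# b comes from the table above; both SDs come from the correlation table.
b_general_ability = -1.745
sd_general_ability = 0.950
sd_general_performance = 0.666
B_general_ability = round(
    b_general_ability * sd_general_ability / sd_general_performance, 3
)

print(round(total_r2, 3), scaled, B_general_ability)
```

Under these assumptions the weights sum to 0.184, the R² reported for G,U,A,N in Appendix A, and the recomputed shares and standardized slope reproduce the reported 20.109%, 15.761%, 40.761%, 23.370%, and −2.489.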

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wee, S. Aligning Predictor-Criterion Bandwidths: Specific Abilities as Predictors of Specific Performance. J. Intell. 2018, 6, 40. https://doi.org/10.3390/jintelligence6030040
