A Framework for Measuring Security as a System Property in Cyberphysical Systems

Abstract

1. Introduction

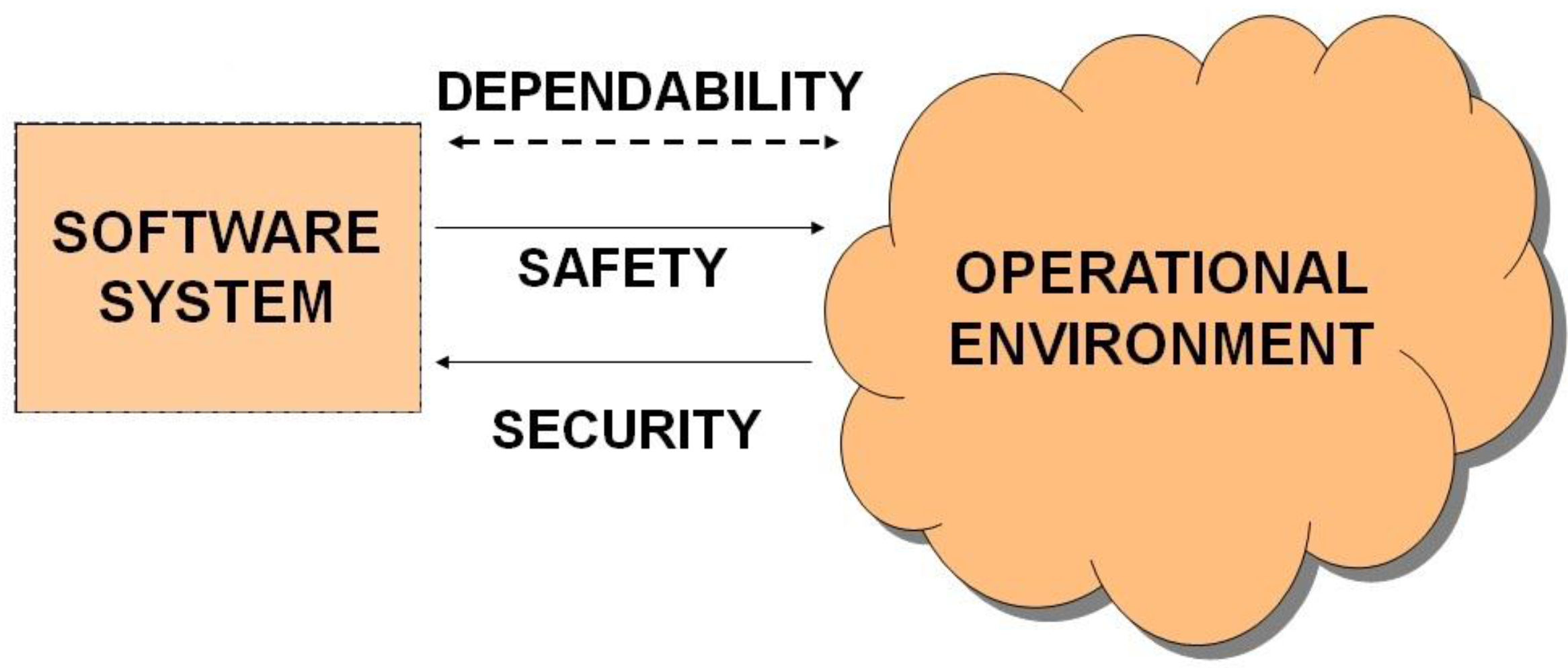

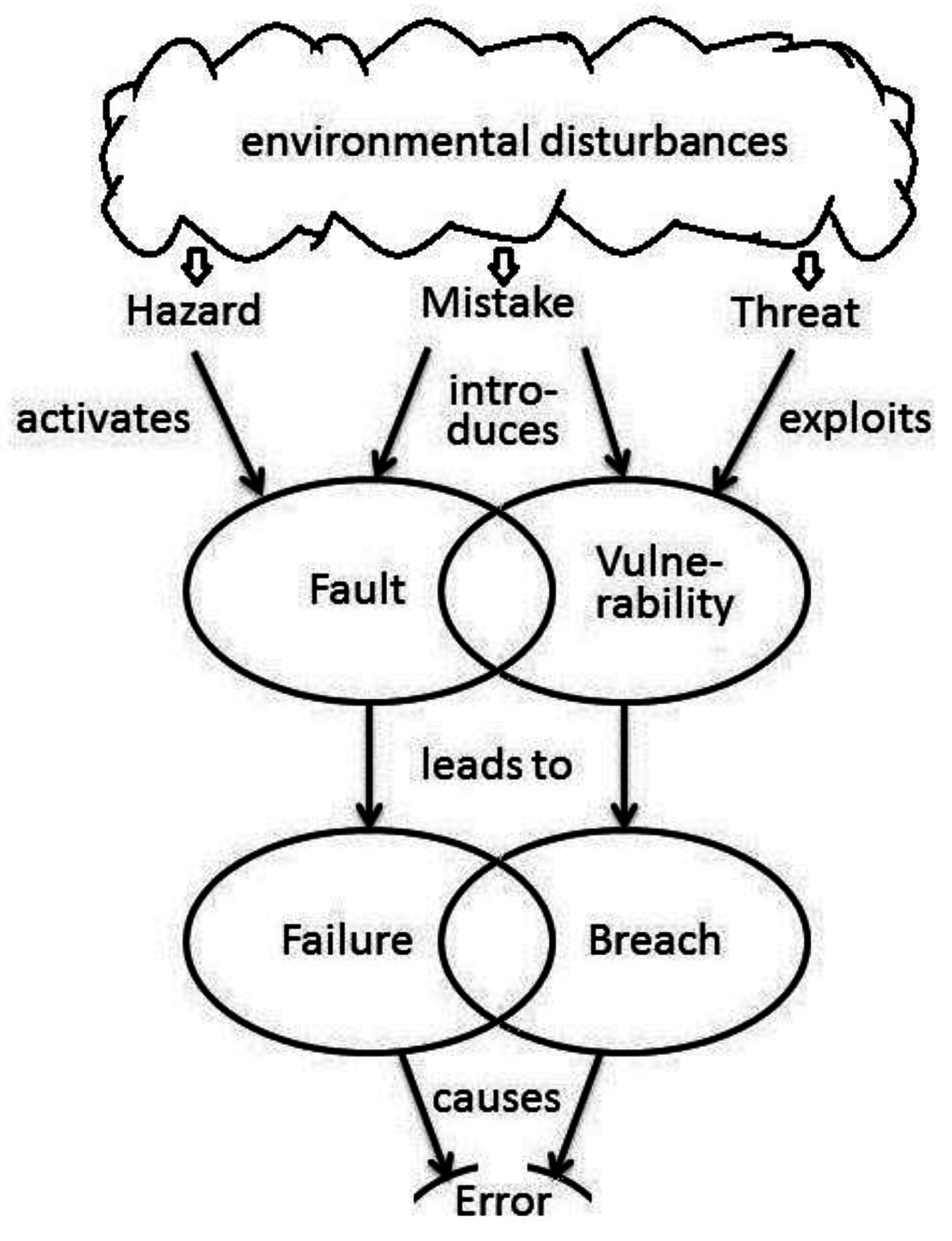

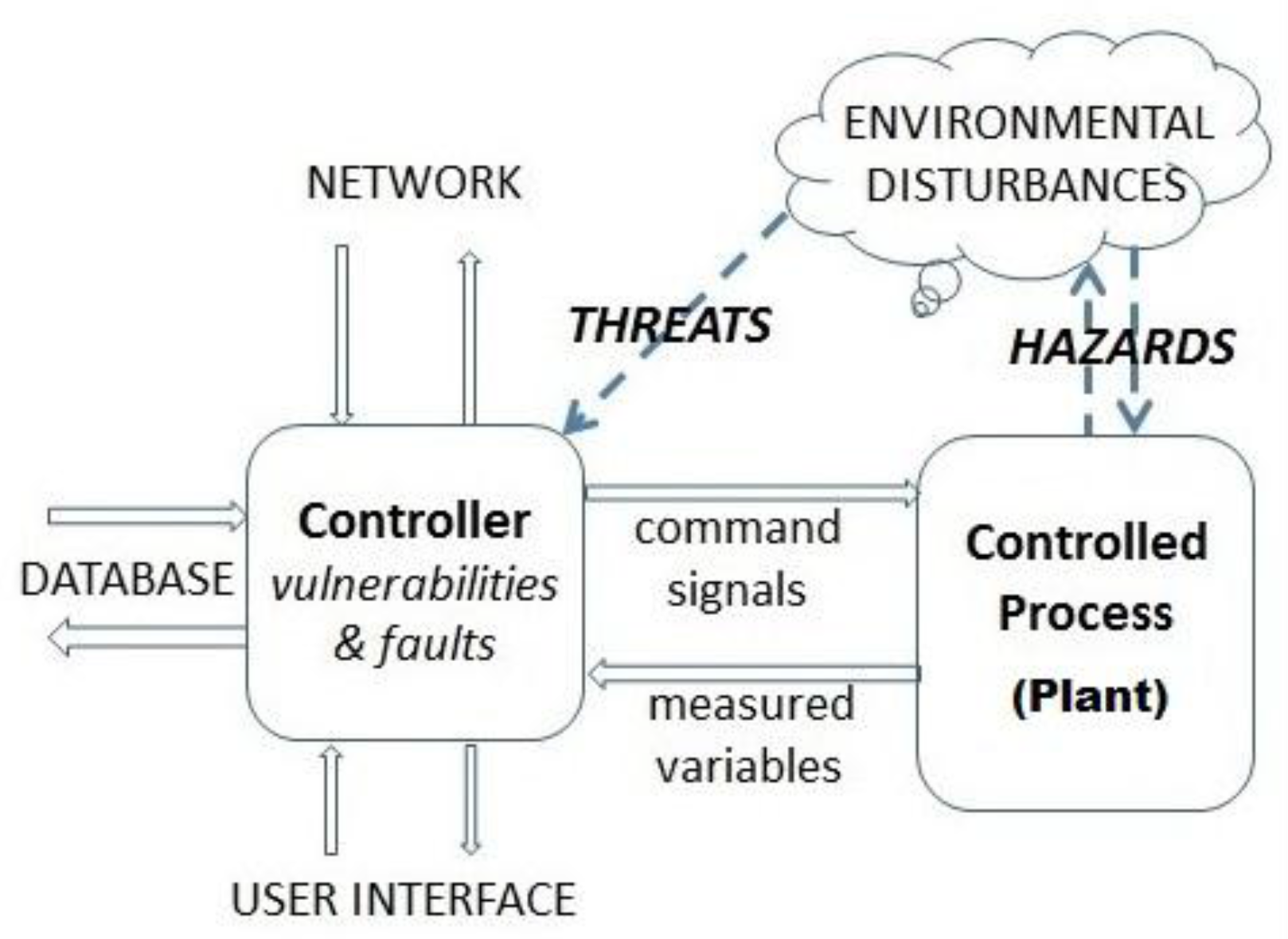

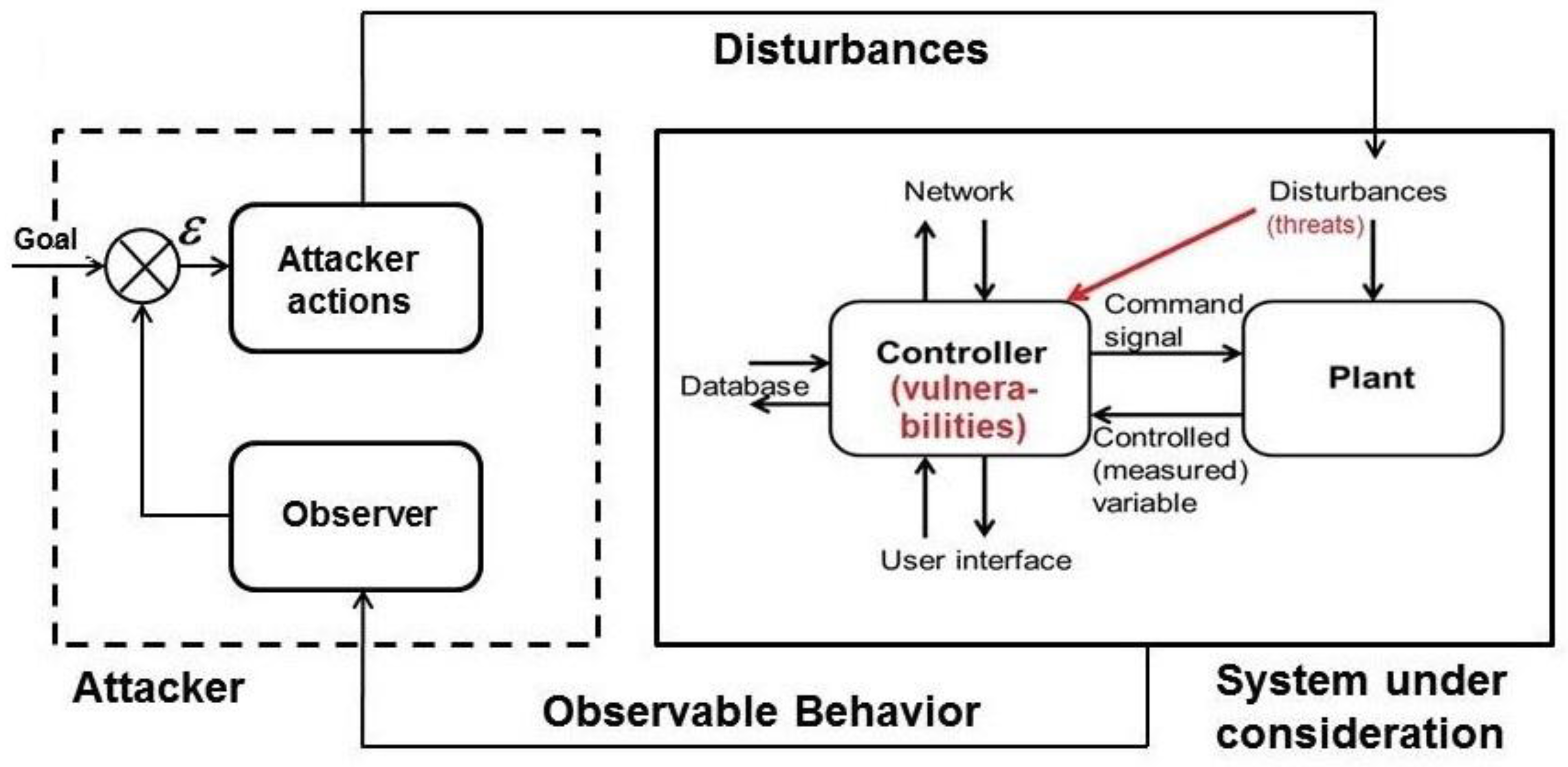

2. Security as a System Property

- Safety is concerned when a failure leads to severe consequences (high risk) for the operational environment;

- Security is concerned when a failure to protect assets (a breach) leads to severe consequences (high risk) for the system itself (and potentially to its operational environment).

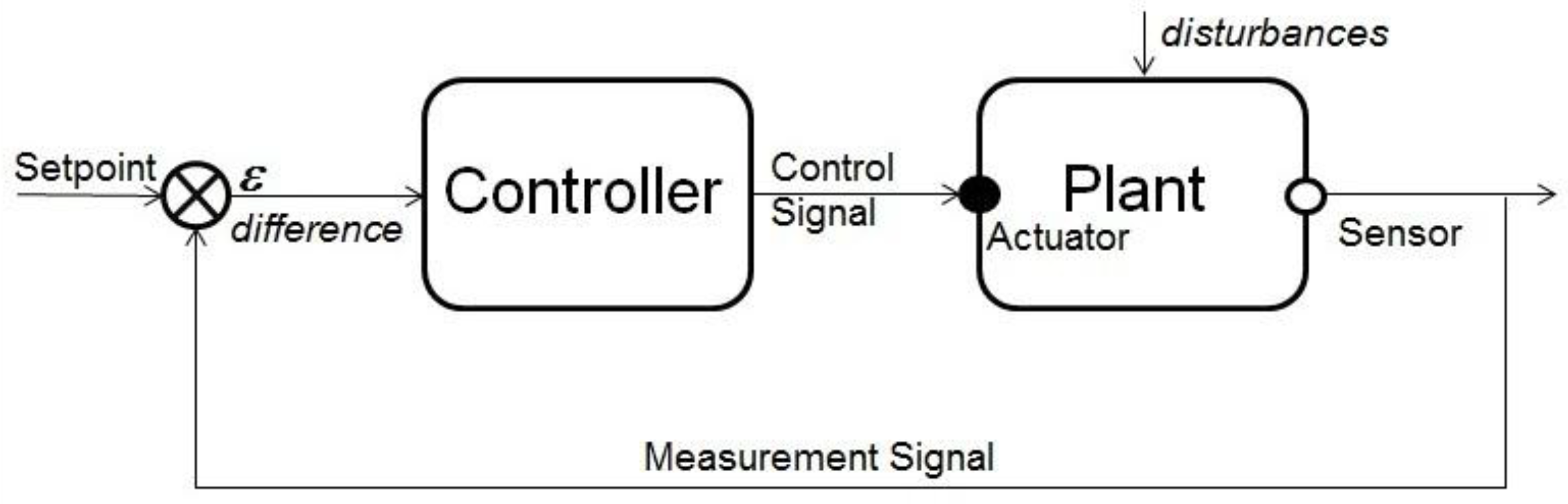

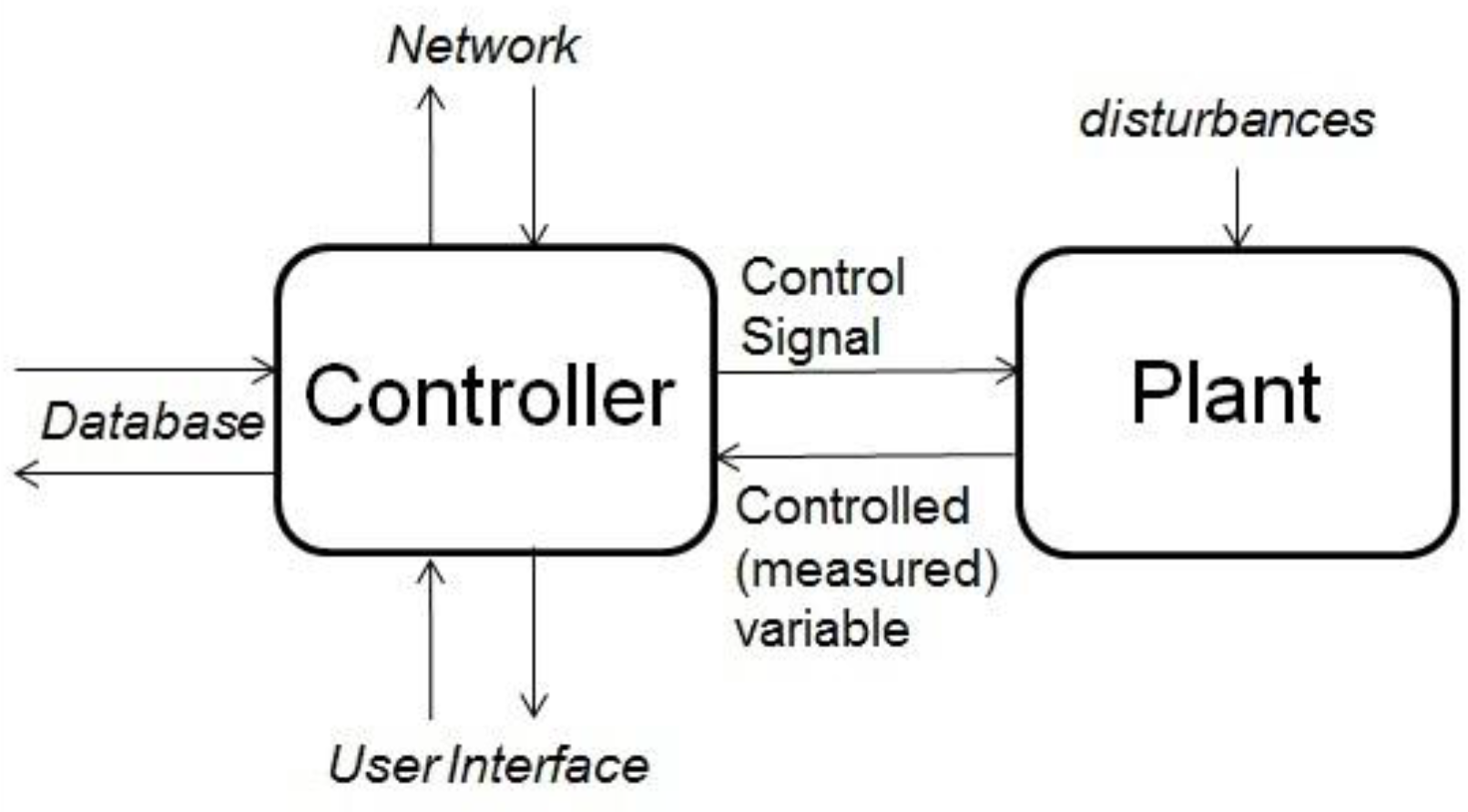

3. Cyberphysical Systems

4. Principles of Measurement

4.1. Hermann von Helmholtz’s Concept of Measurement

- a property (called an attribute by Helmholtz) of an object to be measured;

- a standard, that is, in Helmholtz’s words, the third magnitude, to which others are compared;

- a procedure, whose existence is implied by the two items above, used to make the comparisons between magnitudes.

4.2. Lessons from Measurements in Physics

- The process of designing a validated metric of security may take years, if not decades;

- Any measures of security must be treated as (physical or mental) measurement devices (instruments), to which regular statistical measurement theory applies;

- Security is likely to be measured only indirectly, possibly via its inherent components.

4.3. Defining the Measurement Process

- Clearly identify the property to be measured. It is at this point that building a model of the phenomenon becomes necessary. We use the term “property”, which corresponds to von Helmholtz’s “attribute”, although in measurement theory [37] it is called the measurand.

- Establish a metric to quantitatively characterize the property. Ideally, this would be a unit of measurement, but for vaguely defined properties it can be just a standard against which measurements are applied, or a scale against which the property can be evaluated.

- Develop a measure, which would apply the metric to related objects under investigation. Ideally, this is just a measuring instrument, but for vaguely defined metrics it can be a formula or any other mental device to apply a metric and make comparisons. One important characteristic of a measure should be its linearity, that is, any two identical changes in the property value should be reflected as two identical changes in the measure.

- Design the measurement process to deliver results. An important part of this process is calibration of the measuring device [37], an activity almost never thought of in soft sciences. Another crucial component of this process is the collection and availability of data.

- Make sure that each instance of a measurement delivers a result composed of the value of the measurement and the estimate of its accuracy (an error). Alternatively, and consistently with current views in measurement theory, it could be a range of values designating one value as the “measured quantity value” [37].
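
The last step above, reporting each result as a value together with an estimate of its error, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `MeasurementResult`, the function `measure`, the choice of the standard error of the mean as the error estimate, and the sample readings are all hypothetical and do not come from the case studies.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import mean, stdev
from typing import List, Tuple


@dataclass(frozen=True)
class MeasurementResult:
    """A measured quantity value plus an estimate of its error."""
    value: float
    uncertainty: float  # assumed here: standard error of the mean

    def interval(self, k: float = 2.0) -> Tuple[float, float]:
        """Range of values around the measured value (k is a coverage factor)."""
        return (self.value - k * self.uncertainty,
                self.value + k * self.uncertainty)


def measure(readings: List[float]) -> MeasurementResult:
    """Combine repeated readings of one property into a single result."""
    if len(readings) < 2:
        raise ValueError("at least two readings are needed to estimate an error")
    return MeasurementResult(
        value=mean(readings),
        uncertainty=stdev(readings) / sqrt(len(readings)),
    )


# Hypothetical repeated readings of some security-related measure.
result = measure([98.0, 99.0, 100.0])
low, high = result.interval()
print(f"{result.value:.3f} +/- {result.uncertainty:.3f} (range {low:.3f} to {high:.3f})")
```

Returning the pair rather than a bare number keeps the error estimate attached to the value, and the `interval` method corresponds to the alternative view of a result as a range of values around the measured quantity value.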

4.4. Guidelines for Applying the Process

5. Case Studies

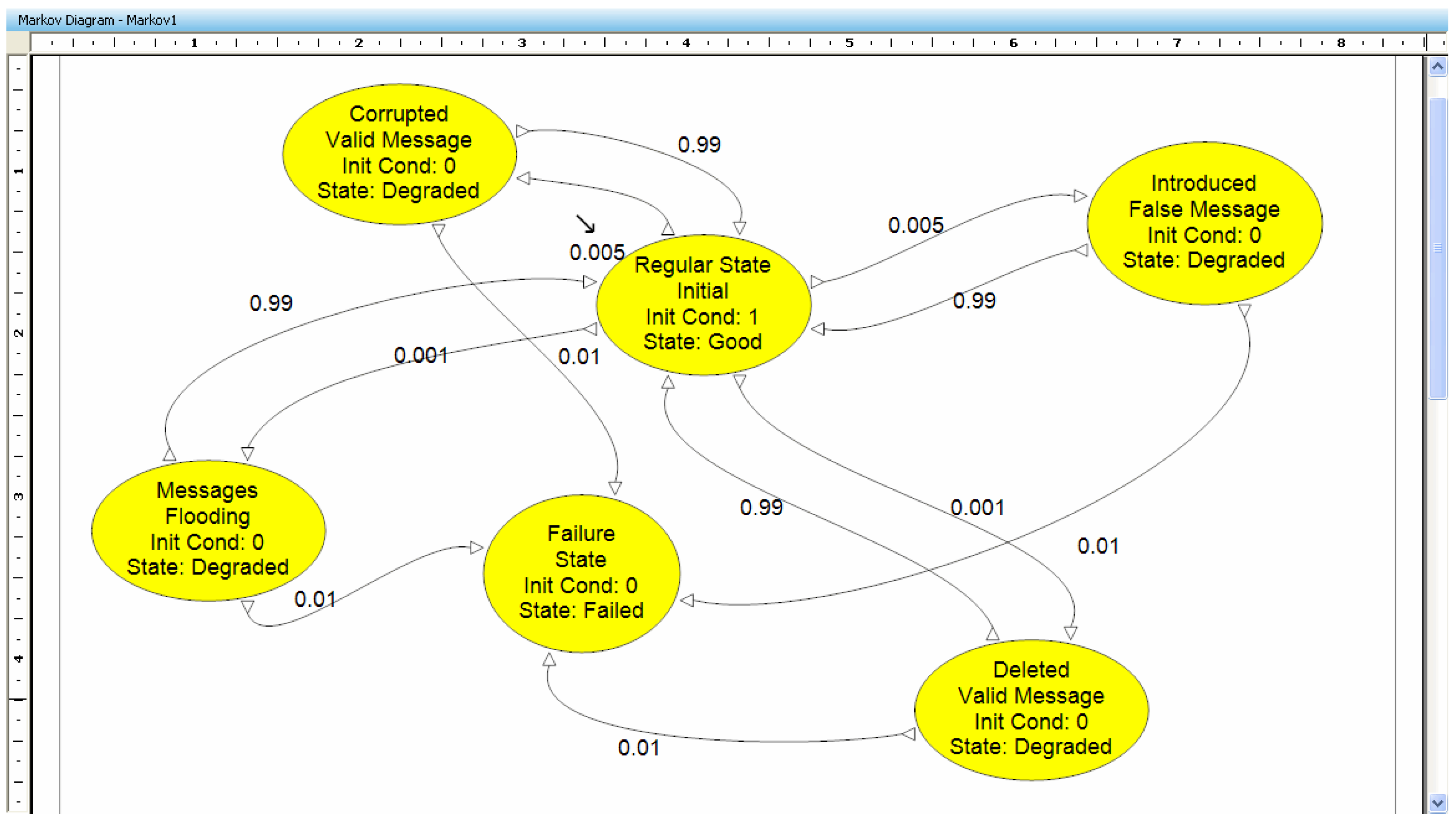

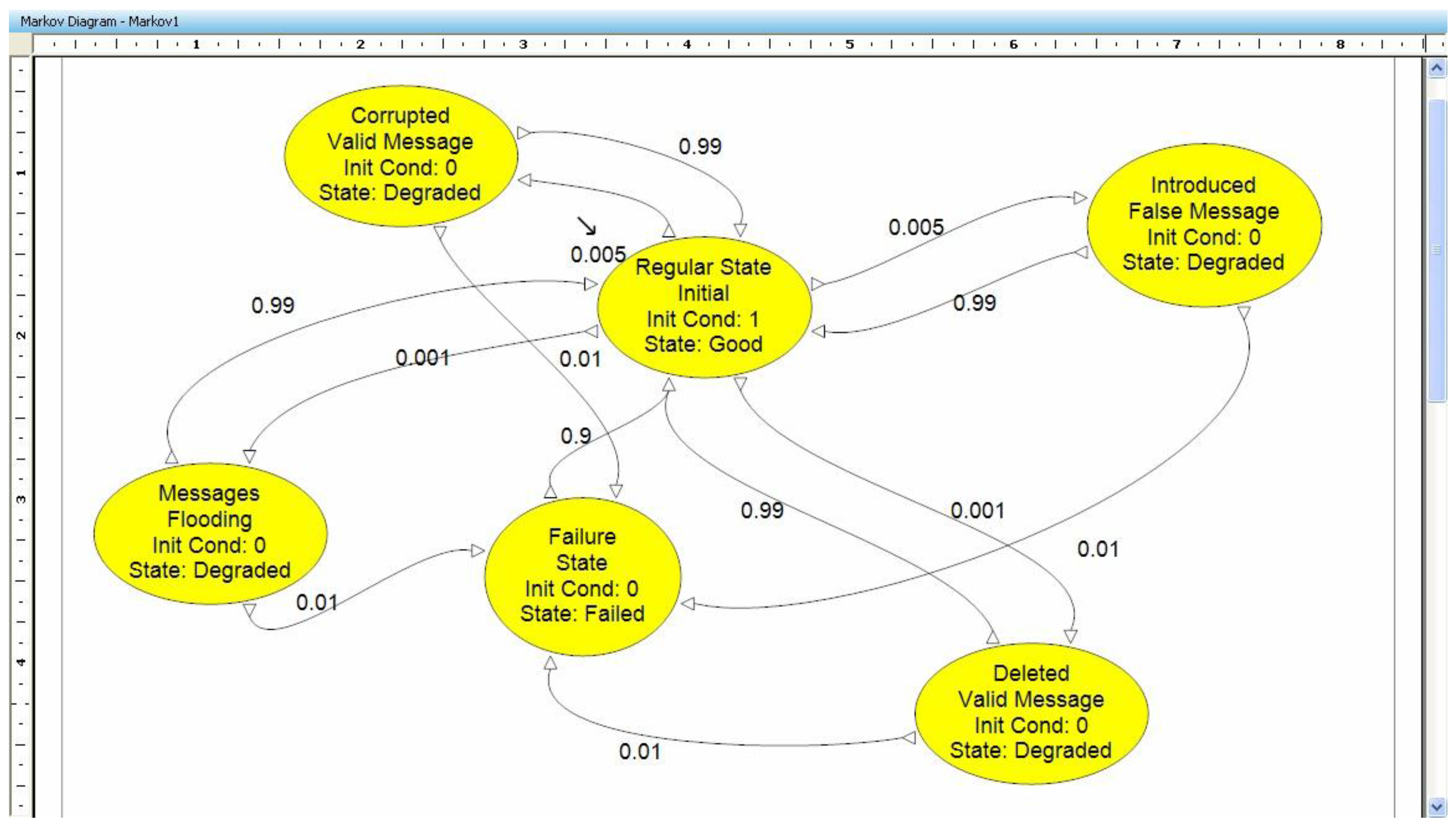

5.1. Case Study #1: Using Markov Chains

5.1.1. Methodology: Markov Model

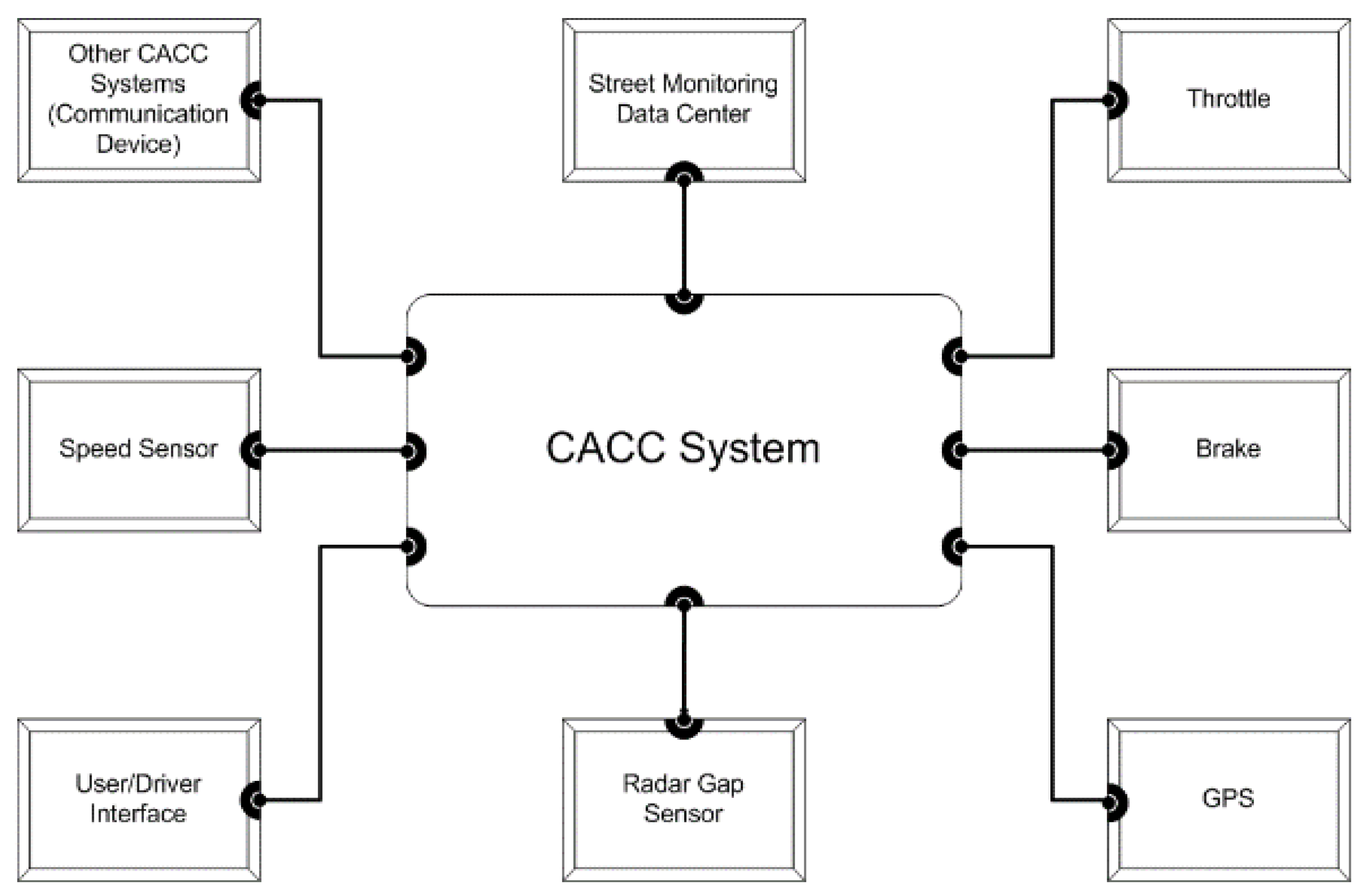

5.1.2. Cooperative Adaptive Cruise Control

- Message Introduction: an untrue SMDC or Other CACC message is injected.

- Message Deletion: an SMDC or Other CACC message is not received by the CACC.

- Message Corruption: the contents of an SMDC or Other CACC message are altered before being received by the CACC.

- Message Flooding: SMDC or Other CACC messages are received so frequently that the CACC is overloaded and cannot perform its required tasks within their deadlines.

- It is assumed that only one security breach can occur at any given time.

- Only one message channel is modeled. To model both the SMDC and the Other CACC channels simultaneously, more states and associated transitions would be required.

- It is assumed that an undetected or unsuccessfully handled security breach leads to a system failure even though the system may not physically fail.
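
The four breach types and the three assumptions above can be sketched as a small discrete-time Markov chain. This is a minimal illustration, not the model or tool used in the case study: the state names, the discrete time step, and the helper functions `build_matrix` and `long_run` are assumptions, and the numbers are the Case 1 values from the table of assigned probabilities, combined with one of the listed mitigation and repair settings.

```python
# Hypothetical state names: one regular state, one state per breach type, one failure state.
STATES = ["Regular", "Corrupted", "Introduced", "Deleted", "Flooded", "Fail"]


def build_matrix(attack, p_mitigate, p_repair):
    """Row-stochastic transition matrix for a single message channel.

    attack     -- per-step probabilities of the four breach types
    p_mitigate -- probability that a breach is handled (return to Regular)
    p_repair   -- probability of returning from Fail to Regular
    """
    n = len(STATES)
    fail = n - 1
    P = [[0.0] * n for _ in range(n)]
    P[0][0] = 1.0 - sum(attack)          # no breach in this step
    for i, p in enumerate(attack, start=1):
        P[0][i] = p                      # only one breach at a time
        P[i][0] = p_mitigate             # mitigation works
        P[i][fail] = 1.0 - p_mitigate    # unhandled breach counts as failure
    P[fail][0] = p_repair
    P[fail][fail] = 1.0 - p_repair
    return P


def long_run(P, steps=5000):
    """State distribution after `steps` transitions, starting in Regular."""
    n = len(P)
    dist = [1.0] + [0.0] * (n - 1)
    for _ in range(steps):
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist


# Case 1 attack probabilities: corrupted, introduced, deleted, flooding.
P = build_matrix([0.005, 0.005, 0.001, 0.001], p_mitigate=0.99, p_repair=0.5)
dist = long_run(P)
print(dict(zip(STATES, (round(p, 6) for p in dist))))
```

With a repair probability of 0 the failure state becomes absorbing, so the long-run share of the regular state tends to zero and a finite observation horizon would have to be chosen instead. The figures produced by this sketch are not expected to match the availability values reported in the results table, since the time base and solver used there are not specified here.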

5.1.3. Experimental Results

5.1.4. Summary of the Measurement Process

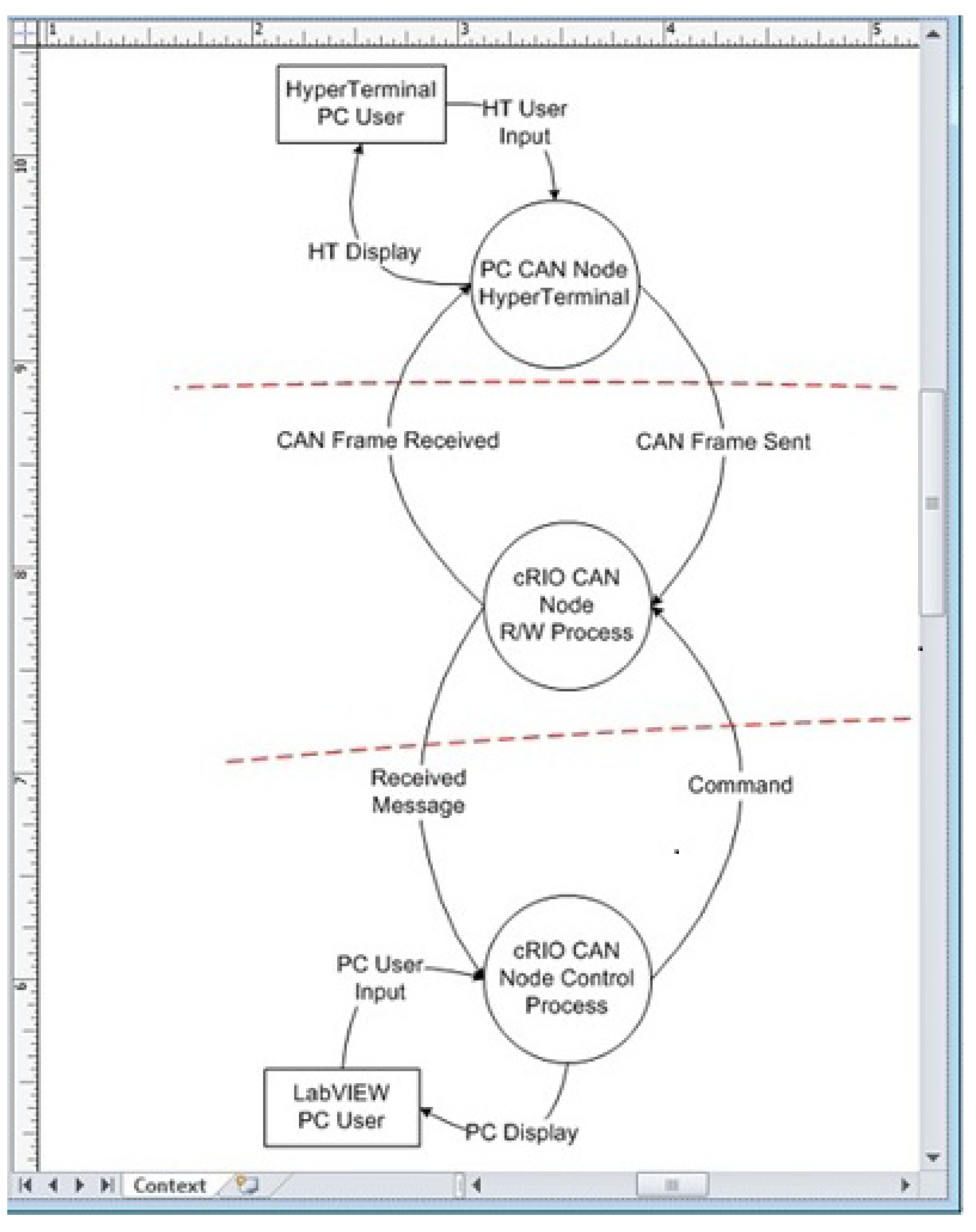

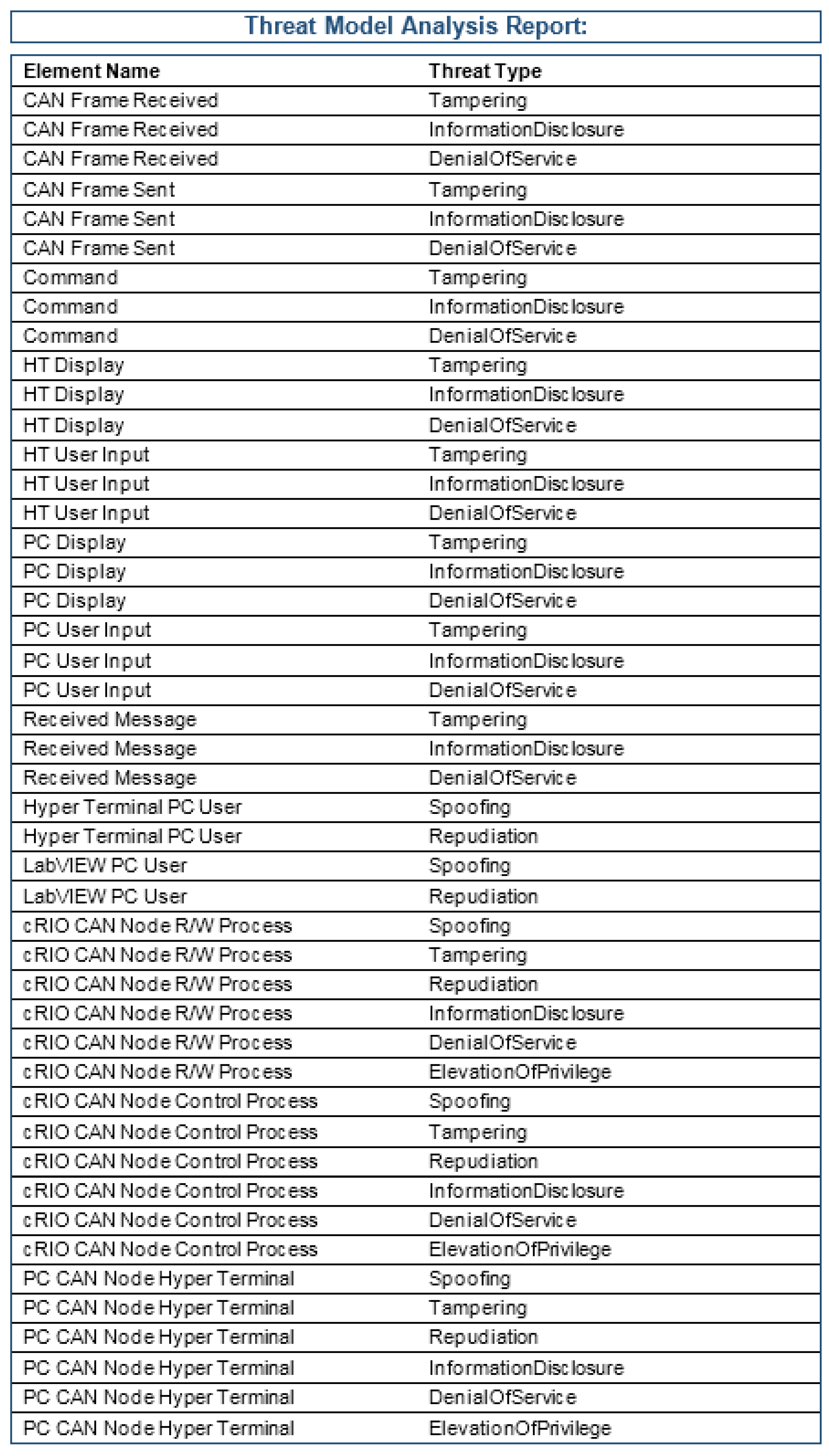

5.2. Case Study #2: Threat Modeling

5.2.1. Outline of Threat Modeling

5.2.2. Inter Vehicle Communication (IVC)

- for car-to-car traffic: either disseminate false messages (by generating a new message, replaying an existing message, or modifying a message), or disturb the system;

- for car-to-infrastructure applications: either disseminate false messages (in seven different ways), or disturb the system by paralyzing onboard or roadside units, or by affecting the communication channel.

- communication denial

- modified communication data

- intercepted communication data

- falsified communication.

5.2.3. Experimental Results

5.2.4. Summary of the Measurement Process

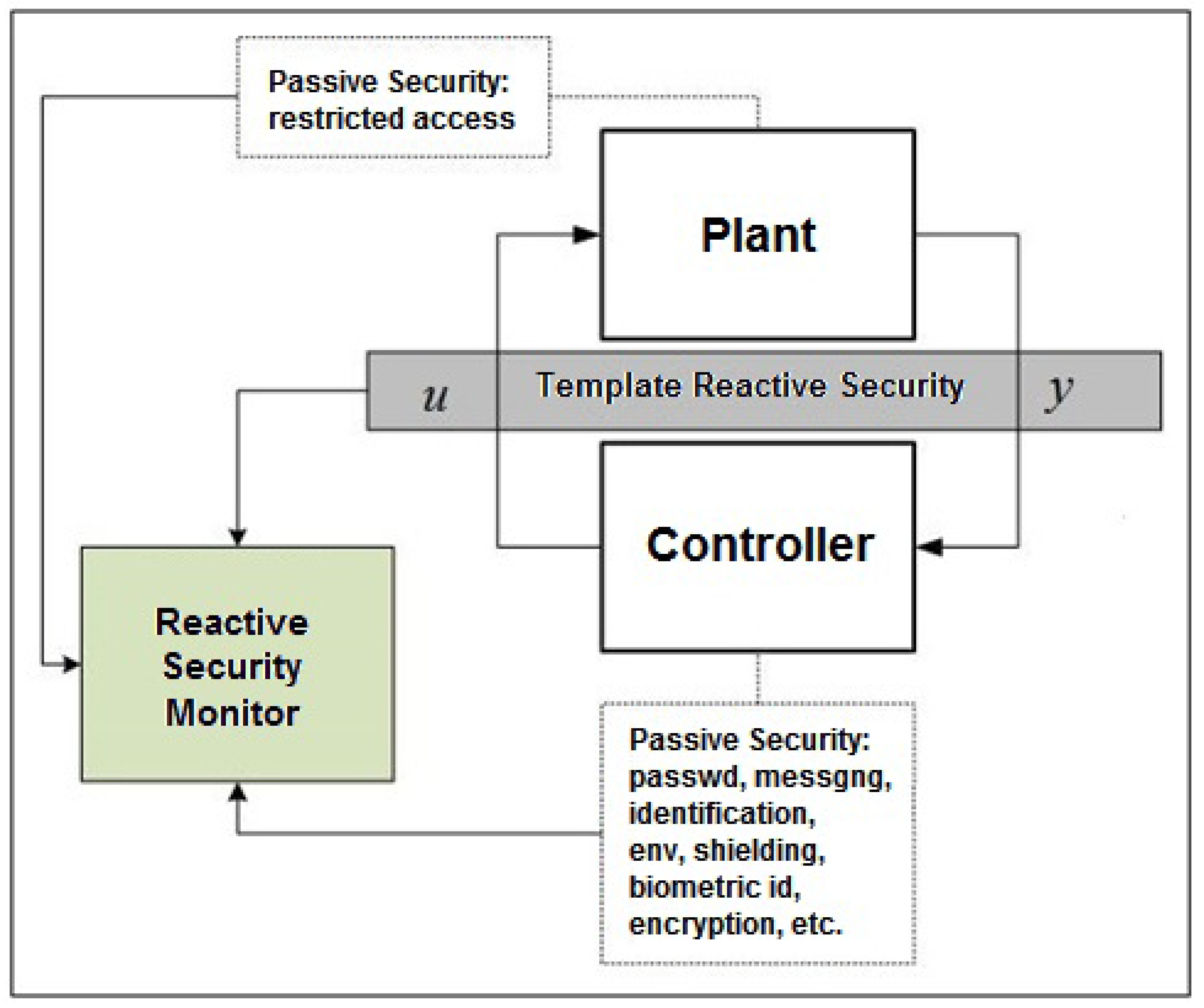

5.3. Case Study #3: Fault Injection in Time-Triggered Systems

5.3.1. Fault Injection Method [52]

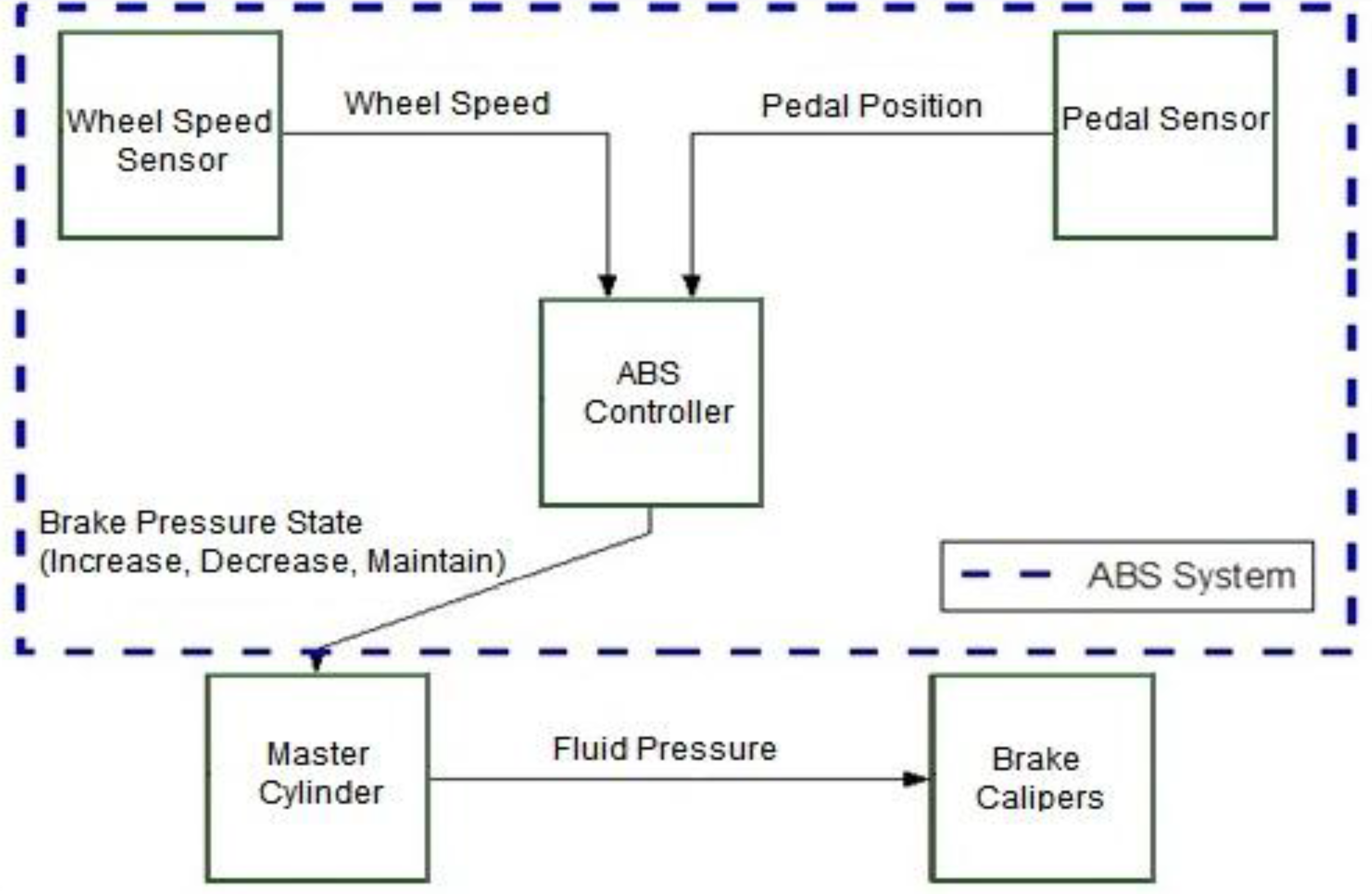

5.3.2. Brake-by-Wire System [51]

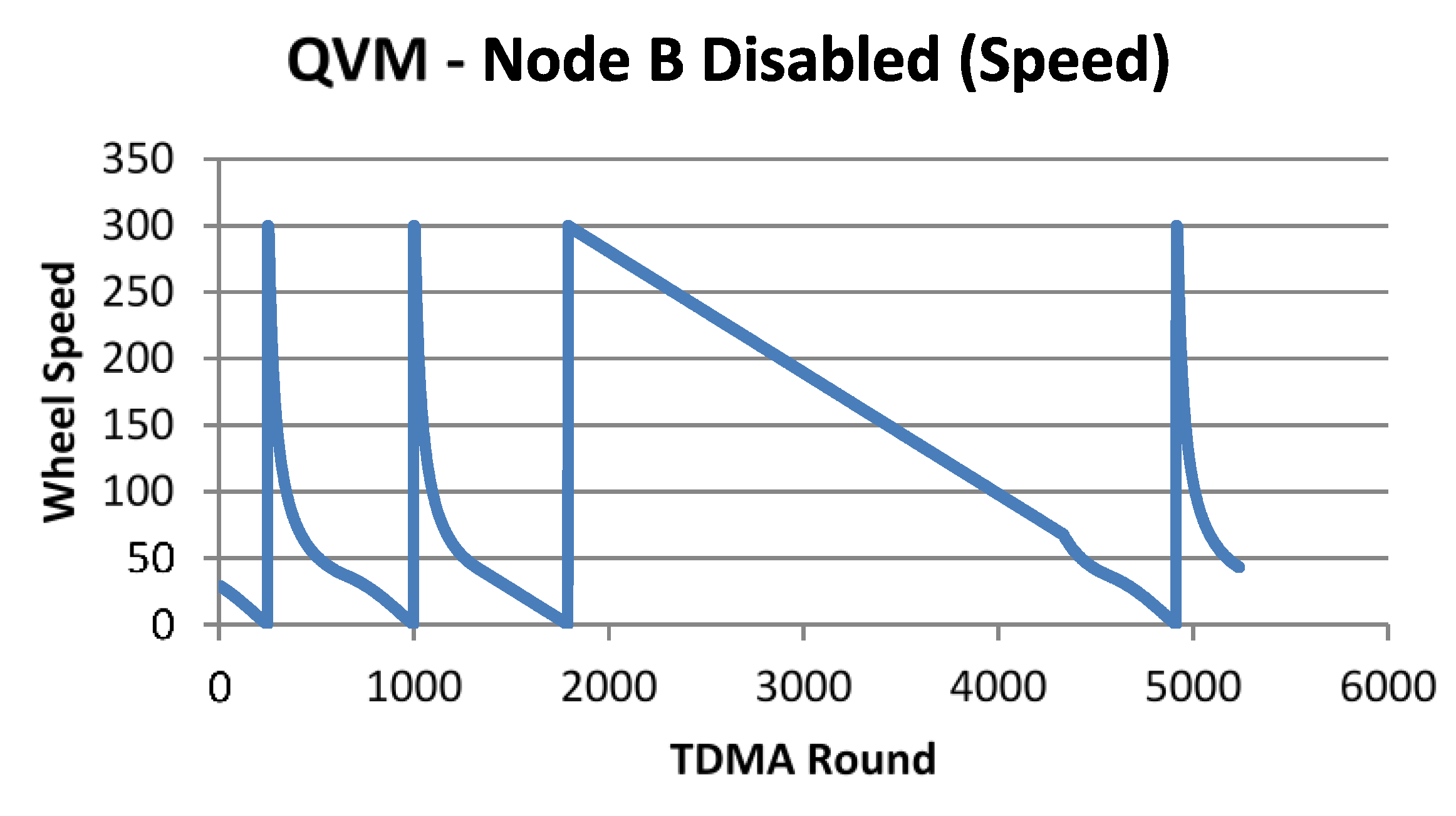

5.3.3. Simulation and Quantitative Analysis

5.3.4. Summary of the Measurement Process

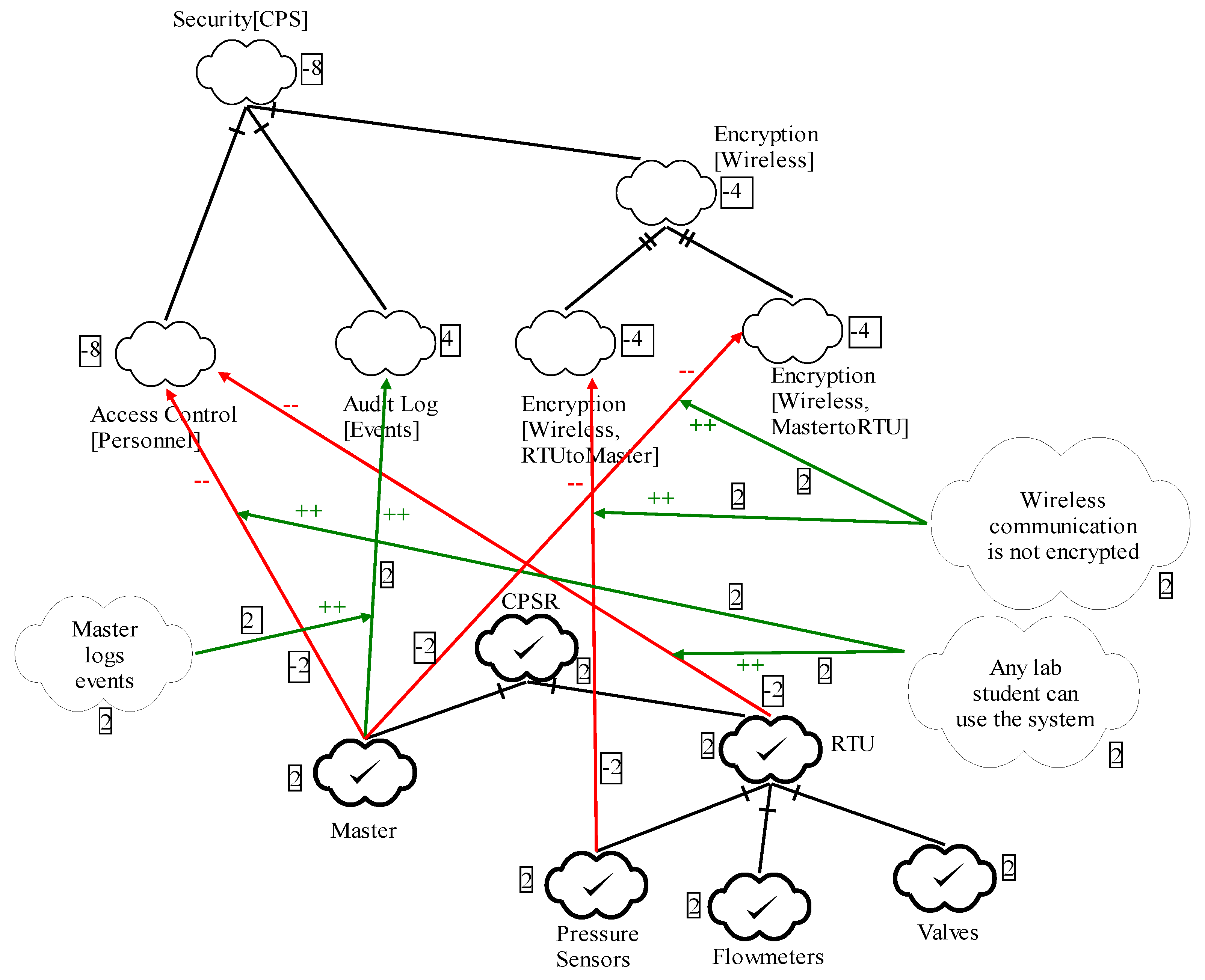

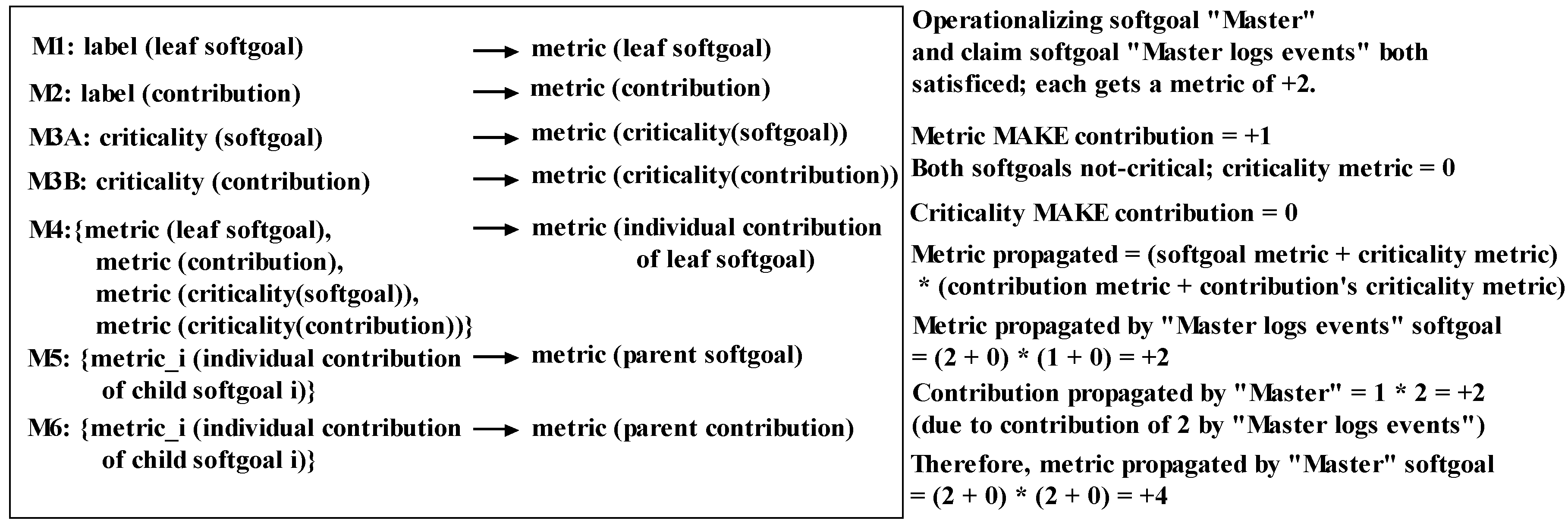

5.4. Case Study #4: Non-Functional Requirements

5.4.1. Outline of the NFR Approach [59]

- Decompose NFR security.

- Decompose the architecture of the system into its constituent operationalizing softgoals.

- Determine the contributions made by the operationalizing softgoals to the NFR softgoals.

- Evaluate the overall security by applying the propagation rules and observing the labels propagated to the softgoals.
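
The four steps above can be illustrated with a toy softgoal graph. The sketch below is a deliberate simplification and should not be read as the propagation rules of [59]: the numeric weights attached to the MAKE, HELP, HURT and BREAK contribution types, the averaging rule, the function names, and the example softgoals are all assumptions made for illustration.

```python
# Assumed numeric weights for the usual NFR contribution types.
WEIGHTS = {"MAKE": 1.0, "HELP": 0.5, "HURT": -0.5, "BREAK": -1.0}


def score(goal, leaf_scores, graph):
    """Propagate leaf scores upward; a goal's score is the mean weighted child score."""
    if goal in leaf_scores:
        return leaf_scores[goal]
    children = graph[goal]
    total = sum(WEIGHTS[kind] * score(child, leaf_scores, graph)
                for child, kind in children)
    return max(-1.0, min(1.0, total / len(children)))


def label(s):
    """Map a numeric score back to a qualitative label."""
    if s > 0:
        return "satisficed"
    if s < 0:
        return "denied"
    return "undetermined"


# Hypothetical decomposition: NFR softgoals on the left, contributors on the right.
graph = {
    "Security": [("Confidentiality", "MAKE"), ("Integrity", "MAKE")],
    "Confidentiality": [("Encrypted link", "MAKE"),
                        ("Remote maintenance port", "HURT")],
    "Integrity": [("Message authentication", "HELP")],
}
# Operationalizing softgoals present in the (hypothetical) architecture.
leaves = {"Encrypted link": 1.0,
          "Remote maintenance port": 1.0,
          "Message authentication": 1.0}

s = score("Security", leaves, graph)
print(s, label(s))
```

Keeping the graph as plain data makes it easy to compare two candidate architectures by swapping the set of operationalizing softgoals and re-running the propagation.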

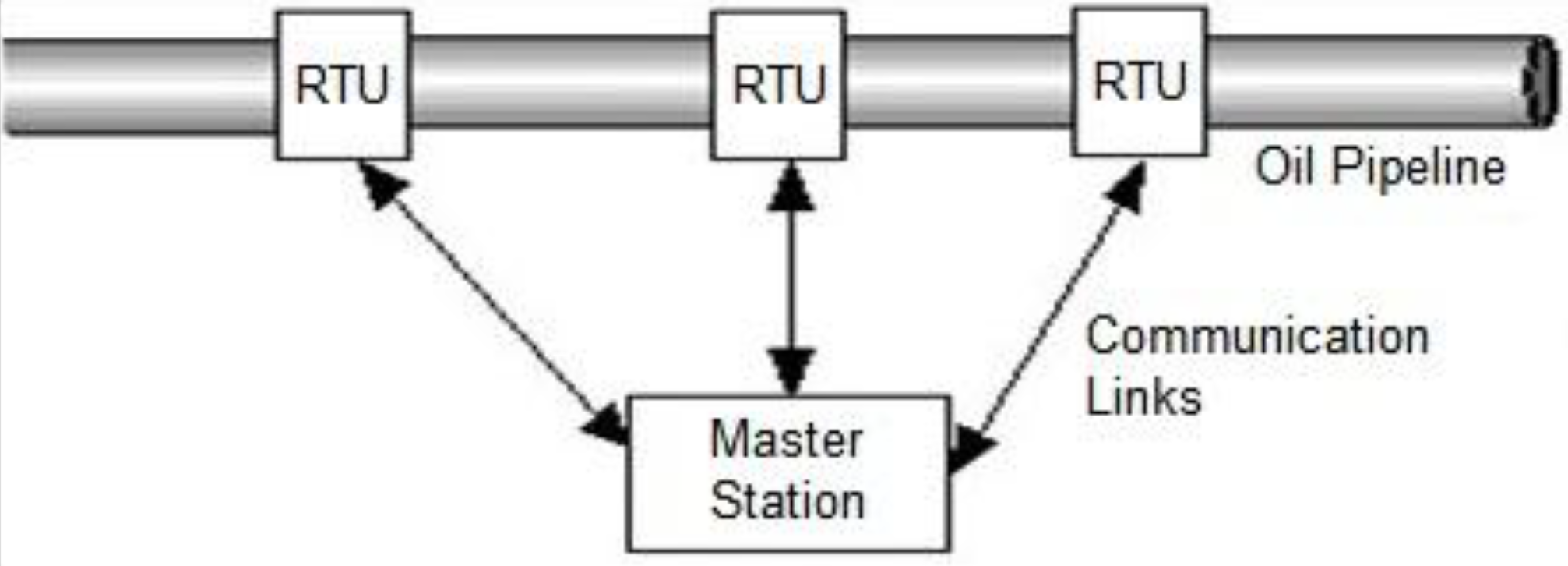

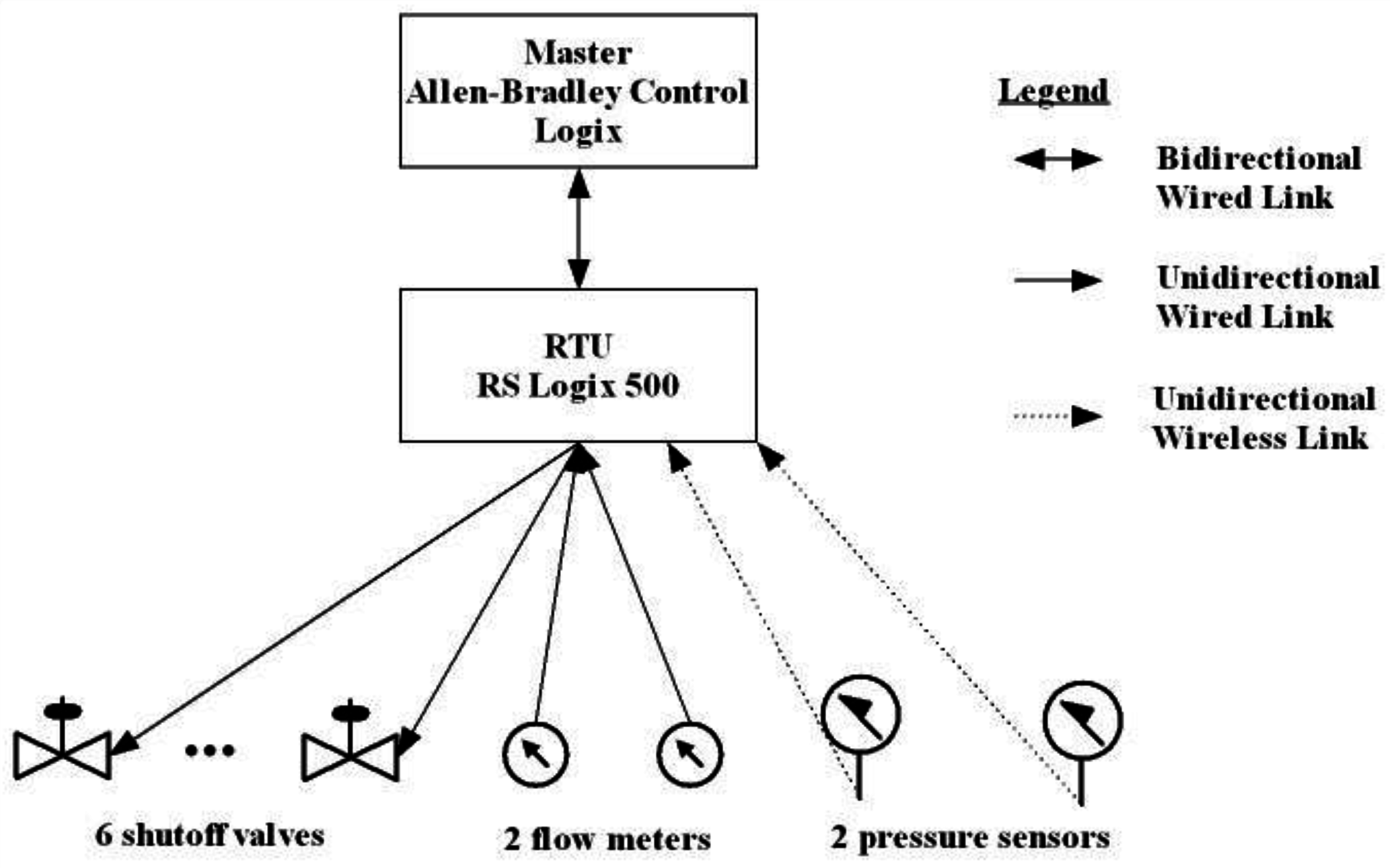

5.4.2. SCADA Installation

5.4.3. Applying the NFR Approach

5.4.4. Summary of the Measurement Process

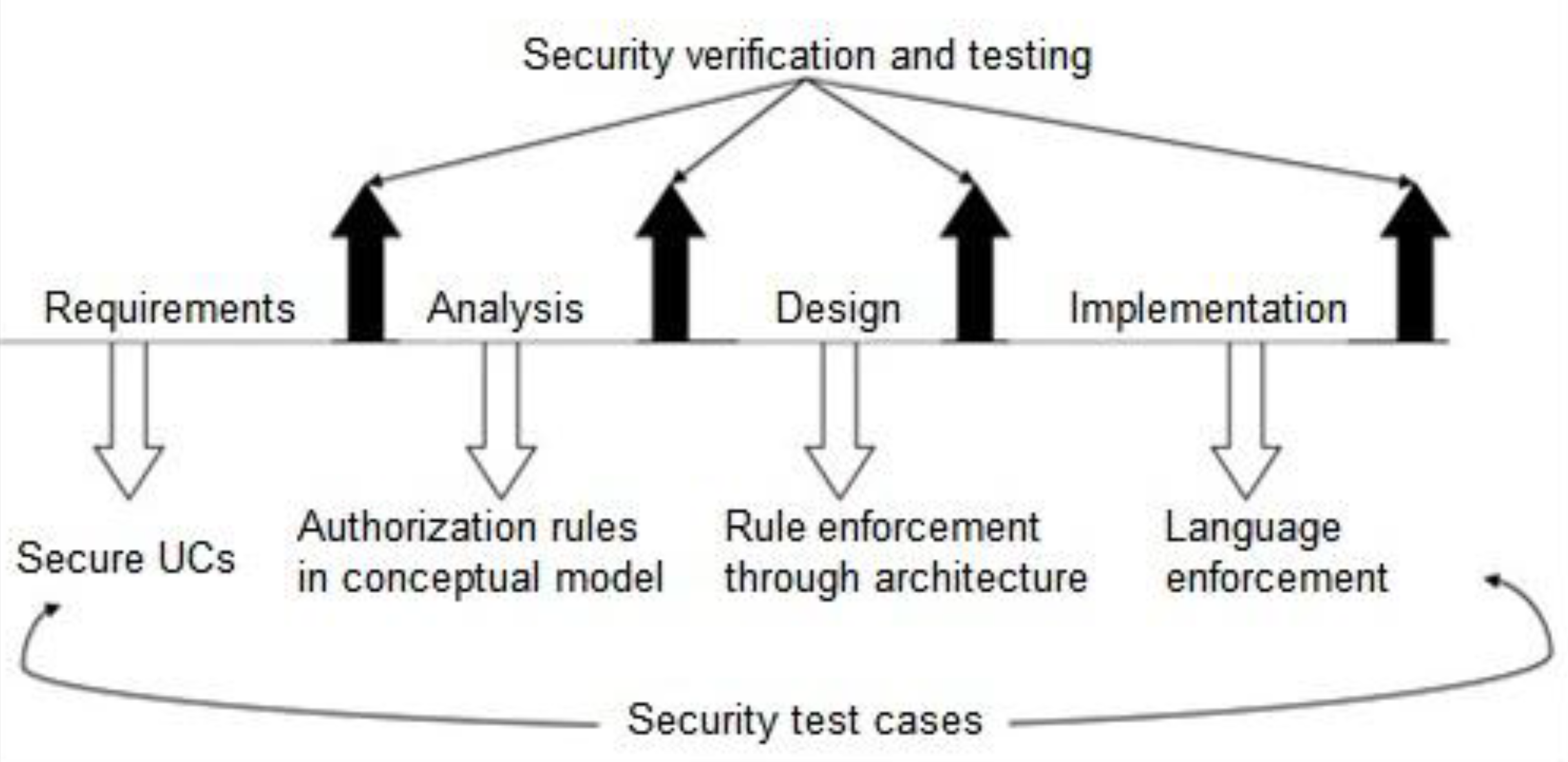

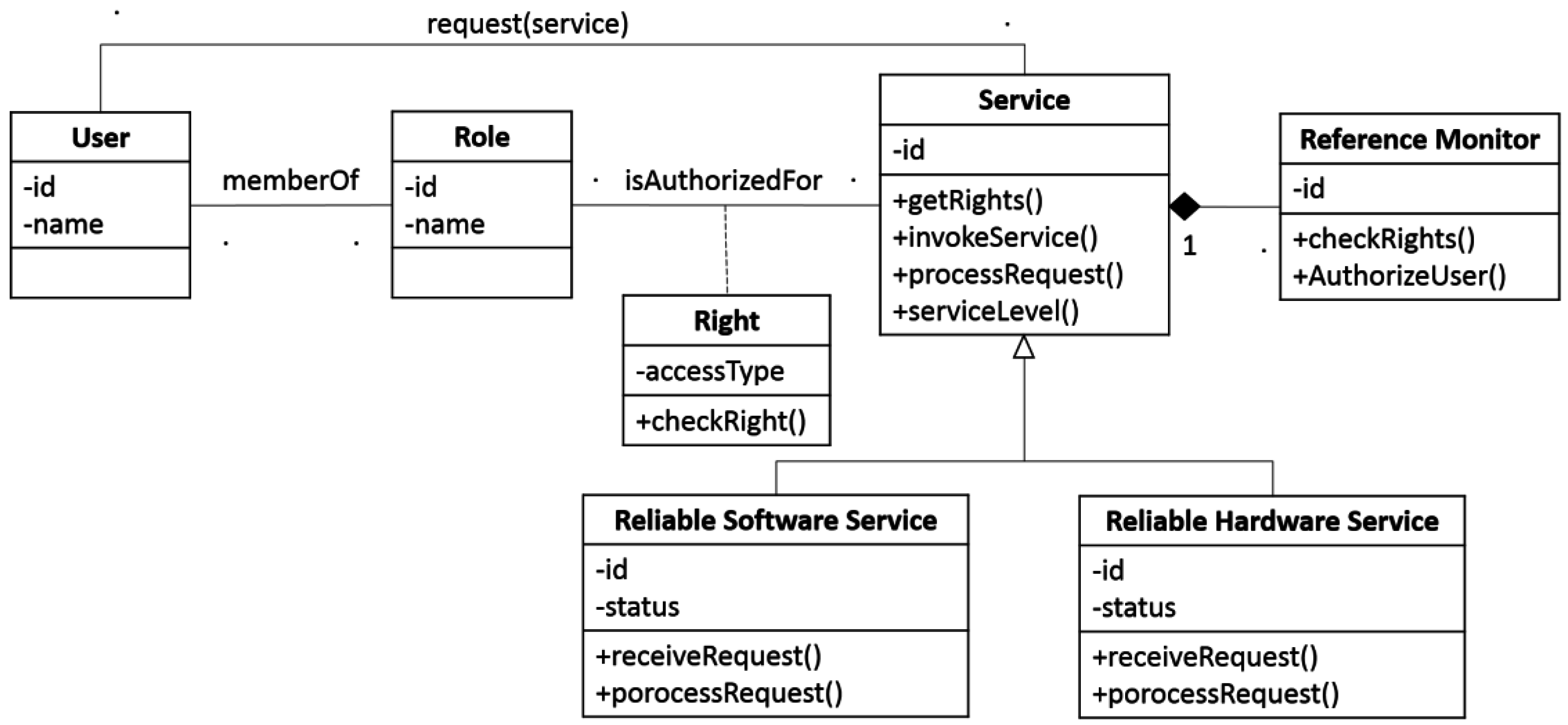

5.5. Case Study #5: Security Patterns

5.5.1. Overview of Security Patterns

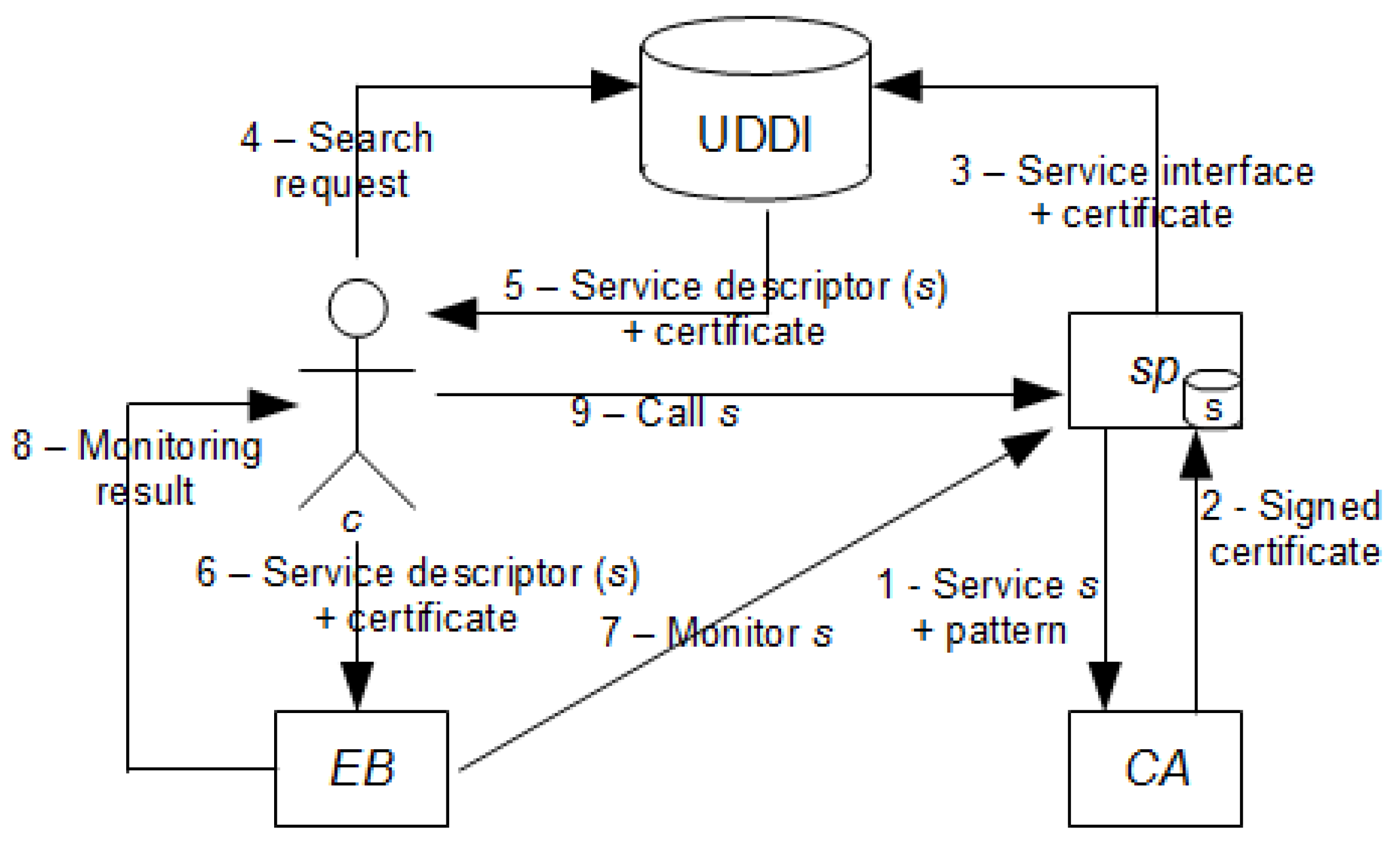

5.5.2. Example of Web Services

5.5.3. Security and Reliability Certificates Checking

- Client (c)—the entity that needs to select or integrate a remote service based on its reliability and security requirements. Here, the Client is a user who would be authorized by the reference monitor defense depicted in Figure 20.

- Service provider (sp)—the entity implementing remote services accessed by c.

- Certification Authority (CA)—an entity trusted by one or more users to assign certificates.

- Evaluation Body (EB)—an independent, trusted component carrying out monitoring activities. EB is trusted by both c and sp to correctly check the certificate validity on the basis of the monitoring rules and metrics.

- Service Discovery (UDDI)—a registry of services enhanced with the support for security and reliability certificates. Universal Description, Discovery, and Integration (UDDI) is an XML-based standard for describing, publishing, and finding web services. It is a specification for a distributed registry of web services that is a platform-independent, open framework.

- Complexity: The feature which refers to the number of security defenses that the pattern provides. For example, one pattern may only provide monitoring while another may conduct monitoring, authentication and authorization of users.

- Usability: The feature which refers to the ease with which the user can apply the pattern to solve a problem.

- Ease of Implementation: This feature ties back to the template used to describe a security pattern. Some patterns do not provide enough relevant information to be useful to the user. Here, ease of implementation is measured by how comprehensive the security pattern description is.

- Efficiency: This feature is measured by the degree of side effects and consequences that are introduced by implementing the security pattern.

5.5.4. Outline of the Measurement Process

6. Conclusions

- Markov chains are an effective tool for modeling degradation of services due to security threats, and allow for an efficient assessment of security in cases when probabilities of state transitions can be reasonably determined;

- threat modeling is useful in investigating system behavior at the boundaries forming an attack surface and, given tool support, yields an analysis of the possibilities that can negatively affect security, thus facilitating the use of respective methods for quantitative evaluation of risks;

- fault injection allows introducing deliberate disturbances to a cyberphysical system by distorting messages or message flow in the system; experiments with a time-triggered protocol revealed that it is possible to quantify the extent of damage caused by security lapses;

- the non-functional requirements approach adds to the spectrum of quantitative evaluation methods the ability to evaluate designs; this capability is crucial in the development of cyberphysical systems, since it enables Design for Security, rather than security assessment as an afterthought;

- security patterns by their very nature allow developing preventive security measures and their quantitative assessment; this makes them a very important tool for software designers, who may not be experts in security.

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Herrmann, D.S. Complete Guide to Security and Privacy Metrics: Measuring Regulatory Compliance, Operational Resilience and ROI; Auerbach Publications: London, UK, 2011.

2. Brotby, W.K.; Hinson, G. Pragmatic Security Metrics: Applying Metametrics to Information Security; CRC Press: Boca Raton, FL, USA, 2013.

3. Atzeni, A.; Lioy, A. Why to Adopt a Security Metric? A Brief Survey. In Quality of Protection: Security Measurements and Metrics; Gollmann, D., Massacci, F., Yautsiukhin, A., Eds.; Springer: New York, NY, USA, 2006; pp. 1–12.

4. Bayuk, J.; Mostashari, A. Measuring Systems Security. Syst. Eng. 2013, 16, 1–14.

5. Performance Measurement Guide for Information Security; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2008.

6. Jansen, W. Directions in Security Metrics Research; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2009.

7. Barabanov, R.; Kowalski, S.; Yngström, L. Information Security Metrics: State of the Art; Swedish Civil Contingencies Agency: Stockholm, Sweden, 2011.

8. A Community Website for Security Practitioners. Available online: http://www.securitymetrics.org (accessed on 7 June 2016).

9. Hinson, G. Seven Myths about Security Metric. Available online: http://www.noticebored.com/html/metrics.html (accessed on 7 June 2016).

10. Laird, L.M.; Brennan, M.C. Software Measurement and Estimation: A Practical Approach; John Wiley & Sons: Hoboken, NJ, USA, 2006.

11. Department of Defense Trusted Computer Systems Evaluation Criteria; DoD 5200.28-STD; Department of Defense: Washington, DC, USA, 1985.

12. Common Criteria for Information Technology Security Evaluation, Parts 1–3. Documents No. CCMB-2012-09-001, 002 and 003. Available online: http://www.commoncriteriaportal.org/cc/ (accessed on 7 June 2016).

13. ISO/IEC. ISO/IEC 15408 Information Technology—Security Techniques—Evaluation Criteria for IT Security—Part 1: Introduction and General Models; ISO/IEC: Geneva, Switzerland, 2009.

14. Bartol, N.; Bates, B.; Goertzel, K.M.; Winograd, T. Measuring Cyber Security and Information Assurance; Information Assurance Technology Analysis Center (IATAC): Herndon, VA, USA, 2009.

15. Software Security Assessment Tools Review; Booz Allen Hamilton: McLean, VA, USA, 2009.

16. Chapin, D.A.; Akridge, S. How Can Security Be Measured? Inf. Syst. Control J. 2005, 2, 43–47.

17. Verendel, V. Quantified Security Is a Weak Hypothesis. In Proceedings of the NSPW’09, New Security Paradigms Workshop, Oxford, UK, 8–11 September 2009; ACM: New York, NY, USA, 2009; pp. 37–50.

18. Littlewood, B.; Brocklehurst, S.; Fenton, N.; Mellor, P.; Page, S.; Wright, D.; Dobson, J.; McDermid, J.; Gollmann, D. Towards Operational Measures of Computer Security. J. Comput. Secur. 1993, 2, 211–229.

19. International Electrotechnical Commission (IEC). Electropedia: The World’s Online Electrotechnical Vocabulary; IEC: Geneva, Switzerland; Available online: http://www.electropedia.org/ (accessed on 7 June 2016).

20. ISO/IEC/IEEE. 24765-2010 Systems and Software Engineering—Vocabulary; ISO/IEC: Geneva, Switzerland, 2011.

21. IEEE Software and Systems Engineering Vocabulary. Available online: http://computer.org/sevocab (accessed on 7 June 2016).

22. National Information Assurance (IA) Glossary. Available online: https://www.ncsc.gov/nittf/docs/CNSSI-4009_National_Information_Assurance.pdf (accessed on 7 June 2016).

23. Standards for Security Categorization of Federal Information and Information Systems; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2004.

24. Zalewski, J. Real-Time Software Architectures and Design Patterns: Fundamental Concepts and Their Consequences. Ann. Rev. Control 2001, 25, 133–146.

25. Mayr, O. Zur Frühgeschichte der technischen Regelungen; Oldenbourg Verlag: München, Germany, 1969; (English translation: The Origin of Feedback Control; MIT Press: Cambridge, MA, USA, 1970).

26. ISO/IEC. 27005-2011 Information Technology—Security Techniques—Information Security Risk Management; International Organization for Standardization: Geneva, Switzerland, 2011.

27. National Physical Laboratory. History of Length Measurement; Teddington: Middlesex, UK; Available online: http://www.npl.co.uk/educate-explore/posters/history-of-length-measurement/ (accessed on 8 June 2016).

28. Von Helmholtz, H. Zählen und Messen: Erkenntnistheoretisch betrachtet. In Philosophische Aufsätze: Eduard Zeller zu seinem fünfzigjährigen Doctorjubiläum gewidmet; Fues Verlag: Leipzig, Germany, 1887; pp. 17–52, (English translation: Counting and Measuring; Van Nostrand: New York, NY, USA, 1980).

29. Definitions of the SI Base Units; National Institute of Standards and Technology: Gaithersburg, MD, USA. Available online: http://physics.nist.gov/cuu/Units/current.html (accessed on 8 June 2016).

30. Andreas, B.; Azuma, Y.; Bartl, G.; Becker, P.; Bettin, H.; Borys, M.; Busch, I.; Fuchs, P.; Fujii, K.; Fujimoto, H. Counting the Atoms in a 28Si Crystal for a New Kilogram Definition. Metrologia 2011, 48, S1–S13.

31. Evans, D.; Stolfo, S. The Science of Security. IEEE Secur. Priv. 2011, 9, 16–17.

32. Glimm, J.; Sharp, D.H. Complex Fluid Mixing Flows: Simulation vs. Theory vs. Experiment. SIAM News 2006, 39, 12.

33. Longman, R.W. On the Interaction Between Theory, Experiments, and Simulation in Developing Practical Learning Control Algorithms. Intern. J. Appl. Math. Comput. Sci. 2003, 13, 101–111.

34. Zalewski, J.; Kornecki, A.J.; Wierzchon, S. Reasoning under Uncertainty with Bayesian Belief Networks Enhanced with Rough Sets. Int. J. Comput. 2013, 12, 135–146.

35. Kornecki, A.J.; Zalewski, J. Experimental Evaluation of Software Development Tools for Safety-Critical Real-Time Systems. Innov. Syst. Softw. Eng. A NASA J. 2005, 1, 176–188.

36. Baquero, A.; Kornecki, A.J.; Zalewski, J. Threat Modeling for Aviation Computer Security. CrossTalk J. Def. Softw. Eng. 2015, 28, 21–27.

37. International Vocabulary of Metrology—Basic and General Concepts and Associated Terms (VIM), 3rd ed.; Report JCGM 200:2012; BIPM Joint Committee for Guides in Metrology: Sèvres, France, 2012.

38. Mell, P.; Scarfone, K.; Romanosky, S. (Eds.) CVSS—A Complete Guide to the Common Vulnerability Scoring System; Version 2.0; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2007; Available online: http://www.first.org/cvss/cvss-guide (accessed on 8 June 2016).

39. National Vulnerability Database; Version 2.2; National Institute of Standards and Technology: Gaithersburg, MD, USA. Available online: http://nvd.nist.gov/ (accessed on 8 June 2016).

40. Wang, J.A.; Wang, H.; Guo, M.; Xia, M. Security Metrics for Software Systems. In Proceedings of the ACM-SE ’09, 47th Annual Southeast Regional Conference, Clemson, SC, USA, 19–21 March 2009. Article No. 47.

41. Kornecki, A.J.; Zalewski, J.; Stevenson, W. Availability Assessment of Embedded Systems with Security Vulnerabilities. In Proceedings of the SEW-34, 2011 IEEE Software Engineering Workshop, Limerick, Ireland, 20–21 June 2011; pp. 42–47.

42. Cooperative Adaptive Cruise Control (CSE491–602 Class Projects), 2006. Available online: http://www.cse.msu.edu/~chengb/RE-491/Projects/cacc_msu-ford.pdf (accessed on 8 June 2016).

43. Stevenson, W. Evaluating the Impact of Adding Security to Safety Critical Real-Time Systems; Graduate Research Project, Embry-Riddle Aeronautical University: Daytona Beach, FL, USA, 2010.

44. Relex/Windchill Reliability Prediction Tool; PTC Product Development Company: Needham, MA, USA; Available online: http://www.ptc.com/products/relex/reliability-prediction (accessed on 8 June 2016).

45. Swiderski, F.; Snyder, W. Threat Modeling; Microsoft Press: Redmond, WA, USA, 2004.

46. Zalewski, J.; Drager, S.; McKeever, W.; Kornecki, A. Threat Modeling for Security Assessment in Cyberphysical Systems. In Proceedings of the CSIIRW 2013, 8th Annual Cyber Security and Information Intelligence Workshop, Oak Ridge, TN, USA, 8–10 January 2013. Article No. 10.

47. Ingalsbe, J.A.; Kunimatsu, L.; Baten, T.; Mead, N.R. Threat Modeling: Diving into the Deep End. IEEE Softw. 2008, 25, 28–34.

48. Aijaz, A.; Bochow, B.; Dötzer, F.; Festag, A.; Gerlach, M.; Kroh, R.; Leinmüller, T. Attacks on Inter Vehicle Communication Systems—An Analysis. In Proceedings of the WIT2006, 3rd International Workshop on Intelligent Transportation, Hamburg, Germany, 14–15 March 2006; pp. 189–194.

49. Vestlund, C. Threat Analysis on Vehicle Computer Systems. Master’s Thesis, Linköping University, Linköping, Sweden, 2010.

50. Common Industrial Control System Vulnerability Disclosure Framework, 2012. Available online: http://www.us-cert.gov/ (accessed on 8 June 2016).

51. Czejdo, B.; Zalewski, J.; Trawczynski, D.; Baszun, M. Designing Safety-Critical Embedded Systems with Time-Triggered Architecture. Technol. Railw. Transp. (TTN—Technika Transportu Szynowego, Poland) 2013, 19, 2265–2276.

52. Trawczynski, D.; Zalewski, J.; Sosnowski, J. Design of Reactive Security Mechanisms in Time-Triggered Embedded Systems. SAE Intern. J. Passeng. Cars Electron. Electr. Syst. 2014, 7, 527–535.

53. Arlat, J.; Crouzet, Y.; Karlsson, J.; Folkesson, P. Comparison of Physical and Software-Implemented Fault Injection Techniques. IEEE Trans. Comput. 2003, 52, 1115–1133.

54. Trawczynski, D. Dependability Evaluation and Enhancement in Real-Time Embedded Systems. Ph.D. Thesis, Warsaw University of Technology, Warsaw, Poland, 2009.

55. Rothbart, K.; Neffe, U.; Steger, C.; Weiss, R. High Level Fault Injection for Attack Simulation in Smart Cards. In Proceedings of the ATS 2004, 13th IEEE Asian Test Symposium, Kenting, Taiwan, 15–17 November 2004; pp. 118–121.

56. Computer Science and Telecommunications Board. Embedded Everywhere: A Research Agenda for Networked Systems of Embedded Computers; National Research Council: Washington, DC, USA, 2001.

57. TTTech Computertechnik AG. TTP-Powernode—Development Board. Product Description. Available online: http://www.tttech.com/products/ttp-product-line/ttp-powernode (accessed on 8 June 2016).

58. MathWorks. Simulink. Product Description. Available online: http://www.mathworks.com/products/simulink/ (accessed on 8 June 2016).

59. Subramanian, N.; Zalewski, J. Quantitative Assessment of Safety and Security of System Architectures for Cyberphysical Systems Using the NFR Approach. IEEE Syst. J. 2016, 10, 397–409.

60. Buckley, I.A.; Fernandez, E.B.; Anisetti, M.; Ardagna, C.A.; Sadjadi, M.; Damiani, E. Towards Pattern-based Reliability Certification of Services. In Proceedings of the DOA-SVI’11, 1st International Symposium on Secure Virtual Infrastructures, Hersonissos, Greece, 17–21 October 2011; Lecture Notes in Computer Science 7045, Part II. Springer: Berlin/Heidelberg, Germany, 2011; pp. 558–574.

61. Buckley, I.A.; Fernandez, E.B. Patterns Combining Reliability and Security. In Proceedings of the Third International Conference on Pervasive Patterns and Applications, Rome, Italy, 25–30 September 2011.

62. Schmidt, D.; Stal, M.; Rohnert, H.; Buschmann, F. Pattern-Oriented Software Architecture. In Patterns for Concurrent and Networked Objects; John Wiley & Sons: New York, NY, USA, 2000; Volume 2.

63. Fernandez, E.B. Security Patterns in Practice: Designing Secure Architectures Using Software; John Wiley & Sons: New York, NY, USA, 2013.

64. Buschmann, F.; Meunier, R.; Rohnert, H.; Sommerlad, P.; Stal, M. Pattern-Oriented Software Architecture: A System of Patterns; John Wiley & Sons: New York, NY, USA, 1996; Volume 1.

65. Buckley, I.A.; Fernandez, E.B. Enumerating software failures to build dependable distributed applications. In Proceedings of the HASE 2011, 13th IEEE International Symposium on High Assurance Systems Engineering, Boca Raton, FL, USA, 10–12 November 2011; pp. 120–123.

| Probability | Assigned Values |
|---|---|
| Corrupted Valid Message | Case 1: 0.005/Case 2: 0.05 |
| Introduced False Message | Case 1: 0.005/Case 2: 0.05 |
| Deleted Valid Message | Case 1: 0.001/Case 2: 0.01 |
| Message Flooding | Case 1: 0.001/Case 2: 0.01 |
| Return to Regular (security mitigation works) | 0.99/0.9/0.5 |
| Failing Return to Regular (security mitigation fails) | 0.01/0.1/0.5 |
| Return from Fail to Regular (repair rate) | 0 (no repair)/0.5/0.9 |

| Availability | Security | Correlation | Slope | Intercept | Predicted Security | Prediction Error (%) |
|---|---|---|---|---|---|---|
| case 1: low attack rate/no repair | | | | | | |
| 97.7408 | 50 | 0.9999996 | 39.324 | −3793.510 | 50.005 | 0.010% |
| 98.7572 | 90 | - | 39.324 | −3793.510 | 89.973 | −0.030% |
| 98.9873 | 99 | - | 39.324 | −3793.510 | 99.022 | 0.022% |
| case 1: low attack rate/0.5 repair probability | | | | | | |
| 98.3877 | 50 | 0.9999998 | 80.005 | −7821.549 | 50.003 | 0.007% |
| 98.8874 | 90 | - | 80.005 | −7821.549 | 89.982 | −0.020% |
| 98.9873 | 99 | - | 80.005 | −7821.549 | 99.015 | 0.015% |
| case 1: low attack rate/0.9 repair probability | | | | | | |
| 98.5872 | 50 | 0.9999998 | 117.478 | −11531.829 | 50.003 | 0.007% |
| 98.9275 | 90 | - | 117.478 | −11531.829 | 89.981 | −0.021% |
| 99.0044 | 99 | - | 117.478 | −11531.829 | 99.015 | 0.015% |
| case 2: high attack rate/no repair | | | | | | |
| 80.4847 | 50 | 0.9999546 | 4.822 | −338.064 | 50.052 | 0.104% |
| 88.7127 | 90 | - | 4.822 | −338.064 | 89.730 | −0.301% |
| 90.6804 | 99 | - | 4.822 | −338.064 | 99.218 | 0.220% |
| case 2: high attack rate/0.5 repair probability | | | | | | |
| 85.7505 | 50 | 0.9999823 | 9.736 | −784.806 | 50.032 | 0.064% |
| 89.8385 | 90 | - | 9.736 | −784.806 | 89.831 | −0.187% |
| 90.7943 | 99 | - | 9.736 | −784.806 | 99.137 | 0.138% |
| case 2: high attack rate/0.9 repair probability | | | | | | |
| 87.3755 | 50 | 0.9999877 | 14.214 | −1191.917 | 50.027 | 0.053% |
| 90.1779 | 90 | - | 14.214 | −1191.917 | 89.859 | −0.156% |
| 90.829 | 99 | - | 14.214 | −1191.917 | 99.114 | 0.115% |
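
The slope, intercept and predicted security columns in the table above look like the output of an ordinary least-squares line of security against availability, fitted separately for each case. The sketch below works under that assumption, using only the three rows of the first case (low attack rate, no repair); the function name `fit_line` is hypothetical and the fitting procedure actually used is not stated here.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Case 1, no repair: availability values and the corresponding security levels.
availability = [97.7408, 98.7572, 98.9873]
security = [50.0, 90.0, 99.0]

slope, intercept = fit_line(availability, security)
for a, s in zip(availability, security):
    predicted = slope * a + intercept
    error_pct = 100.0 * (predicted - s) / s
    print(f"availability={a:.4f} security={s:.0f} "
          f"predicted={predicted:.3f} error={error_pct:+.3f}%")
```

Under this assumption the fitted coefficients and predictions come out close to the tabulated ones, which suggests that, within a case, security can be read off a measured availability through a nearly linear relation.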

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Zalewski, J.; Buckley, I.A.; Czejdo, B.; Drager, S.; Kornecki, A.J.; Subramanian, N. A Framework for Measuring Security as a System Property in Cyberphysical Systems. Information 2016, 7, 33. https://doi.org/10.3390/info7020033