On-Body Smartphone Localization with an Accelerometer

Abstract

1. Introduction

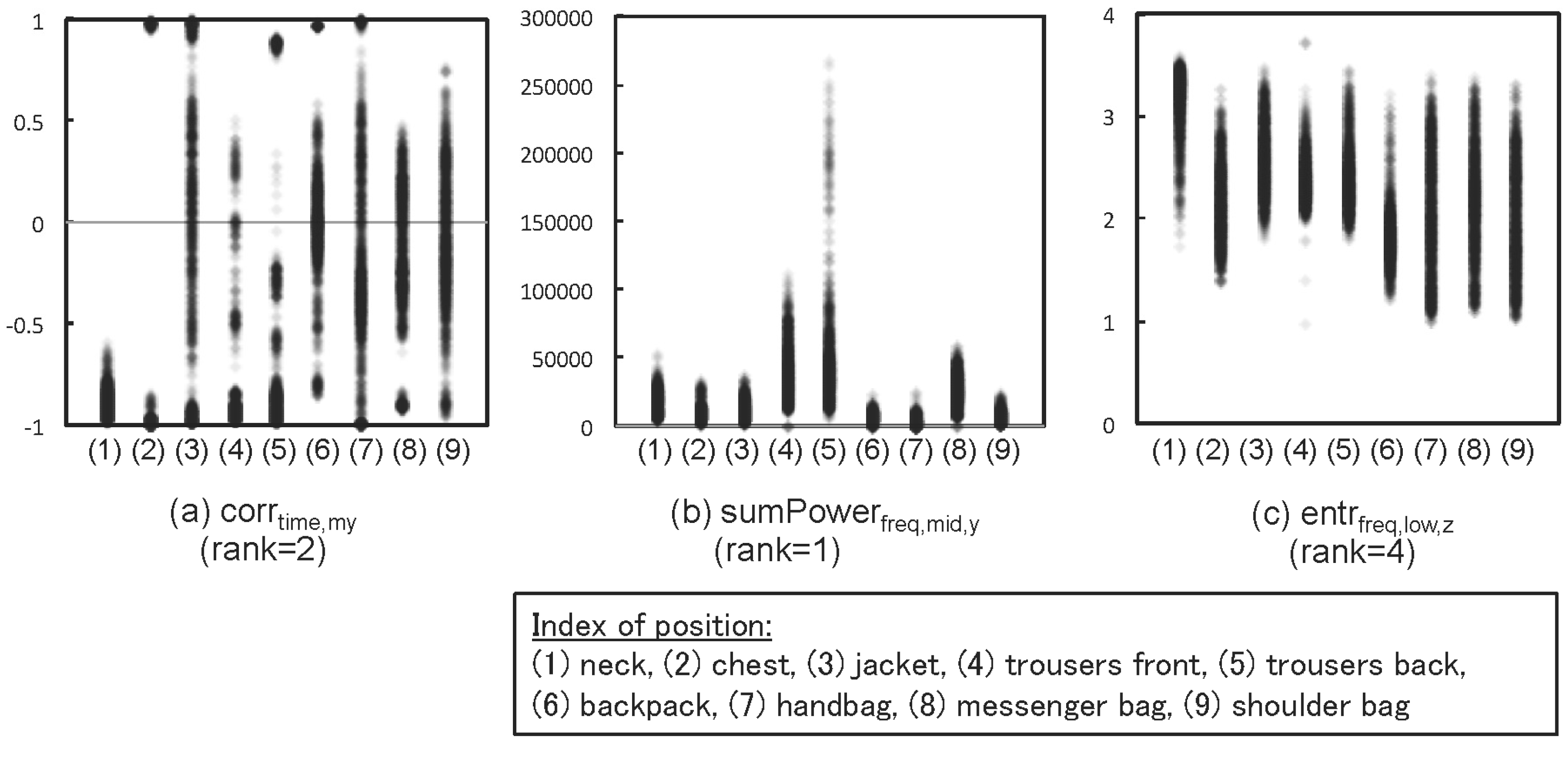

- Recognition features are analyzed from a microscopic point of view: a systematic feature selection identified 63 classifier-independent features that are more predictive of the classes and less correlated with each other. In particular, we found that: (1) features derived from the y-axis contribute the most; (2) the correlation between the y-axis and the magnitude of the three axes, i.e., the force applied to the device, might be useful for capturing the characteristics of the propagated ground reaction force across the nine storing positions; and (3) the selected features were also effective at classifying three additional classes, i.e., wrist, upper arm and belt.

- A “compatibility” matrix is introduced. It shows that accuracy may be improved by removing the “noisy” datasets of particular persons from the training dataset and training the classifier on datasets in which the acceleration of the device during walking has similar characteristics.

- The high precision for “neck” and “trouser pocket” under leave-one-subject-out cross validation (0.95) allows reliable placement-aware environmental risk alerts.
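Leave-one-subject-out (LOSO) cross validation, mentioned in the last contribution, holds out all data of one person for testing and trains on everyone else, so the test subject is always unknown to the classifier. The following is a minimal sketch of the splitting step only; the `(subject_id, features, label)` tuple layout, the function name and the toy values are assumptions made for illustration and are not taken from the paper.

```python
from collections import defaultdict


def leave_one_subject_out(samples):
    """Yield (held_out_subject, train, test) once per subject.

    `samples` is a list of (subject_id, features, label) tuples.
    This layout is hypothetical and chosen only for the sketch.
    """
    by_subject = defaultdict(list)
    for sample in samples:
        by_subject[sample[0]].append(sample)

    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        # Training data: every sample that does not belong to the held-out subject.
        train = [
            s
            for subject, rows in by_subject.items()
            if subject != held_out
            for s in rows
        ]
        yield held_out, train, test


# Toy data: 3 subjects with 2 samples each (feature values are made up).
toy = [
    ("s1", [0.1], "neck"), ("s1", [0.9], "chest"),
    ("s2", [0.2], "neck"), ("s2", [0.8], "chest"),
    ("s3", [0.3], "neck"), ("s3", [0.7], "chest"),
]
folds = list(leave_one_subject_out(toy))
for held_out, train, test in folds:
    print(held_out, len(train), len(test))
```

With 20 subjects, as in the dataset used here, this would produce 20 folds. A 10-fold split, by contrast, can place windows of the same person in both the training and the test set.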

2. Importance of On-Body Position Recognition

2.1. Device Functionality Control

2.2. Accurate Activity Recognition

2.3. Reliable Environmental Sensing

3. Related Work

4. On-Body Smartphone Localization Method

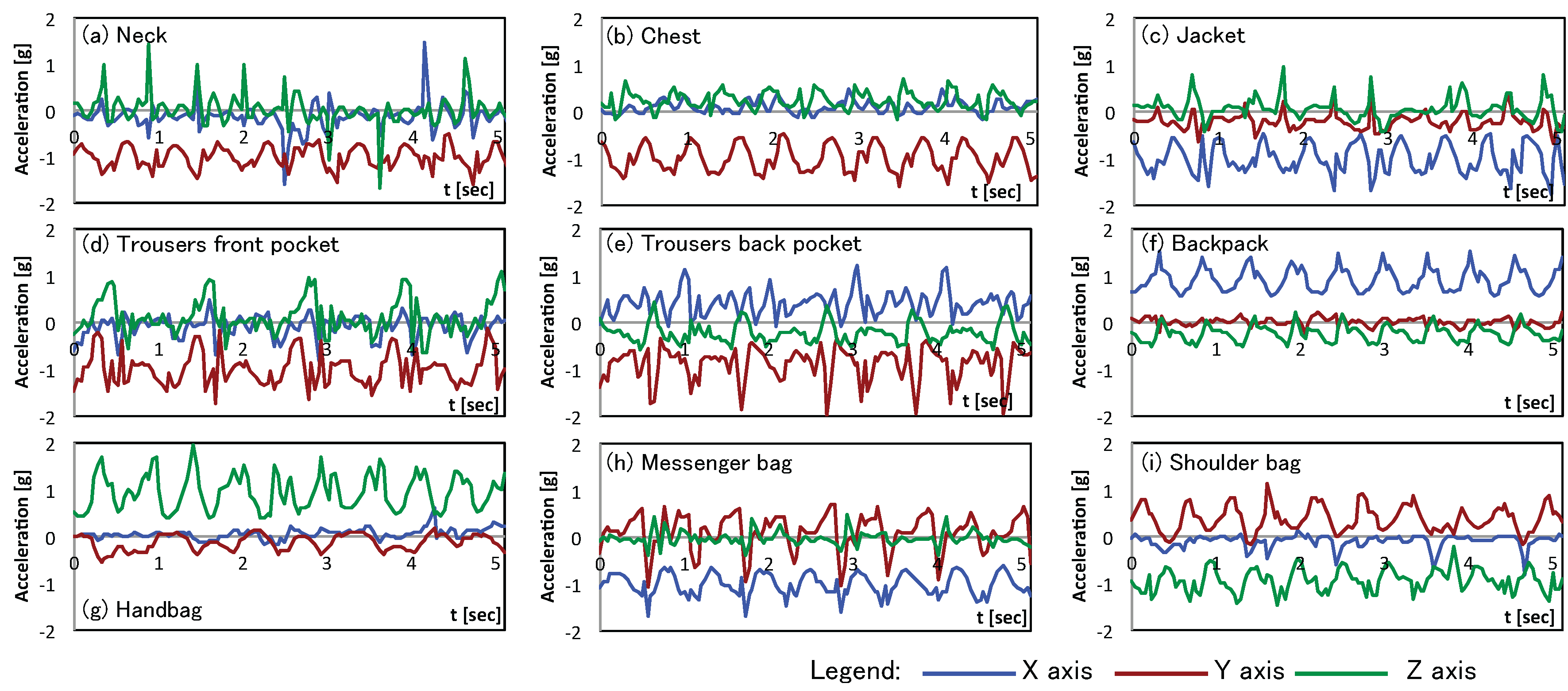

4.1. Target Positions

4.2. Sensor Modality

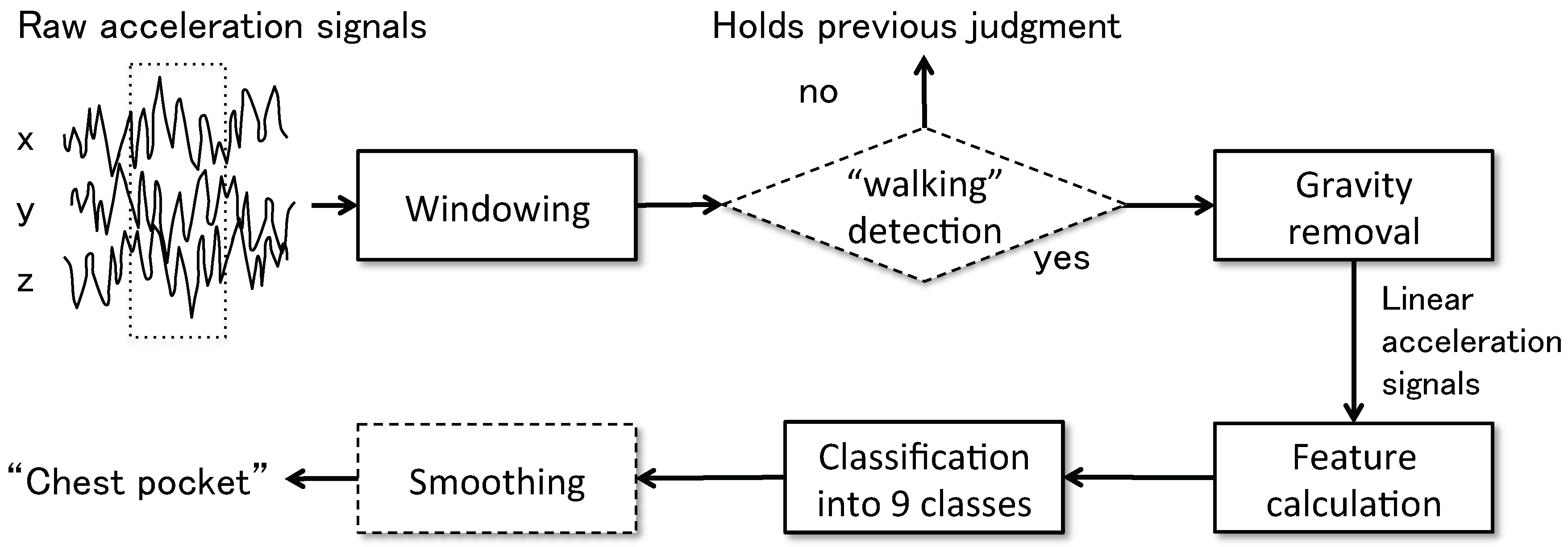

4.3. Flow of Localization

4.4. Recognition Features

5. Experiment

5.1. Dataset

5.2. Basic Performance Evaluation

5.2.1. Method

5.2.2. Results and Analysis

5.3. Feature Selection

5.3.1. Method

5.3.2. Results and Analysis

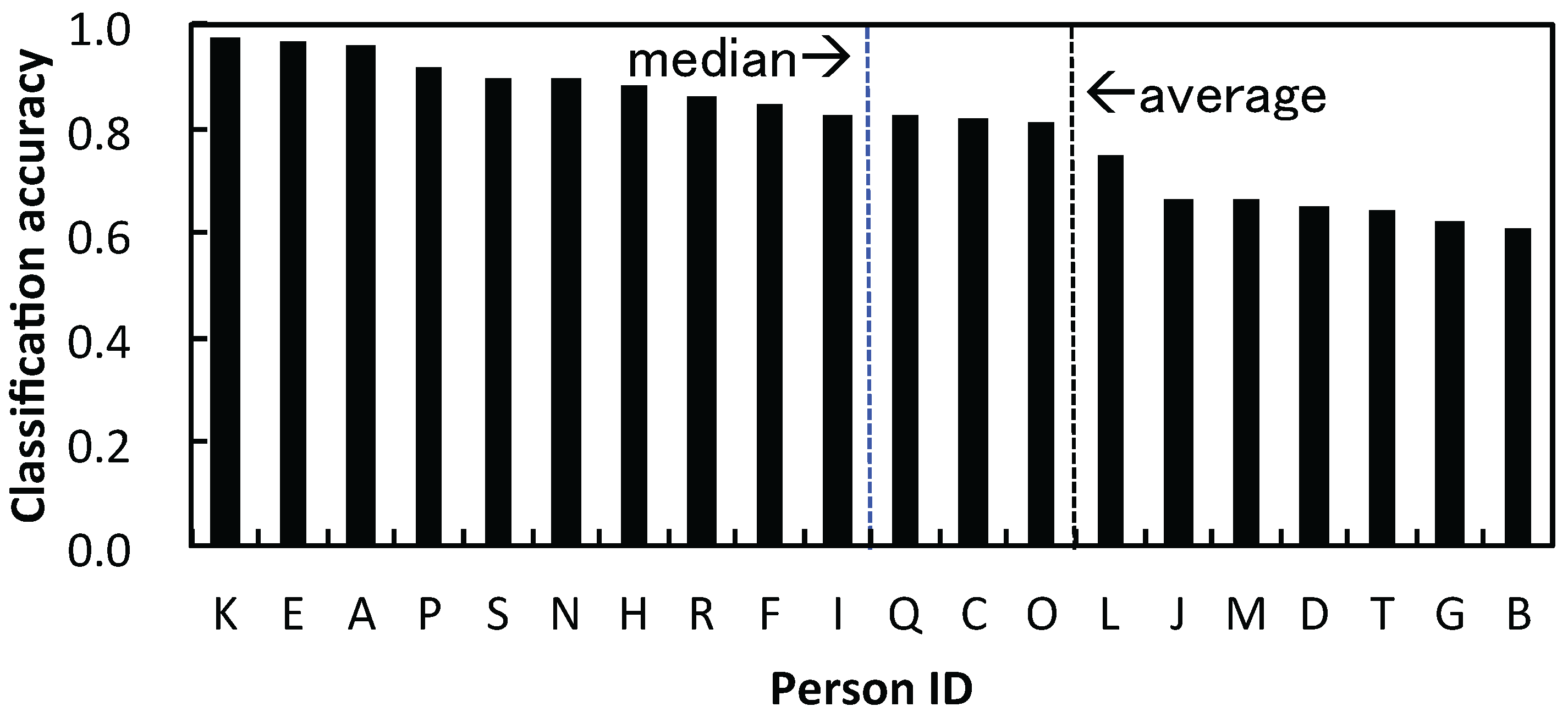

5.4. Evaluation with Unknown Subjects

5.4.1. Method

5.4.2. Results and Analysis

5.5. Compatibility Analysis

5.5.1. Method

5.5.2. Results and Analysis

5.6. Robustness of Selected Features against New Positions

5.6.1. Method

5.6.2. Results and Analysis

5.7. Storing Position Recognition during Periodic Motions Other Than Walking

5.7.1. Method

5.7.2. Results and Analysis

6. Discussions

6.1. Improving the Recognition Performance against an Unknown Person

6.2. Storing Position Recognition during Various Activities

6.3. Valid Applications with the Current Recognition Performance

7. Conclusions

Acknowledgments

Conflicts of Interest

References

1. Okumura, F.; Kubota, A.; Hatori, Y.; Matsumoto, K.; Hashimoto, M.; Koike, A. A Study on Biometric Authentication based on Arm Sweep Action with Acceleration Sensor. In Proceedings of the International Symposium on Intelligent Signal Processing and Communications (ISPACS ’06), Yonago, Japan, 12–15 December 2006; pp. 219–222.

2. Gellersen, H.; Schmidt, A.; Beigl, M. Multi-Sensor Context-Awareness in Mobile Devices and Smart Artifacts. J. Mob. Netw. Appl. 2002, 7, 341–351.

3. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity recognition using cell phone accelerometers. SIGKDD Explor. Newsl. 2011, 12, 74–82.

4. Pirttikangas, S.; Fujinami, K.; Nakajima, T. Feature Selection and Activity Recognition from Wearable Sensors. In Proceedings of the International Symposium on Ubiquitous Computing Systems (UCS 2006), Seoul, Korea, 11–13 October 2006; pp. 516–527.

5. Blanke, U.; Schiele, B. Sensing Location in the Pocket. In Proceedings of the 10th International Conference on Ubiquitous Computing (Ubicomp 2008), Seoul, Korea, 21–24 September 2008.

6. Rai, A.; Chintalapudi, K.K.; Padmanabhan, V.N.; Sen, R. Zee: Zero-effort Crowdsourcing for Indoor Localization. In Proceedings of the 18th Annual International Conference on Mobile Computing and Networking (MobiCom 2012), Istanbul, Turkey, 22–26 August 2012.

7. Sugimori, D.; Iwamoto, T.; Matsumoto, M. A Study about Identification of Pedestrian by Using 3-Axis Accelerometer. In Proceedings of the IEEE 17th International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Toyama, Japan, 28–31 August 2011; pp. 134–137.

8. Goldman, J.; Shilton, K.; Burke, J.; Estrin, D.; Hansen, M.; Ramanathan, N.; Reddy, S.; Samanta, V.; Srivastava, M.; West, R. Participatory Sensing: A Citizen-Powered Approach to Illuminating the Patterns that Shape Our World. Available online: https://www.wilsoncenter.org/sites/default/files/participatory_sensing.pdf (accessed on 23 March 2016).

9. Stevens, M.; D’Hondt, E. Crowdsourcing of Pollution Data using Smartphones. In Proceedings of the 1st Ubiquitous Crowdsourcing Workshop, Copenhagen, Denmark, 26–29 September 2010.

10. Cui, Y.; Chipchase, J.; Ichikawa, F. A Cross Culture Study on Phone Carrying and Physical Personalization. In Proceedings of the 12th International Conference on Human-Computer Interaction, Beijing, China, 22–27 July 2007; pp. 483–492.

11. Diaconita, I.; Reinhardt, A.; Englert, F.; Christin, D.; Steinmetz, R. Do you hear what I hear? Using acoustic probing to detect smartphone locations. In Proceedings of the IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), Budapest, Hungary, 24–28 March 2014; pp. 1–9.

12. Diaconita, I.; Reinhardt, A.; Christin, D.; Rensing, C. Inferring Smartphone Positions Based on Collecting the Environment’s Response to Vibration Motor Actuation. In Proceedings of the 11th ACM Symposium on QoS and Security for Wireless and Mobile Networks (Q2SWinet 2015), Cancun, Mexico, 2–6 November 2015.

13. Harrison, C.; Hudson, S.E. Lightweight material detection for placement-aware mobile computing. In Proceedings of the 21st annual ACM symposium on User interface software and technology (UIST 2008), Monterey, CA, USA, 19–22 October 2008.

14. Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Proceedings of the 2nd International Conference on Pervasive Computing (Pervasive 2004), Linz/Vienna, Austria, 18–23 April 2004.

15. Atallah, L.; Lo, B.; King, R.; Yang, G.Z. Sensor Placement for Activity Detection Using Wearable Accelerometers. In Proceedings of the 2010 International Conference on Body Sensor Networks (BSN), Singapore, 7–9 June 2010; pp. 24–29.

16. Lane, N.D.; Miluzzo, E.; Lu, H.; Peebles, D.; Choudhury, T.; Campbell, A.T. A survey of mobile phone sensing. IEEE Commun. Mag. 2010, 48, 140–150.

17. Miluzzo, E.; Papandrea, M.; Lane, N.; Lu, H.; Campbell, A. Pocket, Bag, Hand, etc.-Automatically Detecting Phone Context through Discovery. In Proceedings of First International Workshop on Sensing for App Phones (PhoneSense 2010), Zurich, Switzerland, 2 November 2010.

18. Fujinami, K.; Xue, Y.; Murata, S.; Hosokawa, S. A Human-Probe System That Considers On-body Position of a Mobile Phone with Sensors. In Distributed, Ambient, and Pervasive Interactions; Streitz, N., Stephanidis, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8028, pp. 99–108.

19. Vaitl, C.; Kunze, K.; Lukowicz, P. Does On-body Location of a GPS Receiver Matter? In International Workshop on Wearable and Implantable Body Sensor Networks (BSN’10); IEEE Computer Society: Los Alamitos, CA, USA, 2010; pp. 219–221.

20. Blum, J.; Greencorn, D.; Cooperstock, J. Smartphone Sensor Reliability for Augmented Reality Applications. In Mobile and Ubiquitous Systems: Computing, Networking, and Services; Zheng, K., Li, M., Jiang, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 120, pp. 127–138.

21. Fujinami, K.; Jin, C.; Kouchi, S. Tracking On-body Location of a Mobile Phone. In Proceedings of the 14th Annual IEEE International Symposium on Wearable Computers (ISWC 2010), Orlando, FL, USA, 9–14 July 2010; pp. 190–197.

22. Shi, Y.; Shi, Y.; Liu, J. A rotation based method for detecting on-body positions of mobile devices. In Proceedings of the 13th International Conference on Ubiquitous Computing, ACM (UbiComp ’11), Beijing, China, 17–21 September 2011; pp. 559–560.

23. Vahdatpour, A.; Amini, N.; Sarrafzadeh, M. On-body device localization for health and medical monitoring applications. In Proceedings of the 2011 IEEE International Conference on Pervasive Computing and Communications, Seattle, WA, USA, 21–25 March 2011; pp. 37–44.

24. Kunze, K.; Lukowicz, P.; Junker, H.; Tröster, G. Where am I: Recognizing On-body Positions of Wearable Sensors. In Proceedings of International Workshop on Location- and Context-Awareness (LoCA 2005), Oberpfaffenhofen, Germany, 12–13 May 2005; pp. 264–275.

25. Mannini, A.; Sabatini, A.M.; Intille, S.S. Accelerometry-based recognition of the placement sites of a wearable sensor. Perv. Mob. Comput. 2015, 21, 62–74.

26. Fujinami, K.; Kouchi, S. Recognizing a Mobile Phone’s Storing Position as a Context of a Device and a User. In Mobile and Ubiquitous Systems: Computing, Networking, and Services; Zheng, K., Li, M., Jiang, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 120, pp. 76–88.

27. Wiese, J.; Saponas, T.S.; Brush, A.B. Phoneprioception: Enabling Mobile Phones to Infer Where They Are Kept. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’13), Paris, France, 27 April–2 May 2013.

28. Alanezi, K.; Mishra, S. Design, implementation and evaluation of a smartphone position discovery service for accurate context sensing. Comput. Electr. Eng. 2015, 44, 307–323.

29. Incel, O.D. Analysis of Movement, Orientation and Rotation-Based Sensing for Phone Placement Recognition. Sensors 2015, 15, 25474–25506.

30. Kunze, K.; Lukowicz, P. Dealing with Sensor Displacement in Motion-based Onbody Activity Recognition Systems. In Proceedings of the 10th International Conference on Ubiquitous Computing (UbiComp ’08), Seoul, Korea, 21–24 September 2008.

31. Zhang, L.; Pathak, P.H.; Wu, M.; Zhao, Y.; Mohapatra, P. AccelWord: Energy Efficient Hotword Detection Through Accelerometer. In Proceedings of the 13th Annual International Conference on Mobile Systems, Applications, and Services (MobiSys’15), Florence, Italy, 19–22 May 2015.

32. Jung, J.; Choi, S. Perceived Magnitude and Power Consumption of Vibration Feedback in Mobile Devices. In Human-Computer Interaction. Interaction Platforms and Techniques; Jacko, J., Ed.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4551, pp. 354–363.

33. Murao, K.; Terada, T. A motion recognition method by constancy-decision. In Proceedings of the 14th International Symposium on Wearable Computers (ISWC 2010), Seoul, Korea, 10–13 October 2010; pp. 69–72.

34. Hemminki, S.; Nurmi, P.; Tarkoma, S. Gravity and Linear Acceleration Estimation on Mobile Devices. In Proceedings of the 11th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services (MobiQuitous 2014), London, UK, 2–5 December 2014; pp. 50–59.

35. Cho, S.J.; Choi, E.; Bang, W.C.; Yang, J.; Sohn, J.; Kim, D.Y.; Lee, Y.B.; Kim, S. Two-stage Recognition of Raw Acceleration Signals for 3-D Gesture-Understanding Cell Phones. In Proceedings of the Tenth International Workshop on Frontiers in Handwriting Recognition, La Baule, France, 23–26 October 2006.

36. Li, F.-F.; Fergus, R.; Perona, P. One-shot learning of object categories. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 594–611.

37. Paul, D.B.; Baker, J.M. The Design for the Wall Street Journal-based CSR Corpus. In Proceedings of the Workshop on Speech and Natural Language, Pacific Grove, CA, USA, 19–22 February 1991; pp. 357–362.

38. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Fusion of Smartphone Motion Sensors for Physical Activity Recognition. Sensors 2014, 14, 10146–10176.

39. Weka 3: Data Mining with Open Source Machine Learning Software in Java; Machine Learning Group at University of Waikato: Hamilton, New Zealand. Available online: http://www.cs.waikato.ac.nz/ml/weka/ (accessed on 15 March 2016).

40. Hall, M.A. Correlation-Based Feature Selection for Machine Learning. Ph.D. Thesis, The University of Waikato, Hamilton, New Zealand, 1999.

| Work | Target Position (Total Number) | Sensor | Evaluation | Subjects | Accuracy (%) |
|---|---|---|---|---|---|
| Kunze et al. [24] | Head, trousers, breast, wrist (4) | Accelerometer | 10-fold | 6 | 94.0 (walking) |
| Harrison & Hudson [13] | Backpack, jacket, jeans hip, desk, etc. (27) | Multispectral light (active sensing w/ IR light) | 10-fold | 16 | 94.8 (N/A) |
| Miluzzo et al. [17] | In or out of a pocket (2) | Microphone | 2-fold | 1 | 80 (N/A) |
| Vahdatpour et al. [23] | Upper arm, forearm, waist, shin, thigh, head (6) | Accelerometer | 5-fold | 25 | 89 (walking) |
| Shi et al. [22] | Trouser front/back, breast, hand (4) | Accelerometer, gyroscope | 5-fold | 4 | 91.69 (walking) |
| Fujinami et al. [26] | Chest, jacket, trouser front/back, neck, 4 types of bags (9) | Accelerometer | LOSO | 20 | 74.6 (walking) |
| | | | 10-fold | | 99.4 (walking) |
| Wiese et al. [27] | Pocket, bag, out of body, hand (4) | Accelerometer, proximity, capacitive | LOSO | 15 | 85 (mixed) |
| Diaconita et al. [11] | Pocket, backpack, desk, hand (4) | Microphone (active sensing w/ vibration) | 10-fold | Not given | 97 (stationary) |
| Diaconita et al. [12] | Pocket, hand, bag, desk (4) | Accelerometer (active sensing w/ vibration) | 10-fold | Not given | 99.2 (mixed) |
| Mannini et al. [25] | Ankle, thigh, hip, arm, waist (5) | Accelerometer | LOSO | 33 | 91.2 (walking) |
| | | | 10-fold | | 96.4 |
| Alanezi et al. [28] | Trouser front/back, jacket, hand holding, talking on phone, watching a video (6) | Accelerometer | 10-fold | 10 | 88.5 (walking) |
| | | Accelerometer, gyro | | | 89.3 (walking) |
| Incel [29] | Trousers, jacket, 2 types of bags, wrist, hand, arm, belt (8) | Accelerometer | LOSO | max/min/ave: 35/10/15.6 | 85.4 (walking) |
| | | | | | 76.4 (stationary) |
| | | | | | 84.3 (mobile) |
| | Trouser left/right, upper arm, belt, wrist (5) | Accelerometer, gyro | LOSO | 10 | 95.9 (mixed) |
| This work | Neck, chest, jacket, trouser front/back, 4 types of bags (9) | Accelerometer | LOSO | 20 | 80.5 (walking) |
| | | | 10-fold | | 99.9 (walking) |
| | Merged: “trousers”, “bags” (5) | | LOSO | | 85.9 (walking) |

| Type | Way of Slinging | Relationship with Body |
|---|---|---|
| Backpack | Over both shoulders | On the back (center of the body) |
| Handbag | Holding with hand | In the hand (side of the body) |
| Messenger bag | On the shoulder opposite the bag | Side or back of the body |
| Shoulder bag | On the same side of the shoulder as the bag | Side of the body |

| Type | Description |
|---|---|
| sdevtime | Standard deviation of time series data |
| mintime | Minimum value of time series data |
| maxtime | Maximum value of time series data |
| 3rdQtime | 3rd quartile of time series data |
| IQRtime | Inter-quartile range of time series data |
| RMStime | Root mean square of time series data |
| bin1time | 1st bin of the binned distribution of time series data |
| bin2time | 2nd bin of the binned distribution of time series data |
| bin3time | 3rd bin of the binned distribution of time series data |
| bin4time | 4th bin of the binned distribution of time series data |
| bin5time | 5th bin of the binned distribution of time series data |
| bin6time | 6th bin of the binned distribution of time series data |
| bin7time | 7th bin of the binned distribution of time series data |
| bin8time | 8th bin of the binned distribution of time series data |
| bin9time | 9th bin of the binned distribution of time series data |
| bin10time | 10th bin of the binned distribution of time series data |
| maxfreq,all | Maximum value in an entire frequency spectrum |
| fMaxfreq,all | Frequency that gives maxfreq,all |
| 3rdQfreq,all | 3rd quartile value in the frequency spectrum |
| IQRfreq,all | Inter-quartile range of the values in the frequency spectrum |
| 2ndMaxfreq,all | 2nd maximum value of the frequency spectrum |
| f2ndMaxfreq,all | Frequency that gives 2ndMaxfreq,all |
| maxfreq,low | Maximum value in the low-frequency range |
| maxfreq,mid | Maximum value in the mid-frequency range |
| maxfreq,high | Maximum value in the high-frequency range |
| sdevfreq,low | Standard deviation in the low-frequency range |
| sdevfreq,mid | Standard deviation in the mid-frequency range |
| sdevfreq,high | Standard deviation in the high-frequency range |
| maxSdevfreq,all | Maximum in subwindows in the frequency spectrum |
| fMaxSdevfreq,all | Central frequency of the subwindow that gives maxSdevfreq,all |
| sumPowerfreq,all | Sum of the entire range power |
| sumPowerfreq,low | Sum of the power in the low-frequency range |
| sumPowerfreq,mid | Sum of the power in the mid-frequency range |
| sumPowerfreq,high | Sum of the power in the high-frequency range |
| entrfreq,all | Frequency entropy in the entire range |
| entrfreq,low | Frequency entropy in the low-frequency range |
| entrfreq,mid | Frequency entropy in the mid-frequency range |
| entrfreq,high | Frequency entropy in the high-frequency range |
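To make the time-domain rows of the table above concrete, here is a minimal sketch that computes them for one window of a single axis. Several details are assumptions, since they are not specified here: the population standard deviation, the default quartile method of Python's `statistics.quantiles`, and a binned distribution defined as the fraction of samples in ten equal-width bins between the window minimum and maximum. The function name and the sample values are made up.

```python
import math
import statistics


def time_domain_features(window, n_bins=10):
    """Compute per-axis time-domain features for one window of samples."""
    lo, hi = min(window), max(window)
    q1, _, q3 = statistics.quantiles(window, n=4)

    # Assumed definition of the binned distribution: fraction of samples
    # falling into each of n_bins equal-width bins between min and max.
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for a flat window
    counts = [0] * n_bins
    for value in window:
        index = min(int((value - lo) / width), n_bins - 1)
        counts[index] += 1

    features = {
        "sdev": statistics.pstdev(window),  # population sdev (assumption)
        "min": lo,
        "max": hi,
        "3rdQ": q3,
        "IQR": q3 - q1,
        "RMS": math.sqrt(sum(v * v for v in window) / len(window)),
    }
    for i, count in enumerate(counts, start=1):
        features[f"bin{i}"] = count / len(window)
    return features


# Made-up window: the values 1.0 .. 20.0 standing in for accelerometer samples.
window = [float(v) for v in range(1, 21)]
feats = time_domain_features(window)
print(feats["RMS"], feats["IQR"])
```

The same function would be applied to each of the x, y, z and magnitude series in turn; the frequency-domain rows would need a spectrum first and are not covered by this sketch.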

| Type | Description |
|---|---|
| corrtime | Correlation coefficient in time series data |
| corrfreq,all | Correlation coefficient in an entire frequency spectrum |
| corrfreq,low | Correlation coefficient in the low-frequency range |
| corrfreq,mid | Correlation coefficient in the mid-frequency range |
| corrfreq,high | Correlation coefficient in the high-frequency range |
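As an illustration of the time-domain correlation feature, the sketch below computes the Pearson correlation coefficient between the y-axis and the magnitude series, corresponding to the pair written as `my` in the feature names. It assumes that the magnitude is the Euclidean norm of the three axes and that the coefficient is Pearson's; the helper names and sample values are hypothetical.

```python
import math


def magnitude(x, y, z):
    """Per-sample Euclidean norm of the three axes (assumed definition of m)."""
    return [math.sqrt(a * a + b * b + c * c) for a, b, c in zip(x, y, z)]


def pearson(u, v):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    norm_u = math.sqrt(sum((a - mean_u) ** 2 for a in u))
    norm_v = math.sqrt(sum((b - mean_v) ** 2 for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # undefined for a constant series; 0.0 is a convention here
    return cov / (norm_u * norm_v)


# Made-up window in which only the y-axis moves, so m equals y.
x = [0.0, 0.0, 0.0, 0.0]
y = [1.0, 2.0, 3.0, 4.0]
z = [0.0, 0.0, 0.0, 0.0]
m = magnitude(x, y, z)
corr_my = pearson(m, y)
print(corr_my)
```

The frequency-domain variants in the table would apply the same coefficient to two spectra (or to one frequency band of each) instead of the raw series.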

| Condition | Value |
|---|---|
| Way of walking and orientation of a terminal | Unconstrained |
| Number of subjects | 20 (2 females and 18 males) |
| Trials per position | 10 |
| Duration of walking per trial | 30 s |
| Terminal | Nexus One (HTC) |
| Sampling rate | 25 Hz |

| Classifier | Parameter |
|---|---|
| J48 | -C 0.25 -M 2 |
| Naive Bayes | N/A |
| Support Vector Machine (SVM) | -S 0 -K 2 -D 3 -G 2.0 -R 0.0 -N 0.5 -M 40.0 -C 1.0 -E 0.0010 -P 0.1 -Z |
| Multi-Layer Perceptron (MLP) | -L 0.3 -M 0.2 -N 50 -V 0 -S 0 -E 20 -H a |
| RandomForest | -I 50 -K 0 -S 1 |

| Window Size | J48 | Naive Bayes | SVM | MLP | RandomForest |
|---|---|---|---|---|---|
| 128 | 0.964 | 0.798 | 0.997 | 0.984 | 0.994 |
| 256 | 0.979 | 0.810 | 0.999 | 0.994 | 0.999 |
| 512 | 0.989 | 0.813 | 0.999 | 0.994 | 0.999 |
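The window sizes above are counted in samples. Using the 25 Hz sampling rate and the 30 s trial duration from the dataset conditions, the short calculation below converts them to seconds and counts how many full windows fit into one trial. The count assumes non-overlapping windows, which is an assumption of this sketch, as are the constant and function names.

```python
SAMPLING_RATE_HZ = 25   # from the dataset conditions
TRIAL_DURATION_S = 30   # duration of walking per trial
WINDOW_SIZES = (128, 256, 512)


def window_duration_s(n_samples, rate_hz=SAMPLING_RATE_HZ):
    """Length of a window in seconds."""
    return n_samples / rate_hz


def windows_per_trial(n_samples, rate_hz=SAMPLING_RATE_HZ, trial_s=TRIAL_DURATION_S):
    """Number of full windows in one trial, assuming no overlap."""
    return (rate_hz * trial_s) // n_samples


summary = {n: (window_duration_s(n), windows_per_trial(n)) for n in WINDOW_SIZES}
for n, (duration, count) in summary.items():
    print(f"{n} samples: {duration:.2f} s per window, {count} full windows per trial")
```

A 512-sample window therefore spans about 20 s, so a larger window buys little extra score in the table while delaying each decision considerably.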

| Rank | Name | Rank | Name | Rank | Name |
|---|---|---|---|---|---|
| 1 | sumPowerfreq,mid,y | 22 | f2ndMaxfreq,all,y | 43 | sdevfreq,low,z |
| 2 | corrtime,my | 23 | maxtime,z | 44 | corrfreq,all,yz |
| 3 | sumPowerfreq,high,y | 24 | corrfreq,high,mz | 45 | mintime,y |
| 4 | entrfreq,low,z | 25 | corrfreq,low,yz | 46 | bin9time,x |
| 5 | corrtime,mz | 26 | IQRfreq,all,y | 47 | sumPowerfreq,high,m |
| 6 | entrfreq,low,x | 27 | 3rdQfreq,all,z | 48 | bin4time,y |
| 7 | 3rdQfreq,all,y | 28 | corrfreq,low,zx | 49 | corrfreq,low,xy |
| 8 | sumPowerfreq,high,x | 29 | maxfreq,all,x | 50 | bin5time,z |
| 9 | corrfreq,high,my | 30 | sumPowerfreq,mid,x | 51 | sumPowerfreq,low,y |
| 10 | corrfreq,all,zx | 31 | entrfreq,low,y | 52 | corrtime,yz |
| 11 | RMStime,m | 32 | corrfreq,mid,mz | 53 | bin3time,m |
| 12 | corrfreq,all,xy | 33 | fMaxfreq,all,x | 54 | entrfreq,mid,z |
| 13 | maxSdevfreq,all,y | 34 | sumPowerfreq,mid,m | 55 | sumPowerfreq,mid,z |
| 14 | corrfreq,mid,my | 35 | bin6time,x | 56 | bin2time,x |
| 15 | entrfreq,all,z | 36 | corrfreq,all,my | 57 | maxtime,y |
| 16 | entrfreq,all,x | 37 | maxfreq,mid,y | 58 | maxfreq,high,x |
| 17 | sdevfreq,mid,y | 38 | mintime,x | 59 | IQRtime,z |
| 18 | entrfreq,all,y | 39 | corrfreq,mid,mx | 60 | f2ndMaxfreq,all,x |
| 19 | 3rdQfreq,all,x | 40 | corrtime,xy | 61 | corrfreq,mid,xy |
| 20 | corrtime,mx | 41 | sdevfreq,high,y | 62 | bin1time,z |
| 21 | maxfreq,high,y | 42 | bin6time,z | 63 | IQRfreq,all,m |

| | Calculation target: single axis | Calculation target: multi axes (correlation) | Domain: time | Domain: frequency |
|---|---|---|---|---|
| Median of rank | 34 | 26.5 | 45 | 27.5 |
| Proportion of selection | 45/63 | 18/63 | 19/63 | 44/63 |

| Individual axis (axes) series | x | y | z | m | xy | zx | yz | mx | my | mz |
|---|---|---|---|---|---|---|---|---|---|---|
| Median of rank | 34 | 24 | 43 | 40.5 | 44.5 | 44 | 19 | 29.5 | 11.5 | 24 |
| Proportion of definition | 12/38 | 16/38 | 11/38 | 6/38 | 4/5 | 3/5 | 2/5 | 2/5 | 4/5 | 3/5 |
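The "median of rank" and "proportion" rows can be reproduced from the ranked list of the 63 selected features. The sketch below does this for three groups: single-axis features of the y-axis, features of the `my` correlation pair, and all time-domain features. The rank lists were transcribed by hand from the ranked feature table for this example (names ending in `,y`, names ending in `,my`, and names containing `time`), so the variable names and the grouping rule are illustrative assumptions.

```python
from statistics import median

# Ranks of selected single-axis features whose name ends in ",y".
y_axis_ranks = [1, 3, 7, 13, 17, 18, 21, 22, 26, 31, 37, 41, 45, 48, 51, 57]

# Ranks of selected correlation features for the (m, y) pair.
my_ranks = [2, 9, 14, 36]

# Ranks of all selected time-domain features (names containing "time").
time_domain_ranks = [2, 5, 11, 20, 23, 35, 38, 40, 42, 45,
                     46, 48, 50, 52, 53, 56, 57, 59, 62]

N_SELECTED = 63         # number of selected features
N_PER_AXIS_DEFINED = 38  # single-axis feature types defined per axis
N_CORR_DEFINED = 5       # correlation feature types defined per axis pair

print("y :", median(y_axis_ranks), f"{len(y_axis_ranks)}/{N_PER_AXIS_DEFINED}")
print("my:", median(my_ranks), f"{len(my_ranks)}/{N_CORR_DEFINED}")
print("time domain:", median(time_domain_ranks), f"{len(time_domain_ranks)}/{N_SELECTED}")
```

A lower median rank means the group's features tend to be selected earlier, which is the sense in which the y-axis and its correlation with the magnitude stand out.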

| Answer\decision | a | b | c | d | e | f | g | h | i | Recall |
|---|---|---|---|---|---|---|---|---|---|---|
| a. neck | 187 | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0.970 |
| b. chest | 0 | 168 | 17 | 0 | 0 | 0 | 1 | 0 | 3 | 0.889 |
| c. jacket | 8 | 14 | 119 | 5 | 3 | 12 | 2 | 7 | 19 | 0.633 |
| d. trouser front | 1 | 0 | 6 | 146 | 20 | 0 | 1 | 16 | 0 | 0.768 |
| e. trouser back | 1 | 3 | 7 | 29 | 145 | 0 | 0 | 9 | 1 | 0.744 |
| f. backpack | 0 | 10 | 1 | 0 | 0 | 156 | 14 | 1 | 15 | 0.794 |
| g. handbag | 0 | 11 | 4 | 0 | 0 | 12 | 162 | 0 | 9 | 0.818 |
| h. messenger bag | 0 | 11 | 12 | 0 | 0 | 1 | 1 | 171 | 3 | 0.853 |
| i. shoulder bag | 0 | 0 | 17 | 0 | 0 | 1 | 19 | 5 | 155 | 0.785 |
| Precision | 0.945 | 0.767 | 0.639 | 0.810 | 0.856 | 0.862 | 0.813 | 0.823 | 0.751 | 0.805 |
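Recall and precision in the matrix above follow the usual definitions: recall divides a diagonal entry by its row sum (all windows of that true position), and precision divides it by its column sum (all windows assigned to that position). The sketch below recomputes them from the printed counts. The variable and function names are made up, and the recomputed values are close to the printed ones but not always identical to the third decimal, which would be expected if the printed counts are rounded.

```python
LABELS = ["neck", "chest", "jacket", "trouser front", "trouser back",
          "backpack", "handbag", "messenger bag", "shoulder bag"]

# Rows: true position; columns: decided position (counts as printed above).
CONFUSION = [
    [187, 3, 3, 0, 0, 0, 0, 0, 0],
    [0, 168, 17, 0, 0, 0, 1, 0, 3],
    [8, 14, 119, 5, 3, 12, 2, 7, 19],
    [1, 0, 6, 146, 20, 0, 1, 16, 0],
    [1, 3, 7, 29, 145, 0, 0, 9, 1],
    [0, 10, 1, 0, 0, 156, 14, 1, 15],
    [0, 11, 4, 0, 0, 12, 162, 0, 9],
    [0, 11, 12, 0, 0, 1, 1, 171, 3],
    [0, 0, 17, 0, 0, 1, 19, 5, 155],
]


def recall_per_class(matrix):
    """Diagonal entry divided by the row sum, for each class."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


def precision_per_class(matrix):
    """Diagonal entry divided by the column sum, for each class."""
    n = len(matrix)
    return [matrix[j][j] / sum(matrix[i][j] for i in range(n)) for j in range(n)]


recall = recall_per_class(CONFUSION)
precision = precision_per_class(CONFUSION)
for label, r, p in zip(LABELS, recall, precision):
    print(f"{label:14s} recall={r:.3f} precision={p:.3f}")
```

For example, chest recall is 168/189, which rounds to the printed 0.889, while jacket has the lowest recall because its windows are spread over almost every other class.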

| Answer\decision | a | b | c | x | y | Recall |
|---|---|---|---|---|---|---|
| a. neck | 187 | 3 | 3 | 0 | 0 | 0.970 |
| b. chest | 0 | 168 | 17 | 0 | 4 | 0.889 |
| c. jacket | 8 | 14 | 119 | 8 | 40 | 0.633 |
| x. trousers | 1 | 2 | 7 | 170 | 13 | 0.883 |
| y. bag | 0 | 8 | 9 | 0 | 181 | 0.914 |
| Precision | 0.953 | 0.867 | 0.776 | 0.949 | 0.761 | 0.859 |
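The merged counts above appear consistent with the nine-class counts if the columns of the merged classes are summed and the rows of the merged classes are averaged. This is an inference from the numbers, not a procedure stated here, so the following sketch should be read as one plausible reconstruction; the function name and the grouping variable are hypothetical.

```python
# Nine-class confusion matrix (rows: true position, columns: decision), in the
# order neck, chest, jacket, trouser front, trouser back, backpack, handbag,
# messenger bag, shoulder bag.
CONFUSION_9 = [
    [187, 3, 3, 0, 0, 0, 0, 0, 0],
    [0, 168, 17, 0, 0, 0, 1, 0, 3],
    [8, 14, 119, 5, 3, 12, 2, 7, 19],
    [1, 0, 6, 146, 20, 0, 1, 16, 0],
    [1, 3, 7, 29, 145, 0, 0, 9, 1],
    [0, 10, 1, 0, 0, 156, 14, 1, 15],
    [0, 11, 4, 0, 0, 12, 162, 0, 9],
    [0, 11, 12, 0, 0, 1, 1, 171, 3],
    [0, 0, 17, 0, 0, 1, 19, 5, 155],
]

# Merged classes: neck, chest, jacket, trousers (front + back), bag (4 types).
GROUPS = [[0], [1], [2], [3, 4], [5, 6, 7, 8]]


def merge_confusion(matrix, groups):
    """Sum over merged columns and average over merged rows (assumed rule)."""
    merged = []
    for row_group in groups:
        merged_row = []
        for col_group in groups:
            total = sum(matrix[r][c] for r in row_group for c in col_group)
            merged_row.append(total / len(row_group))
        merged.append(merged_row)
    return merged


merged = merge_confusion(CONFUSION_9, GROUPS)
trousers_recall = merged[3][3] / sum(merged[3])
for row in merged:
    print([round(v, 2) for v in row])
```

Under this rule the trousers cell is (146 + 20 + 29 + 145) / 2 = 170 and the jacket-to-bag cell is 12 + 2 + 7 + 19 = 40, matching the merged matrix, and the resulting trousers recall rounds to the printed 0.883.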

| | Biking | Jogging | Upstairs | Downstairs |
|---|---|---|---|---|
| Recall of trousers front pocket | 0.009 | 0.673 | 0.815 | 0.150 |
| Most confused class | Jacket | Trousers back | Neck | Neck |
| False recognition rate for that class | 0.321 | 0.240 | 0.118 | 0.806 |

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Fujinami, K. On-Body Smartphone Localization with an Accelerometer. Information 2016, 7, 21. https://doi.org/10.3390/info7020021