Research and Implementation of Scheduling Strategy in Kubernetes for Computer Science Laboratory in Universities

Abstract

1. Introduction

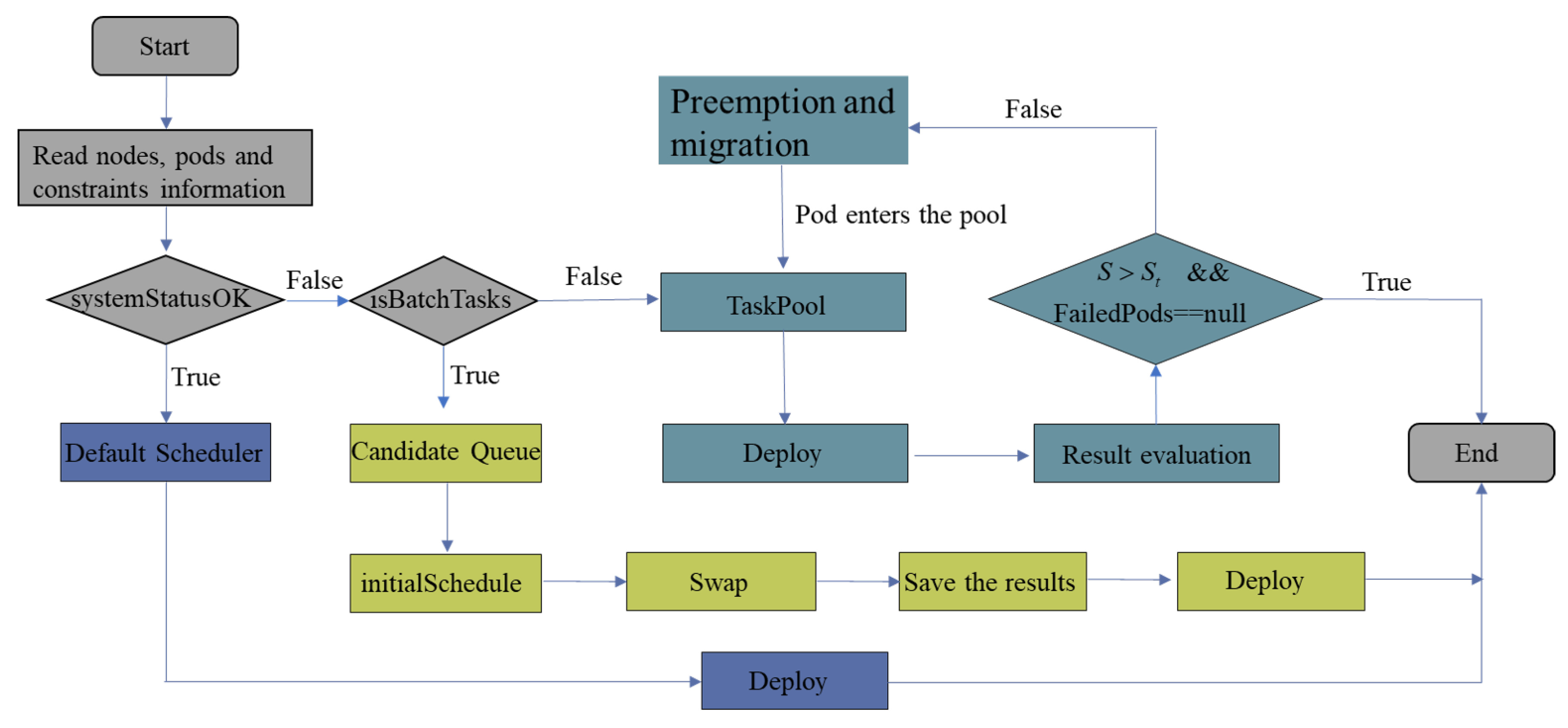

- Two schedulers, a Batch Scheduler and a Dynamic Scheduler, are proposed and implemented. Each is applied to the kind of task and situation it suits, so together they improve system performance in different respects.

- The Batch Scheduler works in two steps, a greedy step followed by a local search step, and can find a better layout during rush hours.

- The Dynamic Scheduler manages tasks by priorities that change dynamically. These tasks are mostly long-running deep learning tasks, for which preemption and migration mechanisms are mandatory in our system.

- To evaluate the Batch Scheduler and the Dynamic Scheduler, experiments are conducted in our cloud. Compared with the default strategy, both resource utilization and efficiency improve.

2. Related Work

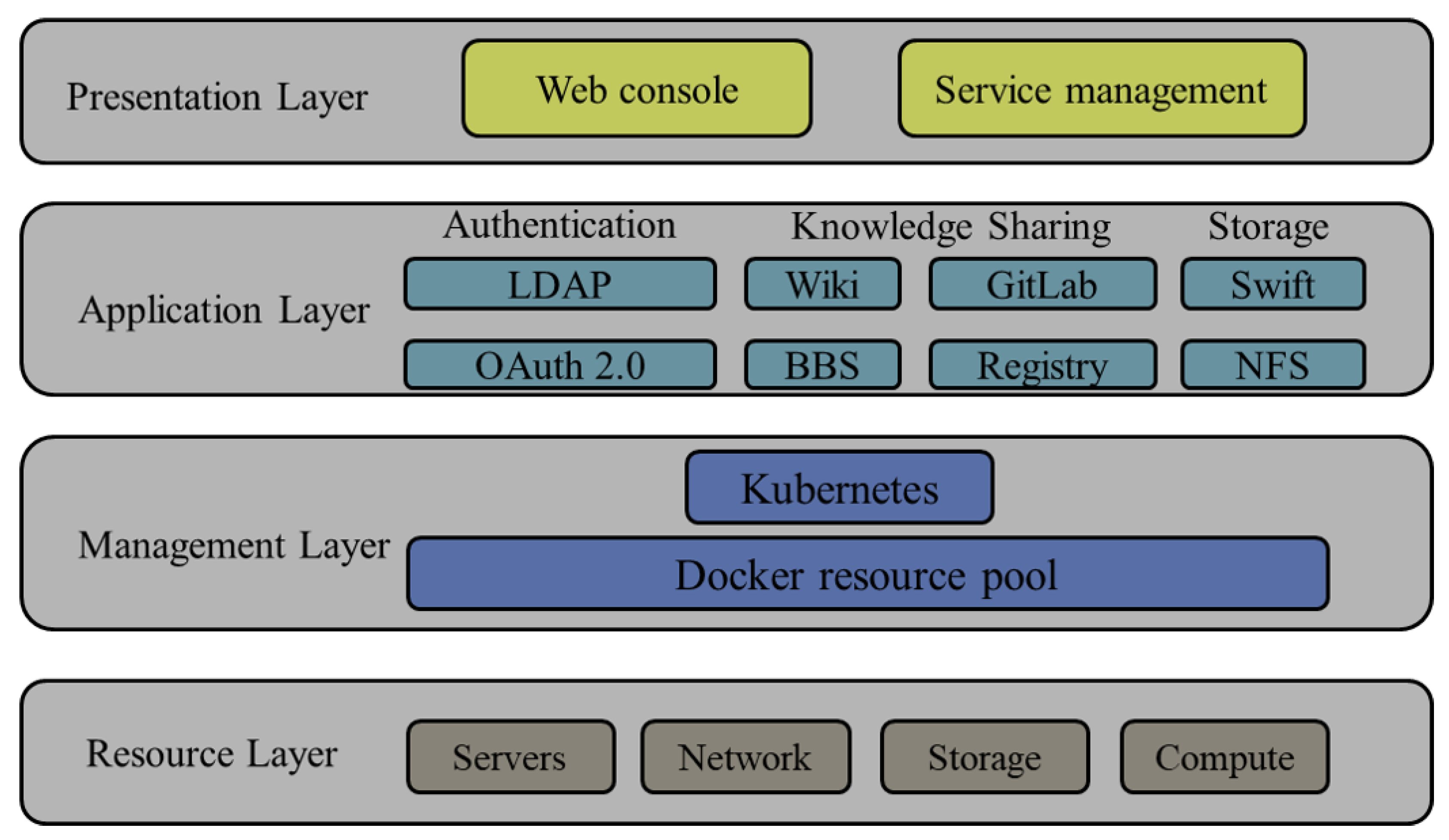

3. Our Private Cloud Platform and Services

4. The Default Scheduling Strategy and Improvements

5. The Implementation of Our Strategy

5.1. Batch Scheduler

Algorithm 1. Sorting in Candidate Queue Algorithm

Algorithm 2. Swap Algorithm

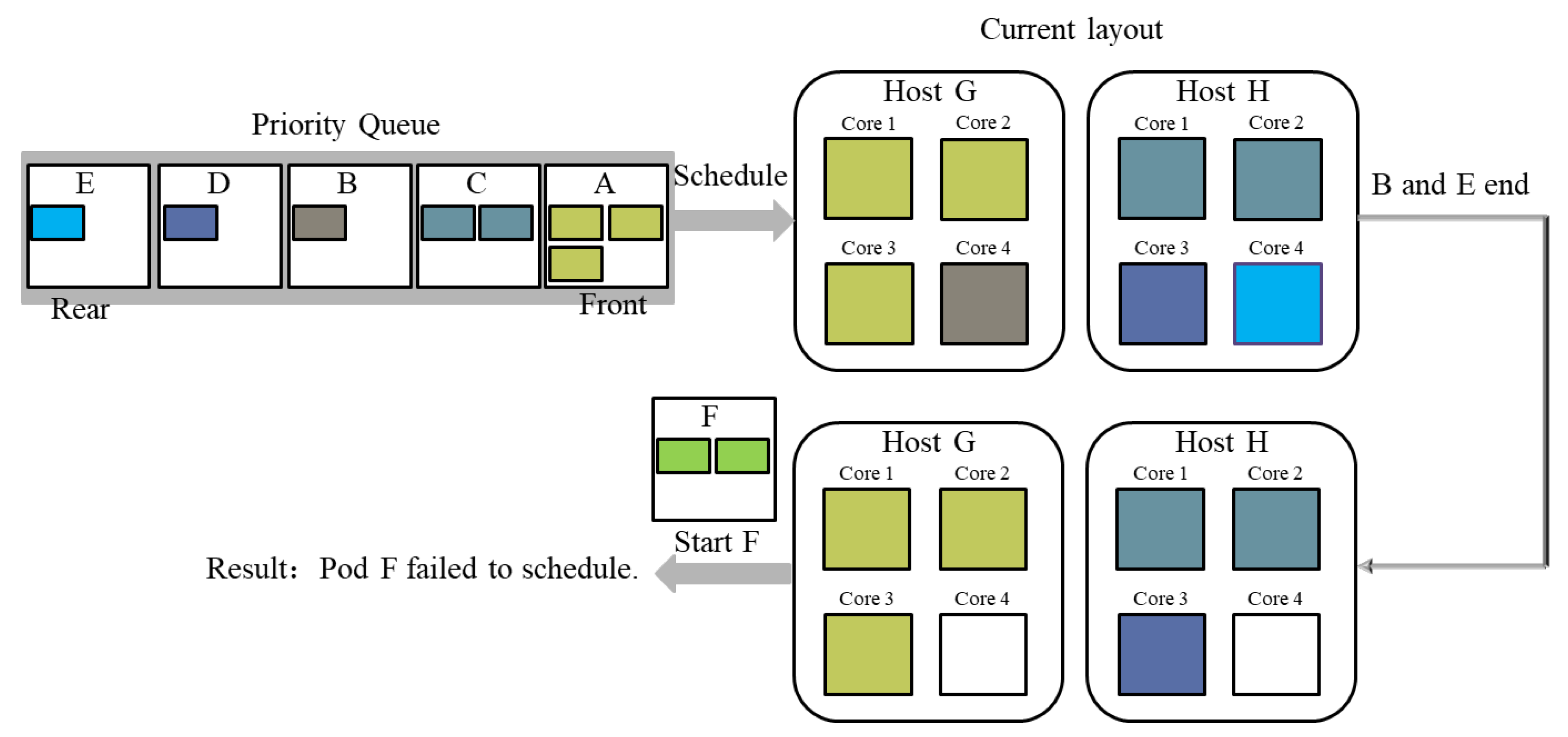
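
The two steps of the Batch Scheduler (a greedy pass over a sorted candidate queue, then a swap-based local search) can be illustrated with a small sketch. It is not a reproduction of Algorithms 1 and 2: the sort key (a DRF-style dominant share, largest first), the best-fit node choice, and the layout cost (squared gap between a node's free CPU and free memory fractions) are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass, field

# eq=False keeps identity comparison, so list.remove() drops the exact object
@dataclass(eq=False)
class Pod:
    name: str
    cpu: float  # requested CPU cores
    mem: float  # requested memory (GiB)

@dataclass(eq=False)
class Node:
    name: str
    cpu: float  # CPU capacity (cores)
    mem: float  # memory capacity (GiB)
    pods: list = field(default_factory=list)

    def free(self):
        """Return (free CPU, free memory) given the pods currently assigned."""
        return (self.cpu - sum(p.cpu for p in self.pods),
                self.mem - sum(p.mem for p in self.pods))

    def fits(self, pod):
        free_cpu, free_mem = self.free()
        return pod.cpu <= free_cpu and pod.mem <= free_mem

def dominant_share(pod, total_cpu, total_mem):
    # Hypothetical sort key: DRF-style dominant share of the whole cluster
    return max(pod.cpu / total_cpu, pod.mem / total_mem)

def imbalance(node):
    # Hypothetical layout cost: free memory with no free CPU (or the reverse)
    # strands capacity, so penalise the gap between the two free fractions.
    free_cpu, free_mem = node.free()
    return (free_cpu / node.cpu - free_mem / node.mem) ** 2

def layout_cost(nodes):
    return sum(imbalance(n) for n in nodes)

def greedy_place(pods, nodes):
    """Step 1: place pods from a sorted candidate queue; return unplaced pods."""
    total_cpu = sum(n.cpu for n in nodes)
    total_mem = sum(n.mem for n in nodes)
    queue = sorted(pods, key=lambda p: dominant_share(p, total_cpu, total_mem),
                   reverse=True)
    pending = []
    for pod in queue:
        feasible = [n for n in nodes if n.fits(pod)]
        if not feasible:
            pending.append(pod)
            continue

        def leftover(n):
            # Best fit: total free fraction that would remain after placement
            free_cpu, free_mem = n.free()
            return (free_cpu - pod.cpu) / n.cpu + (free_mem - pod.mem) / n.mem

        min(feasible, key=leftover).pods.append(pod)
    return pending

def try_swap(a, b):
    """Apply the first pod swap between nodes a and b that lowers their cost."""
    before = imbalance(a) + imbalance(b)
    for pa in list(a.pods):
        for pb in list(b.pods):
            a.pods.remove(pa)
            b.pods.remove(pb)
            if a.fits(pb) and b.fits(pa):
                a.pods.append(pb)
                b.pods.append(pa)
                if imbalance(a) + imbalance(b) < before - 1e-9:
                    return True  # keep the improving swap
                a.pods.remove(pb)  # undo the tentative swap
                b.pods.remove(pa)
            a.pods.append(pa)  # restore the original assignment
            b.pods.append(pb)
    return False

def swap_search(nodes, max_rounds=10):
    """Step 2: local search by pairwise swaps until no swap improves the cost."""
    for _ in range(max_rounds):
        improved = False
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                if try_swap(a, b):
                    improved = True
        if not improved:
            break
```

For example, with two 4-core, 8 GiB nodes and two CPU-heavy plus two memory-heavy pods, the greedy step stacks similar pods together (cost 1.125), and a single swap mixes them so both nodes keep balanced free capacity (cost 0.0). A production layout score would likely also weigh factors such as emptying nodes or spreading replicas; the cost here is only illustrative.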

5.2. Dynamic Scheduler
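
The Dynamic Scheduler manages long-running deep learning tasks by priorities that change over time, with preemption and migration. Kubernetes' default scheduler already supports static pod priorities and preemption through PriorityClass objects; the sketch below instead recomputes priorities at every scheduling step. The priority formula (aging by waiting time, decay by consumed run time), the weights `ALPHA` and `BETA`, and the one-step loop are hypothetical and are not the paper's design; migration is reduced to moving the preempted job back to the queue.

```python
from dataclasses import dataclass

ALPHA = 1.0  # hypothetical weight: priority gained per hour of waiting (aging)
BETA = 0.5   # hypothetical weight: priority lost per hour of run time consumed

@dataclass(eq=False)
class Job:
    name: str
    gpus: int            # GPUs the job needs
    base: float          # hypothetical static priority
    waited: float = 0.0  # hours spent in the waiting queue
    ran: float = 0.0     # hours already spent running

def priority(job):
    """Dynamic priority: rises while a job waits, falls as it consumes GPU time."""
    return job.base + ALPHA * job.waited - BETA * job.ran

def schedule_step(waiting, running, total_gpus, dt=1.0):
    """Advance time by dt hours, then admit waiting jobs, preempting if needed.

    Mutates the `waiting` and `running` lists and returns the preempted jobs."""
    for job in waiting:
        job.waited += dt
    for job in running:
        job.ran += dt
    preempted = []
    for job in sorted(waiting, key=priority, reverse=True):
        free = total_gpus - sum(r.gpus for r in running)
        victims = []
        # Collect the lowest-priority running jobs that rank strictly below `job`
        for r in sorted(running, key=priority):
            if free >= job.gpus or priority(r) >= priority(job):
                break
            victims.append(r)
            free += r.gpus
        if free < job.gpus:
            continue  # cannot fit even with preemption; keep waiting
        for v in victims:
            # A real system would checkpoint, then requeue or migrate, here
            running.remove(v)
            waiting.append(v)
            preempted.append(v)
        waiting.remove(job)
        running.append(job)
    return preempted
```

Requiring a victim to rank strictly lower than the incoming job avoids thrashing: two jobs whose priorities have converged do not keep preempting each other at every step.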

6. Experiments and Results

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CaaS | Container-as-a-Service |
| BCDI | Balanced-CPU-Disk-IO-Priority |
| DRF | Dominant Resource Fairness |
| LDAP | Lightweight Directory Access Protocol |
| NFS | Network File System |
| FLOPS | Floating-point Operations Per Second |
| NAS | Neural Architecture Search |


| | Default Scheduler | | | | Our Algorithm | | | |
|---|---|---|---|---|---|---|---|---|
| Nodes | 776 | 815 | 847 | 945 | 776 | 815 | 847 | 945 |
| Apps | 382 | 402 | 420 | 413 | 382 | 402 | 420 | 413 |
| Pods | 7423 | 8021 | 8210 | 9241 | 7423 | 8021 | 8210 | 9241 |
| Resource utilization | 69.20% | 57.30% | 67.40% | 59.70% | 91.90% | 84.20% | 89.60% | 87.40% |
| Layout score | 1158 | 1317 | 1295 | 1272 | 1065 | 1174 | 1168 | 1253 |

| | Default Scheduler | | | | Our Algorithm | | | |
|---|---|---|---|---|---|---|---|---|
| Nodes | 46 | 49 | 53 | 60 | 46 | 49 | 53 | 60 |
| Apps | 102 | 106 | 122 | 152 | 102 | 106 | 122 | 152 |
| Pods | 311 | 305 | 364 | 339 | 311 | 305 | 364 | 339 |
| Resource utilization | 60.70% | 57.50% | 65.30% | 61.20% | 85.40% | 79.30% | 81.20% | 83.60% |
| Waiting time | 7.5 h | 6.1 h | 6.3 h | 7.1 h | 2.3 h | 1.6 h | 1.7 h | 2.2 h |
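
The results tables above can be condensed into per-scheduler averages over their four columns. This is plain arithmetic on the reported numbers: it assumes the four columns under each scheduler are paired runs on the same workloads (the identical Nodes, Apps, and Pods rows suggest this), and it leaves the layout score aside because this section does not say whether higher or lower is better.

```python
from statistics import mean

# Values copied from the two results tables (four columns per scheduler)
util_default_1 = [69.2, 57.3, 67.4, 59.7]  # first table, resource utilization %
util_ours_1 = [91.9, 84.2, 89.6, 87.4]
util_default_2 = [60.7, 57.5, 65.3, 61.2]  # second table, resource utilization %
util_ours_2 = [85.4, 79.3, 81.2, 83.6]
wait_default_h = [7.5, 6.1, 6.3, 7.1]      # second table, waiting time in hours
wait_ours_h = [2.3, 1.6, 1.7, 2.2]

def summarize(default, ours, ndigits):
    """Return (mean for default, mean for ours, ours minus default), rounded."""
    d, o = mean(default), mean(ours)
    return round(d, ndigits), round(o, ndigits), round(o - d, ndigits)
```

On these numbers, mean utilization rises from 63.4% to 88.3% in the first table and from 61.2% to 82.4% in the second, while mean waiting time falls from 6.75 h to 1.95 h.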

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Liu, H.; Han, L.; Huang, L.; Wang, K. Research and Implementation of Scheduling Strategy in Kubernetes for Computer Science Laboratory in Universities. Information 2021, 12, 16. https://doi.org/10.3390/info12010016


Chicago/Turabian Style: Wang, Zhe, Hao Liu, Laipeng Han, Lan Huang, and Kangping Wang. 2021. "Research and Implementation of Scheduling Strategy in Kubernetes for Computer Science Laboratory in Universities" Information 12, no. 1: 16. https://doi.org/10.3390/info12010016