A Novel Adaptive LMS Algorithm with Genetic Search Capabilities for System Identification of Adaptive FIR and IIR Filters

Abstract

1. Introduction

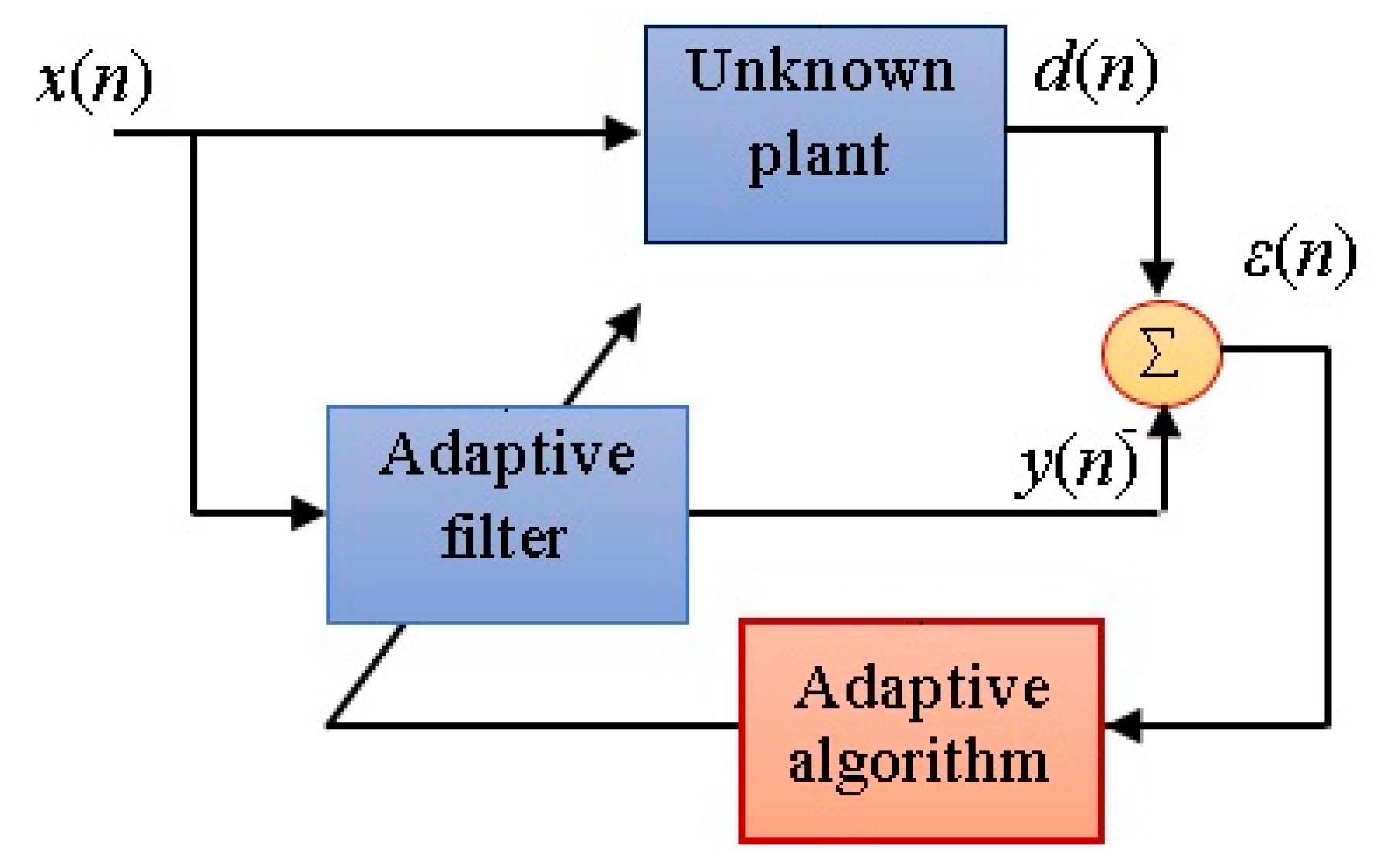

2. System Identification Using Adaptive FIR and IIR Filtering with LMS Algorithm

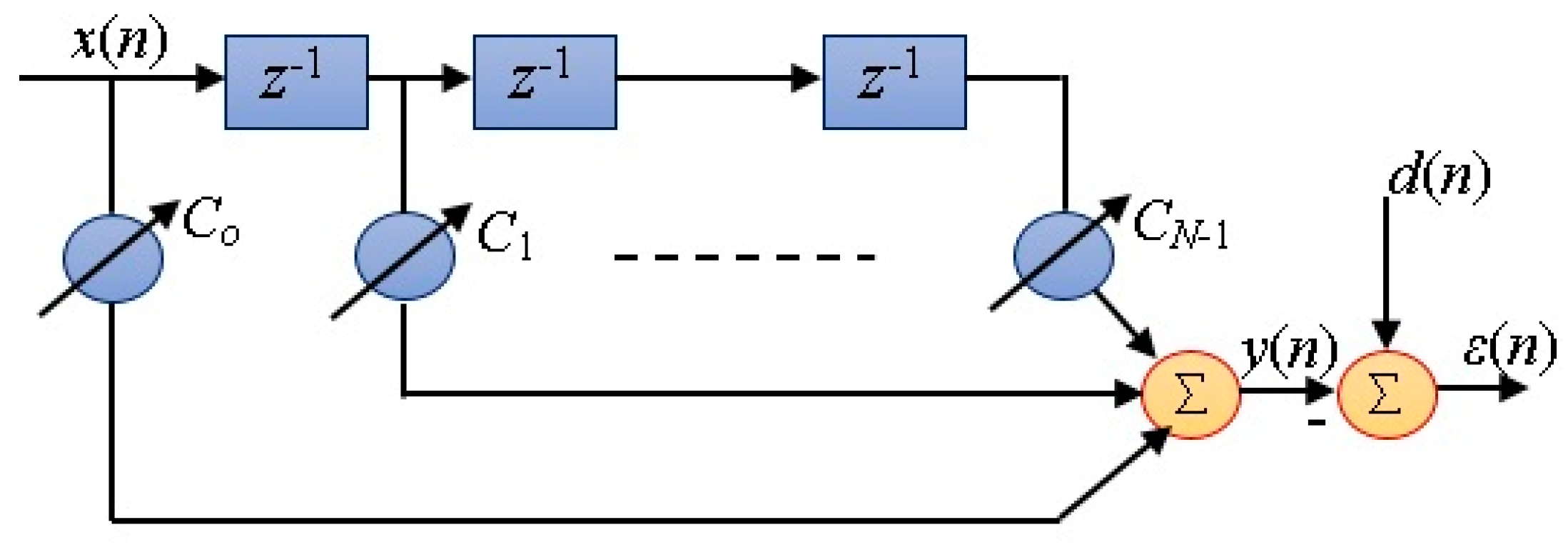

2.1. Preliminaries on Adaptive FIR and IIR Filtering

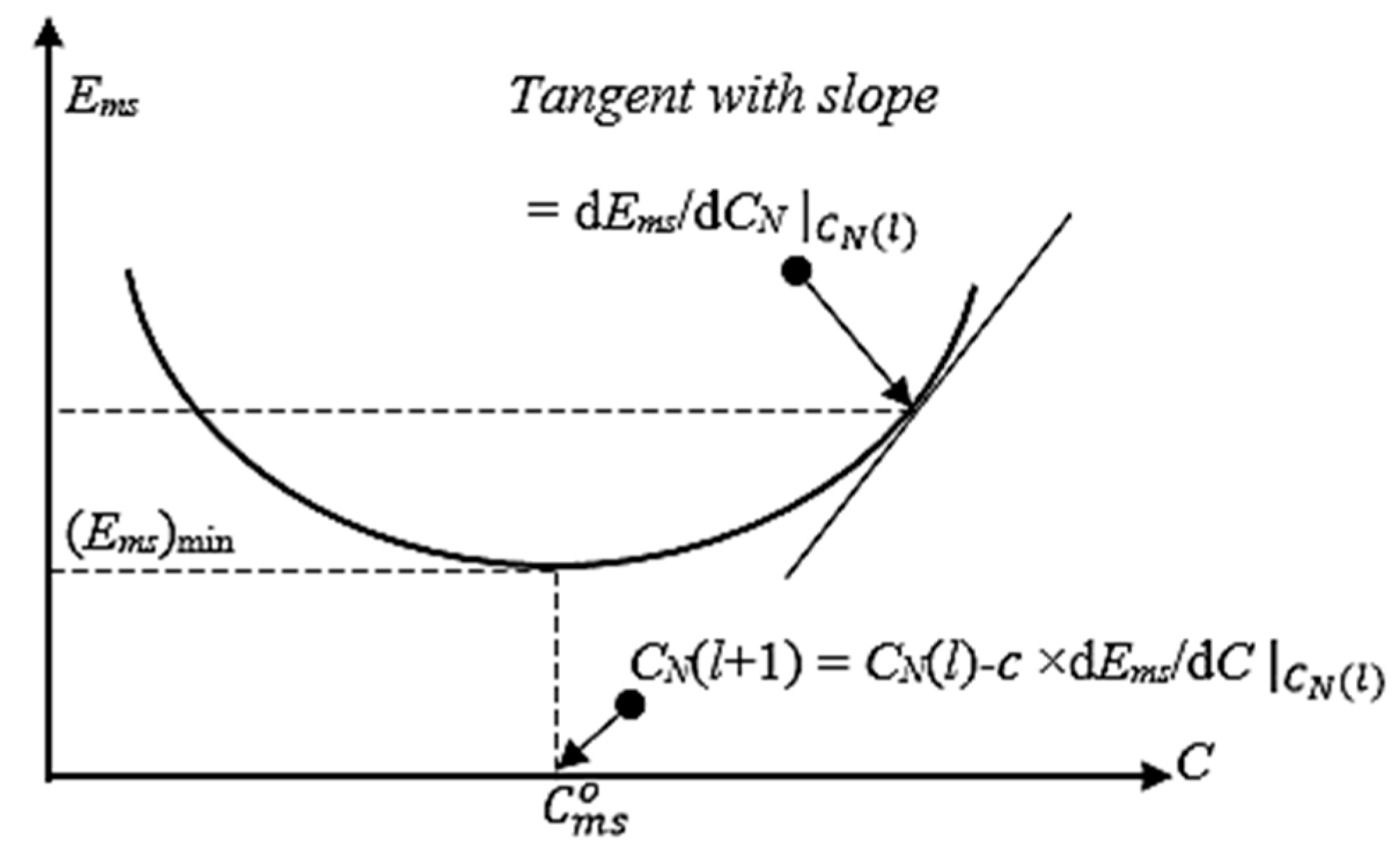

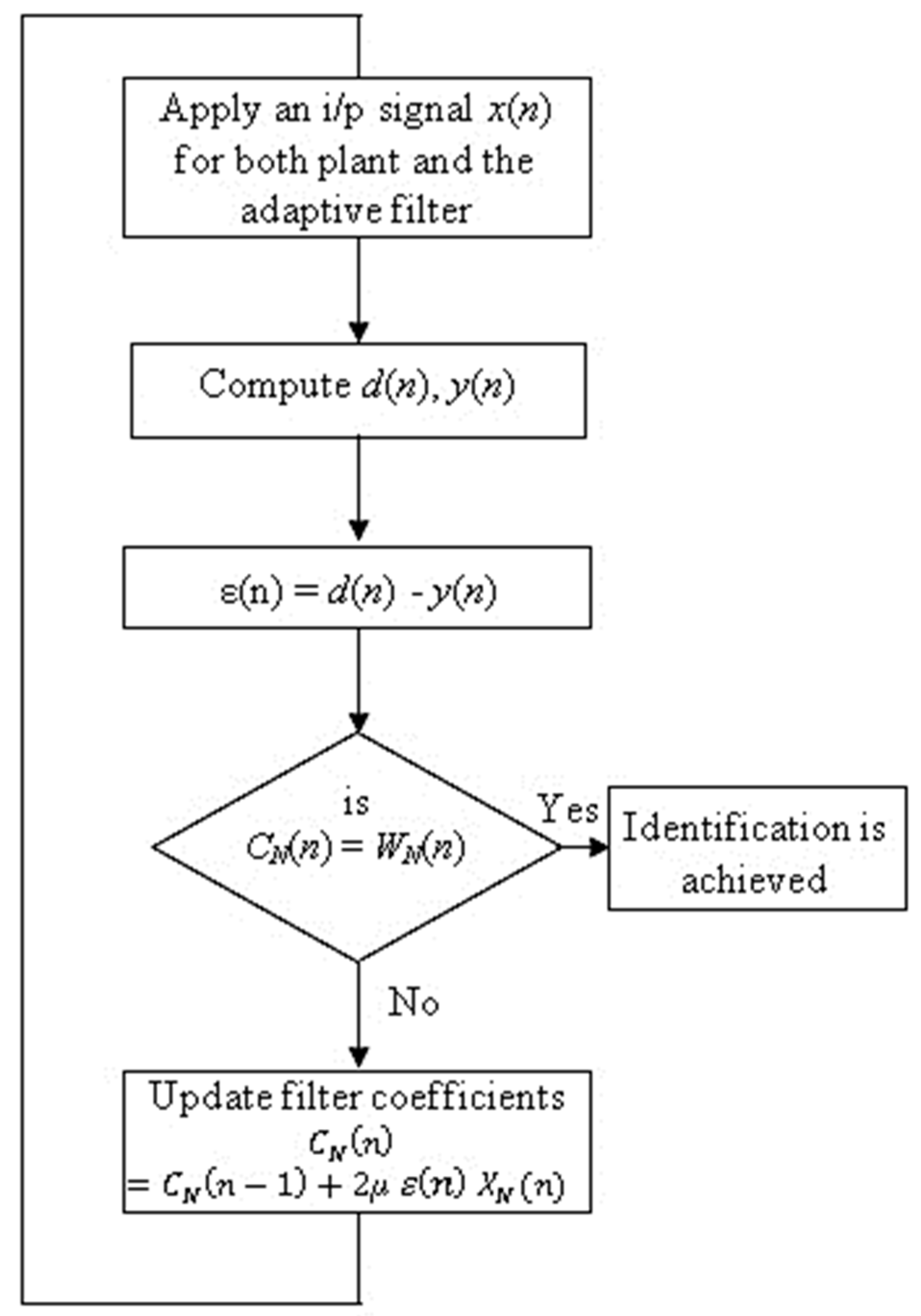

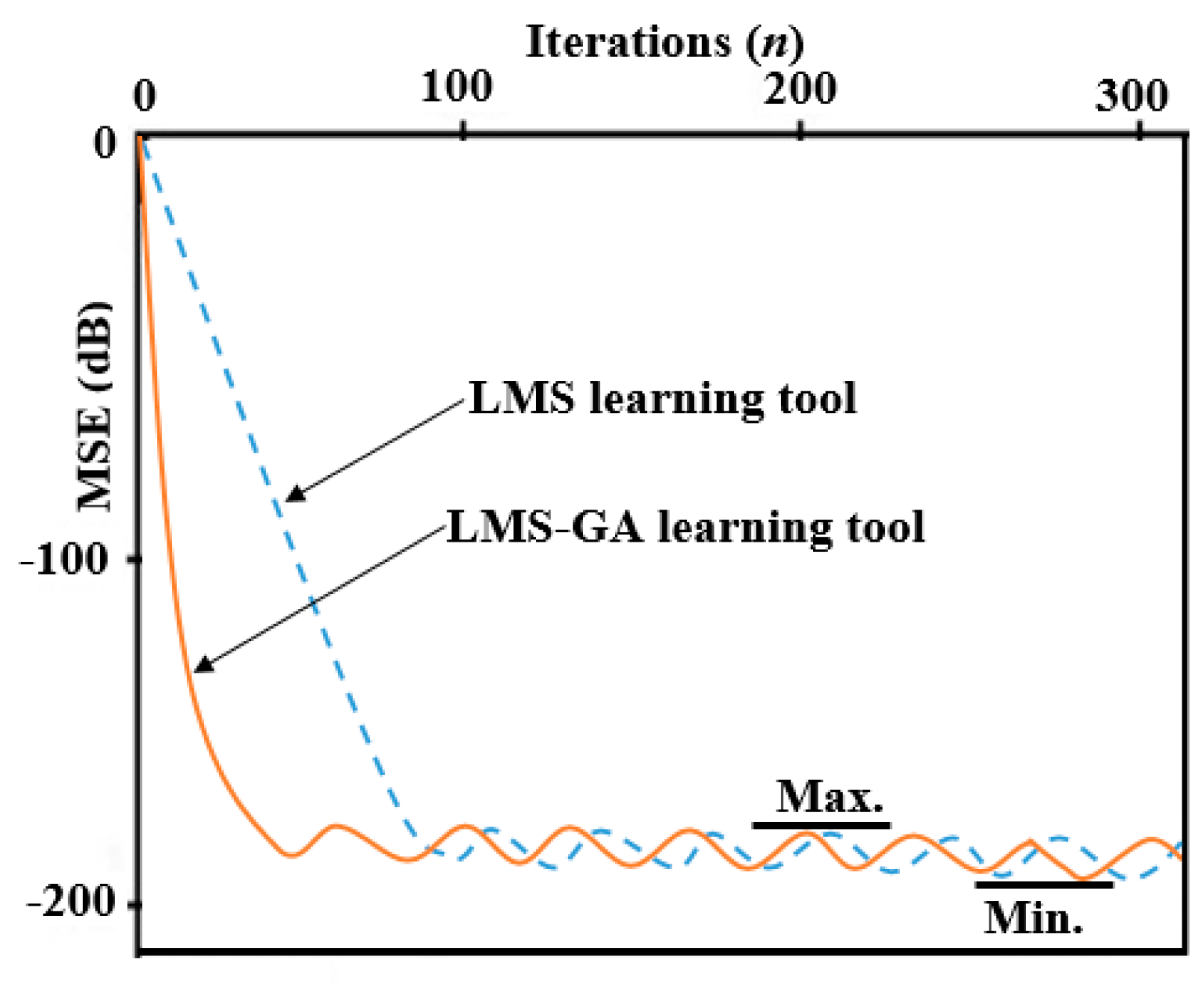
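For reference, the two filter structures compared in this section can be written as difference equations. The symbols below (tap weights w_k, feedforward coefficients b_k, feedback coefficients a_k, orders M and N) and the plus sign on the feedback terms are assumptions chosen for illustration. The paper's own equations appear in the figures and may use different notation. The FIR form has no feedback and is therefore always stable, whereas the IIR form is stable only while its poles stay inside the unit circle.

```latex
% FIR (transversal) adaptive filter with M taps
y(n) = \sum_{k=0}^{M-1} w_k(n)\, x(n-k) = \mathbf{w}^{T}(n)\,\mathbf{x}(n)

% IIR (recursive) adaptive filter; feedback written with a plus sign (assumed convention)
y(n) = \sum_{k=0}^{M-1} b_k(n)\, x(n-k) + \sum_{k=1}^{N} a_k(n)\, y(n-k)

% Error signal used for adaptation in system identification
e(n) = d(n) - y(n)
```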

2.2. The LMS Algorithm and Adaptive FIR Filtering

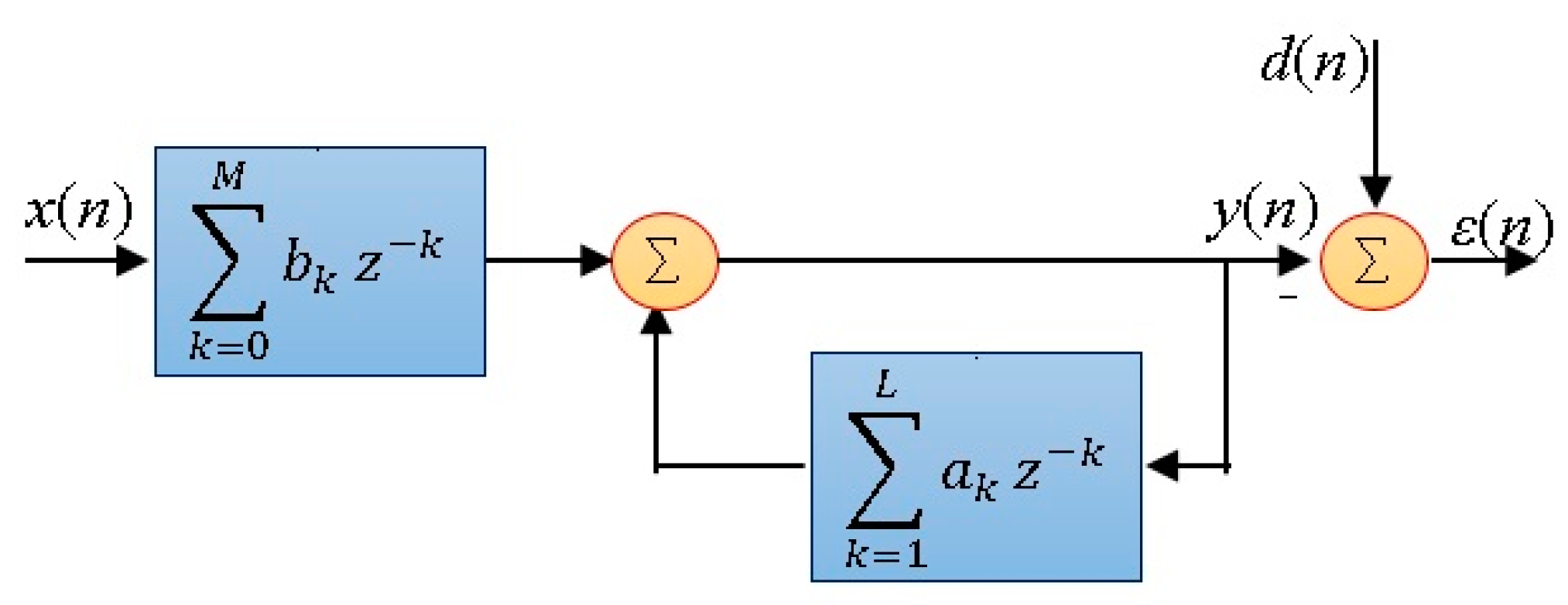
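The result tables report a 4-tap FIR system with coefficients 0.03, 0.24, 0.54 and 0.8. A minimal sketch of LMS system identification for such a plant is shown below. It assumes white Gaussian input, no measurement noise, the update form w(n+1) = w(n) + µe(n)x(n) (some texts write 2µ), and a step size of 0.05. These are illustrative choices, not the paper's simulation settings, and the function name `lms_identify` is hypothetical.

```python
import numpy as np


def lms_identify(x, d, taps, mu):
    """Adapt an FIR filter so its output tracks d(n) for input x(n).

    Update rule assumed here: w(n+1) = w(n) + mu * e(n) * x(n).
    Returns the final weights and the error sequence e(n) = d(n) - y(n).
    """
    w = np.zeros(taps)
    regressor = np.zeros(taps)              # [x(n), x(n-1), ..., x(n-taps+1)]
    errors = np.empty(len(x))
    for n in range(len(x)):
        regressor = np.roll(regressor, 1)   # shift past samples one slot back
        regressor[0] = x[n]                 # newest sample in front
        y = w @ regressor                   # adaptive filter output
        e = d[n] - y                        # identification error
        w = w + mu * e * regressor          # LMS (Widrow-Hoff) update
        errors[n] = e
    return w, errors


# Unknown 4-tap FIR "plant" (coefficient values taken from the result tables)
rng = np.random.default_rng(0)
x = rng.standard_normal(2000)               # white input signal
h = np.array([0.03, 0.24, 0.54, 0.8])
d = np.convolve(x, h)[: len(x)]             # plant output with zero initial state
w, errors = lms_identify(x, d, taps=4, mu=0.05)
print(np.round(w, 4))
```

With a noise-free plant the weights converge to the plant coefficients; µ must stay well below roughly 2 divided by the total input power across the taps for the recursion to remain stable.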

2.3. Adaptation of IIR Digital Filter Based on LMS Algorithm

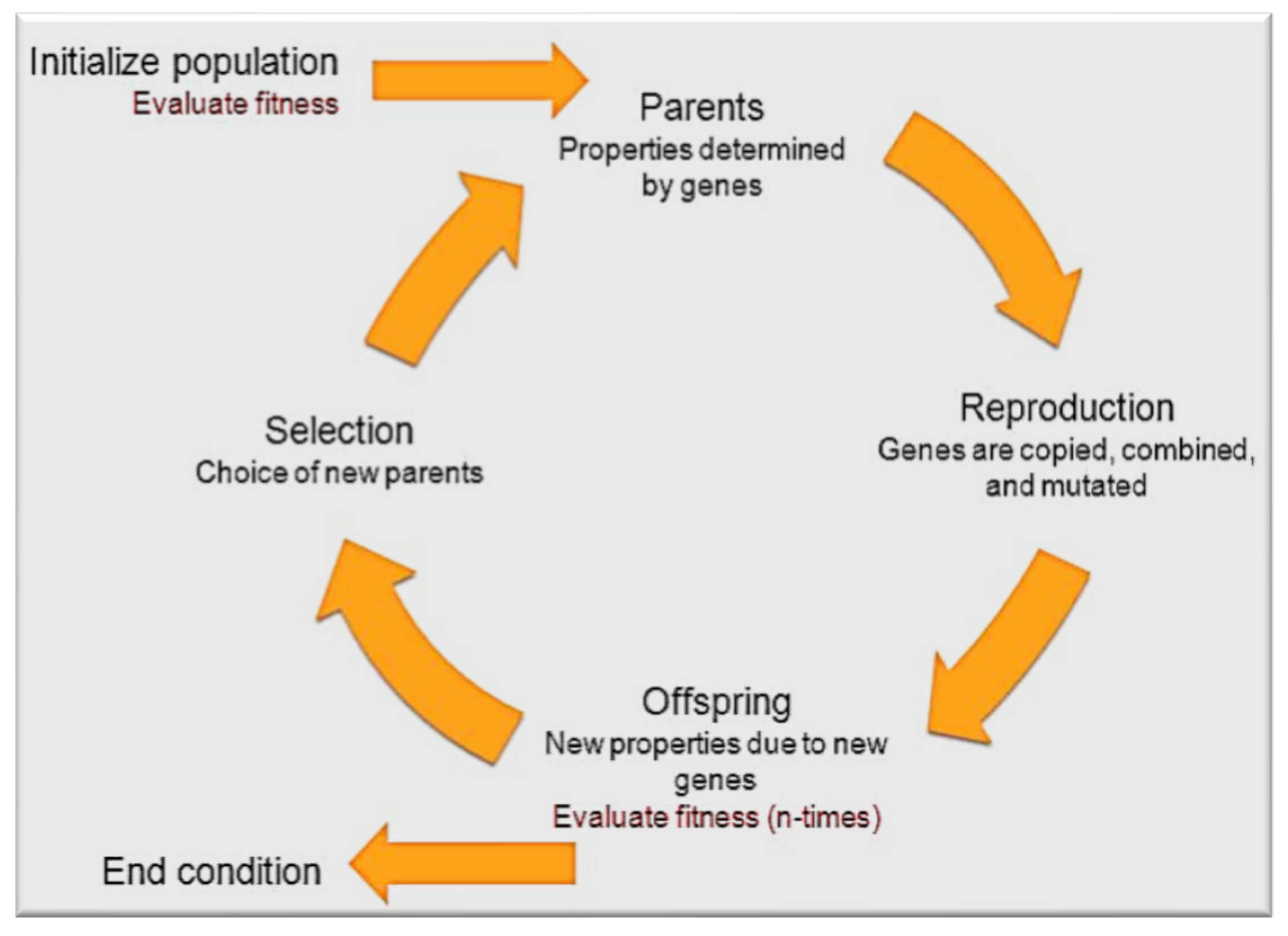

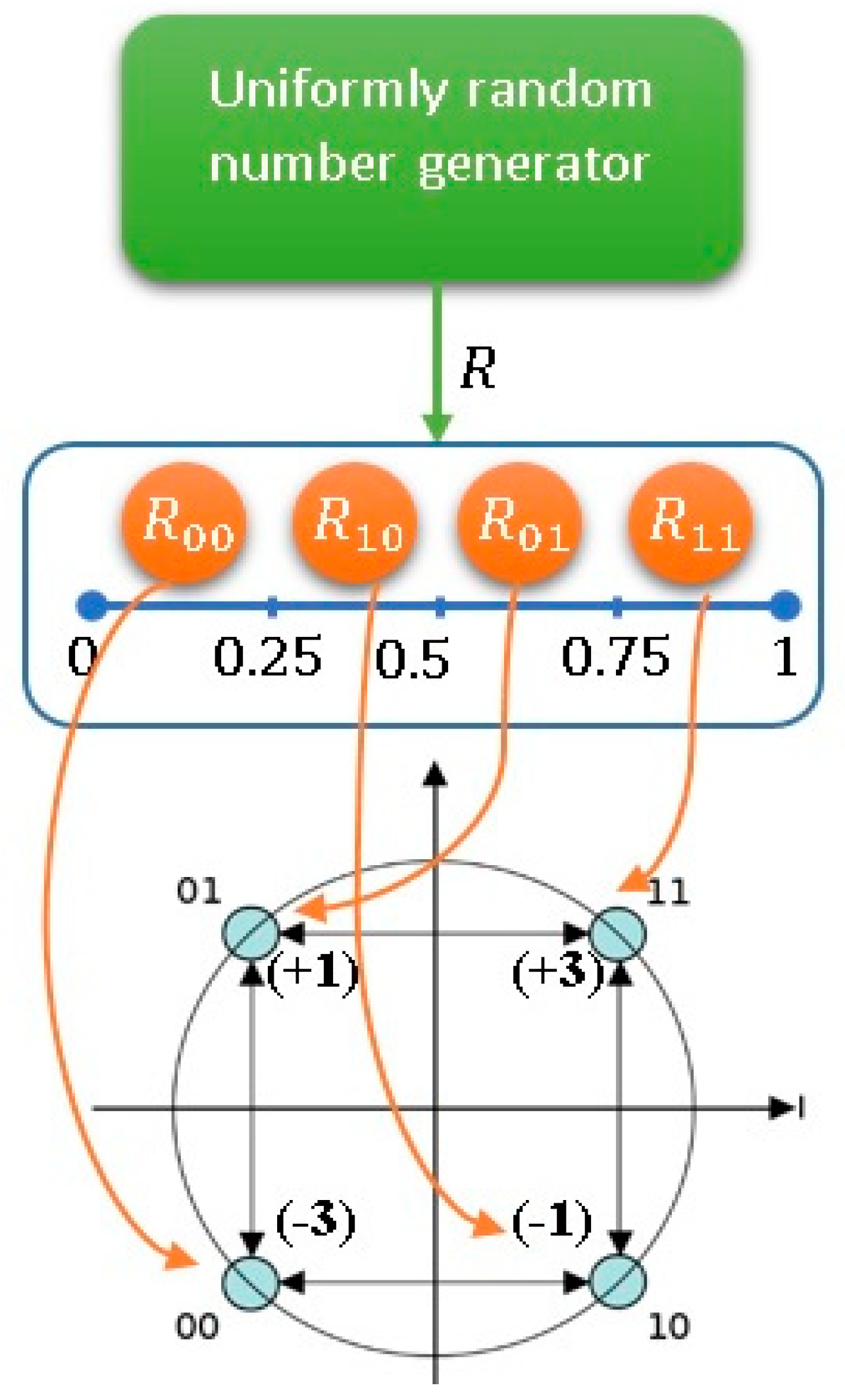
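One common way to apply LMS to an IIR filter is the output-error form with recursively filtered gradients, sketched below for a first-order section. The model y(n) = a·y(n-1) + b·x(n), i.e. H(z) = b/(1 - a z⁻¹), is an assumption about the structure behind the coefficients a = −0.2 and b = 0.6 in the IIR result table. The function names, step size, white-noise input, and pole clamp are also illustrative assumptions, and the paper's exact IIR update may differ.

```python
import numpy as np


def plant(x, a0=-0.2, b0=0.6):
    """Hypothetical unknown system d(n) = a0*d(n-1) + b0*x(n)."""
    d = np.zeros_like(x)
    prev = 0.0
    for n in range(len(x)):
        prev = a0 * prev + b0 * x[n]
        d[n] = prev
    return d


def iir_lms_first_order(x, d, mu, a_max=0.99):
    """Output-error LMS for y(n) = a*y(n-1) + b*x(n).

    alpha = dy/da and beta = dy/db are filtered recursively because
    y(n-1) itself depends on the coefficients being adapted.
    """
    a = b = 0.0
    y_prev = alpha = beta = 0.0
    for n in range(len(x)):
        y = a * y_prev + b * x[n]             # adaptive IIR output
        e = d[n] - y                          # output error
        alpha = y_prev + a * alpha            # recursive gradient w.r.t. a
        beta = x[n] + a * beta                # recursive gradient w.r.t. b
        a += mu * e * alpha
        b += mu * e * beta
        a = float(np.clip(a, -a_max, a_max))  # keep the pole inside the unit circle
        y_prev = y
    return a, b


rng = np.random.default_rng(1)
x = rng.standard_normal(10000)
a_hat, b_hat = iir_lms_first_order(x, plant(x), mu=0.02)
print(round(a_hat, 4), round(b_hat, 4))
```

For this matched first-order case the mean-squared output error has a single minimum at the true (a, b). Higher-order IIR error surfaces can be multimodal, which is the usual motivation for adding a global search such as a GA.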

3. Evolutionary Computation: The Genetic Algorithm (GA)
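As an illustration of genetic search applied to system identification, the sketch below evolves the coefficient vector of a 4-tap FIR model so that its output matches an unknown plant. The fitness is the block mean-squared error. The operators (tournament selection of size 3, arithmetic crossover, Gaussian mutation with a shrinking step, single-individual elitism), population size, generation count, and helper names `delay_matrix` and `ga_identify` are illustrative assumptions. Together they form a generic real-coded GA, not the GA configuration used in the paper.

```python
import numpy as np


def delay_matrix(x, taps):
    """Rows are regressors [x(n), x(n-1), ..., x(n-taps+1)], zero initial state."""
    n = len(x)
    return np.column_stack([np.concatenate([np.zeros(k), x[: n - k]]) for k in range(taps)])


def ga_identify(x, d, taps, pop_size=60, generations=300, bounds=(-1.0, 1.0), seed=0):
    rng = np.random.default_rng(seed)
    X = delay_matrix(x, taps)
    lo, hi = bounds
    pop = rng.uniform(lo, hi, size=(pop_size, taps))

    def cost(p):
        # Block MSE of every candidate at once (lower is better)
        return np.mean((d[:, None] - X @ p.T) ** 2, axis=0)

    for g in range(generations):
        c = cost(pop)
        elite = pop[np.argmin(c)].copy()
        # Tournament selection: best of 3 random individuals per slot
        idx = rng.integers(0, pop_size, size=(pop_size, 3))
        parents = pop[idx[np.arange(pop_size), np.argmin(c[idx], axis=1)]]
        # Arithmetic crossover with a randomly permuted mate
        mates = parents[rng.permutation(pop_size)]
        alpha = rng.uniform(0.0, 1.0, size=(pop_size, 1))
        children = alpha * parents + (1.0 - alpha) * mates
        # Gaussian mutation on ~20% of genes, step shrinking over generations
        sigma = 0.2 * (1.0 - g / generations) + 0.005
        mask = rng.random(children.shape) < 0.2
        children = np.clip(children + mask * rng.normal(0.0, sigma, children.shape), lo, hi)
        children[0] = elite                   # elitism: best survives unchanged
        pop = children

    c = cost(pop)
    return pop[np.argmin(c)], float(c.min())


rng = np.random.default_rng(1)
x = rng.standard_normal(500)
h = np.array([0.03, 0.24, 0.54, 0.8])         # plant from the result tables
d = delay_matrix(x, 4) @ h
w, mse = ga_identify(x, d, taps=4)
print(np.round(w, 3), mse)
```

Unlike LMS, this loop uses no gradient, so it applies unchanged to multimodal cost surfaces. The trade-off is that every generation re-evaluates the whole population over a data block.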

4. Proposed Adaptive System Identification for FIR and IIR Digital Filters

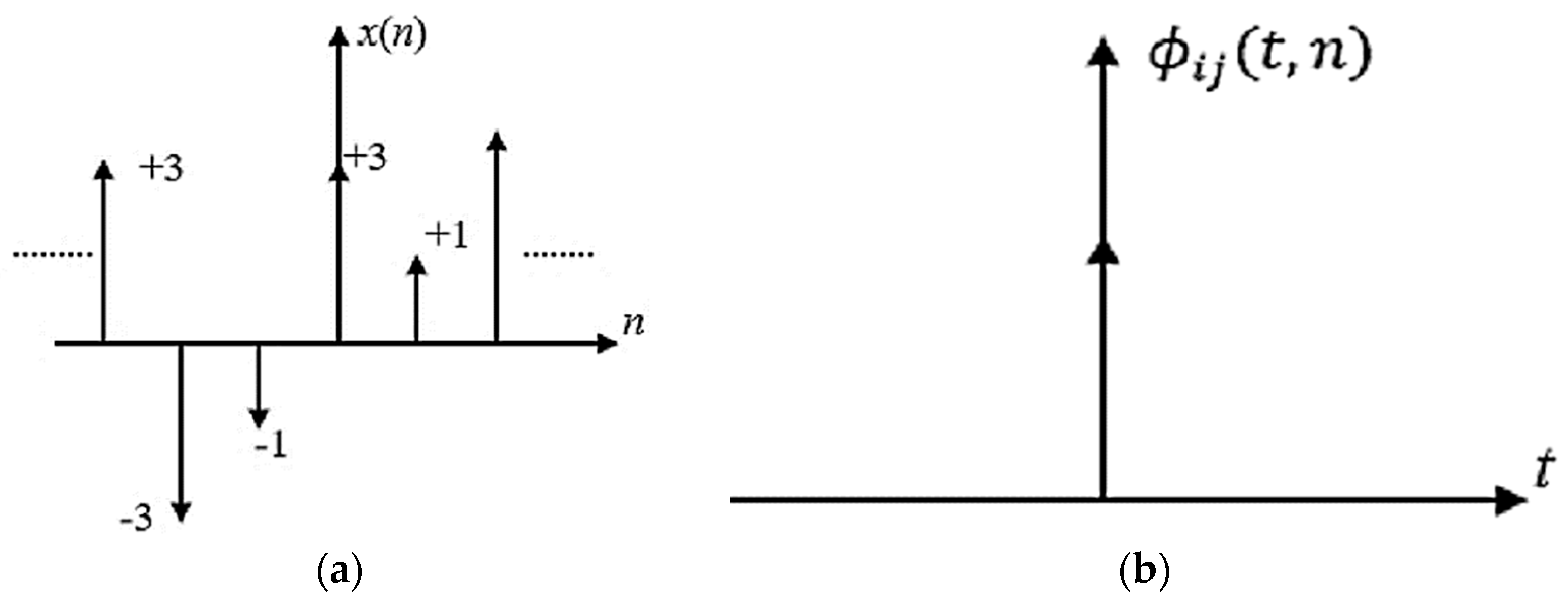

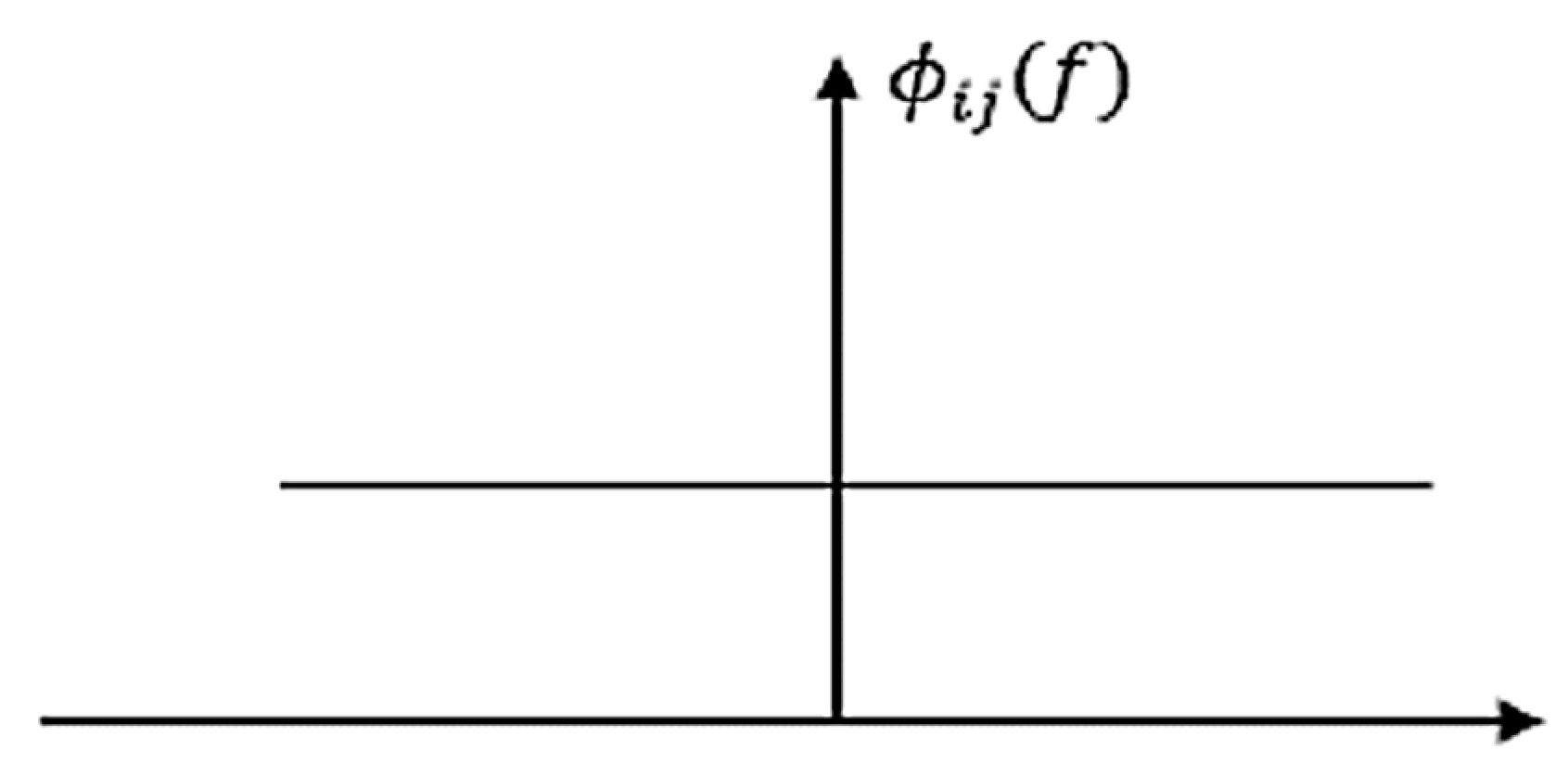

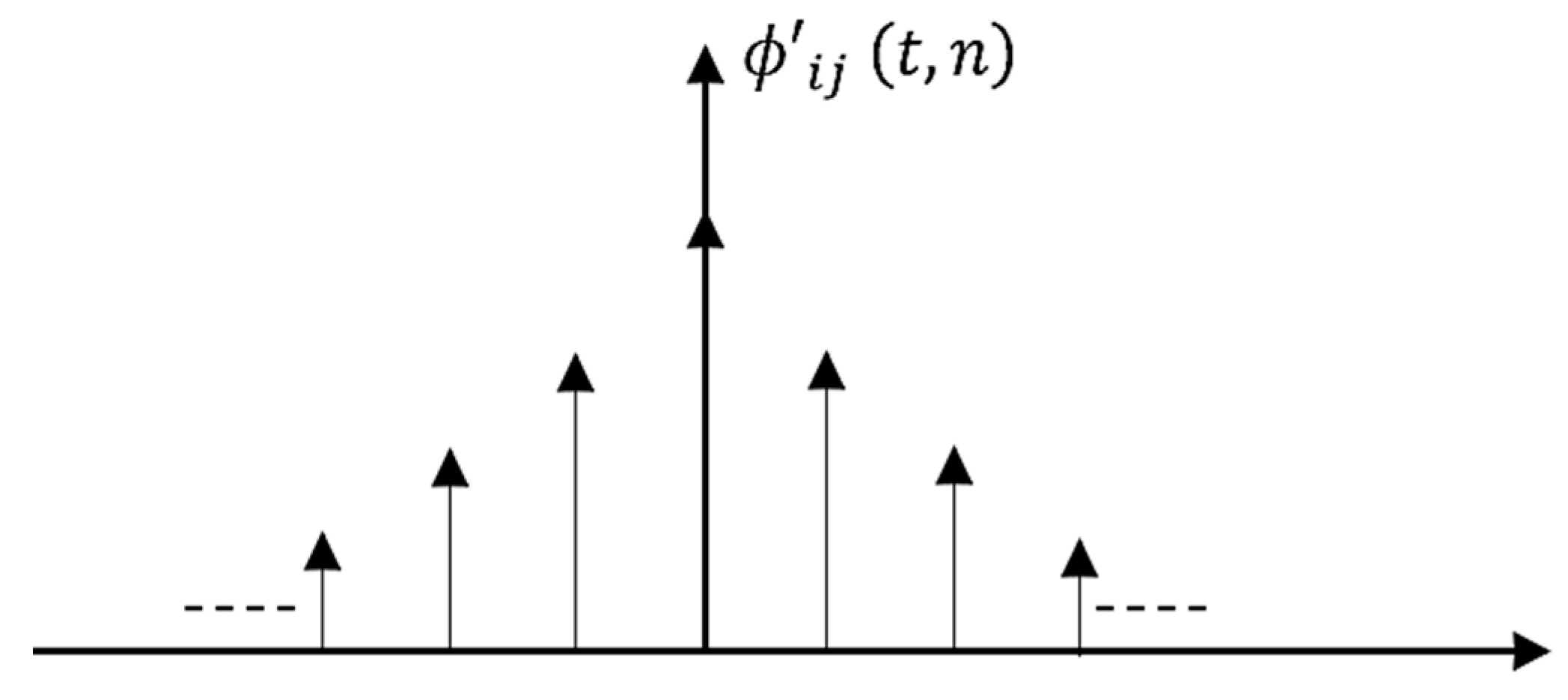

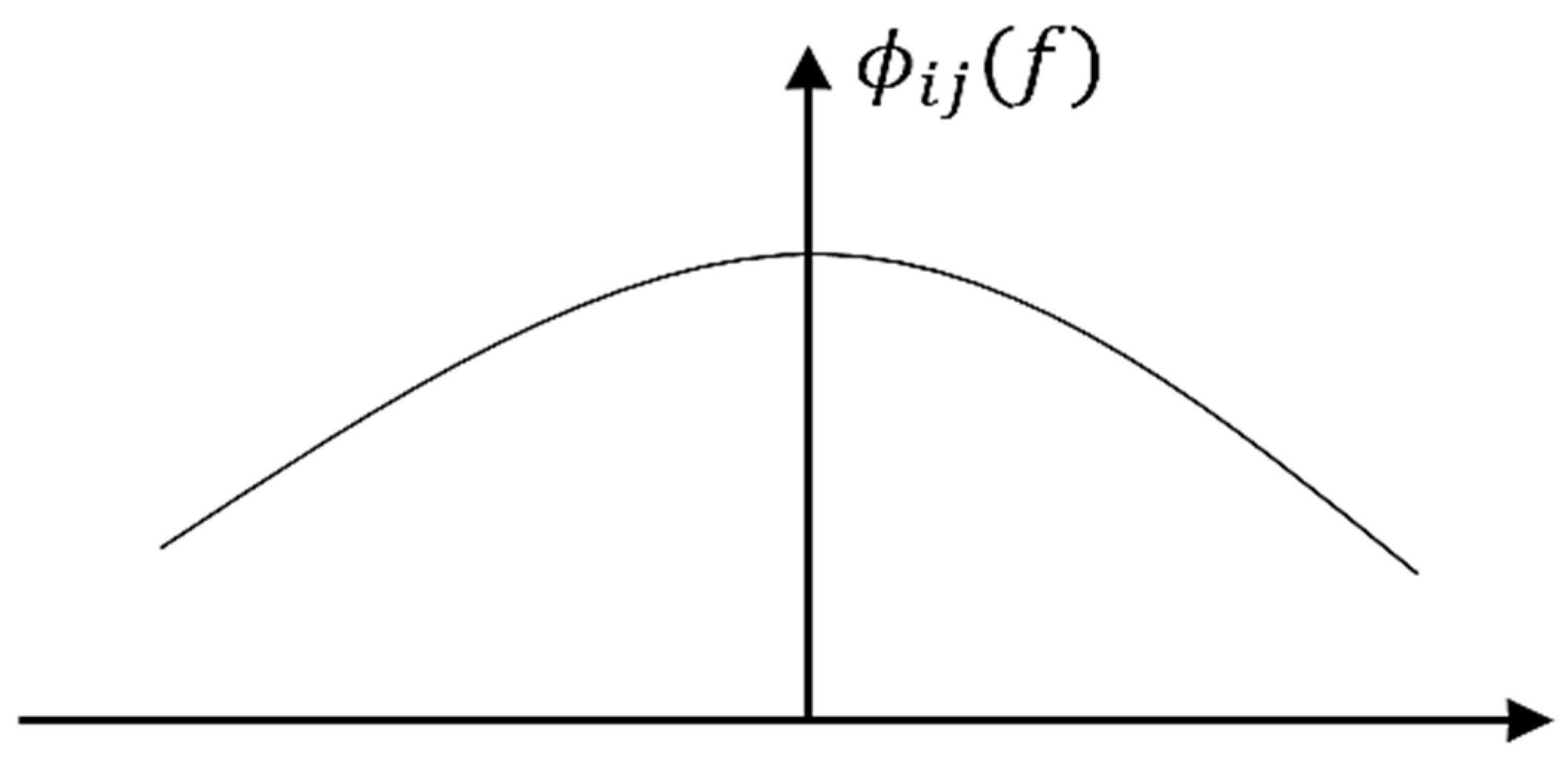

4.1. The Effect of Colored Signals on the Adaptation Process of the LMS Algorithm

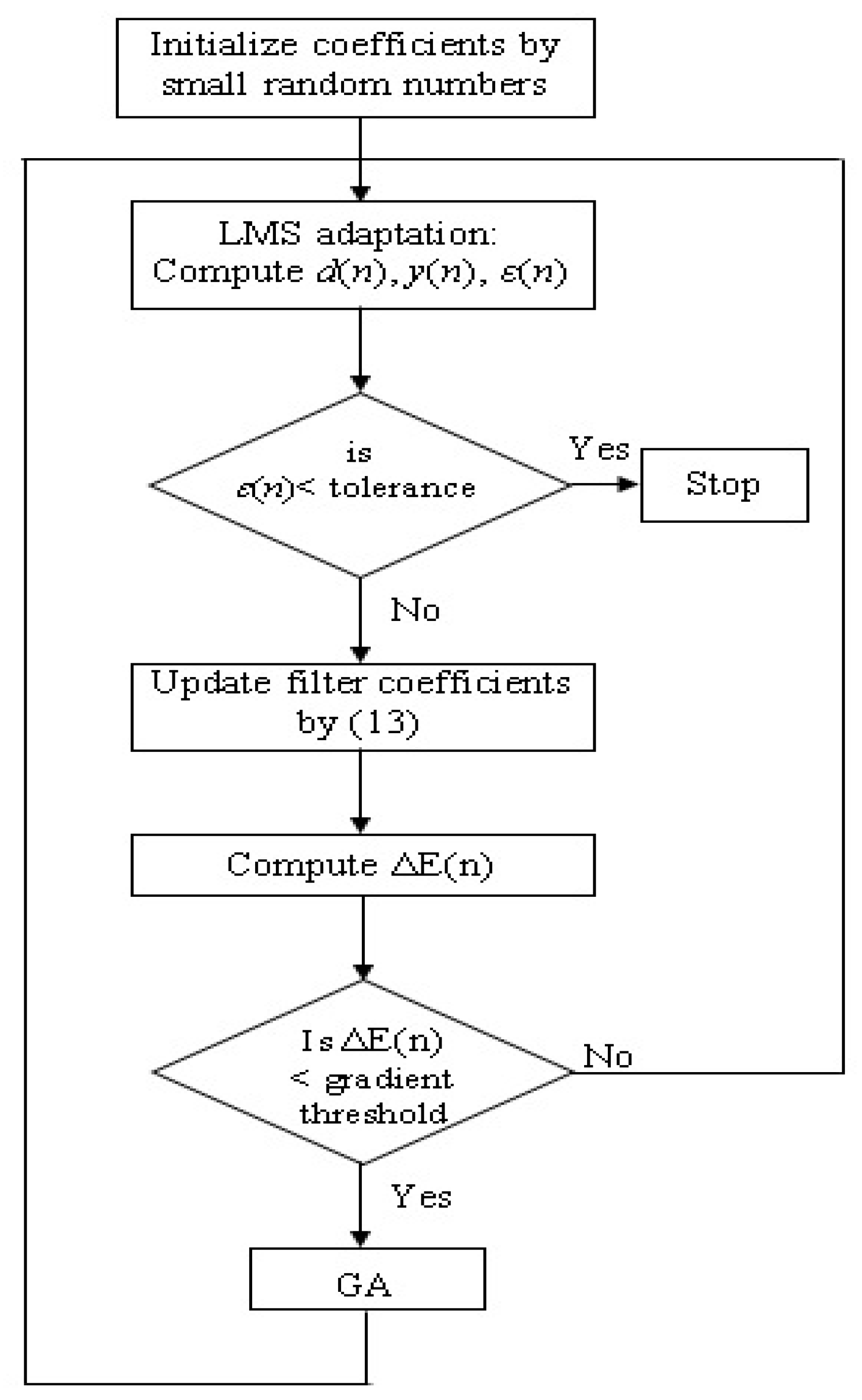
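Standard LMS analysis ties convergence speed to the eigenvalues λᵢ of the input autocorrelation matrix R. Each mode of the mean weight error decays roughly as (1 − µλᵢ)ⁿ, so the slowest mode is set by λ_min while stability is limited by λ_max. A colored (correlated) input widens the spread λ_max/λ_min, which slows adaptation. The sketch below quantifies this for a unit-power AR(1) input x(n) = ρx(n−1) + v(n). The AR(1) coloring model, the update convention w(n+1) = w(n) + µe(n)x(n), and the function names are assumptions; the paper may color its input differently.

```python
import numpy as np


def ar1_autocorrelation(rho, taps):
    """R[i, j] = rho**|i - j| for a unit-power AR(1) input."""
    lags = np.abs(np.subtract.outer(np.arange(taps), np.arange(taps)))
    return rho ** lags


def lms_convergence_summary(rho, taps, mu):
    """Eigenvalue spread of R and the slowest mean-weight time constant 1/(mu*lambda_min)."""
    eig = np.linalg.eigvalsh(ar1_autocorrelation(rho, taps))
    spread = eig.max() / eig.min()
    slowest_tau = 1.0 / (mu * eig.min())
    return spread, slowest_tau


for rho in (0.0, 0.5, 0.9):
    spread, tau = lms_convergence_summary(rho, taps=4, mu=0.01)
    print(f"rho={rho}: eigenvalue spread={spread:.1f}, slowest time constant ~{tau:.0f} samples")
```

With white input (ρ = 0), R is the identity and every mode converges at the same rate. As ρ grows, λ_min shrinks and the slowest mode lengthens even though the input power is unchanged.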

4.2. The Integrated LMS Algorithm with Genetic Search Approach (LMS-GA)

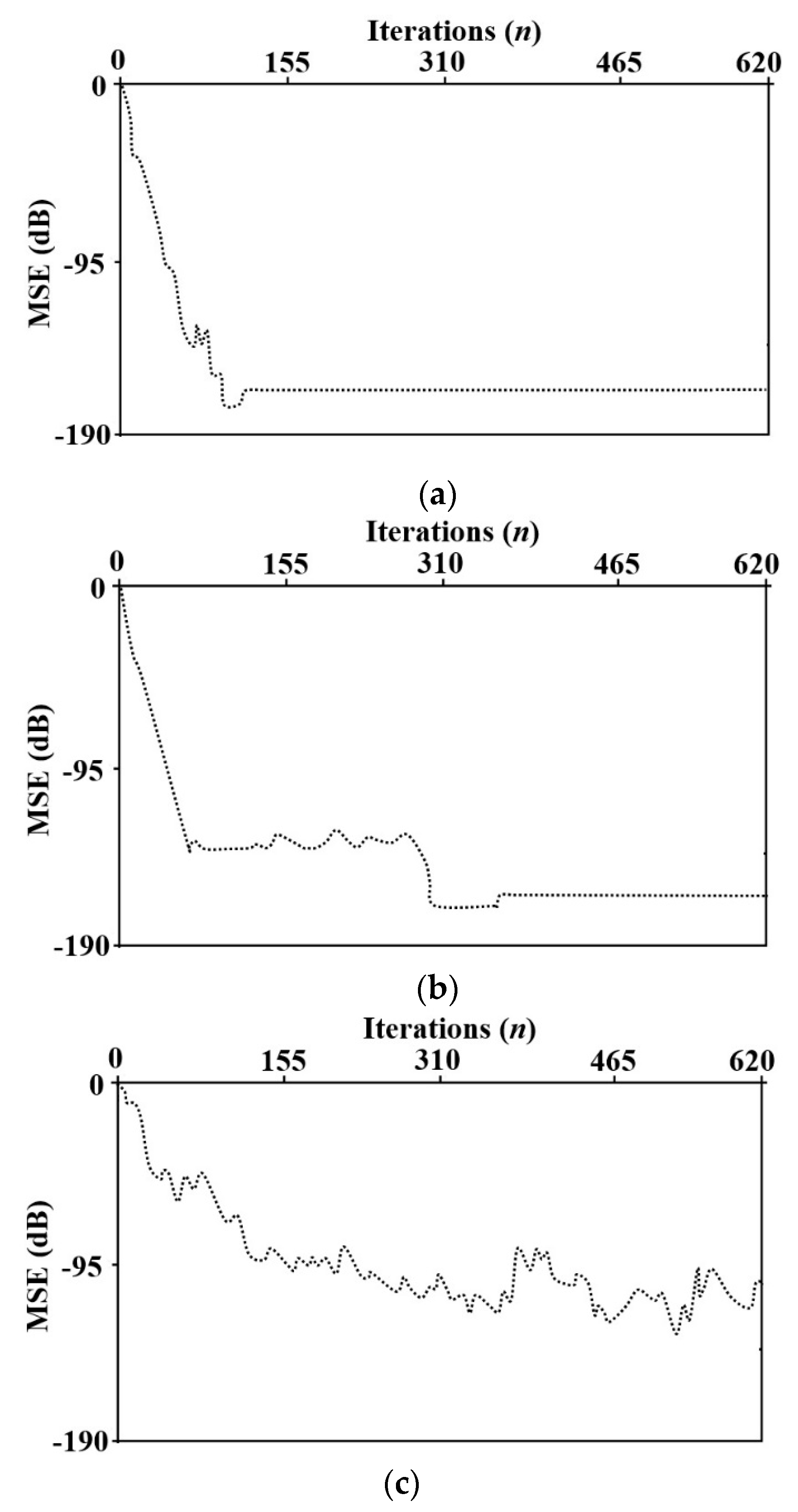

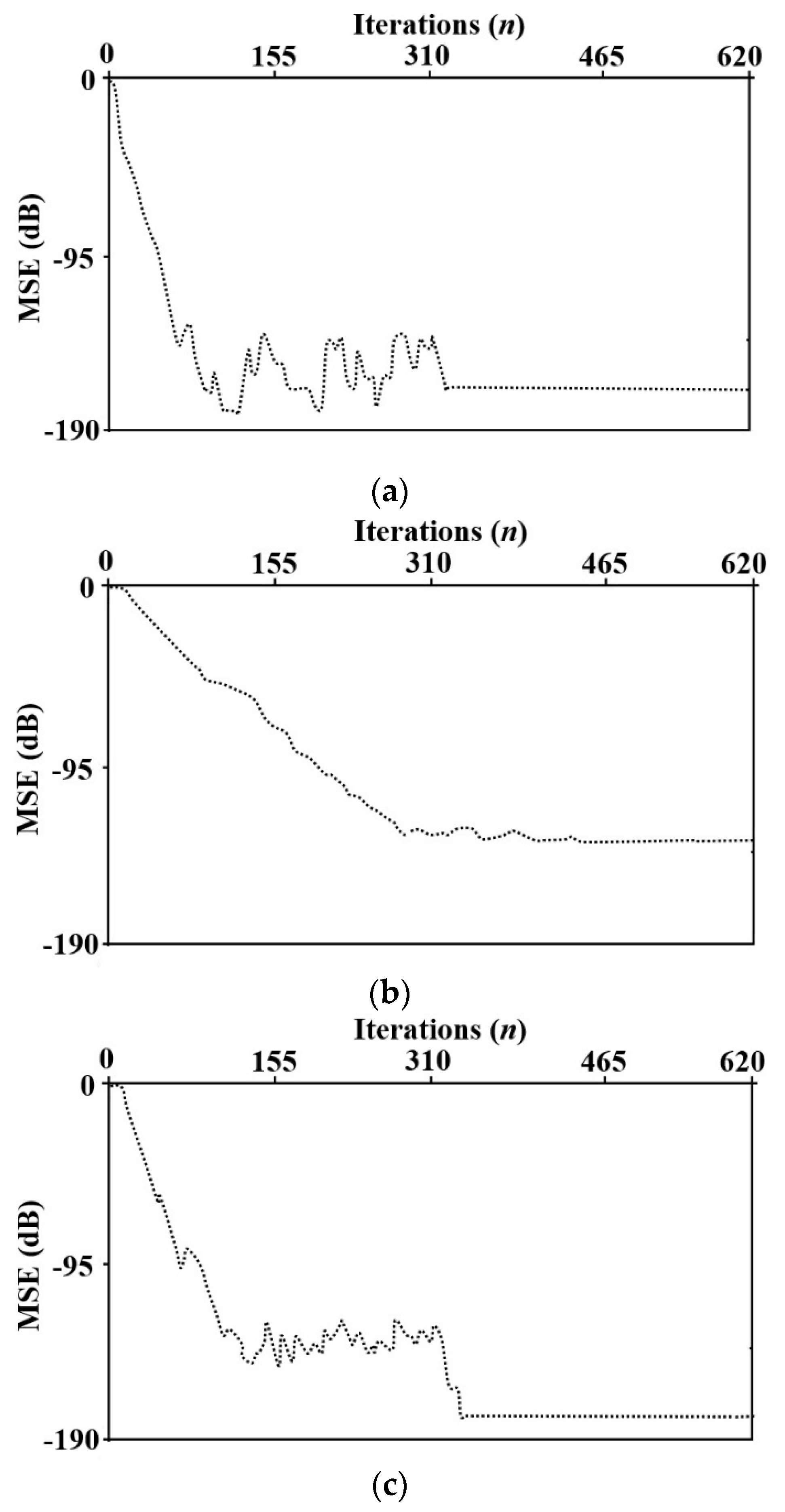

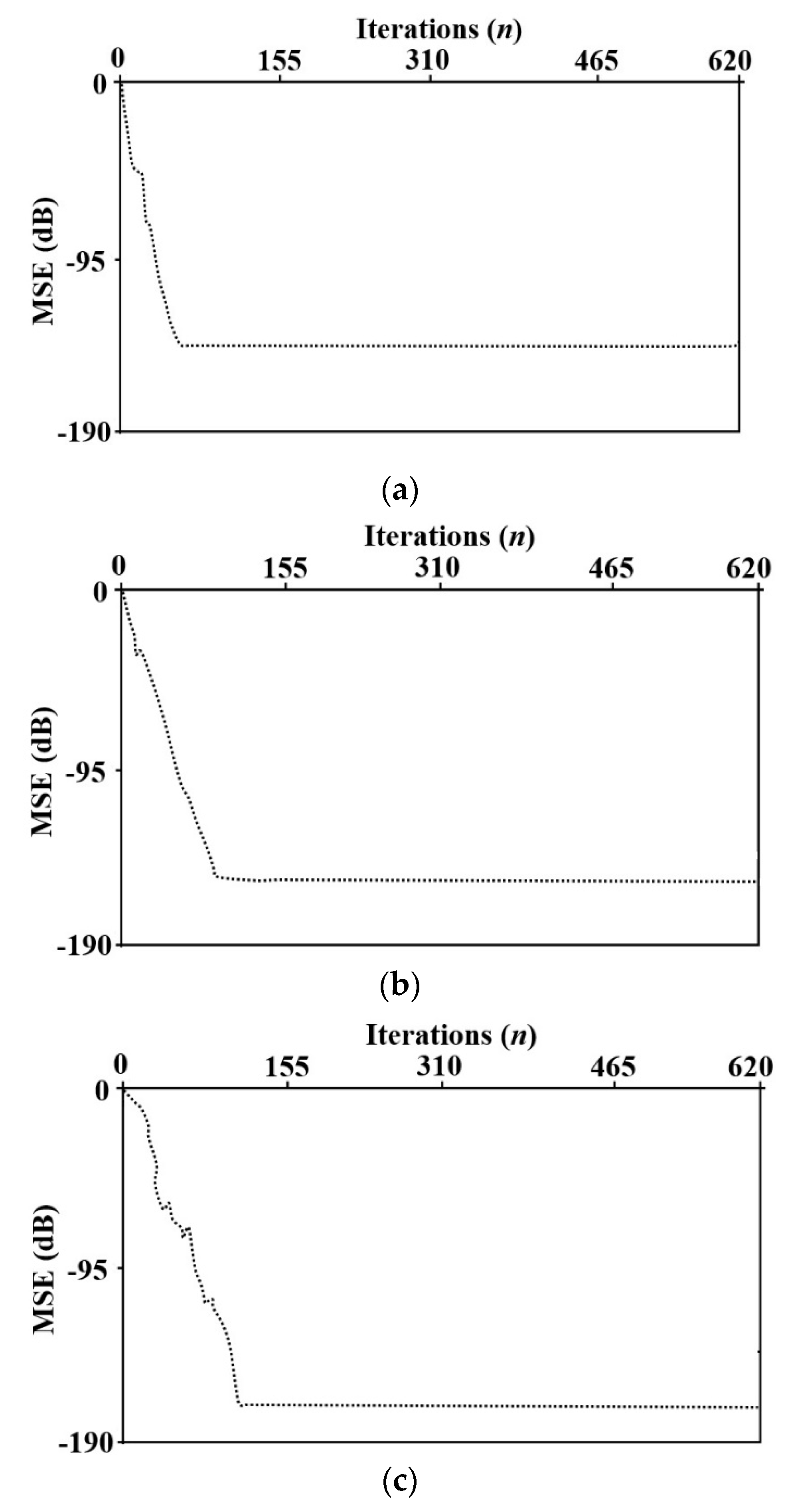

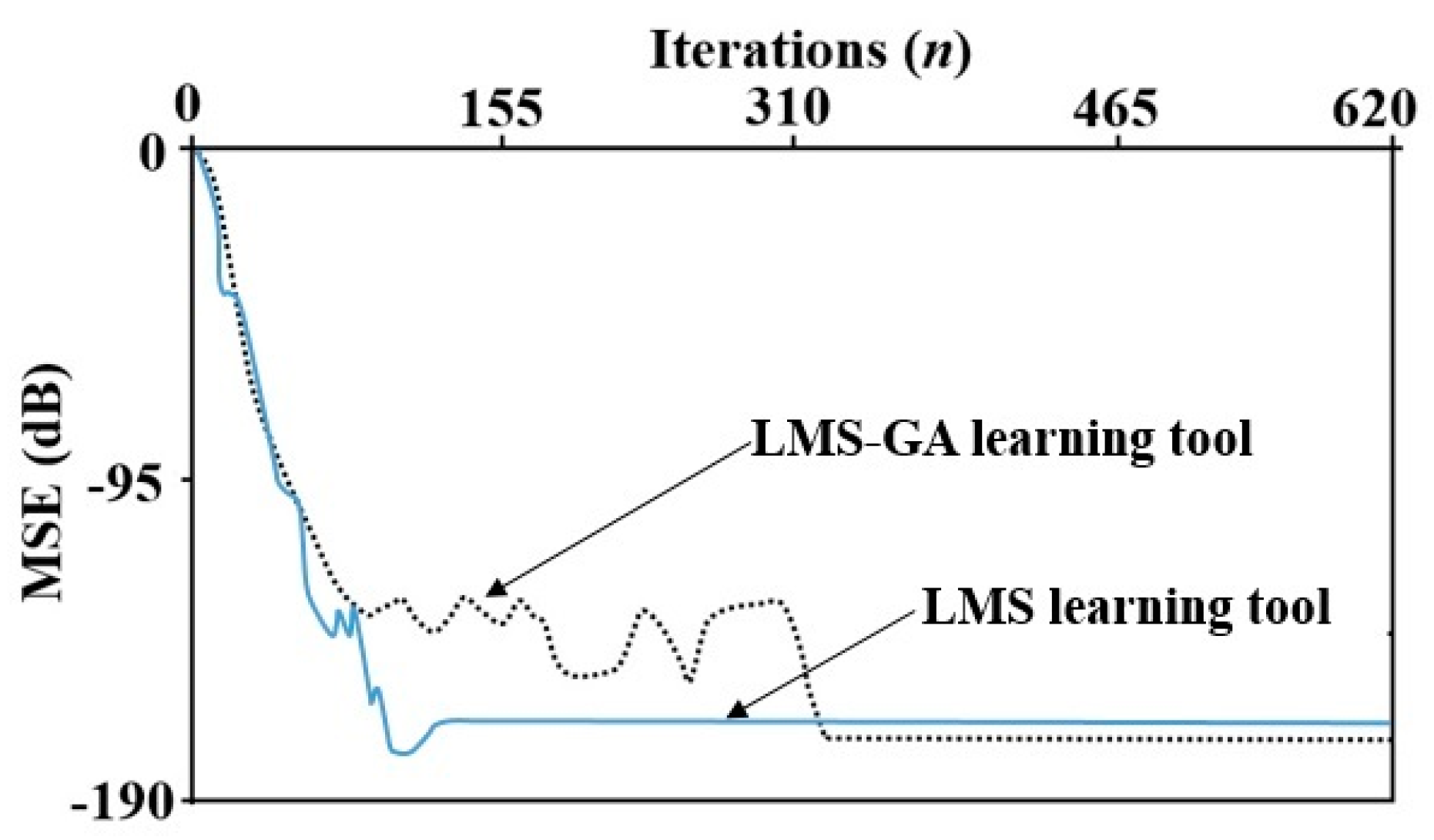

5. Numerical Results and Simulations

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Step Size µ | No. of Iterations | MSE (dB) | Coefficient C1 | Coefficient C2 | Coefficient C3 | Coefficient C4 |
|---|---|---|---|---|---|---|
| 0.04 | 399 | −167.200 | 0.03 | 0.24 | 0.54 | 0.8 |
| 0.045 | 130 | −166.250 | 0.03 | 0.24 | 0.54 | 0.8 |
| 0.095 | 1911 | −166.278 | 0.03 | 0.24 | 0.54 | 0.8 |

| Step Size µ | No. of Iterations | MSE (dB) | Coefficient C1 | Coefficient C2 | Coefficient C3 | Coefficient C4 |
|---|---|---|---|---|---|---|
| 0.9 | 2320 | −135.591 | 0.03 | 0.24 | 0.54 | 0.8 |
| 3 | 358 | −163.131 | 0.03 | 0.24 | 0.54 | 0.8 |
| 4 | 362 | −173.604 | 0.03 | 0.24 | 0.54 | 0.8 |

| Step Size µ | No. of Iterations | MSE (dB) | Coefficient a | Coefficient b |
|---|---|---|---|---|
| 0.04 | 142 | −157.373 | −0.2 | 0.6 |
| 0.06 | 63 | −143.939 | −0.2 | 0.6 |
| 0.1 | 134 | −174.030 | −0.2 | 0.6 |

| | Standard LMS Algorithm with Optimum µ | LMS-GA with Optimum µ |
|---|---|---|
| No. of Iterations | 130 | 336 |
| MSE (dB) | −166.250 | −173.604 |
| Filter Coefficient C1 | 0.03 | 0.03 |
| Filter Coefficient C2 | 0.24 | 0.24 |
| Filter Coefficient C3 | 0.54 | 0.54 |
| Filter Coefficient C4 | 0.8 | 0.8 |
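The MSE columns are reported in decibels. Assuming the usual power convention MSE_dB = 10·log10(MSE) (the tables do not state the reference explicitly), the small sketch below converts the two optimum-µ results back to linear MSE and computes their ratio. The helper names are hypothetical.

```python
import math


def mse_to_db(mse):
    """Linear mean-squared error to decibels (10*log10 power convention)."""
    return 10.0 * math.log10(mse)


def db_to_mse(mse_db):
    """Decibels back to linear mean-squared error."""
    return 10.0 ** (mse_db / 10.0)


standard_lms_db = -166.250   # standard LMS with optimum step size (comparison table)
lms_ga_db = -173.604         # LMS-GA with optimum step size (comparison table)

ratio = db_to_mse(lms_ga_db) / db_to_mse(standard_lms_db)
print(f"standard LMS MSE = {db_to_mse(standard_lms_db):.3e}")
print(f"LMS-GA MSE       = {db_to_mse(lms_ga_db):.3e}")
print(f"linear ratio     = {ratio:.3f}")
```

A 7.354 dB gap corresponds to a linear ratio of 10^(−0.7354), so under this convention the LMS-GA final MSE is roughly one fifth of the standard LMS value.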

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Humaidi, A.J.; Kasim Ibraheem, I.; Ajel, A.R. A Novel Adaptive LMS Algorithm with Genetic Search Capabilities for System Identification of Adaptive FIR and IIR Filters. Information 2019, 10, 176. https://doi.org/10.3390/info10050176
