A Computational Study of Executive Dysfunction in Amyotrophic Lateral Sclerosis

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Computerized Wisconsin Card Sorting Test

2.3. Error Analysis

2.4. Computational Modeling

3. Results

3.1. Error Analysis

3.2. Computational Modeling

4. Discussion

4.1. Implications for Neuropsychological Sequelae of ALS

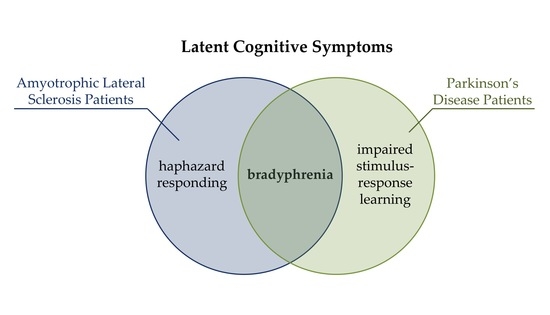

4.2. Computational Modeling Provides Nosologically Specific Indicators of Executive Dysfunctions

4.3. Implications for Neuropsychological Assessment

4.4. Study Limitations and Directions for Future Research

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Variable | Maximum | HC (N = 21) Mean | HC SD | HC n | ALS (N = 18) Mean | ALS SD | ALS n | Bayes Factor |
|---|---|---|---|---|---|---|---|---|
| Age (years) | | 57.67 | 9.16 | 21 | 58.11 | 10.05 | 18 | 0.32 |
| Female [%] | | 22% | | | 29% | | | 0.91 # |
| Education (years) | | 14.29 | 2.93 | 21 | 13.92 | 2.16 | 18 | 0.34 |
| Disease Duration (months) | | | | | 50.47 | 82.93 | 17 | |
| FVC | | | | | 85.75 | 12.84 | 16 | |
| ESS | 24 | | | | 6.12 | 3.06 | 17 | |
| ALSFRS-EX: total | 60 | | | | 47.41 | 6.99 | 17 | |
| Bulbar Subscore | 16 | | | | 13.94 | 1.89 | 17 | |
| Fine Motor Subscore | 16 | | | | 11.41 | 2.87 | 17 | |
| Gross Motor Subscore | 16 | | | | 11.12 | 5.02 | 17 | |
| Respiratory Subscore | 16 | | | | 10.94 | 1.71 | 17 | |
| Progression Rate | 16 | | | | 0.71 | 0.57 | 17 | |
| MoCA | 30 | 27.24 | 2.68 | 21 | 26.72 | 3.18 | 18 | 0.35 |
| FAB | 18 | 17.19 | 1.25 | 21 | 16.50 | 2.19 | 16 | 0.57 |
| ECAS: total | 136 | 104.38 | 11.58 | 21 | 103.89 | 12.19 | 18 | 0.32 |
| ECAS: ALS-Specific | 100 | 75.48 | 10.63 | 21 | 75.89 | 10.26 | 18 | 0.40 |
| Language | 28 | 26.62 | 1.94 | 21 | 25.94 | 2.13 | 18 | 0.48 |
| Fluency | 24 | 11.52 | 5.83 | 21 | 10.89 | 4.13 | 18 | 0.33 |
| Executive | 48 | 37.33 | 4.50 | 21 | 39.06 | 5.92 | 18 | 0.48 |
| ECAS: ALS-Nonspecific | 36 | 28.86 | 3.07 | 21 | 28.00 | 3.66 | 18 | 0.31 |
| Memory | 24 | 17.24 | 2.83 | 21 | 16.44 | 3.54 | 18 | 0.40 |
| Visuospatial | 12 | 11.62 | 0.74 | 21 | 11.56 | 0.62 | 18 | 0.32 |

Appendix B

References

- Duncan, J.; Emslie, H.; Williams, P.; Johnson, R.; Freer, C. Intelligence and the frontal lobe: The organization of goal-directed behavior. Cogn. Psychol. 1996, 30, 257–303.

- Grafman, J.; Litvan, I. Importance of deficits in executive functions. Lancet 1999, 354, 1921–1923.

- MacPherson, S.E.; Gillebert, C.R.; Robinson, G.A.; Vallesi, A. Editorial: Intra- and inter-individual variability of executive functions: Determinant and modulating factors in healthy and pathological conditions. Front. Psychol. 2019, 10, 432.

- Diamond, A. Executive functions. Annu. Rev. Psychol. 2013, 64, 135–168.

- Miller, E.K.; Cohen, J.D. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 2001, 24, 167–202.

- Berg, E.A. A simple objective technique for measuring flexibility in thinking. J. Gen. Psychol. 1948, 39, 15–22.

- Grant, D.A.; Berg, E.A. A behavioral analysis of degree of reinforcement and ease of shifting to new responses in a Weigl-type card-sorting problem. J. Exp. Psychol. 1948, 38, 404–411.

- Heaton, R.K.; Chelune, G.J.; Talley, J.L.; Kay, G.G.; Curtiss, G. Wisconsin Card Sorting Test Manual: Revised and Expanded; Psychological Assessment Resources Inc.: Odessa, FL, USA, 1993.

- MacPherson, S.E.; Sala, S.D.; Cox, S.R.; Girardi, A.; Iveson, M.H. Handbook of Frontal Lobe Assessment; Oxford University Press: New York, NY, USA, 2015.

- Lezak, M.D.; Howieson, D.B.; Bigler, E.D.; Tranel, D. Neuropsychological Assessment, 5th ed.; Oxford University Press: New York, NY, USA, 2012.

- Strauss, E.; Sherman, E.M.S.; Spreen, O. A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary; Oxford University Press: New York, NY, USA, 2006; ISBN 9780195159578.

- Lange, F.; Seer, C.; Kopp, B. Cognitive flexibility in neurological disorders: Cognitive components and event-related potentials. Neurosci. Biobehav. Rev. 2017, 83, 496–507.

- Barceló, F. The Madrid card sorting test (MCST): A task switching paradigm to study executive attention with event-related potentials. Brain Res. Protoc. 2003, 11, 27–37.

- Lange, F.; Kröger, B.; Steinke, A.; Seer, C.; Dengler, R.; Kopp, B. Decomposing card-sorting performance: Effects of working memory load and age-related changes. Neuropsychology 2016, 30, 579–590.

- Lange, F.; Vogts, M.-B.; Seer, C.; Fürkötter, S.; Abdulla, S.; Dengler, R.; Kopp, B.; Petri, S. Impaired set-shifting in amyotrophic lateral sclerosis: An event-related potential study of executive function. Neuropsychology 2016, 30, 120–134.

- Kopp, B.; Maldonado, N.; Scheffels, J.F.; Hendel, M.; Lange, F. A meta-analysis of relationships between measures of Wisconsin card sorting and intelligence. Brain Sci. 2019, 9, 349.

- Beeldman, E.; Raaphorst, J.; Twennaar, M.; de Visser, M.; Schmand, B.A.; de Haan, R.J. The cognitive profile of ALS: A systematic review and meta-analysis update. J. Neurol. Neurosurg. Psychiatry 2016, 87, 611–619.

- Lange, F.; Brückner, C.; Knebel, A.; Seer, C.; Kopp, B. Executive dysfunction in Parkinson’s disease: A meta-analysis on the Wisconsin Card Sorting Test literature. Neurosci. Biobehav. Rev. 2018, 93, 38–56.

- Binetti, G.; Magni, E.; Padovani, A.; Cappa, S.F.; Bianchetti, A.; Trabucchi, M. Executive dysfunction in early Alzheimer’s disease. J. Neurol. Neurosurg. Psychiatry 1996, 60, 91–93.

- Crawford, J.R.; Blackmore, L.M.; Lamb, A.E.; Simpson, S.A. Is there a differential deficit in fronto-executive functioning in Huntington’s Disease? Clin. Neuropsychol. Assess. 2000, 1, 4–20.

- Wijesekera, L.C.; Leigh, P.N. Amyotrophic lateral sclerosis. Orphanet J. Rare Dis. 2009, 4, 3.

- Abrahams, S.; Goldstein, L.H.; Kew, J.J.M.; Brooks, D.J.; Lloyd, C.M.; Frith, C.D.; Leigh, P.N. Frontal lobe dysfunction in amyotrophic lateral sclerosis. Brain 1996, 119, 2105–2120.

- Kew, J.J.M.; Goldstein, L.H.; Leigh, P.N.; Abrahams, S.; Cosgrave, N.; Passingham, R.E.; Frackowiak, R.S.J.; Brooks, D.J. The relationship between abnormalities of cognitive function and cerebral activation in amyotrophic lateral sclerosis: A neuropsychological and positron emission tomography study. Brain 1993, 116, 1399–1423.

- Pettit, L.D.; Bastin, M.E.; Smith, C.; Bak, T.H.; Gillingwater, T.H.; Abrahams, S. Executive deficits, not processing speed relates to abnormalities in distinct prefrontal tracts in amyotrophic lateral sclerosis. Brain 2013, 136, 3290–3304.

- Tsermentseli, S.; Leigh, P.N.; Goldstein, L.H. The anatomy of cognitive impairment in amyotrophic lateral sclerosis: More than frontal lobe dysfunction. Cortex 2012, 48, 166–182.

- Goldstein, L.H.; Abrahams, S. Changes in cognition and behaviour in amyotrophic lateral sclerosis: Nature of impairment and implications for assessment. Lancet Neurol. 2013, 12, 368–380.

- Ringholz, G.M.; Appel, S.H.; Bradshaw, M.; Cooke, N.A.; Mosnik, D.M.; Schulz, P.E. Prevalence and patterns of cognitive impairment in sporadic ALS. Neurology 2005, 65, 586–590.

- Phukan, J.; Elamin, M.; Bede, P.; Jordan, N.; Gallagher, L.; Byrne, S.; Lynch, C.; Pender, N.; Hardiman, O. The syndrome of cognitive impairment in amyotrophic lateral sclerosis: A population-based study. J. Neurol. Neurosurg. Psychiatry 2012, 83, 102–108.

- Hawkes, C.H.; Del Tredici, K.; Braak, H. A timeline for Parkinson’s disease. Parkinsonism Relat. Disord. 2010, 16, 79–84.

- Braak, H.; Del Tredici, K. Nervous system pathology in sporadic Parkinson disease. Neurology 2008, 70, 1916–1925.

- Demakis, G.J. A meta-analytic review of the sensitivity of the Wisconsin Card Sorting Test to frontal and lateralized frontal brain damage. Neuropsychology 2003, 17, 255–264.

- Lange, F.; Seer, C.; Müller-Vahl, K.; Kopp, B. Cognitive flexibility and its electrophysiological correlates in Gilles de la Tourette syndrome. Dev. Cogn. Neurosci. 2017, 27, 78–90.

- Lange, F.; Seer, C.; Salchow, C.; Dengler, R.; Dressler, D.; Kopp, B. Meta-analytical and electrophysiological evidence for executive dysfunction in primary dystonia. Cortex 2016, 82, 133–146.

- Roberts, M.E.; Tchanturia, K.; Stahl, D.; Southgate, L.; Treasure, J. A systematic review and meta-analysis of set-shifting ability in eating disorders. Psychol. Med. 2007, 37, 1075–1084.

- Romine, C. Wisconsin Card Sorting Test with children: A meta-analytic study of sensitivity and specificity. Arch. Clin. Neuropsychol. 2004, 19, 1027–1041.

- Shin, N.Y.; Lee, T.Y.; Kim, E.; Kwon, J.S. Cognitive functioning in obsessive-compulsive disorder: A meta-analysis. Psychol. Med. 2014, 44, 1121–1130.

- Snyder, H.R. Major depressive disorder is associated with broad impairments on neuropsychological measures of executive function: A meta-analysis and review. Psychol. Bull. 2013, 139, 81–132.

- Bosia, M.; Buonocore, M.; Guglielmino, C.; Pirovano, A.; Lorenzi, C.; Marcone, A.; Bramanti, P.; Cappa, S.F.; Aguglia, E.; Smeraldi, E.; et al. Saitohin polymorphism and executive dysfunction in schizophrenia. Neurol. Sci. 2012, 33, 1051–1056.

- Roca, M.; Parr, A.; Thompson, R.; Woolgar, A.; Torralva, T.; Antoun, N.; Manes, F.; Duncan, J. Executive function and fluid intelligence after frontal lobe lesions. Brain 2010, 133, 234–247.

- Steinke, A.; Lange, F.; Kopp, B. Parallel model-based and model-free reinforcement learning for card sorting performance. 2020; under review.

- Steinke, A.; Lange, F.; Seer, C.; Hendel, M.K.; Kopp, B. Computational modeling for neuropsychological assessment of bradyphrenia in Parkinson’s disease. J. Clin. Med. 2020, 9, 1158.

- Bishara, A.J.; Kruschke, J.K.; Stout, J.C.; Bechara, A.; McCabe, D.P.; Busemeyer, J.R. Sequential learning models for the Wisconsin card sort task: Assessing processes in substance dependent individuals. J. Math. Psychol. 2010, 54, 5–13.

- Miyake, A.; Friedman, N.P. The nature and organization of individual differences in executive functions. Curr. Dir. Psychol. Sci. 2012, 21, 8–14.

- Alvarez, J.A.; Emory, E. Executive function and the frontal lobes: A meta-analytic review. Neuropsychol. Rev. 2006, 16, 17–42.

- Nyhus, E.; Barceló, F. The Wisconsin Card Sorting Test and the cognitive assessment of prefrontal executive functions: A critical update. Brain Cogn. 2009, 71, 437–451.

- Steinke, A.; Lange, F.; Seer, C.; Kopp, B. Toward a computational cognitive neuropsychology of Wisconsin card sorts: A showcase study in Parkinson’s disease. Comput. Brain Behav. 2018, 1, 137–150.

- Botvinick, M.M.; Plaut, D.C. Doing without schema hierarchies: A recurrent connectionist approach to normal and impaired routine sequential action. Psychol. Rev. 2004, 111, 395–429.

- Cooper, R.P.; Shallice, T. Hierarchical schemas and goals in the control of sequential behavior. Psychol. Rev. 2006, 113, 887–916.

- Beste, C.; Humphries, M.; Saft, C. Striatal disorders dissociate mechanisms of enhanced and impaired response selection—Evidence from cognitive neurophysiology and computational modelling. NeuroImage Clin. 2014, 4, 623–634.

- Forstmann, B.U.; Wagenmakers, E.-J. An Introduction to Model-Based Cognitive Neuroscience; Springer: New York, NY, USA, 2015; ISBN 978-1-4939-2235-2.

- Palminteri, S.; Justo, D.; Jauffret, C.; Pavlicek, B.; Dauta, A.; Delmaire, C.; Czernecki, V.; Karachi, C.; Capelle, L.; Durr, A.; et al. Critical roles for anterior insula and dorsal striatum in punishment-based avoidance learning. Neuron 2012, 76, 998–1009.

- Palminteri, S.; Lebreton, M.; Worbe, Y.; Grabli, D.; Hartmann, A.; Pessiglione, M. Pharmacological modulation of subliminal learning in Parkinson’s and Tourette’s syndromes. Proc. Natl. Acad. Sci. USA 2009, 106, 19179–19184.

- Ambrosini, E.; Arbula, S.; Rossato, C.; Pacella, V.; Vallesi, A. Neuro-cognitive architecture of executive functions: A latent variable analysis. Cortex 2019, 119, 441–456.

- Giavazzi, M.; Daland, R.; Palminteri, S.; Peperkamp, S.; Brugières, P.; Jacquemot, C.; Schramm, C.; Cleret de Langavant, L.; Bachoud-Lévi, A.-C. The role of the striatum in linguistic selection: Evidence from Huntington’s disease and computational modeling. Cortex 2018, 109, 189–204.

- Suzuki, S.; O’Doherty, J.P. Breaking human social decision making into multiple components and then putting them together again. Cortex 2020, 127, 221–230.

- Cleeremans, A.; Dienes, Z. Computational models of implicit learning. In The Cambridge Handbook of Computational Psychology; Sun, R., Ed.; Cambridge University Press: New York, NY, USA, 2001; pp. 396–421.

- Beste, C.; Adelhöfer, N.; Gohil, K.; Passow, S.; Roessner, V.; Li, S.-C. Dopamine modulates the efficiency of sensory evidence accumulation during perceptual decision making. Int. J. Neuropsychopharmacol. 2018, 21, 649–655.

- D’Alessandro, M.; Lombardi, L. A dynamic framework for modelling set-shifting performances. Behav. Sci. 2019, 9, 79.

- Kimberg, D.Y.; Farah, M.J. A unified account of cognitive impairments following frontal lobe damage: The role of working memory in complex, organized behavior. J. Exp. Psychol. Gen. 1993, 122, 411–428.

- Levine, D.S.; Prueitt, P.S. Modeling some effects of frontal lobe damage—Novelty and perseveration. Neural Netw. 1989, 2, 103–116.

- Granato, G.; Baldassarre, G. Goal-directed top-down control of perceptual representations: A computational model of the Wisconsin Card Sorting Test. In Proceedings of the 2019 Conference on Cognitive Computational Neuroscience, Berlin, Germany, 13–16 September 2019; Cognitive Computational Neuroscience: Brentwood, TN, USA, 2019.

- Gläscher, J.; Adolphs, R.; Tranel, D. Model-based lesion mapping of cognitive control using the Wisconsin Card Sorting Test. Nat. Commun. 2019, 10, 20.

- D’Alessandro, M.; Radev, S.T.; Voss, A.; Lombardi, L. A Bayesian brain model of adaptive behavior: An application to the Wisconsin Card Sorting Task. arXiv 2020, arXiv:1207.0580.

- Caso, A.; Cooper, R.P. A neurally plausible schema-theoretic approach to modelling cognitive dysfunction and neurophysiological markers in Parkinson’s disease. Neuropsychologia 2020, 140, 107359.

- Amos, A. A computational model of information processing in the frontal cortex and basal ganglia. J. Cogn. Neurosci. 2000, 12, 505–519.

- Berdia, S.; Metz, J.T. An artificial neural network stimulating performance of normal subjects and schizophrenics on the Wisconsin card sorting test. Artif. Intell. Med. 1998, 13, 123–138.

- Cella, M.; Bishara, A.J.; Medin, E.; Swan, S.; Reeder, C.; Wykes, T. Identifying cognitive remediation change through computational modelling—Effects on reinforcement learning in schizophrenia. Schizophr. Bull. 2014, 40, 1422–1432.

- Dehaene, S.; Changeux, J.P. The Wisconsin Card Sorting Test: Theoretical analysis and modeling in a neuronal network. Cereb. Cortex 1991, 1, 62–79.

- Farreny, A.; del Rey-Mejías, Á.; Escartin, G.; Usall, J.; Tous, N.; Haro, J.M.; Ochoa, S. Study of positive and negative feedback sensitivity in psychosis using the Wisconsin Card Sorting Test. Compr. Psychiatry 2016, 68, 119–128.

- Kaplan, G.B.; Şengör, N.S.; Gürvit, H.; Genç, İ.; Güzeliş, C. A composite neural network model for perseveration and distractibility in the Wisconsin card sorting test. Neural Netw. 2006, 19, 375–387.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Niv, Y. Reinforcement learning in the brain. J. Math. Psychol. 2009, 53, 139–154.

- Silvetti, M.; Verguts, T. Reinforcement learning, high-level cognition, and the human brain. In Neuroimaging—Cognitive and Clinical Neuroscience; Bright, P., Ed.; InTech: Rijeka, Croatia, 2012; pp. 283–296.

- Gerraty, R.T.; Davidow, J.Y.; Foerde, K.; Galvan, A.; Bassett, D.S.; Shohamy, D. Dynamic flexibility in striatal-cortical circuits supports reinforcement learning. J. Neurosci. 2018, 38, 2442–2453.

- Fontanesi, L.; Gluth, S.; Spektor, M.S.; Rieskamp, J. A reinforcement learning diffusion decision model for value-based decisions. Psychon. Bull. Rev. 2019, 26, 1099–1121.

- Fontanesi, L.; Palminteri, S.; Lebreton, M. Decomposing the effects of context valence and feedback information on speed and accuracy during reinforcement learning: A meta-analytical approach using diffusion decision modeling. Cogn. Affect. Behav. Neurosci. 2019, 19, 490–502.

- Caligiore, D.; Arbib, M.A.; Miall, R.C.; Baldassarre, G. The super-learning hypothesis: Integrating learning processes across cortex, cerebellum and basal ganglia. Neurosci. Biobehav. Rev. 2019, 100, 19–34.

- Schultz, W. Neuronal reward and decision signals: From theories to data. Physiol. Rev. 2015, 95, 853–951.

- Kopp, B.; Steinke, A.; Bertram, M.; Skripuletz, T.; Lange, F. Multiple levels of control processes for Wisconsin Card Sorts: An observational study. Brain Sci. 2019, 9, 141.

- Frank, M.J.; Seeberger, L.C.; O’Reilly, R.C. By carrot or by stick: Cognitive reinforcement learning in Parkinsonism. Science 2004, 306, 1940–1943.

- Schultz, W.; Dayan, P.; Montague, P.R. A neural substrate of prediction and reward. Science 1997, 275, 1593–1599.

- Schultz, W. Reward prediction error. Curr. Biol. 2017, 27, 369–371.

- Erev, I.; Roth, A.E. Predicting how people play games: Reinforcement learning in experimental games with unique, mixed strategy equilibria. Am. Econ. Rev. 1998, 88, 848–881.

- Steingroever, H.; Wetzels, R.; Wagenmakers, E.-J. Validating the PVL-Delta model for the Iowa gambling task. Front. Psychol. 2013, 4, 898.

- Luce, R.D. Individual Choice Behavior; John Wiley & Sons Inc.: New York, NY, USA, 1959.

- Daw, N.D.; O’Doherty, J.P.; Dayan, P.; Seymour, B.; Dolan, R.J. Cortical substrates for exploratory decisions in humans. Nature 2006, 441, 876–879.

- Thrun, S.B. The role of exploration in learning control. In Handbook for Intelligent Control: Neural, Fuzzy and Adaptive Approaches; White, D., Sofge, D., Eds.; Van Nostrand Reinhold: Florence, KY, USA, 1992; pp. 527–559.

- Lange, F.; Seer, C.; Loens, S.; Wegner, F.; Schrader, C.; Dressler, D.; Dengler, R.; Kopp, B. Neural mechanisms underlying cognitive inflexibility in Parkinson’s disease. Neuropsychologia 2016, 93, 142–150.

- Rogers, D. Bradyphrenia in parkinsonism: A historical review. Psychol. Med. 1986, 16, 257–265.

- Vlagsma, T.T.; Koerts, J.; Tucha, O.; Dijkstra, H.T.; Duits, A.A.; van Laar, T.; Spikman, J.M. Mental slowness in patients with Parkinson’s disease: Associations with cognitive functions? J. Clin. Exp. Neuropsychol. 2016, 38, 844–852.

- Revonsuo, A.; Portin, R.; Koivikko, L.; Rinne, J.O.; Rinne, U.K. Slowing of information processing in Parkinson’s disease. Brain Cogn. 1993, 21, 87–110.

- Peavy, G.M. Mild cognitive deficits in Parkinson disease: Where there is bradykinesia, there is bradyphrenia. Neurology 2010, 75, 1038–1039.

- Knowlton, B.J.; Mangels, J.A.; Squire, L.R. A neostriatal habit learning system in humans. Science 1996, 273, 1399–1402.

- Yin, H.H.; Knowlton, B.J. The role of the basal ganglia in habit formation. Nat. Rev. Neurosci. 2006, 7, 464–476.

- Shohamy, D.; Myers, C.E.; Kalanithi, J.; Gluck, M.A. Basal ganglia and dopamine contributions to probabilistic category learning. Neurosci. Biobehav. Rev. 2008, 32, 219–236.

- Cools, R. Dopaminergic modulation of cognitive function-implications for L-DOPA treatment in Parkinson’s disease. Neurosci. Biobehav. Rev. 2006, 30, 1–23.

- Gotham, A.M.; Brown, R.G.; Marsden, C.D. ‘Frontal’ cognitive function in patients with Parkinson’s disease “on” and “off” Levodopa. Brain 1988, 111, 299–321.

- Vaillancourt, D.E.; Schonfeld, D.; Kwak, Y.; Bohnen, N.I.; Seidler, R. Dopamine overdose hypothesis: Evidence and clinical implications. Mov. Disord. 2013, 28, 1920–1929.

- Cools, R.; D’Esposito, M. Inverted-U–shaped dopamine actions on human working memory and cognitive control. Biol. Psychiatry 2011, 69, 113–125.

- Phukan, J.; Pender, N.P.; Hardiman, O. Cognitive impairment in amyotrophic lateral sclerosis. Lancet Neurol. 2007, 6, 994–1003.

- Morris, L.S.; Kundu, P.; Dowell, N.; Mechelmans, D.J.; Favre, P.; Irvine, M.A.; Robbins, T.W.; Daw, N.; Bullmore, E.T.; Harrison, N.A.; et al. Fronto-striatal organization: Defining functional and microstructural substrates of behavioural flexibility. Cortex 2016, 74, 118–133.

- Brooks, B.R.; Miller, R.G.; Swash, M.; Munsat, T.L. El Escorial revisited: Revised criteria for the diagnosis of amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. Other Mot. Neuron Disord. 2000, 1, 293–299.

- Neary, D.; Snowden, J.S.; Gustafson, L.; Passant, U.; Stuss, D.; Black, S.; Freedman, M.; Kertesz, A.; Robert, P.H.; Albert, M.; et al. Frontotemporal lobar degeneration: A consensus on clinical diagnostic criteria. Neurology 1998, 51, 1546–1554.

- Nelson, H.E. A modified card sorting test sensitive to frontal lobe defects. Cortex 1976, 12, 313–324.

- Kopp, B.; Lange, F. Electrophysiological indicators of surprise and entropy in dynamic task-switching environments. Front. Hum. Neurosci. 2013, 7, 300.

- JASP Team. JASP; Version 0.11.1; JASP Team: University of Amsterdam, Amsterdam, The Netherlands, 2019.

- Van den Bergh, D.; van Doorn, J.; Marsman, M.; Draws, T.; van Kesteren, E.-J.; Derks, K.; Dablander, F.; Gronau, Q.F.; Kucharský, Š.; Raj, A.; et al. A tutorial on conducting and interpreting a Bayesian ANOVA in JASP. L’Année Psychol. 2020, 120, 73–96.

- McCoy, B.; Jahfari, S.; Engels, G.; Knapen, T.; Theeuwes, J. Dopaminergic medication reduces striatal sensitivity to negative outcomes in Parkinson’s disease. Brain 2019, 142, 3605–3620.

- Daw, N.D.; Gershman, S.J.; Seymour, B.; Dayan, P.; Dolan, R.J. Model-based influences on humans’ choices and striatal prediction errors. Neuron 2011, 69, 1204–1215.

- Frank, M.J.; Moustafa, A.A.; Haughey, H.M.; Curran, T.; Hutchison, K.E. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proc. Natl. Acad. Sci. USA 2007, 104, 16311–16316.

- Ahn, W.-Y.; Haines, N.; Zhang, L. Revealing neurocomputational mechanisms of reinforcement learning and decision-making with the hBayesDM package. Comput. Psychiatry 2017, 1, 24–57.

- Müller, J.; Dreisbach, G.; Goschke, T.; Hensch, T.; Lesch, K.-P.; Brocke, B. Dopamine and cognitive control: The prospect of monetary gains influences the balance between flexibility and stability in a set-shifting paradigm. Eur. J. Neurosci. 2007, 26, 3661–3668.

- Dreisbach, G.; Goschke, T. How positive affect modulates cognitive control: Reduced perseveration at the cost of increased distractibility. J. Exp. Psychol. Learn. Mem. Cogn. 2004, 30, 343–353.

- Goschke, T.; Bolte, A. Emotional modulation of control dilemmas: The role of positive affect, reward, and dopamine in cognitive stability and flexibility. Neuropsychologia 2014, 62, 403–423.

- Pedersen, M.L.; Frank, M.J.; Biele, G. The drift diffusion model as the choice rule in reinforcement learning. Psychon. Bull. Rev. 2017, 24, 1234–1251.

- Wagenmakers, E.-J.; Wetzels, R.; Borsboom, D.; van der Maas, H.L.J. Why psychologists must change the way they analyze their data: The case of psi: Comment on Bem (2011). J. Pers. Soc. Psychol. 2011, 100, 426–432.

- Abrahams, S.; Newton, J.; Niven, E.; Foley, J.; Bak, T.H. Screening for cognition and behaviour changes in ALS. Amyotroph. Lateral Scler. Front. Degener. 2014, 15, 9–14.

- Watermeyer, T.J.; Brown, R.G.; Sidle, K.C.L.; Oliver, D.J.; Allen, C.; Karlsson, J.; Ellis, C.M.; Shaw, C.E.; Al-Chalabi, A.; Goldstein, L.H. Executive dysfunction predicts social cognition impairment in amyotrophic lateral sclerosis. J. Neurol. 2015, 262, 1681–1690.

- Carluer, L.; Mondou, A.; Buhour, M.-S.; Laisney, M.; Pélerin, A.; Eustache, F.; Viader, F.; Desgranges, B. Neural substrate of cognitive theory of mind impairment in amyotrophic lateral sclerosis. Cortex 2015, 65, 19–30.

- Stojkovic, T.; Stefanova, E.; Pekmezovic, T.; Peric, S.; Stevic, Z. Executive dysfunction and survival in patients with amyotrophic lateral sclerosis: Preliminary report from a Serbian centre for motor neuron disease. Amyotroph. Lateral Scler. Front. Degener. 2016, 17, 543–547.

- Narayanan, N.S.; Rodnitzky, R.L.; Uc, E.Y. Prefrontal dopamine signaling and cognitive symptoms of Parkinson’s disease. Rev. Neurosci. 2013, 24, 267–278.

- Hosp, J.A.; Coenen, V.A.; Rijntjes, M.; Egger, K.; Urbach, H.; Weiller, C.; Reisert, M. Ventral tegmental area connections to motor and sensory cortical fields in humans. Brain Struct. Funct. 2019, 224, 2839–2855.

- Shohamy, D.; Adcock, R.A. Dopamine and adaptive memory. Trends Cogn. Sci. 2010, 14, 464–472.

- Aarts, E.; Nusselein, A.A.M.; Smittenaar, P.; Helmich, R.C.; Bloem, B.R.; Cools, R. Greater striatal responses to medication in Parkinson’s disease are associated with better task-switching but worse reward performance. Neuropsychologia 2014, 62, 390–397.

- Sitburana, O.; Ondo, W.G. Brain magnetic resonance imaging (MRI) in parkinsonian disorders. Parkinsonism Relat. Disord. 2009, 15, 165–174.

- Trojsi, F.; Di Nardo, F.; Santangelo, G.; Siciliano, M.; Femiano, C.; Passaniti, C.; Caiazzo, G.; Fratello, M.; Cirillo, M.; Monsurrò, M.R.; et al. Resting state fMRI correlates of Theory of Mind impairment in amyotrophic lateral sclerosis. Cortex 2017, 97, 1–16.

- Canosa, A.; Pagani, M.; Cistaro, A.; Montuschi, A.; Iazzolino, B.; Fania, P.; Cammarosano, S.; Ilardi, A.; Moglia, C.; Calvo, A.; et al. 18F-FDG-PET correlates of cognitive impairment in ALS. Neurology 2016, 86, 44–49.

- Bede, P.; Elamin, M.; Byrne, S.; McLaughlin, R.L.; Kenna, K.; Vajda, A.; Pender, N.; Bradley, D.G.; Hardiman, O. Basal ganglia involvement in amyotrophic lateral sclerosis. Neurology 2013, 81, 2107–2115.

- Lange, F. Are difficult-to-study populations too difficult to study in a reliable way? Eur. Psychol. 2020, 25, 41–50.

- Simon, N.; Goldstein, L.H. Screening for cognitive and behavioral change in amyotrophic lateral sclerosis/motor neuron disease: A systematic review of validated screening methods. Amyotroph. Lateral Scler. Front. Degener. 2019, 20, 1–11.

- Palminteri, S.; Wyart, V.; Koechlin, E. The importance of falsification in computational cognitive modeling. Trends Cogn. Sci. 2017, 21, 425–433.

- Johns, M.W. A new method for measuring daytime sleepiness: The Epworth Sleepiness Scale. Sleep 1991, 14, 540–545.

- Abdulla, S.; Vielhaber, S.; Körner, S.; Machts, J.; Heinze, H.-J.; Dengler, R.; Petri, S. Validation of the German version of the extended ALS functional rating scale as a patient-reported outcome measure. J. Neurol. 2013, 260, 2242–2255.

- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

- Dubois, B.; Slachevsky, A.; Litvan, I.; Pillon, B. The FAB: A frontal assessment battery at bedside. Neurology 2000, 55, 1621–1626.

- Ahn, W.-Y.; Krawitz, A.; Kim, W.; Busemeyer, J.R.; Brown, J.W. A model-based fMRI analysis with hierarchical Bayesian parameter estimation. J. Neurosci. Psychol. Econ. 2011, 4, 95–110.

- Haines, N.; Vassileva, J.; Ahn, W.-Y. The Outcome-Representation Learning model: A novel reinforcement learning model of the Iowa Gambling Task. Cogn. Sci. 2018, 42, 2534–2561.

- Kruschke, J.K. Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan; Academic Press: New York, NY, USA, 2015.

- Lee, M.D. How cognitive modeling can benefit from hierarchical Bayesian models. J. Math. Psychol. 2011, 55, 1–7.

- Lee, M.D.; Wagenmakers, E.-J. Bayesian Cognitive Modeling: A Practical Course; Cambridge University Press: Cambridge, UK, 2011.

- Rouder, J.N.; Lu, J. An introduction to Bayesian hierarchical models with an application in the theory of signal detection. Psychon. Bull. Rev. 2005, 12, 573–604.

- Shiffrin, R.; Lee, M.D.; Kim, W.; Wagenmakers, E.-J. A survey of model evaluation approaches with a tutorial on hierarchical Bayesian methods. Cogn. Sci. 2008, 32, 1248–1284.

- Stan Development Team. RStan: The R Interface to Stan; Stan Development Team: Columbia University, New York, NY, USA, 2018.

- Betancourt, M.J.; Girolami, M. Hamiltonian Monte Carlo for hierarchical models. In Current Trends in Bayesian Methodology with Applications; Upadhyay, S.K., Umesh, S., Dey, D.K., Loganathan, A., Eds.; CRC Press: Boca Raton, FL, USA, 2013; pp. 79–97.

- Sharp, M.E.; Foerde, K.; Daw, N.D.; Shohamy, D. Dopamine selectively remediates “model-based” reward learning: A computational approach. Brain 2016, 139, 355–364.

- Gelman, A.; Rubin, D.B. Inference from iterative simulation using multiple sequences. Stat. Sci. 1992, 7, 457–472.

- Marsman, M.; Wagenmakers, E.-J. Three insights from a Bayesian interpretation of the one-sided P value. Educ. Psychol. Meas. 2017, 77, 529–539.

| Effects | P(Inclusion) | P(Inclusion|Data) | BFinclusion |
|---|---|---|---|
| Error Type | 0.600 | 0.999 | 836.42 ** |
| Group | 0.600 | 0.656 | 1.27 |
| Error Type × Group | 0.200 | 0.385 | 2.50 |
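The inclusion Bayes factors in this table can be checked against its two probability columns. The Python sketch below assumes a uniform prior over the five models that a two-factor Bayesian ANOVA considers when the interaction is only entered together with both main effects; this model set and prior are our assumption, although they reproduce the P(Inclusion) column (0.600, 0.600, 0.200). The inclusion Bayes factor is then the posterior inclusion odds divided by the prior inclusion odds. Because P(Inclusion|Data) is rounded to three decimals, recomputing the Error Type value from 0.999 gives roughly 666 rather than 836.42, whereas the Group and interaction rows are reproduced to two decimals.

```python
# Assumed model space for two factors: the interaction term only appears
# together with both main effects (principle of marginality).
MODELS = [
    frozenset(),  # null model
    frozenset({"Error Type"}),
    frozenset({"Group"}),
    frozenset({"Error Type", "Group"}),
    frozenset({"Error Type", "Group", "Error Type × Group"}),
]


def prior_inclusion(effect, models=MODELS):
    """Prior inclusion probability of an effect under a uniform prior over models."""
    return sum(effect in m for m in models) / len(models)


def inclusion_bf(p_prior, p_post):
    """Inclusion Bayes factor: posterior inclusion odds / prior inclusion odds."""
    prior_odds = p_prior / (1 - p_prior)
    post_odds = p_post / (1 - p_post)
    return post_odds / prior_odds


# Posterior inclusion probabilities as reported (rounded to three decimals).
reported = {"Error Type": 0.999, "Group": 0.656, "Error Type × Group": 0.385}
for effect, p_post in reported.items():
    p_prior = prior_inclusion(effect)
    bf = inclusion_bf(p_prior, p_post)
    print(f"{effect}: P(inclusion) = {p_prior:.3f}, BF_inclusion = {bf:.2f}")
```

Working with odds rather than probabilities makes the asymmetry between effects explicit: the interaction starts from prior odds of 1:4, so a posterior inclusion probability below 0.5 can still correspond to a Bayes factor above 2.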

| Parameter | Bayes Factor |
|---|---|
| Cognitive learning rate following positive feedback | 0.81 |
| Cognitive learning rate following negative feedback | 0.34 |
| Cognitive retention rate | 37.46 * |
| Sensorimotor learning rate following positive feedback | 14.96 * |
| Sensorimotor learning rate following negative feedback | 0.44 |
| Sensorimotor retention rate | 1.49 |
| Inverse temperature parameter | 17.07 * |
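The parameters in this table fit a familiar reinforcement-learning pattern: learning rates that depend on the sign of the feedback, a retention rate that governs how much of the previous values carries over to the next trial, and an inverse temperature that scales value differences in a softmax choice rule. The Python sketch below illustrates one way such parameters could interact on a single card-sorting trial, with a cognitive level that learns values for the three sorting rules and a sensorimotor level that learns values for the four key cards. It is not the authors' model. The update order (retention before the delta-rule update), the coding of feedback as +1/-1, the summing of both levels before a single softmax, and all function and parameter names (`retain_and_update`, `cog_alpha_pos`, and so on) are illustrative assumptions.

```python
import math

N_KEY_CARDS = 4  # the four WCST key cards


def softmax(values, beta):
    """Choice probabilities; beta is the inverse temperature."""
    scaled = [beta * v for v in values]
    top = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def retain_and_update(values, targets, feedback, alpha_pos, alpha_neg, gamma):
    """Hypothetical update: scale every value by the retention rate gamma, then
    move the targeted values toward the feedback (+1 or -1) using a learning
    rate chosen by the sign of the feedback."""
    new = [gamma * v for v in values]
    alpha = alpha_pos if feedback > 0 else alpha_neg
    for i in targets:
        new[i] += alpha * (feedback - new[i])
    return new


def project_rules(rule_values, matches):
    """Map rule values onto key cards; matches[r] is the key card that sorting
    rule r (color, shape, number) points to on the current trial."""
    out = [0.0] * N_KEY_CARDS
    for r, card in enumerate(matches):
        out[card] += rule_values[r]
    return out


def trial(rule_v, card_v, matches, chosen, feedback, p):
    """Return choice probabilities for one trial plus updated rule and card values."""
    combined = [c + s for c, s in zip(project_rules(rule_v, matches), card_v)]
    probs = softmax(combined, p["beta"])
    applied = [r for r, card in enumerate(matches) if card == chosen]
    rule_v = retain_and_update(rule_v, applied, feedback,
                               p["cog_alpha_pos"], p["cog_alpha_neg"], p["cog_gamma"])
    card_v = retain_and_update(card_v, [chosen], feedback,
                               p["sm_alpha_pos"], p["sm_alpha_neg"], p["sm_gamma"])
    return probs, rule_v, card_v


# Illustrative parameter values only, not estimates from the study.
params = dict(cog_alpha_pos=0.5, cog_alpha_neg=0.5, cog_gamma=0.9,
              sm_alpha_pos=0.2, sm_alpha_neg=0.2, sm_gamma=0.9, beta=3.0)
rule_v, card_v = [0.0, 0.0, 0.0], [0.0] * N_KEY_CARDS

# Trial 1: the stimulus matches key card 0 by color, 2 by shape, 3 by number;
# key card 0 is chosen and positive feedback follows.
probs1, rule_v, card_v = trial(rule_v, card_v, (0, 2, 3), 0, +1, params)
# Trial 2: color now points to key card 1; the learned color value outweighs
# the sensorimotor bias toward key card 0.
probs2, rule_v, card_v = trial(rule_v, card_v, (1, 2, 3), 1, +1, params)
```

In this sketch, a retention rate below 1 makes values decay toward zero between trials, so choices depend more on recent feedback, and a larger inverse temperature makes choices more deterministic for the same value differences.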

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Steinke, A.; Lange, F.; Seer, C.; Petri, S.; Kopp, B. A Computational Study of Executive Dysfunction in Amyotrophic Lateral Sclerosis. J. Clin. Med. 2020, 9, 2605. https://doi.org/10.3390/jcm9082605
