On the Reliability of Examining Dual-Tasking Abilities Using a Novel E-Health Device—A Proof of Concept Study in Multiple Sclerosis

Abstract

1. Introduction

2. Experimental Section

2.1. Patient Sample Description

2.2. Dual-Task

2.3. Implementation of the Dual-Task as Mobile E-Health Tool

2.4. Statistical Analyses

3. Results

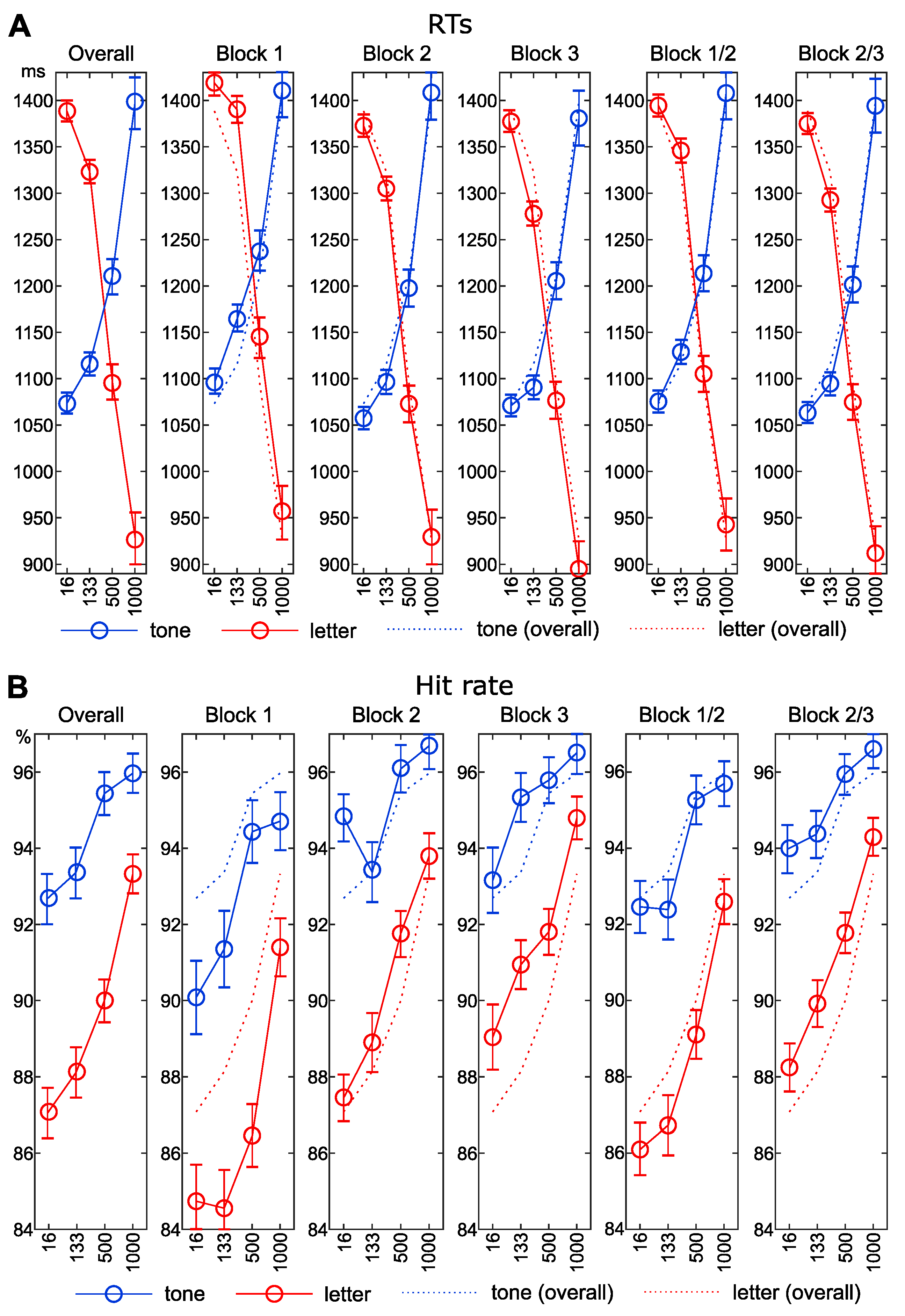

3.1. Reaction Times

3.2. Accuracy Data

3.3. Reliability Analysis

3.4. Correlation Analysis

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | Mean | SD |
|---|---|---|
| EDSS | | |
| Total score | 2.5 | 1.4 |
| Visual | 0.6 | 0.8 |
| Brainstem | 0.6 | 0.6 |
| Cerebellar | 0.9 | 0.9 |
| Sensory | 1.1 | 1.0 |
| Bowel and Bladder | 0.5 | 0.8 |
| Cerebral | 1.0 | 0.9 |
| Ambulation | 0.8 | 1.8 |
| MSSS | | |
| Total score | 3.2 | 2.0 |
| MSPT | | |
| Processing Speed Test/SDMT | 56.5 | 13.5 |
| Low Contrast Letter Acuity Test | 39.7 | 8.0 |
| Manual Dexterity Test right | 22.6 | 6.2 |
| Manual Dexterity Test left | 22.7 | 5.8 |
| Walking Speed Test | 5.2 | 2.2 |
| Neuro-QoL | | |
| Ability to Participate in Social Roles and Activities | 49.8 | 8.0 |
| Satisfaction with Social Roles and Activities | 49.7 | 7.2 |
| Depression | 44.9 | 7.9 |
| Emotional and Behavioral Dyscontrol | 47.7 | 9.3 |
| Stigma | 44.4 | 7.9 |
| Applied Cognition | 50.7 | 9.5 |
| Positive Affect and Well-Being | 102.1 | 584.9 |
| Fatigue | 45.0 | 10.0 |
| Sleep Disturbance | 48.2 | 10.2 |
| Lower Extremity (Mobility) | 53.6 | 9.0 |
| Upper Extremity (Fine Motor) | 49.5 | 8.9 |

| | SOA 16 | SOA 133 | SOA 500 | SOA 1000 | Slope SOA |
|---|---|---|---|---|---|
| S2 RTs (ms) | | | | | |
| Overall | 1388.5 ± 14 | 1322.9 ± 14.5 | 1095.4 ± 18.9 | 926.5 ± 19.1 | 462 ± 11.2 |
| block 1 | 1419 ± 14.8 | 139.5 ± 16.9 | 1145.3 ± 21 | 957.2 ± 2.5 | 461.9 ± 12.9 |
| block 2 | 1372.5 ± 15.3 | 1304.9 ± 15.7 | 1072.9 ± 19.7 | 929.5 ± 2.4 | 443 ± 12.5 |
| block 3 | 1377.3 ± 14.5 | 1277.7 ± 14.4 | 1076.5 ± 19.7 | 894.9 ± 19 | 482.3 ± 13.3 |
| block 1/2 | 1394.3 ± 14.4 | 1346 ± 15.4 | 1105.2 ± 19.2 | 942.9 ± 19.8 | 451.5 ± 11.6 |
| block 2/3 | 1375 ± 14.4 | 1292.6 ± 14.4 | 1074.6 ± 19 | 912 ± 19.2 | 463 ± 11.8 |
| S2 accuracy (%) | | | | | |
| Overall | 87.1 ± 1.2 | 88.1 ± 1 | 90 ± 0.9 | 93.3 ± 0.7 | −6.3 ± 0.8 |
| block 1 | 84.7 ± 1.3 | 84.6 ± 1.3 | 86.5 ± 1.2 | 91.4 ± 0.9 | −6.7 ± 1.1 |
| block 2 | 87.5 ± 1.3 | 88.9 ± 1.1 | 91.8 ± 0.9 | 93.8 ± 0.9 | −6.3 ± 1.2 |
| block 3 | 89 ± 1.2 | 9.9 ± 1.1 | 91.8 ± 0.8 | 94.8 ± 0.8 | −5.8 ± 0.9 |
| block 1/2 | 86.1 ± 1.2 | 86.7 ± 1.1 | 89.1 ± 1 | 92.6 ± 0.8 | −6.5 ± 1 |
| block 2/3 | 88.2 ± 1.2 | 89.9 ± 1 | 91.8 ± 0.8 | 94.3 ± 0.8 | −6 ± 0.9 |
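
The "Slope SOA" column in the table above appears to be the value at the shortest SOA minus the value at the longest SOA (for the overall S2 RTs, 1388.5 − 926.5 = 462). This reading is inferred from the tabulated numbers rather than stated in the text, so the following Python sketch should be taken as an illustration under that assumption. The function name `soa_slope` is hypothetical and not part of the study's software.

```python
def soa_slope(mean_by_soa):
    """Return the value at the shortest SOA minus the value at the longest SOA.

    Assumption (inferred from the table, not confirmed by the source):
    'Slope SOA' = mean(shortest SOA) - mean(longest SOA).
    """
    shortest = min(mean_by_soa)  # smallest SOA key, e.g. 16
    longest = max(mean_by_soa)   # largest SOA key, e.g. 1000
    return mean_by_soa[shortest] - mean_by_soa[longest]


# Overall S2 means copied from the table (keys are SOAs)
overall_rt = {16: 1388.5, 133: 1322.9, 500: 1095.4, 1000: 926.5}
overall_acc = {16: 87.1, 133: 88.1, 500: 90.0, 1000: 93.3}

rt_slope = round(soa_slope(overall_rt), 1)    # matches the tabulated 462
acc_slope = round(soa_slope(overall_acc), 1)  # close to the tabulated -6.3
print(rt_slope, acc_slope)
```

Because the table reports rounded means, a slope recomputed this way can differ from the tabulated slope in the last digit, as it does for the overall accuracy.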

| Tone Stimulus (S1) | Cronbach’s α | Sensitivity | α If Deleted | Part-Whole-Correction | Letter Stimulus (S2) | Cronbach’s α | Sensitivity | α If Deleted | Part-Whole-Correction |
|---|---|---|---|---|---|---|---|---|---|
| slopeRT | | | | | slopeRT | | | | |
| block 1 | 0.93 | 0.80 | 0.93 | −0.01 | block 1 | 0.84 | 0.64 | 0.84 | 0 |
| block 2 | | 0.89 | 0.86 | 0.07 | block 2 | | 0.78 | 0.71 | 0.13 |
| block 3 | | 0.86 | 0.88 | 0.04 | block 3 | | 0.71 | 0.79 | 0.06 |
| RTs SOA 16 | | | | | RTs SOA 16 | | | | |
| block 1 | 0.91 | 0.80 | 0.91 | 0.01 | block 1 | 0.94 | 0.85 | 0.93 | 0.01 |
| block 2 | | 0.86 | 0.84 | 0.07 | block 2 | | 0.91 | 0.89 | 0.05 |
| block 3 | | 0.82 | 0.87 | 0.04 | block 3 | | 0.88 | 0.91 | 0.03 |
| RTs SOA 133 | | | | | RTs SOA 133 | | | | |
| block 1 | 0.92 | 0.83 | 0.91 | 0.01 | block 1 | 0.92 | 0.82 | 0.90 | 0.02 |
| block 2 | | 0.86 | 0.88 | 0.04 | block 2 | | 0.87 | 0.85 | 0.06 |
| block 3 | | 0.86 | 0.88 | 0.04 | block 3 | | 0.83 | 0.89 | 0.03 |
| RTs SOA 500 | | | | | RTs SOA 500 | | | | |
| block 1 | 0.93 | 0.80 | 0.94 | −0.01 | block 1 | 0.94 | 0.84 | 0.94 | 0 |
| block 2 | | 0.89 | 0.86 | 0.07 | block 2 | | 0.91 | 0.89 | 0.05 |
| block 3 | | 0.86 | 0.89 | 0.04 | block 3 | | 0.88 | 0.91 | 0.03 |
| RTs SOA 1000 | | | | | RTs SOA 1000 | | | | |
| block 1 | 0.96 | 0.88 | 0.96 | 0 | block 1 | 0.95 | 0.87 | 0.95 | 0 |
| block 2 | | 0.94 | 0.92 | 0.04 | block 2 | | 0.93 | 0.91 | 0.05 |
| block 3 | | 0.92 | 0.93 | 0.02 | block 3 | | 0.90 | 0.93 | 0.02 |
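
The reliability indices in the table above can be illustrated with a short Python sketch. It uses the standard formula for Cronbach's α, treating the three blocks as items, and recomputes α with one block left out. The tabulated "Part-Whole-Correction" values are consistent with α minus "α If Deleted" (for example, 0.93 − 0.86 = 0.07), but that reading is an inference from the numbers, not something the source states. The function names and the demo data are hypothetical. The demo values are made up for illustration and are not study data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of participant rows (one value per item/block)."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across participants
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of the per-participant sum score
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_deleted(scores, item):
    """Cronbach's alpha recomputed with one item (block) left out."""
    reduced = [[v for i, v in enumerate(row) if i != item] for row in scores]
    return cronbach_alpha(reduced)


# Made-up RT slopes (ms) for five hypothetical participants across three blocks
demo = [
    [450.0, 430.0, 470.0],
    [500.0, 480.0, 510.0],
    [380.0, 400.0, 390.0],
    [520.0, 515.0, 540.0],
    [410.0, 395.0, 420.0],
]

alpha = cronbach_alpha(demo)
# Assumed reading of 'Part-Whole-Correction': alpha minus alpha-if-deleted
drops = [alpha - alpha_if_deleted(demo, i) for i in range(3)]
print(round(alpha, 3), [round(d, 3) for d in drops])
```

With only three blocks, each leave-one-out α rests on two items, so the "α If Deleted" values are sensitive to how strongly the remaining two blocks correlate.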

| Tone Stimulus (S1) | Cronbach’s α | Sensitivity | α If Deleted | Part-Whole-Correction | Letter Stimulus (S2) | Cronbach’s α | Sensitivity | α If Deleted | Part-Whole-Correction |
|---|---|---|---|---|---|---|---|---|---|
| slopeHit | | | | | slopeHit | | | | |
| block 1 | 0 | 0.20 | 0 | 0 | block 1 | 0.67 | 0.46 | 0.61 | 0.06 |
| block 2 | | 0.11 | 0 | 0 | block 2 | | 0.58 | 0.42 | 0.25 |
| block 3 | | 0.22 | 0.20 | −0.20 | block 3 | | 0.42 | 0.65 | 0.02 |
| Hits SOA 16 | | | | | Hits SOA 16 | | | | |
| block 1 | 0.72 | 0.57 | 0.61 | 0.11 | block 1 | 0.89 | 0.75 | 0.86 | 0.03 |
| block 2 | | 0.54 | 0.66 | 0.06 | block 2 | | 0.82 | 0.79 | 0.09 |
| block 3 | | 0.56 | 0.60 | 0.11 | block 3 | | 0.76 | 0.86 | 0.03 |
| Hits SOA 133 | | | | | Hits SOA 133 | | | | |
| block 1 | 0.73 | 0.57 | 0.66 | 0.07 | block 1 | 0.86 | 0.72 | 0.83 | 0.03 |
| block 2 | | 0.63 | 0.55 | 0.18 | block 2 | | 0.75 | 0.78 | 0.07 |
| block 3 | | 0.52 | 0.70 | 0.03 | block 3 | | 0.75 | 0.79 | 0.06 |
| Hits SOA 500 | | | | | Hits SOA 500 | | | | |
| block 1 | 0.75 | 0.58 | 0.70 | 0.05 | block 1 | 0.86 | 0.77 | 0.79 | 0.07 |
| block 2 | | 0.66 | 0.58 | 0.17 | block 2 | | 0.73 | 0.80 | 0.05 |
| block 3 | | 0.53 | 0.72 | 0.03 | block 3 | | 0.75 | 0.80 | 0.06 |
| Hits SOA 1000 | | | | | Hits SOA 1000 | | | | |
| block 1 | 0.70 | 0.52 | 0.64 | 0.06 | block 1 | 0.81 | 0.61 | 0.79 | 0.02 |
| block 2 | | 0.59 | 0.53 | 0.17 | block 2 | | 0.70 | 0.70 | 0.11 |
| block 3 | | 0.48 | 0.66 | 0.04 | block 3 | | 0.67 | 0.73 | 0.08 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Böttrich, N.; Mückschel, M.; Dillenseger, A.; Lange, C.; Kern, R.; Ziemssen, T.; Beste, C. On the Reliability of Examining Dual-Tasking Abilities Using a Novel E-Health Device—A Proof of Concept Study in Multiple Sclerosis. J. Clin. Med. 2020, 9, 3423. https://doi.org/10.3390/jcm9113423
