Impact of Texture Information on Crop Classification with Machine Learning and UAV Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Datasets

2.2.1. UAV Images

2.2.2. Ground-Truth Data and Land-Cover Map

2.3. Classification Methods and Feature Extraction

2.3.1. Random Forest

2.3.2. Support Vector Machine

2.3.3. Texture Information

2.4. Classification Procedures

2.5. Implementation

3. Results and Discussion

3.1. Parameterization of RF and SVM Classifiers

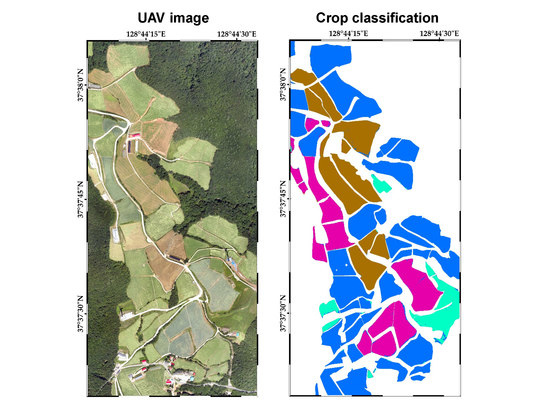

3.2. Visual Assessment of Classification Results

3.3. Quantitative Accuracy Assessment

3.4. Comparison of Spectral and Texture Information

3.5. Time-Series Analysis of Normalized Difference Vegetation Index for Selection of Optimal UAV Image

3.6. Classification Methods

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No. | Acquisition Date |
|---|---|
| 1 | 29 June |
| 2 | 12 July |
| 3 | 27 July |
| 4 | 25 August |
| 5 | 13 September |
| 6 | 21 September |

| Classes | Total Area (ha) | Average Area per Parcel (ha) |
|---|---|---|
| Highland Kimchi Cabbage | 22.38 | 0.59 |
| Cabbage | 8.35 | 0.59 |
| Potato | 8.65 | 0.86 |
| Fallow | 3.08 | 0.31 |

August Image: VNIR Spectral Information

| Classification \ Reference | Highland Kimchi Cabbage | Cabbage | Potato | Fallow | UA (%) |
|---|---|---|---|---|---|
| Highland Kimchi Cabbage | 3,074,131 | 342,355 | 49,838 | 84,726 | 86.57 |
| Cabbage | 230,661 | 869,250 | 65,288 | 6,627 | 74.18 |
| Potato | 107,897 | 124,020 | 1,259,483 | 31,883 | 82.68 |
| Fallow | 67,045 | 12,343 | 9,497 | 375,166 | 80.85 |
| PA (%) | 88.34 | 64.49 | 91.00 | 75.27 | |
| OA (%) | 83.13 | | | | |

August Image: VNIR Spectral Information and GK31 Texture Features

| Classification \ Reference | Highland Kimchi Cabbage | Cabbage | Potato | Fallow | UA (%) |
|---|---|---|---|---|---|
| Highland Kimchi Cabbage | 3,317,807 | 237,669 | 22,404 | 44,455 | 91.59 |
| Cabbage | 128,847 | 1,050,991 | 57,648 | 2,636 | 84.75 |
| Potato | 16,522 | 54,544 | 1,294,756 | 18,465 | 93.53 |
| Fallow | 16,558 | 4,764 | 9,298 | 432,846 | 93.39 |
| PA (%) | 95.35 | 77.97 | 93.54 | 86.85 | |
| OA (%) | 90.85 | | | | |

Multi-Temporal Images: VNIR Spectral Information

| Classification \ Reference | Highland Kimchi Cabbage | Cabbage | Potato | Fallow | UA (%) |
|---|---|---|---|---|---|
| Highland Kimchi Cabbage | 3,421,871 | 46,150 | 11,031 | 41,618 | 97.19 |
| Cabbage | 15,143 | 1,294,009 | 10,189 | 4,185 | 97.77 |
| Potato | 2,092 | 4,562 | 1,360,566 | 200 | 99.50 |
| Fallow | 40,628 | 3,247 | 2,320 | 452,399 | 90.73 |
| PA (%) | 98.34 | 96.00 | 98.30 | 90.77 | |
| OA (%) | 97.30 | | | | |

Multi-Temporal Images: VNIR Spectral Information and GK31 Texture Features

| Classification \ Reference | Highland Kimchi Cabbage | Cabbage | Potato | Fallow | UA (%) |
|---|---|---|---|---|---|
| Highland Kimchi Cabbage | 3,461,811 | 35,558 | 5,349 | 16,199 | 98.38 |
| Cabbage | 7,159 | 1,309,847 | 5,323 | 2,337 | 98.88 |
| Potato | 1,346 | 792 | 1,372,686 | 186 | 99.83 |
| Fallow | 9,418 | 1,771 | 748 | 479,680 | 97.57 |
| PA (%) | 99.48 | 97.17 | 99.17 | 96.24 | |
| OA (%) | 98.72 | | | | |
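
The user's accuracy (UA), producer's accuracy (PA), and overall accuracy (OA) reported in the confusion matrices above can be recomputed directly from the pixel counts. The following is a minimal sketch, assuming that rows hold the classified labels and columns hold the reference labels, as the table layout suggests. The function name `accuracy_metrics` and the variable names are illustrative and do not come from the original study; the counts are those of the August image classified with VNIR spectral information only.

```python
from typing import List, Tuple


def accuracy_metrics(matrix: List[List[int]]) -> Tuple[List[float], List[float], float]:
    """Return (UA per class, PA per class, OA), all in percent.

    Assumption: matrix[i][j] is the number of pixels classified as
    class i whose reference label is class j.
    """
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]  # pixels assigned to each class
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # reference pixels per class
    correct = [matrix[k][k] for k in range(n)]  # diagonal: correctly classified pixels

    ua = [100.0 * correct[k] / row_totals[k] for k in range(n)]  # user's accuracy
    pa = [100.0 * correct[k] / col_totals[k] for k in range(n)]  # producer's accuracy
    oa = 100.0 * sum(correct) / sum(row_totals)                  # overall accuracy
    return ua, pa, oa


# Class order: Highland Kimchi Cabbage, Cabbage, Potato, Fallow.
classes = ["Highland Kimchi Cabbage", "Cabbage", "Potato", "Fallow"]
august_vnir = [
    [3074131, 342355, 49838, 84726],
    [230661, 869250, 65288, 6627],
    [107897, 124020, 1259483, 31883],
    [67045, 12343, 9497, 375166],
]

ua, pa, oa = accuracy_metrics(august_vnir)
for name, u, p in zip(classes, ua, pa):
    print(f"{name}: UA = {u:.2f}%, PA = {p:.2f}%")
print(f"OA = {oa:.2f}%")
```

Applied to the counts above, this reproduces the tabulated OA of 83.13%, which supports the assumed row and column orientation. Substituting the counts from any of the other three matrices gives the corresponding figures.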

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kwak, G.-H.; Park, N.-W. Impact of Texture Information on Crop Classification with Machine Learning and UAV Images. Appl. Sci. 2019, 9, 643. https://doi.org/10.3390/app9040643