1. Introduction

Mining useful or meaningful information is a major KDD (Knowledge Discovery in Database) task, which has been widely considered as interesting and useful for more than two decades. Association rule mining (ARM) is a basic KDD problem, designed to reveal correlations between purchased items in customer transactional records. A fundamental algorithm named Apriori [

1] was first designed to mine association rules (ARs). It discovers patterns in a level-wise way in a static database. Initially, it finds the set of frequent itemsets based on a parameter called the minimum support threshold. Then, Apriori derives the set of ARs from these itemsets according to a second parameter called the minimum confidence threshold. Although this approach can find all the desired patterns, it can have very long runtimes and huge memory requirements. Based on this observation, the Frequent Pattern (FP)-growth [

2] algorithm was proposed. It speeds up ARM by keeping information required for mining ARs in a compressed FP-tree structure. The FP-tree structure stores frequent items, which are later used for mining patterns in a recursive way, growing patterns from small patterns to longer patterns, without generating candidate patterns and using a depth-first search. Deng et al. [

3] then introduced a new vertical data structure called N-list, which keeps the required information for mining patterns, and an efficient PrePost algorithm to mine the set of frequent patterns. Several algorithms used in different domains and applications [

4,

5,

6,

7] were developed to efficiently find different types of itemsets, respectively.

Most approaches for ARM can only be applied on binary databases. Thus, it is considered that all items are equally interesting or important. The internal values (such as the purchase quantities of items) or the external values (such as the unit profits of items) are not taken into account in ARM. For this reason, ARM is unable to specifically reveal the profitable itemsets. Consequently, retailers or managers cannot take efficient decisions or choose appropriate sales strategies on the basis of the most profitable sets of products. For example, caviar may yield much more profit than bottles of milks in a supermarket. To solve this problem, HUIM (High-utility itemset mining) [

8,

9] was introduced to discover the set of high-utility itemsets (HUIs). To reduce the search space and maintain the downward closure property, a transaction-weighted utility (TWU) model [

10] was developed to find a set of patterns called HTWUIs (High Transaction-Weighted Utilization Itemsets). Discovering HTWUIs can be used to avoid the “combinatorial explosion” problem by maintaining the downward closure property for mining HUIs (High-utility Itemsets). Lin et al. then presented a high-utility pattern (HUP)-tree [

11] that keeps only 1-HTWUIs into a condense tree structure based on the TWU model. Liu et al. [

12] then developed the HUI-Miner algorithm and presented a utility-list (UL) structure for mining HUIs, which is the most efficient structure to mine HUIs. Lan et al. then presented several works to address the problem of HUIM focusing on the on-shelf problem [

13,

14]. Zihayat then presented a top-

k algorithm to efficiently find the top-

k HUIs from the set of the discovered HUIs in the stream dataset [

15]. Several algorithms [

16,

17,

18,

19] were presented for specific applications and designed to mine various types of HUIs.

Although HUIM can identify the set of profitable itemsets for decision-making, the utility of an itemset often increases when its size is increased. For example, any combination of caviar with another item may be considered as a high-utility itemset (HUI) for market basket analytics, which may mislead a manager/retailer and result in taking wrong or risky decisions. An alternative designed by Hong et al. [

20] is HAUIM (High Average-Utility Itemset Mining) to discover the set of HAUIs (High Average-Utility Itemsets) in databases. An Apriori-like approach was first designed that considers the length (size) of each itemset. It calculates the average-utility of each itemset instead of its utility as in HUIM, which provides a flexible way of measuring the importance of itemsets for decision-making. The

auub (Average-Utility Upper Bound) model [

20] was presented to obtain a downward closure property by maintaining the HAUUBIs (High Average-Utility Upper-Bound Itemsets), thus reducing the search space to discover the set of HAUIs. Lin et al. [

21] developed an efficient HAUP-tree (High Average-Utility Pattern tree) to store information related to 1-HAUUBIs in a compact tree structure, and avoid candidate generation. An efficient average average-utility (AU)-list structure [

22] was then developed to efficiently discover the set of HAUIs. Several algorithms [

23,

24] were designed and the design of several extensions are in progress.

Most approaches for HAUI mining were developed to find the set of HAUIs in a static database. When the size of a database is increased or decreased (e.g., after inserting new transactions) [

25,

26,

27], existing algorithms needs to re-mine the updated database in batch mode to update the results. Cheung et al. [

25] presented the FUP (Fast UPdated) concept to maintain the discovered frequent itemsets using incremental updates. It categorizes itemsets into four cases and the itemsets of each case are then, respectively, maintained using a designed procedure. The FUP concept was utilized in HUIM [

27]. In this paper, we utilize the FUP concept to update the results when transactions are instead based on the

auub model and AUL-structures. An efficient FUP-HAUIMI algorithm is presented to incrementally update the discovered information. The major contributions are the following.

A FUP-HAUIMI algorithm is presented to incrementally update the discovered knowledge without candidate generation for transaction insertion. The AUL-structure is utilized in the designed algorithm to efficiently keep information for mining patterns and incrementally updating results.

The FUP concept is also utilized and modified to provide updating procedures for the FUP-HAUIMI algorithm. Based on the FUP concept, the correctness and completeness of the algorithm to update the discovered knowledge is ensured.

Experiments show that the designed FUP-HAUIMI algorithm has better performance to maintain and update the discovered HAUIs than that of the state-of-the-art HAUI-Miner algorithm running in batch mode and the state-of-the-art incremental IHAUPM algorithm.

3. Preliminaries and Problem Statement

In this section, an example is given to explain the definitions of the designed approach, which is shown in

Table 1. Each transaction consists of several distinct items, and purchase quantities are attached to items. The unit profit table of the original database is shown in

Table 2. The user’s minimum high average-utility threshold

is set to 14.4%, which is set by the user based on its preferences and the application domain.

Definition 1. The average-utility of an item in the transaction can be denoted as , which is defined as:where is the quantity of in , and is the unit profit value of . For instance, the average-utilities of Items (b–d) in are calculated as = (= 5), = (= 8), and = (= 8), respectively.

Definition 2. The average-utility of a k-itemset X in a transaction can be denoted as , which is defined as:where k is the number of items in X. For instance, the average-utility of Itemsets (ac) and (acd) in are calculated as = (= 10), and = (= 9.3), respectively.

Definition 3. The average-utility of an itemset X in D is denoted as , and defined as: For instance, the average-utility of Itemset (ac) in the database is calculated as: + (= + )(= 19.5).

Definition 4. The transaction utility of a transaction is denoted as , and defined as: For instance, (= 12 + 4 + 8 + 8)(= 32), (= 77), (= 44), (= 42), and (= 49).

Definition 5. The total utility of a database D is denoted as , which is the sum of all transaction utilities as: For instance, the total utility of

Table 1 is calculated as

(= 32 + 77 + 44 + 42 + 49) (= 244).

To obtain a downward closure property for pruning unpromising candidates early, the average-utility upper bound (

auub) model [

20] was designed. It overestimates the utility of itemsets. This process ensures the correctness and completeness of the process for mining itemsets. The definitions are as follows.

Definition 6 (Transaction-maximum utility, tmu).

The transaction-maximum utility of a transaction is denoted as and defined as: For instance, the tmu of transactions to are calculated as (= 12), (= 24), (= 21), (= 22), and (= 35), respectively.

Definition 7 (Average-utility upper-bound, auub).

The average-utility upper-bound (auub) of an itemset X in the original database is denoted as and defined as:where is the maximum utility of transaction such that . For instance, the auub values of the itemsets (a) and (ac) are calculated as: (= 12 + 21 + 35)(= 68) and (= 12 + 21)(= 33), respectively.

Property 1 (Downward closure of auub).

Let an itemset Y be the superset of an itemset X such that . According to the downward closure property of auub, we obtain that: Thus, if , then holds for any superset of an itemset X.

Definition 8 (High average-utility upper bound itemset, HAUUBI).

An itemset X is a high average-utility upper bound itemset (HAUUBI) in the original database if it satisfies that condition: For instance, assume that the minimum average-utility threshold is set to 14.4%. Thus, the itemset (a) is a high average utility upper bound itemset (HAUUBI) since (= 68 > 244 14.4%)(= 35.13). However, the itemset (ab) is not a HAUUBI since (= 12 < 35.13).

For the incremental mining problem, we assume that several transactions are inserted into the original database. In this example, transactions for insertion are shown in

Table 3. Thus, the problem definition of this paper is defined as follows.

Problem Statement: For incremental HAUIM, it is necessary to design an efficient algorithm for transaction insertion to maintain and update the discovered information, and multiple scans of the updated database should be avoided. Thus, an itemset is considered as a HAUI if it satisfies the following condition:

where

is the updated average-utility of

X,

is the total utility in the original database

D, and

is the total utility in the modified database d. Moreover,

is a user specified minimum average-utility threshold, which can be adjusted by experts for different domains.

4. Proposed FUP-HAUIMI Algorithm with Transaction Insertion

In the past, the HUI-Miner algorithm was used to efficiently mine HUIs using an efficient UL-structure (Utility-List structure). Lin et al. then utilized the UL-structure and developed an efficient AUL-structure (Average-Utility-List structure) and designed the HAUI-Miner algorithm [

22] for mining HAUIs. The HAUI-Miner is based on the well-known

auub model to ensure the correctness and completeness for discovering patterns, and is the state-of-the-art batch algorithm. However, in real-life, databases are often updated by inserting or deleting transactions. Previous HAUIM algorithms must re-scan an updated database in batch mode to update the set of HAUIs. This process wastes computer resources. In this paper, we thus develop an incremental algorithm called FUP-HAUIMI for maintaining a database for the case of transaction insertion. The AUL-structure and the FUP concept are utilized to speed up the mining performance, and ensure the correctness and completeness for discovering HAUIs. A simple join operation is used in this paper to generate patterns for exploring the enumeration tree. Details of the developed FUP-HAUIMI algorithm are explained below.

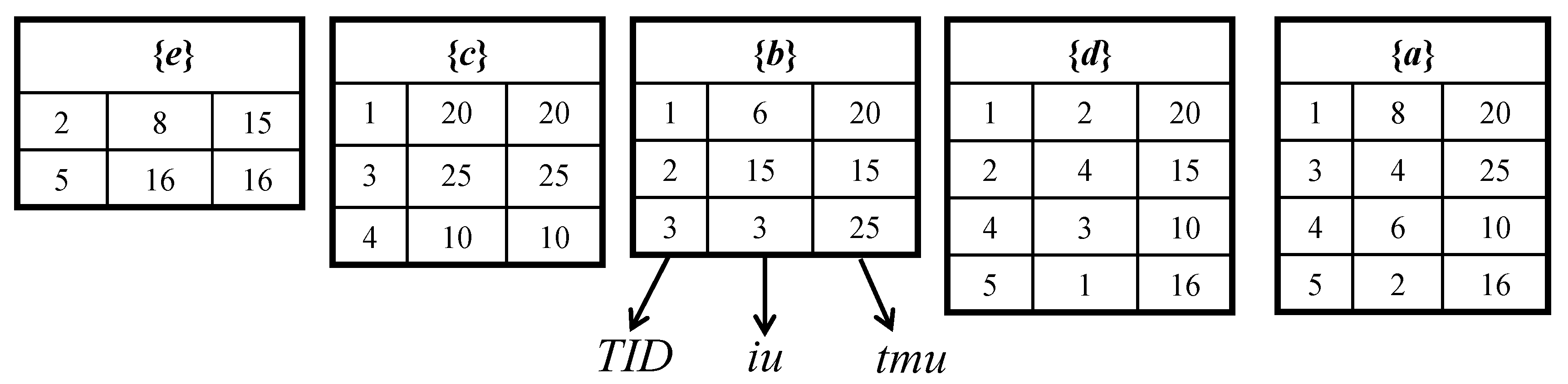

4.1. The Average-Utility-List (AUL)-Structure

The AUL-structure is utilized by the HAUI-Miner algorithm [

22]. Each itemset

X in the AUL-structure structure keeps three fields such as the transaction ID represented as (

tid), the utility of an item

X in a transaction

denoted as (

iu), and the transaction-maximum-utility of an item

X in transaction

denoted as (

tmu). For generating

k-itemsets (

), an enumeration tree representing the search space is explored using a depth-first search to determine whether the superset (

k + 1)-itemsets of

k-itemsets should be generated. If it satisfies the condition, a simple join operation is recursively performed to generate the AUL-structures of

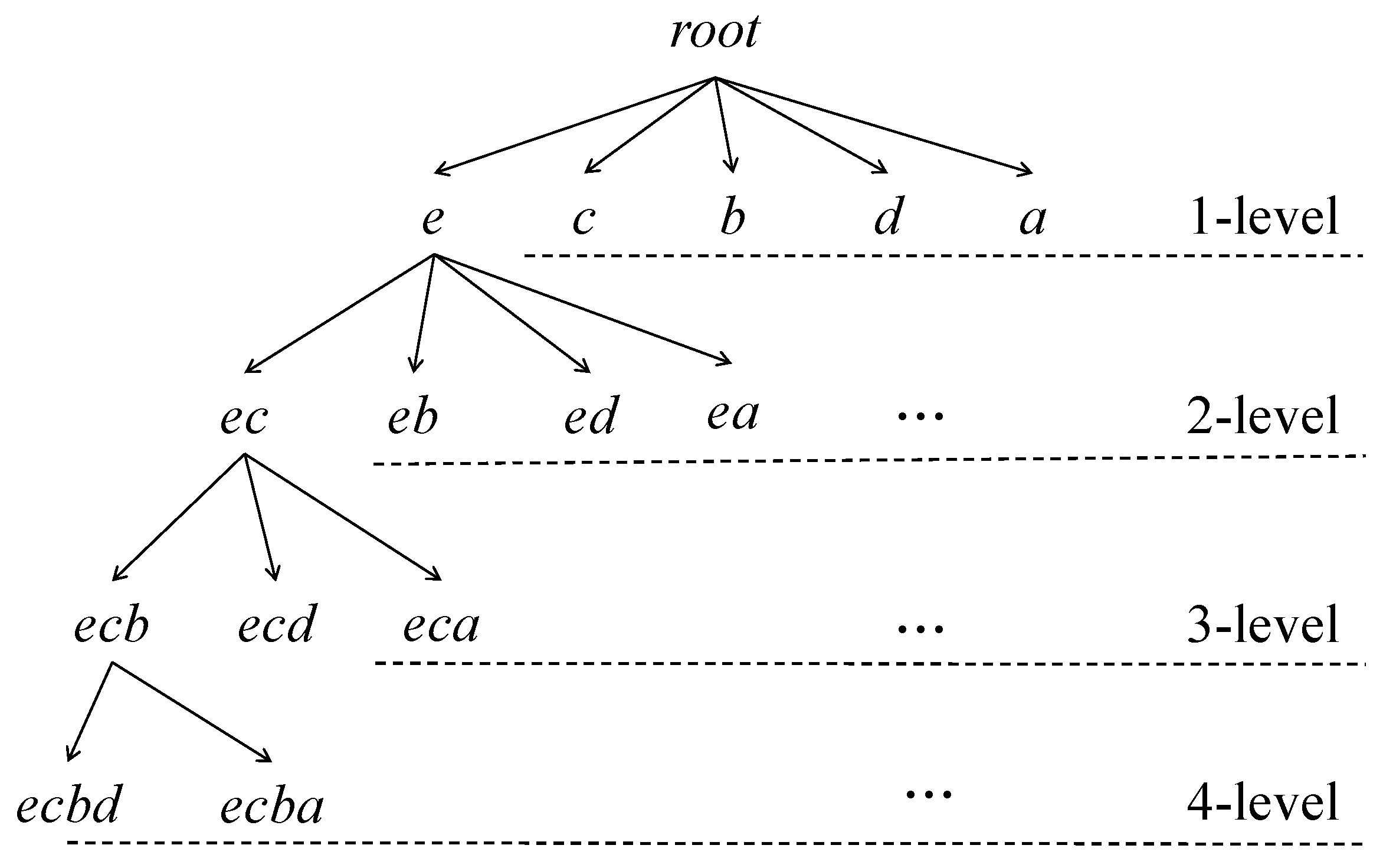

k-itemsets. This process is then recursively performed until no candidates are generated. After that, a database scan is done to find the actual HAUIs. The enumeration tree for the example database is shown in

Figure 2. Based on the AUL-structures, we can obtain the following properties.

Property 2. All the 1-HAUUIs of the original database are sorted by auub-ascending order, which is also used as the construction order for the AUL-structures.

Property 3. To ensure the correctness and completeness for mining HAUUBIs and HAUIs, the AUL-structures of all items in the inserted transactions should be constructed for later maintenance. This is reasonable since the size of the inserted transactions is much smaller than that of the original database in real-life situations.

Property 4. The auub-ascending order of 1-HAUUBIs in the updated database is almost the same as the sorted order in the original database since the size of the inserted transactions is smaller than the size of the original database. Thus, this sorting order of itemsets generally does not change much when the database is updated.

Since all 1-items of inserted transactions are kept to build the initial AUL-structures, the designed FUP-HAUIMI algorithm can efficiently find the updated HAUUBIs and HAUIs without generating candidates and is complete and correct to update HAUUBIs and HAUIs. The AUL-structures of 1-HAUUBIs obtained from the original database are shown in

Figure 3.

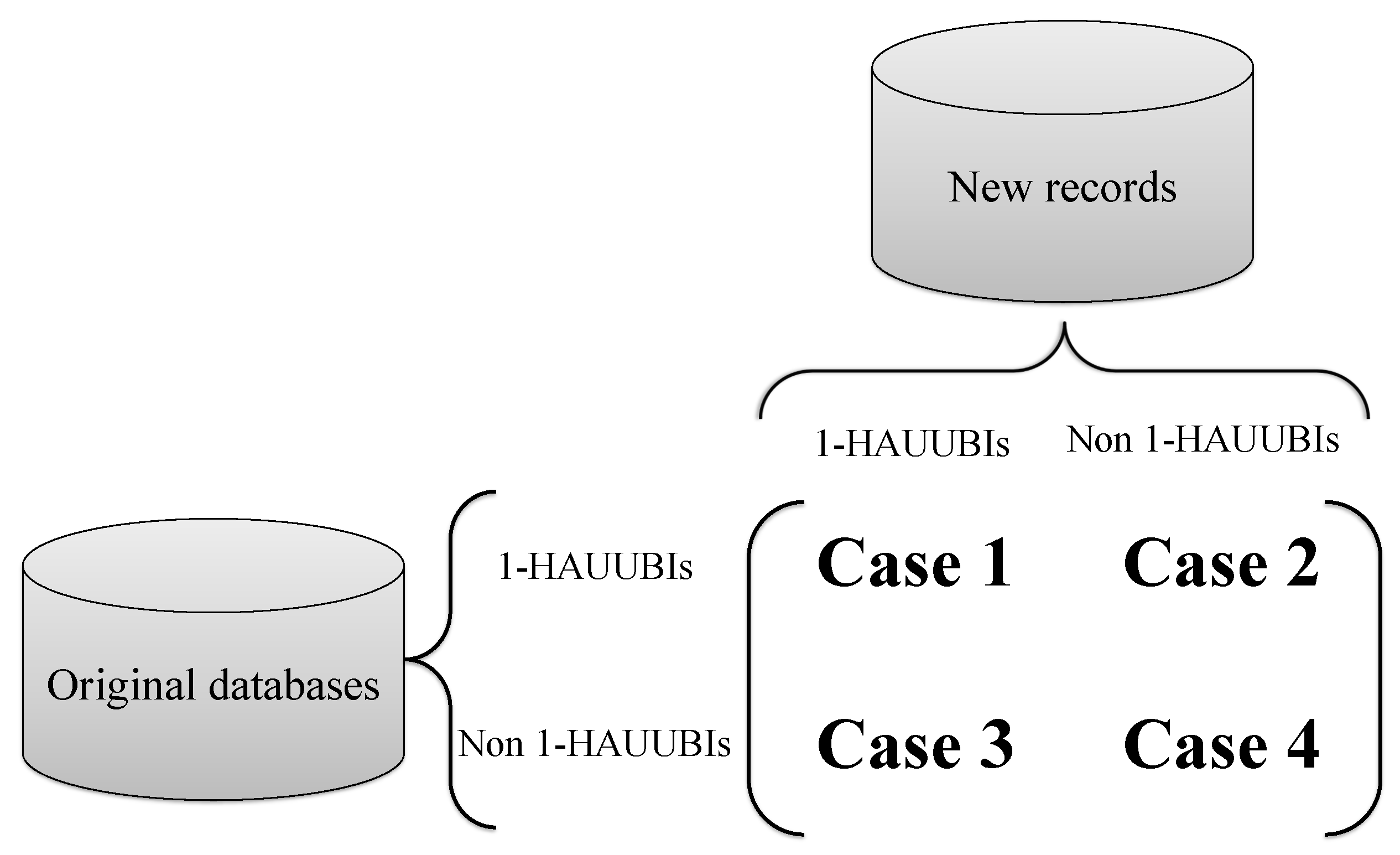

4.2. The Adapted Fast Updated Concept

The designed FUP-HAUIMI algorithm categorizes 1-HAUUBIs into four cases according to the FUP concept, which is extended from [

25]. The 1-HAUUBIs of each case are then, respectively, processed by the designed procedures to update the 1-HAUUBIs. The four cases of the adapted FUP concept are shown in

Figure 4. Thus, we can obtain the following information as:

Case 1: An itemset is a 1-HAUUBI both in the original database and in the inserted transactions. In this case, this itemset remains a 1-HAUUBI after the database is updated.

Case 2: An itemset is a 1-HAUUBI in the original database but not a 1-HAUUBI in the inserted transactions. In this case, the itemset is a 1-HAUUBI or not a 1-HAUUBI after the database is updated.

Case 3: An itemset is a non 1-HAUUBI in the original database but a 1-HAUUBI in the inserted transactions. In this case, an additional database scan is needed to obtain the average-utility of the itemset in the original database, and then update its average-utility after the database is updated.

Case 4: An itemset is a non 1-HAUUBI both in the original database and in the inserted transactions. In this case, an itemset remains a non 1-HAUUBI after the database is updated.

Based on the above cases, lemmas, proofs, and corollaries are provided as follows to ensure the correctness and completeness for discovering 1-HAUUBIs for incremental HAUIM.

Lemma 1. An itemset X is a 1-HAUUBI in the original Database (D) and inserted transactions (d). Thus, it remains a 1-HAUUBI in the updated Database (U).

Proof. Since X is a 1-HAUUBI both in (D) and (d), its and . Thus, the updated result is: = + = = t . Hence, we conclude that the itemset remains a 1-HAUUBI in the updated Database (U). ☐

Corollary 1. An itemset X remains a 1-HAUUBI in (U) if it belongs to Case 1.

Lemma 2. An itemset X is a 1-HAUUBI in (D) but a non 1-HAUUBI in (d). There are two possible situations: (1) it is a 1-HAUUBI in (U); or (2) it becomes a non 1-HAUUBI in (U).

Proof. Since an itemset X is a 1-HAUUBI in (D), it follows that . It is a non 1-HAUUBI in (d) since . There are two possible situations: (1) = ; or (2) . ☐

Corollary 2. Two situations may occur for the itemset X: (1) it remains a 1-HAUUBI in (U); or (2) it is a non 1-HAUUBI in (U) if it belongs to Case 2.

Lemma 3. If an itemset X is a non 1-HAUUBI in (D) but is a 1-HAUUBI in (d), an additional database scan is needed to obtain the . There are two possible situations: (1) it remains a non 1-HAUBBI in (U); or (2) it becomes a 1-HAUUBI in (U).

Proof. An itemset X is a non 1-HAUUBI in (D) such as , and is a 1-HAUUBI in (d) such as . Thus, we can have that: (1) = ≥; or (2) . ☐

Corollary 3. For an itemset X, two situations may occur: (1) it remains a non 1-HAUUBI in (U); or (2) it becomes a 1-HAUUBI in (U) if it appears in Case 3.

Lemma 4. If an itemset X is a non 1-HAUUBI in both (D) and (d), it remains a non 1-HAUUBI in (U).

Proof. An itemset X is a non 1-HAUUBI both in (D) and (d) such that , and . Thus, we can obtain that = . ☐

Corollary 4. An itemset X remains a non 1-HAUUBI in (U) if it appears in Case 4, which can be directly ignored since it would not affect the final rules.

Based on the above lemmas, proofs, and corollaries, the downward closure property of the auub model can still hold for incremental mining. Thus, the discovery of the updated 1-HAUUBIs is correct and complete. The FUP-HAUIMI algorithm initially constructs the AUL-structures of 1-HAUUBIs based on the original database. The AUL-structures of all items in the inserted transactions are also obtained, which can ensure the completeness and correctness of the designed maintenance approaches. When some transactions are inserted into the original database, the designed FUP-HAUIMI algorithm first divides the HAUUBIs into four cases, and the itemsets of each case are then, respectively, maintained and updated by the designed procedures. After that, the k-HAUUBIs are then checked to find their actual k-HAUIs. The pseudocode of the developed FUP-HAUIMI algorithm is provided in Algorithm 1.

| Algorithm 1: Proposed FUP-HAUIMI algorithm |

![Applsci 08 00769 i001]() |

The designed Algorithm 1 first scans the database by considering items in the newly inserted transactions to construct their AUL-structures (Lines 1–4 of Algorithm 1). After that, the AUL-structures built from the original database and inserted transactions are merged (Line 5 of Algorithm 1). Based on the merged AUL-structures, if the average-utility of an itemset is no less than the updated minimum average-utility count, it is considered as a high average-utility itemset (Lines 7–8 of Algorithm 1), and then its supersets are considered using a depth-first search according to the enumeration tree. This process is then recursively repeated until no tree nodes are generated (Lines 9–13 of Algorithm 1). In the Merge function, if an item does not exist in the original database (Line 4 of Algorithm 2), the original database is then rescanned to construct the AUL-structure of the item. The average-utility of the selected itemsets is then calculated (Lines 14–16 of Algorithm 1). After that, the algorithm terminates. The updated patterns have been obtained.

| Algorithm 2: Merge (D.AULs, d.AULs) |

![Applsci 08 00769 i002]() |

The Construct function shown in Algorithm 3 is used to determine whether the supersets of an itemset must be maintained using the set of tids to generate combinations (Lines 1–3 of Algorithm 3). The information about utilities (Lines 4–6 of Algorithm 3) are updated, and the updated AUL-structures are then returned (Lines 7–8 in Algorithm 3).

| Algorithm 3: Construct (X.AUL, Y) |

![Applsci 08 00769 i003]() |

5. Experimental Evaluation

In this section, an evaluation of the runtime, memory usage, number of patterns and scalability of the proposed algorithm is presented. The designed FUP-HAUIMI algorithm is compared with the state-of-the-art HAUI-Miner algorithm running in batch mode [

22], and the state-of-the-art IHAUPM algorithm [

29] running in incremental mode. All the algorithms were written in Java, and executed on an Intel(R) Core(TM) i7-6700 4.00 GHz processor with 8 GB of main memory. Windows 10 was used as operating system for running the experiments. Six datasets were used, including four real-life datasets [

30] and two synthetic datasets. The simulated datasets were generated by the IBM Quest Synthetic data generator [

31]. A two-phase simulation model [

10] is used to generate the internal and external utilities. To describe the datasets,

represents the number of transactions in the dataset;

is the number of items in the dataset;

AveLen indicates the average-length of the transactions in the dataset;

MaxLen denotes the maximal length among all transactions in the dataset; and

Type indicates whether the dataset is sparse or dense. Characteristics of the datasets are shown in

Table 4.

Experiments were performed using various minimum high average-utility thresholds (TH) values and different insertion ratios (IR). The IR is the percentage of transactions in the original dataset that is inserted for incremental maintenance. Results are presented next.

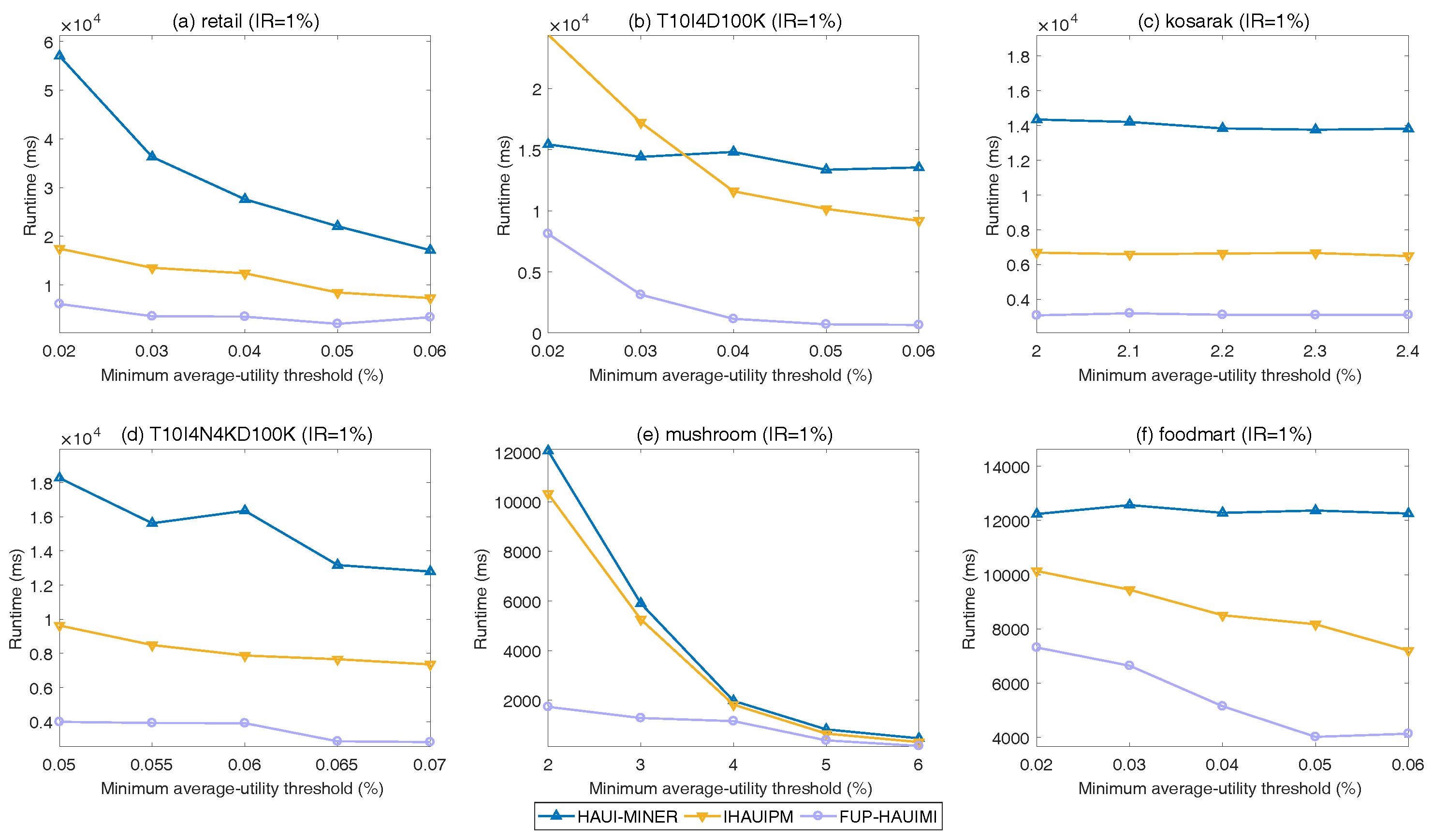

5.1. Runtime

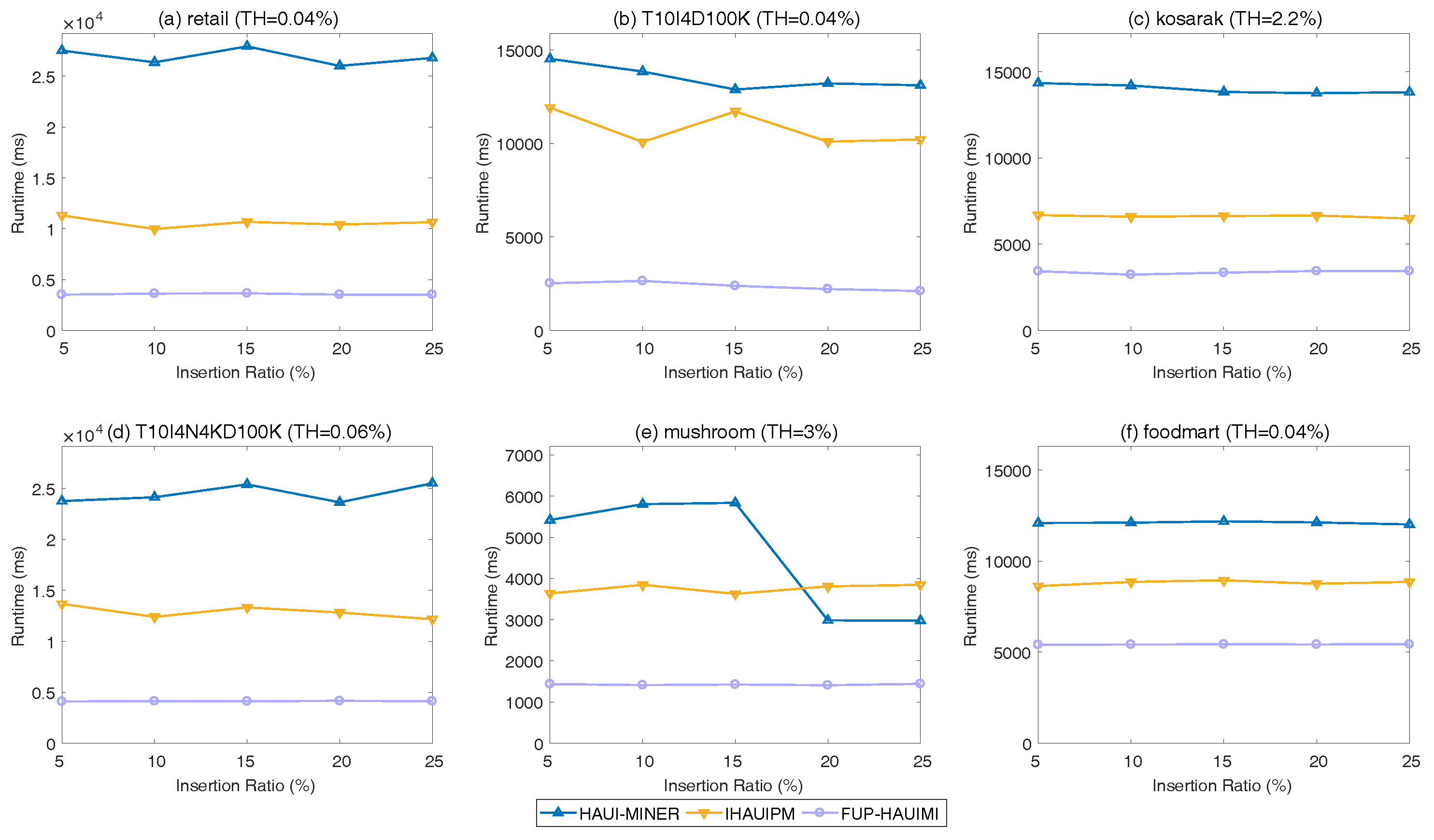

In the experiments, the runtime of three algorithms was measured for various TH values and a fixed IR (= 1%), as shown in

Figure 5. Since the characteristics of the datasets used in the experiments are varied, different TH values are used for different datasets.

In

Figure 5, it is obvious that the designed FUP-HAUIMI algorithm has better performance than the other two algorithms for the six datasets. As the TH value is increased, the runtimes of the three algorithms decrease. This is reasonable since when the TH is increased, fewer HAUIs are discovered. Hence, less runtime is required for the three algorithms. Besides, it can be seen that the designed FUP-HAUIMI algorithm remains stable for various TH values for some datasets such as

Figure 5a,c,d. The reason is that, although the TH is increased, most of the knowledge was discovered for lower minimum average-utility threshold values. It is also worth to mention that HAUI-Miner is the state-of-the-art algorithm for mining HAUIs based on the

auub model, and that IHAUIM is the state-of-the-art algorithm for incremental HAUIM using a tree structure. Thus, it can be concluded that the designed FUP-HAUIMI algorithm performs well for handling a dynamic database with transaction insertion. This is because the designed FUP-HAUIMI algorithm only handles small parts of the inserted transactions, and some itemsets in Case 3 can be ignored. Hence, the runtime is reduced. Thanks to the efficiency of the AUL-strcuture, it is easy to calculate and obtain the required HAUIs. Experiments are also conducted for various IR values with a fixed TH value for the six datasets. Results are shown in

Figure 6.

As shown in

Figure 6, it can be seen that the proposed FUP-HAUIMI algorithm still outperforms the HAUI-Miner and the IHAUPM algorithms. As the IR is increased, it is obvious that all the algorithms remains stable, and especially the designed FUP-HAUIMI algorithm. The reason is that inserted transactions are extracted from the original database according to the percentage of IR, the items in the inserted transactions are sometimes similar to the original one, most knowledge was already discovered, and only fewer itemsets are updated. However, the proposed FUP-HAUIMI still reduces the runtime and outperforms the other algorithms for all datasets. Generally, the designed FUP-HAUIMI helps to reduce the mining cost since the discovered knowledge can be efficiently handled and maintained. In addition, the multiple database scans can be successfully voided in most cases.

5.2. Memory Usage

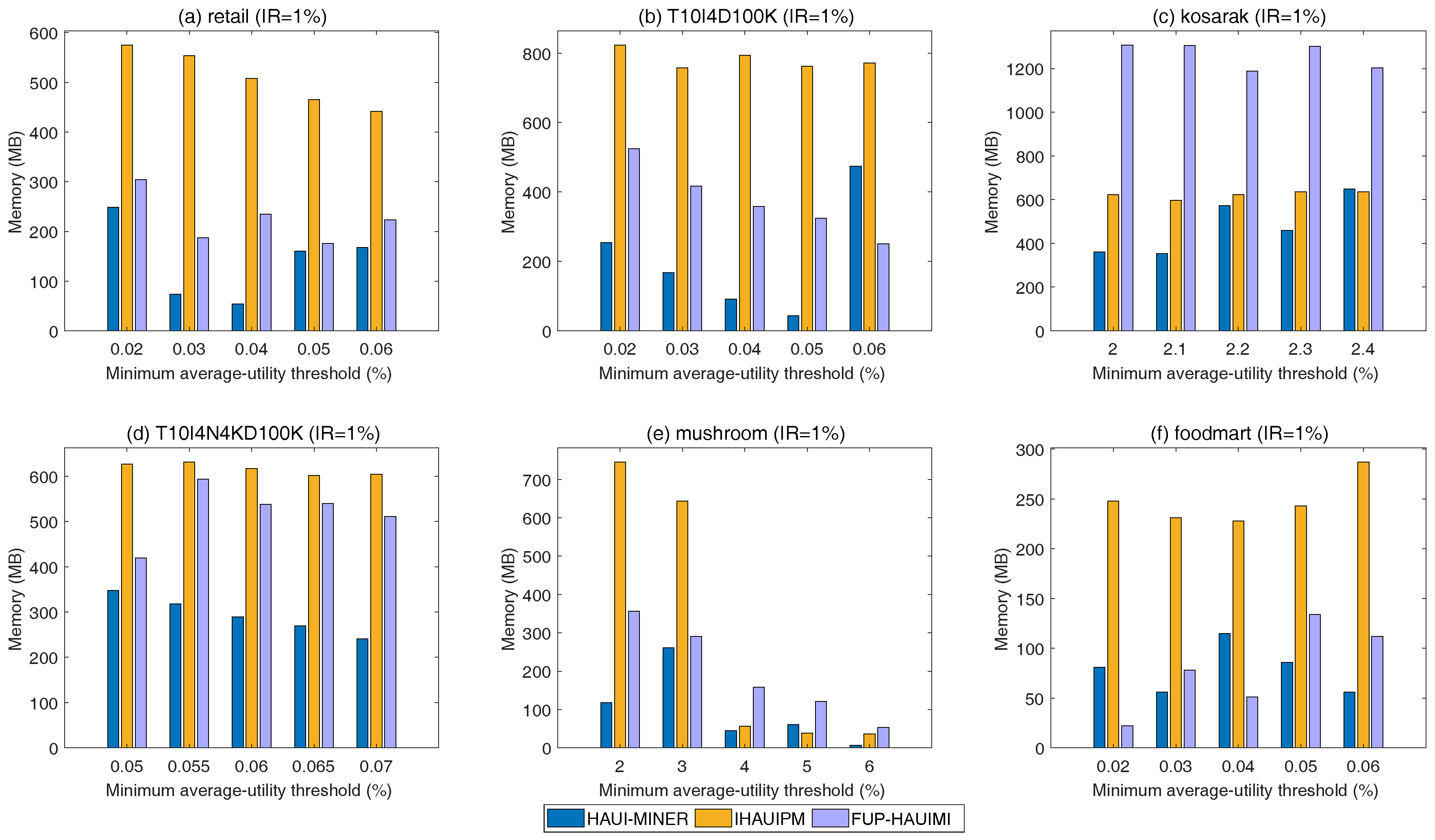

In this section, experiments in terms of memory usage are conducted for various TH values and a fixed IR value. Results are shown in

Figure 7.

In

Figure 7, it can be seen that the HAUI-Miner algorithm outperforms others in terms of memory usage. The reason is that HAUI-Miner uses the utility-list structure to maintain the discovered information, which is a compressed and efficient data structure. Thus, it generally needs less memory than the IHAUPM algorithm since IHAUPM employs a tree structure for incremental maintenance. Besides, the HAUI-Miner algorithm does not need to keep extra information for maintenance. When the size of the database is changed, the HAUI-Miner algorithm re-scans the database to obtain the updated information, which is of course costly but requires less memory. For incremental maintenance, the IHAUPM algorithm generally needs more memory than the developed FUP-IHAUIMI algorithm except for

Figure 7c. This is reasonable since IHAUPM uses the HAUP-tree structure to maintain information, which requires more memory than the AUL-structures of the FUP-HAUIMI algorithm. Moreover, the kosarak dataset is a very sparse dataset with a very long transactions. In this case, the developed FUP-HAUIMI may need to generate more AUL-structures to keep the necessary information for the later mining progress. The results for various IR values and a fixed TH are shown in

Figure 8.

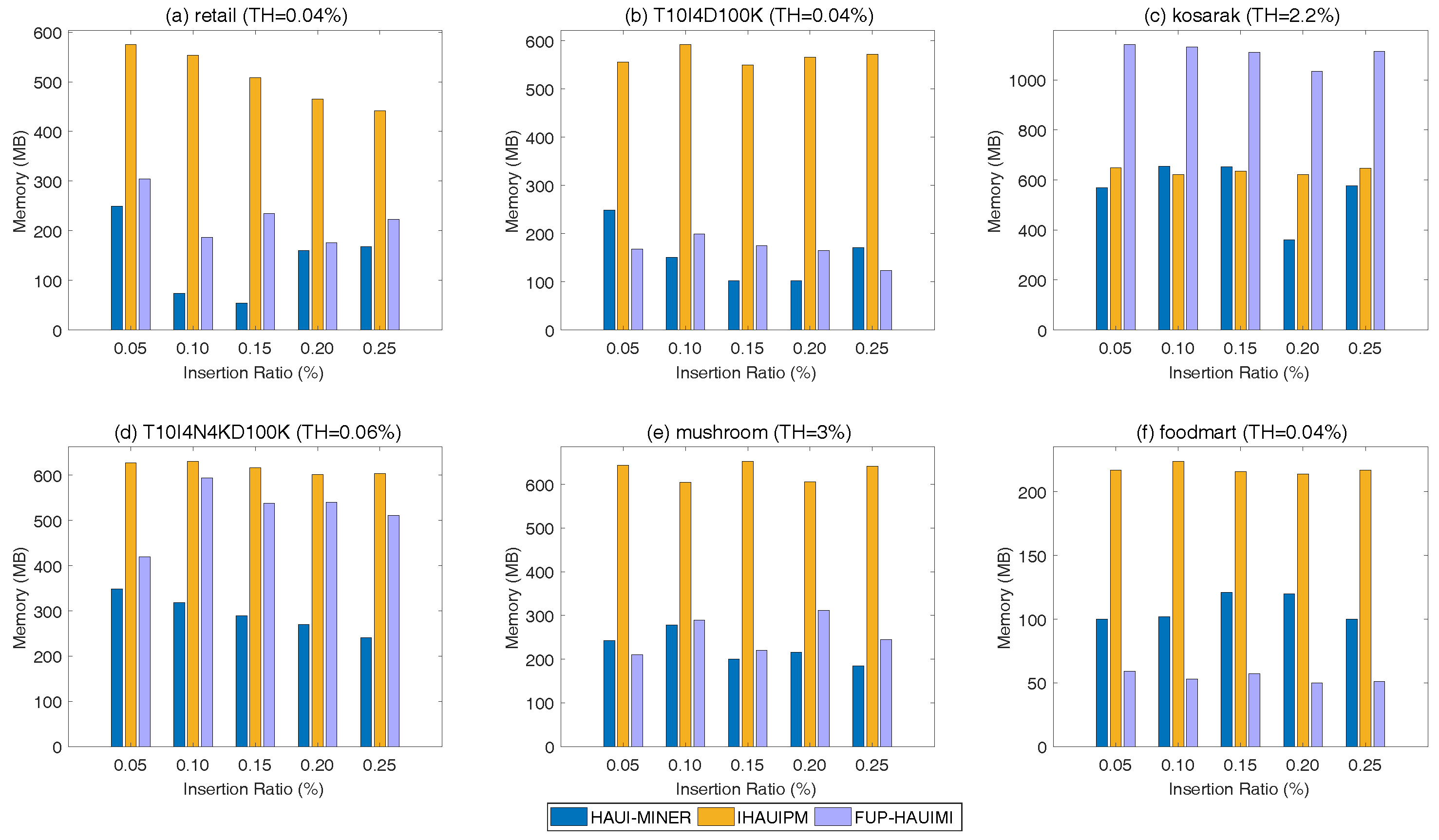

In

Figure 8, similar results to those in

Figure 7 are observed. HAUI-Miner requires less memory usage, and IHAUPM requires more memory than the developed FUP-HAUIMI algorithm except for the kosarak dataset. Thus, we can conclude that the AUL-structure may be not useful to reduce the memory usage while performing the incremental data mining for very sparse datasets with very long transactions. Generally, the designed FUP-HAUIMI outperforms IHAUPM for incremental mining of high average-utility itemsets, especially the AUL-structures can successfully keep the necessary information than that of the tree-based structures.

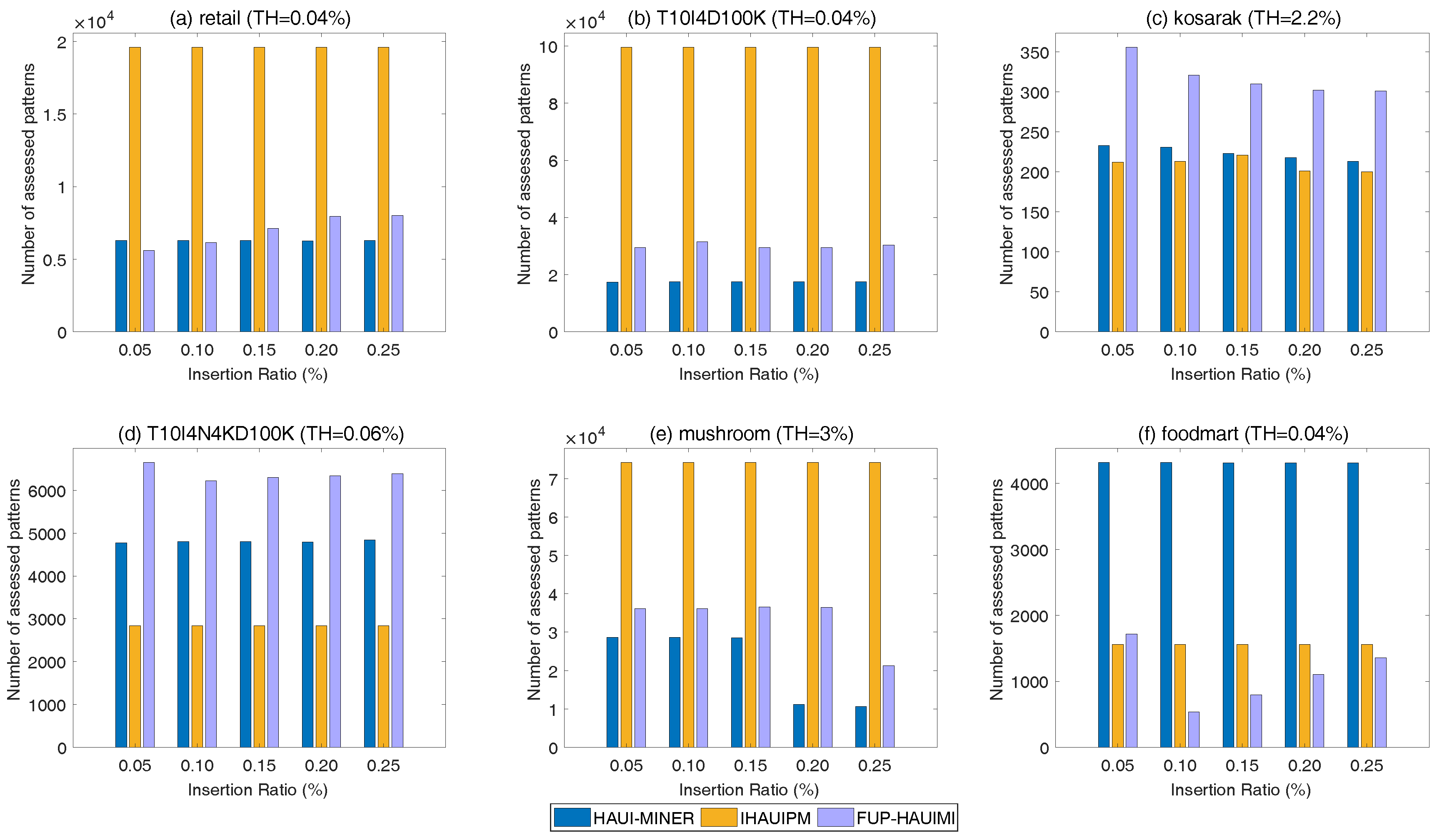

5.3. Number of Patterns

In this section, experiments are presented to assess the number of candidate patterns produced for discovering the actual HAUIs. The results for various TH values and a fixed IR are shown in

Figure 9.

In

Figure 9, we can see that the candidate patterns considered by the proposed FUP-HAUIMI algorithm are much fewer than that of the HAUI-Miner and IHAUPM algorithms except in

Figure 9d. The reason is that the T40I10D100K dataset is dense. Thus, many transactions have the same items for maintenance. Thus, the proposed FUP-HAUIMI algorithm may need to check more patterns in the enumeration tree to check if the supersets must be generated. However, in general cases, the FUP-HAUIMI algorithm still checks less patterns than other algorithms. This shows that the AUL-structures and the adapted FUP concept can successfully reduce the cost of incremental mining of average-utility itemsets. Results for various DR values and a fixed TH are shown in

Figure 10.

Again, we can observe that the FUP-HAUIMI algorithm needs to examine more candidates in very sparse and dense datasets such as the datasets of

Figure 10c,d. However, for the other datasets such as the datasets of

Figure 10a,b,e, the proposed FUP-HAUIMI algorithm outperforms the IHAUPM algorithm, and even has the best results in

Figure 10f. However, in terms of runtime performance, the proposed FUP-HAUIMI algorithm outperforms the other algorithms. Thanks to the efficient FUP concept and the AUL-structures, the runtime of the FUP-HAUIMI can be greatly reduced. From the above results, we can thus conclude that although extra memory usage is required and more candidate patterns are checked by the designed FUP-HAUIMI algorithm, this latter can still achieve better efficiency and effectiveness in most cases except for a very sparse dataset with long transactions or for a very dense dataset.

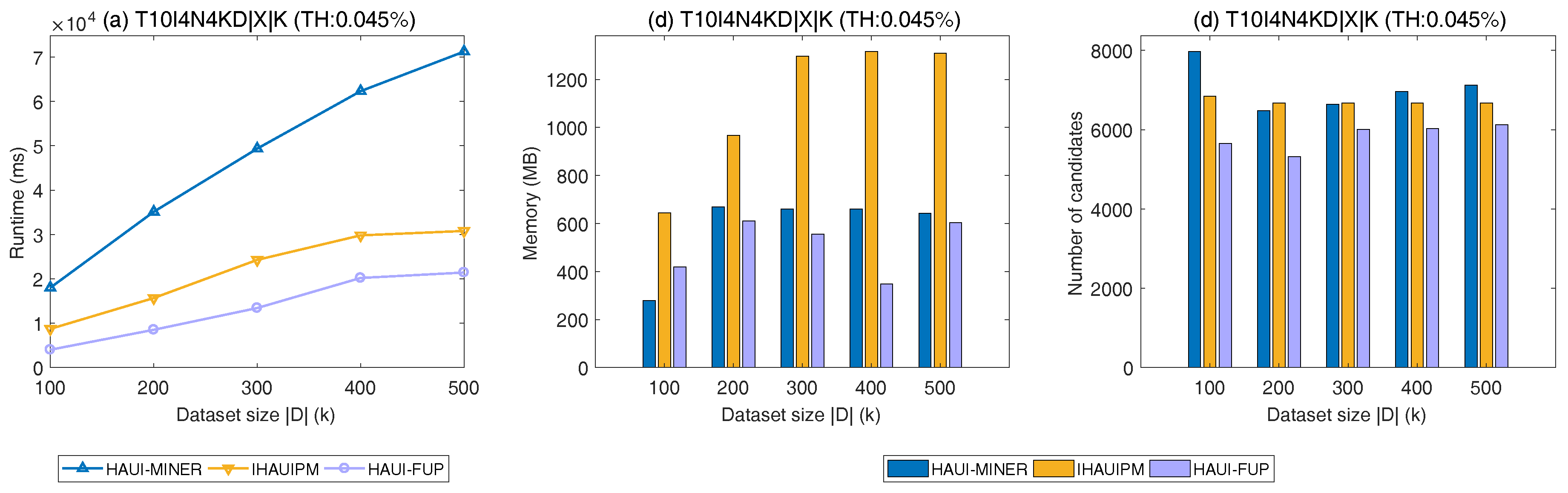

5.4. Scalability

In this section, the scalability of three algorithms were compared on a synthetic dataset, which is generated by the IBM Generator [

31]. The size of the database (scalability) is varied from 100 K to 500 K transactions, with a 100 K increment. The results are shown in

Figure 11.

It can be observed in

Figure 11 that the proposed FUP-HAUIMI algorithm has better scalability than HAUI-Miner and IHAUPM. As

is increased, the runtime of the HAUI-Miner increases since the database needs to be scanned multiple times. However, the runtime of FUP-HAUIMI only slightly increases. In addition, we can see that for these datasets, the designed FUP-HAUIMI algorithm performs better than the other two algorithms in terms of runtime, memory usage, and the number of assessed patterns. Thanks to the AUL-structures, the memory usage can be greatly reduced, which can be seen in

Figure 11b. In addition, the FUP concept achieves good efficiency since it can reduce the number of assessed patterns, as it can be seen in

Figure 11c. Based on the adapted FUP concept, the proposed FUP-HAUIMI algorithm can evaluate fewer patterns and the correctness and completeness of the algorithm is ensured for mining the updated HAUIs. Generally, the proposed FUP-HAUIMI algorithm has better performance than that of the state-of-the-art batch mode HAUI-Miner algorithm and the incremental IHAUPM algorithm.