1. Introduction

Clouds are aerosols consisting of large amounts of frozen crystals, minute liquid droplets, or particles suspended in the atmosphere (https://www.weather.gov/). Their size, type, composition and movement reflect atmospheric motion. In particular, cloud type, one of the crucial macroscopic cloud parameters in cloud observation, plays a vital role in weather prediction and climate change research [1]. Currently, ground-based cloud images are classified by qualified professionals, which consumes a large quantity of labor and material resources. Therefore, developing automatic techniques for ground-based cloud recognition is vital. To date, various devices have been developed for digitizing ground-based clouds, for example the whole sky imager (WSI) [2], the infrared cloud imager (ICI) [3], and the whole-sky infrared cloud-measuring system (WSIRCMS) [4]. With the help of these devices, various methods for automatic ground-based cloud recognition [5,6,7] have been proposed. However, the cloud features used in these methods are not discriminative enough to represent cloud images.

In practice, the appearance of clouds can be regarded as a type of natural texture [8], which makes it reasonable to use texture descriptors to portray cloud appearance. Inspired by the success of local features in texture recognition [9,10,11,12], several local features have been proposed for recognizing ground-based cloud images [13,14]. Methods of this kind involve two steps: first, the cloud image is described as a feature vector using local features; second, the Euclidean distance or chi-square distance is used in the matching or recognition process.
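The second step of this pipeline can be illustrated with a minimal sketch. It assumes each cloud image has already been reduced to a normalized histogram; the function names, the toy histograms and the particular chi-square form (with the 1/2 factor and a small `eps` guard) are illustrative assumptions, not taken from the cited methods.

```python
import numpy as np

def euclidean_distance(p, q):
    """Euclidean distance between two feature vectors."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sqrt(np.sum((p - q) ** 2)))

def chi_square_distance(p, q, eps=1e-12):
    """One common form of the chi-square distance between histograms.

    eps (an assumption of this sketch) avoids division by zero in empty bins.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(0.5 * np.sum((p - q) ** 2 / (p + q + eps)))

def nearest_neighbor_label(query, train_features, train_labels,
                           distance=chi_square_distance):
    """Return the label of the training vector closest to the query."""
    distances = [distance(query, feature) for feature in train_features]
    return train_labels[int(np.argmin(distances))]

# Toy histograms standing in for local-feature descriptors (hypothetical data).
train_features = np.array([[0.7, 0.2, 0.1],
                           [0.1, 0.2, 0.7]])
train_labels = ["clear sky", "cumulus"]
query = np.array([0.6, 0.3, 0.1])
predicted = nearest_neighbor_label(query, train_features, train_labels)
```

Passing `distance=euclidean_distance` switches the matching step to the Euclidean metric without changing the rest of the code.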

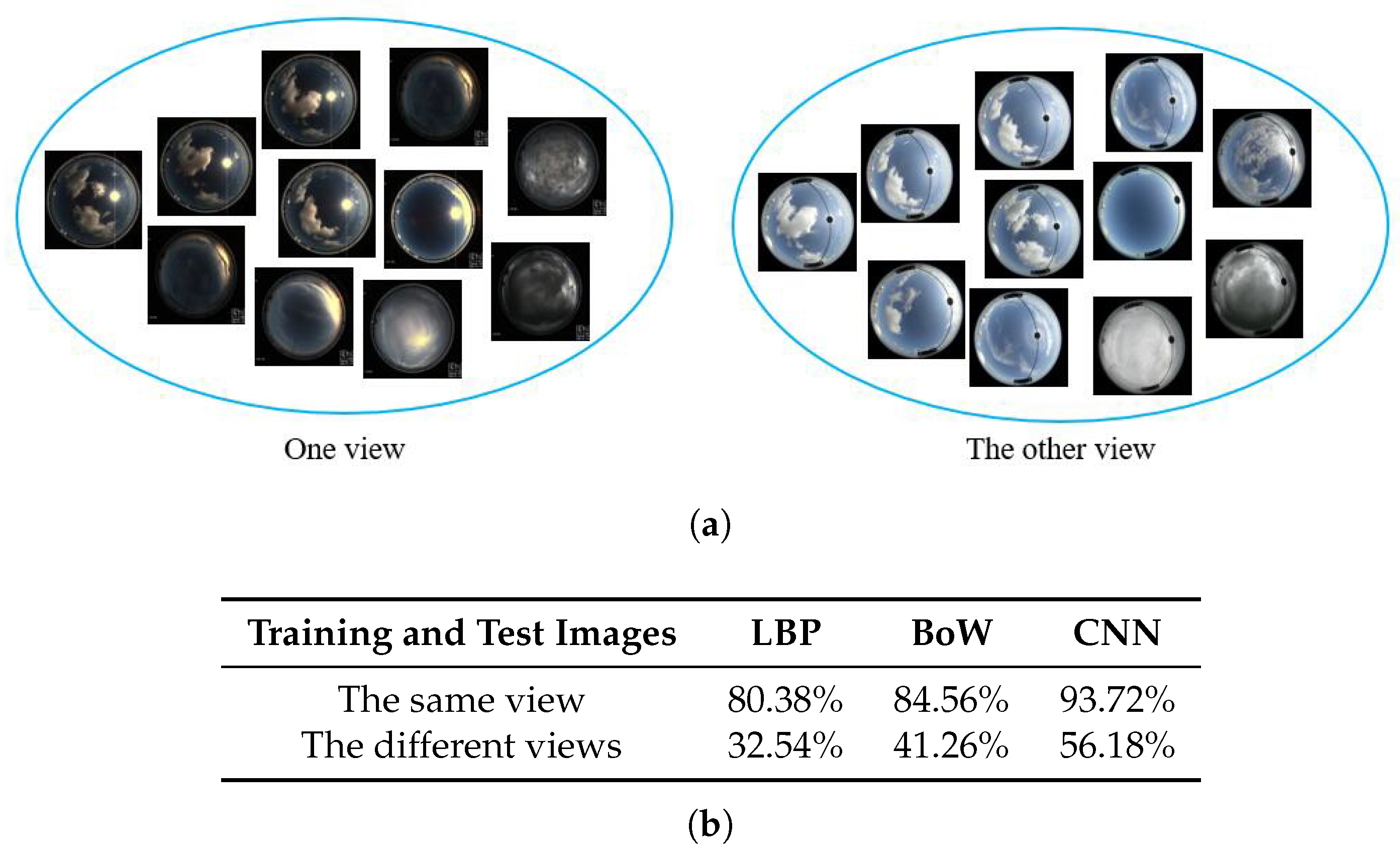

Existing methods focus on recognizing cloud images that originate from similar views. They are implemented under the condition that the training and test images come from the same feature space. Consequently, they are not suitable for multi-view cases, because cloud images captured from different views belong to different feature spaces. In practice, we often handle cloud images in two views. For instance, the cloud images collected by different weather stations vary in image resolution, illumination, camera settings, occlusion and so on. Such cloud images are actually distributed in different feature spaces. As illustrated in Figure 1a, the cloud images are captured in multiple views and vary greatly in appearance. The competitive methods for ground-based cloud recognition, i.e., local binary patterns (LBP) [15], the bag-of-words (BoW) model [16], and the convolutional neural network (CNN) [17], generally achieve promising results when training and testing in the same feature space, while their performance degrades significantly when training and testing in different feature spaces, as shown in Figure 1b. Therefore, we hope to employ cloud images from one view (feature space) to train a classifier, which is then used to recognize cloud images from other views (feature spaces). This is a kind of view shift problem, and we define it as multi-view ground-based cloud recognition. It is very common worldwide. For instance, to obtain complete weather information, it is essential to set up more new weather stations to capture cloud images. However, new weather stations have too few labelled cloud images to train a robust classifier, and it is unrealistic to expect users to label cloud images for each new station, since this is time-consuming and a waste of manpower. Considering that many labelled cloud images have accumulated at established weather stations, we aspire to employ these labelled images to train a classifier that can recognize cloud images at new weather stations.

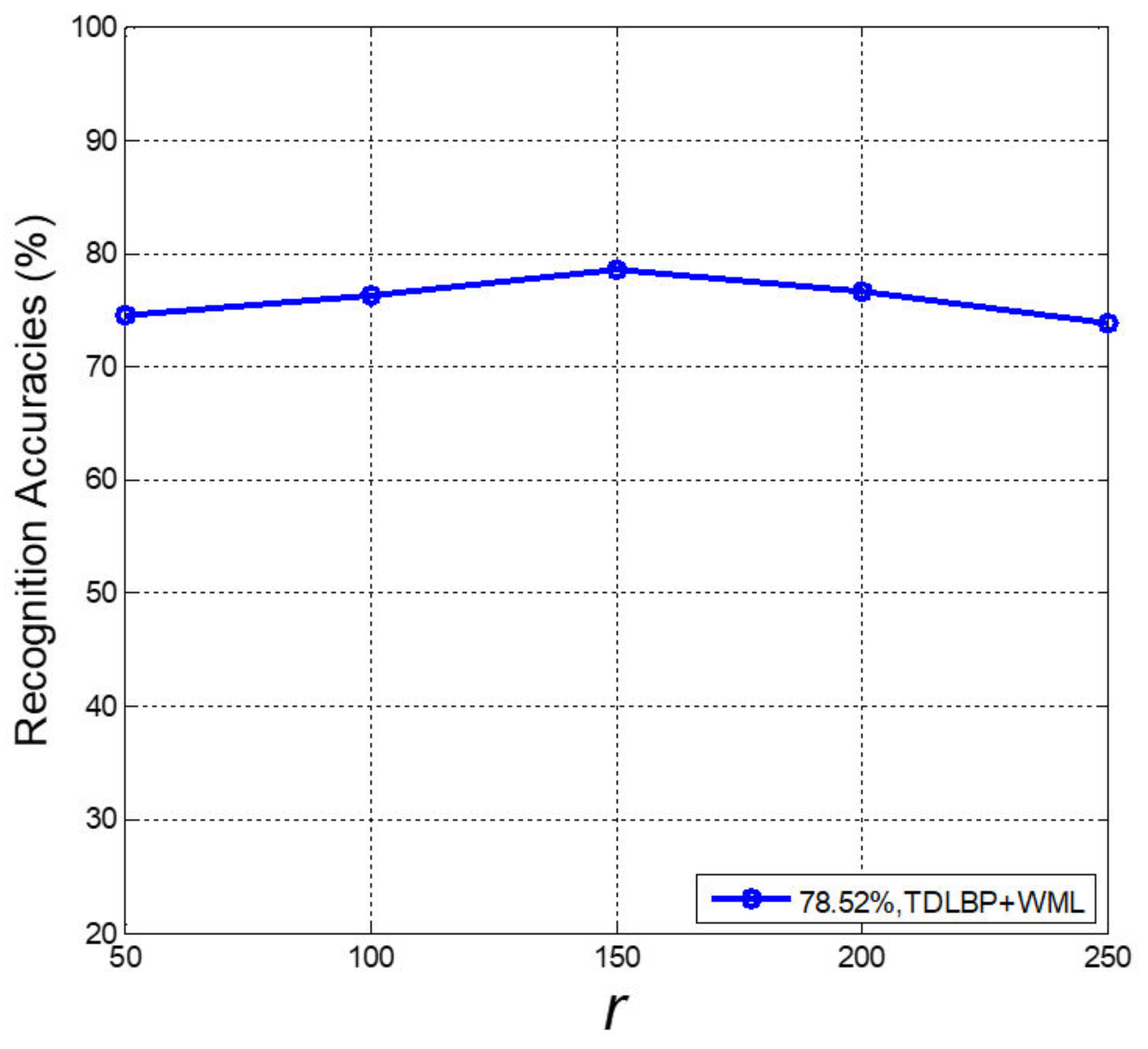

In this paper, we propose a novel multi-view ground-based cloud recognition method by transferring deep visual information. The cloud features used in existing methods are not discriminative enough to describe cloud images under view shift; therefore, we propose an effective feature representation named transfer deep local binary patterns (TDLBP). Concretely, we first train a CNN model and propose part summing maps (PSMs) based on all feature maps of one convolutional layer. We then extract LBP in local regions of the PSMs, and each local region is represented as a histogram. Finally, in order to adapt to view shift, we find the maximum occurrence to obtain a stable representation.
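The following is a rough sketch of how such a pipeline could be assembled. It is not the exact TDLBP formulation. Several choices are assumptions made only for illustration: the feature maps are a random array standing in for one convolutional layer's output, PSMs are formed by summing contiguous groups of channels, the basic 8-neighbor LBP is used, and "maximum occurrence" is interpreted as a bin-wise maximum over the regional histograms. All function names are hypothetical.

```python
import numpy as np

def part_summing_maps(feature_maps, num_parts):
    """Sum contiguous channel groups of a (C, H, W) array (assumed PSM form)."""
    groups = np.array_split(feature_maps, num_parts, axis=0)
    return np.stack([group.sum(axis=0) for group in groups])

def lbp_codes(image):
    """Basic 8-neighbor LBP codes (0..255) for the interior pixels of a 2-D map."""
    height, width = image.shape
    center = image[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = image[1 + dy:height - 1 + dy, 1 + dx:width - 1 + dx]
        codes |= (neighbor >= center).astype(np.int64) << bit
    return codes

def region_histograms(codes, grid=2, bins=256):
    """Normalized LBP histogram for each cell of a grid x grid partition."""
    histograms = []
    for rows in np.array_split(codes, grid, axis=0):
        for block in np.array_split(rows, grid, axis=1):
            hist = np.bincount(block.ravel(), minlength=bins).astype(float)
            histograms.append(hist / max(hist.sum(), 1.0))
    return np.stack(histograms)

def tdlbp_like_descriptor(feature_maps, num_parts=4, grid=2):
    """Concatenate, per PSM, the bin-wise maximum over regional histograms."""
    descriptor = []
    for psm in part_summing_maps(feature_maps, num_parts):
        hists = region_histograms(lbp_codes(psm), grid)
        descriptor.append(hists.max(axis=0))  # assumed reading of "maximum occurrence"
    return np.concatenate(descriptor)

# Random activations standing in for one convolutional layer (hypothetical data).
rng = np.random.default_rng(0)
feature_maps = rng.random((16, 12, 12))
descriptor = tdlbp_like_descriptor(feature_maps, num_parts=4, grid=2)
```

Taking a maximum over regions makes the descriptor independent of where in the map a pattern occurs, which is one plausible way to gain stability when the viewpoint changes.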

After cloud images are represented as feature vectors, we compute the similarity between feature vectors to classify ground-based cloud images. Classical distance metrics are predefined, such as the Euclidean distance [

18], chi-square metric [

13] and quadratic-chi metric [

19]. Hence, we propose a learning-based method called weighted metric learning (WML) which aims to utilize sample pairs to learn a transformation matrix. In

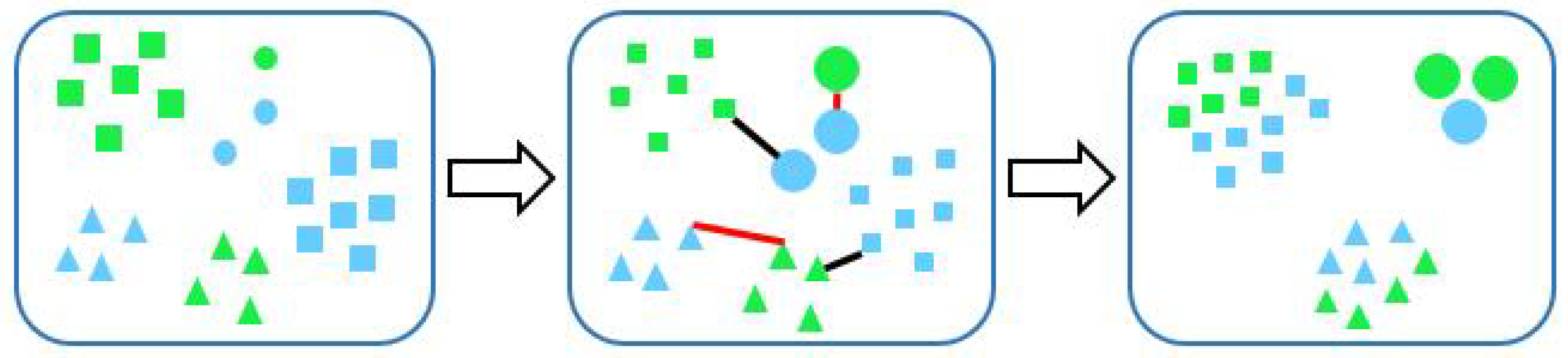

Figure 2, green and blue indicate two kinds of feature spaces. Two samples from both feature spaces comprise a sample pair. Here, the red lines denote similar pairs, while black lines denote dissimilar pairs. In practice, the number of cloud images in each category greatly differs. For example, there are many clear sky images as the clear sky appears frequently, while there are few images of altocumulus which has a low probability of occurrence. There exists an unbalance problem of sample pairs when we learn the transformation matrix. Hence, to avoid the learning process being dominated by sample pairs in which clouds appear frequently, and neglecting limited sample pairs in which clouds occur rarely, we propose a weighted strategy for metric learning. We assign a corresponding weight for sample pairs in each category. Thus, we assign a small weight to sample pairs that possess a large number (squares in

Figure 2) and assign a large weight to sample pairs that possess a small number (circles in

Figure 2). Finally, we utilize the nearest neighborhood classifier, where the distances are determined by the proposed distance metric, to classify cloud images which are from another feature space.
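To make the weighting idea concrete, here is a minimal sketch of one possible scheme. Inverse-frequency weighting is an assumption chosen for illustration and is not necessarily the rule used in WML. The function name `category_weights` and the toy category counts are hypothetical.

```python
from collections import Counter

def category_weights(pair_categories):
    """Inverse-frequency weight per category (an assumed weighting rule).

    Weights are scaled so that every category contributes the same total
    weight and the weights summed over all pairs equal the number of pairs.
    """
    counts = Counter(pair_categories)
    total_pairs = len(pair_categories)
    num_categories = len(counts)
    return {category: total_pairs / (num_categories * count)
            for category, count in counts.items()}

# Hypothetical imbalance: many clear sky pairs, few altocumulus pairs.
pair_categories = ["clear sky"] * 8 + ["altocumulus"] * 2
weights = category_weights(pair_categories)
```

In this toy case each clear sky pair receives weight 0.625 and each altocumulus pair receives weight 2.5, so both categories carry a total weight of 5 in the learning objective.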

The rest of this paper is organized as follows. Section 2 presents the related work, including feature representation for ground-based cloud recognition and metric learning. The details of the proposed TDLBP and WML are introduced in Section 3. In Section 4, we conduct a series of experiments to verify the proposed method. Section 5 summarizes the paper.

2. Related Work

In recent years, researchers have developed a number of algorithms for ground-based cloud recognition. The co-occurrence matrix and edge frequency were introduced in [5] to extract local features for describing cloud images, and five different sky conditions were recognized. The work in [20] extended this to classify cloud images into eight sky conditions by utilizing the Fourier transform and statistical features. Since the BoW model is an effective algorithm for texture recognition, some extensions of it [21,22] were proposed. Since the appearance of clouds is a kind of natural texture, Sun et al. [23] employed LBP to classify infrared cloud images. Liu et al. [19] proposed illumination-invariant completed local ternary patterns (ICLTP), which can effectively handle illumination variations. They subsequently proposed the salient LBP (SLBP) [13] to capture descriptive cloud information. The desirable property of SLBP is its robustness to noise. However, these features are not robust to view shift when describing cloud images.

Recently, inspired by the success of convolutional neural networks (CNNs) in image recognition [17,24], Ye et al. [25] first proposed to apply CNNs to ground-based cloud recognition. They employed the Fisher Vector (FV) to encode the last convolutional layer of CNNs, and they further proposed to extract deep convolutional visual features to represent cloud images in [26]. Shi et al. [27] employed deep convolutional activations-based features (DCAFs) to describe cloud images. These aforementioned methods showed promising recognition results when trained and tested on the same feature space. However, like the hand-crafted features above, they are not robust to view shift.

In the recognition procedure, similarities or distances between two feature vectors must be computed, and many predefined metrics cannot capture the desired topology. A sought-after alternative is to apply metric learning in place of these predefined metrics. The key idea of metric learning is to construct a Mahalanobis distance in which a transformation matrix is applied to compute the distance between a sample pair. Since metric learning has shown remarkable performance in various fields, such as image retrieval and classification [28], face recognition [29,30,31] and human activity recognition [32,33], we apply the framework of metric learning to ground-based cloud recognition while also considering the sample imbalance problem.
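As a brief illustration of the Mahalanobis form mentioned above, the sketch below computes the squared distance between a sample pair after applying a transformation matrix. The symbol `L` for the transformation matrix, the function name and the toy values are assumptions of this sketch. In metric learning `L` would be learned from sample pairs, whereas here it is simply fixed by hand.

```python
import numpy as np

def mahalanobis_squared(x, y, L):
    """Squared distance ||L (x - y)||^2, i.e. (x - y)^T M (x - y) with M = L^T L."""
    diff = np.asarray(L, dtype=float) @ (np.asarray(x, dtype=float)
                                         - np.asarray(y, dtype=float))
    return float(diff @ diff)

x = np.array([1.0, 2.0])
y = np.array([4.0, 6.0])

# With the identity matrix the metric reduces to the squared Euclidean distance.
d_identity = mahalanobis_squared(x, y, np.eye(2))

# A hand-picked matrix that stretches the first feature dimension (toy values).
L = np.array([[2.0, 0.0],
              [0.0, 1.0]])
d_scaled = mahalanobis_squared(x, y, L)
```

Because `M = L^T L` is positive semidefinite by construction, parameterizing the metric through `L` keeps the learned distance non-negative.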

5. Conclusions

In conclusion, we have proposed TDLBP + WML for multi-view ground-based cloud recognition. Specifically, we have proposed a novel feature representation called TDLBP, which is robust to view shift, such as variances in locations, illuminations, resolutions and occlusions. Furthermore, since the number of cloud images differs across categories, we have proposed WML, which assigns different weights to each category when learning the transformation matrix. We have verified TDLBP + WML with a series of experiments on three cloud datasets, i.e., MOC_e, CAMS_e, and IAP_e. Compared to other competitive methods, TDLBP + WML achieves better performance.