Optic Disc Detection from Fundus Photography via Best-Buddies Similarity

Abstract

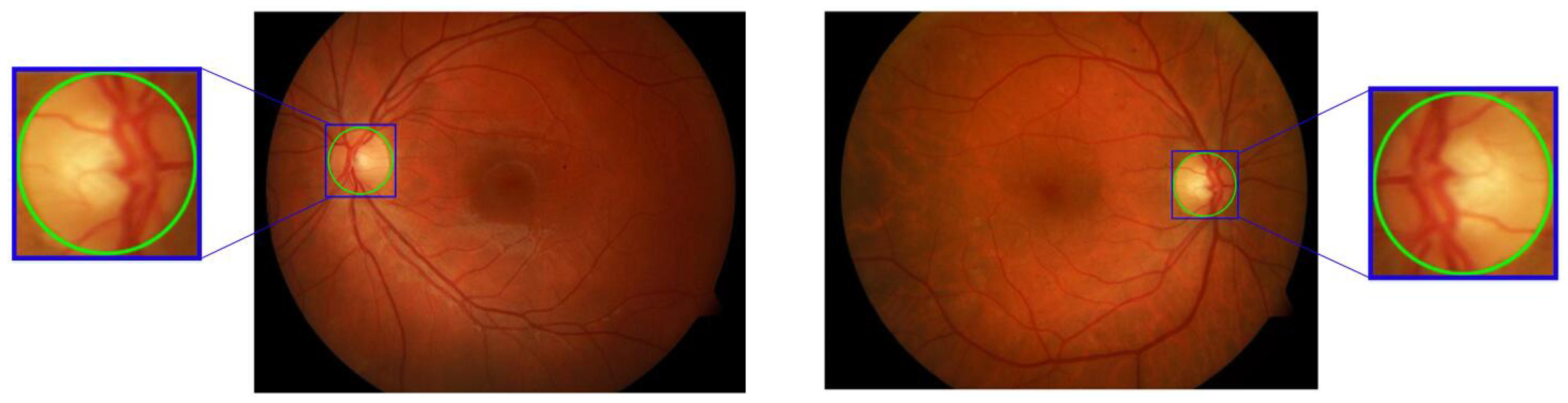

1. Introduction

2. Materials and Methods

2.1. Best-Buddies Similarity

2.2. Template Matching Based on BBS

Algorithm 1: Optic Disc Detection Using Best-Buddies-Similarity-Based Template Matching.

Require: Template image T; target image I; patch size k.
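The similarity measure named in Algorithm 1 can be illustrated with a minimal sketch. It follows the general definition of Best-Buddies Similarity from Dekel et al. [26]: two points are "best buddies" when each is the other's nearest neighbour, and the score is the number of such pairs divided by the size of the smaller set. The function name `best_buddies_similarity`, the squared Euclidean distance, and the generic feature vectors are assumptions made for illustration. How the k × k patches of T and I are turned into feature vectors is not shown in this section and is left to the caller.

```python
import numpy as np


def best_buddies_similarity(P, Q):
    """Best-Buddies Similarity between two point sets (illustrative sketch).

    P: array of shape (N, d), e.g. feature vectors of template patches.
    Q: array of shape (M, d), e.g. feature vectors of candidate-window patches.
    The name, argument layout, and distance choice are assumptions.
    """
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)

    # Pairwise squared Euclidean distances, shape (N, M).
    diff = P[:, None, :] - Q[None, :, :]
    dist = np.sum(diff ** 2, axis=2)

    # Nearest neighbour in Q for every point of P, and vice versa.
    nn_of_p = np.argmin(dist, axis=1)  # shape (N,)
    nn_of_q = np.argmin(dist, axis=0)  # shape (M,)

    # p_i and q_j are best buddies if q_j is the nearest neighbour of p_i
    # and p_i is the nearest neighbour of q_j.
    mutual = nn_of_q[nn_of_p] == np.arange(P.shape[0])

    # Normalise by the size of the smaller set so the score lies in [0, 1].
    return mutual.sum() / min(P.shape[0], Q.shape[0])


if __name__ == "__main__":
    template = np.array([[0.0, 0.0], [10.0, 10.0]])
    window = np.array([[0.1, 0.0], [10.0, 10.2], [5.0, 5.0]])
    print(best_buddies_similarity(template, window))
```

In a template-matching setting, a score like this would be evaluated for each candidate window of I and the window with the highest score kept. The dense distance matrix makes the sketch quadratic in the number of patches, which is acceptable for illustration but not tuned for speed.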

2.2.1. Image Preprocessing

2.2.2. BBS Computation

2.2.3. Finding the Best Result

3. Results

3.1. Data and Parameter Settings

3.2. Results Analysis

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Li, H.; Chutatape, O. Automated feature extraction in color retinal images by a model based approach. IEEE Trans. Biomed. Eng. 2004, 51, 246–254.

2. Chen, P.; Cen, R.J.; Xiao-Ming, W.U. The pressure distribution in the entrance region of a tapered vessel. J. Jinan Univ. 2000.

3. Bhuiyan, A.; Kawasaki, R.; Wong, T.Y.; Rao, K. A New and Efficient Method for Automatic Optic Disc Detection Using Geometrical Features. In World Congress on Medical Physics and Biomedical Engineering; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1131–1134.

4. Chen, X.; Xu, Y.; Yan, S.; Wong, D.W.K.; Wong, T.Y.; Liu, J. Automatic Feature Learning for Glaucoma Detection Based on Deep Learning; Springer International Publishing: Cham, Switzerland, 2015; pp. 669–677.

5. Monteiro, F.C.; Cadavez, V. Optic disc detection by earth mover’s distance template matching. In Proceedings of the International Conference on Medical Image and Signal Computing, World Academy of Science, Engineering and Technology (WASET), Washington, DC, USA, 16–18 May 2011; Number 59. pp. 1254–1258.

6. Quigley, H.A.; Varma, R.; Tielsch, J.M.; Katz, J.; Sommer, A.; Gilbert, D.L. The relationship between optic disc area and open-angle glaucoma: the Baltimore Eye Survey. J. Glaucoma 1999, 8, 347–352.

7. Ullah, H.; Jan, Z.; Qureshi, R.J.; Shams, B. Automated localization of optic disc in colour fundus images. World Appl. Sci. J. 2013, 28, 1579–1584.

8. Devasia, T.; Jacob, P.; Thomas, T. Automatic Optic Disc Boundary Extraction from Color Fundus Images. Int. J. Adv. Comput. Sci. Appl. 2014, 5.

9. Baroni, M. Multiscale Filtering And Neural Network Classification for Segmentation and Analysis of Retinal Vessels. Biomed. Eng. 2012, 134, 9–20.

10. Chen, H.T.; Wang, C.M.; Chan, Y.K.; Yang-Mao, S.F.; Chen, Y.F.; Lin, S.F. Statistics-based initial contour detection of optic disc on a retinal fundus image using active contour model. J. Med. Biolog. Eng. 2013, 33, 388–393.

11. Zhang, D.; Yi, Y.; Shang, X.; Peng, Y. Optic disc localization by projection with vessel distribution and appearance characteristics. In Proceedings of the International Conference on Pattern Recognition, Tsukuba, Japan, 11–15 November 2012; pp. 3176–3179.

12. Sinthanayothin, C.; Boyce, J.F.; Cook, H.L.; Williamson, T.H. Automated localisation of the optic disc, fovea, and retinal blood vessels from digital colour fundus images. Br. J. Ophthalmol. 1999, 83, 902–910.

13. Sinthanayothin, C.; Kongbunkiat, V.; Phoojaruenchanachai, S.; Singalavanija, A. Automated screening system for diabetic retinopathy. In Proceedings of the International Symposium on Image and Signal Processing and Analysis, Rome, Italy, 18–20 September 2003; Volume 2, pp. 915–920.

14. Akyol, K.; Şen, B.; Bayır, Ş. Automatic Detection of Optic Disc in Retinal Image by Using Keypoint Detection, Texture Analysis, and Visual Dictionary Techniques. Comput. Math. Methods Med. 2016.

15. Osareh, A.; Mirmehdi, M.; Thomas, B.; Markham, R. Automated identification of diabetic retinal exudates in digital colour images. Br. J. Ophthalmol. 2003, 87, 1220–1223.

16. Wang, J.; Wang, H. A study of 3D model similarity based on surface bipartite graph matching. Eng. Comput. 2017, 34, 174–188.

17. Lalonde, M.; Beaulieu, M.; Gagnon, L. Fast and robust optic disc detection using pyramidal decomposition and Hausdorff-based template matching. IEEE Trans. Med. Imaging 2001, 20, 1193–1200.

18. Li, H.; Chutatape, O. Automatic location of optic disk in retinal images. In Proceedings of the 2001 International Conference on Image Processing, Thessaloniki, Greece, 7–10 October 2001; Volume 2, pp. 837–840.

19. Youssif, A.A.H.A.R.; Ghalwash, A.Z.; Ghoneim, A.A.S.A.R. Optic disc detection from normalized digital fundus images by means of a vessels’ direction matched filter. IEEE Trans. Med. Imaging 2008, 27, 11–18.

20. Mahfouz, A.E.; Fahmy, A.S. Fast localization of the optic disc using projection of image features. IEEE Trans. Image Process. 2010, 19, 3285–3289.

21. Mahfouz, A.E.; Fahmy, A.S. Ultrafast localization of the optic disc using dimensionality reduction of the search space. Med. Image Comput. Comput. Assist. Interv. 2009, 12, 985–992.

22. Foracchia, M.; Grisan, E.; Ruggeri, A. Detection of optic disc in retinal images by means of a geometrical model of vessel structure. IEEE Trans. Med. Imaging 2004, 23, 1189–1195.

23. Ruggeri, A.; Forrachia, M.; Grisan, E. Detecting the optic disc in retinal images by means of a geometrical model of vessel network. In Proceedings of the International Conference on Engineering in Medicine and Biology Society, Cancun, Mexico, 17–21 September 2003; Volume 1, pp. 902–905.

24. Wang, Y.; Zhang, H.; Yang, F. A Weighted Sparse Neighbourhood-Preserving Projections for Face Recognition. IETE J. Res. 2017, 63, 358–367.

25. Zhang, H.; Cao, L.; Gao, S. A locality correlation preserving support vector machine. Pattern Recognit. 2014, 47, 3168–3178.

26. Dekel, T.; Oron, S.; Rubinstein, M.; Avidan, S.; Freeman, W.T. Best-buddies similarity for robust template matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2021–2029.

27. Staal, J.; Abràmoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

28. Walter, T.; Klein, J.C.; Massin, P.; Erginay, A. A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in color fundus images of the human retina. IEEE Trans. Med. Imaging 2002, 21, 1236–1243.

29. Dehghani, A.; Moghaddam, H.A.; Moin, M.S. Optic disc localization in retinal images using histogram matching. EURASIP J. Image Video Process. 2012, 2012, 19.

30. Rangayyan, R.M.; Zhu, X.; Ayres, F.J.; Ells, A.L. Detection of the optic nerve head in fundus images of the retina with Gabor filters and phase portrait analysis. J. Digit. Imaging 2010, 23, 438–453.

31. Ying, H.; Zhang, M.; Liu, J.C. Fractal-based automatic localization and segmentation of optic disc in retinal images. In Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 4139–4141.

32. Godse, D.A.; Bormane, D.S. Automated localization of optic disc in retinal images. Int. J. Adv. Comput. Sci. Appl. 2013, 4, 65–71.

| Dataset | Number of Images | Optic Disc Detected | Average Error Distance (Pixels) | Success Rate |
|---|---|---|---|---|
| DRIVE | 40 | 40 | 10.4 | 100% |
| DIARETDB1 | 89 | 87 | 12.9 | 97.7% |
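The success-rate column in the table above is consistent with the share of images in which the optic disc was detected. A minimal sketch, assuming that definition (this section does not state it) and using a hypothetical helper name `success_rate`:

```python
def success_rate(detected: int, total: int) -> float:
    """Percentage of images in which the optic disc was detected.

    Assumption: success rate = detected / total * 100. The function name
    is illustrative and does not come from the paper.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * detected / total


# Counts taken from the table above.
drive = success_rate(40, 40)
diaretdb1 = success_rate(87, 89)
print(f"DRIVE: {drive:.2f}%")
print(f"DIARETDB1: {diaretdb1:.2f}%")
```

Under this assumption the DIARETDB1 figure evaluates to about 97.75%, which the table lists as 97.7%.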

| Localization Method | Dataset | Number of Images | Average Error Distance (Pixels) | Success Rate |
|---|---|---|---|---|
| Sinthanayothin et al. [12] | DRIVE | 40 | - | 60% |
| Walter et al. [28] | DRIVE | 40 | - | 80% |
| Dehghani et al. [29] | DRIVE | 40 | 15.9 | 95% |
| Rangayyan et al. [30] | DRIVE | 40 | 23.2 | 100% |
| Ying et al. [31] | DRIVE | 40 | 27 | 100% |
| Akyol et al. [14] | DRIVE | 40 | - | 95% |
| Akyol et al. [14] | DIARETDB1 | 89 | - | 94.38% |
| Godse and Bormane [32] | DRIVE | 40 | - | 100% |
| Godse and Bormane [32] | DIARETDB1 | 89 | - | 96.62% |
| The proposed method | DRIVE | 40 | 10.4 | 100% |
| The proposed method | DIARETDB1 | 89 | 12.9 | 97.7% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hou, K.; Liu, N.; Jia, W.; He, Y.; Lian, J.; Zheng, Y. Optic Disc Detection from Fundus Photography via Best-Buddies Similarity. Appl. Sci. 2018, 8, 709. https://doi.org/10.3390/app8050709
