1. Introduction

In recent decades, with the dramatic progress in computing, control and sensing, significant types of prostheses, orthoses and exoskeletons have been designed and developed to assist and support human limbs for various tasks. In order to increase the operator’s strength and endurance, the exoskeleton is a typical class of wearable mechatronic robotic systems, which are designed to meet this demand. A particular feature of this kind of exoskeleton is that they are fully driven by the pilot intention and follow the pilot movements in synchrony. The application feasibility includes functional rehabilitation for the injured, physical assistance for the disabled and elderly and fatigue relief for heavy-duty workers. To recognize human intention, two main groups of sensors, i.e., cognitive-based sensors, as well as physical-based sensors, are usually applied to capture the information of the human interaction or the muscle signals.

By measuring the electric signals from the musculoskeletal system or the nervous system, the operator’s intention read by the cognitive-based sensors can be interpreted as the input to the exoskeleton system controller. A successful practical application of these kinds of exoskeletons is the hybrid assistive limb (Tsukuba University and Cyberdyne, Japan) [

1,

2], which is structured for whole-body assistance. The muscular activity signal is collected using surface electromyography (sEMG) sensors. In addition, the exploration for brain-machine interface (BMI) control is implemented at the University of Tubingen in Germany [

3]. The hybrid BMI system consists of an EMG continuous decoder, as well as an electroencephalogram (EEG) binary classifier. Consequently, the crucial advantage of cognitive-based sensors is that the pilot or the patient intention can still be read, even if the subject cannot provide necessary joint torques. Thus, these types of the sensor systems are widely utilized in rehabilitation and medical application.

An alternative sensor system recognizes the human intentions by applying physical-based sensors. The Body Extender [

4] is designed to handle and transport heavy loads up to 100 kg. Consequently, the human intentions are read by the force sensors mounted on the interaction part between the pilot and exoskeleton. The velocity input to the controller is converted by the impedance control algorithm [

5,

6]. Moreover, for low impedance, back drivability and precise control, a compact rotary series elastic actuator is designed in [

7] to fulfill these requirements. The input to the system controller is derived from the deformation of the series elastic module. Also note that an improvement of system stability is introduced in [

8], which addresses the time-delay effect of the series elastic module.

Apart from the sensor-based human intention recognition, the sensitivity amplification control is proposed in [

9,

10] for the Berkeley Lower Extremity Exoskeleton. The design conception of control algorithm aims to enable a pilot’s locomotion with little effort on any type of terrain. In addition, the control scheme demands on interaction sensors between the pilot, as well as the exoskeleton. Nevertheless, the controller has little robustness to model uncertainties or parameter variations.

Although the sensors mounted between the pilot and the exoskeleton can simplify the control algorithm, a crucial issue that should be addressed is that the time-delay effect cannot be avoided. Consequently, the control performance is limited due to this issue, even if the robustness can be enhanced by the compensation of the disturbance observer [

11] or the internal model control [

12]. The problem of time-delay effect may be solved by the cognitive-based sensors [

13,

14], since the human intention can be acquired before the movement primitives. Nevertheless, these signals derived from the sensors suffer from unwanted noise, as well as the overlap of the spectrum with other signals.

The sensitivity amplification control (SAC) control scheme has provided a solution for the human performance augmentation exoskeletons. However, such an algorithm suffers from weak robustness, and it barely destroys the control system. Thus, a sophisticated identification process is required to improve system stability, as detailed in [

15]. The second drawback is that the fixed sensitivity factor cannot manage the uncertainties of the interaction model.

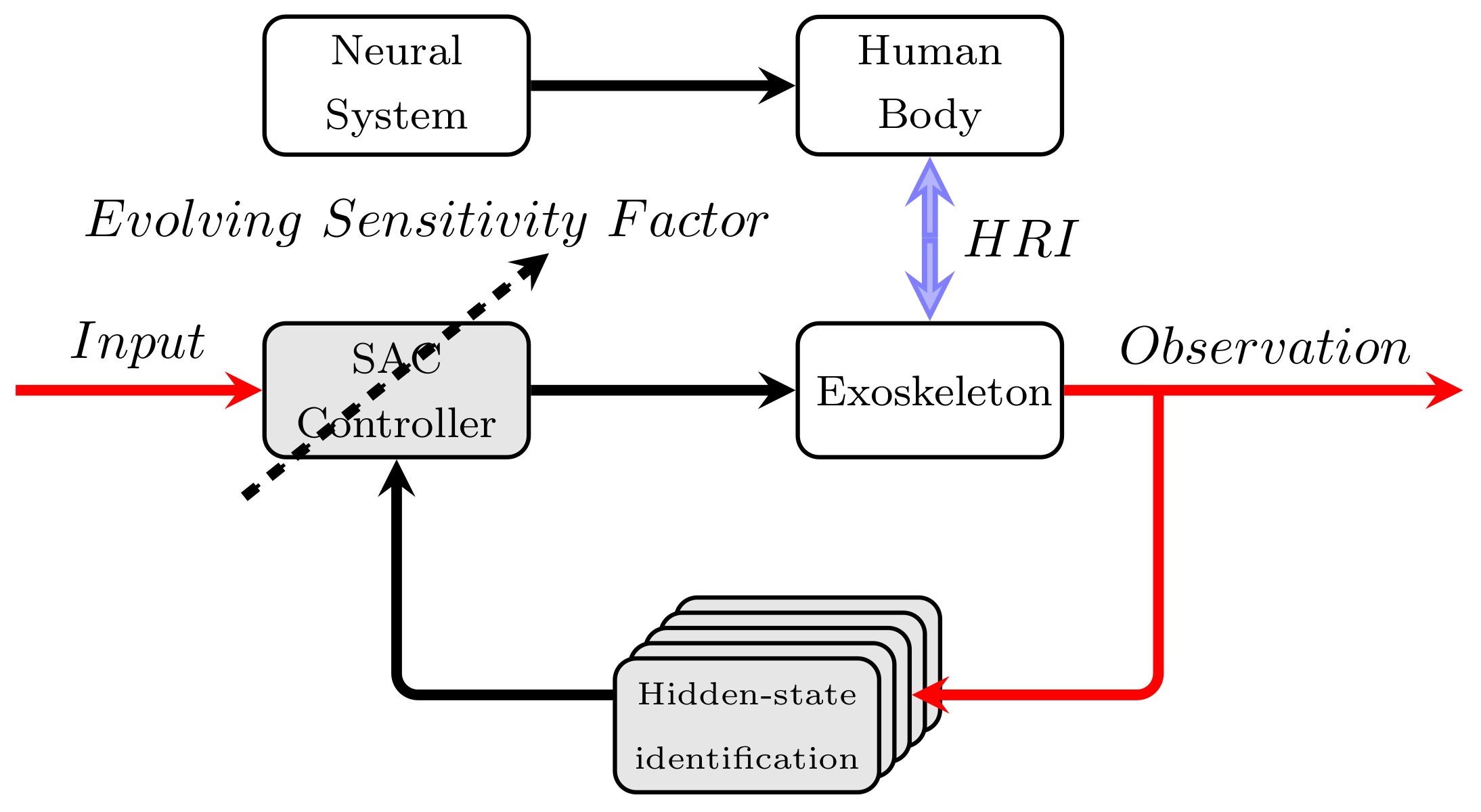

In this paper, we retain the advantages of the SAC control scheme and propose a novel control scheme, so-called probabilistic sensitivity amplification control, to address the two crucial issues, as shown in

Figure 1. Rather than identifying the system dynamics, we infer the state-space model based on a novel distributed identification process. To address the challenge of dealing with the uncertainties of the interaction model, an online reinforcement learning algorithm based on the derandomized evolution strategy with covariance matrix adaptation (CMA-ES) is presented for the adaptation of sensitivity factors. Thus, the sensitivity factors can be effectively updated in a constraint domain of interest according to the torque tracking error penalty.

The remainder of the paper is organized as follows:

After the Introduction,

Section 2 is devoted to outlining the main routine of our proposed distributed hidden-state identification to address the issue of weak robustness. In

Section 3, the novel evolving learning of sensitivity factors is presented to deal with the variational HRI. The implementation of the experiments and the comparison of the control algorithms are presented in

Section 4. Finally,

Section 5 concludes with a summary.

2. Distributed Hidden-State Identification

For realizing efficient control of the exoskeleton, it is essential to understand the human intention. According to our control scheme, the intention can be interpreted as the system state variables. Although tremendous attention has been paid to filtering and smoothing [

16,

17,

18], these algorithms do not focus on the identification process, but on inference. In addition, the Gaussian process (GP) state-space model presented in [

19] has not addressed the crucial issue of computation expense, which we will carefully discuss in this section. Therefore, the graphic explanation of the crucial difference between the GP state-space inference [

17,

18,

19] and our proposed distributed hidden-state identification are shown in

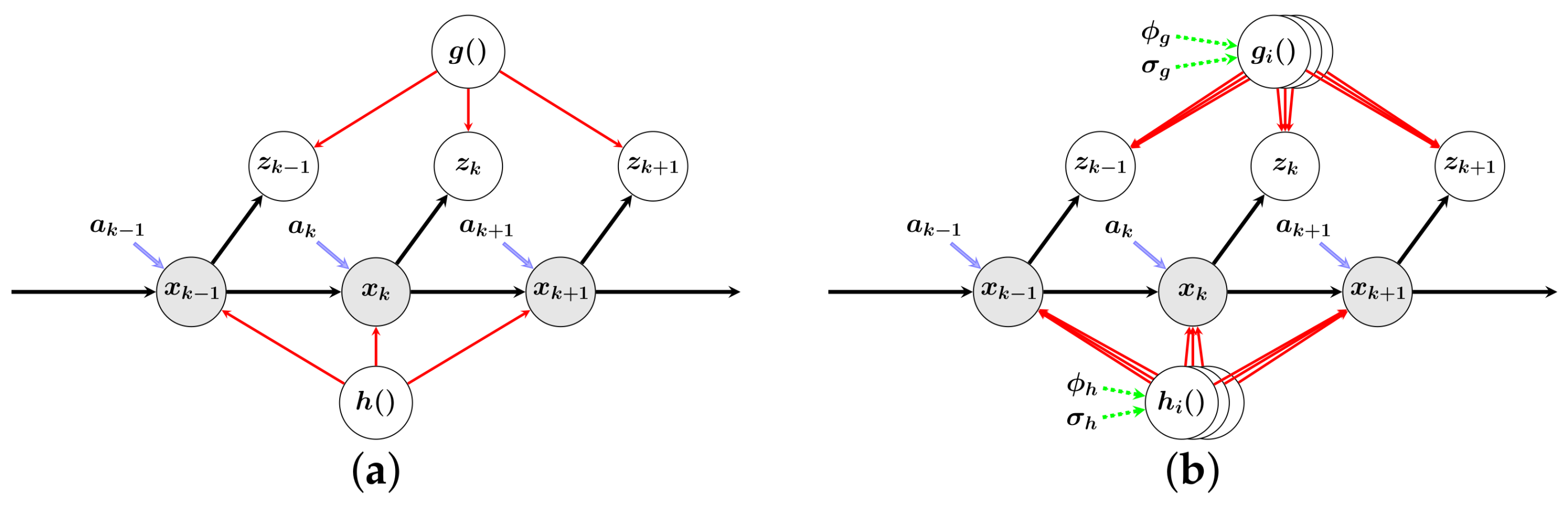

Figure 2.

In this section, firstly, we give a comprehensive explanation of the distributed model learning for the identification process based on the expectation maximization. Then, we introduce the online inference under a distributed framework and Markov chain Monte Carlo, also along with the fusion method, so-called generalized product-of-expert (PoE).

2.1. Model Learning

Although the Gaussian process has a substantial impact in many fields, the crucial limit is that the computation expense scales in

for training, while regarding prediction, the computed expense is

if caching the kernel matrix. Thus, we employ the distributed Gaussian process [

20] to address this issue, since this method can handle arbitrarily large datasets with sufficient hierarchical product-of-expert (PoE) models.

The Gaussian process, fully specified by a mean function

and a covariance function

, is a probabilistic distribution over functions

f. The latent function

can be learned from a training dataset

, where

and

. In addition, the posterior predictive distribution of the function

by a given set of hyper-parameters

is defined with mean and covariance, respectively

With

defining input,

and

. Note that the hyper-parameters

of the covariance function consist of the length-scale

, the signal variance

and the noise variance

. Besides, the hyper-parameters are of significance to the covariance function defining the non-parametric model behavior [

21].

According to the independent distributed assumption, the marginal likelihood

can be decomposed into

M individual products:

With M the total number of experts. In the model learning process, the hyper-parameters are shared for all M GPs. Moreover, a usual method for optimizing the hyper-parameters is to maximize the evidence maximization. Nevertheless, we apply a more efficient method in this paper, i.e., expectation maximization with Monte Carlo sampling.

Therefore, the general class of latent state-space models with the Gaussian process is given below:

With . The is a latent state that evolves over time, while can be read from actual measurement data. Both additive system noise, as well as measurement noise are assumed i.i.d. If not stated otherwise, both the measurement function and dynamic function are implicit, but instead can be described with the probability distributions over these Gaussian functions. Here, the functions are a Gaussian process non-parametric model, defined as and , separately.

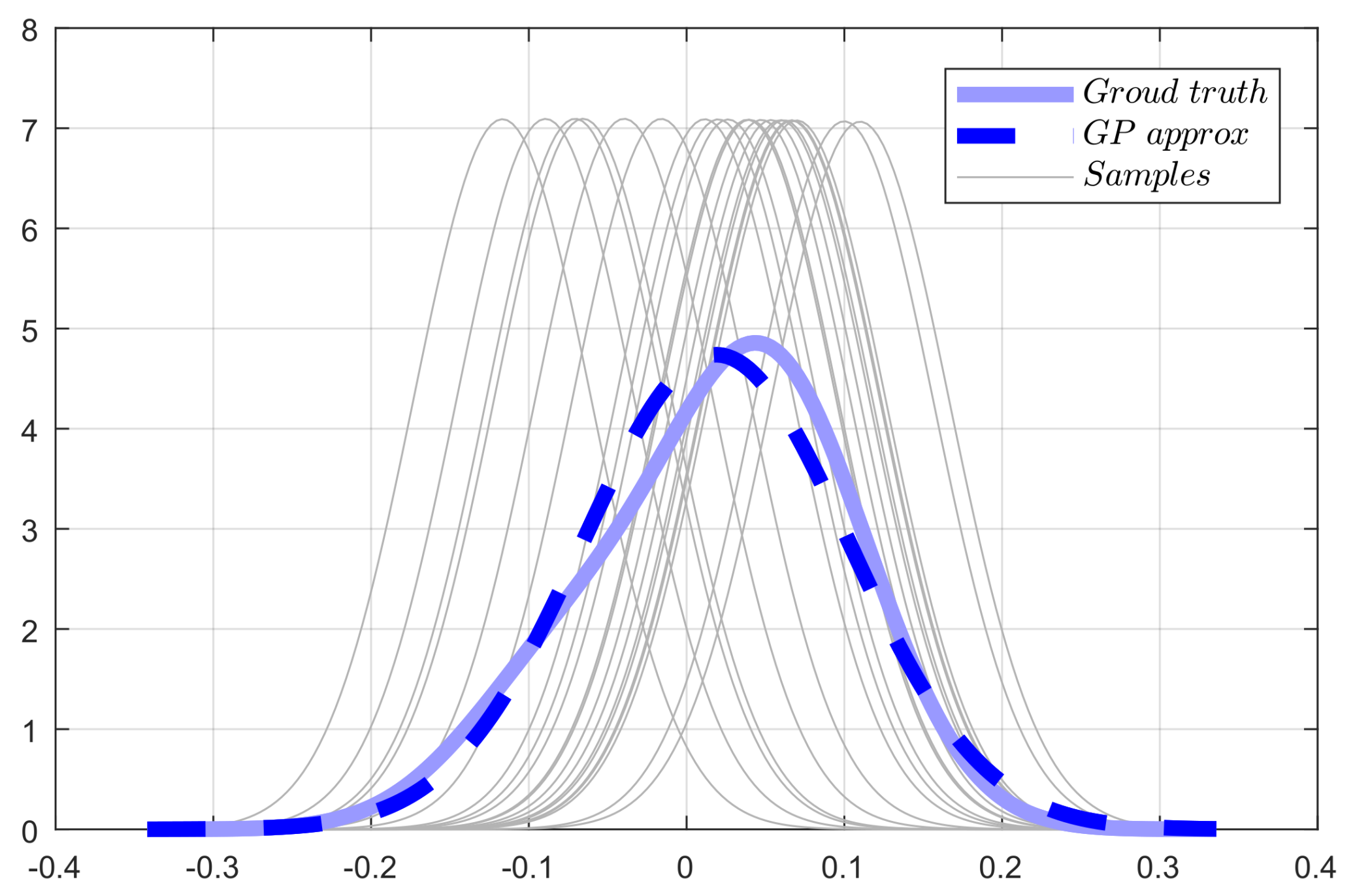

Limited by the space of this paper, we briefly explain the learning process of the dynamic function

. In the expectation step, we compute the posterior distribution of the system states

, where

and

. Nevertheless, the posterior distribution is not a Gaussian process and hence is not easy to obtain analytically. Consider the situation in which we draw samples from the posterior distribution and the marginal likelihood is approximated by Monte Carlo integration, as shown in

Figure 3. Consequently, in the maximization step, the hyper-parameters are given by maximizing the obtained likelihood

with stochastic conjugate gradient optimization. The whole learning routine is give in Algorithm 1. Note that though the learning procedure is time consuming, this is nota problem since the training process of hyper-parameters is off-line.

| Algorithm 1: Calculate hyper-parameter values with Monte Carlo expectation maximization. |

![Applsci 08 00525 i001]() |

2.2. Distributed Identification Process

To identify the latent state

, we have to compute the posterior distribution

. Note that, even if the system states can be obtained from the observation, the measurement data are not accurate owing to the system noise. Thus, the system state

is seen as a hidden variable that should be identified. Moreover, the

k time step system state only depends on

time step state and the

k time step observation, which hence is a typical hidden Markov model. According to the previous measurements and current time step prediction, Bayes’ law yields:

where

is defined as a prior of the posterior distribution and

is determined by the measurements. In addition, to improve the identification process, we reuse the observation data, i.e., current, previous and future:

For the recursive process, the smooth hidden states

are given from

to 2:

In light of the work in [

22], the updated posterior distribution of the latent state for the

m-th (

m =

) GP can be obtained:

Not akin to [

22], to infer a hidden state with a corresponding observation input, we should combine all the M GPs to fusion of the M predictions. Therefore, in this paper, we compare four fusion algorithms, i.e., product of expert (PoE) [

23], generalized product of expert (gPoE)[

24], Bayesian committee machine (BCM) [

25] and robust Bayesian committee machine (rBCM) [

20]. The only difference between the gPoE and PoE is the different precisions, since the important weights are set to

to avoid an unreasonable error bar. Moreover, the improvement made in the BCM is that it incorporates the GP prior explicitly when predicting the joint distribution. Hence, the prior precision should be added to the posterior predictive distribution. The rBCM seeks to add the flexibility of gPoE to BCM to enhance predictive performance.

Moreover, we conduct an experiment with a one dimension problem [

26].

Figure 4 shows the performance comparison of four distributed identification process (PoE , gPoE, BCM, rBCM) and robust Rauch-Tung-Striebel smoother for GP dynamic systems (GP-RTSS) [

18], which is implemented under the full GP scheme. The PoE and BCM distributed schemes overestimate the variances in the regime of the series hidden states, which leads to overconfident precision compared with GP-RTSS. Moreover, the prediction of the rBCM is too conservative, although the rBCM aims to provide a more robust solution. The gPoE distributed scheme provides more reasonable identification of the hidden states than any other models in

Figure 4.

Moreover,

Figure 5 shows two aspects of the distributed identification framework with the different number of GP experts: (1) a comparison of approximation quality; (2) computation expense. According to the information in

Figure 5, for a certain set of training data, with the increase of the GP experts, the computation time significantly decreases. However, the computation expense reaches a minimum when there are five GP experts. Besides, the interesting properties are that there is no significant difference (root-mean-square-error (RSME): 0.01 and negative log-likelihood (NLL): 0.2) between the identification framework with one GP expert (full GP) and more than one expert. Furthermore, note that a better approximation quality can be expected with ten GP experts with the minima of RSME or with eight GP experts according to the NLL criterion. Thus, although we do not argue that our proposed hidden-state identification scheme outperforms the full GP identification algorithm concerning precision, the computation expense has been dramatically reduced. Moreover, the overall identification procedure is concluded in Algorithm 2.

| Algorithm 2: Inference with Monte Carlo Markov chain. |

![Applsci 08 00525 i002]() |

3. Evolving Learning of the Sensitivity Factor

The sensitivity amplification control proposed in [

9] aims to improve the control performance without directly measuring the interaction information with the pilot. Thus, by inserting a sensitivity factor

in dynamic equation:

The interaction between the pilot and the exoskeleton is given:

With perfect estimation

and

and sufficient large

. The interaction

d can approach zero. Nevertheless, the sensitivity factor should not be selected with a sufficiently large parameter due to the weak robustness. Moreover, in an actual situation, the interaction varies owing to stochastic environment factors. Therefore, choosing a fixed sensitivity factor is not a good option. An improvement has been made in [

27], where the sensitivity factor is learned with Qlearning. Compared with the classic SAC, the interaction force has been reduced. However, the learning algorithm mainly copes with the discrete region of interest. In addition, the optimization of the constraint domain of the sensitivity factor is not addressed in the reference.

In this paper, the sensitivity factor is evolved in real time in a continuous constraint domain. By applying

covariance matrix adaptation evolution strategy (

-CMA-ES) [

28], the sensitivity factor seeks the optimal value owing to tracking error penalty.

3.1. (1 + 1)-CMA-ES

Derived from the CMA-ES, a crucial feature of the

-CMA-ES is that it directly operates on Cholesky decomposition and is about

-times faster than the classic CMA-ES. The

-CMA-ES adapts not only the entire covariance matrix of its offspring candidates, but also the entire step size. Since the covariance matrix

is definitely positive, owing to Cholesky decomposition,

, with

an

matrix. Thus, the offspring candidate

is written:

where

is the step size,

is the corresponding parental candidate and

is derived from normal distribution. In addition, the adaptation strategy maintains a search path in order to save an exponentially-fading record

. Thus, a successful offspring candidate can be obtained from the covariance matrix:

With

. Rather than dealing with the Cholesky decomposition operation in every loop, the matrix

can be updated as follows:

With

. To enhance the learning efficiency, if the offspring candidate solution is extremely unsuccessful, the algorithm should also learn from failure cases. Therefore, the covariance matrix update is written:

where

; similarly, the equivalent update of matrix

is given:

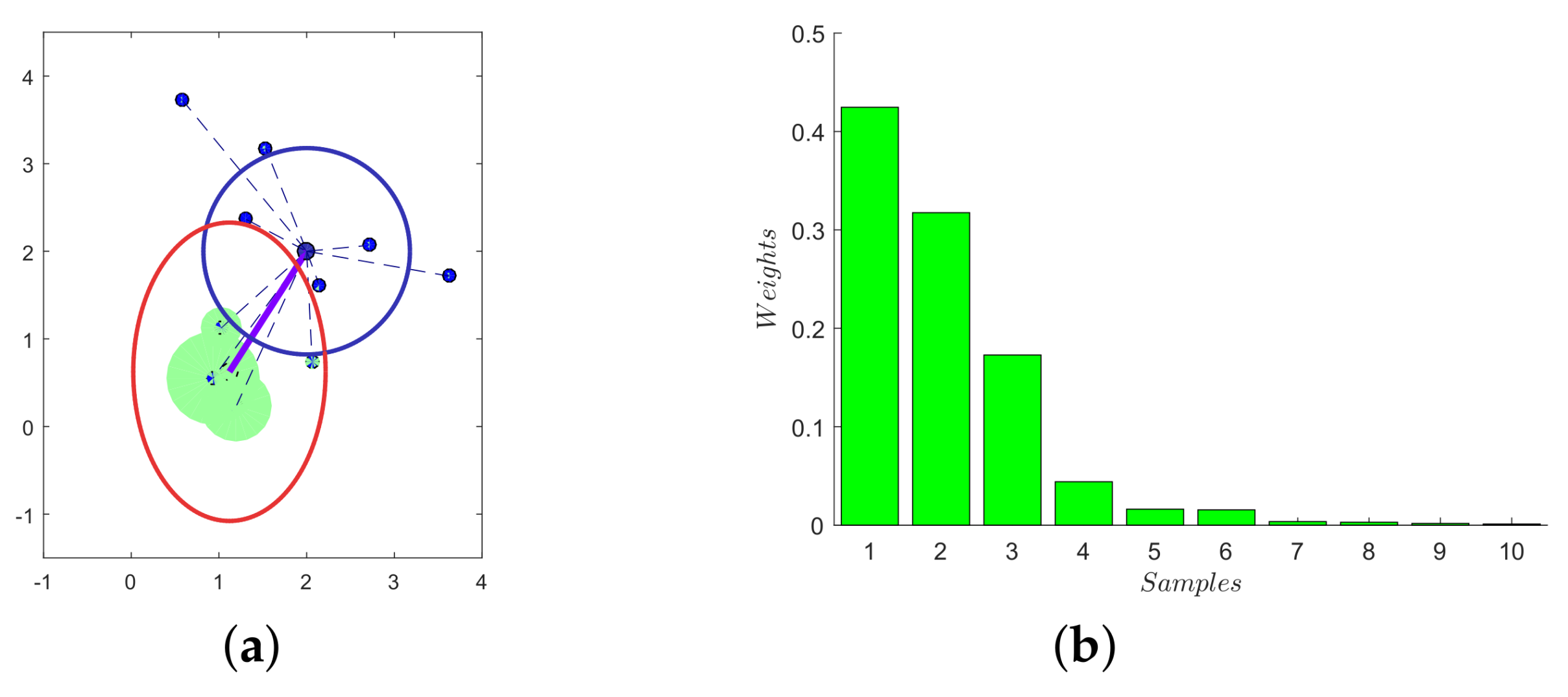

The graphic interpretation of CMA-ES is shown in

Figure 6. More specifically, the contributions of each sample are ranked according to corresponding penalties, which will be explained in the next subsection. Not all samples, but several high-contribution points are used during the update procedure. Thus, the new distribution is evolved from the previous step distribution with an adaptive step size.

3.2. Constraint Optimization

Since the sensitivity factor

is constrained regarding the optimization domain, to deal with this problem, the variance of the offspring distribution is reduced in the normal vector direction in the vicinity of the corresponding parental candidate. For the constraints

, an exponential fading vector is defined following [

29] and updated when the optimization is lessened by the boundary:

where

is a parameter that determines the convergence speed. Equation (

20) can be seen as a low-pass filter, which guarantees that the tangential components of

have been canceled out.

In these situations, the Cholesky factor

should also be rewritten to meet the demand of the restrained boundary:

With

and

if

, otherwise zero. The update size is controlled by the parameter

. Moreover, compared with Equations (

17) and (

19), Cholesky decomposition

A without a scalar greater than one aims to decrease the update step size to approach the boundary of constraints.

The solution to generating offspring candidates is explained in

Figure 7. In the direction of the normal vector, the variance of the offspring is reduced as shown in

Figure 7a. After the operation, the evolved variance is tangential to the constraint boundary in

Figure 7b. Thus, the offspring candidates can be sampled and derived from the evolved variance

. Note that, in

Figure 7c, after constraint optimization, the constraint angle has been increased due to the change of the offspring distribution.

In terms of the sequence hidden-states

, as well as actual data, the penalty function for updating of the sensitivity factor is given:

where

and

are the joint velocity and angle, separately. Note that not akin to the control algorithm in [

27], where the penalty function consists of the previous loop trajectory data, the desired control input

is computed and predicted from the identification process. Therefore, our scheme is more suitable for improving real-time control performance. The first term in Equation (

22) is to avoid high jerk. Furthermore, the last two terms are penalized for tracking error. From our intuition, with less interaction force between the human and the exoskeleton, the pilot will feel more comfortable. According to this point view, punishing the last two terms may enhance the tracking performance. The main routine of the evolving learning of sensitivity factors scheme is presented in Algorithm 3.

| Algorithm 3: Evolving learning of the sensitivity factor. |

![Applsci 08 00525 i003]() |

4. Experiment and Discussion

Although the overall algorithm has been introduced in the previous part of this paper, the control performance of the algorithm should be verified. Consequently, in this section, first, we briefly explain our exoskeleton platform and distributed identification mode learning; then, we compare several state-of–the-art control methods (SAC, SAC + Qlearning) with our proposed control framework.

4.1. Experiment Setting and Model Learning

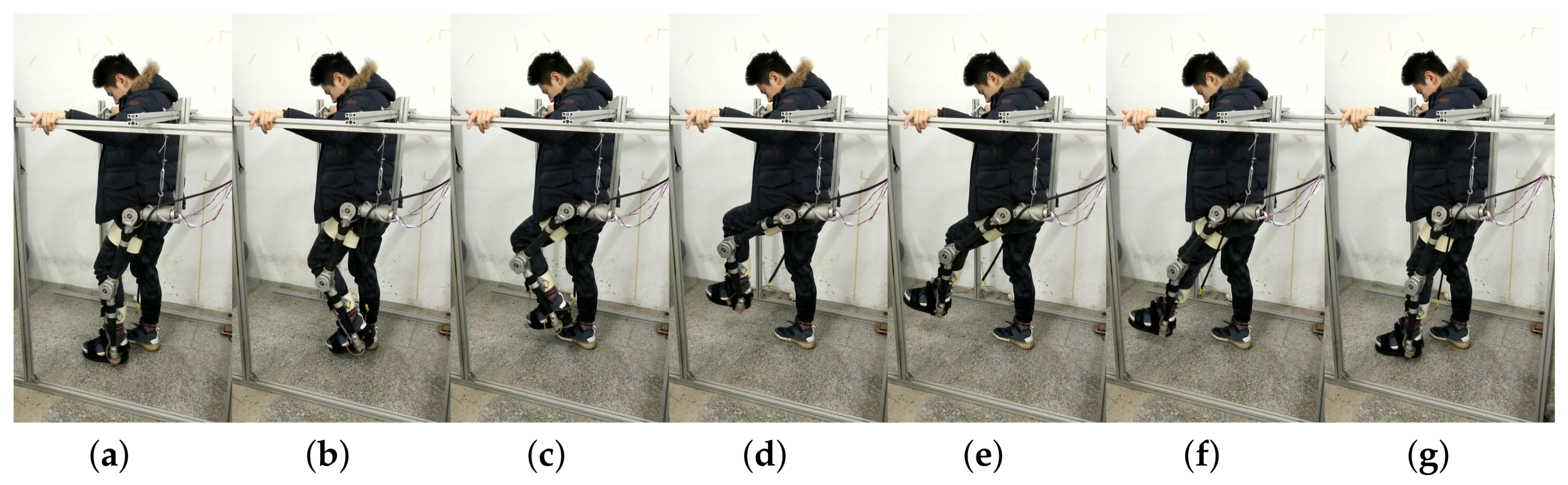

The developed single-leg exoskeleton platform is as shown in

Figure 8, which is a robust and ergonomic device for endurance enhance and strength augmentation. The necessary auxiliary facilities are embedded industrial personal computer (IPC), PMAC (programmable multi-axis controller), Copley actuators and power supply unit. The power module should provide three kinds of voltage, i.e., 5 V, 12 V and 24 V. The observation data, derived from inertial measurement unit (IMU) and magnetic encoders, is collected by PMAC. The high-level control scheme is calculated in the embedded PC, and the driven commands are sent to the actuator through the Copley driver. For more details, we refer to our previous work in [

30].

Before the implementation of the experiments, the dynamic and measurement models should be learned off-line. Therefore, to learning dynamic and measurement models, the necessary components are input, as well as observation. For dynamic models, the training input is given in a series of tuples () with and the next step state as training outputs. The input signal Nm is selected randomly and is constrained for safety. In addition, for measurement models, the training inputs are defined as system state given by the angles , and the corresponding angular velocities , , for hip joint and knee joint, respectively. To observe the system states, we apply the encoder and IMUs to measure the joint angles and angular velocities separately. Therefore, the training outputs of the measurement models are defined.

The learning result of the dynamic model of the hip joint is presented in

Figure 9. Consequently, in order to learn the hyper-parameters each GP takes about 30–40 trails. Furthermore, the initial value of each function may be different owing to random initialization. Moreover, to demonstrate the feasibility, we test 600 unused data pairs with the learned model as shown in

Figure 10. The error of the identification result compared with the system value is about 0.01 rad given in the lower figure of

Figure 10.

4.2. Flat Walking Experiment

In the experiment, the pilot is asked to naturally swing his left leg, as shown in

Figure 11. The control algorithms compared in the experiment are SAC [

10], SAC + Qlearning [

27], SAC + CMA-ES and our proposed algorithm.

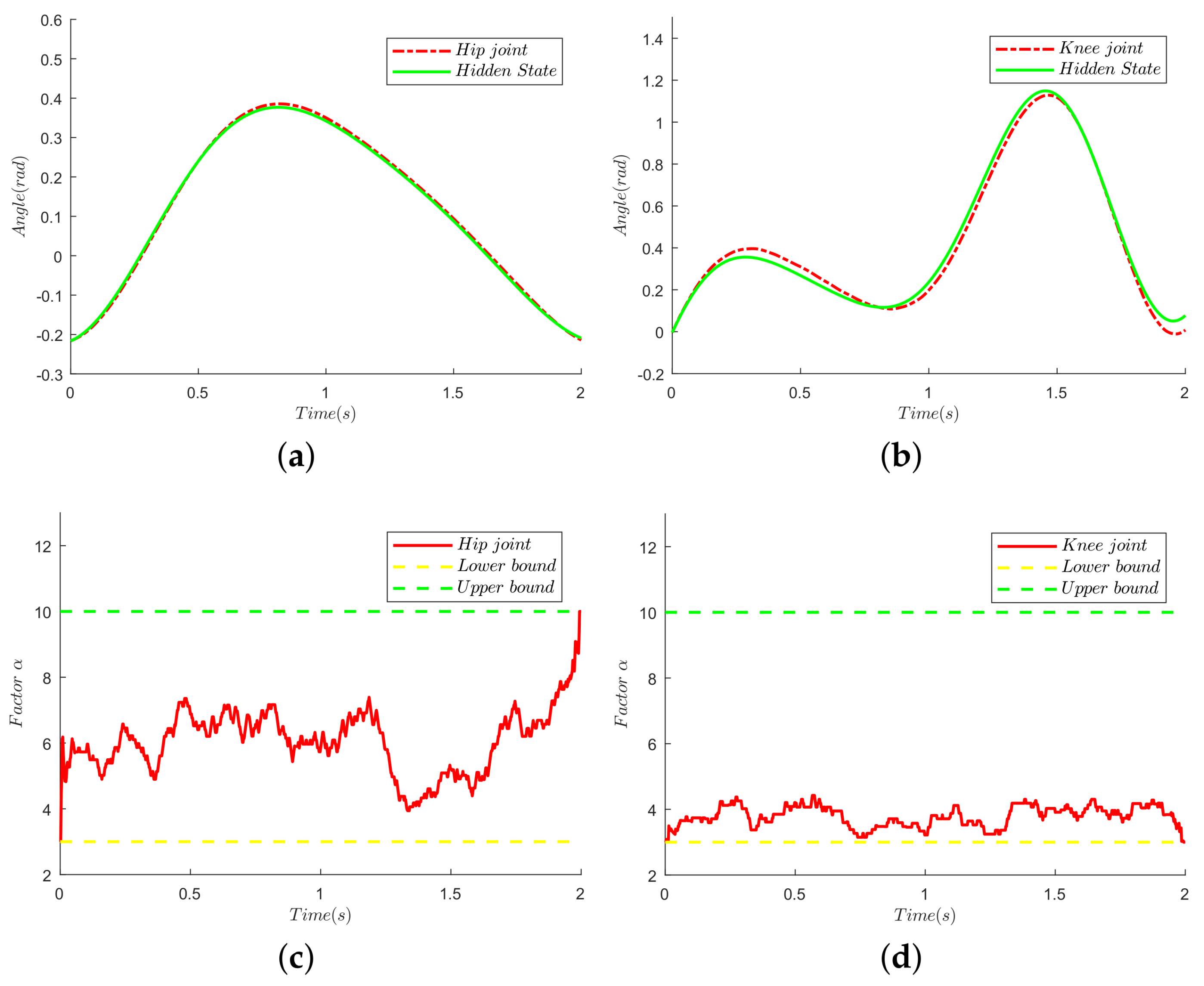

The tracking performances of the knee joint and the hip joint are given in

Figure 12a,b. Since the hidden states

are identified, the single exoskeleton leg can follow the human motion in real time. Furthermore, owing to the CMA-ES algorithm, the evolution of the sensitivity factor

for the hip joint is shown in

Figure 12c, along with the evolution for the knee joint presented in

Figure 12d. To be more specific, the updated distribution is derived from the contributions of 15 samples selected from 20 candidates. The lower bound and upper bound are set to be three and 10, respectively, according to experimental experience. Furthermore, from

Figure 12c, the sensitivity factor of the hip joint increases as the pilot puts down his leg, which means that the HRI force decreases, and the exoskeleton does not need too much additional torque from the pilot. Consequently, setting a fixed sensitivity factor is not a good option. Moreover, compared with the hip-joint sensitivity factor, the knee-joint sensitivity factor almost keeps a lower one, fluctuating around four. The reason for this situation is that the interaction between the thigh and the exoskeleton is more compliant than the interaction between the shank and the exoskeleton. Therefore, the sensitivity of the hip joint is better than that of the knee joint.

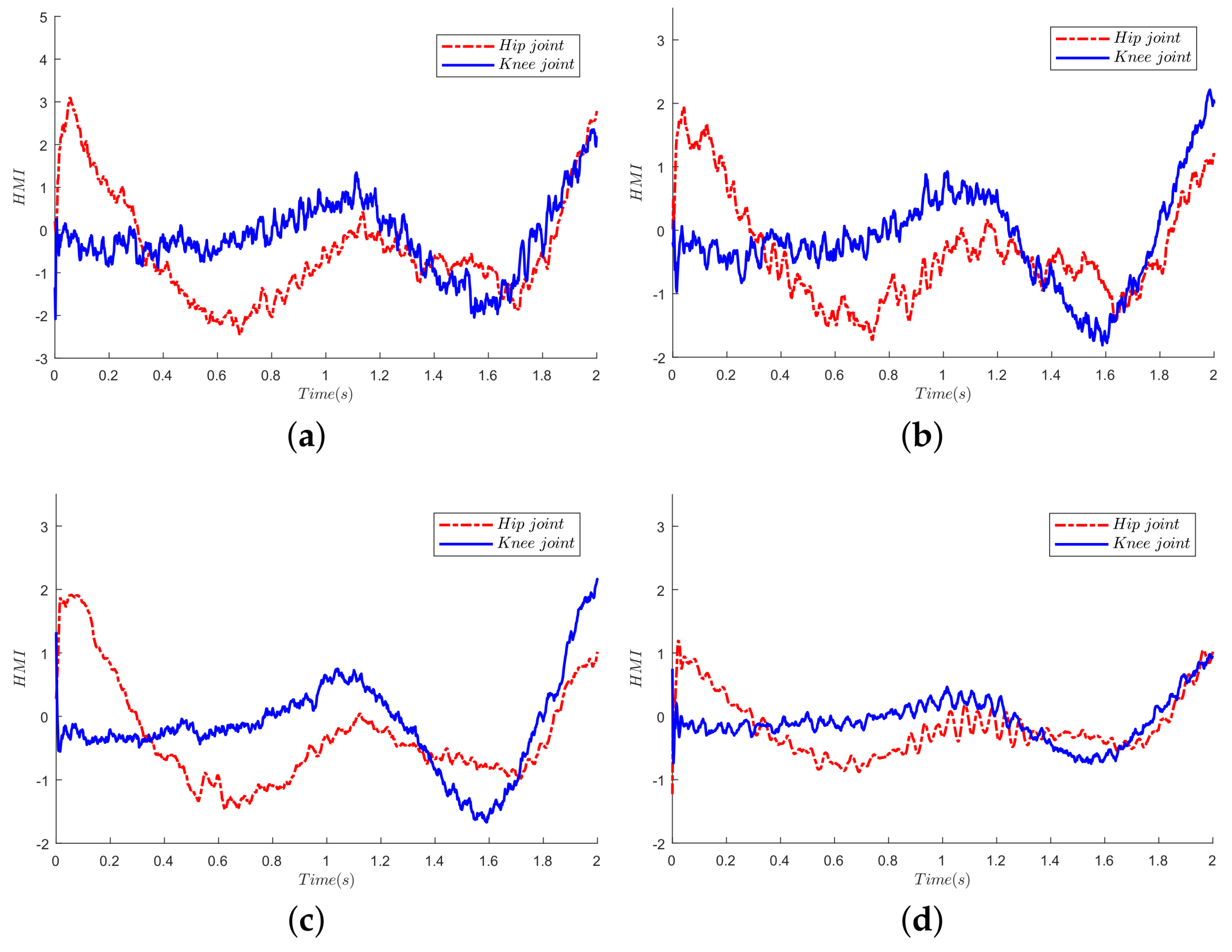

In order to assess the control performance of our proposed algorithm, we compare the HRI force of four algorithms as shown in

Figure 13. Since there is no sensor mounted between the pilot and exoskeleton, the HRI force is estimated by the disturbance observer. From intuition, the pilot feels more comfortable with less interaction force in the walking pattern. Therefore, the control performance may be evaluated from the information of the interaction force. As we all know, the ideal control performance is that the interaction force is zero, which means that the exoskeleton can follow the human motion perfectly.

Thus, to quantitatively evaluate the four control schemes, we compute the RMSE of the HRI of each joint. From

Table 1, compared with the SAC control scheme, both SAC + Qlearning and SAC + CMA-ES own lower RMSE in both joints, since both algorithms learn an adaptive sensitivity factor. Furthermore, a small difference is shown in

Table 1 between the SAC + Qlearning, as well as SAC + CMA-ES, and SAC + CMA-ES performs a little better. Moreover, a significant reduction of RMSE can be seen in

Table 1 in our proposed algorithm, which is nearly two-times less than the other control schemes.

5. Conclusions

In this paper, a novel control framework has been proposed to address the crucial issues of SAC, i.e., weak robustness, disability to reject the disturbance and model uncertainties. The proposed control methodology, so-called probabilistic sensitivity amplification control, is two-fold: distributed hidden-state identification and evolving learning of sensitivity factors. The identification aims to enhance the robustness and to reject the undesired disturbance. The computation expense is significantly reduced owing to the distributed GP scheme. Moreover, the evolving learning is mainly designed to reduce the HRI between the pilot and the exoskeleton. Since the sensitivity factor can be evolved due to the punishment of the tracking error, the controller can learn from different HRI. Finally, several experiments have been implemented to verify the feasibility of our control scheme. The experimental result shows promising application feasibility. In our future work, we will extend our control scheme to a more complex situation, i.e., upper limb exoskeleton and whole body exoskeleton.